Karl Ho

University of Texas at Dallas

AI Readiness in Research and Teaching of Ecological Capital Governance

Prepared for presentation at The 16th International Conference and Practical Forum on Public Governance – Prospective Governance in Ecological Capital and Sustainability Advancement, National Chung Hsing University, Taichung, Taiwan, November 9-10, 2024


Karl Ho is:

- Professor of Instruction at University of Texas at Dallas (UTD) School of Economic, Political and Policy Sciences (EPPS)

- Co-founder of the UTD Social Data Analytics and Research program (SDAR)

- Founder of DataGeneration.io

- Author of Data Programming

- Co-Principal Investigator of the Hong Kong Election Study project

- Website: karlho.com (talks, lecture, publications)

Speaker bio.

Characteristics of Ecological Capital

Barbier (2013, 2016) identifies three key characteristics of ecological capital:

- Depreciation: Similar to physical capital, ecological capital can depreciate over time, particularly through degradation or unsustainable use.

- Irreplaceability: Many ecosystems are unique and cannot be substituted, making their loss particularly critical.

- Potential for Abrupt Collapse: Ecosystems may experience sudden and irreversible changes if pushed beyond certain thresholds (Barbier 2013, 2016).

Ecological capital

In Taichung, the Dasyueshan National Forest Recreation Area (大雪山國家森林) offers a range of valuable services, including water regulation, biodiversity conservation, carbon sequestration, and recreational opportunities. The forests help regulate water flows by capturing rainfall and releasing it slowly, which is crucial for reducing flood risks in the city and maintaining a steady supply of water during drier seasons. This water regulation service supports agriculture, urban needs, and the region’s resilience against extreme weather events.

Ecological capital: examples

Additionally, Taichung’s forests are home to diverse flora and fauna, including endemic species like the Formosan landlocked salmon and various bird species, which contribute to Taiwan’s rich biodiversity. These forests act as carbon sinks, sequestering carbon dioxide and thus helping Taiwan meet its climate change mitigation goals. The natural beauty of these forested areas also attracts eco-tourism, providing economic benefits to local communities and raising awareness about environmental conservation.

Ecological capital: examples

The recognition of these forest ecosystems as ecological capital emphasizes their value not just in terms of biodiversity and climate regulation but also for the broader socioeconomic benefits they provide to Taichung and its residents. Sustainable management of these forests is crucial to ensure that these ecosystem services continue to support the city’s environmental health and resilience.

Ecological capital: examples

Motivation

- The integration of artificial intelligence (AI) into the sustainable governance of ecological capital presents both opportunities and challenges across various disciplines.

- AI readiness in research is crucial for leveraging AI's potential to enhance ecological governance. This involves equipping research ecosystems with the necessary infrastructure, skills, and policies to integrate AI effectively.

- Data management principles such as FAIR and CARE are important for supporting AI-driven ecological research.

Motivation

- Preparing the next generation of leaders with AI literacy

- Initiatives like aiEDU's AI Readiness Framework aim to equip students and educators with the skills needed to navigate an AI-driven world.

- Emphasizing AI literacy and readiness, including an understanding of AI's interdisciplinary impacts and the fostering of collaboration and creativity alongside technology, is critical to developing leaders who can apply AI insights to sustainable governance challenges.

Motivation

- AI-forward governance involves adopting policies that ensure the ethical and effective use of AI in managing ecological capital. UNESCO's AI Readiness Assessment Methodology, available through its Global AI Ethics and Governance Observatory, and its application in Indonesia serve as a model for how countries can evaluate and enhance their AI governance frameworks.

- The UT System proposes an AI-Forward, AI-Responsible initiative to provide guidance on using AI in a secure and responsible (ethical and legal) manner.

Motivation


Sources

[1] aiEDU: The AI Education Project unveils AI Readiness Framework to ... https://www.prnewswire.com/news-releases/aiedu-the-ai-education-project-unveils-ai-readiness-framework-to-help-students-teachers-school-systems-prepare-for-the-transformation-driven-by-ai-302270994.html

[2] The Readiness to Use AI in Teaching Science ... - ERIC https://files.eric.ed.gov/fulltext/EJ1429748.pdf

[3] Preparing National Research Ecosystems for AI: Strategies and ... https://council.science/publications/ai-science-systems/

[4] The AI Revolution: Awareness of and Readiness for AI-based Digital ... https://jrbe.nbea.org/index.php/jrbe/article/view/106

[5] UNESCO and KOMINFO Completed AI Readiness Assessment https://www.unesco.org/en/articles/unesco-and-kominfo-completed-ai-readiness-assessment-indonesia-ready-ai

[6] Mapping the World's Readiness for Artificial Intelligence Shows ... https://www.imf.org/en/Blogs/Articles/2024/06/25/mapping-the-worlds-readiness-for-artificial-intelligence-shows-prospects-diverge

[7] AI Readiness in Higher Education - GovTech https://papers.govtech.com/AI-Readiness-in-Higher-Education-143078.html

[8] Empowering educators to be AI-ready - ScienceDirect.com https://www.sciencedirect.com/science/article/pii/S2666920X22000315

Why does the governance of ecological capital need AI?

The governance of ecological capital presents multi-faceted challenges that stem from both direct and indirect human activities, as well as the complexities inherent in managing natural resources sustainably.

- Depletion and Biodiversity Loss

- Economic and Regulatory Pressures

- Data and Methodological Gaps

- Corporate Governance Challenges

- Intrinsic Value Recognition

Motivation

Depletion and Biodiversity Loss

Natural capital is under severe threat due to factors such as land use changes, overexploitation of resources, invasive species, climate change, and pollution. These threats lead to habitat destruction, species migration, and increased extinction risks, with approximately one million species currently at risk of extinction (Purvis 2019). This depletion results in significant economic impacts, including a projected decline in global GDP of $2.7 trillion annually by 2030 (Niles et al. 2022).

Motivation

Economic and Regulatory Pressures

Businesses face challenges related to increased raw material costs and supply chain disruptions due to natural capital depletion. Stricter ecological regulations necessitate more sustainable operations, raising compliance costs and posing reputational risks if businesses are seen as contributors to environmental degradation. Additionally, the lack of external incentives for undertaking natural capital projects complicates efforts to integrate these considerations into business strategies.

Motivation

Data and Methodological Gaps

There is a lack of trusted data and methodologies for quantifying the use and impact of natural capital. This gap hinders the ability to make informed decisions and assess the benefits of ecosystem services accurately. Furthermore, the complexity of ecosystem degradation, with its multiple causes and effects, makes it challenging to translate ecological resilience into actionable policy measures.

Motivation

Corporate Governance Challenges

Companies struggle with integrating sustainability into corporate governance due to knowledge gaps among directors regarding sustainability issues. This gap limits their ability to make informed decisions that balance corporate interests with societal and environmental needs. The need for expertise in sustainability is crucial to fulfilling fiduciary duties and avoiding potential litigation risks.

Motivation

Intrinsic Value Recognition

The commodification of natural capital raises concerns about recognizing the intrinsic value of biodiversity and a healthy environment. While economic valuation can aid in policy integration, it often fails to capture the full cultural and spiritual significance of nature.

Motivation

Why is AI readiness essential?

- Data Management and Analysis

- Enhanced Decision-Making

- Addressing ESG Challenges

- Risk Management

- Infrastructure Development

Motivation

Data Management and Analysis

AI facilitates the processing and analysis of large datasets, which is crucial for understanding and managing ecological systems. For instance, AI can optimize resource use and enhance productivity in sectors like agriculture, water, energy, and transport, potentially boosting global GDP by 3.1–4.4% while reducing greenhouse gas emissions by 1.5–4.0% by 2030 (Herweijer et al. undated).

Motivation

Enhanced Decision-Making

AI supports informed decision-making by providing accurate predictions and recommendations, helping policymakers develop effective strategies for climate change mitigation and resource management. This capability is vital for sustainable governance as it allows for more precise and timely interventions.

Motivation

Addressing ESG Challenges

The integration of AI into Environmental, Social, and Governance (ESG) practices can enhance corporate governance by analyzing data to assess performance and identify improvements. This alignment is crucial as businesses face increasing pressure from stakeholders to demonstrate transparency and sustainability.

Motivation

Risk Management

AI can help identify and mitigate risks associated with ecological governance, such as environmental degradation or resource depletion. A harmonized regulatory approach is necessary to ensure that AI applications are accountable and ethical, preventing potential negative impacts like bias or privacy infringements.

Motivation

Infrastructure Development

Establishing a robust data ecosystem is essential for AI readiness. Organizations must develop scalable data management systems to handle the increasing volume and complexity of data required for AI applications in ecological governance.

Outline

- This presentation discusses AI readiness in two domains: research and teaching.

- Practical examples will be provided to illustrate actual practices at a higher education institution.

AI-Readiness

FAIR

The FAIR data principles are a set of guidelines designed to enhance the management and stewardship of scientific data, ensuring that they are Findable, Accessible, Interoperable, and Reusable. These principles were first published in 2016 to address the increasing reliance on computational systems for data handling due to the growing volume and complexity of data.

FAIR

Findable

- Unique Identifiers: Data and metadata should be assigned globally unique and persistent identifiers to ensure they can be easily located.

- Rich Metadata: Data should be described with rich metadata, and the metadata should explicitly include the identifier of the data they describe.

- Searchability: Both data and metadata should be registered or indexed in searchable resources to facilitate discovery by humans and machines.

FAIR

Accessible

- Retrievability: Data should be retrievable by their identifier using standardized communication protocols. These protocols should be open, free, and universally implementable.

- Metadata Availability: Metadata should remain accessible even if the data itself is no longer available, ensuring continued awareness of the data's existence.

FAIR

Interoperable

- Standardized Language: Data and metadata should use a formal, accessible, shared, and broadly applicable language for knowledge representation.

- Vocabularies: They should employ vocabularies that adhere to FAIR principles to ensure consistency and compatibility with other datasets.

FAIR

Reusable

- Detailed Description: Data should be richly described with accurate and relevant attributes to support reuse in different contexts.

- Usage License: A clear and accessible data usage license should accompany the data.

- Provenance and Standards: Data should be associated with detailed provenance information and conform to domain-relevant community standards.

FAIR

These principles are integral to promoting open science by ensuring that research data can be effectively shared, discovered, and reused across various platforms and disciplines.
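As a rough illustration of how the FAIR principles above might be checked in practice, the Python sketch below defines a minimal dataset metadata record and reports which FAIR-related fields are missing. The field names, the accepted identifier prefixes, and the three-keyword threshold are hypothetical choices made for this sketch; they are not part of the FAIR principles or of any metadata standard.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetMetadata:
    """Hypothetical metadata record; field names are illustrative, not a standard schema."""
    identifier: str                                # F1: globally unique, persistent identifier
    title: str
    keywords: list = field(default_factory=list)   # F2: rich, searchable metadata
    access_url: str = ""                           # A1: retrievable via an open, standard protocol
    vocabulary: str = ""                           # I2: shared vocabulary used to describe variables
    license: str = ""                              # R1.1: clear data usage license
    provenance: str = ""                           # R1.2: where and how the data were produced


def fair_gaps(meta: DatasetMetadata) -> list:
    """Return the FAIR-related fields that are missing or look inadequate."""
    gaps = []
    if not meta.identifier.startswith(("doi:", "https://doi.org/", "hdl:")):
        gaps.append("persistent identifier")
    if len(meta.keywords) < 3:  # arbitrary threshold chosen for this sketch
        gaps.append("rich metadata (keywords)")
    if not meta.access_url.startswith("https://"):
        gaps.append("standard access protocol")
    if not meta.vocabulary:
        gaps.append("shared vocabulary")
    if not meta.license:
        gaps.append("usage license")
    if not meta.provenance:
        gaps.append("provenance")
    return gaps


# Example record; the identifier and URL are placeholders, not real resources.
example = DatasetMetadata(
    identifier="doi:10.0000/hypothetical-forest-water-survey",
    title="Forest water regulation survey (hypothetical)",
    keywords=["forest", "water regulation", "Taichung"],
    access_url="https://example.org/data/forest-water-survey",
    license="CC-BY-4.0",
    provenance="Field survey, hypothetical example",
)
print(fair_gaps(example))  # → ['shared vocabulary']
```

A checklist like this cannot certify that data are FAIR. It only flags obvious omissions before a dataset is deposited or shared.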

CARE

- The CARE Principles for Indigenous Data Governance, developed by the Research Data Alliance International Indigenous Data Sovereignty Interest Group, are designed to ensure that data practices respect the rights and interests of Indigenous communities. These principles are particularly relevant in AI-driven ecological research, where data involving Indigenous lands and resources are often utilized. Here’s a breakdown of the CARE Principles:

- Collective Benefit

- Authority to Control

- Responsibility

- Ethics

CARE

Collective Benefit

This principle emphasizes that data should be used in ways that benefit Indigenous communities collectively. The use of data should lead to inclusive development, innovation, improved governance, and equitable outcomes for these communities.

CARE

Authority to Control

Indigenous communities should have the authority to control their data. This includes decision-making power over how their data is collected, accessed, and used. It supports self-determination and ensures that Indigenous nations are actively involved in the governance of their data.

CARE

Responsibility

There is a responsibility to engage with Indigenous communities respectfully and ensure that the use of their data supports capacity development and strengthens community capabilities. This principle highlights the importance of conducting research that aligns with the values and needs of Indigenous communities.

CARE

Ethics

Ethical considerations are paramount when working with Indigenous data. Researchers must adhere to Indigenous ethical frameworks, ensuring transparency and integrity in how data is used and shared. This involves clear communication with Indigenous communities about research processes and outcomes.

CARE

These principles complement the FAIR Data Principles by adding a focus on people and purpose, ensuring that data governance practices advance Indigenous innovation and self-determination while addressing historical inequities.

Key Competencies for AI Literacy

Understanding AI

Ethical AI

Interdisciplinary Collaboration

Critical Thinking and Evaluation

Communicating AI

Policy on using generative AI in class

There are generally three approaches to governing the use of generative AI in a course:

- Prohibiting AI Use

- Allowing AI Use Without Restrictions

- Permitting AI Use with Disclosure

1. Michigan

"In principle you may submit AI-generated code, or code that is based on or derived from AI-generated code, as long as this use is properly documented in the comments: you need to include the prompt and the significant parts of the response. AI tools may help you avoid syntax errors, but there is no guarantee that the generated code is correct. It is your responsibility to identify errors in program logic through comprehensive, documented testing. Moreover, generated code, even if syntactically correct, may have significant scope for improvement, in particular regarding separation of concerns and avoiding repetitions. The submission itself should meet our standards of attribution and validation."

Policy on using generative AI in class

2. Harvard

Certain assignments in this course will permit or even encourage the use of generative artificial intelligence (AI) tools, such as ChatGPT. When AI use is permissible, it will be clearly stated in the assignment prompt posted in Canvas. Otherwise, the default is that use of generative AI is disallowed. In assignments where generative AI tools are allowed, their use must be appropriately acknowledged and cited. For instance, if you generated the whole document through ChatGPT and edited it for accuracy, your submitted work would need to include a note such as “I generated this work through Chat GPT and edited the content for accuracy.” Paraphrasing or quoting smaller samples of AI-generated content must be appropriately acknowledged and cited, following the guidelines established by the APA Style Guide. It is each student’s responsibility to assess the validity and applicability of any AI output that is submitted. You may not earn full credit if inaccurate or invalid information is found in your work. Deviations from the guidelines above will be considered violations of CMU’s academic integrity policy. Note that expectations for “plagiarism, cheating, and acceptable assistance” on student work may vary across your courses and instructors. Please email me if you have questions regarding what is permissible and not for a particular course or assignment.

Policy on using generative AI in class

3. Carnegie Mellon University

You are welcome to use generative AI programs (ChatGPT, DALL-E, etc.) in this course. These programs can be powerful tools for learning and other productive pursuits, including completing some assignments in less time, helping you generate new ideas, or serving as a personalized learning tool.

However, your ethical responsibilities as a student remain the same. You must follow CMU’s academic integrity policy. Note that this policy applies to all uncited or improperly cited use of content, whether that work is created by human beings alone or in collaboration with a generative AI. If you use a generative AI tool to develop content for an assignment, you are required to cite the tool’s contribution to your work. In practice, cutting and pasting content from any source without citation is plagiarism. Likewise, paraphrasing content from a generative AI without citation is plagiarism. Similarly, using any generative AI tool without appropriate acknowledgement will be treated as plagiarism.

Policy on using generative AI in class

4. UTD (some courses)

Cheating and plagiarism will not be tolerated.

The emergence of generative AI tools (such as ChatGPT and DALL-E) has sparked large interest among many students and researchers. The use of these tools for brainstorming ideas, exploring possible responses to questions or problems, and creative engagement with the materials may be useful for you as you craft responses to class assignments. While there is no substitute for working directly with your instructor, the potential for generative AI tools to provide automatic feedback, assistive technology and language assistance is clearly developing. Course assignments may use Generative AI tools if indicated in the syllabus. AI-generated content can only be presented as your own work with the instructor’s written permission. Include an acknowledgment of how generative AI has been used after your reference or Works Cited page. TurnItIn or other methods may be used to detect the use of AI. Under UTD rules about due process, referrals may be made to the Office of Community Standards and Conduct (OCSC). Inappropriate use of AI may result in penalties, including a 0 on an assignment.

Policy on using generative AI in class

Using generative AI may not save time, but it can improve quality and deepen the thought process.

Policy on using generative AI in class

UT System

Prohibits:

- University data

- Non-public data

- AI product companies sharing usage data

Policy advising:

- Adoption of AI products

- Use of AI detectors in class

- Individual faculty class policy on usage

AI in research

- Convention: Survey-driven public opinion research

  - e.g., 2024 election polls: mostly wrong

  - experiments: limited generalizability

- New era: GenAI role in public opinion research

  - Agent-based approach

  - Syndicated data approach

The design of this study is composed of three elements:

- Big data

- Generative Pre-trained Transformer (GPT) models

- Predictive Scenario Analysis

It begins with the collection of diverse datasets to build text data corpora, followed by extensive data preprocessing and feature engineering, and then predictive modeling with scenario analysis.

Illustration: GPT-based prediction

The use of GPT models facilitates a deep contextual understanding of public sentiments and perspectives. In parallel, generative data methods, particularly Generative Adversarial Networks (GANs), are employed for scenario analysis.

GPT models

Generative data methods generate synthetic data representing various hypothetical scenarios, enabling the exploration of how public opinion might shift in response to different policy changes. Sentiment analysis and opinion mining are then applied to gauge public support or opposition and to identify specific policy aspects that drive public opinion.

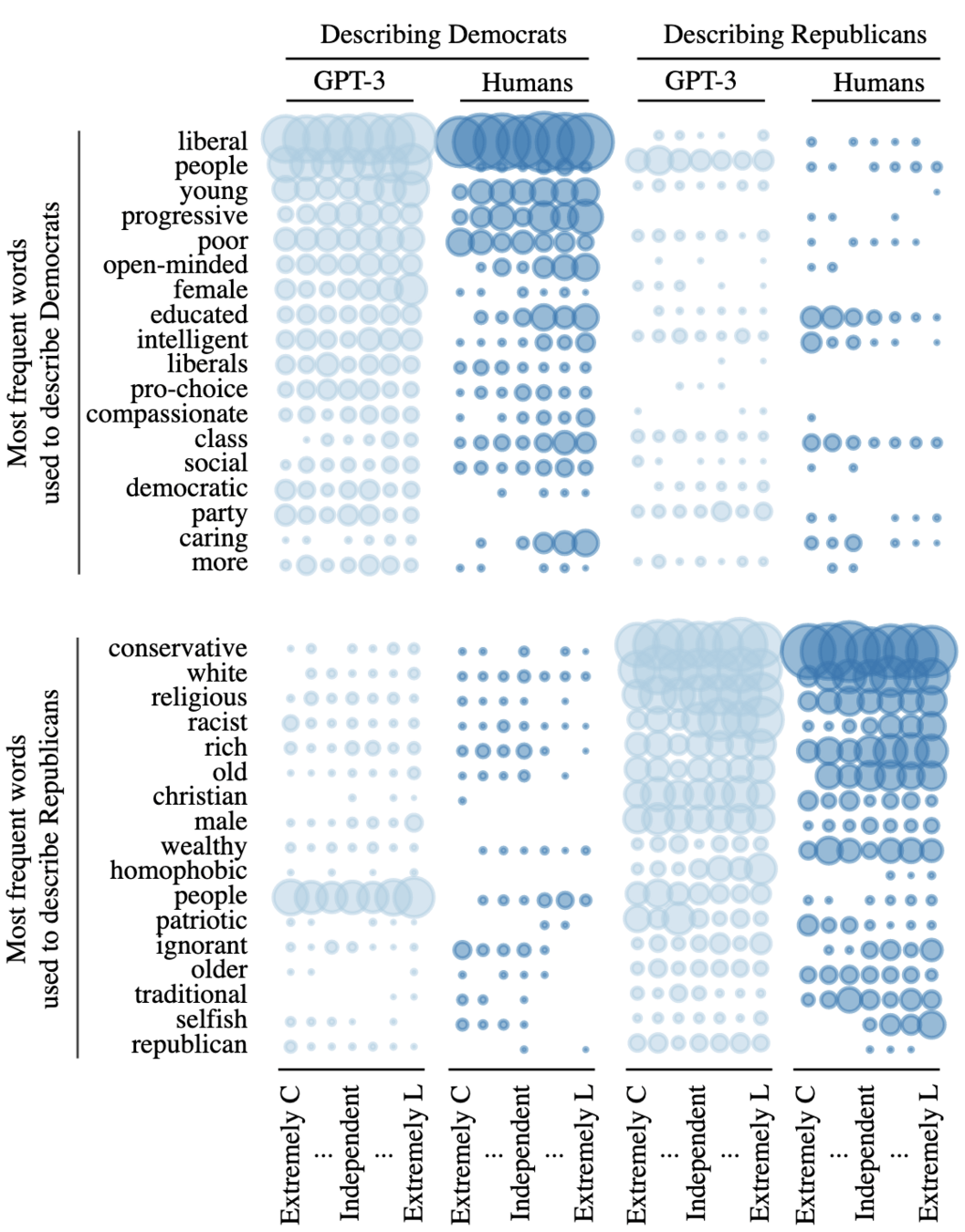

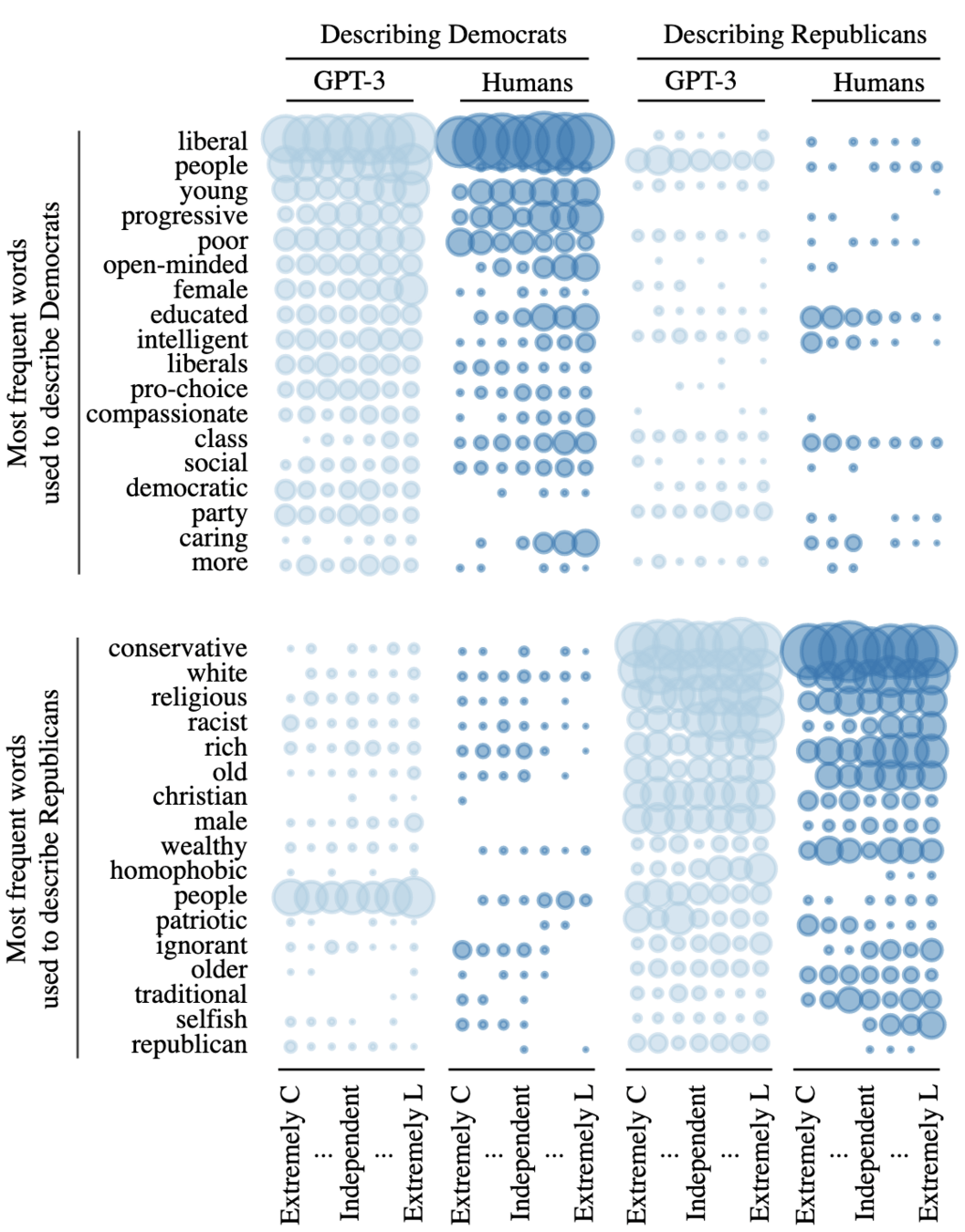

Synthetic data

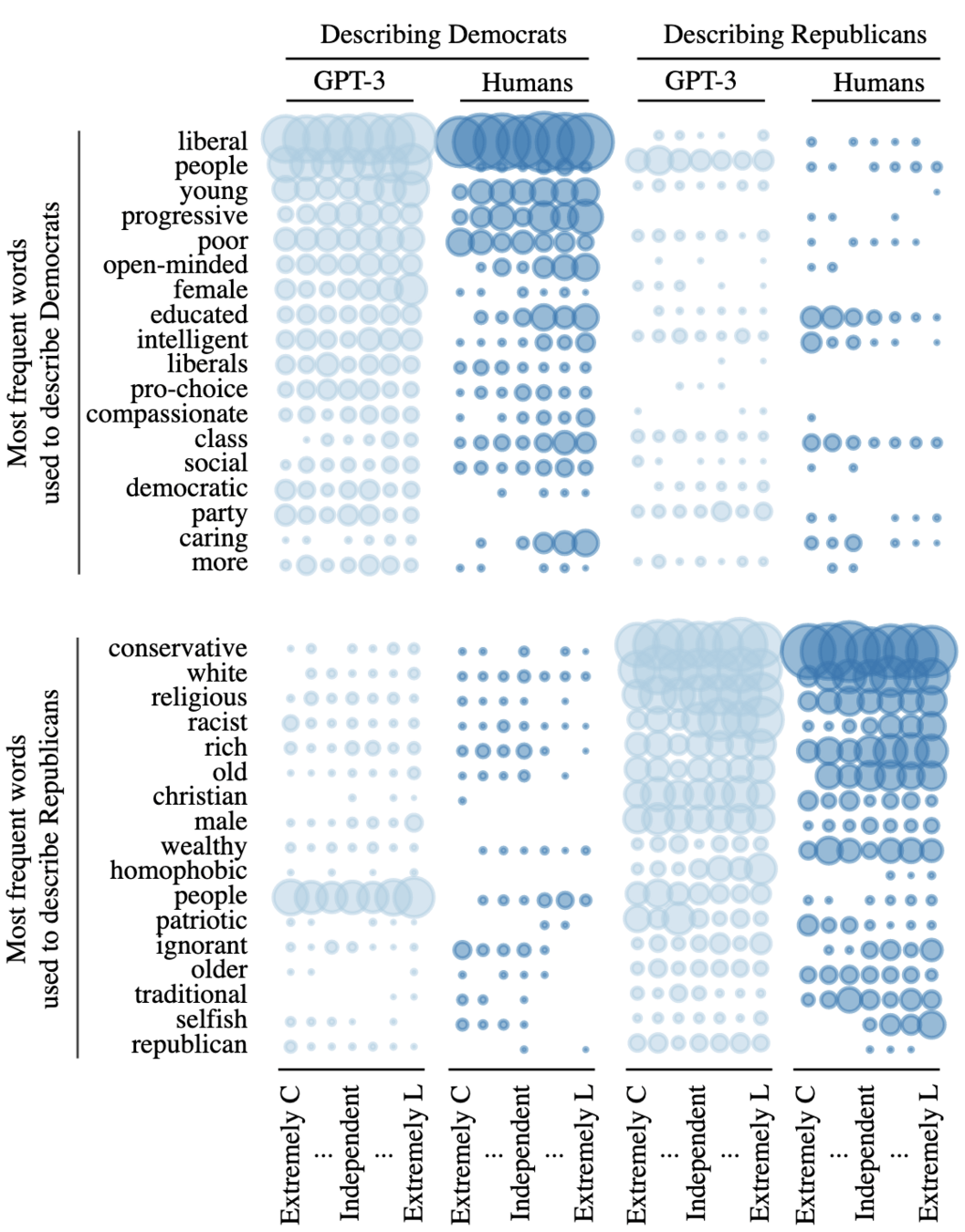

Recent studies demonstrate that GPT can be used to generate "virtual populations" through "silicon sampling" to simulate targeted human responses (Argyle et al. 2023).


Synthetic data

The study also proposes including predictive modeling and scenario forecasting, using the developed models to predict future trends in public opinion and to forecast how these might evolve under different policy scenarios. This approach not only offers a comprehensive understanding of current public sentiments but also equips policymakers with tools to align policies more closely with public preferences and concerns, thereby enhancing the effectiveness of environmental policy-making.
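Here is a minimal sketch of the forecasting idea. It assumes a monthly series of average sentiment scores already exists, fits an ordinary least-squares linear trend, and extrapolates it. An optional constant `shift` stands in for an assumed scenario effect, such as a policy change. Linear extrapolation and the `shift` parameter are simplifying assumptions for illustration; the study's actual predictive models are not specified here.

```python
def fit_trend(values):
    """Ordinary least-squares fit of values against time index 0..n-1; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    x_bar = (n - 1) / 2                       # mean of 0, 1, ..., n-1
    y_bar = sum(values) / n
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(range(n), values))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def forecast(values, horizon, shift=0.0):
    """Extrapolate the linear trend `horizon` steps ahead, adding a hypothetical scenario shift."""
    slope, intercept = fit_trend(values)
    n = len(values)
    return [intercept + slope * (n + h) + shift for h in range(horizon)]


monthly_sentiment = [0.10, 0.20, 0.30, 0.40]                       # hypothetical monthly averages
baseline = forecast(monthly_sentiment, horizon=2)                  # approximately [0.5, 0.6]
with_policy = forecast(monthly_sentiment, horizon=2, shift=-0.15)  # assumed negative policy shock
print(baseline, with_policy)
```

Comparing `baseline` with `with_policy` is the simplest form of scenario forecasting: the same fitted trend projected under different assumptions. Richer models would estimate the scenario effect from data rather than set it by hand.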

Predictive models and scenario forecasting

The following outlines the steps in building a GPT-based policy advising system that more accurately integrates public opinion into policy-making:

1. Data collection: Corpora building

2. Data Preprocessing and Feature Engineering

3. Application of GPT for Contextual Analysis

4. Sentiment Analysis and Opinion Mining

5. Generative Data Modeling for Scenario Analysis

Steps

Gather large datasets of public opinion data related to CO2 management and energy policies, including:

- Social media posts

- Survey responses

- Forum discussions

  - PTT

  - Dcard

- News comments (e.g., editorials)
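Here is a minimal Python sketch of corpora building. It assumes posts from each source have already been collected as (date, text) pairs, tags each document with its source, and drops empty or exact-duplicate posts, such as the same text reposted across forums. The `Document` structure and the source labels are hypothetical. Collecting the posts from each platform is out of scope here.

```python
import unicodedata
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    source: str  # hypothetical labels, e.g. "social_media", "survey", "ptt", "dcard", "news_comment"
    date: str    # ISO date string, "YYYY-MM-DD"
    text: str


def dedup_key(text: str) -> str:
    """Normalize text for duplicate detection only; the stored text is left unchanged."""
    # NFKC folds full-width characters (common in Traditional Chinese posts) into standard forms.
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.split()).lower()


def build_corpus(batches: dict) -> list:
    """batches maps a source label to a list of (date, text) pairs."""
    seen = set()
    corpus = []
    for source, items in batches.items():
        for date, text in items:
            key = dedup_key(text)
            if not key or key in seen:
                continue  # skip empty posts and exact duplicates after normalization
            seen.add(key)
            corpus.append(Document(source=source, date=date, text=text.strip()))
    return corpus


corpus = build_corpus({
    "ptt": [("2024-03-01", "Carbon fee  is too low"), ("2024-03-02", "carbon fee is too low")],
    "dcard": [("2024-03-05", "Support more solar power "), ("2024-03-06", "   ")],
})
# corpus holds two documents: the repeated PTT post and the blank Dcard post are dropped
```

Deduplication uses a normalized key but keeps the original wording. This way, later sentiment steps still see the text as posted.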

Step 1: Corpora building

- Preprocess the data for NLP tasks.

- Perform feature engineering to identify key variables influencing public opinion, such as:

  - sentiments

  - slow-onset events and extreme weather events

  - frequency of policy mentions

  - other factors such as demographics

- Utilize NLP techniques to extract and construct meaningful features from unstructured data.
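The feature-engineering step can be sketched as follows. For readability, the example assumes English text; Chinese posts from PTT or Dcard would first need word segmentation. The keyword lists are hypothetical placeholders, and a real study would derive them from the corpus and from domain experts. The sketch counts policy mentions, flags references to extreme weather events, and attaches any demographic fields supplied with the post.

```python
import re

# Hypothetical keyword lists for illustration only.
POLICY_TERMS = {"carbon fee", "carbon tax", "renewable", "nuclear", "net zero"}
WEATHER_TERMS = {"typhoon", "drought", "heatwave", "flood"}


def tokenize(text: str) -> list:
    """Lowercase and keep alphabetic tokens (a stand-in for a real tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())


def count_terms(text: str, terms: set) -> int:
    """Count whole-word or whole-phrase occurrences of each term."""
    joined = " ".join(tokenize(text))
    return sum(len(re.findall(rf"\b{re.escape(t)}\b", joined)) for t in terms)


def extract_features(text: str, demographics=None) -> dict:
    features = {
        "n_tokens": len(tokenize(text)),
        "policy_mentions": count_terms(text, POLICY_TERMS),
        "weather_event": count_terms(text, WEATHER_TERMS) > 0,
    }
    if demographics:
        features.update(demographics)  # e.g. age group or region, if available
    return features


row = extract_features(
    "After the typhoon, the carbon fee and renewable targets matter more.",
    demographics={"age_group": "30-39"},
)
print(row)  # → {'n_tokens': 11, 'policy_mentions': 2, 'weather_event': True, 'age_group': '30-39'}
```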

Step 2: Data Preprocessing and Feature Engineering

- Use GPT-based models for deep contextual analysis of public opinions, understanding underlying sentiments and perspectives.

- Build context for conditioning in silicon sampling.
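To make "conditioning" concrete: in silicon sampling (Argyle et al. 2023), a language model receives a first-person backstory built from a respondent profile and is then asked a survey question. The sketch below only builds such prompts. The persona fields, the wording, and the question are hypothetical. The call to an actual GPT model is intentionally left out because it depends on the provider and model used.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    # Hypothetical profile fields; a real study would draw these from survey or census data.
    age: int
    gender: str
    occupation: str
    city: str
    political_identity: str


def backstory(p: Persona) -> str:
    """First-person context used to condition the model on one simulated respondent."""
    return (f"I am a {p.age}-year-old {p.gender} {p.occupation} living in {p.city}. "
            f"Politically, I describe myself as {p.political_identity}.")


def silicon_prompt(p: Persona, question: str, options: list) -> str:
    """Combine the backstory with a closed-ended survey question."""
    return (f"{backstory(p)}\n"
            f"Question: {question}\n"
            f"Options: {', '.join(options)}\n"
            f"My answer:")


personas = [
    Persona(34, "female", "teacher", "Taichung", "an independent"),
    Persona(61, "male", "farmer", "Changhua", "a moderate"),
]
prompts = [
    silicon_prompt(p, "Do you support raising the carbon fee?", ["Support", "Oppose", "Not sure"])
    for p in personas
]
print(prompts[0])
```

Ending each prompt with "My answer:" leaves the model to complete a single response on behalf of the persona. Repeating this over many personas, drawn to match a target population, yields the simulated "virtual population" described earlier.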

Step 3: Application of GPT for Contextual Analysis

- Conduct temporal sentiment analysis to gauge public support or opposition over time.

- Use opinion mining to identify specific policy aspects that are most influential in public opinion.
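Below is a toy version of temporal sentiment analysis and aspect-level opinion mining. In place of the GPT-based classification described above, it deliberately substitutes a tiny hand-made word lexicon, which keeps the aggregation logic easy to follow (average sentiment per month and per policy aspect). The lexicon, the aspects, and the example posts are all hypothetical.

```python
import re
from collections import defaultdict
from statistics import mean

# Tiny hypothetical lexicon; a real pipeline would use a GPT-based or trained classifier.
POSITIVE = {"support", "good", "benefit", "clean", "agree"}
NEGATIVE = {"oppose", "bad", "cost", "unfair", "against"}


def score(text: str) -> int:
    """Positive minus negative word count."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def monthly_sentiment(docs):
    """docs: iterable of (iso_date, text). Returns {"YYYY-MM": mean score}, ordered by month."""
    by_month = defaultdict(list)
    for date, text in docs:
        by_month[date[:7]].append(score(text))
    return {month: mean(scores) for month, scores in sorted(by_month.items())}


def aspect_sentiment(docs, aspects):
    """Mean score of the documents that mention each policy aspect keyword."""
    by_aspect = defaultdict(list)
    for _, text in docs:
        s = score(text)
        for aspect in aspects:
            if aspect in text.lower():
                by_aspect[aspect].append(s)
    return {aspect: mean(scores) for aspect, scores in by_aspect.items()}


posts = [
    ("2024-01-10", "I support the carbon fee"),
    ("2024-01-20", "The cost is unfair"),
    ("2024-02-03", "Clean energy is good"),
]
print(monthly_sentiment(posts))                           # → {'2024-01': -0.5, '2024-02': 2}
print(aspect_sentiment(posts, ["carbon fee", "energy"]))  # → {'carbon fee': 1, 'energy': 2}
```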

Step 4: Sentiment Analysis and Opinion Mining

- Employ generative models like GANs to create synthetic data representing various hypothetical public opinion scenarios under different policy conditions, especially in contexts with limited data (few-shot learning).

- Analyze how public opinion might shift in response to changes in CO2 management and energy policies.
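The scenario idea can also be illustrated without a GAN. The sketch below is a deliberately simple parametric stand-in. It draws synthetic respondents from a logistic model of support for a carbon fee, in which higher fees lower support and recent exposure to extreme weather raises it, and it reports the simulated support share under different fee scenarios. All coefficients, the 0-10 fee index, and the exposure rate are hypothetical. A GAN or another deep generative model would instead learn the joint distribution of responses from real data.

```python
import math
import random

# Hypothetical log-odds coefficients, not estimates from any real data.
BASELINE = 0.4         # log-odds of support at fee level 0 without weather exposure
FEE_EFFECT = -0.15     # change in log-odds per step on a 0-10 fee index
WEATHER_EFFECT = 0.8   # added log-odds for respondents recently exposed to extreme weather


def support_probability(fee_level: float, weather_exposed: bool) -> float:
    """Logistic model of the probability that one respondent supports the policy."""
    logit = BASELINE + FEE_EFFECT * fee_level + (WEATHER_EFFECT if weather_exposed else 0.0)
    return 1.0 / (1.0 + math.exp(-logit))


def simulate_scenario(fee_level: float, n: int = 10_000,
                      exposure_rate: float = 0.3, seed: int = 0) -> float:
    """Draw n synthetic respondents and return the share who support the policy."""
    rng = random.Random(seed)  # fixed seed so scenarios are reproducible
    supporters = 0
    for _ in range(n):
        exposed = rng.random() < exposure_rate
        if rng.random() < support_probability(fee_level, exposed):
            supporters += 1
    return supporters / n


for fee in (0, 5, 10):
    print(f"fee index {fee}: simulated support {simulate_scenario(fee):.1%}")
```

Because every scenario uses the same random seed, the differences between scenarios reflect the assumed policy effect rather than sampling noise.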

Step 5: Generative Data Modeling for Scenario Analysis

- This proposal lays out a future roadmap toward a comprehensive understanding of public sentiment and opinion movements.

- Challenges:

  - Fine-tuning

  - High computational cost of models

  - Spurious features in training data (e.g., fake news, biased surveys)

Expected Outcomes and Challenges

- Project Significance:

  - New AI technology allows a better understanding of public opinion via GPT and predictive scenario analysis.

  - It can significantly enhance environmental policy-making.

  - It helps stakeholders align policies with true, real-time public preferences.

Conclusion

- Q: Why not just use surveys?

  - A: Surveys are snapshots of public opinion, which can change over time and under different scenarios (e.g., ECFA). GPT-based methods can scale and improve over time, giving consistent metrics for evaluating biases and for distinguishing stable from precarious public opinion.

- Q: Can AI methods be absolutely correct?

  - A: There is always a part of public opinion we can never fully measure or understand. With AI assistance, we can learn more about this sub-population over time.

Q & A

- AI-driven Data Collection

- Data Preprocessing and Feature Engineering

- Application of GPT for Contextual Analysis

- Generative Data Modeling for Scenario Analysis

- Sentiment Analysis and Opinion Mining

- Predictive Modeling and Scenario Forecasting

- Interactive Dashboard and Visualization

Directions

Thank you!

NCHU & UTD Dual degree in Data Science

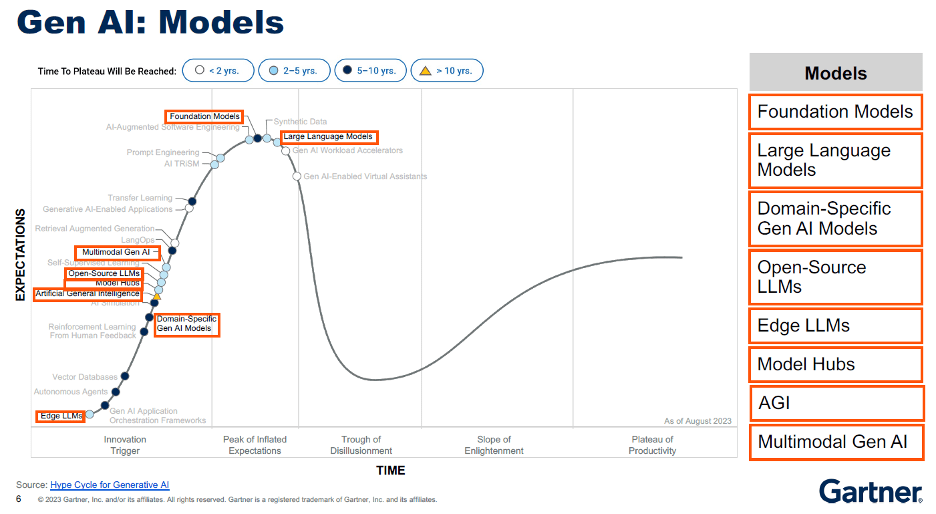

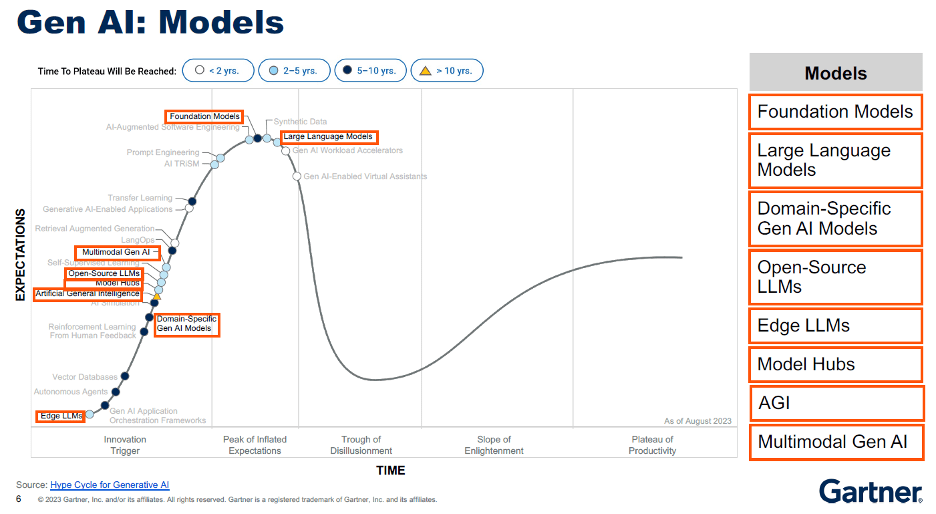

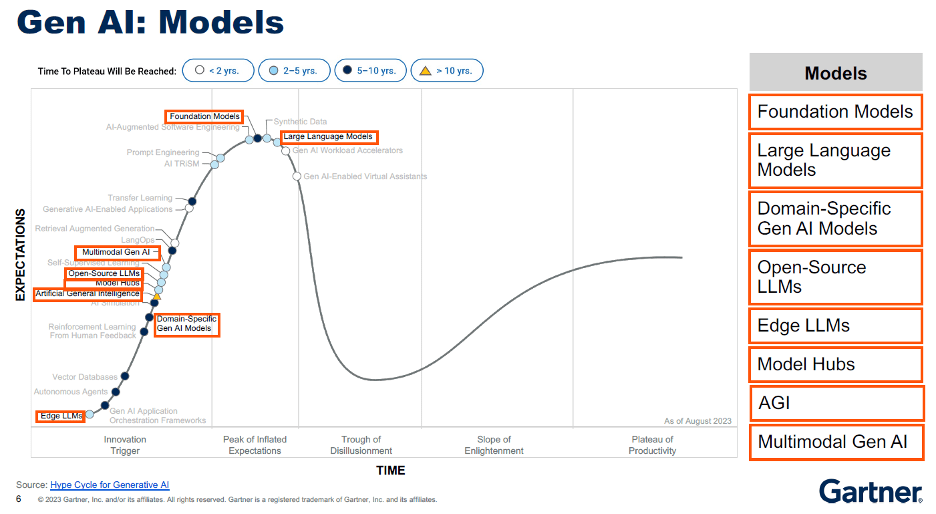

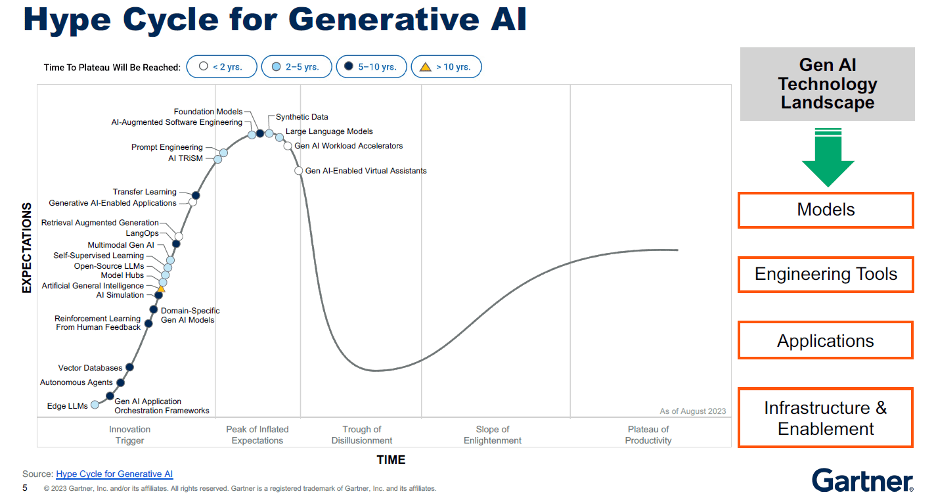

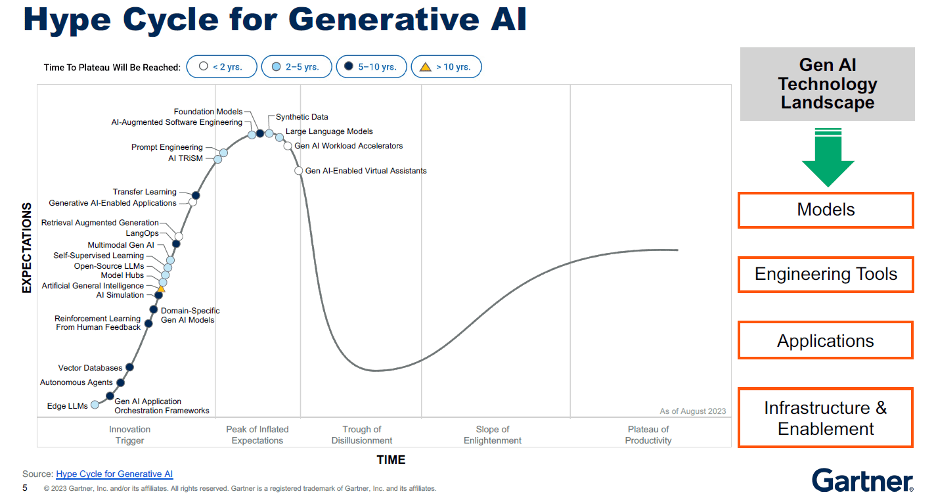

What is Generative AI (GenAI)?

Generative AI is a subset of artificial intelligence that focuses on creating new content such as text, images, videos, and audio in response to user prompts. This technology leverages sophisticated machine learning models, particularly deep learning models, to simulate human-like creativity and decision-making processes by identifying patterns in large datasets (Amazon Q, IBM, Lawton).

What is Generative AI (GenAI)?

History

- Generative AI's roots trace back to the 1960s with early chatbots.

- Significant advancements came in 2014 with the development of Generative Adversarial Networks (GANs), which enabled the creation of realistic images and audio.

- The introduction of transformer-based models in 2017 further propelled generative AI's capabilities, leading to large language models like GPT-3, which can generate coherent text from minimal input.

What is Generative AI (GenAI)?

Applications

Generative AI is widely used across various fields:

Content Creation: Tools like ChatGPT and DALL-E generate text and images, respectively, aiding in creative writing, graphic design, and marketing.

Software Development: AI models assist in coding by generating code snippets or translating between programming languages.

Healthcare: In drug discovery, generative AI helps design new molecular structures.

Entertainment: It is used for creating virtual simulations and special effects in video games and movies.

What is Generative AI (GenAI)?

Future Developments

- Advancements in multimodal models that can process and generate multiple types of content simultaneously.

- Personalized content creation and more sophisticated virtual assistants.

- User interfaces more accessible to non-experts, expanding use across industries.

What is Generative AI (GenAI)?

Sources

[1] What is Generative AI? - Gen AI Explained - AWS https://aws.amazon.com/what-is/generative-ai/

[2] What is Generative AI? - IBM https://www.ibm.com/topics/generative-ai

[3] What is Gen AI? Generative AI Explained - TechTarget https://www.techtarget.com/searchenterpriseai/definition/generative-AI

[4] Generative artificial intelligence - Wikipedia https://en.wikipedia.org/wiki/Generative_artificial_intelligence

[5] What Is Generative AI? Definition, Applications, and Impact - Coursera https://www.coursera.org/articles/what-is-generative-ai

[6] generative AI Definition & Meaning - Merriam-Webster https://www.merriam-webster.com/dictionary/generative%20AI

[7] Explained: Generative AI | MIT News https://news.mit.edu/2023/explained-generative-ai-1109

[8] What is generative AI? - IBM Research https://research.ibm.com/blog/what-is-generative-AI