Software Engineering Research

LUCAS CORDOVA, PH.D.

he/él, him, his


Education

- Ph.D. Software Engineering

- M.S. Software Development & Management

- B.S. Computer Science

Teaching experience

- Western Oregon University (2019 - Present)

- Oregon Institute of Technology (2016 - 2019)

Industry & teaching skills

- Distributed computing

- Web development

- Mobile development

- Cloud computing / DevOps

- Database design

- OS development

- Project / program management

- Project-based courses

- Cross-program teaching: CS, MIS, IS, IT, and Data Analytics.


What we'll discuss today

Research interest areas:

- Software testing pedagogy

- Cross-disciplinary app development

- Academic Innovation fellowship research

- Other research interests

Don't hesitate to interrupt and ask questions!

1. Software testing pedagogy

Investigate how to improve students' ability to build higher-quality software.

Industry reality

The National Institute of Standards and Technology found that software developers spend an average of 70-80% of their time debugging and take 17.4 hours to fix a bug, costing the US economy over $113 billion annually [1].

CS education reality

Many students today are graduating with a knowledge gap in software testing, leading them to resort to trial and error while programming and debugging [2].

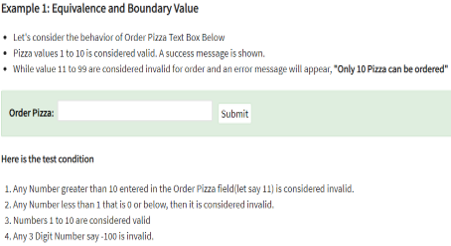

Student misconceptions

Early CS students often limit their testing efforts due to these misconceptions.

- If code achieves a clean compile, there are no mistakes in the code [5].

- When code passes a few test cases (e.g., those provided by the instructor), it is correct [5, 6].

- When code looks "correct" but gives an incorrect answer, there is no way to understand why.

- Playing with the code by switching things around will fix the problem [5].

- Code coverage tools alone provide the information necessary to improve their test suites [7].

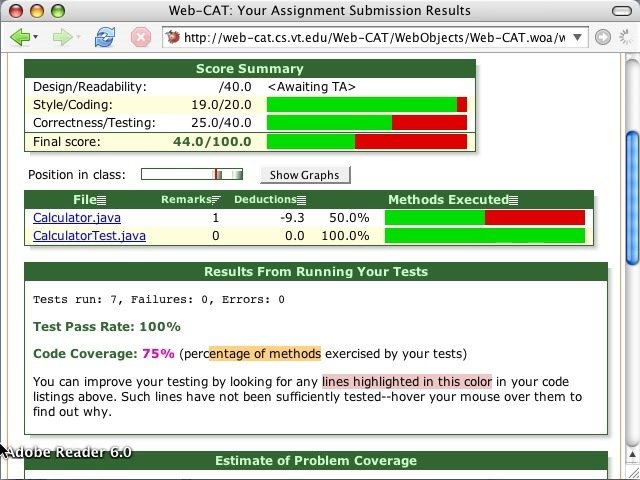

CS education challenges

- Conventional programming assignments typically only evaluate the end-product of students' work.

- Instructors usually have little visibility into whether students follow the intended development processes.

- It is impractical for instructors to manually review every student's work often enough to understand their development processes and to intervene when necessary.

- Current real-time automated assessment tools (e.g., Web-CAT, Gradescope) tend to provide only numerical or binary (pass/fail) feedback.

Issues with current tools

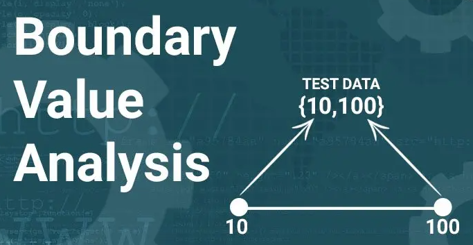

An alternative approach

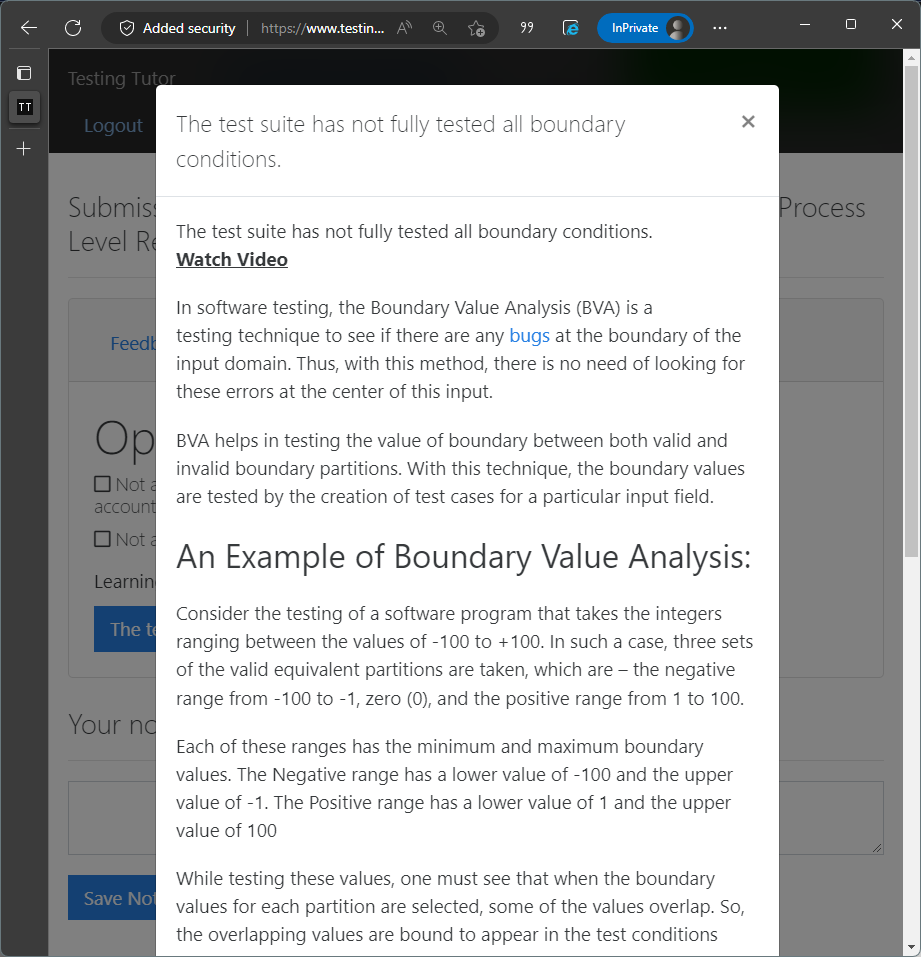

Provide students with Conceptual Feedback: feedback that tells students which fundamental testing concepts their test suite does not cover, and offers hints and resources so they can start their own learning process.

"The test suite has not fully tested all boundary conditions."

"The test suite misses part of a compound Boolean expression."
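To make the two example messages concrete, here is a minimal Python sketch. The function `eligible_for_discount`, its "members aged 18 to 65" rule, the two buggy variants, and both test suites are hypothetical illustrations invented for this example. They are not Testing Tutor code and do not show how Testing Tutor generates its feedback. The "weak" suite executes every line and checks both a True and a False result. Even so, it never probes the edges of the age range and never makes the membership part of the compound Boolean expression False, so it cannot distinguish the correct function from two plausible student bugs.

```python
"""Hypothetical illustration of the two conceptual-feedback messages.

Nothing here is Testing Tutor code; the function, its age rule, and the
test suites are invented for this example.
"""


def eligible_for_discount(age: int, is_member: bool) -> bool:
    """Hypothetical rule: members aged 18 through 65 (inclusive) qualify."""
    return is_member and 18 <= age <= 65


def buggy_upper_bound(age: int, is_member: bool) -> bool:
    """Plausible student bug: '<' instead of '<=' on the upper bound."""
    return is_member and 18 <= age < 65


def buggy_ignores_membership(age: int, is_member: bool) -> bool:
    """Plausible student bug: drops one part of the compound Boolean."""
    return 18 <= age <= 65


# Each test case is ((age, is_member), expected_result).
# Weak suite: runs every line and sees both True and False results, but never
# tests an age at or next to a limit and never sets is_member to False.
WEAK_SUITE = [
    ((30, True), True),
    ((10, True), False),
]

# Stronger suite: on-boundary and just-outside ages for both limits, plus a
# case where only the membership part of the expression is False.
STRONG_SUITE = [
    ((17, True), False),
    ((18, True), True),
    ((65, True), True),
    ((66, True), False),
    ((30, False), False),
]


def failing_cases(func, suite):
    """Return the inputs whose actual result differs from the expected one."""
    return [args for args, expected in suite if func(*args) != expected]


if __name__ == "__main__":
    for func in (eligible_for_discount, buggy_upper_bound, buggy_ignores_membership):
        print(func.__name__,
              "weak:", failing_cases(func, WEAK_SUITE),
              "strong:", failing_cases(func, STRONG_SUITE))
```

Both buggy variants pass the weak suite with no failures. The stronger suite flags `(65, True)` for the off-by-one upper bound ("has not fully tested all boundary conditions") and `(30, False)` for the dropped membership check ("misses part of a compound Boolean expression").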

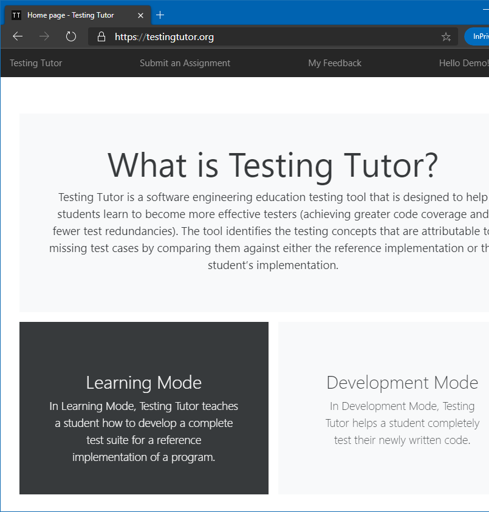

Testing Tutor

- An intervention tool that uses Inquiry-Based Learning (IBL).

- Students submit their source and test code, and they instantly receive conceptual feedback.

- A tailored feedback engine allows instructors to customize feedback.

https://testingtutor.org

Empirical validation

Students who received Conceptual Feedback produced higher-quality test suites than those who received standard code coverage feedback in learning mode [7].

- 32% higher overall code coverage (statement, branch, conditional).

- 50% fewer redundant tests.

- 27% higher instructor grades.

- Concepts were retained after the conceptual feedback treatment was removed.

Current work

Supported by a National Science Foundation IUSE multi-institution level-2 grant: "Collaborative research: Testing Tutor - An Inquiry-Based Pedagogy and Supporting Tool to Improve Student Learning of Software Testing Concepts".

- Support and train students in software development and testing.

- Added support for Python and C#.

- Improved Java and file-handling support.

- C++ support in progress.

- IDE and GitHub integration in progress.

- Currently running studies at North Dakota State University and Georgia Southern University.

2. Software development methodologies in project-based courses

Investigate how to apply industry software development methodologies to project-based courses.

Industry-aligned curriculum

CS educators are incorporating Agile processes into courses to prepare students for industry, where Agile has become mainstream.

Agile in CS education reality

It is difficult to model a true industry setting due to academic constraints.

Academic constraints

Constraints on students, instructors, and time make it very difficult to model an industry setting.

- Students are unable to contribute to a project at full capacity.

- Instructors are also constrained by other classes and responsibilities.

- Terms are too short to experience enough of the project lifecycle.

Grant opportunity

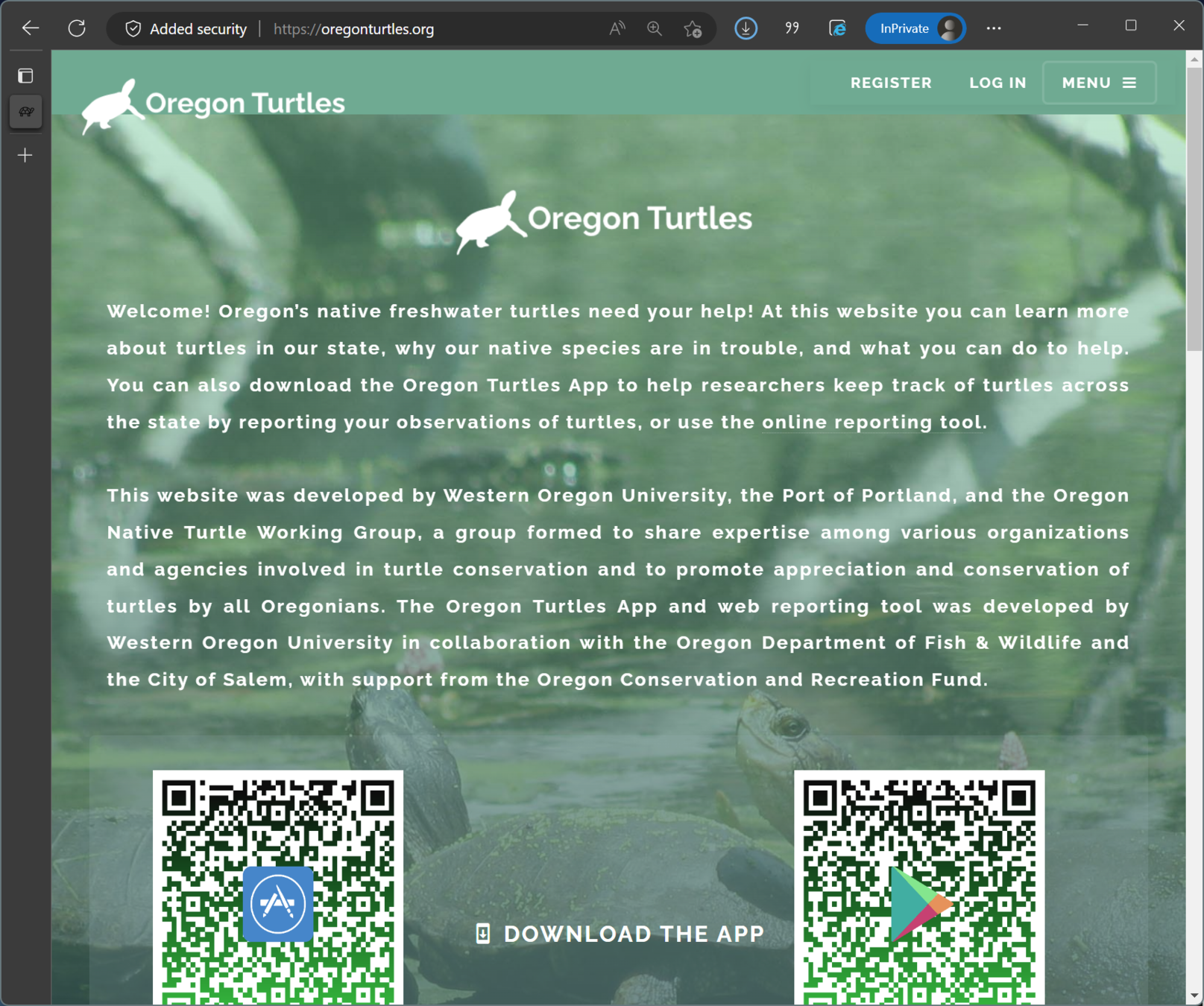

The Oregon Conservation and Recreation Fund awarded a cross-disciplinary grant for Biology and CS research and development in support of turtle conservation.

- Supports biological research and public activism for the conservation of Oregon's freshwater turtle population.

- A portion of the grant funds the development of web and mobile apps for biologists and the public.

- Partnerships created with multiple Oregon agencies.

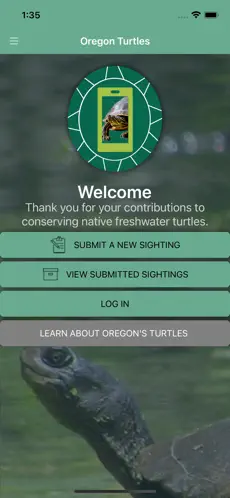

Oregon Turtles

- Web and mobile apps for recreationalists to submit turtle sightings.

- Mobile apps for biologists to conduct basking and nesting surveys.

- Data analysis of sighting and survey data.

www.oregonturtles.org

CS education and research

- Train students in web and cross-platform app development across multiple terms.

- Investigate the application of agile development on a real-world project in an educational setting.

- Data source for the Data Analytics program.

3. Academic Innovation fellowship

Investigating the use of alternative grading systems in computing courses.

Issues with traditional grading

Traditional grading systems can be a poor feedback mechanism and can reinforce inequities.

- Receiving and integrating feedback is an essential part of the learning process, but because grades are retrospective, they serve as a poor feedback mechanism.

- Students from different demographic groups experience very different outcomes in terms of both grades and college completion rates [8].

Alternative grading systems

Development of an alternative grading system framework for computing courses.

- Combining aspects of contract-based, specification-based, and labor-based grading systems into a single framework.

- Investigating the application of the framework to other disciplines.

- Designing methods to integrate the framework into points-based LMSs.

4. Other research endeavors and interests

Experience of minorities in computing

Understanding the experience of minorities in computing education and industry.

- Using qualitative research methods to understand the experiences of minorities in computing education, and implementing processes that promote better experiences in both academia and industry.

Metaverse in education

Investigating how the metaverse will impact computing education in the future.

- Augment the classroom with fully immersive and multimedia environments that leverage both the physical and digital world.

- Making education more gamified.

- Ensuring that the metaverse does not contribute further to the digital divide.

AI in LMS

Integrating AI chatbots into the LMS course room to improve the student experience and increase student engagement.

- Most AI chatbots in education are used in upstream processes (admissions, financial aid).

- There is a gap in the literature on their use in the LMS course room and in computing courses.

- Use ML and NLP so the chatbot improves over time.

- Provide data analytics that let instructors gauge student engagement and identify opportunities to intervene when concepts need to be reinforced.

Grant applications in progress

- NSF S-STEM proposal focused on transfer students entering the Data Analytics major.

- Next level of NSF IUSE funding for Testing Tutor.

Thank you!

LUCAS CORDOVA

lpcordova@outlook.com

These slides are available at:

References

[1] E. Jones, “Sprae: A framework for teaching software testing in the undergraduate curriculum,” in Proceedings of the Symposium on Computing at Minority Institutions, 2000.

[2] L. Osterweil, "Strategic directions in software quality," ACM Computing Surveys, vol. 28, no. 4, pp. 738–750, Dec. 1996. [Online]. Available: http://doi.acm.org/10.1145/242223.242288

[3] T. Shepard, M. Lamb, and D. Kelly, “More testing should be taught,” Commun. ACM, vol. 44, no. 6, pp. 103–108, Jun. 2001.

[4] G. Tassey, “The economic impacts of inadequate infrastructure for software testing,” National Institute of Standards and Technology, Tech. Rep. 7007.011, 2002.


[5] S. H. Edwards, "Using software testing to move students from trial-and-error to reflection-in-action," in Proceedings of the 35th SIGCSE Technical Symposium on Computer Science Education, 2004, pp. 26–30.

[6] R. E. Noonan and R. H. Prosl, “Unit testing frameworks,” in Proceedings of the 33rd SIGCSE Technical Symposium on Computer Science Education, 2002, pp. 232–236.

[7] K. Buffardi and S. H. Edwards, "Reconsidering automated feedback: A test-driven approach," in Proceedings of the 46th ACM Technical Symposium on Computer Science Education, 2015, pp. 416–420.