Software Testing

We are all doing it wrong

As ironic as it seems, the challenge of a tester is to test as little as possible. Test less, but test smarter.

— Federico Toledo

What are we doing wrong?

In short, Open Source projects have few resources available to them, which pushes their testing toward simple, low-level code. Enterprises often have many resources available, which allows complacent teams to spend too much time testing higher-level services while ignoring or undervaluing low-level testing.

There is often* an imbalance in testing in both Enterprise Software Development and Open Source Software Development.

- Enterprise teams often have access to end-to-end environments which are used for long-running tests.

- Open Source Projects rarely have an end-to-end environment.

- Open Source Projects focus on short-lived low-level testing.

- Enterprise teams oftentimes over-index on high-level service testing.

* Note: This pattern may not apply everywhere, especially within large companies where practices may differ from team to team.

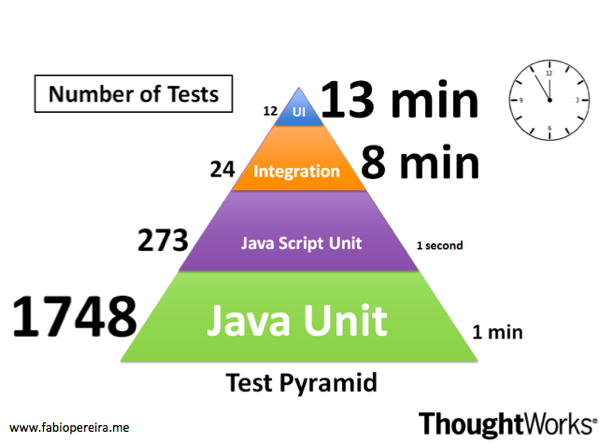

Obligatory Test Pyramid Slide

The premier tool for finding the right balance of software testing is the coveted "Test Pyramid."

Test Pyramid Takeaways:

- Unit tests should be plentiful

- Functional Tests (Integration, E2E) should be fewer

- The lower end of the pyramid is easier to create, more stable, and faster to run.

- The top of the pyramid gives you more confidence.

The Test Pyramid is a great guide, but software development has changed since its creation.

Finding Balance

How I Break Down Testing*

When finding the right balance of testing, especially in a backend microservices context, I break testing down into four categories.

- Low-Level Unit Testing

- Low-Level Functional Testing

- Service-Level Functional Testing

- Platform-Level Functional Testing

* Focused on a Backend Microservices world

Low-Level "Unit" Testing

This level of testing, often referred to as Unit testing, is the lowest level possible. It focuses on testing a single method or function and verifying what each call returns.

```go
import "testing"

func TestNewCreation(t *testing.T) {
	// An empty Config is invalid, so New is expected to return an error.
	_, err := New(Config{})
	if err == nil {
		t.Errorf("New should have errored when Config is empty")
	}
}
```

- Focused on Method & Function input/output testing

- Can use internal Mocks

- Can call dependent services using Docker/Minikube

- Easy to run locally or anywhere
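To illustrate the "internal Mocks" point, here is a minimal sketch of a table-driven unit test. `Store`, `Lookup`, `ErrNotFound`, and `mockStore` are hypothetical names invented for this example: the mock is an in-memory map that satisfies the same interface as the real dependency, so the test checks only the function's input/output behavior. It is written as a single `package main` file so it compiles on its own; in a real project, the mock and test would live in a `_test.go` file.

```go
package main

import (
	"errors"
	"testing"
)

// ErrNotFound is returned by a Store when a key does not exist.
var ErrNotFound = errors.New("not found")

// Store is a hypothetical dependency of the code under test.
type Store interface {
	Get(key string) (string, error)
}

// Lookup is a hypothetical function under test. It returns the stored value
// for key, falling back to "default" when the key does not exist.
func Lookup(s Store, key string) (string, error) {
	v, err := s.Get(key)
	if errors.Is(err, ErrNotFound) {
		return "default", nil
	}
	if err != nil {
		return "", err
	}
	return v, nil
}

// mockStore is an internal mock: an in-memory map replaces the real store.
type mockStore map[string]string

func (m mockStore) Get(key string) (string, error) {
	if v, ok := m[key]; ok {
		return v, nil
	}
	return "", ErrNotFound
}

// TestLookup is table-driven: each row is one input/output check.
func TestLookup(t *testing.T) {
	store := mockStore{"a": "1"}
	tests := []struct{ name, key, want string }{
		{"existing key", "a", "1"},
		{"missing key falls back to default", "b", "default"},
	}
	for _, tt := range tests {
		t.Run(tt.name, func(t *testing.T) {
			got, err := Lookup(store, tt.key)
			if err != nil {
				t.Fatalf("unexpected error: %s", err)
			}
			if got != tt.want {
				t.Errorf("Lookup(%q) = %q, want %q", tt.key, got, tt.want)
			}
		})
	}
}
```

Adding a case is one more row in the table, which keeps this level cheap to grow.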

Low-Level Functional Testing

This type of testing is technically functional testing, but it leverages the same testing frameworks as unit testing. The goal is to test the basic functionality of the service while maintaining fine-grained control of the tests.

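```go
import (
	"net/http"
	"testing"
)

func TestAPI(t *testing.T) {
	// Start the service under test; app.Start is assumed to run the
	// server in the background and return a handle to it.
	server, err := app.Start(Config{...})
	if err != nil {
		t.Fatalf("unable to start service: %s", err)
	}
	// Shut the service down after all subtests finish.
	defer server.Shutdown()

	t.Run("Verify GET on /", func(t *testing.T) {
		// example.com stands in for the address the service listens on.
		r, err := http.Get("https://example.com")
		if err != nil {
			t.Fatalf("GET / failed: %s", err)
		}
		defer r.Body.Close()

		// Verify the response (status code shown; check the body as needed).
		if r.StatusCode != http.StatusOK {
			t.Errorf("expected 200 OK, got %d", r.StatusCode)
		}
	})
}
```

- Focused on a single service's external interfaces

- Can still use internal Mocks

- Can call dependent services using Docker/Minikube

- Easy to run locally or anywhere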

Service-Level Functional Testing

Focused more on external functionality, this type of testing is similar to low-level functional testing. The major difference is that this type of testing can act as an external dependency and verify the tested service's actions.

```gherkin
Feature: Check Status
  In order to know my service is up
  As a caller of my microservice
  I need to be able to call /ready

  Scenario: /ready returns 200
    Given Ready is true
    When I call /ready
    Then we should get a 200 ok
```

- Focused on testing actions when provided with given input

- Opportunity to leverage BDD frameworks

- Can't use internal Mocks, but can act as a Service Mock

- Can call dependent services

- Able to run locally

Platform-Level Functional Testing

Focused on testing the functional interactions for an end-to-end platform. This type of testing is useful for verifying how the overall system behaves with various inputs and scenarios.

```gherkin
Feature: Run Transaction
  In order to know my service is working
  As a caller of my microservice
  I need to be able to call /transaction

  Scenario: /transaction returns 200
    Given we call Transaction API
    When I call /transaction
    Then we should get a 200 ok
```

- Focused on testing interactions between multiple services within a platform

- Opportunity to leverage BDD frameworks

- Service Mocks are limited to non-Platform dependencies

- Code can call dependent services

- Harder, but still possible to run locally

Which Tests Should I Focus On?

While not exactly a pyramid, these types of testing call for different levels of focus. The goal is to balance low-level and high-level testing rather than relying on a single level.

| Testing Type | Amount of Testing | Comment |
|---|---|---|
| Low-Level "Unit" Testing | High | Highest value with the least amount of effort; focus heavily on this level. |
| Low-Level Functional Testing | High | Similar type of testing as Service-Level, but with less complexity. |
| Service-Level Functional Testing | Low | Reserve this testing for things that need a lot of external coordination/validation. |
| Platform-Level Functional Testing | Medium | Test the end-to-end system, but don't neglect the previous levels. |

Effortless Testing

Creating a better Testing Experience

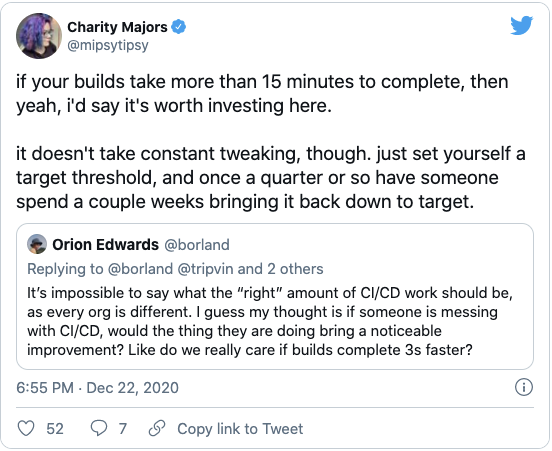

Software Engineering has changed over the last few years. The capabilities that enable testing have advanced, giving more flexibility and speed. But the expectations of Engineers have grown as well. Long gone are the days when it was acceptable for software testing to take several days to complete.

Today, modern software testing should feel effortless and be built into the build pipeline. Below are the characteristics expected of today's tests.

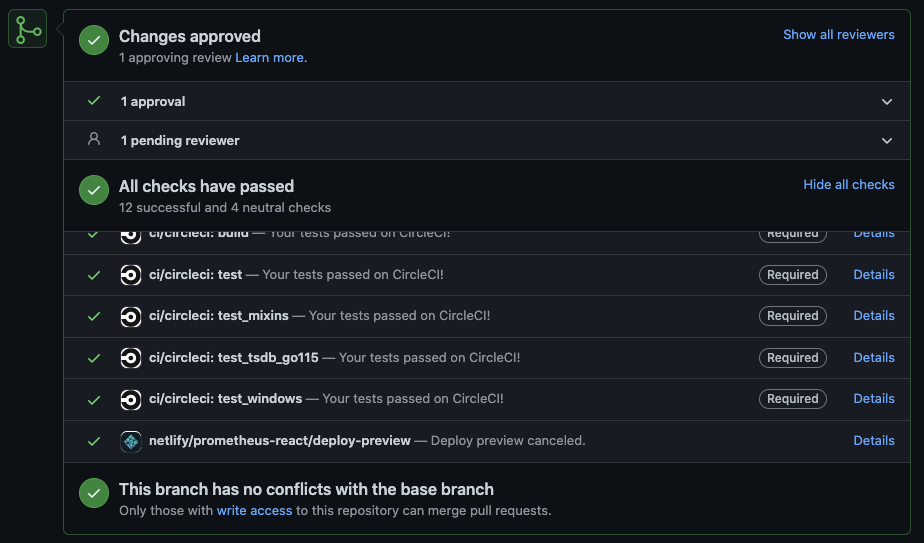

- Pull Requests should trigger full test execution.

- Builds should take no longer than 15 to 30 minutes.

- Tests should run the same on a local machine as they do within the CI/CD pipeline.

- Build & Test Environments should be Ephemeral; setup before a run and teardown after it should be automatic.

Knowing the Quality result before merge

A practice that Open Source communities do well is ensuring that all software testing occurs as part of each pull request.

The goal should be to perform as much testing as possible before the code is merged.

For low-level testing, this is easy to accomplish; high-level testing is a bit more complex. For many teams, it means creating dynamic environments as part of the CI/CD process.

Dynamic Environments

When doing Platform-Level end-to-end testing, you must run both the service being changed and every other service that makes up the platform.

Dynamic Environments can be solved in many different ways, sometimes simple, sometimes complex:

- ✅ Services such as CircleCI, GitHub Actions, GitLab CI, etc. all allow users to run multiple containers as part of their build environments

- ✅ Some teams may use Docker Compose or Minikube to orchestrate running services in the build

- ⚠️ Other teams build complex automation to create and deploy whole platforms to test Kubernetes clusters, making for some very complex build pipelines

Regardless of how Dynamic Environments are created, the most successful ones are those that Engineers can run on their local machines just as easily as in their build pipelines.

Faster Build Times

With all of the different testing occurring as part of the build, it is important to be mindful of how long a build takes.

A faster build encourages smaller, more succinct changes. But with all of these different tests and validations running on a build, it is easy to let build times increase.

This doesn't mean we should test less, but rather that we should optimize how we test: run tests in parallel, optimize the frameworks that execute them, and focus on the right levels of testing.
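In Go, running tests in parallel is opt-in through `t.Parallel()`. This sketch uses a hypothetical `normalize` function and marks both the top-level test and its table-driven subtests as parallel; the `go test -parallel n` flag caps how many such tests run at once and defaults to `GOMAXPROCS`. Parallel tests must not share mutable state, so this works best for self-contained tests like the low-level ones described earlier. `package main` is used only so the file compiles standalone.

```go
package main

import (
	"strings"
	"testing"
)

// normalize is a hypothetical function under test.
func normalize(s string) string {
	return strings.ToLower(strings.TrimSpace(s))
}

func TestNormalize(t *testing.T) {
	t.Parallel() // run alongside other top-level tests that call t.Parallel
	tests := []struct{ name, in, want string }{
		{"trims spaces", "  abc ", "abc"},
		{"lowercases", "ABC", "abc"},
	}
	for _, tt := range tests {
		tt := tt // capture the loop variable (required before Go 1.22)
		t.Run(tt.name, func(t *testing.T) {
			t.Parallel() // subtests run concurrently with each other
			if got := normalize(tt.in); got != tt.want {
				t.Errorf("normalize(%q) = %q, want %q", tt.in, got, tt.want)
			}
		})
	}
}
```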

Summary

Many teams struggle to find the balance between low-level and high-level testing, losing sight of the larger goal in the process:

"Does my change to this software work and not break things?"

When creating your Software Testing strategy/framework, focus on the goals:

- Testing must identify problems early in the process.

- Only thoroughly tested code is merged.

- Test execution must be fast.

- Tests should be runnable from anywhere.

EOF

Benjamin Cane

Twitter: @madflojo

LinkedIn: Benjamin Cane

Blog: BenCane.com

Principal Engineer - American Express