Web Scraping

Beginning Python Programming

Makzan, 2020 April.

Web Scraping

- Downloading files in Python

- Open web URL

- Fetch data with API

- Web scraping with Requests and BeautifulSoup

- Web automation with Selenium

- Converting Wikipedia tabular data into CSV

Code Snippet: Download file

'''Download chart from AAStock server with given stock numbers.'''

from urllib.request import urlretrieve

stock_numbers = ['0011','0005','0001','0700','3333','0002','0012']

for stock_number in stock_numbers:

url = "http://charts.aastocks.com/servlet/Charts?fontsize=12&15MinDelay=T&lang=1&titlestyle=1&vol=1&Indicator=1&indpara1=10&indpara2=20&indpara3=50&indpara4=100&indpara5=150&subChart1=2&ref1para1=14&ref1para2=0&ref1para3=0&subChart2=3&ref2para1=12&ref2para2=26&ref2para3=9&subChart3=12&ref3para1=0&ref3para2=0&ref3para3=0&scheme=3&com=100&chartwidth=660&chartheight=855&stockid=00{}.HK&period=6&type=1&logoStyle=1".format(stock_number)

urlretrieve(url, '{}-chart.gif'.format(stock_number))Example: Open Web URL

import webbrowser

query = input("Please input search query to search Python doc. ")

url = "https://docs.python.org/3/search.html?q=" + query + "&check_keywords=yes&area=default"

webbrowser.open(url)Example: Open Google map

import webbrowser

import urllib.parse

query = input("Please input search query to search near-by Macao. ")

# A map search in Macao.

url = f"https://www.google.com/maps/search/{query}/@22.1612464,113.5303786,13z"

webbrowser.open(url)Example: SMG.gov.mo

import untangle

import datetime

def fetch():

obj = untangle.parse('http://xml.smg.gov.mo/c_actual_brief.xml')

temperature = obj.ActualWeatherBrief.Custom.Temperature.Value.cdata

humidity = obj.ActualWeatherBrief.Custom.Humidity.Value.cdata

print("現時澳門氣溫 " + temperature + " 度,濕度 " + humidity + "%。")

fetch()Fetching XML

import untangle

import datetime

year = datetime.date.today().year

# list begins at 0, and we look for previous month.

last_month = datetime.date.today().month -1 -1

if last_month < 0:

year = year - 1

last_month = 11 # list beings at 0.

url = f"http://www.dicj.gov.mo/web/cn/information/DadosEstat_mensal/{year}/report_cn.xml?id=8"

data = untangle.parse(url)

month_data = data.STATISTICS.REPORT.DATA.RECORD[last_month]

month = month_data.DATA[0].cdata

net_income = month_data.DATA[1].cdata

last_net_income = month_data.DATA[2].cdata

change_rate = month_data.DATA[3].cdata

acc_net_income = month_data.DATA[4].cdata

acc_last_net_income = month_data.DATA[5].cdata

acc_change_rate = month_data.DATA[6].cdata

print(f"{month} 毛收入 {net_income} ({year-1}:{last_net_income}), {change_rate}")

print(f"{month} 累計毛收入 {acc_net_income} ({year-1}:{acc_last_net_income}), {acc_change_rate}")Example: 博彩月計毛收入

Fetching XML

Example: Exchange rate

import json

import requests

url = "https://api.exchangeratesapi.io/latest?symbols=HKD&base=CNY"

response = requests.get(url)

data = json.loads(response.text)

print(data)

print(data['rates']['HKD'])

Fetching JSON

Web Scraping

- Querying web page

- Parse the DOM tree

- Get the data we want from the HTML code

Fetching news title

from bs4 import BeautifulSoup

import requests

try:

res = requests.get("https://news.gov.mo/home/zh-hant")

except requests.exceptions.ConnectionError:

print("Error: Invalid URL")

exit()

soup = BeautifulSoup(res.text, "html.parser")

print("\n".join( map(lambda x: x.getText().strip(), soup.select("h5"))) )Web Scraping

Fetching gov.mo holiday

import requests

from bs4 import BeautifulSoup

response = requests.get("https://www.gov.mo/zh-hant/public-holidays/year-2020/")

soup = BeautifulSoup(response.text, "html.parser")

tables = soup.select(".table")

for row in tables[0].select("tr"):

if len(row.select("td")) > 0:

is_obligatory = (row.select("td")[0].text == "*")

if is_obligatory:

date = row.select("td")[1].text

name = row.select("td")[3].text

print(f"{date}: {name}")

Web Scraping

Is today government holiday?

import requests

from bs4 import BeautifulSoup

import datetime

# Get today's year, month and day

today = datetime.date.today()

year = today.year

month = today.month

day = today.day

today_weekday = today.weekday()

today_date = f"{month}月{day}日"

# Fetch gov.mo

url = f"https://www.gov.mo/zh-hant/public-holidays/year-{year}/"

response = requests.get(url)

soup = BeautifulSoup(response.text, "html.parser")

tables = soup.select(".table")

holidays = {}

for table in tables:

for row in table.select("tr"):

if len(row.select("td")) > 0:

date = row.select("td")[1].text

weekday = row.select("td")[2].text

name = row.select("td")[3].text

holidays[date] = name

# Query holidays

print(today_date)

if today_date in holidays:

holiday = holidays[today_date]

print(f"今天是公眾假期:{holiday}")

elif today_weekday == 0:

print("今天是星期日,但不是公眾假期。")

elif today_weekday == 6:

print("今天是星期六,但不是公眾假期。")

else:

print("今天不是公眾假期。")Web Scraping

Is today obligatory holiday?

import requests

from bs4 import BeautifulSoup

import datetime

# Get today's year, month and day

today = datetime.date.today()

year = today.year

month = today.month

day = today.day

today_weekday = today.weekday()

today_date = f"{month}月{day}日"

# Fetch gov.mo

url = f"https://www.gov.mo/zh-hant/public-holidays/year-{year}/"

response = requests.get(url)

soup = BeautifulSoup(response.text, "html.parser")

tables = soup.select(".table")

holidays = {}

for table in tables:

for row in table.select("tr"):

if len(row.select("td")) > 0:

is_obligatory = (row.select("td")[0].text == "*")

date = row.select("td")[1].text

weekday = row.select("td")[2].text

name = row.select("td")[3].text

holidays[date] = {

'date': date,

'weekday': weekday,

'name': name,

'is_obligatory': is_obligatory,

}

# Query holidays

print(today_date)

if today_date in holidays:

holiday = holidays[today_date]

if holiday['is_obligatory']:

print(f"今天是強制公眾假期:{holiday['name']}")

else:

print(f"今天是公眾假期:{holiday['name']}")

elif today_weekday == 0:

print("今天是星期日,但不是公眾假期。")

elif today_weekday == 6:

print("今天是星期六,但不是公眾假期。")

else:

print("今天不是公眾假期。")Web Scraping

Web Automation with Selenium

- We use Selenium when:

- When Requests and BeautifulSoup does not work.

- When page requires JavaScript to render the data.

- Pros:

- It launches real browser and automate browser.

- Better compatibility .

- Cons:

- Slow because it launches real browser.

Selenium Cheat Sheet

- https://codoid.com/selenium-webdriver-python-cheat-sheet/

Downloading Browser Driver

- Gecko Driver for Firefox

https://github.com/mozilla/geckodriver/releases - Chrome Driver

https://chromedriver.chromium.org/

Take screenshot

'''Capture the screenshot of a website via Headless Firefox.'''

from selenium import webdriver

from selenium.webdriver.firefox.options import Options

options = Options()

options.add_argument('-headless')

browser = webdriver.Firefox(options=options)

browser.maximize_window()

browser.get('https://makclass.com/')

browser.save_screenshot('makclass.png')

browser.quit()Web Automation with Selenium

Filling in forms

from selenium import webdriver

from selenium.common.exceptions import NoSuchElementException

from selenium.webdriver.firefox.options import Options

from selenium.webdriver.support.ui import Select

import time

url = 'https://niioa.immigration.gov.tw/NIA_OnlineApply_inter/visafreeApply/visafreeApplyForm.action'

visitor = {

'chinese_name': '陳大文',

'last_name': 'Chan',

'first_name': 'Tai Man',

'passport_no': 'MA0012345',

'gender': 'm',

'birthday': '1986/10/20',

}

def fill_input(selector, value):

'''find the input with given sselector and fill in the value.'''

return browser.find_element_by_css_selector(selector).send_keys(value)

options = Options()

# options.add_argument('-headless')

browser = webdriver.Firefox(options=options)

browser.maximize_window()

browser.get(url)

radio = browser.find_element_by_css_selector('#isHKMOVisaN')

radio.click()

browser.execute_script('$.unblockUI();')

time.sleep(0.5)

select = Select(browser.find_element_by_css_selector('#tsk01Type'))

# print(select.select_by_value.__doc__)

select.select_by_value('1')

fill_input('#chineseName', visitor['chinese_name'])

fill_input('#englishName1', visitor['last_name'])

fill_input('#englishName2', visitor['first_name'])

fill_input('#passportNo', visitor['passport_no'])

fill_input('#birthDateStr', visitor['birthday'])

# ... and more fields to fill in ...

if visitor['gender'] == 'm':

browser.find_element_by_css_selector('#gender0').click()

else:

browser.find_element_by_css_selector('#gender1').click()Web Automation with Selenium

Example: aastock (v1)

'''Fetch current stock from aastock.'''

from selenium import webdriver

from selenium.webdriver.firefox.options import Options

import time

stock_number = '0011'

options = Options()

options.add_argument('-headless')

browser = webdriver.Firefox(options=options)

browser.maximize_window()

browser.get('http://www.aastocks.com/')

element = browser.find_element_by_css_selector('#txtHKQuote')

element.send_keys(stock_number)

browser.execute_script("shhkquote($('#txtHKQuote').val(), 'quote', mainPageMarket)")

time.sleep(3)

element = browser.find_element_by_css_selector('.font28')

print(element.text)

browser.quit()Web Automation with Selenium

Example: aastock (v2)

'''Fetch current stock from aastock.'''

from selenium import webdriver

from selenium.common.exceptions import NoSuchElementException

from selenium.webdriver.firefox.options import Options

import time

stock_number = '0011'

options = Options()

options.add_argument('-headless')

browser = webdriver.Firefox(options=options)

browser.maximize_window()

browser.get('http://www.aastocks.com/tc/stocks/aboutus/companyinfo.aspx')

element = browser.find_element_by_css_selector('#txtHKQuote')

element.send_keys(stock_number)

browser.execute_script("shhkquote($('#txtHKQuote').val(), 'quote', mainPageMarket)")

trial_time = 0

while True:

if trial_time > 5:

break

to_sleep = 0.1

time.sleep(to_sleep)

trial_time += to_sleep

try:

element = browser.find_element_by_css_selector('.font28')

print(element.text)

break

except NoSuchElementException as e:

pass

browser.quit()Web Automation with Selenium

Example: aastock (v3)

'''Fetch stock price of given list of stock number from aastock.'''

from selenium import webdriver

from selenium.common.exceptions import NoSuchElementException

from selenium.webdriver.firefox.options import Options

import time

stock_numbers = '0011,0005,0700,1810'

options = Options()

# options.add_argument('-headless')

browser = webdriver.Firefox(options=options)

browser.maximize_window()

browser.get('http://www.aastocks.com/tc/stocks/aboutus/companyinfo.aspx')

for stock_number in stock_numbers.split(','):

#print(stock_number)

element = browser.find_element_by_css_selector('#txtHKQuote')

element.clear()

element.send_keys(stock_number)

browser.execute_script("shhkquote($('#txtHKQuote').val(), 'quote', mainPageMarket)")

trial_time = 0

while True:

if trial_time > 10:

break

to_sleep = 0.1

time.sleep(to_sleep)

trial_time += to_sleep

try:

element = browser.find_element_by_css_selector('.font28')

print(stock_number + ": " + element.text)

break

except NoSuchElementException as e:

pass

browser.quit()Web Automation with Selenium

Example: aastock (v4)

'''Fetch current stock from aastock.'''

from selenium import webdriver

from selenium.common.exceptions import NoSuchElementException

from selenium.webdriver.firefox.options import Options

from selenium.webdriver.common.keys import Keys

import time

stock_numbers_input = input("Please enter a list of number separated by comma, in 4 digit: ")

stock_numbers = stock_numbers_input.split(",")

print(stock_numbers)

options = Options()

options.add_argument('-headless')

browser = webdriver.Firefox(options=options)

browser.maximize_window()

for stock_number in stock_numbers:

browser.get('http://www.aastocks.com/tc/mobile/MwFeedback.aspx')

element = browser.find_element_by_css_selector('#symbol')

element.send_keys(stock_number)

element.send_keys(Keys.RETURN);

trial_time = 0

while True:

if trial_time > 5:

break

to_sleep = 0.1

time.sleep(to_sleep)

trial_time += to_sleep

try:

element = browser.find_element_by_css_selector('.text_last')

print(stock_number)

print(element.text)

break

except NoSuchElementException as e:

pass

browser.quit()

input("Press enter to exit")Web Automation with Selenium

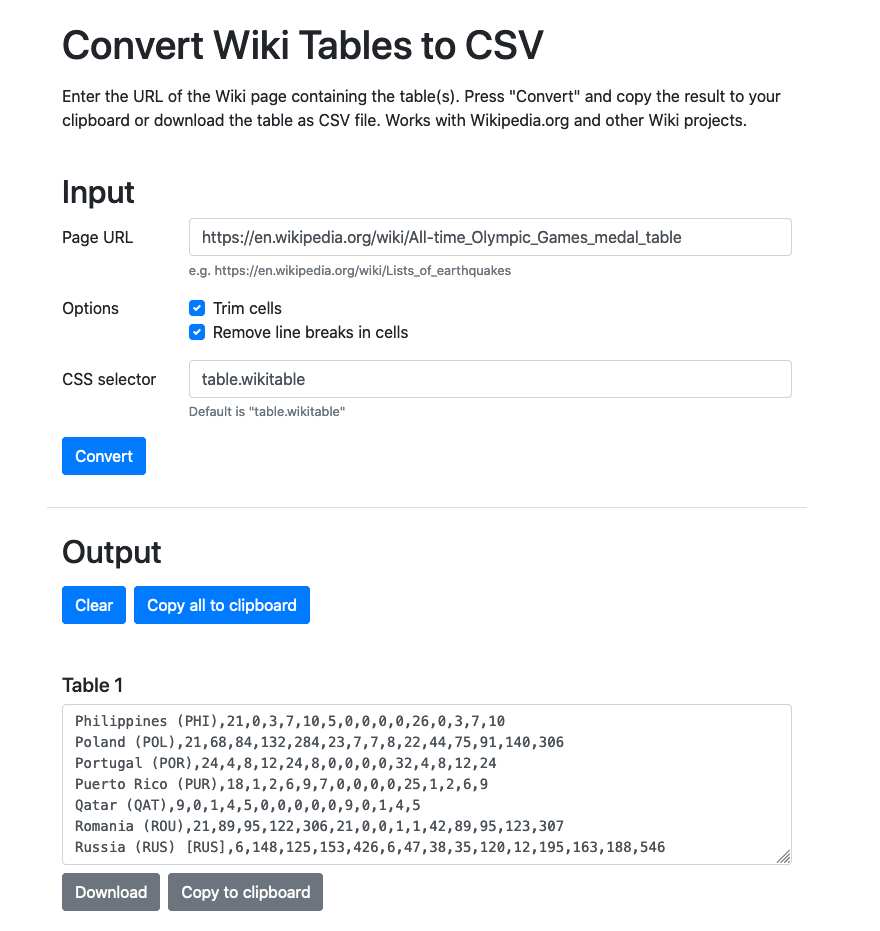

Convert Wiki Tables to CSV

Summary

Web Scraping

- Downloading files in Python

- Open web URL

- Fetch data with API

- Web scraping with Requests and BeautifulSoup

- Web automation with Selenium

- Converting Wikipedia tabular data into CSV