Global hierarchy vs. local structure: Spurious self-feedback in Barabási-Albert Ising networks

Claudia Merger, Timo Reinartz, Stefan Wessel, Andreas Schuppert, Carsten Honerkamp, Moritz Helias

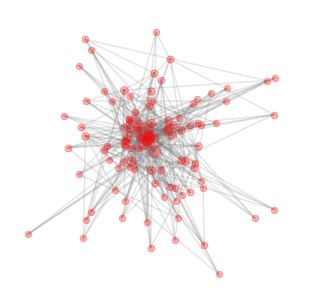

Barabási-Albert networks + Ising model

Barabási-Albert networks have hubs!

Complicated connectivity

Methods

Monte-Carlo: "ground truth"

Effective action: high temperature expansion

Monte-Carlo ("ground truth"): the problem of "freezing hubs" (Timo Reinartz, Stefan Wessel)

"Conventional" Metropolis Monte Carlo: hubs freeze out
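The freezing follows from the Metropolis acceptance rule. Here is a minimal sketch, assuming the ferromagnetic Hamiltonian \( H = -K_0 \sum_{i<j} A_{ij} s_i s_j \) (not written out on the slides) and a hypothetical BA generator whose seed is a complete graph on \( m_0 + 1 \) nodes. Flipping \( s_i \) costs \( \Delta E = 2 K_0 s_i \sum_j A_{ij} s_j \), so a hub aligned with its \( k_h \) neighbours is accepted with a probability of order \( e^{-2\beta K_0 k_h} \). The function names and parameter values are illustrative only.

```python
import math
import random


def barabasi_albert(n, m0, seed=0):
    """Hypothetical BA generator: each new node attaches m0 links to existing
    nodes with probability proportional to their degree. Seed graph (an
    assumption): a complete graph on m0 + 1 nodes. Returns adjacency lists."""
    rng = random.Random(seed)
    adj = [set() for _ in range(n)]
    ends = []  # each node appears once per edge end -> degree-proportional choice
    for i in range(m0 + 1):
        for j in range(i):
            adj[i].add(j)
            adj[j].add(i)
            ends += [i, j]
    for new in range(m0 + 1, n):
        chosen = set()
        while len(chosen) < m0:
            chosen.add(rng.choice(ends))
        for t in chosen:
            adj[new].add(t)
            adj[t].add(new)
            ends += [new, t]
    return [sorted(a) for a in adj]


def metropolis_acceptance(adj, beta_k0, sweeps, seed=1):
    """Sequential Metropolis sweeps for H = -K0 * sum_<ij> s_i s_j, started in
    the fully ordered state. Returns the flip acceptance rate of every node."""
    rng = random.Random(seed)
    n = len(adj)
    spins = [1] * n
    accepted = [0] * n
    for _ in range(sweeps):
        for i in range(n):
            h = sum(spins[j] for j in adj[i])  # local field from the neighbours
            cost = 2.0 * spins[i] * h          # Delta E / K0 for flipping s_i
            if cost <= 0 or rng.random() < math.exp(-beta_k0 * cost):
                spins[i] = -spins[i]
                accepted[i] += 1
    return [a / sweeps for a in accepted]


adj = barabasi_albert(n=2000, m0=2)
rates = metropolis_acceptance(adj, beta_k0=0.5, sweeps=20)
degrees = [len(a) for a in adj]
hub = max(range(len(adj)), key=degrees.__getitem__)
low = [r for r, k in zip(rates, degrees) if k == 2]  # minimum degree m0 = 2
rate_hub = rates[hub]
rate_low = sum(low) / len(low)
print(f"largest hub (degree {degrees[hub]}): acceptance {rate_hub:.3f}")
print(f"degree-2 nodes: mean acceptance {rate_low:.3f}")
```

In this sketch the low-degree spins keep flipping while the largest hub essentially never does, so the hub configuration is sampled very poorly at low temperature.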

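Parallel tempering (Swendsen and Wang, 1986)

Replicas at inverse temperatures \( \beta_i \) and \( \beta_j \) on a ladder of temperatures \( T \) exchange their configurations (energies \( E_i \), \( E_j \)) with probability

\( p_{ij} = \min \left( 1, e^{(E_i-E_j)(\beta_i -\beta_j)}\right) \)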
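The exchange step can be sketched as follows. This assumes swaps are proposed only between neighbouring temperatures in one sequential pass, a common choice that the slides do not specify. The function names are hypothetical.

```python
import math
import random


def swap_probability(e_i, e_j, beta_i, beta_j):
    """p_ij = min(1, exp((E_i - E_j) * (beta_i - beta_j))) for exchanging the
    configurations held at inverse temperatures beta_i and beta_j."""
    x = (e_i - e_j) * (beta_i - beta_j)
    return 1.0 if x >= 0 else math.exp(x)  # avoids overflow for large positive x


def replica_exchange(energies, betas, rng):
    """One sequential pass of swap attempts between neighbouring temperatures.
    energies[r] is the energy of the configuration held at betas[r]. Returns perm,
    where perm[r] is the index of the configuration that now sits at betas[r]."""
    perm = list(range(len(betas)))
    e = list(energies)
    for r in range(len(betas) - 1):
        if rng.random() < swap_probability(e[r], e[r + 1], betas[r], betas[r + 1]):
            e[r], e[r + 1] = e[r + 1], e[r]
            perm[r], perm[r + 1] = perm[r + 1], perm[r]
    return perm


rng = random.Random(42)
betas = [0.2, 0.4, 0.6]          # hot -> cold
energies = [-80.0, -10.0, -5.0]  # a low-energy state sitting at the hottest replica
print(replica_exchange(energies, betas, rng))
```

Both swaps here have \( (E_i-E_j)(\beta_i-\beta_j) > 0 \), so they are always accepted and the low-energy configuration 0 moves down to the coldest replica in a single pass, printing `[1, 2, 0]`.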


Effective action: high temperature expansion

Mean-field self-consistency: find the transition temperature!

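Local structure

Linearizing the mean-field equations \( m_i = \tanh\left(\beta K_0 \sum_j A_{ij} m_j\right) \) around \( m_i = 0 \) gives \( T_c = K_0 \lambda_{max}(A) \) \( \rightarrow \) solve for the largest eigenvalue of \( A \)

"onion structure of eigenvector" (Goh, Kahng, Kim, 2001), \( N = 10^4 \)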
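The largest eigenvalue of a sampled network can be estimated by power iteration. This is a minimal sketch under several assumptions: a hypothetical BA generator (complete seed graph on \( m_0 + 1 \) nodes, \( m_0 \) links per new node), \( N = 1000 \) rather than \( 10^4 \) to keep pure Python fast, and an arbitrary \( K_0 = 1 \). It prints \( T_c = K_0 \lambda_{max}(A) \) and the eigenvector components on the highest-degree nodes.

```python
import math
import random


def barabasi_albert(n, m0, seed=0):
    """Hypothetical BA generator (complete seed graph on m0 + 1 nodes,
    degree-proportional attachment of m0 links per new node)."""
    rng = random.Random(seed)
    adj = [set() for _ in range(n)]
    ends = []  # each node listed once per edge end
    for i in range(m0 + 1):
        for j in range(i):
            adj[i].add(j)
            adj[j].add(i)
            ends += [i, j]
    for new in range(m0 + 1, n):
        chosen = set()
        while len(chosen) < m0:
            chosen.add(rng.choice(ends))
        for t in chosen:
            adj[new].add(t)
            adj[t].add(new)
            ends += [new, t]
    return [sorted(a) for a in adj]


def largest_eigenpair(adj, iters=1000):
    """Power iteration with the shifted matrix A + I, so the most negative
    eigenvalue cannot compete with lambda_max. Returns the Rayleigh quotient
    x^T A x of the unit vector x, together with x."""
    n = len(adj)
    x = [1.0 / math.sqrt(n)] * n
    for _ in range(iters):
        y = [x[i] + sum(x[j] for j in adj[i]) for i in range(n)]
        norm = math.sqrt(sum(v * v for v in y))
        x = [v / norm for v in y]
    ax = [sum(x[j] for j in adj[i]) for i in range(n)]
    return sum(a * b for a, b in zip(x, ax)), x


K0 = 1.0  # coupling strength (assumed value)
adj = barabasi_albert(n=1000, m0=2)
degrees = [len(a) for a in adj]
lam, vec = largest_eigenpair(adj)
print(f"lambda_max(A) ~ {lam:.3f} -> mean-field T_c ~ {K0 * lam:.3f}")
for i in sorted(range(len(adj)), key=lambda i: -degrees[i])[:5]:
    print(f"node {i}: degree {degrees[i]}, eigenvector component {vec[i]:.4f}")
```

The network contains a star around its largest hub, so \( \lambda_{max}(A) \geq \sqrt{k_{max}} \). This gives a quick sanity check on the estimate.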

Degreewise parametrization (Bianconi, 2002):

\( A_{ij} \leftrightarrow p_c(k_i,k_j)=\frac{k_i k_j}{2 m_0 N} \), normalized so that \( \sum_j p_c(k_i,k_j) = k_i \) when \( \langle k \rangle = 2 m_0 \)
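Under this parametrization the largest eigenvalue has a closed form. The matrix \( k_i k_j / \sum_l k_l \) has rank one, so its only nonzero eigenvalue is \( \langle k^2\rangle/\langle k\rangle \), with eigenvector \( \propto k_i \). This gives \( T_c = K_0 \langle k^2\rangle/\langle k\rangle \) and \( m_i \propto k_i \) near \( T_c \). The sketch below normalizes by \( \sum_l k_l \), which equals \( 2 m_0 N \) when \( \langle k \rangle = 2 m_0 \). The function name is hypothetical. The star graph shows what the parametrization discards: it keeps the degrees but not who is connected to whom.

```python
import math


def degreewise_eigen(degrees):
    """Replace A_ij by P_ij = k_i k_j / sum_l k_l. P = k k^T / sum(k) has rank one,
    so its only nonzero eigenvalue is sum(k^2) / sum(k) = <k^2>/<k>, with a
    normalized eigenvector proportional to the degrees."""
    total = sum(degrees)
    lam = sum(k * k for k in degrees) / total
    norm = math.sqrt(sum(k * k for k in degrees))
    return lam, [k / norm for k in degrees]


# Star graph: one centre with three leaves (degrees 3, 1, 1, 1).
star = [3, 1, 1, 1]
lam, vec = degreewise_eigen(star)
print("degreewise lambda:", lam)
print("exact lambda_max of the star:", math.sqrt(3))
print("eigenvector (proportional to k):", vec)
```

For the star, the degreewise value is \( 12/6 = 2.0 \), whereas the exact largest eigenvalue of its adjacency matrix is \( \sqrt{3} \approx 1.732 \).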


Monte-Carlo: transition temperature?

Monte-Carlo: onion structure? \( m_i \sim k_i \)

TAP self-consistency (compared with mean-field)

\( \rightarrow \) solve for a specific \( A \)

Find the transition temperature!

Full TAP solution \( \rightarrow \) good general agreement between Monte-Carlo and TAP (better than mean-field)
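Both approximations can be iterated directly on a sampled network. This is a minimal sketch assuming the standard TAP equations (Thouless, Anderson, Palmer) with couplings \( J_{ij} = K_0 A_{ij} \) and no external field. The BA generator, the damping factor, the initial value `m_init = 0.1` and the function names are hypothetical choices. The sketch only evaluates the two approximations and does not reproduce the Monte-Carlo comparison.

```python
import math
import random


def barabasi_albert(n, m0, seed=0):
    """Hypothetical BA generator (complete seed graph on m0 + 1 nodes,
    degree-proportional attachment of m0 links per new node)."""
    rng = random.Random(seed)
    adj = [set() for _ in range(n)]
    ends = []
    for i in range(m0 + 1):
        for j in range(i):
            adj[i].add(j)
            adj[j].add(i)
            ends += [i, j]
    for new in range(m0 + 1, n):
        chosen = set()
        while len(chosen) < m0:
            chosen.add(rng.choice(ends))
        for t in chosen:
            adj[new].add(t)
            adj[t].add(new)
            ends += [new, t]
    return [sorted(a) for a in adj]


def solve_self_consistency(adj, beta_k0, tap, iters=200, damping=0.5, m_init=0.1):
    """Damped fixed-point iteration for J_ij = K0 A_ij, no external field:
      mean-field: m_i = tanh(bK sum_j A_ij m_j)
      TAP:        m_i = tanh(bK sum_j A_ij m_j - bK^2 m_i sum_j A_ij (1 - m_j^2))
    A_ij is 0 or 1, so A_ij^2 = A_ij in the Onsager term."""
    n = len(adj)
    m = [m_init] * n
    for _ in range(iters):
        new = []
        for i in range(n):
            field = beta_k0 * sum(m[j] for j in adj[i])
            if tap:
                # Onsager reaction term: removes m_i's feedback on itself via its neighbours
                field -= beta_k0 ** 2 * m[i] * sum(1.0 - m[j] ** 2 for j in adj[i])
            new.append(math.tanh(field))
        m = [damping * old + (1.0 - damping) * nw for old, nw in zip(m, new)]
    return m


adj = barabasi_albert(n=500, m0=2)
for t in (2.0, 5.0, 8.0):  # temperatures in units of K0
    mf = solve_self_consistency(adj, 1.0 / t, tap=False)
    tp = solve_self_consistency(adj, 1.0 / t, tap=True)
    print(f"T/K0 = {t}: M_mf = {sum(mf) / len(mf):.4f}, M_TAP = {sum(tp) / len(tp):.4f}")
```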

Why is TAP better than local mean-field?

TAP vs. mean-field: expand \( m_j \) around \( m_i = 0 \):

\( m_{i}=\beta K_{0}\sum_{j}\left[A_{ij}\left(m_{j}\Big|_{m_{i}=0}+m_{i}A_{ji}\beta K_{0}\right)-\beta K_{0}\delta_{ij}k_{i}m_{i}\right]+ \mathcal{O} (\beta^3 K_0^3).\)
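Expanding the bracket term by term makes the cancellation explicit. This assumes an unweighted, undirected network, so \( A \) is symmetric with entries 0 or 1 and \( \sum_j A_{ij} A_{ji} = \sum_j A_{ij} = k_i \):

```latex
\begin{aligned}
m_i &= \beta K_0 \sum_j A_{ij}\, m_j\Big|_{m_i=0}
      + (\beta K_0)^2\, m_i \sum_j A_{ij} A_{ji}
      - (\beta K_0)^2 \sum_j \delta_{ij}\, k_i m_i
      + \mathcal{O}(\beta^3 K_0^3) \\
    &= \beta K_0 \sum_j A_{ij}\, m_j\Big|_{m_i=0}
      + (\beta K_0)^2 k_i m_i - (\beta K_0)^2 k_i m_i
      + \mathcal{O}(\beta^3 K_0^3) \\
    &= \beta K_0 \sum_j A_{ij}\, m_j\Big|_{m_i=0} + \mathcal{O}(\beta^3 K_0^3).
\end{aligned}
```

Plain mean-field lacks the last (TAP) term and keeps \( (\beta K_0)^2 k_i m_i \). Through this term a hub with large \( k_i \) reinforces its own magnetization via its neighbours: the spurious self-feedback.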


\( \leftrightarrow \) Mezard, Parisi and Virasoro, 1978

TAP vs. mean-field: local structure

\( \rightarrow \) solve: \( T_T = K_0 \lambda_{B, max} (T_T) \)

Degreewise parametrization: insert \( A_{ij} \leftrightarrow p_c(k_i,k_j)=\frac{k_i k_j}{2 m_0 N} \) into

\( m_{i}=\beta K_{0}\underbrace{\sum_{j}A_{ij}m_{j}\Big|_{m_{i}=0}}_{\text{field in the absence of }i\, \approx\, k_i S} \sim k_i S \)

\( \rightarrow\) Same as mean-field

Global effective field \( S \)

\( S(\beta K_{0})= \frac{1}{\langle k \rangle}\langle k\,m \left(k \right)\rangle_{p(k)} \)

\( m(k)= \tanh\left(\beta K_{0}\,k\,S(\beta K_{0})\right) \)

\( M (S) = \langle \tanh\left(\beta K_{0}\,k\,S(\beta K_{0})\right)\rangle_{p(k)} \)
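The self-consistency for \( S \) can be solved by plain fixed-point iteration. This is a sketch assuming the large-\( N \) BA degree distribution \( p(k) = 2m_0(m_0+1)/(k(k+1)(k+2)) \) for \( k \geq m_0 \), cut off at a hypothetical \( k_{max} \) to mimic finite \( N \). Linearizing \( \tanh \) gives \( \beta_c K_0 = \langle k\rangle/\langle k^2\rangle \), i.e. \( T_c = K_0 \langle k^2\rangle/\langle k\rangle \), which is the largest eigenvalue of the degreewise matrix \( p_c \). The function names are illustrative.

```python
import math


def ba_degree_distribution(m0, k_max):
    """p(k) = 2 m0 (m0 + 1) / (k (k + 1) (k + 2)) for m0 <= k <= k_max,
    renormalised after the (assumed) finite-size cutoff k_max."""
    ks = list(range(m0, k_max + 1))
    w = [2 * m0 * (m0 + 1) / (k * (k + 1) * (k + 2)) for k in ks]
    z = sum(w)
    return ks, [x / z for x in w]


def critical_coupling(ks, pk):
    """beta_c K0 = <k> / <k^2>, from linearising tanh in the equation for S."""
    mean_k = sum(k * p for k, p in zip(ks, pk))
    mean_k2 = sum(k * k * p for k, p in zip(ks, pk))
    return mean_k / mean_k2


def global_field(beta_k0, ks, pk, max_iter=100_000, tol=1e-12):
    """Fixed-point iteration of S = <k m(k)> / <k> with m(k) = tanh(beta K0 k S),
    started from the ordered guess S = 1. Returns (S, M) with M = <m(k)>."""
    mean_k = sum(k * p for k, p in zip(ks, pk))
    s = 1.0
    for _ in range(max_iter):
        s_new = sum(k * p * math.tanh(beta_k0 * k * s) for k, p in zip(ks, pk)) / mean_k
        converged = abs(s_new - s) < tol
        s = s_new
        if converged:
            break
    m = sum(p * math.tanh(beta_k0 * k * s) for k, p in zip(ks, pk))
    return s, m


ks, pk = ba_degree_distribution(m0=2, k_max=200)
bc = critical_coupling(ks, pk)
print(f"mean-field T_c / K0 = <k^2>/<k> = {1 / bc:.3f}")
for factor in (2.0, 1.2, 0.5):  # beta K0 in units of beta_c K0
    s, m = global_field(factor * bc, ks, pk)
    print(f"beta K0 = {factor} * beta_c K0: S = {s:.4f}, M = {m:.4f}")
```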

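Recover local structure: a degree-\( k \) nearest neighbour of hub \( h \) feels the global field \( S \) on its other \( k-1 \) links and the hub magnetization \( m(k_h) \) on the remaining one:

\( m_{ NN, h} (k)= \tanh( \beta K_{0} \,(k-1) \, S( \beta K_{0} )+\beta K_{0} m(k_{h}) ) \)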
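Given \( S \), the hub-neighbour correction can be evaluated directly. In this sketch the function names and the values \( \beta K_0 = 0.2 \), \( S = 0.5 \), \( k_h = 100 \) are illustrative choices, not results from the talk. Compared with \( m(k) \), the neighbour feels an extra field \( \beta K_0 (m(k_h) - S) \) from a single link.

```python
import math


def m_global(k, beta_k0, s):
    """Degreewise magnetisation m(k) = tanh(beta K0 k S)."""
    return math.tanh(beta_k0 * k * s)


def m_hub_neighbour(k, k_hub, beta_k0, s):
    """m_NN,h(k): k - 1 links see the global field S, one link sees m(k_hub)."""
    return math.tanh(beta_k0 * (k - 1) * s + beta_k0 * m_global(k_hub, beta_k0, s))


beta_k0, s, k_hub = 0.2, 0.5, 100  # illustrative values only
for k in (2, 5, 20, 50):
    print(f"k = {k}: m(k) = {m_global(k, beta_k0, s):.4f}, "
          f"m_NN,h(k) = {m_hub_neighbour(k, k_hub, beta_k0, s):.4f}")
```

Because \( \tanh \) saturates, the extra single-link field matters most for low-degree neighbours and fades as \( k \) grows.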

Summary and outlook

- Self-consistent solution without self-feedback

- Local "onion" structure plays a minor role

Hierarchical nature of the connectivity dominates the behaviour


Personal takeaway:

- Know what confuses you

- Never give up hope for a simple explanation

Averaging over all configurations

Cumulant generating function

Effective action