Latency: The Whole Story

Hi, I'm Ben (aka @obensource)!

Our itinerary today?

The Latencies that

Users

Experience

Where latencies count

How to measure them

What to do about them

Humans

Hardware

Web Apps

(we'll mostly 🏕 out ☝️)

Human Latency

Mental Chronometry

Level set: when presented with a stimulus

(example: an interactive component on a screen),

the average human response time is 215ms

(source: Human Benchmark reaction time test)

In other words

those 215ms intervals (and their results) are the

exciting part of the user experience

Everything in between

is in the waiting place...

What questions do humans ask while they're in

the waiting place?

Is it happening?

Can I navigate now? (Did the server respond?)

Is it useful?

Has enough content loaded for me to interact with?

Is it usable?

Does the page respond, or is it busy when I do something?

Is it delightful?

Does this site feel smooth and natural, or does it lag when I interact with it?

This is why we

gather metrics like Time To Interactive (TTI)

and DOM Interactive

In the Browser

Because hard measurements create real answers

for our ambiguous questions

(In this case, we can understand initial web app load times)
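In the browser, DOM Interactive comes from the Navigation Timing API (`performance.getEntriesByType("navigation")`). Below is a minimal sketch. It assumes only the fields listed in the hypothetical `NavTiming` interface, which mirror properties of a real `PerformanceNavigationTiming` entry so the helper can also be tested outside a browser. TTI is not a single field; tools like Lighthouse derive it from long-task data.

```typescript
// Subset of PerformanceNavigationTiming fields, in ms relative to the start of navigation.
interface NavTiming {
  startTime: number;
  responseStart: number;
  domInteractive: number;
  domContentLoadedEventEnd: number;
  loadEventEnd: number;
}

// Hypothetical helper: converts raw timestamps into the waits a user experiences.
function summarizeNavigation(t: NavTiming) {
  return {
    timeToFirstByteMs: t.responseStart - t.startTime,
    domInteractiveMs: t.domInteractive - t.startTime,
    domContentLoadedMs: t.domContentLoadedEventEnd - t.startTime,
    // loadEventEnd stays 0 until the load event has finished.
    loadMs: t.loadEventEnd > 0 ? t.loadEventEnd - t.startTime : undefined,
  };
}

// Browser usage:
// const [nav] = performance.getEntriesByType("navigation") as PerformanceNavigationTiming[];
// console.table(summarizeNavigation(nav));
```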

So what meaningful thresholds of latency

should be measured?

Where do I start?

A Classic Move

Film interactions with your app using a high-speed camera
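The arithmetic behind the camera approach is straightforward. Count the frames between the physical action and the first visible change, then convert that count to milliseconds. The sketch below assumes a camera with a constant, known frame rate; the 240 fps and 24 frames are example values, not measurements.

```typescript
// One frame of a high-speed recording lasts 1000 / fps milliseconds,
// which is also the best resolution the measurement can have.
function frameDurationMs(fps: number): number {
  return 1000 / fps;
}

// Latency = frames counted between the physical action (e.g. the key bottoming out)
// and the first visible change on screen, converted to milliseconds.
function latencyFromFramesMs(frames: number, fps: number): number {
  return (frames * 1000) / fps;
}

// Example: 24 frames at 240 fps.
const measuredMs = latencyFromFramesMs(24, 240); // 100 ms, give or take one frame
const resolution = frameDurationMs(240); // ~4.17 ms per frame
```

A faster camera doesn't change the latency. It only narrows the error bar, because each frame covers less time.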

Hardware Latency

Understand your user's fundamental limitations

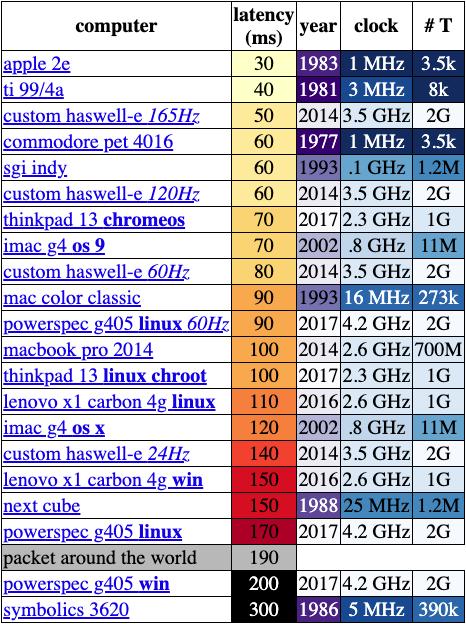

I/O hardware latency varies greatly

creating a very diverse set of user experiences

Keypress & terminal response, measured by a high-speed camera

(Dan Luu, "Computer Latency: 1977–2017")

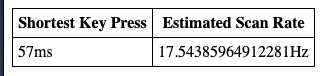

My own scan rate test shows significant delay in the I/O process

from just the action of a keypress

(Apple Magic Keyboard)

(Source: Keyboard Scanrate Tool)

(Microsoft Research: Applied Sciences Group, 2012)

Consider the inherent latency

of all

common devices

Accurately understand the boundaries

of your app's

performance benchmarks
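One way to act on this is to treat hardware as a fixed cost in your latency budget. The sketch below uses hypothetical placeholder numbers (not measurements) and an assumed 100 ms end-to-end target. Substitute figures you have measured for the devices your users actually have.

```typescript
// A stage of the input-to-photon path and its latency in ms.
interface LatencyStage {
  name: string;
  ms: number;
}

// Sums the fixed hardware/OS stages and reports what is left of the target for the app itself.
function appBudgetMs(stages: LatencyStage[], targetMs: number) {
  const hardwareMs = stages.reduce((sum, stage) => sum + stage.ms, 0);
  return { hardwareMs, remainingMs: targetMs - hardwareMs };
}

// Hypothetical placeholder values, NOT measurements: replace with your own data.
const stages: LatencyStage[] = [
  { name: "keyboard scan + debounce", ms: 15 },
  { name: "OS input handling", ms: 5 },
  { name: "display refresh + panel response", ms: 25 },
];

const budget = appBudgetMs(stages, 100); // assumed 100 ms end-to-end target
console.log(budget); // { hardwareMs: 45, remainingMs: 55 }
```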

Latency in Apps

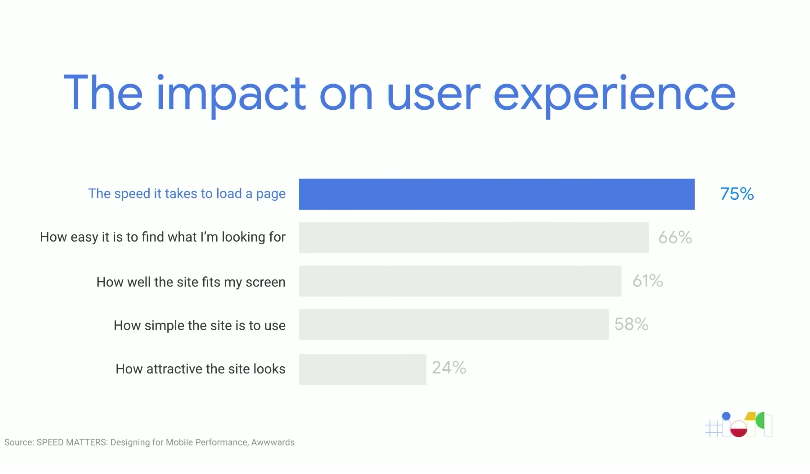

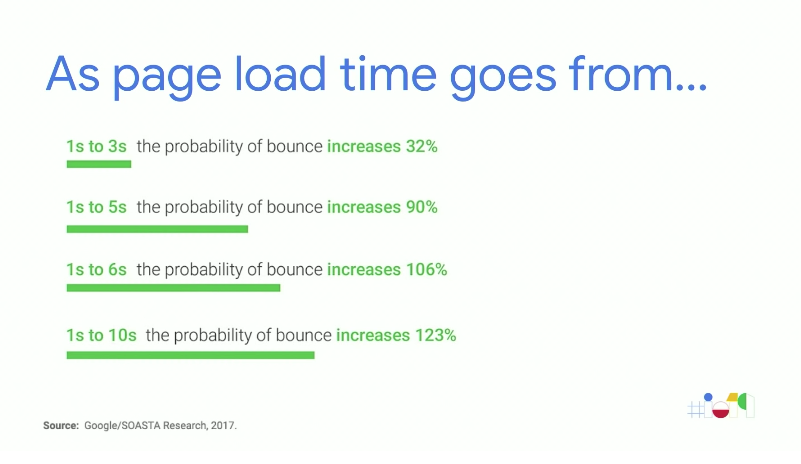

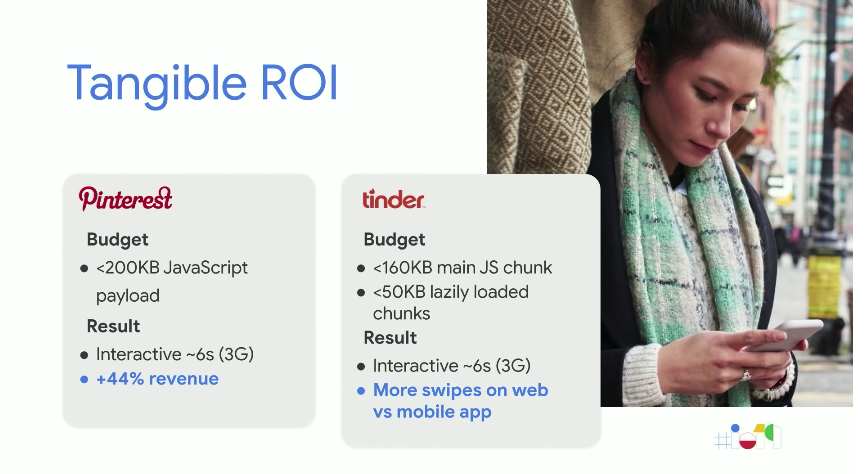

Why should we

bother so much?

Is the payoff really that great?

(Robert Belson: AWS re:Invent 2020)

Relevant Thresholds

Where do I start?

Back End / Front End

Back End

How can the backend facilitate a great UX?

Optimize delivery distribution

Containers, k8s, CDNs / Image CDNs

(e.g., Cloudflare, Netlify, Akamai, AWS)

Enable better network protocols

HTTP/2, QUIC

(Multiplexed streams over a single connection instead of HTTP/1.1's ~6 parallel TCP connections per host, fewer round trips, better congestion control, more customizable, etc.)
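Fewer round trips matter most on high-latency links. Below is a simplified model of how many round trips pass before the first response byte arrives on a brand-new connection. HTTP/1.1 and HTTP/2 typically run over TCP + TLS, and HTTP/3 runs over QUIC. The model ignores DNS, TCP Fast Open, 0-RTT resumption, and packet loss, and the 100 ms RTT is an example value.

```typescript
// Simplified model: handshake round trips on a new connection, before the request is sent.
type Stack = "tcp+tls1.2" | "tcp+tls1.3" | "quic";

const handshakeRoundTrips: Record<Stack, number> = {
  "tcp+tls1.2": 3, // 1 RTT TCP handshake + 2 RTT TLS 1.2 handshake
  "tcp+tls1.3": 2, // 1 RTT TCP handshake + 1 RTT TLS 1.3 handshake
  quic: 1, // QUIC combines the transport and TLS 1.3 handshakes into 1 RTT
};

// Time until the first response byte: handshakes plus one RTT for the request itself.
function timeToFirstByteMs(stack: Stack, rttMs: number): number {
  return (handshakeRoundTrips[stack] + 1) * rttMs;
}

const rttMs = 100; // example round-trip time
for (const stack of Object.keys(handshakeRoundTrips) as Stack[]) {
  console.log(stack, timeToFirstByteMs(stack, rttMs), "ms");
}
```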

Enable on-demand content with serverless

AWS Lambda, Azure Functions, Google Cloud Functions

Propagation

Time required for a message to travel from the sender to the receiver: a function of the distance divided by the speed at which the signal propagates.

Transmission

Time required to push all of the packet’s bits onto the link: a function of the packet’s length and the link’s data rate.

Processing

Time required to process the packet header, check for bit-level errors, and determine the packet’s destination.

Queuing

Time the packet is waiting in the queue until it can be processed.
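The four components add up, and the first two can be estimated directly from their definitions. The sketch below uses ~2e8 m/s as the signal speed in optical fiber, which is a common approximation. The processing and queuing values are illustrative placeholders rather than measurements.

```typescript
// Propagation delay: distance divided by signal speed.
// km -> m is x1000 and s -> ms is x1000, hence the 1_000_000 factor.
function propagationMs(distanceKm: number, metersPerSecond = 2e8): number {
  return (distanceKm * 1_000_000) / metersPerSecond;
}

// Transmission delay: packet length (in bits) divided by the link's data rate.
function transmissionMs(packetBytes: number, linkBitsPerSecond: number): number {
  return (packetBytes * 8 * 1000) / linkBitsPerSecond;
}

interface LatencyParts {
  propagation: number;
  transmission: number;
  processing: number;
  queuing: number;
}

// Total latency is the sum of the four components (a real path repeats
// processing and queuing at every router it crosses).
function totalLatencyMs(p: LatencyParts): number {
  return p.propagation + p.transmission + p.processing + p.queuing;
}

// Example: a 1,500-byte packet sent 4,000 km over a 100 Mbps link.
const example = totalLatencyMs({
  propagation: propagationMs(4000), // 20 ms
  transmission: transmissionMs(1500, 100e6), // 0.12 ms
  processing: 0.05, // placeholder
  queuing: 2, // placeholder
});
```

Distance dominates in this example. The 20 ms of propagation dwarfs the 0.12 ms of transmission, which is why moving content closer to users helps more than adding bandwidth.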

Measurable latency thresholds

Front End Latency

(Things are about to get interesting)

What metrics do people care about?

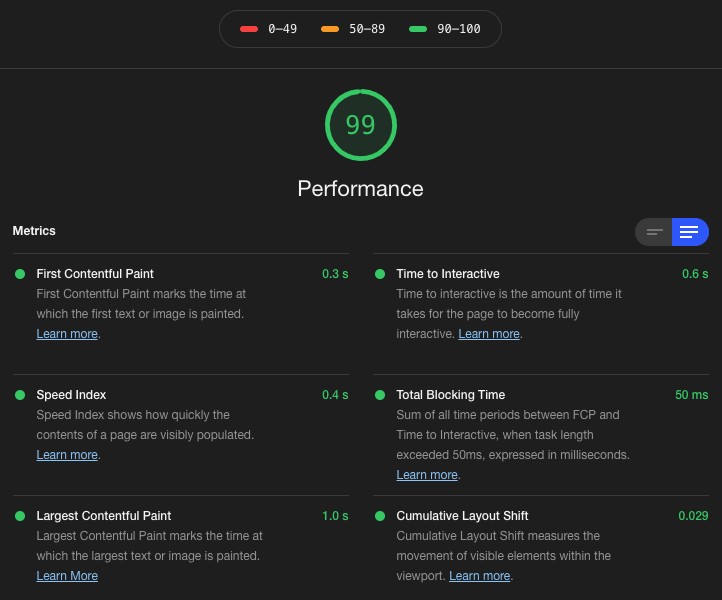

The metrics that Google’s Lighthouse / Perf team cares about for a single audit (aka a snapshot in time)

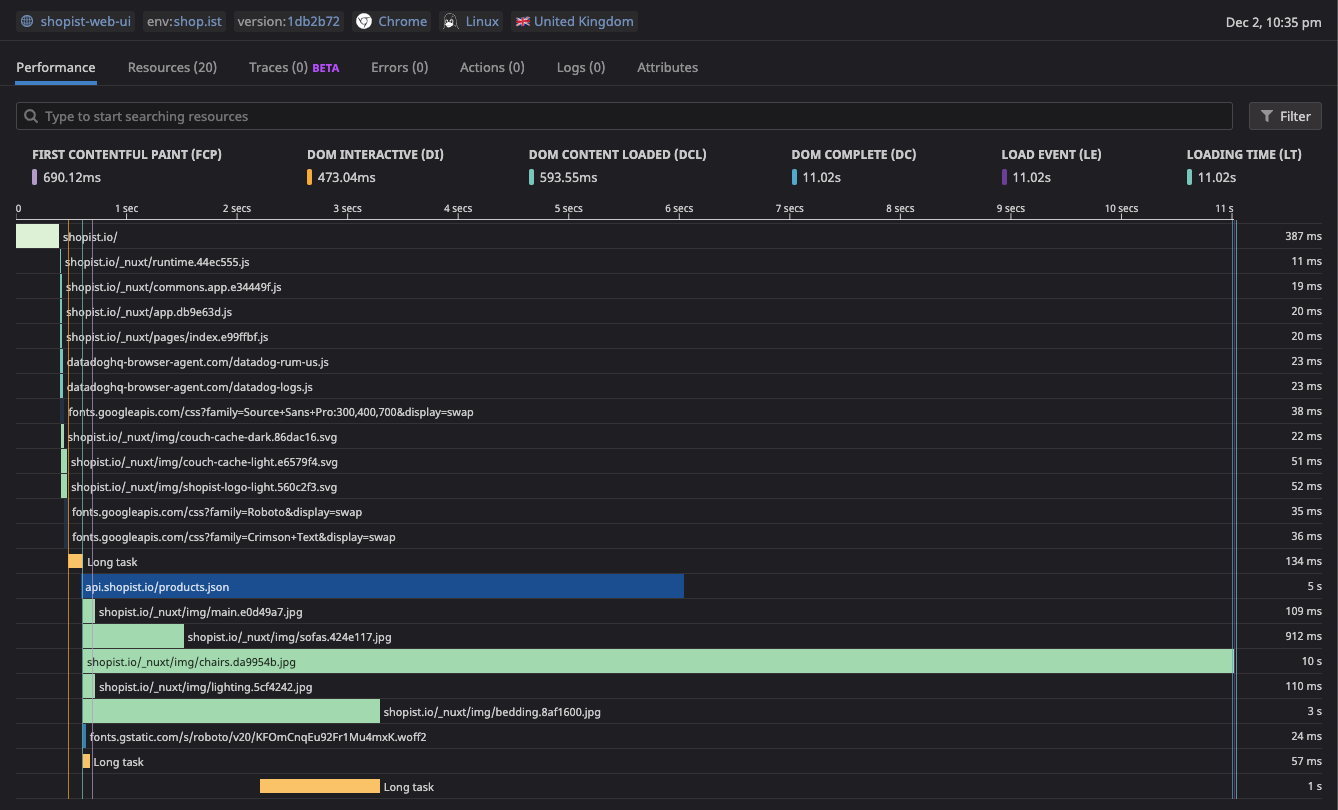

The metrics that Datadog’s Real User Monitoring (RUM) cares about when continually auditing your app (aka long-term latency mitigation)
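To turn these metrics into pass/fail signals, tools bucket each value as "good", "needs improvement", or "poor". The sketch below assumes Google's published Core Web Vitals thresholds (LCP, INP, CLS) plus the FCP and TTFB guidance on web.dev. Check the current values before relying on them, because they have changed over time (INP replaced FID in 2024). The `rate` helper is hypothetical.

```typescript
type Rating = "good" | "needs-improvement" | "poor";

// [upper bound for "good", upper bound for "needs improvement"] per metric.
// Time-based metrics are in ms; CLS is unitless.
const thresholds = {
  LCP: [2500, 4000],
  INP: [200, 500],
  CLS: [0.1, 0.25],
  FCP: [1800, 3000],
  TTFB: [800, 1800],
} as const;

type Metric = keyof typeof thresholds;

function rate(metric: Metric, value: number): Rating {
  const [good, poor] = thresholds[metric];
  if (value <= good) return "good";
  if (value <= poor) return "needs-improvement";
  return "poor";
}

console.log(rate("LCP", 3100)); // "needs-improvement"
```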

Before we dive into why these tools are relevant and see

how to use them,

let’s talk about where we can meaningfully measure web app latencies.

The DOM: DOM + CSSOM =>

Render Tree

The browser's rendering, layout, painting, and compositing processes

The constraints and impositions of JavaScript, Node.js, and related libraries

The constraints of HTTP/1.1

We can measure what's going on in each of these

Things

we can

do about it

Known Best Practices

Keep things: Small, Smart, and Smooth

This reduces the number of time-consuming HTTP requests and DNS lookups.

Example: every image you fetch from a new external origin adds a DNS lookup and a fresh connection handshake. It definitely adds up.

Make as few server calls as necessary

Like your HTML, CSS, and JS

(Uglify, minify, bundle, etc.)

Compress things

Like your assets.

Locally (PWAs), on CDNs, Image CDNs, etc
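Compression is usually enabled at the server or CDN and signaled with a `Content-Encoding` header, and a quick experiment shows why it pays off. The sketch below runs in Node.js and uses the built-in `zlib` module. The repeated CSS rule is made-up sample input, and real-world ratios depend on your files.

```typescript
import { gzipSync, brotliCompressSync } from "node:zlib";

// Text assets such as CSS and JS are repetitive, so they compress very well.
const css = ".button { color: #333; padding: 8px 16px; }\n".repeat(500);

const raw = Buffer.byteLength(css); // uncompressed size in bytes
const gzipped = gzipSync(css).length; // gzip (Content-Encoding: gzip)
const brotli = brotliCompressSync(css).length; // Brotli (Content-Encoding: br)

console.log({ raw, gzipped, brotli });
```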

Cache things
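Caching works best with a clear policy for each asset type. The sketch below shows one common approach and assumes your build tool adds a content hash to asset filenames. The `cacheControlFor` helper, its regex, and the one-hour fallback are hypothetical choices; the `Cache-Control` directives themselves are standard.

```typescript
// Hypothetical policy: pick a Cache-Control header value from the request path.
function cacheControlFor(path: string): string {
  // Fingerprinted files (e.g. app.3f9a1c2b.js) never change under the same URL,
  // so browsers and CDNs can keep them for a year without revalidating.
  const fingerprinted = /\.[0-9a-f]{8,}\.(js|css|woff2|png|jpg|webp|avif|svg)$/i.test(path);
  if (fingerprinted) return "public, max-age=31536000, immutable";

  // HTML must be revalidated on every use so users pick up new asset URLs.
  if (path.endsWith(".html") || path.endsWith("/")) return "no-cache";

  // Everything else: a short, arbitrary default.
  return "public, max-age=3600";
}

console.log(cacheControlFor("/assets/app.3f9a1c2b.js"));
```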

Consider the impact of libraries and frameworks

JS: TypeScript, Lodash, Node.js, etc.

JAMstack approach: Next.js, Gatsby, etc. (pre-bundling / smart bundling, SSR, route prefetching)

Data layer: Redux, GraphQL (querying front-end data)

Component frameworks: Svelte vs. React (Svelte posts best-in-class perf benchmarks because it compiles components into direct DOM updates instead of building a virtual DOM and reconciling it into the real DOM like React).

Optimizing your fonts

(use compression, don't fetch if possible)

Smart bundling

(only bundle what you need)

Tree shaking

(get rid of unused modules)

Domain Sharding

(Serve assets from multiple domains to get more parallel downloads than HTTP/1.1's ~6 TCP connections per host allow. With HTTP/2 multiplexing, sharding is usually unnecessary and adds extra DNS lookups and connections.)

Codesplitting & lazy loading

(Reduce unused code bloat, faster load times)
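A dynamic `import()` is the usual hook for code splitting, because bundlers such as webpack and Rollup emit the imported module as a separate chunk and fetch it on demand. The hypothetical `lazy` helper below sketches the lazy-loading side, and the `./chart` module in the usage comment is a placeholder.

```typescript
// Wraps a loader so the module is fetched on first use only; later calls reuse
// the same promise. If loading fails, the cache is cleared so a later call can retry.
function lazy<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () =>
    (pending ??= loader().catch((err: unknown) => {
      pending = undefined;
      throw err;
    }));
}

// Usage (hypothetical module and element):
// const loadChart = lazy(() => import("./chart"));
// button.addEventListener("click", async () => (await loadChart()).render());
```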

Practice

Determine your critical styles

Prioritize your Critical Rendering path

Debounce and/or throttle your inputs
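Debouncing waits for input to settle before running a handler, while throttling caps how often the handler can run. Below are minimal sketches of both, written from scratch here. Libraries such as Lodash ship more complete `debounce` and `throttle` functions with leading and trailing options.

```typescript
// Debounce: run fn only after `waitMs` with no new calls (e.g. search-as-you-type).
function debounce<A extends unknown[]>(fn: (...args: A) => void, waitMs: number) {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: A): void => {
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => fn(...args), waitMs);
  };
}

// Throttle: run fn at most once per `intervalMs` (e.g. scroll or resize handlers).
// This leading-edge version runs the first call and drops calls inside the interval.
function throttle<A extends unknown[]>(fn: (...args: A) => void, intervalMs: number) {
  let last = -Infinity;
  return (...args: A): void => {
    const now = Date.now();
    if (now - last >= intervalMs) {
      last = now;
      fn(...args);
    }
  };
}

// Usage (browser):
// input.addEventListener("input", debounce(() => search(input.value), 250));
// window.addEventListener("scroll", throttle(updateHeader, 100));
```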

Also

Use Web Workers

(Sorry, no DOM manipulation)

Reduce the

number of images the site needs for the initial page load

Keep browser painting unblocked

(get rid of render blocking)

At 60 Hz each frame lasts ~16.7ms, and JS shares that budget with style, layout, and paint, so keep JS work between paints well under 16ms
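One way to keep JS work inside a frame budget is time slicing: do a batch of work, then yield so the browser can paint and handle input. The sketch below assumes an 8 ms slice budget (an arbitrary value that leaves headroom in a ~16.7 ms frame) and uses `setTimeout` to yield. The `processInSlices` name is hypothetical.

```typescript
// Processes items in slices that each stay within `budgetMs`, yielding between
// slices. Returns how many slices were needed.
async function processInSlices<T>(
  items: readonly T[],
  work: (item: T) => void,
  budgetMs = 8, // assumed budget, tune per app
  yieldToBrowser: () => Promise<void> = () => new Promise<void>((resolve) => setTimeout(() => resolve(), 0)),
): Promise<number> {
  let slices = 0;
  let i = 0;
  while (i < items.length) {
    const sliceStart = performance.now();
    // Always process at least one item per slice so progress is guaranteed.
    do {
      work(items[i++]);
    } while (i < items.length && performance.now() - sliceStart < budgetMs);
    slices++;
    if (i < items.length) await yieldToBrowser();
  }
  return slices;
}
```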

Optimize your CSS / Styles

Reduce CSS Selector Complexity

(the more complex the selector, the longer it takes the browser to figure out whether it matches an element in the DOM tree)

Optimize Animations

Use requestAnimationFrame

(the browser can optimize timed animation loops, so they're smoother)
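Each `requestAnimationFrame` callback receives a timestamp, so animation progress can be computed from elapsed time instead of a fixed step per frame. The sketch below works that way. `animate` and the `RafLike` type are hypothetical, and the rAF function is passed in so the same logic can run against a fake scheduler in tests.

```typescript
type RafLike = (callback: (timestamp: number) => void) => unknown;

// Drives an animation from rAF timestamps, so it finishes on time even if frames are dropped.
// Resolves once progress reaches 1.
function animate(durationMs: number, onFrame: (progress: number) => void, raf: RafLike): Promise<void> {
  return new Promise((resolve) => {
    let start: number | undefined;
    const step = (timestamp: number) => {
      const t0 = (start ??= timestamp); // first frame defines the start time
      const progress = Math.min((timestamp - t0) / durationMs, 1);
      onFrame(progress);
      if (progress < 1) raf(step);
      else resolve();
    };
    raf(step);
  });
}

// Browser usage (hypothetical element):
// animate(300, (p) => { box.style.transform = `translateX(${p * 200}px)`; }, requestAnimationFrame);
```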

Always measure latency first: assess it before you decide you need to spend time optimizing things

Assess First
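Measuring first can be as simple as timing the suspect code with `performance.now()` before you touch it. The sketch below is rough: the `medianTimeMs` name and the 20-run default are assumptions. For serious work, use the browser's Performance panel or a benchmarking library.

```typescript
// Runs fn several times and returns the median duration in ms,
// which is less sensitive to one-off outliers (GC pauses, JIT warm-up) than the mean.
function medianTimeMs(fn: () => void, runs = 20): number {
  const samples: number[] = [];
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    fn();
    samples.push(performance.now() - start);
  }
  samples.sort((a, b) => a - b);
  const mid = Math.floor(samples.length / 2);
  return samples.length % 2 ? samples[mid] : (samples[mid - 1] + samples[mid]) / 2;
}

// Example: time a hypothetical hot path before deciding to optimize it.
const typical = medianTimeMs(() => {
  JSON.parse(JSON.stringify({ items: Array.from({ length: 1000 }, (_, i) => i) }));
});
console.log(`median: ${typical.toFixed(3)} ms`);
```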

What tools

can we use to

do all this?

Browser Dev Tools

(Quick Audit)

Lighthouse, Performance, and Network Tools

(Assess, Audit, Fix, Audit again)

User Simulation

Track render blocking

Watch the main thread for bottlenecks
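When watching the main thread, Lighthouse's Total Blocking Time is a handy summary: every task longer than 50 ms contributes its time beyond 50 ms. The sketch below shows that arithmetic. Lighthouse itself only counts tasks between First Contentful Paint and Time to Interactive, which this helper does not model. The usage comment relies on the Long Tasks API (`longtask` entries), which is not supported in every browser.

```typescript
// Sum of the portion of each task that exceeds 50 ms ("blocking" time).
function totalBlockingTime(taskDurationsMs: number[]): number {
  return taskDurationsMs.reduce((sum, d) => sum + Math.max(0, d - 50), 0);
}

// Browser usage:
// const durations: number[] = [];
// new PerformanceObserver((list) => {
//   for (const entry of list.getEntries()) durations.push(entry.duration);
//   console.log("Blocking time so far (ms):", totalBlockingTime(durations));
// }).observe({ type: "longtask", buffered: true });
```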

RUM / Synthetics

APM

Dashboards

Datadog

(ongoing insights and production mitigation)