Diagnostics

Software is Complicated

You are going to get it wrong.

Everybody does.

Because nobody really knows how to do this stuff!

Which is why, even after 50 years of trying, large-scale software projects continue to run over time and over budget.

...while also being under-featured and under-performant.

There Will Be Bugs

I don't want to depress you (too much) but, during development, software projects are always broken...

This is the natural state of a work-in-progress.

After all, if it wasn't broken, it would be finished!

This isn't to excuse sloppiness, of course, and

the "brokenness" should, ideally, be in the form of incompleteness --- but, let's face it, bugs do happen.

the "brokenness" should, ideally, be in the form of incompleteness --- but, let's face it, bugs do happen.

Fixing It

One of the particular problems with games and simulations

is that they are complex, dynamic, real-time systems

with a lot of mutating state.

is that they are complex, dynamic, real-time systems

with a lot of mutating state.

Such things are hard to debug.

Traditional methods such as diagnostic "print statements",

or even stepping through the execution in a debugger, are often a poor fit to this domain. If we attempt to apply the diagnostic tools of a 1970s-era batch-processing environment, then we are going to be caught in a painful mismatch!

or even stepping through the execution in a debugger, are often a poor fit to this domain. If we attempt to apply the diagnostic tools of a 1970s-era batch-processing environment, then we are going to be caught in a painful mismatch!

Visualising Dynamic State

The key to making our diagnostics fit the domain is to make them as visual, dynamic and (where appropriate) interactive

as the system that we are building / debugging.

as the system that we are building / debugging.

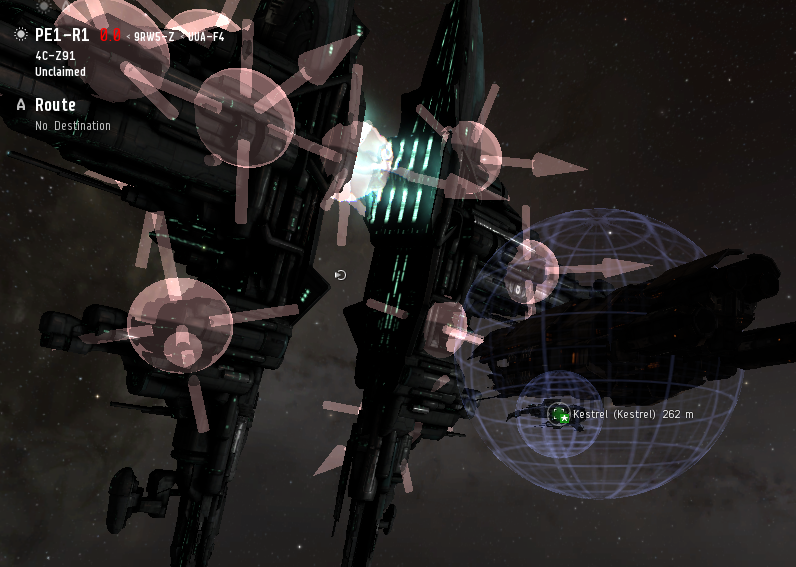

For example, I often add a diagnostic object-selection system which allows me to "pick" game entities at runtime and have them dump information about themselves to the screen, perhaps in some kind of "speech bubble" window.

Likewise, we can add an interactive text console that allows query commands (etc.) to be sent into the game at runtime.

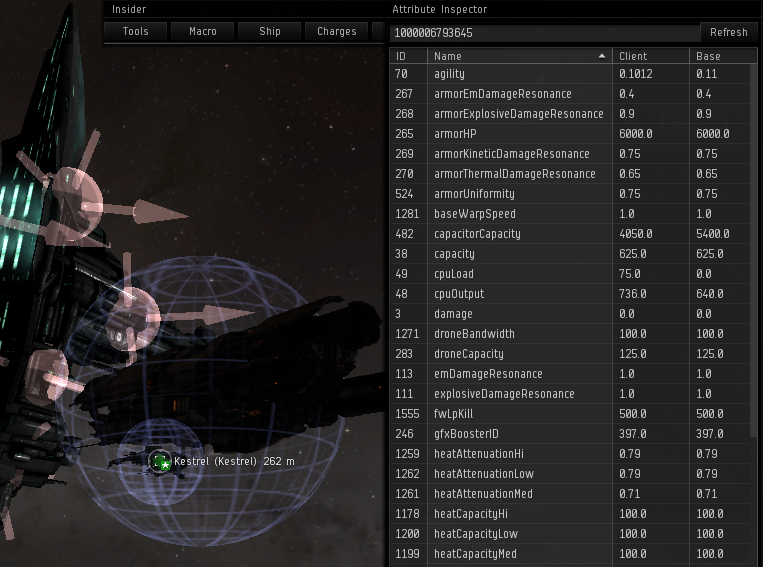

Eve Diagnostics

Viewing Attributes

Wading through Logs...

(it's not all pretty graphics)

Debug Pages

For important "manager" objects I also implement dedicated

"debug pages" which allow them to dump relevant state info (e.g. a summary of all the active entity types from the entityManager) to an interactive onscreen overlay.

"debug pages" which allow them to dump relevant state info (e.g. a summary of all the active entity types from the entityManager) to an interactive onscreen overlay.

It then becomes possible to navigate all of the manager objects at run-time, to see what they are doing, and figure out what is actually happening inside their otherwise "invisible" internal data structures.

Drilling In

With good visualisation, it at least becomes

possible to see what is going on.

possible to see what is going on.

By making those visualisations interactive,

we can more easily "probe" a running system

to get a feel for its actual behaviour.

we can more easily "probe" a running system

to get a feel for its actual behaviour.

This lets us "drill in" to the core of an observed problem,

and helps us to localise the cause, which is pretty much

the first (and, often, the most significant)

step in any debugging exercise.

and helps us to localise the cause, which is pretty much

the first (and, often, the most significant)

step in any debugging exercise.

Most Bugs Are Simple

..and, with good diagnostic infrastructure,

most of them are easy to find too.

most of them are easy to find too.

But some aren't.

For one thing, if you can't reproduce the bug,

you might struggle to fix it --- or, relatedly,

struggle to demonstrate that you have fixed it.

you might struggle to fix it --- or, relatedly,

struggle to demonstrate that you have fixed it.

Have you ever experienced a "Heisenbug"?

Need Repro!

Bugs which are difficult to reproduce are the bane of

many developers' lives: they are rare enough to be

hard to "capture", but common enough that you

can't release the game without fixing them.

many developers' lives: they are rare enough to be

hard to "capture", but common enough that you

can't release the game without fixing them.

Some "QA" departments actually use perpetual video-capture on their testers, so that the existence of a bug, and the apparent path to triggering is at least recorded.

But, where possible, I try to use a better system...

Perfect Diagnostic Replays

Wouldn't it be nice if we had those?

Well, we can! (sometimes).

Most of the games I've written implement such a system,

by simply recording all user-input to a little replay-buffer

(and, from there, to a file) and carefully designing the game code to be perfectly repeatable under equivalent inputs.

by simply recording all user-input to a little replay-buffer

(and, from there, to a file) and carefully designing the game code to be perfectly repeatable under equivalent inputs.

You've perhaps wondered why I always do

"gatherInputs" as a single, isolated step?

"gatherInputs" as a single, isolated step?

Answer : It lets me (almost trivially) do "replayInputs" too!

Inputs: Gotta Catch 'Em All!

To make a robust replay system, you need to have a very

clear idea of what all of your inputs are, and when/where

they enter the system...

clear idea of what all of your inputs are, and when/where

they enter the system...

It's remarkably easy to forget about things like:

"time" (e.g. any calls to the live system clock or timers),

"randomness" (the "seeds" must be recorded), and

"async loading" (the arrival of an async load

is an "input", like any other).

"time" (e.g. any calls to the live system clock or timers),

"randomness" (the "seeds" must be recorded), and

"async loading" (the arrival of an async load

is an "input", like any other).

Also, threads are a pain.

(Because of their unpredictable scheduling).

In Principle...

...if we can catch all of our inputs in some controlled fashion, and record them for future playback --- and if we build our code so that the evolution of its state is repeatably based only on those inputs (i.e. like a big "state machine") --- then we

can make a good diagnostic replay system.

can make a good diagnostic replay system.

By simply switching our input routines into a "playback" mode, they can be set to re-inject a stream of previously recorded inputs, and everything will proceed as before...

...except, this time, you can pause and single-step and investigate things with all the debug tools on hand!

How?

The key to making this work is to clearly separate the "logical" inputs of the replay stream from the live "diagnostic" inputs that you combine them with.

As long as the diagnostics are "passive", you can do whatever you like without damaging the integrity of the replay.

This includes stuff like single-stepping, changing the camera position, toggling wireframe on/off, and so on...

As long as the rendering methods don't perform any logic!

In Practice...

..it can actually be quite tricky to make this work,

but it's often worth it.

but it's often worth it.

It requires discipline, certainly, but much of that discipline (e.g. separating the logic from the rendering, controlling "randomness", taming unpredictable threads)

is general good practice anyway.

is general good practice anyway.

Self-Checking

You can also make the system somewhat self-checking

by dumping out occasional (whole or, more likely, partial)

state "snapshots", and then testing whether the snapshots

match the reality during playback.

by dumping out occasional (whole or, more likely, partial)

state "snapshots", and then testing whether the snapshots

match the reality during playback.

This allows any possible "divergence bugs" in the system

to be auto-detected and, hopefully, fixed before you

stumble across your next hard-to-repro bug.

to be auto-detected and, hopefully, fixed before you

stumble across your next hard-to-repro bug.

This is particularly useful for catching those pervasive "uninitialised data" bugs in a C or C++ program.

Fast Forward

If you make sure that the rendering process doesn't have any logical side-effects (and it shouldn't), then you can implement a crude fast-forwarding system on the playback, simply by dropping some of the renders.

In particular, you can fast-forward to the time at which the bug (or whatever) you are investigating is about to occur,

even if it is inside a long replay session.

even if it is inside a long replay session.

(I used this to find and fix a really tricky, game-breaking, release-blocking bug in Crackdown, for example).

Rewind?

Ah, if only...

Implementing rewind isn't really possible in the kind of replay system that I'm advocating here, because the update logic of a game is not directly "reversible" in general...

There is more than one way to arrive at a particular state in

the simulation, so there isn't any automatic way of working backwards to the previous ones without extra state-tracking.

the simulation, so there isn't any automatic way of working backwards to the previous ones without extra state-tracking.

However, explicit state-dumping does allow this, but it's more expensive to implement. A compromise is possible though.

Rewind Compromises

If we dump out occasional state dumps (e.g. every 30 seconds or something), we can jump back to those, and then quickly play forward to the point that we actually care about.

This approach requires the state dumps to be "perfect" and "restorable" though, which means they have to be pretty big.

Other approaches rely on using approximate state dumps

(e.g. just positions), which then allows for easy forward-and-rewind (and, indeed, "random access") playback, but without enough detail to let you actually trace through the code.

(e.g. just positions), which then allows for easy forward-and-rewind (and, indeed, "random access") playback, but without enough detail to let you actually trace through the code.

Barriers

Two big developments in recent years have made diagnostic replay systems a tougher proposition to implement...

...one is the advent of MMO type games,

in which the total amount of user input data is massive

(often too massive to fully record)

in which the total amount of user input data is massive

(often too massive to fully record)

...the other is the increasing reliance on hardware parallelism and, specifically, the use of "threads" to access this.

BTW: I think threads are just a terrible idea, and we should replace them with a more "hygienic" model e.g. tasks / jobs.

Summary

You should think about the diagnostics of your program

as a core part of your implementation strategy.

as a core part of your implementation strategy.

In the case of games and simulations, you need diagnostics which are as dynamic, real-time and interactive as the thing you are building.

Replay Systems can be super useful, and are a

great tool to have when you can get away with them.

great tool to have when you can get away with them.

These techniques can make you seem like a miracle-worker.

(Sufficiently advanced tech is indistinguishable from magic!)

(Sufficiently advanced tech is indistinguishable from magic!)

When All Else Fails...

This one is a true "expert strategy". Use with care.