Moore's Law

History in Context

Dubious Definitions of "History"*:

- History is just one damned thing after another...

- What is history but a fable agreed upon?

- An account mostly false, of events mostly unimportant.

However, it has also been said (by Cicero) that:

"The causes of events are ever more interesting than the events themselves..."

*Be skeptical about the attributions of such quotes. They're often wrong, especially when Churchill is involved.

...but first, a digression...

A Series Of Tubes

On June 28th, 2006, former Alaskan Senator Ted Stevens told the world:

"The internet is not a big truck. It’s a series of tubes."

This was something of a misunderstanding...

These are tubes --- vacuum tubes, aka thermionic valves.

Diodes

The simplest vacuum tube, the diode, is similar to an incandescent light bulb with an added electrode inside.

When the bulb's filament (cathode) is heated red-hot, electrons are "boiled" off its surface into the vacuum inside the bulb, and can be attracted to a positively charged plate (anode).

Triodes

The triode adds a third element -- the "grid", which is used to control the flow from cathode to anode.

The voltage difference between grid and cathode governs the flow of electrons from cathode to anode.

This allows for electrically controlled switching, and even amplification, of the input signal.

What can you do with these?

Well, among other things, you can build high-speed digital electronic computers to help defeat Hitler and the Nazis!

(Later, you'll also be able to use them for playing games.)

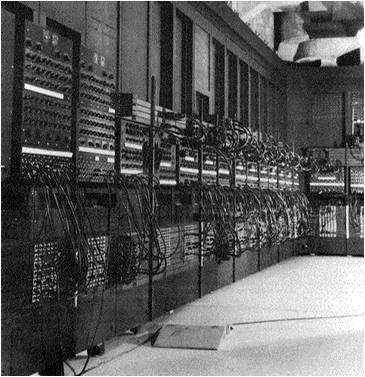

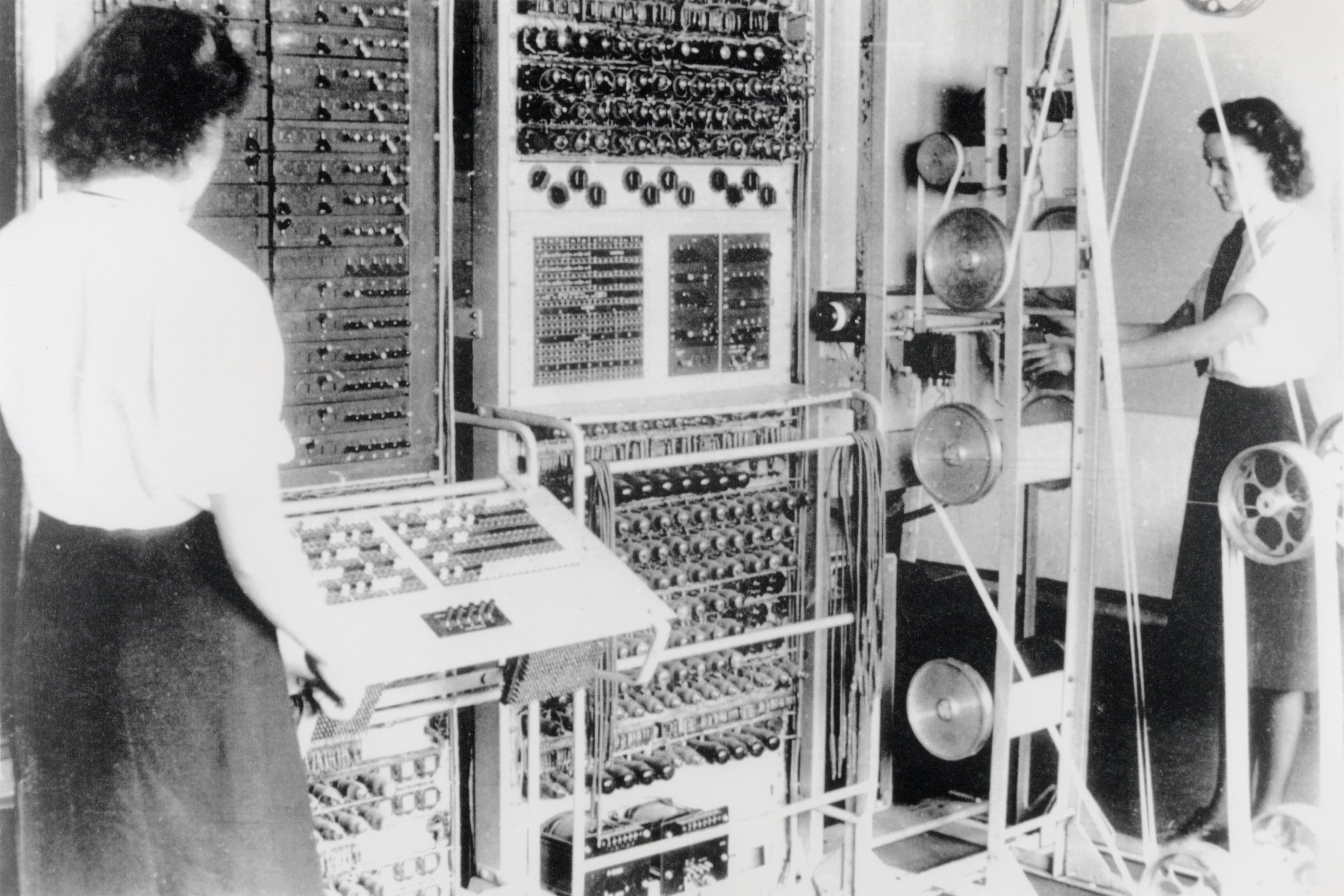

The Original Valve Steam Machine?

Using about 1,500 vacuum tubes (2,400 for Mk2), in 1944 Colossus replaced a mostly electro-mechanical system (the "Heath Robinson") for cracking Nazi codes.

Well, it did use valves (aka tubes), but it didn't use steam...

Once Colossus was built and installed, it ran continuously, powered by diesel generators (the steam-driven "mains" electricity supply being too unreliable in wartime).

Valves improved on relays, but were BIG and ran hot.

(After all, they were basically just overgrown light-bulbs!)

"As We May Think"

In 1945, Vannevar Bush wrote a classic essay called "As We May Think", in which he speculated about a future where miniaturisation made it possible for people to store huge amounts of information in (comparatively) tiny amounts of physical space.

It contains one of the most brilliant and prescient understatements I've ever read...

"The world has arrived at an age of cheap complex devices of great reliability; and something is bound to come of it."

Scaling the Valve

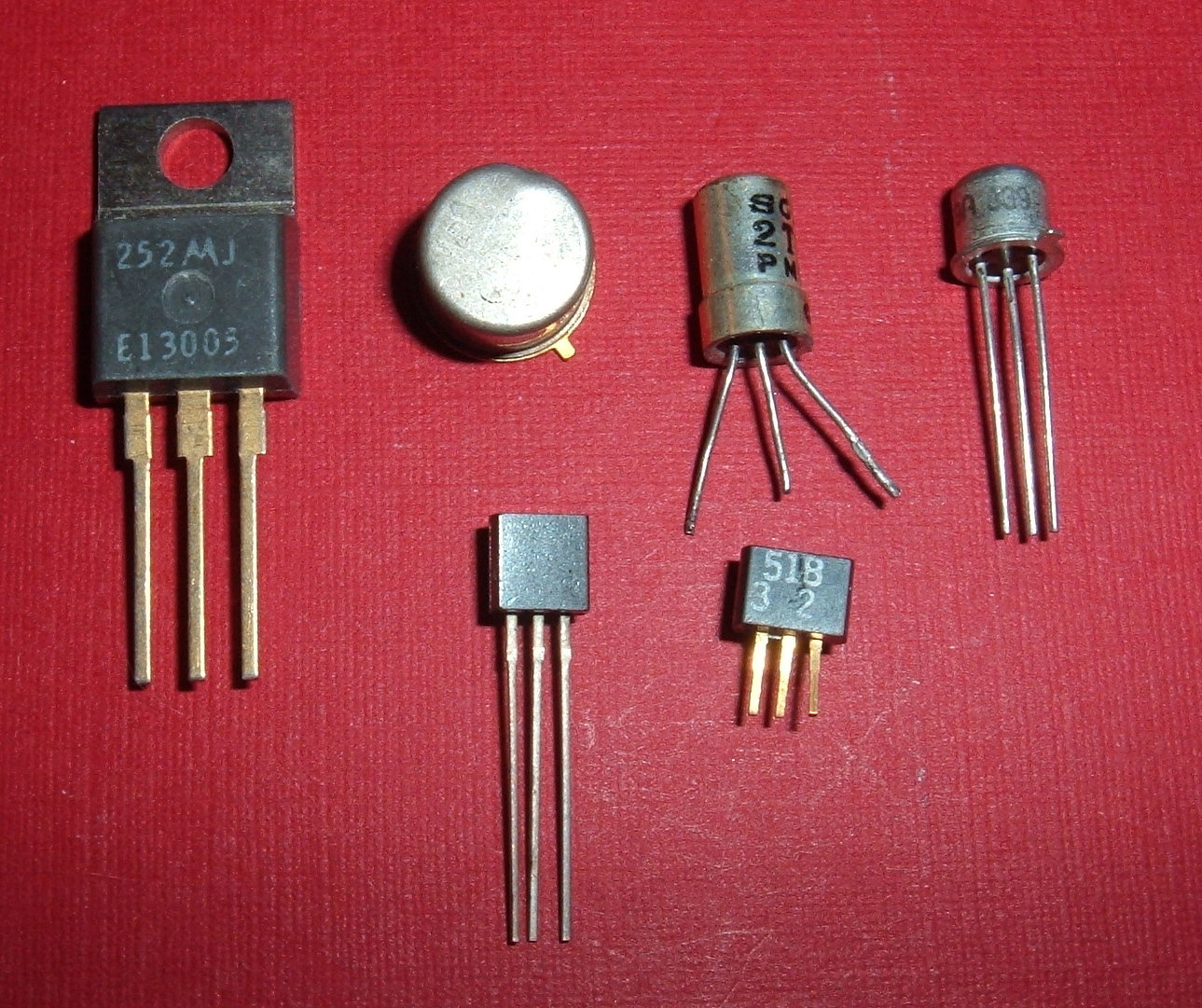

In 1947 a team at Bell Labs invented (or, arguably, re-invented) a small, low-powered, non-thermal, efficient, reliable, shock-resistant equivalent of the triode valve.

They called it the transistor.

And it changed the world.

The Transistor

(have you ever seen one in person?)

Lots of Transistors

Progress!

From our brief review of the history of computing in the previous lecture, it's apparent that there was a tremendous amount of progress made over a fairly short period of time following the introduction of the transistor and, from there, the "integrated circuit" (aka "microchip") in the late 1950s.

There were obviously many factors involved in this, but the underlying technological enabler was the remarkable increase in the number of transistors per chip.

From PONG to Pac-Man

In just 8 years! (1972 to 1980)

Thanks to a several-thousand-fold increase in transistor count.

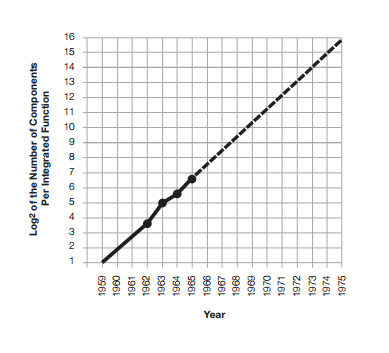

Moore's 1965 Paper

In 1965, an engineer called Gordon Moore (who would go on to co-found Intel in 1968) wrote an article for Electronics magazine in which he anticipated a doubling of the number of transistors per chip roughly every year.

In around 1970, Carver Mead dubbed this prediction "Moore's Law".

The original article makes for very interesting reading, and it's only 3 pages long, so do please give it a read...

What Moore Actually Said

"Integrated circuits will lead to such wonders as home

computers—or at least terminals connected to a central

computer—automatic controls for automobiles,

and personal portable communications equipment.

The electronic wristwatch needs only a display

to be feasible today."

-- Gordon Moore, 1965

The Pulsar watch arrived in 1972. James Bond had one.

Cost per Component

"There is a minimum cost at any given time in the evolution of the technology. At present [in 1965], it is reached when 50 components are used per circuit...

The complexity for minimum component costs has increased at a rate of roughly a factor of two per year...

Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years."

The Cost Graph

Note the use of logarithmic scales.

The Component Graph

The basis for Moore's Extrapolation

Prediction is Difficult

Moore also said:

"By 1975, the number of components per integrated circuit for minimum cost will be 65,000."

Reality said:

"The Intel 8085, introduced in 1976, had 6,500 transistors."

However, Moore was counting "components"... and Intel was apparently developing an (unreleased) 32,000 component (16,000 transistor) CCD memory chip in 1975.
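Moore's 65,000 figure is what ten yearly doublings produce. As a minimal sketch, assume a 1965 starting point of 64 (2^6) components per chip. That value is our assumption for illustration: the quote above gives 50 as the cost-optimal count, and starting from 50 would give 51,200 instead. The sketch runs the extrapolation and compares it with the 8085's 6,500 transistors.

```python
# Assumed starting point for illustration: 2**6 = 64 components in 1965.
START_YEAR = 1965
START_COMPONENTS = 2 ** 6
INTEL_8085_TRANSISTORS = 6_500  # the 1976 figure quoted above


def projected_components(year: int, doubling_years: float = 1.0) -> float:
    """Components per chip if the count doubles every `doubling_years` years."""
    doublings = (year - START_YEAR) / doubling_years
    return START_COMPONENTS * 2 ** doublings


prediction_1975 = projected_components(1975)
print(prediction_1975)  # 65536.0, i.e. Moore's "65,000"
print(f"about {prediction_1975 / INTEL_8085_TRANSISTORS:.0f}x the 8085's count")
```

Passing `doubling_years=2.0` shows what a slower two-year doubling would have predicted for the same decade (2,048 components).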

Version 2.0

Actually, in 1975, Moore had already tweaked his prediction: he clarified the various contributions to the doubling trend (smaller components, larger chips, cleverer designs) and, based on his belief that the potential for "cleverer designs" was now reaching a limit, he revised his prediction downward to a doubling every two years.

Here's an Intel-specific "infographic" putting all of this into context.

A less Intel-centric version appears on the next slide...

Transistor Counts (to 2011)

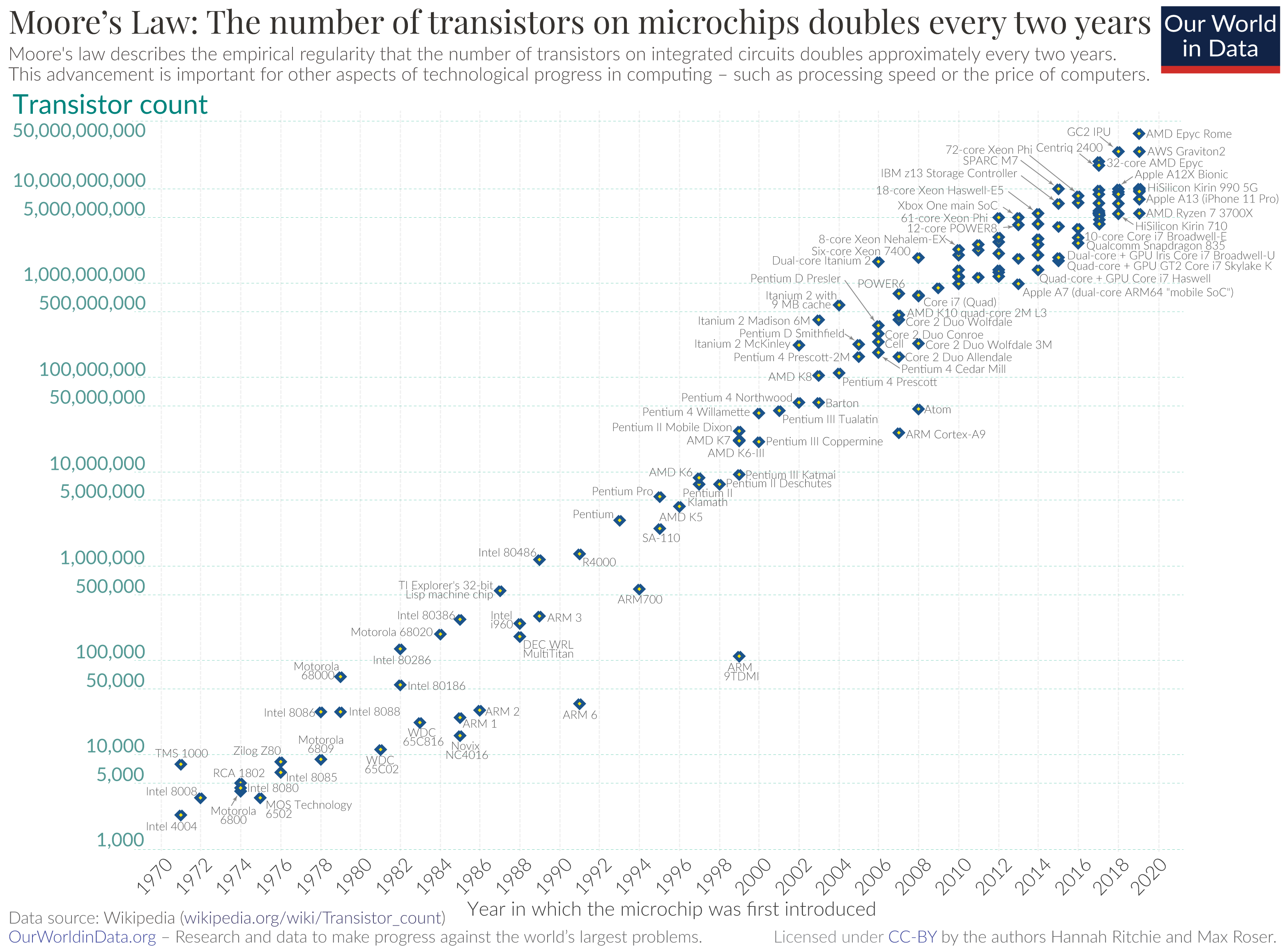

Transistor Counts (to 2020)

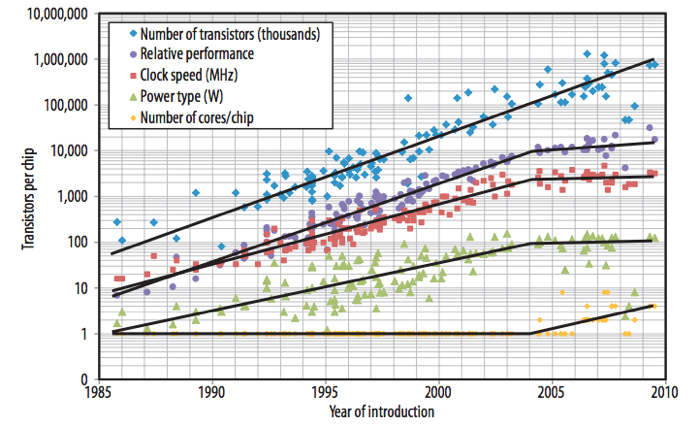

Performance

These increases in transistor count also have an effect on overall performance: more transistors can perform more computations!

Also, smaller transistors can be run at higher clock-speeds, which compounds the benefits.

This led Moore's colleague David House to observe that, under Moore's Law, overall chip performance would double every 18 months.


Exponential!

This form of growth (or, indeed, decay) is properly called "exponential". It means that the rate of change is proportional to the current value.

Which means you can also say:

"It changes by a fixed factor of f over some fixed period of time t."

It implies that, if you graph the trend on a logarithmic axis, it will be a straight line.

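Here is a quick numerical check of the "straight line" claim, as a sketch. The values below change by a factor f = 2 every t = 2 time units, which are arbitrary choices for illustration. The gap between successive logarithms comes out the same at every step, and that is exactly what a straight line on a log axis means.

```python
import math


def exponential(t: float, start: float = 100.0, f: float = 2.0, period: float = 2.0) -> float:
    """Value at time t if it changes by a fixed factor f every `period` time units."""
    return start * f ** (t / period)


times = range(0, 11)
logs = [math.log10(exponential(t)) for t in times]

# Successive differences of the logarithm are all the same size, so the
# points lie on a straight line when plotted on a logarithmic axis.
slopes = [b - a for a, b in zip(logs, logs[1:])]
print([round(s, 4) for s in slopes])  # ten copies of 0.1505, i.e. log10(2) / 2
```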

Commonly Misused!

Not Exponential!

"Exponential" does not just mean "it grows really fast".

...or, even, "it grows by an ever increasing amount".

In particular, functions such as x^2 are not exponential.

(but 2^x, a^(bx), e^x, etc. are)

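One way to see the difference is to look at the ratio between successive values. For an exponential, that ratio stays fixed. For x^2 it drifts towards 1 as x grows, even though x^2 itself keeps growing by ever larger amounts. A minimal sketch (the function name is our own):

```python
def growth_ratios(x: int) -> tuple[float, float]:
    """Return f(x+1)/f(x) for f(x) = x**2 and for f(x) = 2**x."""
    polynomial = (x + 1) ** 2 / x ** 2
    exponential = 2 ** (x + 1) / 2 ** x
    return polynomial, exponential


for x in (1, 2, 5, 10, 100):
    poly, expo = growth_ratios(x)
    print(f"x={x:>3}: x^2 ratio {poly:.4f}, 2^x ratio {expo:.1f}")
```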

This observation is, perhaps, a bit pedantic for general usage, but it matters a lot in technical fields and is worth remembering.

Top Tips

A doubling every 1 year gives 10 doublings per decade.

2^10 is 1024, so that's roughly a 1000-fold increase per decade.

A doubling every 2 years gives 5 doublings per decade.

2^5 is 32, so that's roughly a 30-fold increase per decade.

A doubling every 1.5 years gives about 6.7 doublings per decade.

2^6.7 is ~100, so that's roughly a 100-fold increase per decade (or, equivalently, 10-fold every 5 years).

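Here are the same rules of thumb computed rather than memorised, as a short sketch. The function names are illustrative. `years_for_factor` inverts the calculation to check the "10-fold every 5 years" claim.

```python
import math


def per_decade(doubling_period_years: float) -> tuple[float, float]:
    """Return (doublings per decade, overall fold increase per decade)."""
    doublings = 10 / doubling_period_years
    return doublings, 2 ** doublings


def years_for_factor(factor: float, doubling_period_years: float) -> float:
    """Years needed to grow by `factor` at the given doubling period."""
    return doubling_period_years * math.log2(factor)


for period in (1.0, 1.5, 2.0):
    doublings, fold = per_decade(period)
    print(f"doubling every {period} yr: {doublings:.1f} doublings, ~{fold:,.0f}x per decade")

print(f"10-fold at a 1.5-year doubling: {years_for_factor(10, 1.5):.2f} years")
```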

Learn Your Powers of Two

2^32 = 2^10 * 2^10 * 2^10 * 2^2

(because multiplying powers of the same base adds their exponents)

= 1024 * 1024 * 1024 * 4

= 4,294,967,296

Let's call it four billion.
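Here is the same trick in code, as a sketch (the helper name is our own). Split the exponent into handy chunks and multiply the pieces. Then use 2^10 ≈ 10^3 for a quick estimate.

```python
from math import prod


def power_of_two_by_parts(exponent_parts: list[int]) -> int:
    """Compute 2**sum(exponent_parts) as a product of smaller powers of two,
    since multiplying powers of the same base adds their exponents."""
    return prod(2 ** e for e in exponent_parts)


value = power_of_two_by_parts([10, 10, 10, 2])  # 1024 * 1024 * 1024 * 4
print(f"{value:,}")                             # 4,294,967,296
# 2**10 is about 10**3, so 2**30 is "about a billion" and 2**32 about 4 billion.
print(f"about {value / 1e9:.1f} billion")       # about 4.3 billion
```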

Why?

So, Moore's Law has been a pretty good guide to 50+ years of integrated circuit growth. But why does it hold?

The full set of reasons appears to be rather complex, but it looks like a combination of ongoing improvements in manufacturing technology, driven by economic factors (demand, growth), and building on its own prior results.

It's also a well-known target that everyone in the industry aims for in their planning, which leads it to be something of a self-fulfilling prophecy!

Predictive Power

Over the years Moore's Law has been used by a lot of smart people to anticipate future developments, extrapolate trends, and, in the words of Wayne Gretzky, "skate to where the puck is going, not to where it is."

Despite not being a true "Law", the fact that it actually gave some predictive power surely puts it ahead of most theories of history and/or economics, and arguably makes it something close to science. ;-)

Danny Hillis' Knob

I once met Danny Hillis, co-founder of the massively parallel supercomputer manufacturer Thinking Machines Corp., and he told me that he'd projected in the 1970s that, as a consequence of Moore's Law etc., there would eventually be more computers in the world than people.

"What will we do with them?" one man had asked, incredulously, "Put them in doorknobs?"

The next time Hillis visited the hotel where this took place, his room door had a computerised electronic lock in it.

Let's Take A Year Off!

Years ago, I read a paper by a researcher who was working on some highly complex data-intensive task. The data was going to take 4 years to process.

So, he proposed that his funders send him to the Bahamas for a year, to do nothing, trusting that Moore's Law etc. would, in that time, produce computers that were twice as powerful. Then they could buy one of those, and complete the work in a further 2 years (a year ahead of schedule).
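The researcher's argument can be turned into a toy model. This is a sketch under loudly stated assumptions, all of them ours. The job takes `work_years` on today's hardware. Hardware speed doubles every `doubling_years` (one year, as in the anecdote). The whole job runs on a single machine bought when the waiting ends. Under those assumptions, the best wait is slightly longer than a year.

```python
import math


def total_time(wait_years: float, work_years: float = 4.0, doubling_years: float = 1.0) -> float:
    """Years until the job is done if we wait `wait_years`, then buy a machine
    2**(wait/doubling) times faster and run a job that takes `work_years` today.
    Toy model: assumes smooth doubling and a single machine purchase."""
    speedup = 2 ** (wait_years / doubling_years)
    return wait_years + work_years / speedup


def best_wait(work_years: float = 4.0, doubling_years: float = 1.0) -> float:
    """Wait that minimises total_time, from setting its derivative to zero.
    If the job is short enough, waiting never helps, so clamp at zero."""
    return max(0.0, doubling_years * math.log2(work_years * math.log(2) / doubling_years))


print(total_time(0))  # 4.0: start now on today's hardware
print(total_time(1))  # 3.0: the Bahamas plan
w = best_wait()
print(f"best wait {w:.2f} yr, total {total_time(w):.2f} yr")  # best wait 1.47 yr, total 2.91 yr
```

In this model, the one-year holiday is close to optimal, and a 1-year job (`work_years=1.0`) is best started immediately.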

It was a win-win situation!

They didn't go for it though. :-(

Planning A Game

When you're planning a large-scale professional game (or, perhaps, one which you just expect to be around for a long period of time), using Moore's Law is hugely valuable...

One rule of thumb, with PC games, is that the best machine you can buy at the start of a 2 year project will be the minimum spec by the time you launch.

Misjudging this, in either direction, can be hazardous to your success. Moore's Law matters!

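As a rough sketch of why the rule of thumb is plausible, assume (as in House's observation) that performance doubles every 18 months. The function below estimates how much faster top-end hardware gets over projects of various lengths. The name `launch_headroom` is hypothetical, and real hardware generations arrive in steps, not smoothly.

```python
def launch_headroom(project_years: float, doubling_years: float = 1.5) -> float:
    """Factor by which top-end hardware improves over a project, assuming
    performance doubles smoothly every `doubling_years` years (an assumption)."""
    return 2 ** (project_years / doubling_years)


for years in (1, 2, 3, 4):
    print(f"{years}-year project: top-end hardware ~{launch_headroom(years):.1f}x faster at launch")
```

Under these assumptions, the new top end at launch of a two-year project is roughly 2.5x the machine you started on. That leaves the old top end looking more like an entry-level machine.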

Moore's Law In Action

Evolution of The Tomb Raider:

Will it continue?

In a finite world, exponential growth cannot continue indefinitely.

There is a (molecular) limit to how small a transistor can possibly be, and there are practical limits on handling electrical interference effects at truly tiny scales.

Difficulties related to power consumption and heat dissipation are also constraining how much faster the circuits can be clocked, which is why clock rates have increased so little in recent years...

Something Changed in 2004

(taken from this fascinating article)

Hitting the wall in 2020?

That's what this guy, Pentium Pro architect Bob Colwell (of Intel and DARPA), predicted in 2013...

On the other hand...

Throughout its life there have been claims that an end to Moore's Law is imminent, but a succession of technical breakthroughs has allowed it to continue.

There is still the possibility that some new form of technology will arise, allowing growth to continue beyond the expected near-term limits.

However, the tailing-off of clock speeds (about which the original law says nothing, remember!) is certainly real, alongside the corresponding move to multi-core systems.

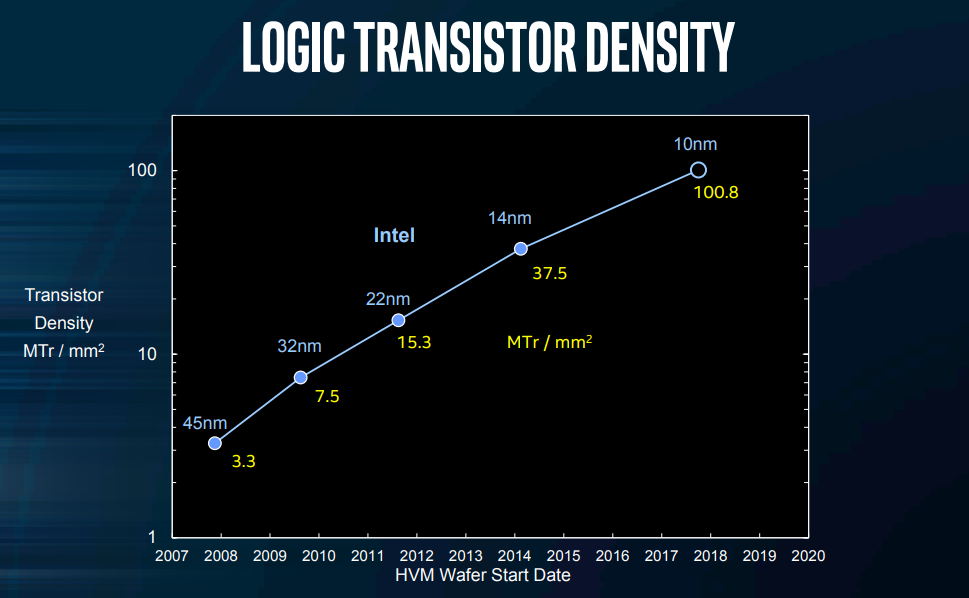

"3D" Transistors (from 2011)

Intel's "10nm" tech (2017)

Getting to 5nm is HARD

"The 5 nm node was once assumed by some experts to be the end of Moore's law.

Transistors smaller than 7 nm will experience quantum tunnelling through their logic gates.

Due to the costs involved in development, 5 nm is predicted to take longer to reach market

than the 2 years estimated by Moore's Law. Beyond 7 nm, major technological advances

would have to be made; possible candidates include vortex laser, MOSFET-BJT dual-mode transistor, 3D packaging, microfluidic cooling, PCMOS, vacuum transistors, t-rays, extreme ultraviolet lithography, carbon nanotube transistors, silicon photonics, graphene, phosphorene, organic semiconductors, gallium arsenide, indium gallium arsenide, nano-patterning, and reconfigurable chaos-based microchips."

-- Wikipedia, July 2017

The Actual Status in 2020?

The Future?

I don't know, but the transition to multi-core and super-parallelism is very real. It still seems likely that "computers" will continue to increase in their overall calculating abilities for a while yet, especially on mobile devices, which is the growth area.

- History is a pack of lies about events that never happened, told by people who weren't there.

- History is merely a list of surprises. It can only prepare us to be surprised yet again.

- History will be kind to me, for I intend to write it.*

(*That last one is attributed to Winston Churchill.)

Questions to Consider

How many computers are there in the world?

What counts as a computer?

How many transistors are in this room?

How many transistors per person?

Transistor Memories

Static RAM: Typically uses 6T (or 4T, 8T, 10T) per bit

Flash Memory: 1T (or less... 1/2T, 1/3T, 1/4T!) per bit
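To get a feel for the numbers in the questions above, here is a small sketch that turns memory sizes into approximate transistor counts using the per-bit figures listed. The fractional flash figures correspond to storing several bits in one cell (e.g. 1/3T per bit for three bits per cell). The memory sizes are arbitrary examples. The estimate ignores addressing, sensing, and control circuitry, so treat it as a lower bound.

```python
def memory_transistors(num_bytes: int, transistors_per_bit: float) -> float:
    """Approximate transistor count for the storage cells of `num_bytes` of memory
    (ignores addressing, sensing, and control circuitry)."""
    return num_bytes * 8 * transistors_per_bit


MIB = 2 ** 20
GIB = 2 ** 30

# Example sizes chosen for illustration only.
print(f"32 MiB of 6T SRAM:            {memory_transistors(32 * MIB, 6):,.0f}")
print(f"256 GiB of flash at 1/3T/bit: {memory_transistors(256 * GIB, 1 / 3):,.0f}")
```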

Here's another map of global mobile-phone ownership.