Rendering

(Part Two)

Recap

We've been looking at the process of artificial image generation, as implemented via our TVs and Monitors.

...and, as it turns out, it's all...

JUST AN ILLUSION!!

But it's a wonderful one!

MORPHEUS EXPLAINS

MIND BLOWN!

The Present Day

Of course, modern displays are typically LCDs (or similar)

and can be driven at much higher update rates than those

used by analogue film and television...

60 lies-per-second (aka Hz) remains common,

but higher rates such as 120Hz (or more)

are becoming fashionable.

Nevertheless, as currently configured, these displays are essentially "emulating" the characteristics of old CRTs,

and are subject to the same problems and techniques...

The Side-Effects of Scanning

If the frame buffer memory is being modified

while the scan is taking place, you can get a

confused "partial image" on the display.

This can manifest as "object flicker" if half-drawn images become visible on the display, or as "screen tearing"

if the scan ends up combining pixels from multiple

separate "frames" into a single hybrid picture.

Screen Flicker

A famously flickery version of Pac-Man:

Screen Tearing

Scanning is Tricky!

Mitigations

Yes, it's all very upsetting, but there are some

standard techniques for limiting the damage...

Racing The Beam

If you know exactly when the scan is being performed,

and you are able to construct your image progressively,

line by line, then it's theoretically possible to make sure

that you're only modifying the part of the frame buffer

which has already been shown or, alternatively,

the part which hasn't been shown yet.

If you do that, you'll never be "crossing the beam"

while it is scanning the image, so you will be

insulated from partial images.
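As a rough sketch of that rule (in TypeScript, with hypothetical names, and assuming the beam only moves downwards during the write and doesn't wrap around into the next frame), a write to a given row is only "safe" if the beam doesn't pass over that row while the write is in progress:

```typescript
// Hypothetical helper: rows are numbered from 0 at the top of the screen.
// beamRowAtStart / beamRowAtEnd are the rows being scanned when the
// write begins and when it ends (assumed to be within the same frame).
function crossesBeam(row: number, beamRowAtStart: number, beamRowAtEnd: number): boolean {
  return row >= beamRowAtStart && row <= beamRowAtEnd;
}

// A row is safe if it has already been shown (above the beam for the whole
// write) or hasn't been shown yet (below the beam for the whole write).
function isSafeToWrite(row: number, beamRowAtStart: number, beamRowAtEnd: number): boolean {
  return !crossesBeam(row, beamRowAtStart, beamRowAtEnd);
}
```

The hard part in practice is knowing where the beam is, which this sketch simply takes as given.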

Don't Cross The Streams

OK, it's not quite that bad...

In fact, the Atari 2600 (released in 1977) had to be programmed in this manner, because it didn't have enough memory for a full frame-buffer, and was only able to store the information for one image-line at a time.

The machine was therefore forced to compute each line

of the image while the TV was scanning it out.

This is utterly impractical, requires some completely

incredible programming heroism, and is the

subject of a nice little book.

...and an article in Wired.

Avoiding The Eye of Sauron

V-Sync

The simpler, brute-force, approach to avoiding the display

of partial images is simply to avoid writing anything

to the frame buffer while it is being scanned.

Historically, CRT-based systems had a short delay at the end (or start) of each "frame", during which the scanning beam from the electron gun would have to be temporarily "switched off" and then moved back to the top-left corner of the display for the next frame.

This was called the "vertical blanking interval".

Atari 2600 TV Frame

The VBLANK Interval

The VBLANK generally lasts for about 1 millisecond

(and has been retained in more modern video systems,

even though we're not generally waiting for

CRTs and big electromagnets these days).

Anyway, if you can write a new image into the frame buffer

during that blanking period, then you can prevent any

"partial images" from being seen by the user.

Then, during the other 15 milliseconds or so (at 60Hz) you can, erm,

do some other things instead...

Erm...

Unfortunately, drawing the image is usually the

time-consuming part, so this isn't ideal...

...but the basic idea led to a technique which is

much more practical, and is used to this day...

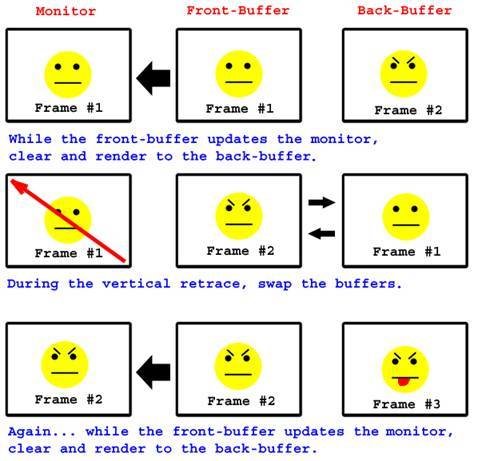

Double-Buffering

Instead of drawing the entire image to the frame buffer during the VBLANK we could, if we

had enough memory, prepare the next image in a separate buffer ("at our leisure") and

then simply copy it into place during the VBLANK.

If you can perform the copy to the "front buffer"

during the 1ms period of the VBLANK,

then this approach becomes viable.

...albeit at the expense of allocating another frame buffer.

Buffer Copy Illustration

Copy or Flip?

Better yet, instead of copying it, why not just tell the

graphics card to flip over to using the newly written

buffer and scan the next frame from there instead?

If this switch-over happens during the VBLANK,

it won't be visible, and we'll have solved

the partial image problem.
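To make the "flip" idea concrete, here is a minimal sketch in TypeScript. It is a toy model, not a real graphics API: the `DoubleBuffer` class and its members are hypothetical names, and a "buffer" is just an array of bytes. The point is that a flip swaps two references and copies no pixels at all:

```typescript
// Toy model: each buffer is a flat array of pixel values.
class DoubleBuffer {
  private front: Uint8Array; // the buffer the display scans from
  private back: Uint8Array;  // the buffer we draw into

  constructor(pixelCount: number) {
    this.front = new Uint8Array(pixelCount);
    this.back = new Uint8Array(pixelCount);
  }

  // Where the application should draw the next image.
  get drawTarget(): Uint8Array {
    return this.back;
  }

  // What the scan-out hardware would be reading.
  get scanSource(): Uint8Array {
    return this.front;
  }

  // Intended to be called during the VBLANK: swap the roles of the buffers.
  flip(): void {
    const previousFront = this.front;
    this.front = this.back;
    this.back = previousFront;
  }
}
```

After a `flip()`, the old front buffer becomes the new drawing target, so its contents are one frame stale and would normally be cleared or fully redrawn.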

Buffer Flip Illustration

Buffer Flipped Animation

Problem Solved?

Well... not quite.

In modern systems, if they are running in full-screen mode

(as, for example, console games do), this kind of buffer flipping is usually being done behind the scenes...

However, in a windowed environment (such as a browser), you are sharing the screen with other applications and

don't have full control over it, so you have to rely

on the buffer-copy approach instead.

Canvas To The Rescue!

When you're working with canvas, you are in fact writing

into a special "back buffer", and the browser will usually be configured to only perform the copy into the "front buffer" during the V-Sync period (if it possibly can).

So, V-Synced Double-Buffered copying is "built in"!

In other systems, you have to implement this yourself

(or choose to use some other approach).
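In a browser, the usual way to line your drawing up with that copy is `requestAnimationFrame`, which asks the browser to call you back before its next repaint. The sketch below is an assumption-laden illustration rather than canvas code: `runFrames` and `FrameScheduler` are hypothetical names, and the scheduler is passed in as a parameter so the loop isn't tied to the DOM.

```typescript
type FrameCallback = (timestampMs: number) => void;
// Anything that will call the callback once, "when the next frame is due".
type FrameScheduler = (callback: FrameCallback) => void;

// Draw a fixed number of frames, one per scheduled callback.
function runFrames(
  schedule: FrameScheduler,
  draw: (timestampMs: number) => void,
  frameCount: number
): void {
  let remaining = frameCount;
  const onFrame: FrameCallback = (timestampMs) => {
    draw(timestampMs); // all canvas drawing for this frame would go here
    remaining -= 1;
    if (remaining > 0) {
      schedule(onFrame); // ask to be called again for the next frame
    }
  };
  if (remaining > 0) {
    schedule(onFrame);
  }
}
```

In a page, the scheduler would typically be `(cb) => { window.requestAnimationFrame(cb); }`, and `draw` would issue its canvas calls using the timestamp it is given.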

Slow Down

Double-buffering with V-Sync usually works very well,

but is not without its problems.

The main drawback is that we sometimes have to wait for the graphics hardware to finish with the current "front buffer" before we can start using it again.

In a well-balanced system running at the full hardware frame-rate, this waiting period is minimal, and causes few problems...

But!

But, if a particular frame takes a little too long to draw, you might be forced to wait for

almost an entire frame-period before catching the next VBLANK.

This can cause massive temporary slowdowns, especially in games which haven't been specially written to cope with "late frames".

This is something you may have noticed during

busy scenes on a console game...

Console Fail

...e.g. the game expects to be running at 60FPS, say (i.e. drawing a frame in about 16ms), but a bunch of on-screen explosions or some hectic burst of activity causes some extra load and means that the frame takes, perhaps, 20ms to draw.

In so doing, it misses the "scheduled" VBLANK by 4ms,

and then has to wait an extra 12ms for the next one!

Idle Waiting

During this 12ms delay the game can't

really do anything:

The front-buffer is in use, being rescanned (with the same image as

the frame before -- doh!), and the back-buffer can't

be touched because that image hasn't been shown yet.

So, the game just waits.

If a series of these slightly slow frames occurs,

the perceived frame-rate will "drop off a cliff" to just 30FPS.

(Or, if the game runs at 30FPS normally,

it will drop down to 20FPS).
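The arithmetic behind the "cliff" can be sketched in a few lines of TypeScript. This is a simplified model (the `vsyncedFrame` function and its field names are hypothetical): it assumes plain double-buffering, where a finished frame can only be shown at the next refresh and nothing else can be drawn in the meantime.

```typescript
interface VsyncResult {
  refreshesUsed: number; // how many display refreshes this frame occupies
  idleMs: number;        // time spent waiting for the VBLANK after drawing
  effectiveFps: number;  // frame-rate if every frame took this long
}

function vsyncedFrame(drawMs: number, refreshHz: number): VsyncResult {
  const periodMs = 1000 / refreshHz;
  // A frame can only be shown on a refresh boundary, so round up.
  const refreshesUsed = Math.max(1, Math.ceil(drawMs / periodMs));
  const shownAtMs = refreshesUsed * periodMs;
  return {
    refreshesUsed,
    idleMs: shownAtMs - drawMs,
    effectiveFps: 1000 / shownAtMs,
  };
}
```

With an exact 60Hz period (about 16.7ms, rather than the rounded 16ms used above), `vsyncedFrame(20, 60)` occupies 2 refreshes, idles for roughly 13ms and yields an effective 30FPS. A frame that takes 35ms, just over the budget of a 30FPS game, occupies 3 refreshes and yields 20FPS.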

It Gets Worse!

Furthermore, if the game logic is working on "frames"

(as in many console games), then you'll also see things MOVING more slowly (not just DRAWING more slowly).

i.e. If an object's velocity is being computed as a

"distance per frame" instead of a "distance per second",

then the presence of slow frames will cause a

proportionate slowdown in movement speeds.
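Here is a small TypeScript comparison of the two approaches. The function names and the speeds (5 units per frame, 300 units per second) are made up for illustration; they are chosen so that both agree at 60FPS.

```typescript
// "Distance per frame": moves the same amount every frame, however long it took.
function stepPerFrame(position: number, speedPerFrame: number): number {
  return position + speedPerFrame;
}

// "Distance per second": scales the movement by the frame's duration.
function stepPerSecond(position: number, speedPerSecond: number, dtSeconds: number): number {
  return position + speedPerSecond * dtSeconds;
}

// Run both schemes for a given duration at a given (constant) frame-rate.
function simulate(fps: number, seconds: number): { perFrame: number; perSecond: number } {
  const frameCount = Math.round(fps * seconds);
  const dtSeconds = 1 / fps;
  let perFrame = 0;
  let perSecond = 0;
  for (let i = 0; i < frameCount; i++) {
    perFrame = stepPerFrame(perFrame, 5);
    perSecond = stepPerSecond(perSecond, 300, dtSeconds);
  }
  return { perFrame, perSecond };
}
```

Over one second at 60FPS, both objects travel about 300 units. At 30FPS the per-frame object only travels 150 units, i.e. it moves at half speed, while the per-second object still covers about 300.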

This is completely horrible, and potentially avoidable,

but it still happens.

The Corleone Solution:

"Triple Buffering"

"The family had a lot of buffers!"

-- The Godfather: Part II

Instead of waiting for the current front-buffer to become available at

the VBLANK, we can just move our drawing into some 3rd buffer and

continue working there without delay. Then, when the VBLANK arrives,

the "buffer flip" occurs as before, but now it is essentially happening

in the background and it doesn't slow us down!
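One way to picture the bookkeeping is the toy TypeScript model below. It is a sketch under stated assumptions, not how any particular driver does it: the `TripleBuffer` class is hypothetical, buffers are identified by the indices 0, 1 and 2, and if two frames finish between VBLANKs the older one is simply dropped.

```typescript
class TripleBuffer {
  front = 0;                   // buffer currently being scanned out
  ready: number | null = null; // finished frame waiting for the next VBLANK
  draw = 1;                    // buffer we are currently drawing into

  // Called when the application finishes drawing a frame. It never waits.
  finishFrame(): void {
    if (this.ready === null) {
      this.ready = this.draw;
      // Move on to whichever buffer is neither front nor ready (0 + 1 + 2 = 3).
      this.draw = 3 - this.front - this.ready;
    } else {
      // A finished frame was still waiting: replace it with the newer one
      // and reuse its buffer for drawing (assumption: stale frames are dropped).
      const stale = this.ready;
      this.ready = this.draw;
      this.draw = stale;
    }
  }

  // Called at each VBLANK: show the waiting frame, if there is one.
  onVblank(): void {
    if (this.ready !== null) {
      this.front = this.ready;
      this.ready = null;
    }
  }
}
```

Note that `finishFrame()` always leaves a buffer free to draw into, which is exactly what the third buffer buys us.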

Is it worth it?

With this approach, although we might still have

the occasional late-frame, the consequences tend

to be less severe. Instead of "falling off the FPS cliff",

the system will degrade more

gradually...

which is clearly preferable.

However, it requires allocating an extra buffer,

and screen-buffers are generally large enough

(and video memory scarce enough) that this

isn't an easy trade-off to balance in practice.

Adaptive V-Sync

An alternative to having yet-more-buffers is simply

to "admit defeat" on the late frames and disable

the v-sync when you are running slow.

If you do that, you still avoid partial images

at high frame rates (where v-sync is enabled), but will accept "tearing" on

the slow frames as an alternative to falling off the FPS cliff.

...and avoid having to spend more of your precious

video memory on a third (and, possibly, rarely-used) buffer.
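The decision itself is simple. As a minimal sketch (the function name is hypothetical, and real drivers may well use a smarter heuristic than looking at a single frame), it boils down to comparing the last frame's draw time against the refresh period:

```typescript
// Wait for the VBLANK only if the last frame fit within one refresh period.
function shouldWaitForVblank(lastFrameMs: number, refreshHz: number): boolean {
  const periodMs = 1000 / refreshHz;
  return lastFrameMs <= periodMs;
}
```

So at 60Hz a 12ms frame stays synced, while a 20ms frame is presented immediately and may tear.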

"FPS" is nonsense anyway!

There is a tendency in the gaming community to place a lot of stock in "FPS" rates, sometimes to the point of absurdity.

For one thing, there are definite diminishing-returns to increasing your frames-per-second beyond a certain limit,

and it is basically pointless to have an FPS higher than

your actual screen refresh-rate.

(The only defence being that it arguably reduces

"input lag", but there are better ways of achieving that).

Dodgy Benchmarks

"Average FPS" benchmarks ignore how important FPS

variance is to the smoothness of the play experience.

It is often better to have a lower, but more steady,

frame-rate than to have a higher, but erratic, one.
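A quick TypeScript illustration, using two made-up frame-time traces (the numbers and the `summarize` helper are invented for the example):

```typescript
interface FrameStats {
  averageFps: number;   // what an "average FPS" benchmark would report
  worstFrameMs: number; // the longest single frame in the trace
}

function summarize(frameTimesMs: number[]): FrameStats {
  const totalMs = frameTimesMs.reduce((sum, ms) => sum + ms, 0);
  const worstFrameMs = frameTimesMs.reduce((worst, ms) => Math.max(worst, ms), 0);
  return {
    averageFps: (1000 * frameTimesMs.length) / totalMs,
    worstFrameMs,
  };
}

// A steady trace: every frame takes 20ms.
const steady = summarize([20, 20, 20, 20, 20, 20]);
// An erratic trace: frames alternate between 10ms and 26ms.
const erratic = summarize([10, 26, 10, 26, 10, 26]);
```

The erratic trace "wins" on average FPS (about 55.6 versus 50), even though its worst frame takes 26ms instead of 20ms and its frame times keep jumping around.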

Furthermore, FPS isn't even a very good metric. It occupies

an "inverse time" space (i.e. something per second), when

what really matters is time-per-frame. Talking about an improvement of e.g. "5 FPS" has no absolute meaning.

Learn to think in milliseconds per frame instead.
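To see why a "5 FPS improvement" has no fixed meaning, convert it into time saved per frame. A tiny TypeScript sketch (the helper names are just for illustration):

```typescript
function msPerFrame(fps: number): number {
  return 1000 / fps;
}

// Time saved on every frame when going from one frame-rate to another.
function msSaved(fromFps: number, toFps: number): number {
  return msPerFrame(fromFps) - msPerFrame(toFps);
}
```

Going from 30 to 35 FPS saves about 4.8ms per frame, from 60 to 65 FPS saves about 1.3ms, and from 120 to 125 FPS saves about 0.3ms. The "same" 5 FPS is worth very different amounts of actual work.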

GPU Weirdness

A particularly subtle problem occurs in the case of 3D rendering on modern "Graphics Processing Units"...

Modern GPUs typically work on a big buffer of drawing commands which have been generated by the CPU,

with the GPU actually working a few frames behind.

In extreme cases, variation between the time taken to generate frames on the CPU,

and the time taken for them to flow through the GPU, can lead to some anomalies...

Anomalies?

The rhythm of CPU and GPU processing can fall out-of-sync in such a way that,

while the output FPS appears to be

smooth and regular, the underlying CPU time-deltas

are erratic, leading to jerky motion even at high FPS.

For example, if we switch from a GPU-bound frame

(where the GPU buffer is full, and the CPU waits for it)

to a heavily CPU-bound frame, the GPU will have time to work through its backlog, leading to a mini-surge of jerky

"too fast frames" when the CPU has finally recovered.

A Summary of Scanning

A naive rendering system would be prone to unwanted "partial images" exhibiting both "flickering" and "tearing".

V-Sync prevents you from seeing "partial images"

by only modifying the Frame Buffer in the period

when it isn't being scanned (i.e. during the VBLANK).

Double-buffering gives you the freedom to create your

next image "at any time" (i.e. not just in the VBLANK)

without the "work in progress" ever being seen.

This approach prevents "flickering".

Triple-buffering can reduce the delays caused by

waiting for a V-Synced buffer-switch. This provides

some protection against "falling off the frame-rate cliff",

at the expense of allocating an additional screen buffer.

Having a consistent frame-rate can be more important

to a good user-experience than merely having a high one.

As a developer, you should think in terms of

"(milli-)seconds per frame", not "frames per second".