ENPM809V

Kernel Internals - Part 2

What we will go over:

- System Calls in the Kernel

- Devices

- Kernel Memory Management

- Virtual Memory

- Virtual File System

System Calls


- Who remembers what a system call is?

- Allows user-facing applications to request the kernel to take certain actions

- Write, Open, send network data, get PID

- Think of it as an API to the operating system


How are they defined?

- Define syscalls by using the SYSCALL_DEFINE<n> macro, where n is the number of arguments (0-6)

- Example: SYSCALL_DEFINE5(example_func, type1, arg1, ..., type5, arg5)

- The prototype it turns into: asmlinkage long sys_example_func(type1 arg1, ..., type5 arg5)

How are they executed?

Executing System Calls

- Syscall instruction - https://www.felixcloutier.com/x86/syscall

- libc wrapper functions - see man 2 write, man 2 read
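A minimal sketch of the syscall instruction in action, assuming x86_64 Linux and GCC/Clang inline-assembly syntax (raw_syscall0 and raw_syscall3 are hypothetical helper names, not a kernel or libc API). The syscall number goes in rax and the first three arguments in rdi, rsi, and rdx; the instruction itself clobbers rcx and r11. Unlike the libc wrappers from man 2, the raw instruction reports errors as a negative errno value instead of setting errno.

```c
#include <errno.h>          /* EBADF etc., for checking raw return values */
#include <sys/syscall.h>    /* SYS_getpid, SYS_write */
#include <unistd.h>         /* getpid(), for comparison */

#if !defined(__x86_64__)
#error "this sketch assumes x86_64 Linux"
#endif

/* System call with no arguments: number in rax, result back in rax. */
long raw_syscall0(long nr)
{
    long ret;
    __asm__ volatile ("syscall"
                      : "=a"(ret)
                      : "a"(nr)
                      : "rcx", "r11", "memory"); /* syscall overwrites rcx/r11 */
    return ret;
}

/* Three arguments travel in rdi, rsi, rdx (x86_64 Linux syscall ABI). */
long raw_syscall3(long nr, long a1, long a2, long a3)
{
    long ret;
    __asm__ volatile ("syscall"
                      : "=a"(ret)
                      : "a"(nr), "D"(a1), "S"(a2), "d"(a3)
                      : "rcx", "r11", "memory");
    return ret;
}
```

For example, raw_syscall0(SYS_getpid) returns the same PID as getpid(), and raw_syscall3(SYS_write, -1, ...) comes back as -EBADF because the kernel rejects the invalid file descriptor.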

System Call Table

- Exactly as it sounds: a table for looking up system calls

- When making a system call, the user-space application refers to a system call number

- The kernel then looks it up in the sys_call_table, which maps each number to the proper syscall function.

- arch/x86/entry/syscall_64.c

- include/linux/syscalls.h

- Syscall numbers are a stable ABI in Linux and do not change between kernel versions (unlike Windows, where they can change between builds)
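The lookup can be modeled in userspace as an array of function pointers indexed by the syscall number, with a bounds check before the call. This is a sketch only: toy_sys_call_table, toy_do_syscall, and the two "syscalls" are hypothetical. The real x86_64 table is generated from arch/x86/entry/syscalls/syscall_64.tbl, and the real dispatcher also hardens the index against speculative execution.

```c
#include <errno.h>
#include <stddef.h>

/* Every entry in the toy table has the same signature. */
typedef long (*toy_syscall_fn)(long a0, long a1);

/* Two hypothetical "system calls". */
static long toy_sys_add(long a, long b) { return a + b; }
static long toy_sys_neg(long a, long b) { (void)b; return -a; }

/* Index = syscall number, value = handler. */
static const toy_syscall_fn toy_sys_call_table[] = {
    [0] = toy_sys_add,
    [1] = toy_sys_neg,
};

#define TOY_NR_SYSCALLS (sizeof toy_sys_call_table / sizeof toy_sys_call_table[0])

/* Validate the number coming from "userspace" before using it as an index. */
long toy_do_syscall(unsigned long nr, long a0, long a1)
{
    if (nr >= TOY_NR_SYSCALLS || toy_sys_call_table[nr] == NULL)
        return -ENOSYS;
    return toy_sys_call_table[nr](a0, a1);
}
```

An out-of-range number returns -ENOSYS, which is also what the real kernel returns for an unknown syscall number.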

Executing System Calls

- Userspace executes the syscall instruction

- Save context

- We need to make a context switch

- Kernel executes the system call

- Restore context

- Context switch back to user space

- Kernel returns with sysret (sysexit is the return for the older sysenter path)

But wait...

- The kernel doesn't trust anything coming from user-space

- Why?

- The parameters could be invalid

- Pointers, file descriptors, process IDs might all be invalid

- The Kernel has an API to enforce these safety measures

But wait...

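- access_ok - Check that a user pointer range lies in user space (it does not check that the memory is mapped)

- copy_to_user - Copy data from the kernel out to a user buffer

- copy_from_user - Copy data from a user buffer into the kernel

- Both return the number of bytes that could not be copied (0 on success)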

- And many others...

- Variety of helper functions to help you do safe work
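To make the access_ok/copy_from_user contract concrete, here is a userspace model. Everything here is hypothetical (toy_access_ok, toy_copy_from_user, TOY_USER_LIMIT); the limit is assumed to mirror x86_64's TASK_SIZE_MAX with 4-level paging. The details it copies from the real API: the range check is written so addr + size cannot overflow, and the copy returns the number of bytes not copied.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Assumed user/kernel boundary: (1 << 47) - 4096, like x86_64
 * TASK_SIZE_MAX with 4-level paging. */
#define TOY_USER_LIMIT 0x00007ffffffff000ULL

/* Is [addr, addr + size) entirely below the user limit?
 * Comparing against LIMIT - size means addr + size is never computed,
 * so a huge addr cannot wrap around and sneak past the check. */
bool toy_access_ok(uint64_t addr, uint64_t size)
{
    return size <= TOY_USER_LIMIT && addr <= TOY_USER_LIMIT - size;
}

/* Same contract as copy_from_user(): returns the number of bytes
 * that could NOT be copied, so 0 means success. */
size_t toy_copy_from_user(void *to, const void *from, size_t n)
{
    if (!toy_access_ok((uint64_t)(uintptr_t)from, n))
        return n;                 /* refuse the whole copy */
    memcpy(to, from, n);
    return 0;
}
```

The real copy_from_user also recovers if a user page turns out to be unmapped partway through the copy; this model only does the range check.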

Devices

What is it?

- They are just files on the filesystem...like any other file

- But have different properties

- File operations are implemented by the kernel module (driver) behind the device

What is it?

- The kernel decides what read/write/open means

- Each device has it implemented differently

- Each device is defined by a major/minor number

- ls -l /dev, cat /proc/devices

- c for character devices (the first character of ls -l output)

- b for block devices

- Inside the kernel, major/minor are stored in a dev_t, an unsigned 32-bit number

- 12 bits for major

- 20 bits for minor

- Helper macros: MAJOR(), MINOR(), and MKDEV()
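A userspace copy of the kernel's internal dev_t helpers, showing the 12/20 split. The definitions follow include/linux/kdev_t.h; the K_ prefix is added only so the names don't clash with userspace headers. Note that this is the kernel-internal layout: the dev_t that userspace sees (and glibc's major()/minor()) uses a different encoding.

```c
#include <stdint.h>

/* Low 20 bits: minor. High 12 bits: major. */
#define K_MINORBITS 20
#define K_MINORMASK ((1U << K_MINORBITS) - 1)

#define K_MAJOR(dev)     ((unsigned int)((dev) >> K_MINORBITS))
#define K_MINOR(dev)     ((unsigned int)((dev) & K_MINORMASK))
/* Cast before shifting so majors up to 4095 don't overflow a signed int. */
#define K_MKDEV(ma, mi)  ((uint32_t)(((uint32_t)(ma) << K_MINORBITS) | (mi)))
```

So major 1, minor 3 packs to 0x100003, and the largest major, 4095, fills the top 12 bits.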

We will focus primarily on character devices today.

Creating the Device

- register_chrdev_region

- Registers a range of device numbers with the kernel

- Starting at a given (major/minor) and requesting a given number of devices

- alloc_chrdev_region

- Asks the kernel for a free range: the kernel picks the major number

- You supply the first minor and the number of devices; the result comes back through a dev_t pointer

- mknod - Creates the character device file from userspace

- sudo mknod ./dev c <maj> <min>

- Also a system call

What happens when we call mknod

- We create an inode in the VFS. (What's an inode?)

- Contains a dev_t to specify the device associated

- i_rdev

- For character devices - contains a pointer to a struct cdev

- i_cdev

- Contains a pointer to the associated file_operations

- i_fop
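From userspace, the device number that mknod stores in the inode shows up as st_rdev in stat(2). A minimal sketch, assuming Linux with glibc (major() and minor() come from <sys/sysmacros.h>); device_numbers is a hypothetical helper. On Linux, /dev/null is conventionally character device 1:3, which ls -l /dev/null also shows.

```c
#include <sys/stat.h>
#include <sys/sysmacros.h>   /* major(), minor() */

/* Reads the device number recorded in the inode at `path`.
 * Returns 1 for a character device, 0 for anything else,
 * -1 if stat() fails. */
int device_numbers(const char *path, unsigned *maj, unsigned *min)
{
    struct stat st;
    if (stat(path, &st) != 0)
        return -1;
    *maj = major(st.st_rdev);
    *min = minor(st.st_rdev);
    return S_ISCHR(st.st_mode) ? 1 : 0;
}
```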

Character Devices

- A type of device accessed as a stream of bytes (character by character)

- Unlike block devices, which are accessed in fixed-size blocks

- Information about it in the kernel is contained in a struct cdev

- Has pointer to its owner (struct module)

- a dev_t field

- and a file_operations structure as a field

- Allocate it with cdev_alloc

- Remove it with cdev_del rather than kfree; a cdev from cdev_alloc is freed once its last reference is dropped

- Can be embedded within another structure, but needs to be initialized with cdev_init

- Register the device with cdev_add

File Operations

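```c
#include <linux/fs.h>
#include <linux/module.h>
/* Handlers, defined later in this module */
static ssize_t read_func(struct file *, char __user *, size_t, loff_t *);
static ssize_t write_func(struct file *, const char __user *, size_t, loff_t *);
static int open_func(struct inode *, struct file *);
static long ioctl_func(struct file *, unsigned int, unsigned long);
static int release_func(struct inode *, struct file *);
static const struct file_operations my_fops = {
    .owner          = THIS_MODULE,
    .read           = read_func,
    .write          = write_func,
    .open           = open_func,
    .unlocked_ioctl = ioctl_func,   /* .ioctl was removed in Linux 2.6.36 */
    .release        = release_func, /* runs when the last reference is closed */
};
```

File Operations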

- A structure of function pointers, which will be the operations for interacting with the device

- Implementation dependent, all based on how the developer wants the behavior to occur

- Common file operations include the ones listed above; close() is handled by .release, which runs when the last reference to the open file is dropped (try to find the file_operations struct on Elixir)
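The function-pointer idea can be sketched in plain userspace C. This is a model, not kernel code: toy_fops, toy_file, toy_vfs_read, and the two "drivers" are hypothetical. The generic read path only knows the table of operations; each driver decides what read means, and each call receives the open-file object so the driver can keep per-open state such as the current offset.

```c
#include <errno.h>
#include <string.h>
#include <sys/types.h>   /* ssize_t */

struct toy_file;

/* Hypothetical, cut-down stand-in for struct file_operations. */
struct toy_fops {
    ssize_t (*read)(struct toy_file *f, char *buf, size_t len);
};

/* Hypothetical stand-in for struct file: one per open(). */
struct toy_file {
    const struct toy_fops *f_op;  /* which driver handles this file */
    size_t f_pos;                 /* this open file's current offset */
};

/* "zero" driver: every read fills the buffer with zero bytes. */
static ssize_t zero_read(struct toy_file *f, char *buf, size_t len)
{
    memset(buf, 0, len);
    f->f_pos += len;
    return (ssize_t)len;
}

/* "hello" driver: returns a fixed message, then 0 (end of file). */
static ssize_t hello_read(struct toy_file *f, char *buf, size_t len)
{
    static const char msg[] = "hello\n";
    size_t size = sizeof msg - 1;            /* exclude the NUL */
    if (f->f_pos >= size)
        return 0;
    size_t n = size - f->f_pos;
    if (n > len)
        n = len;
    memcpy(buf, msg + f->f_pos, n);
    f->f_pos += n;                           /* per-open-file offset */
    return (ssize_t)n;
}

static const struct toy_fops zero_fops  = { .read = zero_read };
static const struct toy_fops hello_fops = { .read = hello_read };

/* Generic layer: knows nothing about either driver, only the table. */
ssize_t toy_vfs_read(struct toy_file *f, char *buf, size_t len)
{
    if (f->f_op == NULL || f->f_op->read == NULL)
        return -EINVAL;
    return f->f_op->read(f, buf, len);
}
```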

File Structure

- struct file is a kernel structure associated with an open file

- Goes away when all references are closed

- Can be found in the process's struct files_struct

- Found in current->files->fd_array[]

- Contains references to its inode, file operations, mode, and more.

- Why is this important?

- All file operations take this structure as a parameter. Why?

- So they know what file they are operating on.

Classwork/Homework

You are going to create a character device and interact with it.

On pwn.college, I added a character device challenge in Kernel Internals. Follow the directions in the README and template. Get the flag and submit your code!

Virtual Memory in the Kernel

Review of Physical Addresses

- Just like it sounds - address of physical memory

- Restricted to 52 bits on x86_64 machines

- Might be RAM, ROM, Devices on the bus, etc.

Review of Virtual Memory

- The way the operating system organizes physical memory so we can easily access it.

- Also allows for secondary memory to be utilized as if it was a part of the main memory

- Compensates if there are physical memory shortages

- Temporarily moving data in RAM to disk

- Divided between user space and kernel space

- Userspace is bottom half, Kernel space is top half

Why do we care?

- Remember: we don't use physical addresses in modern systems

- Potentially in embedded, will get to it later

- When we refer to an address, it's always a virtual address

- This makes our lives a lot easier

- Don't have to worry about managing where data goes in physical memory

- Gives us additional features

- Permissions (RWX)

- Containment of process memory

- Shared memory

- etc.

Restrictions of User and Kernel Memory Interaction

- Userspace programs cannot directly access kernel space memory

- access_ok, user_addr_max - kernel API to check if an address is in userspace

- copy_from_user, copy_in_user, copy_to_user, copy_from_iter, copy_to_iter - handle transferring data between userspace and kernelspace

- This cannot be achieved without the operating system

Live Example

Virtual Memory Translation

Process 1, Process 2, and Process 3 each read virtual address 0x10000, but each read lands at a different physical address:

- Proc 1: 0xcdf1200

- Proc 2: 0x7f86c00

- Proc 3: 0xab89200

What does the operating system do?

- Translate virtual addresses to physical addresses

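- RAM and the memory bus only understand physical addresses; the OS sets up the page tables, and the CPU's MMU uses them to translate every access

- Ensuring that if data needs to be put in physical memory, it goes in the right location

- The physical layout might not be as neat or contiguous as the virtual layout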

- Multiple different ways of doing this.

Virtual Memory to Physical Memory translation is different for each kind of CPU (arm, x86, mips, etc.)

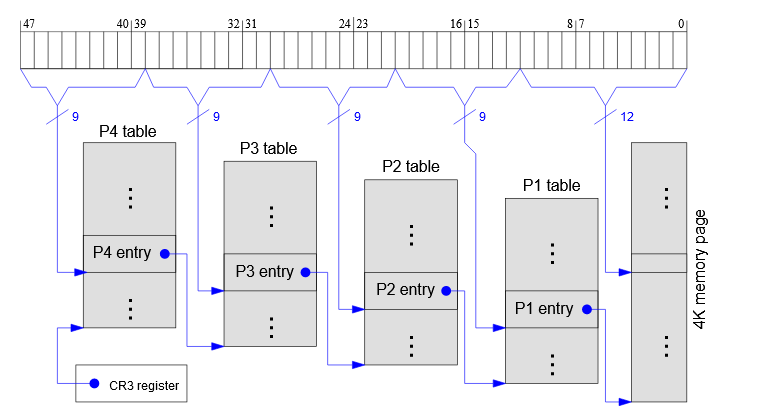

Page Tables

- Data structures in memory whose entries hold the metadata the CPU needs to understand virtual memory

- Translations from virtual addresses to physical

- Permissions

- Indicating pages are modified (dirty)

- Specific to the hardware/CPU

- x86_64 supports 4-level and 5-level page tables

- Tree-like structure

Page Table Example

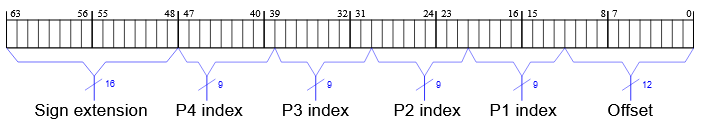

- For a four tier x86 page-table system, it uses 48 bits (this is what most systems use)

- Bits 39-47 are the fourth level index

- Bits 30-38 are the third level index

- Bits 21-29 are the second level index

- Bits 12-20 are the first level index

- Bits 0-11 are the offset

- What happens to bits 48-63?

- Sign extension! They are not used for translation; they must all be copies of bit 47
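The bit layout above can be checked with a few shifts and masks. A sketch assuming 4-level paging with 4 KiB pages; split_va and is_canonical48 are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bits 48-63 must all be copies of bit 47 (sign extension). */
bool is_canonical48(uint64_t va)
{
    uint64_t top = va >> 47;              /* bits 47..63: 17 bits */
    return top == 0 || top == 0x1ffff;
}

/* The five fields of a 4-level x86_64 virtual address. */
struct va_parts {
    unsigned l4, l3, l2, l1;  /* 9-bit table indices, 0..511 */
    unsigned offset;          /* 12-bit offset into a 4 KiB page */
};

static unsigned index9(uint64_t va, unsigned shift)
{
    return (unsigned)((va >> shift) & 0x1ff);  /* 9 bits per level */
}

struct va_parts split_va(uint64_t va)
{
    assert(is_canonical48(va));  /* non-canonical addresses fault */
    struct va_parts p = {
        .l4 = index9(va, 39),    /* bits 39-47 */
        .l3 = index9(va, 30),    /* bits 30-38 */
        .l2 = index9(va, 21),    /* bits 21-29 */
        .l1 = index9(va, 12),    /* bits 12-20 */
        .offset = (unsigned)(va & 0xfff),  /* bits 0-11 */
    };
    return p;
}
```

Every canonical user address has a top-level index of 0-255, and the kernel half starts at index 256, which is the bottom-half/top-half split from earlier.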

Page Table Example

Images from: https://os.phil-opp.com/page-tables/

Page Tables

- x86_64 page table entries also contain information about the page they reference

- This is set in bits (permission bits, present bit, dirty bit, etc.)

- See arch/x86/include/asm/pgtable_types.h for more info

- Multiple page tables per system

- Could be per-process

- CPU knows where to look (the CR3 register holds the physical address of the top-level table)

- In the kernel, the task keeps track of the associated page table

- current->mm->pgd - top level of the associated page table

Page Tables Macros

- Macros are based on the page level names

- Example: PGD = Page Global Directory

- P4D = 4th Level Directory (only used with 5-level page tables)

- PUD - Page Upper Directory

- Etc.

- Three different types of macros - SHIFT, SIZE, and MASK

- Combine level+type of macro to get your macro

- PAGE_SHIFT, PUD_SIZE, PGDIR_MASK

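A sketch of how SHIFT, SIZE, and MASK relate, assuming x86_64 with 4-level paging and 4 KiB pages. The values match the kernel's for that configuration, and pgd_index follows the kernel's definition, but these are userspace re-definitions for illustration, not the kernel headers.

```c
#include <stdint.h>

/* SHIFT: number of address bits below that level's index. */
#define PAGE_SHIFT   12
#define PMD_SHIFT    21
#define PUD_SHIFT    30
#define PGDIR_SHIFT  39

/* SIZE: bytes covered by one entry at that level. */
#define PAGE_SIZE    (1ULL << PAGE_SHIFT)   /* 4 KiB   */
#define PMD_SIZE     (1ULL << PMD_SHIFT)    /* 2 MiB   */
#define PUD_SIZE     (1ULL << PUD_SHIFT)    /* 1 GiB   */
#define PGDIR_SIZE   (1ULL << PGDIR_SHIFT)  /* 512 GiB */

/* MASK: clears the bits below that level (rounds down). */
#define PAGE_MASK    (~(PAGE_SIZE - 1))
#define PMD_MASK     (~(PMD_SIZE - 1))
#define PUD_MASK     (~(PUD_SIZE - 1))
#define PGDIR_MASK   (~(PGDIR_SIZE - 1))

/* 512 entries per table with 4-level paging. */
#define PTRS_PER_PGD 512
#define pgd_index(a) (((a) >> PGDIR_SHIFT) & (PTRS_PER_PGD - 1))
```

ANDing with a MASK rounds an address down to the start of the region one entry at that level covers; for example, addr & PMD_MASK is the start of its 2 MiB region.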

Page Tables Keep Going...

- Your own exercise - Look at the functions that read page table entries, and at example code that walks the page tables

- /mm/pagewalk.c - walk_page_range

- /arch/x86/include/asm/pgtable.h (or pgtable_64.h)

Making Page Tables More Efficient

Translation Lookaside Buffer (TLB)

- Caches page table entries. Why do we do this?

- Page table lookups are expensive

- A 4-level walk needs one memory read per level (four reads) before the actual data access, on every translation

- The TLB must be kept consistent with the current page tables: when mappings change, stale entries are flushed

- When do you think these might be?

- Context switches, unmapping memory, allocating memory, etc.

- Functions: flush_tlb_all, flush_tlb_page, flush_tlb_range

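A toy model of why the TLB helps and why flushing matters. Everything is hypothetical: a 16-entry direct-mapped TLB, a made-up vpn-to-pfn mapping standing in for the page-table walk, and toy_flush_page/toy_flush_all as stand-ins for flush_tlb_page and flush_tlb_all. Real TLBs are set-associative hardware caches, so this only illustrates hits, misses, and invalidation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_PAGE_SHIFT  12
#define TOY_TLB_ENTRIES 16            /* hypothetical, direct-mapped */

struct toy_tlb_entry { bool valid; uint64_t vpn, pfn; };
static struct toy_tlb_entry tlb[TOY_TLB_ENTRIES];
static unsigned walks;                /* how many slow walks happened */

/* Stand-in for a full page-table walk; the mapping is made up. */
static uint64_t slow_walk(uint64_t vpn)
{
    assert(vpn < (1ULL << 36));       /* 48-bit VA minus 12 offset bits */
    walks++;
    return vpn + 0x1000;
}

uint64_t toy_translate(uint64_t va)
{
    uint64_t vpn = va >> TOY_PAGE_SHIFT;
    struct toy_tlb_entry *e = &tlb[vpn % TOY_TLB_ENTRIES];
    if (!e->valid || e->vpn != vpn) { /* miss: walk, then refill */
        e->vpn = vpn;
        e->pfn = slow_walk(vpn);
        e->valid = true;
    }
    /* hit (or freshly filled): frame number + page offset */
    return (e->pfn << TOY_PAGE_SHIFT) | (va & ((1ULL << TOY_PAGE_SHIFT) - 1));
}

/* Invalidate the entry for one page, if it is cached. */
void toy_flush_page(uint64_t va)
{
    uint64_t vpn = va >> TOY_PAGE_SHIFT;
    struct toy_tlb_entry *e = &tlb[vpn % TOY_TLB_ENTRIES];
    if (e->valid && e->vpn == vpn)
        e->valid = false;
}

/* Invalidate everything. */
void toy_flush_all(void)
{
    for (unsigned i = 0; i < TOY_TLB_ENTRIES; i++)
        tlb[i].valid = false;
}

unsigned toy_walk_count(void) { return walks; }
```

Two accesses to the same page cost one walk; after a flush, the next access has to walk again.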

Paging

- When the kernel moves currently unused pages to disk.

- Frees up physical memory

- The pages are written to swap space (a swap partition or a swap file in Linux)

- Managed by kswapd kernel thread

- Can't be accessed by usermode programs

- What happens when the kernel accesses paged out memory

- PAGE FAULT!

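- This can lead to crashes if done at the wrong time/place (e.g., in interrupt context or while holding a spinlock, where the kernel cannot sleep while the page is read back in)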

Accessing Task Memory

- A task's memory can be accessed through the mm field in the task_struct

- current->mm is of type struct mm_struct

- For a kernel thread (an "anonymous process" with no user address space), this is NULL

- current->active_mm contains the address space the kernel thread is currently attached to

- current->mm contains useful fields

- pgd - pointer to the page global directory

- mmap - list of virtual memory regions (VMAs), also indexed in an rb_tree (replaced by a maple tree in Linux 6.1)

- get_unmapped_area - function to find an unused virtual address range

- Each region (struct vm_area_struct) has vm_ops - pointer to the operations for that region
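The regions tracked by current->mm are visible from userspace in /proc/self/maps, one line per region with its start, end, and permissions. A minimal sketch, assuming Linux with procfs mounted; find_mapping is a hypothetical helper.

```c
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Finds the region containing addr in /proc/self/maps and copies its
 * 4-character permission string ("rw-p", "r-xp", ...) into perms.
 * Returns 0 if found, -1 otherwise. */
int find_mapping(uintptr_t addr, char perms[5])
{
    FILE *fp = fopen("/proc/self/maps", "r");
    if (!fp)
        return -1;

    char line[8192];
    int found = -1;
    while (fgets(line, sizeof line, fp)) {
        unsigned long start, end;
        char p[5];
        /* each line starts "start-end perms ..." with hex addresses */
        if (sscanf(line, "%lx-%lx %4s", &start, &end, p) != 3)
            continue;
        if (addr >= start && addr < end) {
            memcpy(perms, p, 5);
            found = 0;
            break;
        }
    }
    fclose(fp);
    return found;
}
```

A local variable lands in the [stack] region (rw-p), and address 0 is in no region at all.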

Kernel Memory Management

Goals of Kernel Memory

- Needs to run very quickly

- Be able to allocate memory without continually searching through one massive region of memory

- Handle special allocators (meta-level allocators) that dedicate memory regions for special purposes

SLOB, SLAB, SLUB, ...

We have various allocators to retrieve free memory

SLOB

- Simple List Of Blocks: the simplest allocator, aimed at small embedded systems

- Compact memory usage

- Fragments quickly

- Requires traversing a list to find the correct size

- Slow!

SLAB

- Solaris Type Allocator

- Cache Friendly

- Complex structures

- Utilizes lots of queues (per CPU and per node)

- Exponential growth of caches...

SLUB

- The unqueued allocator

- Newest allocator

- Default since Linux 2.6.23

- Works with the SLAB API, but without all the complex queues

- Designed for fast execution

- Enables runtime debugging and inspection

- Allocation/freeing fast path doesn't disable interrupts

- See /include/linux/slab.h

- Operates on caches (of type struct kmem_cache)

kmem_cache

- Allows the allocation of only one size/type of object

- Might even have custom constructors/destructors

- If you don't know what this is, Google it!


- Each cache has its own identifying properties

- Its own name, object alignment, and object size

- Under the hood, kmem_cache uses slabs (NOT the SLAB allocator)

- Containers of one or more physically contiguous pages, divided into objects of the cache's size

- Contains a pointer to the first free object, metadata for bookkeeping, and a pointer to partially full slabs

- Slabs and their pages are described by struct page (struct slab in newer kernels)

Slab Usage

- When new objects need to be allocated from the kmem_cache...

- Scan the list of partial slabs to find a location for the object

- If no partial slabs exist, create a new empty slab

- Create the new object inside of it

- Mark the slab as partial

- Look at the alloc_pages function

- Slabs that change from full to partial are moved back to the partial list

- Remember, there are multiple slabs per kmem_cache
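A minimal sketch of one slab's bookkeeping. Like SLUB, it stores each free object's "next free" pointer inside the free object itself, so allocation and freeing are a pop and a push on a singly linked list. The names (toy_cache, toy_cache_alloc, ...) are hypothetical, and a real kmem_cache also manages many slabs, per-CPU freelists, and optionally hardened freelist pointers (CONFIG_SLAB_FREELIST_HARDENED).

```c
#include <stddef.h>
#include <string.h>

#define TOY_SLAB_BYTES 4096   /* assumed: one 4 KiB page per slab */

struct toy_cache {
    size_t obj_size;
    void *freelist;                           /* first free object, or NULL */
    _Alignas(max_align_t) unsigned char slab[TOY_SLAB_BYTES];
};

/* The "next free" pointer lives inside the free object itself. */
static void set_next(void *obj, void *next) { memcpy(obj, &next, sizeof next); }
static void *get_next(void *obj) { void *next; memcpy(&next, obj, sizeof next); return next; }

void toy_cache_init(struct toy_cache *c, size_t obj_size)
{
    /* each object must be big enough to hold a pointer when free */
    if (obj_size < sizeof(void *))
        obj_size = sizeof(void *);
    obj_size = (obj_size + sizeof(void *) - 1) & ~(sizeof(void *) - 1);
    c->obj_size = obj_size;
    c->freelist = NULL;

    /* Push objects last-to-first so the freelist runs in address order. */
    for (size_t i = TOY_SLAB_BYTES / obj_size; i-- > 0; ) {
        void *obj = c->slab + i * obj_size;
        set_next(obj, c->freelist);
        c->freelist = obj;
    }
}

void *toy_cache_alloc(struct toy_cache *c)
{
    void *obj = c->freelist;
    if (obj)
        c->freelist = get_next(obj);   /* pop the head */
    return obj;                        /* NULL: the slab is full */
}

void toy_cache_free(struct toy_cache *c, void *obj)
{
    set_next(obj, c->freelist);        /* push back onto the freelist */
    c->freelist = obj;
}
```

Freeing an object and allocating again hands back the same object (LIFO order), which tends to reuse memory that is still cache-hot.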

How does SLUB play into the picture

- SLUB creates a kmem_cache_cpu per CPU

- When allocating memory

- Attempts from the local free list first

- Then attempts from the CPU's own partial slabs

- Then attempts from the partial slabs of the cache - SLOW

- Then finds any free object. If necessary, SLUB will allocate another slab

- If a new slab can't be allocated, the allocation fails instead of growing

- SLUB tries to make efficient usage of memory/CPU cycles

- Merges similar caches into the same cache (saving time and space)

Kernel Versions of Malloc

- Two functions for allocating memory in kernel space - kmalloc and vmalloc

- kmalloc is the more efficient version of the two

- Utilizes caches for allocating memory (and an allocator)

- Keeps track of them via arrays (based on types and sizes)

- Very large allocations are handled by kmalloc_large (just alloc_pages behind the scenes)

- vmalloc

- Doesn't utilize slabs

- Memory is virtually contiguous but not necessarily physically contiguous

- Used to allocate buffers larger than what kmalloc can provide

Kernel Versions of Malloc

- Important features to note about both:

- You can get the physical address of the allocated memory: virt_to_phys() for kmalloc memory, and page by page (vmalloc_to_page()) for vmalloc memory

- Important features about kmalloc:

- Has flags and types to help handle allocation

- flags: __GFP_NOWARN, GFP_ATOMIC, __GFP_ZERO

- Types: KMALLOC_NORMAL, KMALLOC_RECLAIM, KMALLOC_DMA

- Array of caches:

kmalloc_caches[kmalloc_type(flags)][kmalloc_index(size)]
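To see how the size index picks a cache, here is a sketch of the size-class rounding, assuming SLUB on x86_64: the smallest kmalloc cache is 8 bytes, there are extra 96- and 192-byte caches between the powers of two, and requests above 8 KiB go to kmalloc_large. toy_kmalloc_class is a hypothetical name, and the limits are assumptions that vary by configuration.

```c
#include <stddef.h>

/* Assumed SLUB-on-x86_64 limits: smallest cache 8 bytes, largest 8 KiB. */
#define TOY_KMALLOC_MIN   8
#define TOY_KMALLOC_MAX   8192

/* Returns the object size of the kmalloc-<N> cache a request of `size`
 * bytes would come from, or 0 if it bypasses the caches (size 0, or
 * too large and handed to the page allocator instead). */
size_t toy_kmalloc_class(size_t size)
{
    if (size == 0 || size > TOY_KMALLOC_MAX)
        return 0;
    if (size > 64 && size <= 96)      /* extra non-power-of-two caches */
        return 96;
    if (size > 128 && size <= 192)
        return 192;
    size_t c = TOY_KMALLOC_MIN;
    while (c < size)
        c <<= 1;                      /* round up to a power of two */
    return c;
}
```

These classes correspond to the kmalloc-8, kmalloc-96, kmalloc-192, ... caches listed in /proc/slabinfo. A 100-byte request uses a 128-byte object, so some memory is wasted inside each allocation.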

What if we want to use neither

- We can still work with pages directly!

- alloc_pages - allocate the number of pages

- get_user_pages - pins usermode pages (locks them)

- remap_pfn_range - remap kernel pages to usermode

- ioremap - Make bus memory CPU accessible

- kmap - map a (possibly highmem) page into the kernel address space

And finally... Kernel Address Sanitizer

- An error detector that catches dynamic memory behavior bugs

- Examples: use-after-free, double free, out of bound access, etc.

- At compile time, instruments the code so that at run time it checks every memory access

- Can output a stack trace when it detects a problem

- Related tools:

- kmemleak - Finds memory leaks and reports it to /sys/kernel/debug/kmemleak (CONFIG_DEBUG_KMEMLEAK must be enabled at build time)

- UBSAN

- Undefined Behavior Sanitizer

- Does what the name implies, watches for undefined behavior.

Kernel Virtual Filesystem

What is it?

- The Virtual File System creates a single interface for file I/O operations

- Located in the Linux Kernel, accessed by user-applications via system calls (write, read, open, etc.)

- Allows for multiple implementations of file systems without needing knowledge of them to interact with them

- Also allows one system to handle multiple implementations

- External drives, network drives, etc.

VFS Under the Hood

- A standard API (e.g., libc's read()) that user applications call, which in turn makes the syscall

- The kernel can then choose which function to call based on what the user wants to do (and which file system they are using)

read() → sys_read → EXT4 → Hard Drive

VFS Under the Hood

- The user calls the action they want to do

- The application performs a context switch by calling the associated syscall

- In the kernel, it looks up the filesystem API it needs to use based on the device it is writing to (and filesystem implementation)

- EXT4, CIFS, XFS, FUSE, NFS, NTFS, etc.

- Write to the physical device (hard drive, USB, network drive, etc)


VFS Under the Hood

- To add new filesystem types, install a new kernel module

- The Kernel has an API for registering a new file system

- register_filesystem - register the filesystem

- Takes a pointer to a struct file_system_type

- Adds the FS to the file_systems linked list

- When the filesystem is mounted, the kernel eventually calls sget_userns, which creates a super block structure for the FS
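The registration step can be modeled as appending to a linked list after a duplicate-name check, using the same pointer-to-pointer lookup style as the kernel's find_filesystem(). This is a userspace sketch with hypothetical names (toy_fs_type, toy_register_filesystem, toy_get_fs_type); the kernel's version also takes file_systems_lock, and the registered types are listed in /proc/filesystems.

```c
#include <errno.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical cut-down struct file_system_type. */
struct toy_fs_type {
    const char *name;
    struct toy_fs_type *next;   /* link in the global list */
};

/* Head of the registered-filesystem list (like file_systems). */
static struct toy_fs_type *toy_file_systems;

/* Returns the link pointing at `name`, or the NULL link at the end. */
static struct toy_fs_type **toy_find(const char *name)
{
    struct toy_fs_type **p = &toy_file_systems;
    while (*p && strcmp((*p)->name, name) != 0)
        p = &(*p)->next;
    return p;
}

/* Append fs to the list; a duplicate name gets -EBUSY, as in the kernel. */
int toy_register_filesystem(struct toy_fs_type *fs)
{
    struct toy_fs_type **p = toy_find(fs->name);
    if (*p)
        return -EBUSY;
    *p = fs;
    return 0;
}

struct toy_fs_type *toy_get_fs_type(const char *name)
{
    return *toy_find(name);
}
```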

- Wait, what's a super block?

VFS Structs/Objects

- Yes - objects as in Object Oriented Programming, we will get to that in a second.

- The primary structures

- super_block - file system metadata

- inode - The Index Node

- dentry - An entry in the directory entry cache (dcache)

- file

Superblocks

- Contains metadata about the filesystem

- The super_block structure contains

- The file system's block size

- Operations (s_ops)

- Pointer to the dentry at the filesystems root (s_root)

- UUID

- Max File Size

- Lots of other stuff
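Some superblock metadata is visible from userspace: statvfs(3) reports the filesystem's block size and maximum filename length, among other fields, which the filesystem fills in through its superblock operations (s_op->statfs). A minimal sketch assuming a POSIX system; fs_limits is a hypothetical helper.

```c
#include <sys/statvfs.h>

/* Reports the block size and maximum filename length of the
 * filesystem that holds `path`. Returns 0 on success, -1 on error. */
int fs_limits(const char *path, unsigned long *block_size,
              unsigned long *name_max)
{
    struct statvfs sv;
    if (statvfs(path, &sv) != 0)
        return -1;
    *block_size = sv.f_bsize;
    *name_max = sv.f_namemax;
    return 0;
}
```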

Inodes

- Contains metadata as well, but of the directories and files within the file system

- Has operations to interact with the directories/files

- Native file systems have inodes on disk while other file systems have to emulate it

- ALL FILES HAVE AN INODE (including special files like devices, /proc and pseudo filesystems)

- struct inode can be found in /include/linux/fs.h
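Because the inode, not the name, holds a file's metadata, two names for the same file share one inode. A userspace sketch, assuming a POSIX system with a writable /tmp; hardlink_demo and the /tmp/inode-demo-<pid> file name are hypothetical.

```c
#include <stdio.h>
#include <sys/stat.h>
#include <unistd.h>

/* Creates /tmp/inode-demo-<pid>, gives it a second name with link(2),
 * and reports whether both names resolve to the same inode, plus the
 * inode's link count. Removes both names. Returns 0 on success. */
int hardlink_demo(int *same_inode, unsigned long *links)
{
    char path[64], alias[80];
    snprintf(path, sizeof path, "/tmp/inode-demo-%ld", (long)getpid());
    snprintf(alias, sizeof alias, "%s.link", path);

    FILE *f = fopen(path, "w");
    if (!f)
        return -1;
    fclose(f);
    unlink(alias);                     /* in case a stale alias exists */

    struct stat a, b;
    int ok = link(path, alias) == 0 &&
             stat(path, &a) == 0 && stat(alias, &b) == 0;
    if (ok) {
        /* An inode is identified by (device, inode number). */
        *same_inode = a.st_dev == b.st_dev && a.st_ino == b.st_ino;
        *links = (unsigned long)b.st_nlink;   /* both names counted */
    }
    unlink(alias);
    unlink(path);
    return ok ? 0 : -1;
}
```

After link(2), both names report the same inode number and st_nlink is 2.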

dentries

- Cached information about the directory structure (created and used by the VFS)

- Each directory/file layer is its own dentry

- The cache itself is called a dcache

- Makes it easier to lookup information regarding paths without doing string manipulation

- See struct dentry in /include/linux/dcache.h

- /home/user/example.txt contains four dentries

- What are they?

- /

- home

- user

- example.txt
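The four dentries above can be reproduced by splitting the path the way the lookup walks it: the root, then one component per name. A simplified sketch with hypothetical names (dentry_components, TOY_NAME_MAX); the real path walk also handles ".", "..", symlinks, and mount points, and looks each component up in the dcache instead of just copying strings.

```c
#include <stddef.h>
#include <string.h>

#define TOY_NAME_MAX 256

/* Splits a path into the components the dcache would hold a dentry
 * for: "/" for the root of an absolute path, then each name.
 * Repeated slashes are skipped. Returns the number of components
 * stored (at most max; over-long names are skipped). */
size_t dentry_components(const char *path, char out[][TOY_NAME_MAX], size_t max)
{
    size_t n = 0;
    if (*path == '/' && n < max)
        strcpy(out[n++], "/");
    while (*path) {
        while (*path == '/')
            path++;                         /* skip separators */
        size_t len = strcspn(path, "/");    /* length of this name */
        if (len == 0)
            break;                          /* only trailing slashes left */
        if (n < max && len < TOY_NAME_MAX) {
            memcpy(out[n], path, len);
            out[n][len] = '\0';
            n++;
        }
        path += len;
    }
    return n;
}
```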

Files

- Represent a file - contains information about that particular open file

- Also contains function pointers to file operations (remember how we had to implement it for character devices)

- See struct file in /include/linux/fs.h

Linux VFS is Object Oriented?

- Not quite - but it has lots of Object Oriented Concepts

- The VFS defines various structs that contain other structures, including tables of function pointers

- Think of them as classes with class variables and methods

- For every file system, they create their own instance of these structures

- Define their own values, members, and methods

- This also creates a form of polymorphism

- So think of it like a C based implementation of Object Oriented Programming

- Other parts of the Linux Kernel have this idea too.
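Inheritance in the VFS is usually done by embedding: a filesystem-specific inode contains a struct inode, and container_of recovers the outer structure. ext4, for example, embeds struct inode in struct ext4_inode_info and uses EXT4_I() to get back to it. A userspace sketch of that pattern; toy_inode, myfs_inode_info, MYFS_I, and the other names are hypothetical.

```c
#include <stddef.h>

/* Userspace version of the kernel's container_of(): from a pointer to
 * a member, recover a pointer to the structure that contains it. */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct toy_inode;

/* "Virtual methods" every filesystem provides (hypothetical). */
struct toy_inode_ops {
    unsigned long (*nr_blocks)(struct toy_inode *inode);
};

/* "Base class": the generic part every filesystem's inode shares. */
struct toy_inode {
    unsigned long i_ino;
    const struct toy_inode_ops *i_op;
};

/* "Derived class" for a hypothetical filesystem: embeds the base. */
struct myfs_inode_info {
    unsigned long blocks;          /* private to myfs */
    struct toy_inode vfs_inode;    /* the embedded generic inode */
};

static struct myfs_inode_info *MYFS_I(struct toy_inode *inode)
{
    return container_of(inode, struct myfs_inode_info, vfs_inode);
}

/* myfs's implementation: downcast, then use the private data. */
static unsigned long myfs_nr_blocks(struct toy_inode *inode)
{
    return MYFS_I(inode)->blocks;
}

static const struct toy_inode_ops myfs_iops = { .nr_blocks = myfs_nr_blocks };

void myfs_inode_init(struct myfs_inode_info *mi, unsigned long ino,
                     unsigned long blocks)
{
    mi->blocks = blocks;
    mi->vfs_inode.i_ino = ino;
    mi->vfs_inode.i_op = &myfs_iops;
}

/* Generic code sees only struct toy_inode and calls through i_op. */
unsigned long generic_nr_blocks(struct toy_inode *inode)
{
    return inode->i_op->nr_blocks(inode);
}
```

generic_nr_blocks never mentions myfs, yet the call lands in myfs_nr_blocks: that is the polymorphism described above.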