Learning Model-based Control for (Aerial) Manipulation

Roberto Calandra

Facebook AI Research

RSS 2019 Workshop on Aerial Interaction and Manipulation - 23 June 2019

Learning to Interact with the World

- Interactions with the world are caused by forces (Newton's laws of motion)

- Current robots are almost "blind" w.r.t. forces

- Why not use tactile sensors to measure contact forces (as humans do)?

- As humans, we create complex models of the world

- These models are useful to predict the effects of our actions

- Can robots learn similar models from complex multi-modal inputs?

- Can these learned models be used for control?

The Importance of Touch (in Robots)

The Importance of Touch (in Humans)

From the lab of Dr. Roland Johansson, Dept. of Physiology, Umeå University, Sweden


Challenges and Goal

- Opportunity: Tactile sensors make it possible to measure contact forces accurately, and enable real feedback control during manipulation.

- Goal: Improve current robot manipulation capabilities by integrating raw tactile sensors (through machine learning).

- Problem: Integrating tactile sensors in control schemes is challenging, and requires a lot of engineering and expertise.

Previous Literature

[Allen et al. 1999]

[Chebotar et al. 2016]

[Bekiroglu et al. 2011]

[Sommer and Billard 2016]

[Schill et al. 2012]

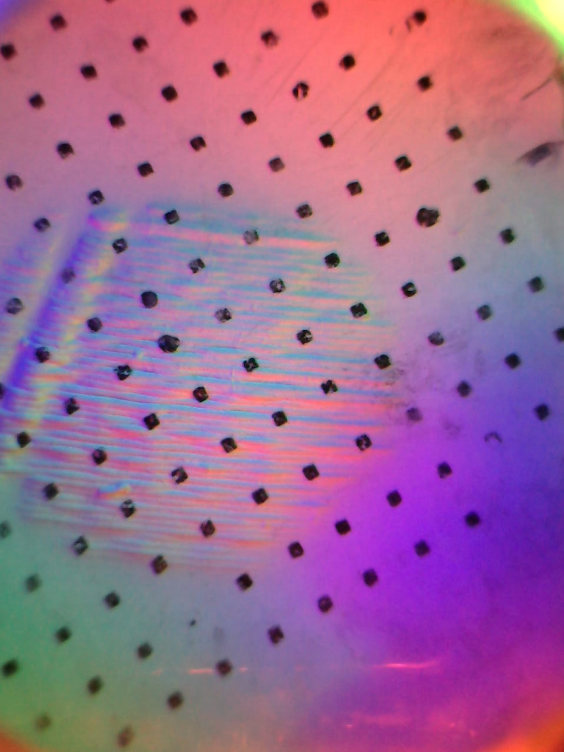

GelSight Sensor

[Yuan, W.; Dong, S. & Adelson, E. H. GelSight: High-Resolution Robot Tactile Sensors for Estimating Geometry and Force. Sensors, 2017]

- Optical tactile sensor

- Highly sensitive

- High resolution (1280x960 @ 30 Hz)

- Grid structure

Examples of GelSight Measurements

Self-supervised Data Collection

- Setting:

- 7-DOF Sawyer arm

- Weiss WSG-50 Parallel gripper

- one GelSight on each finger

- Two RGB-D cameras in front and on top

- (Almost) fully autonomous data collection:

- The robot estimates the object position using depth, and performs a random grasp of the object.

- Labels are generated automatically by checking for the presence of contacts after each attempted lift
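The automatic labeling step can be illustrated with a short sketch. This is not the pipeline used in the work; it assumes a hypothetical contact score (mean absolute difference between a tactile image and a no-contact reference image), a hypothetical threshold, and the assumption that a lift counts as successful only if both fingers still report contact.

```python
import numpy as np

def contact_score(tactile_image, reference_image):
    """Mean absolute pixel difference from a no-contact reference (hypothetical metric)."""
    diff = tactile_image.astype(np.float64) - reference_image.astype(np.float64)
    return float(np.mean(np.abs(diff)))

def label_grasp(images_after_lift, reference_images, threshold=5.0):
    """Return 1 (success) if every finger still reports contact after the lift, else 0.

    The threshold and the "all fingers" rule are assumptions made for illustration.
    """
    scores = [
        contact_score(img, ref)
        for img, ref in zip(images_after_lift, reference_images)
    ]
    return int(all(score > threshold for score in scores))
```

Because the label comes from the sensors themselves, no human annotation is needed for each grasp attempt.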

Examples of Training Objects

Collected 6450 grasps from over 60 training objects over ~2 weeks.

Visuo-tactile Learned Model

Grasp Success on Unseen Objects

83.8% grasp success on 22 unseen objects

(using only vision yields 56.6% success rate)

Gentle Grasping

- Since our model considers forces, we can select grasps that are effective, but gentle

- Reduces the amount of force used by 50%, with no significant loss in grasp success
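One way to picture "effective, but gentle" grasp selection is as a constrained choice over candidate grasps. The sketch below is an assumption-laden illustration, not the method from the paper: `success_prob` stands for the output of some learned success predictor, `grasp_force` for the force each candidate would apply, and `min_success` is a hypothetical acceptance threshold.

```python
import numpy as np

def select_gentle_grasp(success_prob, grasp_force, min_success=0.9):
    """Pick the lowest-force candidate whose predicted success is high enough.

    success_prob: predicted success probability per candidate grasp.
    grasp_force:  force applied by each candidate, in arbitrary units.
    min_success:  hypothetical acceptance threshold.
    Falls back to the most promising candidate if none passes the threshold.
    """
    success_prob = np.asarray(success_prob, dtype=float)
    grasp_force = np.asarray(grasp_force, dtype=float)
    acceptable = success_prob >= min_success
    if not acceptable.any():
        return int(np.argmax(success_prob))
    # Exclude unacceptable candidates by giving them infinite force.
    masked_force = np.where(acceptable, grasp_force, np.inf)
    return int(np.argmin(masked_force))
```

With predicted probabilities `[0.95, 0.92, 0.5]` and forces `[10, 4, 1]`, the sketch selects the second candidate: it is nearly as likely to succeed as the first but uses less than half the force.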

Human Collaborators

Calandra, R.; Owens, A.; Jayaraman, D.; Yuan, W.; Lin, J.; Malik, J.; Adelson, E. H. & Levine, S.

More Than a Feeling: Learning to Grasp and Regrasp using Vision and Touch

IEEE Robotics and Automation Letters (RA-L), 2018, 3, 3300-3307

Andrew Owens, Dinesh Jayaraman, Wenzhen Yuan, Justin Lin, Jitendra Malik, Sergey Levine, Edward H. Adelson

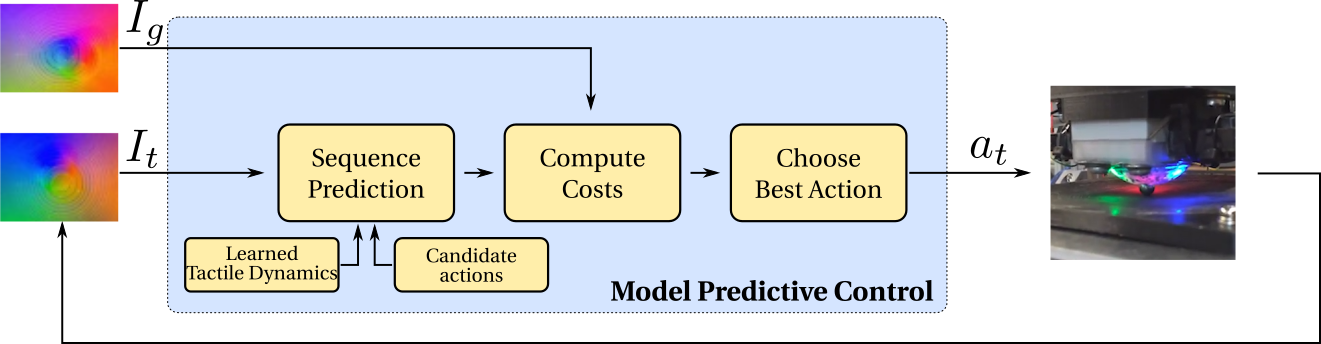

Can we learn dynamics models directly in raw tactile space?

(Yes, we can)

Learning Dynamics Models in Tactile Space

Trajectory Prediction

Model Predictive Control in Tactile Space
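As a rough illustration of model predictive control with a learned model, the sketch below uses random shooting: sample action sequences, roll them out through the model, and execute the first action of the cheapest sequence. Everything here is an assumption for illustration. The planner, the squared-distance cost, and `toy_dynamics` (a stand-in for a learned predictive model operating on a low-dimensional state rather than raw tactile images) are not taken from the presented work.

```python
import numpy as np

def random_shooting_mpc(state, goal, dynamics, horizon=5, n_samples=256,
                        action_dim=2, rng=None):
    """Return the first action of the lowest-cost sampled action sequence."""
    rng = np.random.default_rng() if rng is None else rng
    # Sample candidate action sequences uniformly in [-1, 1].
    actions = rng.uniform(-1.0, 1.0, size=(n_samples, horizon, action_dim))
    states = np.repeat(state[None, :], n_samples, axis=0)
    cost = np.zeros(n_samples)
    for t in range(horizon):
        # Roll every candidate forward one step through the model.
        states = dynamics(states, actions[:, t])
        # Accumulate squared distance to the goal along the rollout.
        cost += np.sum((states - goal) ** 2, axis=1)
    best = int(np.argmin(cost))
    return actions[best, 0]

def toy_dynamics(states, actions):
    """Stand-in for a learned model: the state moves by 0.1 * action."""
    return states + 0.1 * actions
```

In a control loop, the planner is called again at every step with the newly observed state, so only the first action of each plan is ever executed. Replanning at every step is also what makes inference time matter so much for this family of methods.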

Experimental Setting

Results on Ball Bearing

Results on Joystick

Results on D20

Experimental Results

Human Collaborators

Tian, S.; Ebert, F.; Jayaraman, D.; Mudigonda, M.; Finn, C.; Calandra, R. & Levine, S.

Manipulation by Feel: Touch-Based Control with Deep Predictive Models

IEEE International Conference on Robotics and Automation (ICRA), 2019

Sergey Levine, Dinesh Jayaraman, Chelsea Finn, Stephen Tian, Frederik Ebert, Mayur Mudigonda

A Few Lessons Learned

- Good engineering is (often) under-appreciated, but super-important!

- Machine Learning is extremely powerful (with the right data)

- The more, the better

- Variety matters (complex models are good at interpolating, not extrapolating)

- Automatize, Automatize, Automatize

- Self-supervised learning will save you A LOT of time

- Experiment design needs to be carefully thought out, and robust

- (Always save the version of the experiment in the data)

- Visualization is always useful
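The advice to save the experiment version in the data can be made concrete with a small sketch. The field names and the `make_record` helper are hypothetical; the idea is only that every stored sample carries enough metadata to tell later which protocol and code produced it.

```python
import json
import subprocess
import time

def current_code_version():
    """Return the current git commit hash, or "unknown" outside a git checkout."""
    try:
        out = subprocess.run(
            ["git", "rev-parse", "HEAD"],
            capture_output=True, text=True, check=True,
        )
        return out.stdout.strip()
    except (subprocess.CalledProcessError, FileNotFoundError):
        return "unknown"

def make_record(sample, experiment_version, config):
    """Bundle one data sample with the metadata needed to interpret it later."""
    return {
        "experiment_version": experiment_version,  # bumped whenever the protocol changes
        "code_version": current_code_version(),
        "timestamp": time.time(),
        "config": config,
        "sample": sample,
    }

record = make_record({"grasp_id": 1, "success": True}, "v3", {"threshold": 5.0})
serialized = json.dumps(record)  # ready to append to a log file
```

When data collection runs for weeks, this makes it possible to filter out samples recorded before a bug fix or a change of protocol instead of discarding the whole dataset.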

Computational Cost

- The computational cost of the models presented so far is significant:

- Dozens of hours for training

- 0.2 - 2 seconds for inference (using non-linear MPC) on a top-of-the-line GPU

- How can we reduce the computational cost to run the models on-board?


- Use smaller, but highly tuned architectures

- Reduce numerical precision (e.g., from 64 to 8 bits)

- Explicit pruning of networks

- Policy distillation (or policy optimization)

- SqueezeNet-style model compression
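To give a feel for what reducing numerical precision involves, here is a minimal sketch of symmetric linear quantization from 64-bit floats to 8-bit integers. It is an illustration only: real deployments typically rely on the quantization tooling of the deep learning framework in use, and the function names below are hypothetical.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric linear quantization of a float array to int8 plus one scale factor."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the int8 representation."""
    return q.astype(np.float64) * scale

weights = np.random.default_rng(0).normal(size=1000)  # float64 by default
q, scale = quantize_int8(weights)
max_error = float(np.max(np.abs(dequantize(q, scale) - weights)))
```

The quantized array takes one eighth of the memory, and the rounding error per weight is bounded by half the scale factor. Whether a given model tolerates that error has to be checked empirically.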

Overview

- Presented two works where we use tactile sensing in conjunction with learned models:

- Learning to grasp and re-grasp from vision and touch

- MPC with learned dynamics models in raw tactile space

- Thinking in terms of Forces for Manipulation

- Strongly motivates the use of Tactile Sensors

- Data-driven Model-based Control

- ML can help to learn accurate models

- Self-supervised Learning to collect large amounts of data autonomously

- High computational cost, but it is possible to reduce it

Thank you for your attention

References

- Calandra, R.; Owens, A.; Jayaraman, D.; Yuan, W.; Lin, J.; Malik, J.; Adelson, E. H. & Levine, S.

More Than a Feeling: Learning to Grasp and Regrasp using Vision and Touch

IEEE Robotics and Automation Letters (RA-L), 2018, 3, 3300-3307

- Tian, S.; Ebert, F.; Jayaraman, D.; Mudigonda, M.; Finn, C.; Calandra, R. & Levine, S.

Manipulation by Feel: Touch-Based Control with Deep Predictive Models

IEEE International Conference on Robotics and Automation (ICRA), 2019

- Allen, P. K.; Miller, A. T.; Oh, P. Y. & Leibowitz, B. S.

Integration of vision, force and tactile sensing for grasping

Int. J. Intelligent Machines, 1999, 4, 129-149

- Chebotar, Y.; Hausman, K.; Su, Z.; Sukhatme, G. S. & Schaal, S.

Self-supervised regrasping using spatio-temporal tactile features and reinforcement learning

International Conference on Intelligent Robots and Systems (IROS), 2016

- Schill, J.; Laaksonen, J.; Przybylski, M.; Kyrki, V.; Asfour, T. & Dillmann, R.

Learning continuous grasp stability for a humanoid robot hand based on tactile sensing

BioRob, 2012

- Bekiroglu, Y.; Laaksonen, J.; Jorgensen, J. A.; Kyrki, V. & Kragic, D.

Assessing grasp stability based on learning and haptic data

Transactions on Robotics, 2011, 27

- Sommer, N. & Billard, A.

Multi-contact haptic exploration and grasping with tactile sensors

Robotics and Autonomous Systems, 2016, 85, 48-61

Learning Quadcopter Dynamics from PWM and IMU

Lambert, N. O.; Drew, D. S.; Yaconelli, J.; Calandra, R.; Levine, S. & Pister, K. S. J.

Low Level Control of a Quadrotor with Deep Model-Based Reinforcement Learning

To appear, International Conference on Intelligent Robots and Systems (IROS), 2019

Failure Case

Understanding the Learned Model


Full Grasping Results