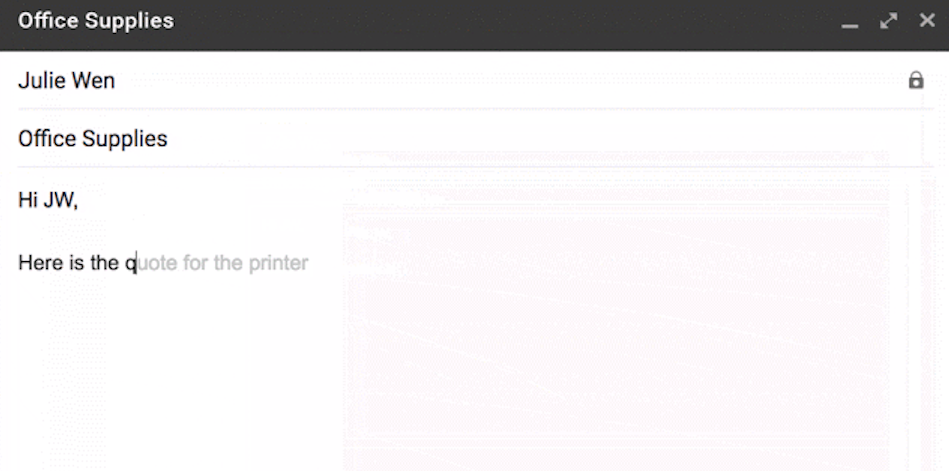

Robotics and Generative AI

Russ Tedrake

VP, Robotics Research

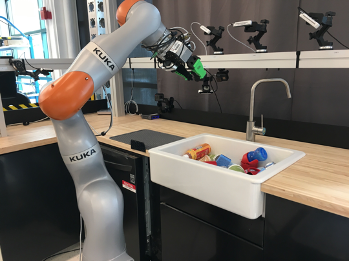

Dexterous Manipulation at TRI

My story

- Undergrad at UMich

- PhD at MIT

- Professor at MIT since 2005

- In 2016, I helped start TRI (Toyota Research Institute)

DARPA Robotics Challenge, 2015

Robots are dancing and doing parkour.

Now computer vision is really starting to work...

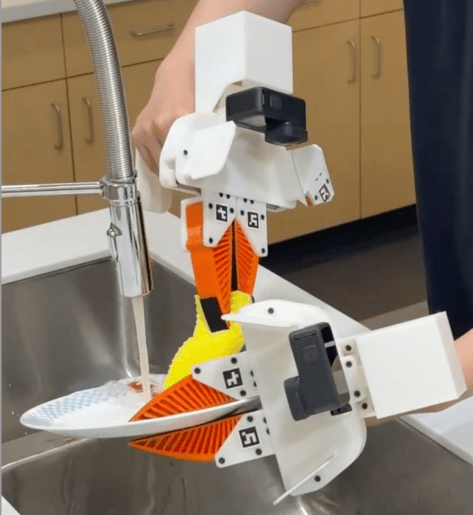

Can they load the dishwasher?

The newest Machine Learning Revolution: Generative AI

for robotics, in a few slides

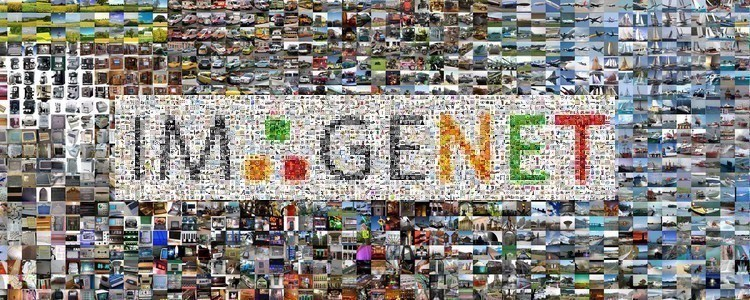

ImageNet: 14 million labeled images

Released in 2009

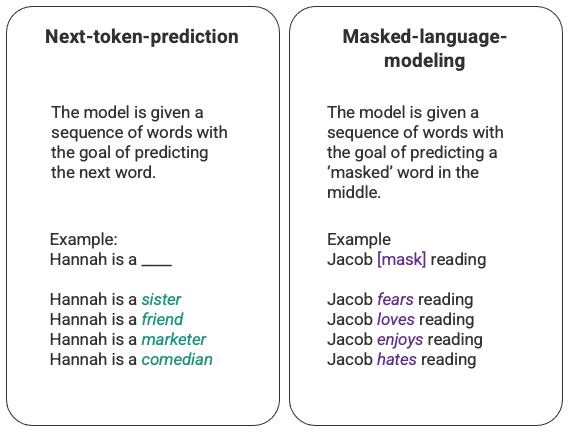

"Self-supervised" learning

Example: Text completion

No extra "labeling" of the data required!
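To make the "no labeling" point concrete, here is a minimal sketch of how raw text can supply its own training targets. The function name `next_word_pairs` and the `context_size` parameter are hypothetical, chosen for illustration; real language models work on sub-word tokens and far longer contexts.

```python
def next_word_pairs(text, context_size=3):
    """Turn raw text into (context, next_word) training pairs.

    The "label" for each example is simply the word that follows the
    context in the original text, so no human annotation is needed.
    """
    words = text.split()
    pairs = []
    for i in range(1, len(words)):
        # Use up to `context_size` preceding words as the input.
        context = tuple(words[max(0, i - context_size):i])
        pairs.append((context, words[i]))
    return pairs


pairs = next_word_pairs("robots can load the dishwasher")
for context, target in pairs:
    print(context, "->", target)
```

Every sentence on the web yields many such pairs for free, which is what lets this style of training scale.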

GPT-4 is "just" doing next-word prediction

But it's trained on the entire internet...

And it's a really big network

Generative AI for Images

Humans have also put lots of captioned images on the web

...

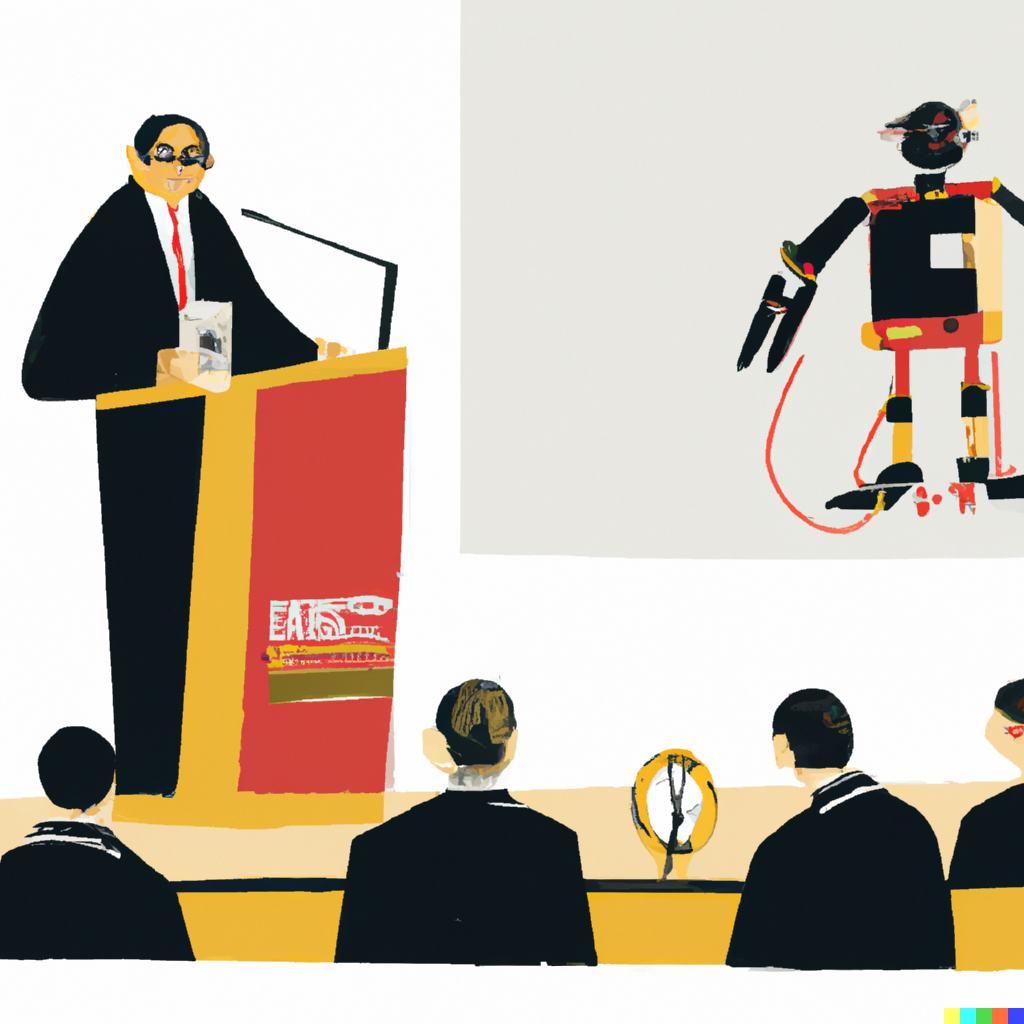

DALL-E 2, tested in Sept. 2022

Input: "A painting of a professor giving a talk at a robotics competition kickoff"

Output:

DALL-E 3, Sept. 2023

Input: "a painting of a handsome MIT professor giving a talk about robotics and generative AI at brimmer and may school in newton, ma"

Output:

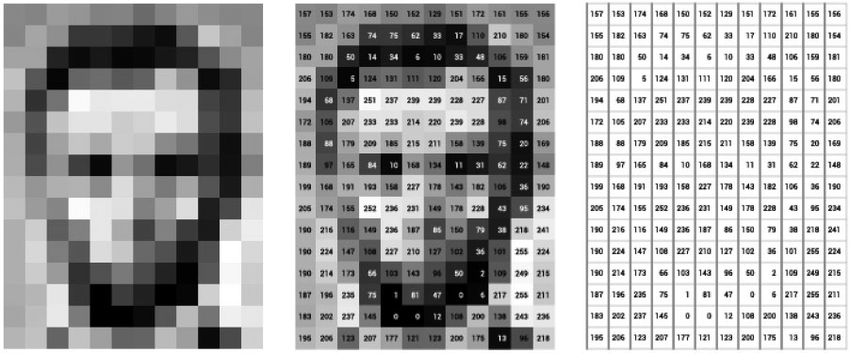

An image is just a list of numbers (pixel values)
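A small sketch of what "a list of numbers" means in practice. The 3x3 grayscale image and the helper names `flatten` and `unflatten` are made up for illustration; real images have many more pixels and usually three color channels.

```python
# A tiny 3x3 grayscale "plus sign": 0 is black, 255 is white.
image = [
    [0, 255, 0],
    [255, 255, 255],
    [0, 255, 0],
]


def flatten(image):
    """Read the pixels row by row into one flat list."""
    return [pixel for row in image for pixel in row]


def unflatten(pixels, width):
    """Rebuild the rows from the flat list, given the image width."""
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


pixels = flatten(image)
print(pixels)
print(unflatten(pixels, width=3) == image)
```

Once the image is a flat sequence, a model could in principle predict it one value at a time, just like text.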

Is DALL-E just next-pixel prediction?

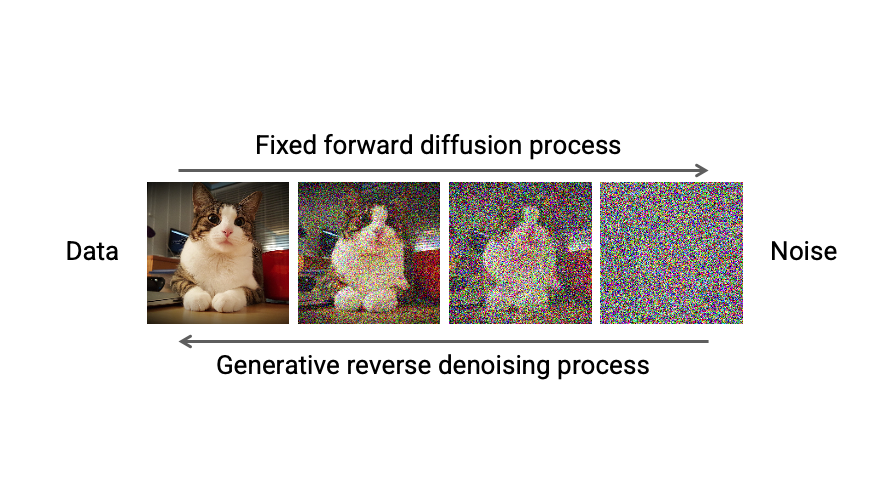

"Diffusion" models

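The slides name diffusion models without giving details. As a rough sketch, the standard forward (noising) step used in DDPM-style diffusion is \(x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon\) with \(\epsilon\) drawn from a standard Gaussian. The linear noise schedule, its endpoint values, and the function names below are illustrative assumptions, not taken from the talk.

```python
import math
import random


def alpha_bar(t, num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_s) for s = 1..t (linear schedule, assumed)."""
    product = 1.0
    for s in range(1, t + 1):
        beta = beta_start + (beta_end - beta_start) * (s - 1) / (num_steps - 1)
        product *= 1.0 - beta
    return product


def add_noise(pixels, t, rng, num_steps=1000):
    """Blend clean pixel values with Gaussian noise at diffusion step t."""
    a = alpha_bar(t, num_steps)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e
             for x, e in zip(pixels, noise)]
    # A denoising network would be trained to recover `noise` from `noisy`.
    return noisy, noise


rng = random.Random(0)
clean = [0.0, 1.0, 0.0, 1.0]
slightly_noisy, _ = add_noise(clean, t=10, rng=rng)
mostly_noise, _ = add_noise(clean, t=1000, rng=rng)
print(slightly_noisy)
print(mostly_noise)
```

Generation runs the idea in reverse: start from pure noise and repeatedly remove the predicted noise until an image remains.
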
Generative AI for robots?

Generative AI + data

+ very careful engineering

Our engineering design process

+ rigorous thinking

Haptic Teleop Interface

Excellent robot control

Cameras in the hands!

Open source:

LLMs \(\Rightarrow\) VLMs \(\Rightarrow\) LBMs

large language models

visually-conditioned language models

large behavior models

\(\sim\) VLA (vision-language-action)

\(\sim\) EFM (embodied foundation model)

Q: Is predicting actions fundamentally different?

Why actions (for dexterous manipulation) could be different:

- Actions are continuous (language tokens are discrete)

- Have to obey physics, deal with stochasticity

- Feedback / stability

- ...
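To illustrate the first bullet: one common workaround (an assumption here, not something these slides prescribe) is to quantize each continuous action dimension into a fixed number of bins so it can be treated like a token. The range, bin count, and function names below are hypothetical.

```python
def action_to_token(value, low=-1.0, high=1.0, num_bins=256):
    """Map a continuous action value to a discrete bin index."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    # The top edge of the range falls into the last bin.
    return min(int(fraction * num_bins), num_bins - 1)


def token_to_action(token, low=-1.0, high=1.0, num_bins=256):
    """Map a bin index back to the center of its bin."""
    width = (high - low) / num_bins
    return low + (token + 0.5) * width


command = 0.3
token = action_to_token(command)
recovered = token_to_action(token)
print(token, recovered, abs(recovered - command))
```

The round trip is lossy: the recovered value can differ from the original by up to half a bin width, which is one reason continuous actions may not map cleanly onto the language-model recipe.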

Should we expect similar generalization and scaling laws?

Success in (single-task) behavior cloning suggests that these are not blockers
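For readers new to the term, behavior cloning is supervised learning on demonstrated (observation, action) pairs. The sketch below is a deliberately tiny stand-in: a one-dimensional linear policy fit by least squares to made-up demonstrations. The policies discussed in this talk are large neural networks; only the "fit the demonstrations" structure carries over.

```python
def fit_linear_policy(observations, actions):
    """Least-squares fit of action = gain * observation + bias."""
    n = len(observations)
    mean_o = sum(observations) / n
    mean_a = sum(actions) / n
    cov = sum((o - mean_o) * (a - mean_a)
              for o, a in zip(observations, actions))
    var = sum((o - mean_o) ** 2 for o in observations)
    gain = cov / var
    bias = mean_a - gain * mean_o
    return gain, bias


# Hypothetical demonstrations: the "expert" pushes back toward 0
# with action = -2 * observation.
demo_obs = [-1.0, -0.5, 0.0, 0.5, 1.0]
demo_act = [2.0, 1.0, 0.0, -1.0, -2.0]

gain, bias = fit_linear_policy(demo_obs, demo_act)


def policy(observation):
    """The cloned policy: imitate the expert on new observations."""
    return gain * observation + bias


print(gain, bias, policy(0.25))
```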

Predicting actions is different

- We don't have internet scale action data (yet)

- We still need rigorous/scalable "Eval"

The Robot Data Diet

Big data

Big transfer

Small data

No transfer

robot teleop

(the "transfer learning bet")

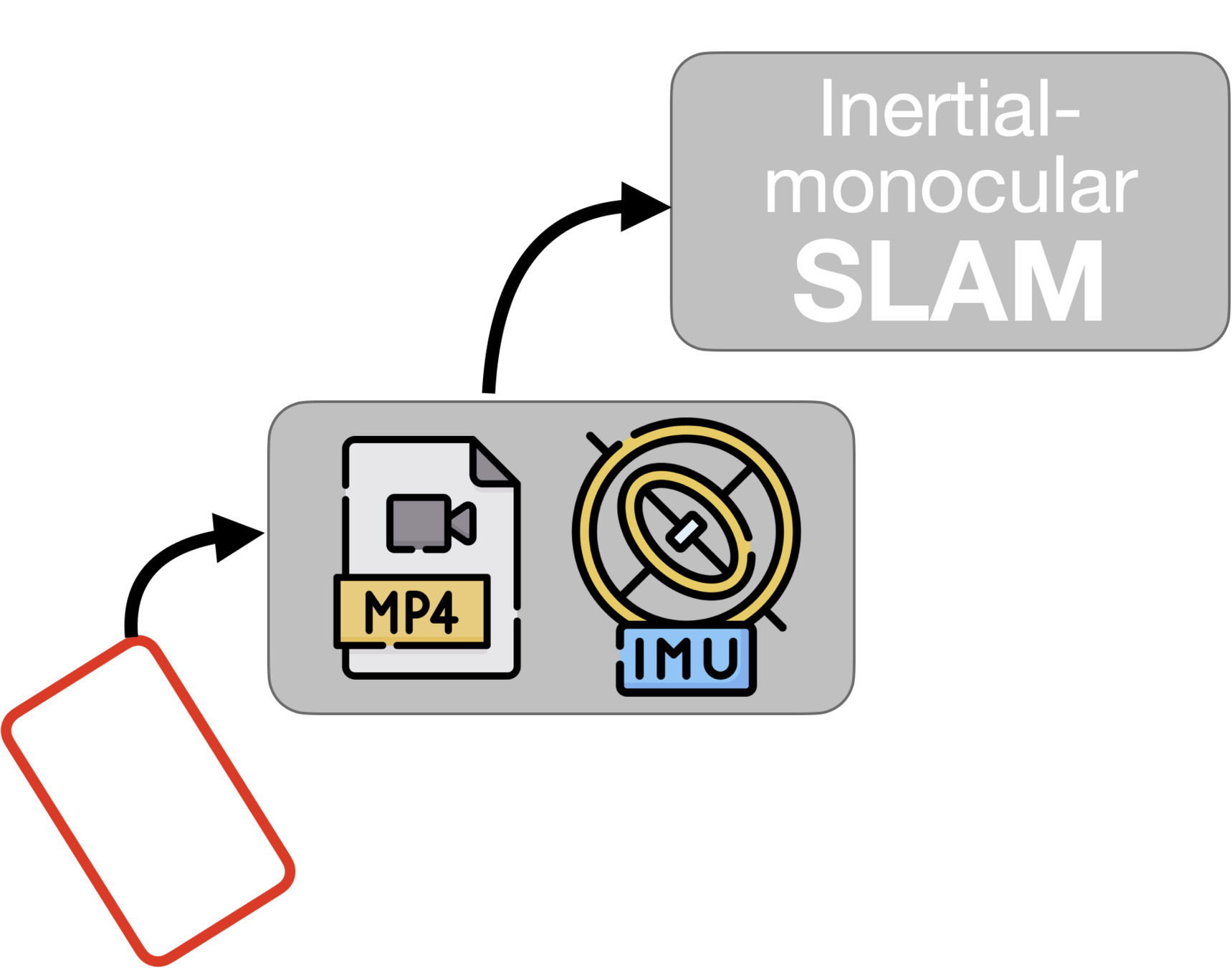

Open-X

simulation rollouts

novel devices

Cumulative Number of Skills Collected Over Time

The (bimanual, dexterous) TRI CAM dataset

CAM data collection

TRI has a special role to play

- Expertise across robotics, ML, and software

- Resources to train large models and do rigorous evaluation

- Resources to build large high-quality datasets

- Ability to advance robot hardware

- Our charter is basic research ("invent and prove")

- Strong tradition of open source

+ Amazing university partners

Just one piece of TRI's robotics portfolio...

Online classes (videos + lecture notes + code)

http://manipulation.mit.edu

http://underactuated.mit.edu