Supervised Policy Learning for Real Robots

Part 1: Introduction

Nur Muhammad "Mahi" Shafiullah

New York University

Siyuan Feng

Toyota Research Institute

Lerrel Pinto

New York University

Russ Tedrake

MIT, Toyota Research Institute

Imitation Learning

Behavior Cloning (BC)

Inverse Reinforcement Learning (IRL)

Today: BC of end-to-end (visuomotor) policies, with a bias for manipulation

Supervised Policy Learning ≈ "Behavior Cloning"

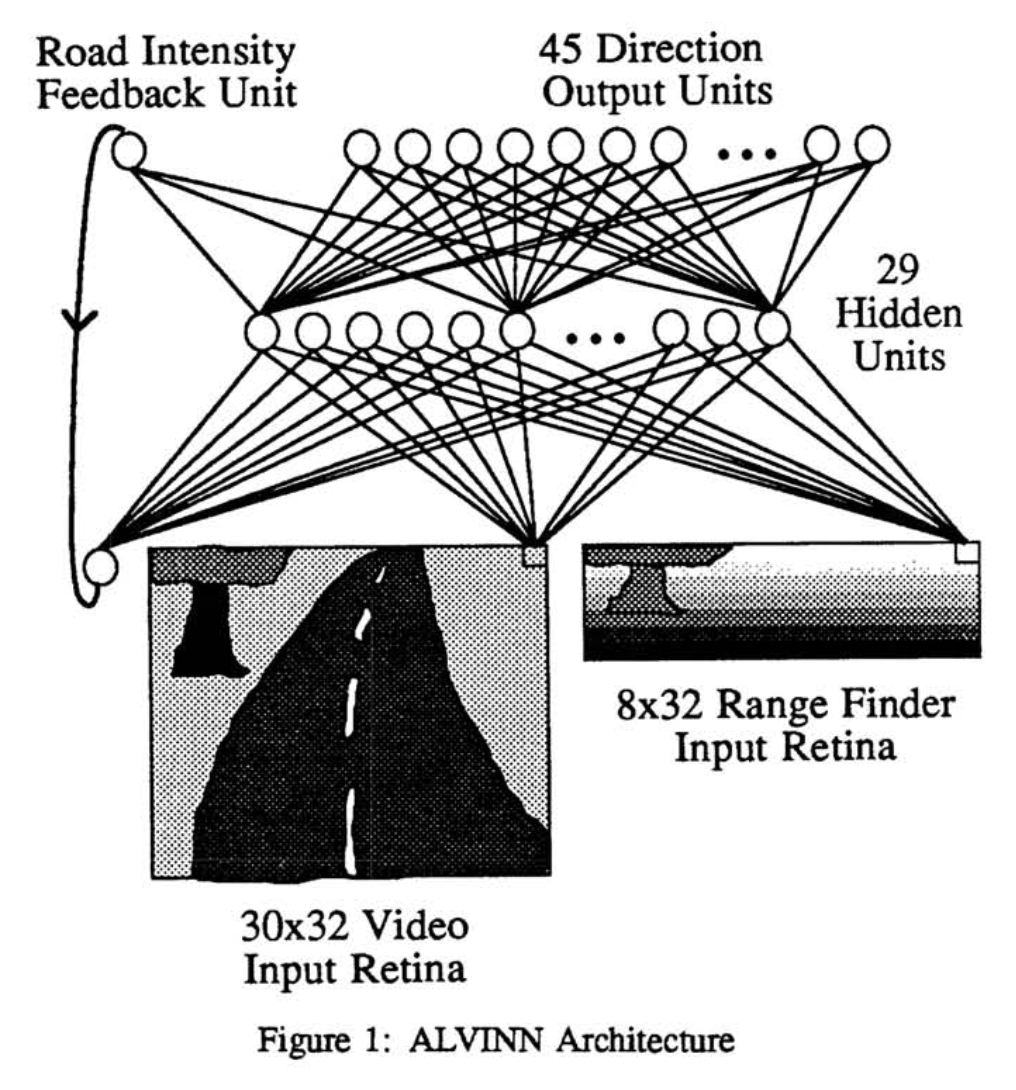

NeurIPS 1988

BC has a long history

and rich connections to RL and control

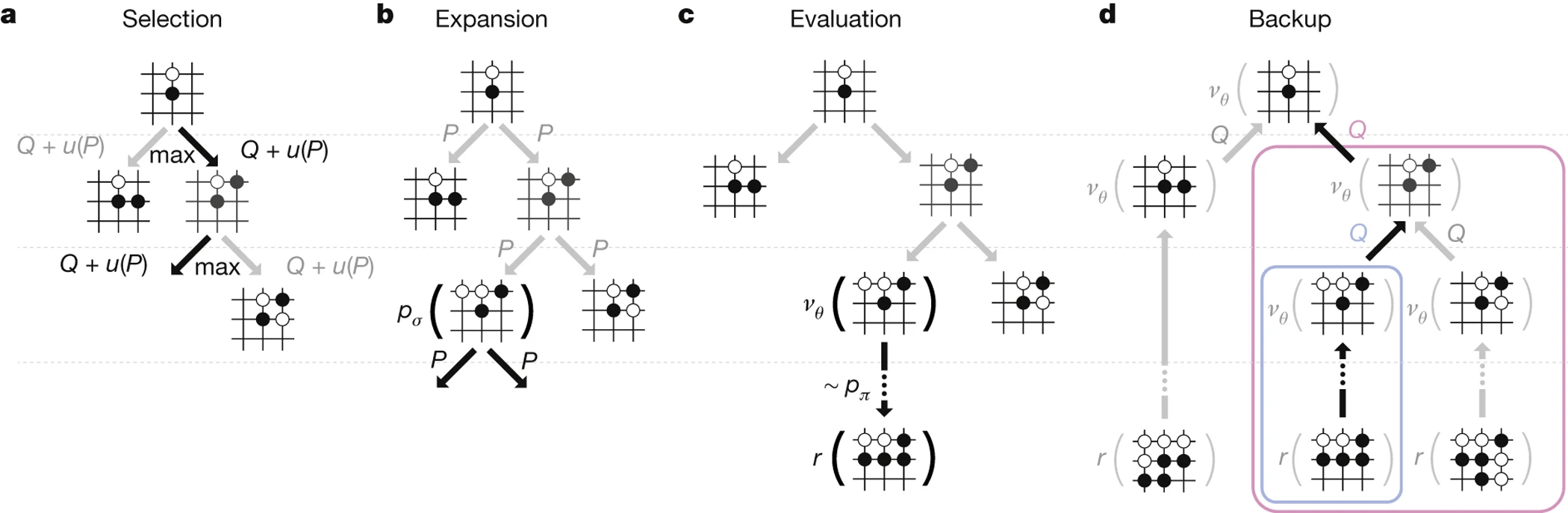

- Step 1: BC from human experts

- Step 2: Self-play

Teacher-student distillation

"Offline RL as weighted BC"

My main points for this intro

- BC is working surprisingly well, enabling robots to do tasks that were impossible just a few years ago.

- But often at < 90% success rate*

- A few key lessons / architectures have emerged...

- plus a lot of folk wisdom.

- We (I?) still don’t completely understand the basics.

- Rigorous eval will help.

- There are many open (basic research) questions!

* - heavily dependent on task complexity/test diversity

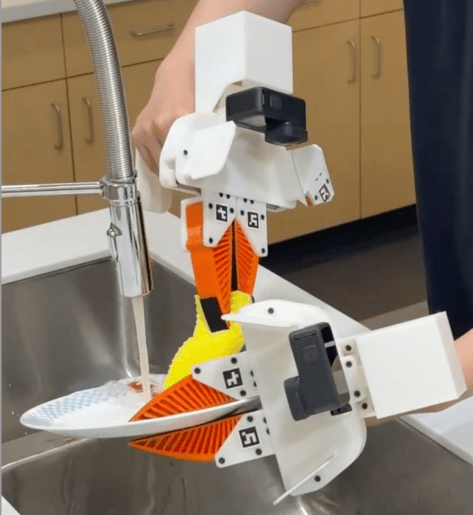

BC is working surprisingly well!

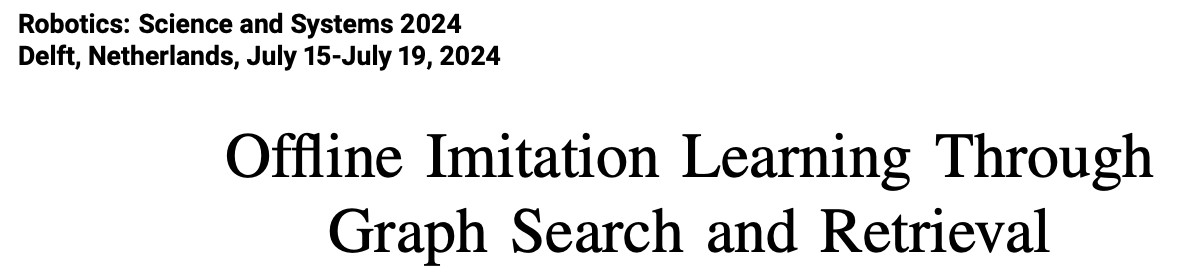

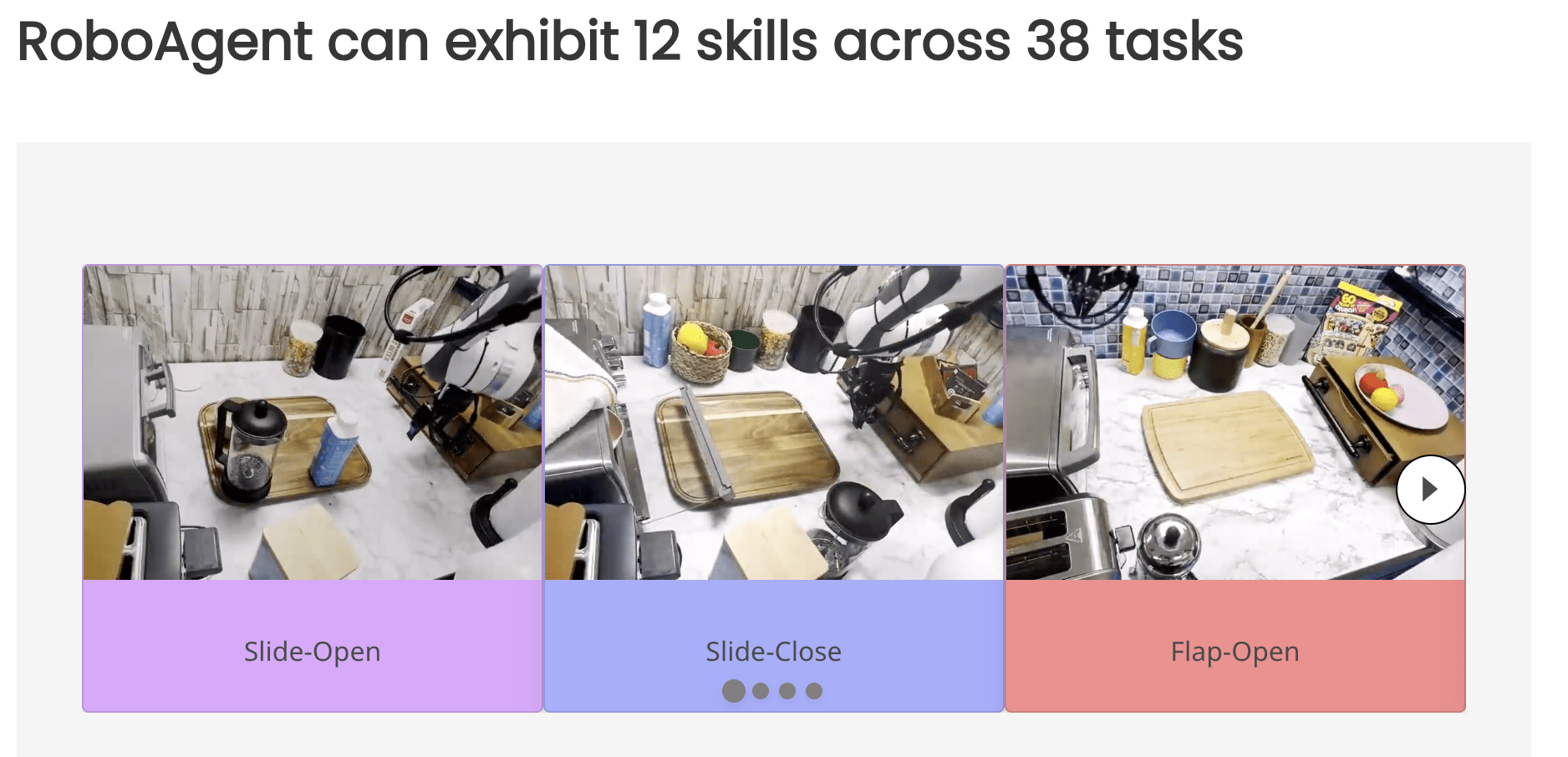

VINN, BeT, Dobb-E, ... (from Mahi, Lerrel, et al)

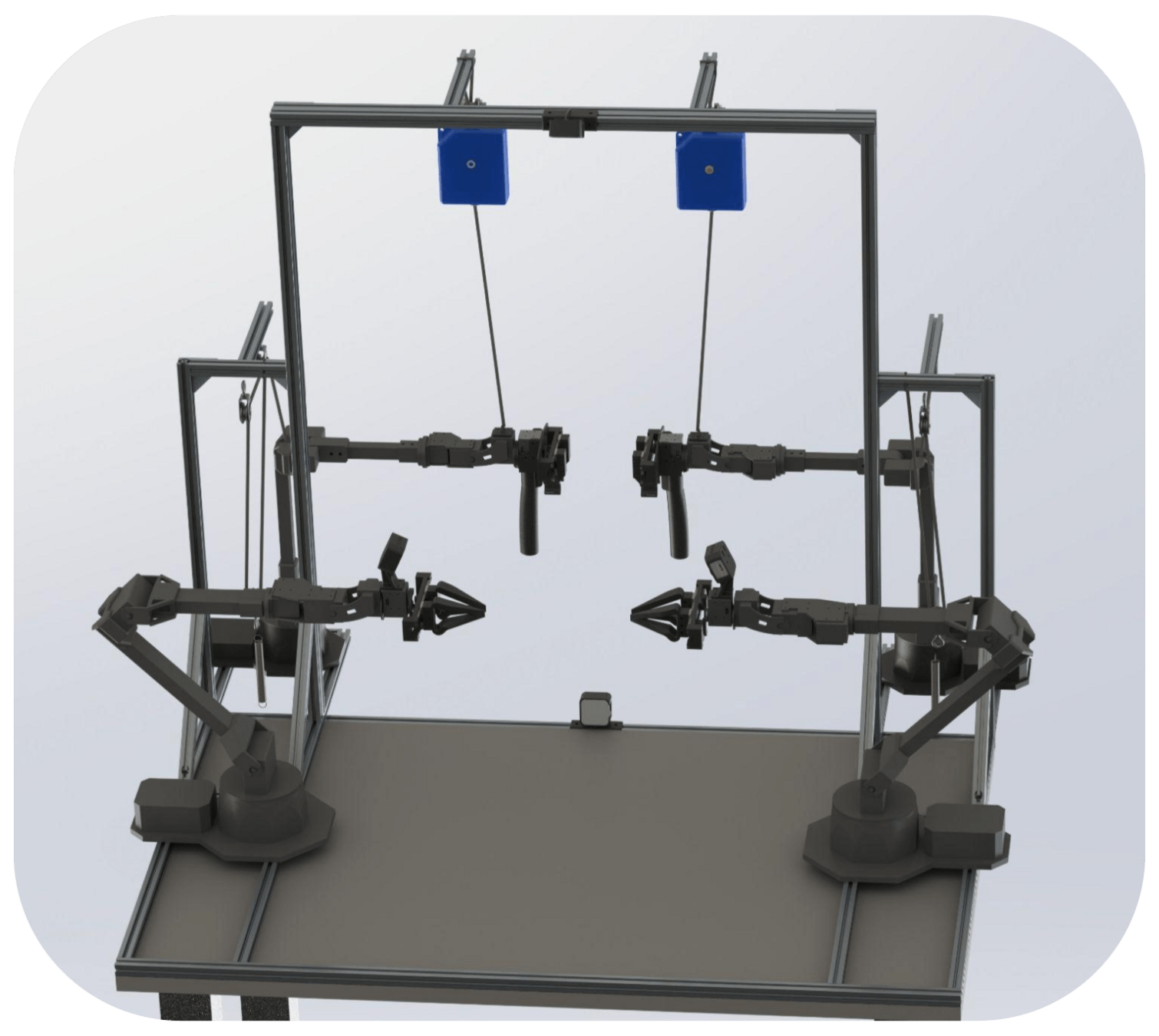

Diffusion Policy

ALOHA

Mobile ALOHA

Why BC? Why now?

At the banquet dinner this Wednesday I got asked...

Why BC? Why now?

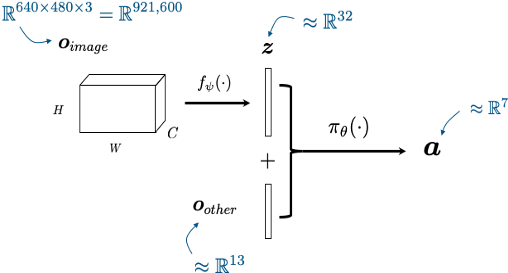

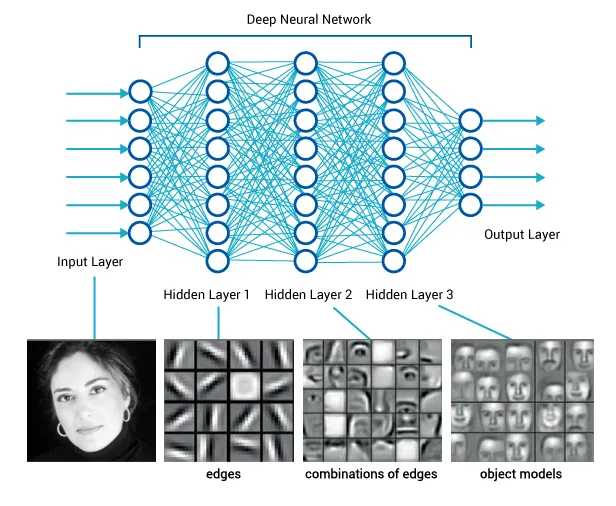

- Control from RGB

- without any explicit state estimation/representation

- no explicit dynamics model (intuitive physics)

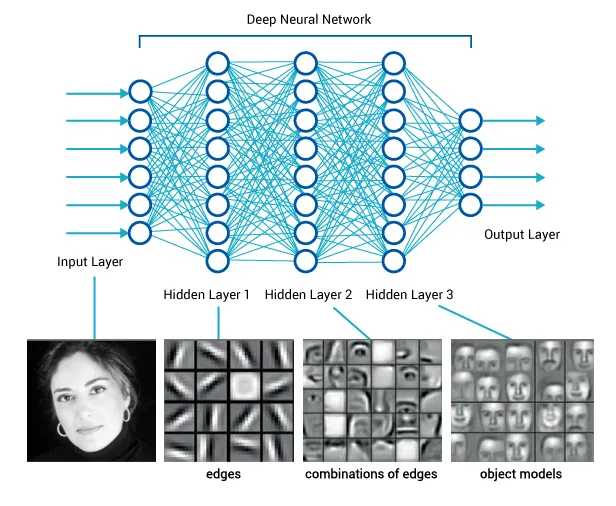

Levine*, Finn*, Darrell, Abbeel, JMLR 2016

The original "visuomotor policies"

perception network

(often pre-trained)

policy network

other robot sensors

learned state representation

actions

x history

My first taste

A very simple teleop interface

Why BC? Why now?

- Control from RGB

- without any explicit state estimation/representation

- no explicit dynamics model (intuitive physics)

- I had under-estimated how valuable high-rate RGB feedback could be

- no cost function hacking / tuning

- also enables other sensing modalities (audio, tactile, ...)

Why BC? Why now?

- Control from RGB

- GPT was "discovered"

- The optimization landscape appears to fundamentally change in the high-capacity models.

- LLMs => VLMs => VLAs (LBMs).

LLMs \(\Rightarrow\) VLMs \(\Rightarrow\) LBMs

large language models

visually-conditioned language models

large behavior models

\(\sim\) VLA (vision-language-action)

\(\sim\) EFM (embodied foundation model)

TRI's LBM division is hiring!

Q: Is predicting actions fundamentally different?

Why actions (for dexterous manipulation) could be different:

- Actions are continuous (language tokens are discrete)

- Have to obey physics, deal with stochasticity

- Feedback / stability

- ...

should we expect similar generalization / scaling-laws?

Success in (single-task) behavior cloning suggests that these are not blockers

Why BC? Why now?

- Control from RGB

- GPT was "discovered"

- The optimization landscape appears to fundamentally change in the high-capacity models.

- LLMs => VLMs => VLAs (LBMs).

- Single-task (Diffusion policy) \(\Rightarrow\) multi-task (LBM)

- What does common sense look like for control?

- We might even improve LLMs/VLMs (with our math, and our data)

Why not BC? (not everyone believes)

- Requires lots of data

- but... much less than we might have guessed? (~100 demos per skill)

- Single task \(\Rightarrow\) multitask

- The "transfer learning bet"

- but... much less than we might have guessed?

Andy Zeng's MIT CSL Seminar, April 4, 2022

Andy's slides.com presentation

The Robot Data Diet

Big data

Big transfer

Small data

No transfer

robot teleop

(the "transfer learning bet")

Open-X

simulation rollouts

novel devices

Why not BC? (not everyone believes)

- Requires lots of data

- Requires lots of compute

- I agree. Yuck. But we must study this (and improve it).

- Slow inference (for control rates)

- 1-10Hz is more than enough for many tasks.

- Can certainly add layers and optimize inference.

- Not yet robust

- Creating a new type of robustness (physical common sense)

Pete Florence, Corey Lynch, Andy Zeng, Oscar Ramirez, Ayzaan Wahid, Laura Downs, Adrian Wong, Johnny Lee, Igor Mordatch, Jonathan Tompson

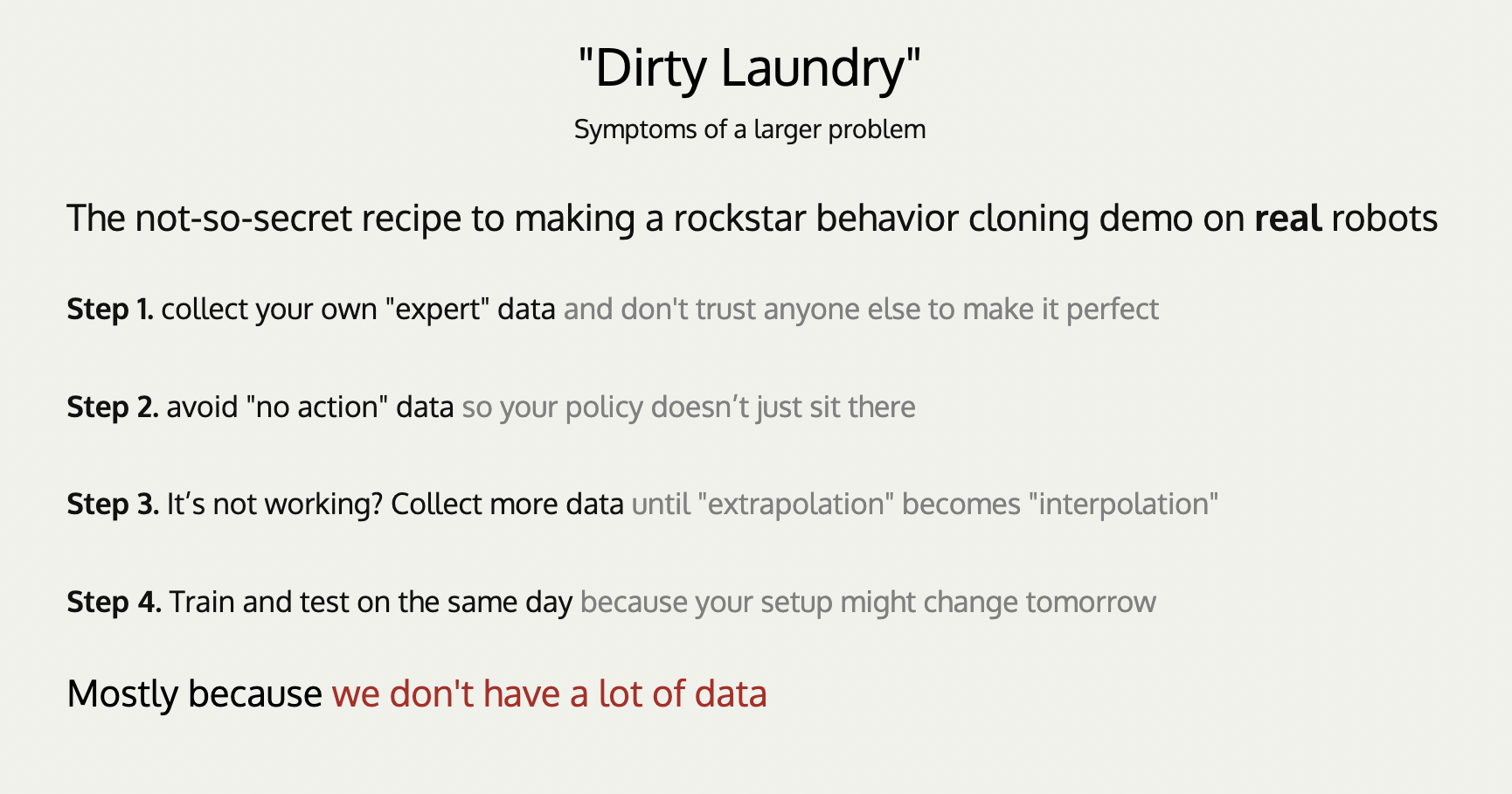

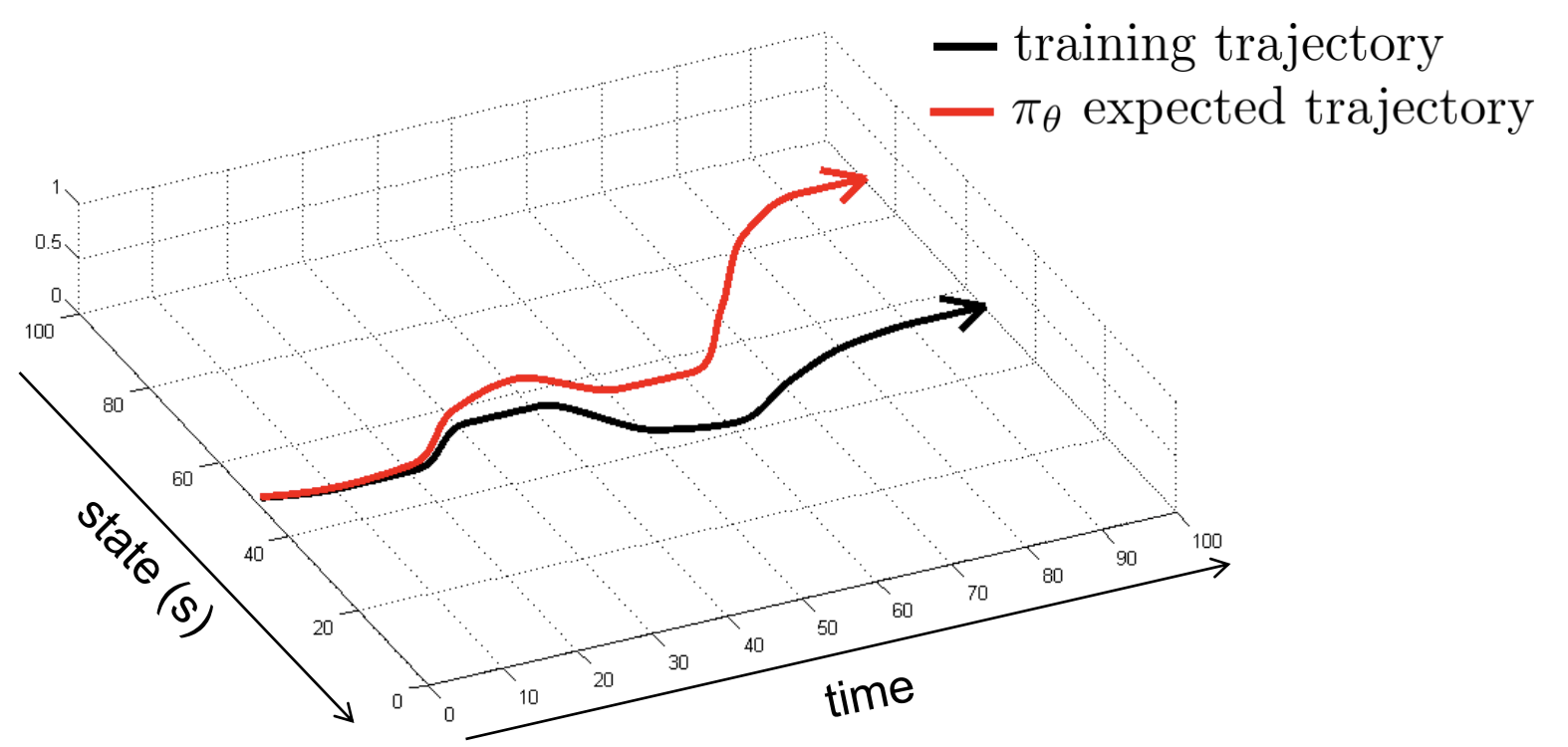

Core ideas/challenges

Dealing with distribution shift

https://rail.eecs.berkeley.edu/deeprlcourse

proposed fix: DAgger (Dataset Aggregation)
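The DAgger loop can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the procedure from the linked course or the original paper: the scalar system, the linear `expert`, and the least-squares `fit` are all hypothetical, and the expert/learner mixing coefficient used in the original algorithm is dropped (the learner alone drives every rollout after the first).

```python
import random
from typing import List, Tuple


def expert(x: float) -> float:
    """Hypothetical expert: a stabilizing linear feedback law."""
    return -0.5 * x


def fit(dataset: List[Tuple[float, float]]) -> float:
    """Least-squares fit of a gain k so that u ~= k * x."""
    sxx = sum(x * x for x, _ in dataset)
    sxu = sum(x * u for x, u in dataset)
    return sxu / sxx if sxx > 0 else 0.0


def rollout(gain: float, x0: float, steps: int, rng: random.Random) -> List[float]:
    """Run the policy u = gain * x on a noisy integrator; return visited states."""
    states, x = [], x0
    for _ in range(steps):
        states.append(x)
        x = x + gain * x + rng.gauss(0.0, 0.01)
    return states


def dagger(iterations: int = 5, steps: int = 20, seed: int = 0) -> Tuple[float, int]:
    rng = random.Random(seed)
    # Iteration 0: plain behavior cloning on states visited by the expert.
    dataset = [(x, expert(x)) for x in rollout(-0.5, 1.0, steps, rng)]
    gain = fit(dataset)
    for _ in range(iterations):
        # 1) Run the *learner* so that we visit the states it actually reaches.
        states = rollout(gain, rng.uniform(-1.0, 1.0), steps, rng)
        # 2) Ask the expert to label those states, 3) aggregate, 4) retrain.
        dataset += [(x, expert(x)) for x in states]
        gain = fit(dataset)
    return gain, len(dataset)


gain, dataset_size = dagger()
```

The point of the structure is step 1: labels are collected on the learner's own state distribution, which is what addresses the distribution shift. On a real robot the costly part is step 2, since a human has to provide corrective labels for states the policy visited.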

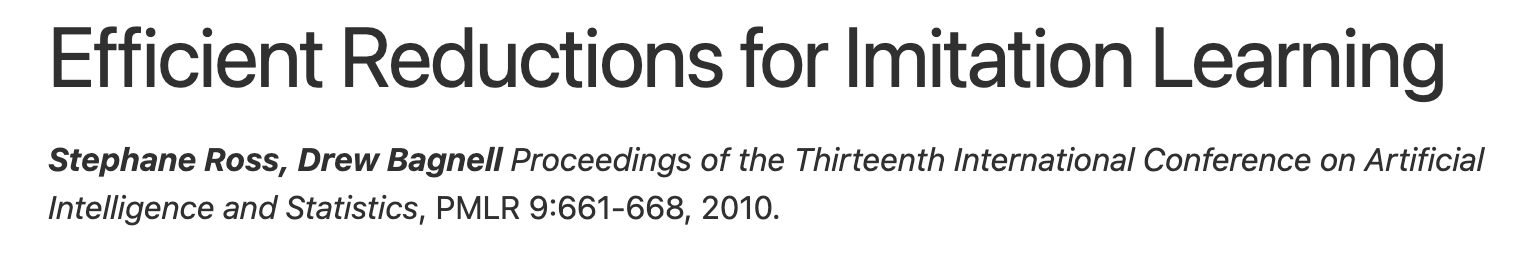

Dealing with multimodal demonstrations

Can we have stability and multimodality?

Provable Guarantees for Generative Behavior Cloning: Bridging Low-Level Stability and High-Level Behavior.

Adam Block*, Ali Jadbabaie, Daniel Pfrommer*, Max Simchowitz*, Russ Tedrake. NeurIPS, 2023.

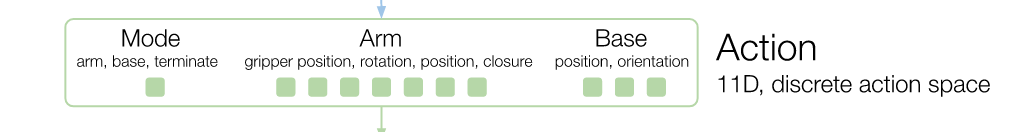

BC architectures/algorithms

A simple taxonomy

Dynamic output feedback

input

output

Control Policy

(as a dynamical system)

Most models today are (almost) auto-regressive (ARX):

As opposed to, for instance, state-space models like LSTM.

\(H\) is the length of the history
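The equation that followed "auto-regressive (ARX):" is not present in this text. As a hedged reconstruction only, one conventional way to write the two model classes is shown below; the symbols \(y\) (observations), \(u\) (actions), \(h\) (hidden state), and \(\theta\) (parameters) are assumptions, not taken from the slides, and many policies drop the past-action arguments entirely.

```latex
% ARX-style policy: a static map of a finite window of history
u_t = \pi_\theta\left(y_t, y_{t-1}, \dots, y_{t-H},\; u_{t-1}, \dots, u_{t-H}\right)

% State-space policy (e.g. LSTM): history is summarized in a recurrent state
h_{t+1} = f_\theta(h_t, y_t), \qquad u_t = g_\theta(h_t, y_t)
```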

Dynamic output feedback

Transformer

input encoder(s)

output decoder(s)

Output/action decoders

- Option 1 (RT-style):

- Discrete tokens

- uniform bins

"each action dimension in RT-1/2(X) is discretized into 256 bins [...] uniformly distributed within the bounds of each variable."

Open source reproduction:

https://openvla.github.io/

Robotics Transformer (RT)
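Uniform binning as described in the quote can be sketched as follows. This is an illustration of the idea rather than the RT-1/2 or OpenVLA implementation; the function names, the example bounds, and the choice to decode to bin centers are assumptions.

```python
from typing import List

NUM_BINS = 256  # bin count from the quote above


def tokenize(action: List[float], low: List[float], high: List[float]) -> List[int]:
    """Map each action dimension to a uniform bin index in [0, NUM_BINS - 1]."""
    tokens = []
    for a, lo, hi in zip(action, low, high):
        a = min(max(a, lo), hi)  # clip to the bounds of this variable
        frac = (a - lo) / (hi - lo)
        tokens.append(min(int(frac * NUM_BINS), NUM_BINS - 1))
    return tokens


def detokenize(tokens: List[int], low: List[float], high: List[float]) -> List[float]:
    """Map bin indices back to continuous values (bin centers)."""
    return [lo + (t + 0.5) * (hi - lo) / NUM_BINS for t, lo, hi in zip(tokens, low, high)]


# Hypothetical 3-D action with per-dimension bounds.
low, high = [-1.0, -1.0, 0.0], [1.0, 1.0, 0.1]
action = [0.25, -1.0, 0.1]
tokens = tokenize(action, low, high)
recovered = detokenize(tokens, low, high)
```

The round trip loses at most half a bin width per dimension, so the resolution of each action dimension is tied to its bounds: a wide range with 256 bins gives coarse actions.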

Output/action decoders

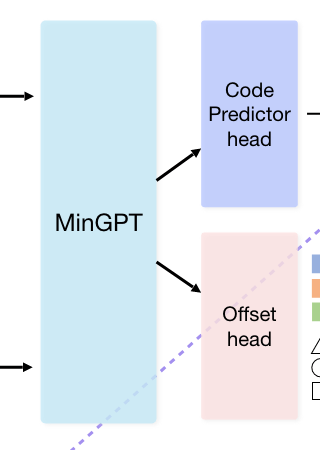

- Option 2 (BeT / VQ-BeT):

- Discrete tokens

- smart bins +

- continuous offset

[...]

Behavior Transformer (BeT)
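The "smart bins + continuous offset" idea can be sketched in one dimension: cluster the demonstrated actions to get data-dependent bin centers, then represent each action as a bin index plus a residual. This is a toy under stated assumptions, not the BeT or VQ-BeT code: the actions are scalars, the data is synthetic, and the offset is computed exactly here, whereas in a policy both the bin and the offset would be predicted by the network.

```python
import random
from typing import List, Tuple


def kmeans_1d(data: List[float], k: int, iters: int = 20, seed: int = 0) -> List[float]:
    """Plain k-means on scalars; returns the sorted cluster centers."""
    rng = random.Random(seed)
    centers = rng.sample(data, k)
    for _ in range(iters):
        groups: List[List[float]] = [[] for _ in range(k)]
        for x in data:
            nearest = min(range(k), key=lambda j: abs(x - centers[j]))
            groups[nearest].append(x)
        # Keep the old center if a cluster ends up empty.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return sorted(centers)


def encode(action: float, centers: List[float]) -> Tuple[int, float]:
    """Discrete token (nearest center) plus continuous offset from that center."""
    idx = min(range(len(centers)), key=lambda j: abs(action - centers[j]))
    return idx, action - centers[idx]


def decode(idx: int, offset: float, centers: List[float]) -> float:
    return centers[idx] + offset


# Synthetic bimodal "demonstrations": go left (-0.5) or go right (+0.5).
rng = random.Random(1)
demos = [-0.5 + rng.gauss(0.0, 0.05) for _ in range(100)]
demos += [0.5 + rng.gauss(0.0, 0.05) for _ in range(100)]

centers = kmeans_1d(demos, k=2)
idx, offset = encode(0.47, centers)
reconstructed = decode(idx, offset, centers)
```

Compared with uniform bins, the centers land where the data is, so few bins are needed, and the offset removes the quantization error.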

Output/action decoders

- Option 3 (ACT):

- Continuous output

- CVAE encoder/decoder

Action-chunking Transformer (ACT)

https://aloha-2.github.io/

"Overall, we found the CVAE objective to be essential in learning precise tasks from human demonstrations."

but I'm pretty sure people sometimes turn the CVAE off.

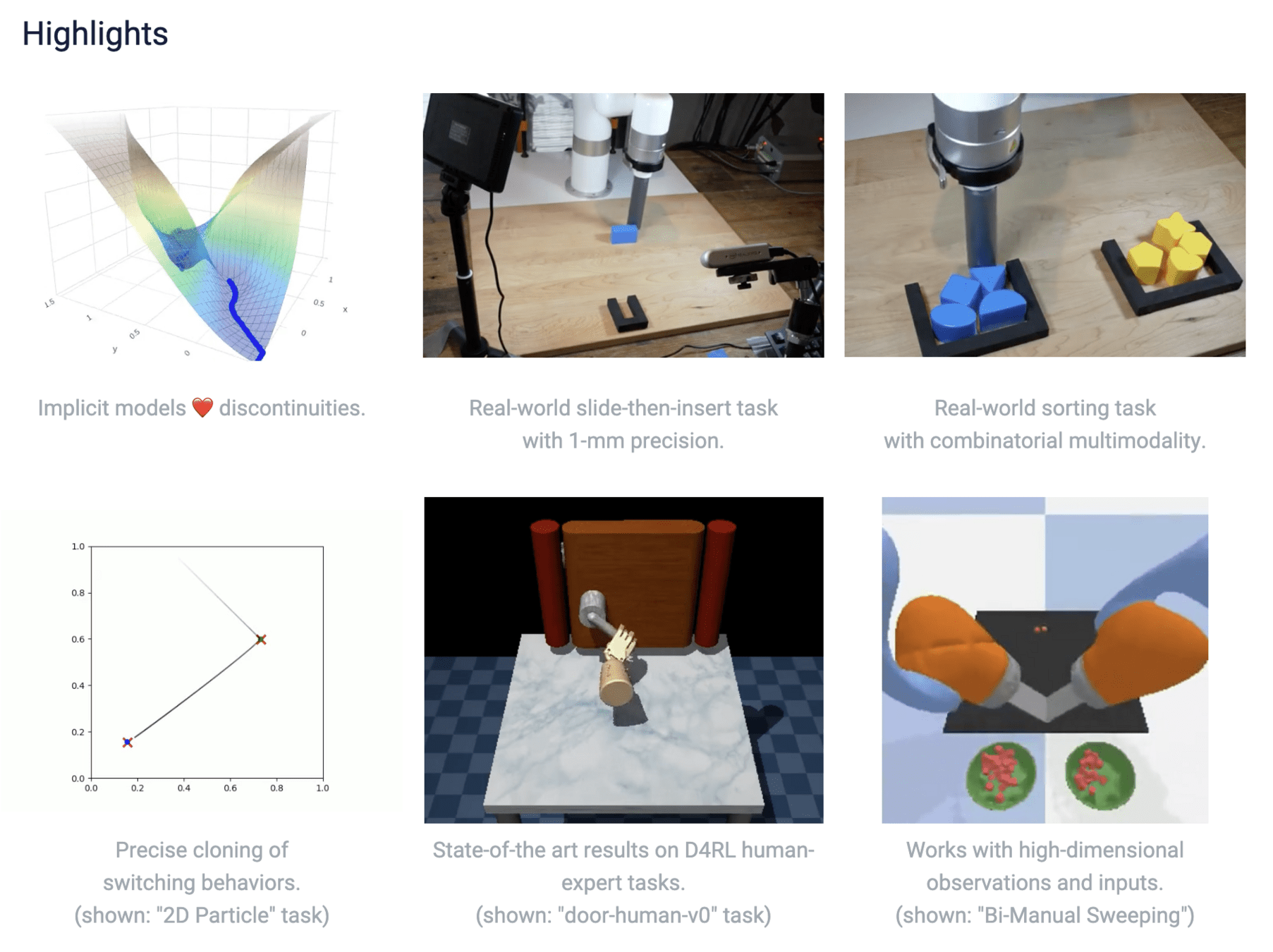

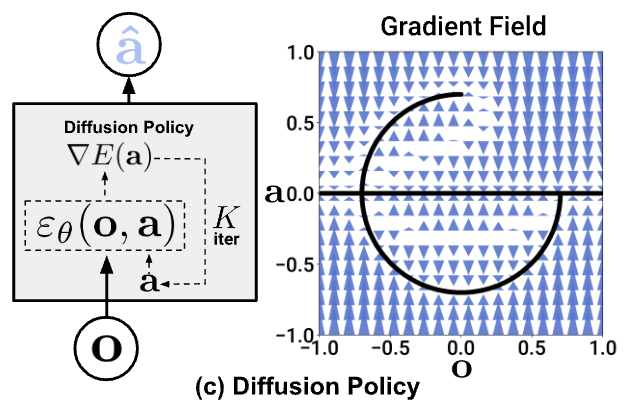

Output/action decoders

- Option 4 (Diffusion Policy):

- Continuous output

- Sampled at inference time via denoising

Diffusion Policy (DP)
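Sampling via denoising can be illustrated with a toy DDPM-style sampler. Everything specific here is an assumption made for illustration: the "demonstrations" are a scalar action that is either -1 or +1 with equal probability, the noise schedule is arbitrary, and the noise predictor is the closed-form optimum for that two-point distribution. In Diffusion Policy the predictor is a learned network conditioned on observations, and the sample is a sequence of actions.

```python
import math
import random

T = 50  # number of denoising steps (arbitrary for this toy)
betas = [1e-4 + (0.2 - 1e-4) * t / (T - 1) for t in range(T)]
alphas = [1.0 - b for b in betas]
alpha_bars = []
_prod = 1.0
for _a in alphas:
    _prod *= _a
    alpha_bars.append(_prod)


def predict_noise(x_t: float, t: int) -> float:
    """Optimal noise prediction when the clean data is -1 or +1 with equal probability."""
    ab = alpha_bars[t]
    # Posterior mean of the clean action given the noisy one.
    x0_mean = math.tanh(math.sqrt(ab) * x_t / (1.0 - ab))
    return (x_t - math.sqrt(ab) * x0_mean) / math.sqrt(1.0 - ab)


def sample(rng: random.Random) -> float:
    """Start from Gaussian noise and denoise step by step (ancestral sampling)."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps = predict_noise(x, t)
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps) / math.sqrt(alphas[t])
        noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0
        x = mean + math.sqrt(betas[t]) * noise
    return x


rng = random.Random(0)
samples = [sample(rng) for _ in range(200)]
sample_mean = sum(samples) / len(samples)
```

Each sample commits to one of the two modes, while the average over samples sits near zero, which is an action no demonstrator took. That average is what a mean-squared-error regressor would output on the same data.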

Dealing with multimodal demonstrations

Learning categorical distributions already worked well (e.g. AlphaGo, GPT, RT-style)

BeT/ACT/Diffusion helped extend this to high-dimensional continuous trajectories

RT vs BeT vs ACT vs DP

- With enough capacity, they can all fit the demonstrations.

- The potentially subtle impact of discretization.

- Relative to discrete actions, DP is more expressive, but also more expensive at inference time.

- word on the street is maybe ACT wins in small data regime (~25 demos), DP in bigger data (>50 demos).

- Some people don't think we need the expressiveness; an open question.

- Many other concerns: stable training, hyperparameters, etc.

Note: Action sequence prediction

- In regression we might call this a "forecasting" model.

- Very standard practice in model-predictive control (MPC).

\(H\) is the length of the history,

\(P\) is the length of the prediction

- In both ACT and Diffusion Policy, predicting sequences of actions seems very important
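One common way to use a sequence-predicting policy at run time is receding-horizon execution: predict \(P\) actions from the last \(H\) observations, execute only the first few, then re-plan. The sketch below shows only that control flow. The `Integrator` environment, the `toy_policy`, and the parameter name `n_exec` are hypothetical stand-ins, and ACT's temporal ensembling of overlapping chunks is not shown.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


class Integrator:
    """Toy environment: the state moves by exactly the commanded action."""

    def __init__(self, x0: float) -> None:
        self.x = x0

    def observe(self) -> float:
        return self.x

    def act(self, u: float) -> None:
        self.x += u


def toy_policy(history: List[float], P: int) -> List[float]:
    """Stand-in for a learned model: plan P actions that halve the error each step."""
    x, plan = history[-1], []
    for _ in range(P):
        u = -0.5 * x
        plan.append(u)
        x += u
    return plan


def run(env: Integrator, policy: Callable[[List[float], int], List[float]],
        H: int, P: int, n_exec: int, total_steps: int) -> Tuple[List[float], int]:
    history: Deque[float] = deque([env.observe()], maxlen=H)  # last H observations
    executed: List[float] = []
    policy_calls = 0
    while len(executed) < total_steps:
        plan = policy(list(history), P)  # predict P future actions
        policy_calls += 1
        for u in plan[:n_exec]:          # execute only the first n_exec of them
            if len(executed) == total_steps:
                break
            env.act(u)
            executed.append(u)
            history.append(env.observe())
    return executed, policy_calls


env = Integrator(x0=1.0)
executed, policy_calls = run(env, toy_policy, H=2, P=8, n_exec=4, total_steps=12)
```

Executing several actions per prediction also amortizes inference cost: here the policy is queried once every four control steps.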

Input encoders

- Image encoder (note: often unfrozen)

- RT-1 tokenizes a history of 6 images by passing images through an ImageNet pretrained EfficientNet-B3 (8 tokens per image)

- ResNet

- Vision Transformer (ViT)

- Proprioception

- standard encodings for joints/poses

- (for multitask, we typically adapt a VLM for the input encoder + transformer backbone)

Do we need new architectures?

Almost certainly, but first I want to understand...

I still don't understand the basics

- Clear limitations in current approaches

- some severe context length limitations

- use of proprioception

- ...

- Domain experts give different answers/explanations to basic questions

- Often the answer is "we didn't try that (yet)"

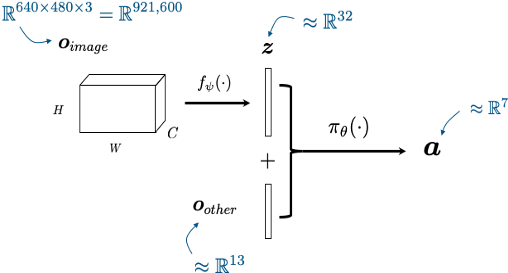

Why I still don't understand the basics

- Have been relying on (small numbers of) hardware rollouts.

- because we don't believe open-loop predictions (~perplexity from LLMs) are predictive of closed-loop,

- and (many) don't believe in sim

- but the experiments are time-consuming and biased

- and the statistical power is very weak

Getting more rigorous

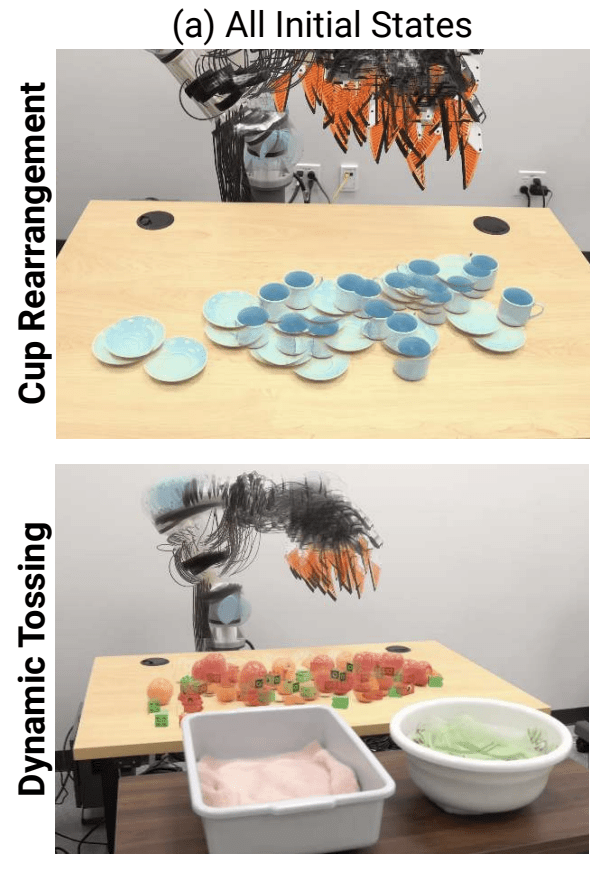

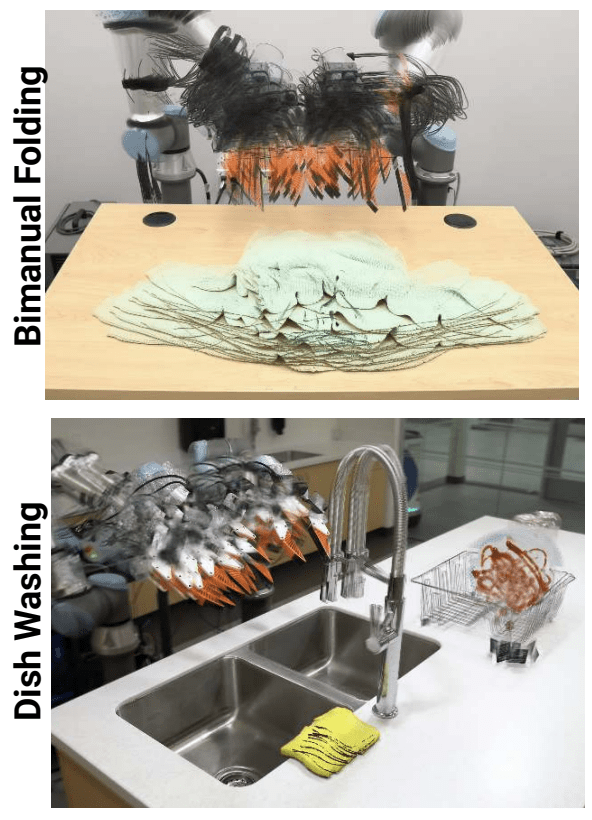

I really like the way Cheng et al reported the initial conditions in the UMI paper.

Rigorous hardware testing

At TRI, we have non-experts run regular "hardware evals"

- Randomized: each rollout randomly selects from multiple policies

- Blind: tester does not know which policy is running

w/ Hadas Kress-Gazit, Naveen Kuppuswamy, ...

Rigorous hardware testing

- can choose to "control" for initial conditions

w/ Hadas Kress-Gazit, Naveen Kuppuswamy, ...

Doing proper statistics

- Given:

- i.i.d. Bernoulli samples with unknown probability of success: \(p\),

- user-specified tolerance: \(\alpha\).

- Maximally efficient confidence bounds, \(\underline{p},\overline{p}\), such that:

- e.g., given two policies, run tests until the lower bound of one is above the upper bound of the other.
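The condition after "such that:" is missing from this text; presumably it is a coverage guarantee of the form \(P(\underline{p} \le p \le \overline{p}) \ge 1 - \alpha\). As a stand-in for the method referred to above, which is not specified here, the sketch below computes the standard Clopper-Pearson (exact binomial) interval for a fixed number of rollouts. Note the substitution: applying a fixed-sample interval repeatedly while "running tests until" the bounds separate inflates the error rate, and a sequential procedure is needed to do that correctly. The helper name `clearly_different` is hypothetical.

```python
from math import comb
from typing import Callable, Tuple


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k + 1))


def _bisect(f: Callable[[float], float], target: float, increasing: bool, iters: int = 60) -> float:
    """Solve f(p) = target on [0, 1] for a function that is monotone in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (f(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def clopper_pearson(successes: int, trials: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Two-sided exact interval with coverage at least 1 - alpha."""
    k, n = successes, trials
    # Lower bound: the p at which seeing >= k successes has probability alpha / 2.
    lower = 0.0 if k == 0 else _bisect(lambda p: 1.0 - binom_cdf(k - 1, n, p), alpha / 2, True)
    # Upper bound: the p at which seeing <= k successes has probability alpha / 2.
    upper = 1.0 if k == n else _bisect(lambda p: binom_cdf(k, n, p), alpha / 2, False)
    return lower, upper


def clearly_different(a: Tuple[float, float], b: Tuple[float, float]) -> bool:
    """True if the two intervals do not overlap."""
    return a[0] > b[1] or b[0] > a[1]


policy_a = clopper_pearson(90, 100)  # 90 successes in 100 rollouts
policy_b = clopper_pearson(70, 100)
zero_of_ten = clopper_pearson(0, 10)
```

With 100 rollouts the interval around a 90% success rate is still roughly twelve percentage points wide, which illustrates why small numbers of hardware rollouts have weak statistical power.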

But "success" is subjective for complex tasks

Example: we asked the robot to make a salad...

simulation-based eval

(Establishing faith in)

- Isaac/PhysX, MuJoCo, Drake, ...

- At TRI, we call out two distinct use cases for sim + BC:

- benchmarking/eval

- data generation (e.g. leveraging privileged info)

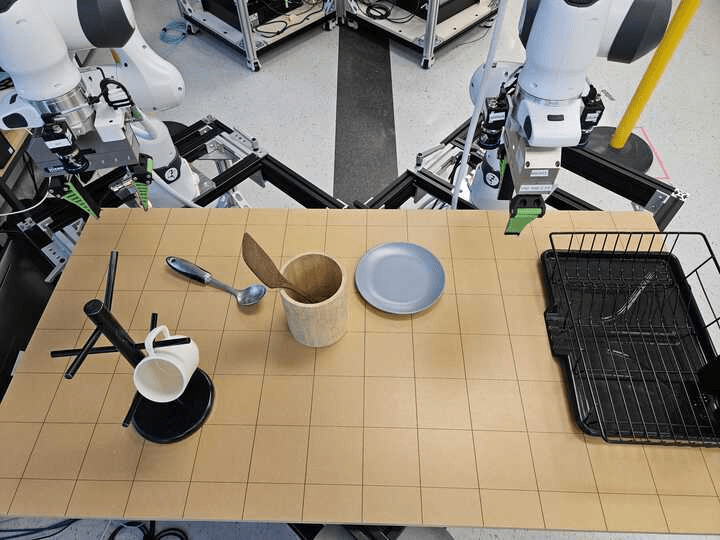

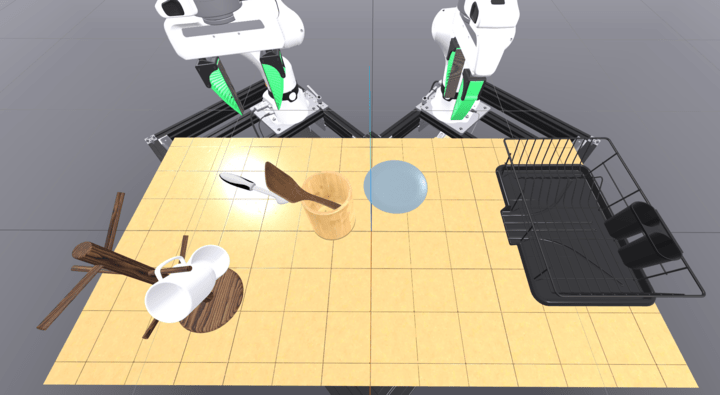

TRI's LBM simulation-based eval

- TRI's LBM division is now focused on multitask

- ~15 skills per scene

- Task is not visually obvious, requires language

- 200 demonstrations per skill

Sample LBM sim demonstrations in lerobot format

python lerobot/scripts/visualize_dataset.py \

--repo-id russtedrake/tri-small-BimanualStackPlatesOnTableFromTable \

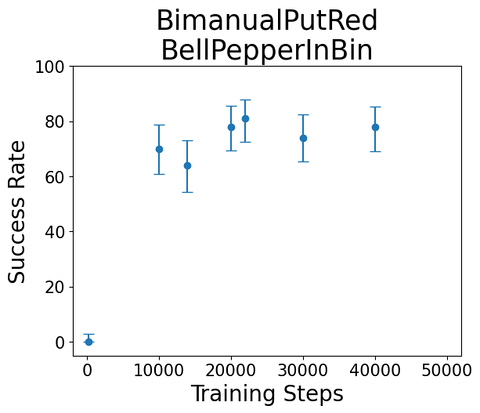

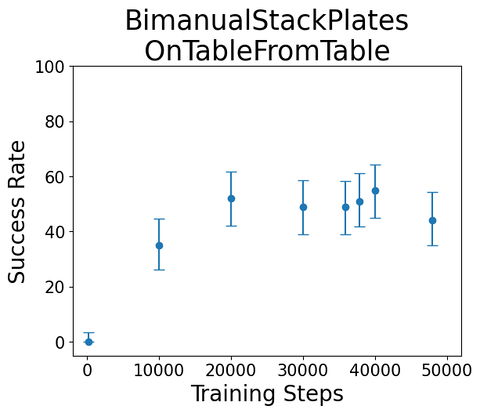

--episode-index 1We trained some single-skill diffusion policy on these skills; here are some example roll-outs/scores

Task:

"Bimanual Put Red Bell Pepper in Bin"

Sample rollout from single-skill diffusion policy, trained on sim teleop

Task:

"Bimanual stack plates on table from table"

Sample rollout from single-skill diffusion policy, trained on sim teleop

Coming soon: Public release of v1.0

lbm_eval

from lbm_eval import evaluate

result = evaluate(
    my_policy, only_run={"put_spatula_in_utensil_crock": [81]}
)

def evaluate(
    policy: Policy,
    only_run: Optional[Dict[ScenarioName, List[ScenarioIndex]]] = None,
    t_max: Optional[float | Dict[ScenarioName, float]] = None,
    output_directory: str = None,
    use_eval_seed=True,
) -> MultiEvaluationResult:
    """Evaluate a manipulation policy.

    Evaluates the policy `policy` on the whole evaluation corpus or,
    alternatively, only those scenarios and instances named in `only_run`.

    Each policy is run for `t_max` time, or less if the policy succeeds early.
    If `t_max` is unspecified, then the default value for each scenario is
    used.

    Any logging or other files generated by the evaluations will be saved
    in `output_directory`.
    """

Dale McConachie, TRI

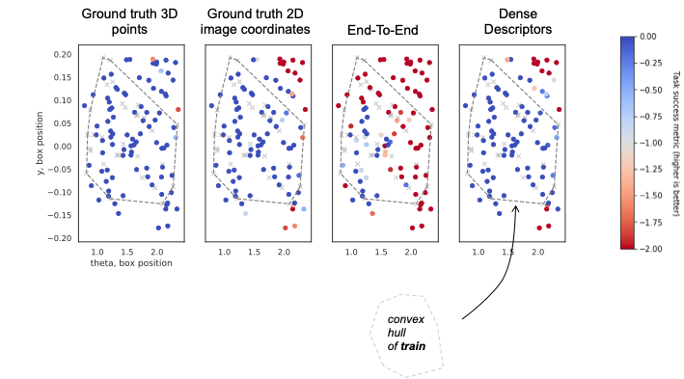

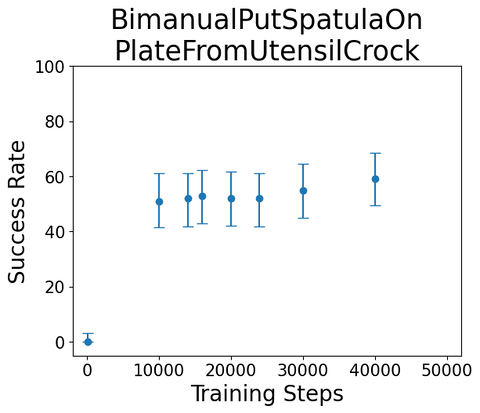

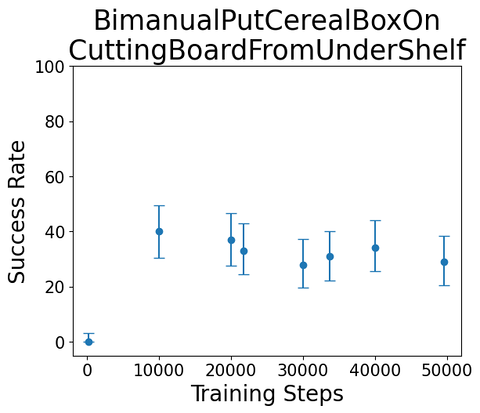

Example evals during training

(100 rollouts each, \(\alpha = 0.05\))

Extra results

to be remembered, if not used

Summary

- BC is working surprisingly well, enabling robots to do tasks that were impossible just a few years ago.

- But often at < 90% success rate.

- A few key lessons / architectures have emerged...

- plus a lot of folk wisdom.

- We (I?) still don’t completely understand the basics.

- Rigorous eval will help.

- There are many open (basic) questions!

Action prediction as representation learning

Thought experiment:

To predict future actions, must learn

dynamics model

task-relevant

demonstrator policy

dynamics

Simulation Eval / Benchmark

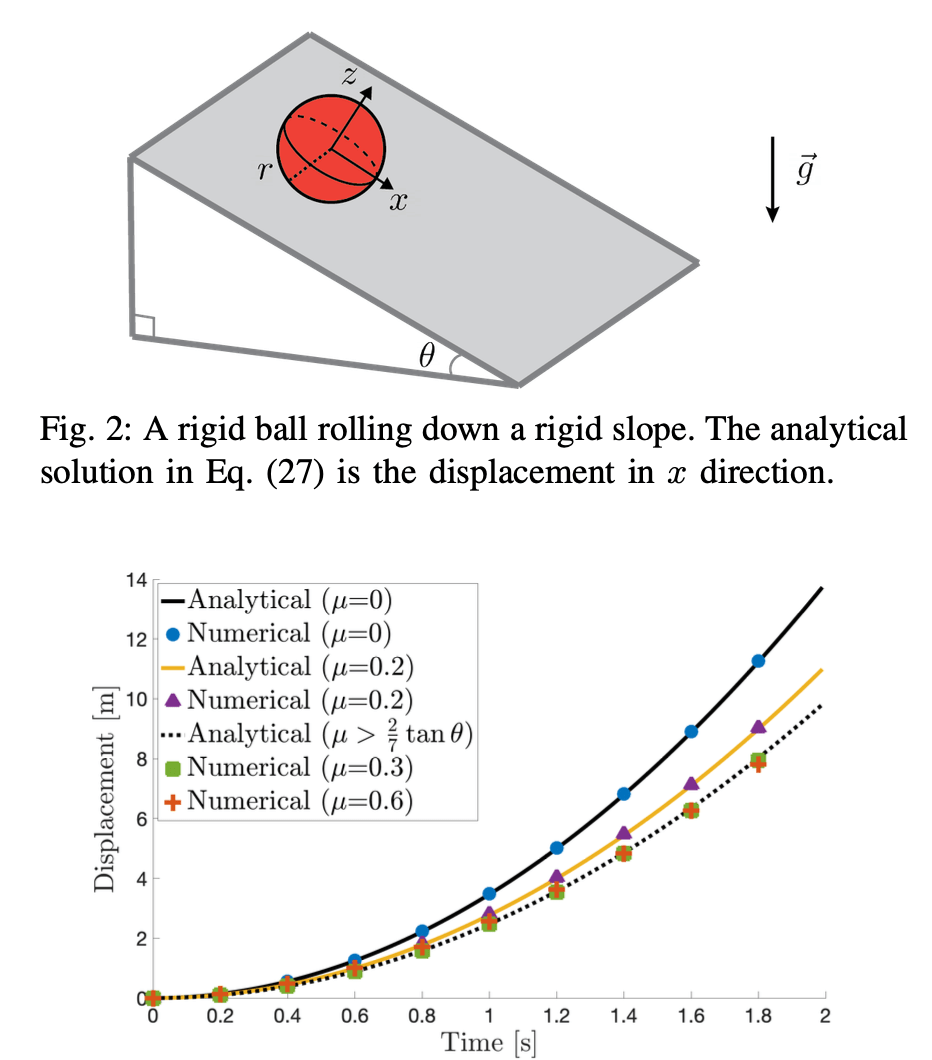

"Hydroelastic contact" as implemented in Drake

"Hydroelastic contact" as implemented in Drake

Material Point Method

w/ Chenfanfu Jiang

Partnership with Amazon Robotics and NVIDIA

NVIDIA is starting to support Drake (and MuJoCo):

- Drake OpenUSD parser

- RTX rendering

- potentially make Drake available in Isaac Sim/Omniverse