Nonlinear Dynamics

MIT 6.832: Underactuated Robotics

Spring 2022, Lecture 2

Follow live at https://slides.com/d/U7aWVic/live

(or later at https://slides.com/russtedrake/spring22-lec02)

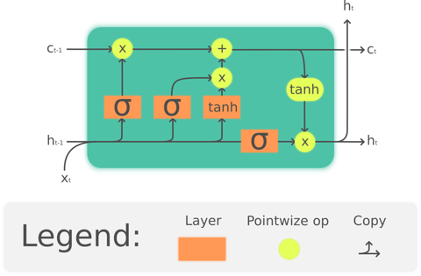

Long Short-term Memory (LSTM)

https://en.wikipedia.org/wiki/Long_short-term_memory

“RNNs using LSTM units partially solve the vanishing gradient problem, because LSTM units allow gradients to also flow unchanged.”
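One way to read this quote, sketched with the standard LSTM cell-state update. The symbols \(c_t\) (cell state), \(f_t\) (forget gate), \(i_t\) (input gate), and \(\tilde{c}_t\) (candidate update) follow the common textbook convention and are assumptions here, not notation from these slides. The derivative is taken along the direct cell-state path only, holding the gates fixed:

\[
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t,
\qquad
\left.\frac{\partial c_t}{\partial c_{t-1}}\right|_{\text{gates fixed}} = \operatorname{diag}(f_t)
\]

When \(f_t\) is close to 1, gradients pass along this path nearly unchanged.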

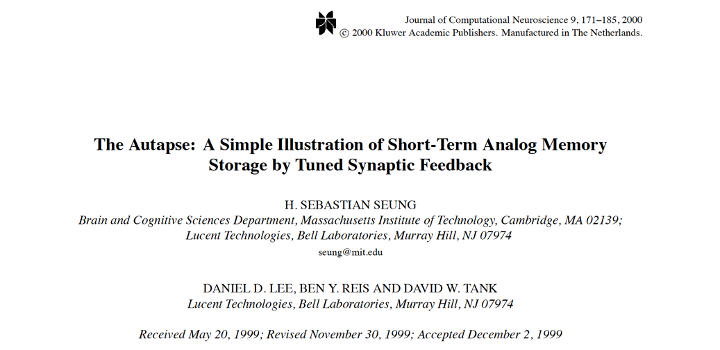

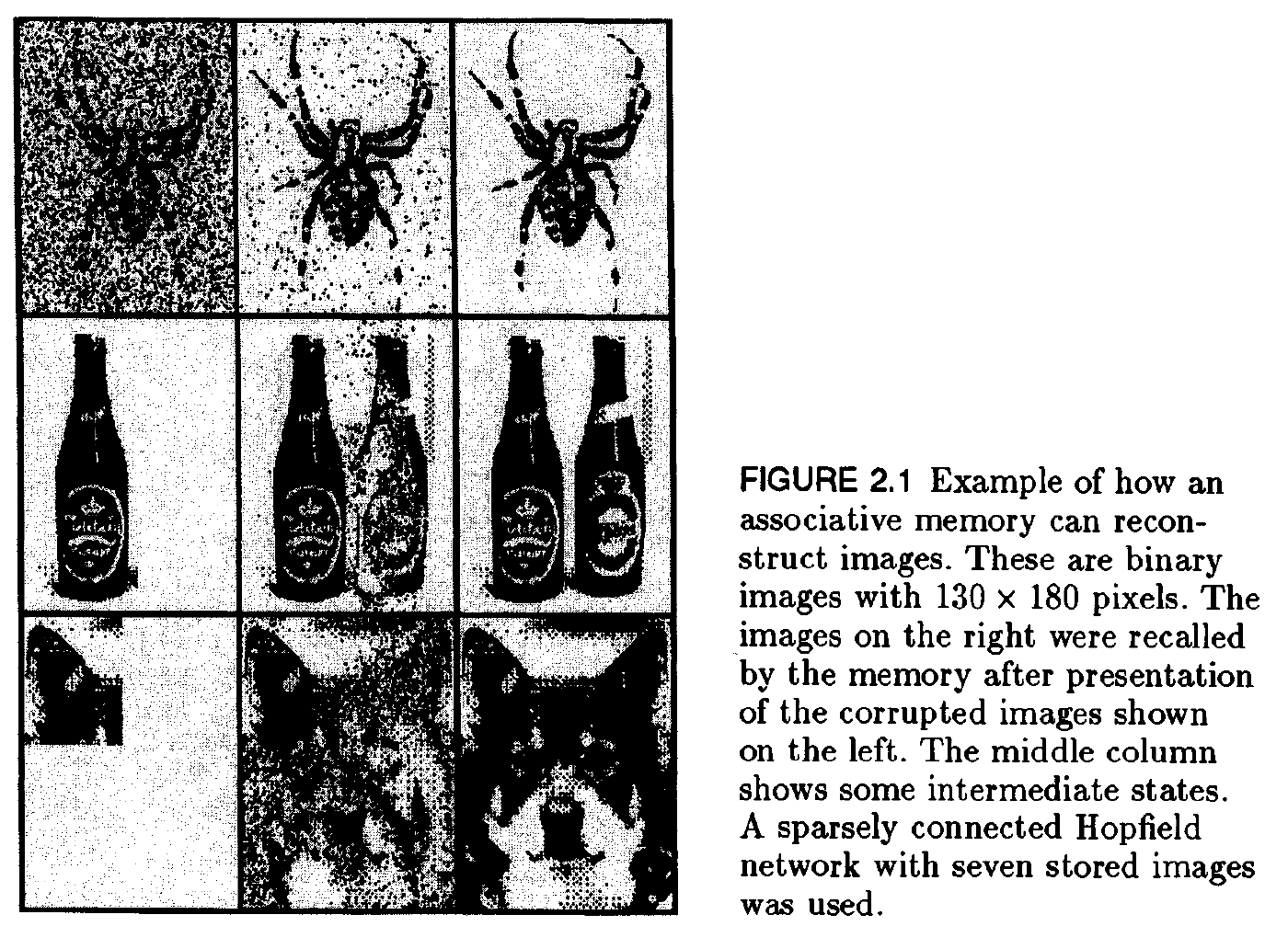

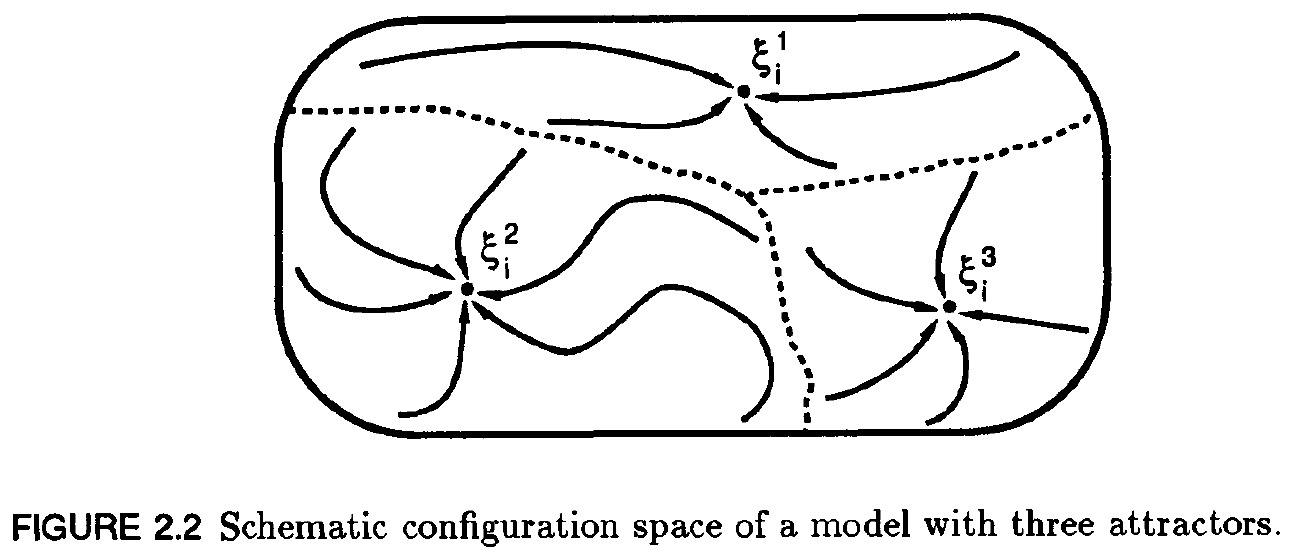

Hopfield Networks as Associative Memory

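\(x_i\) represents the activation (e.g. firing rate) of neuron \(i\).

A "memory" is a fixed point of the dynamics.

The dynamics can fill in the details: starting from a partial or corrupted pattern, the state converges to the stored memory.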

from Hertz, Krogh, Palmer, 1991.

Hopfield Networks as Associative Memory

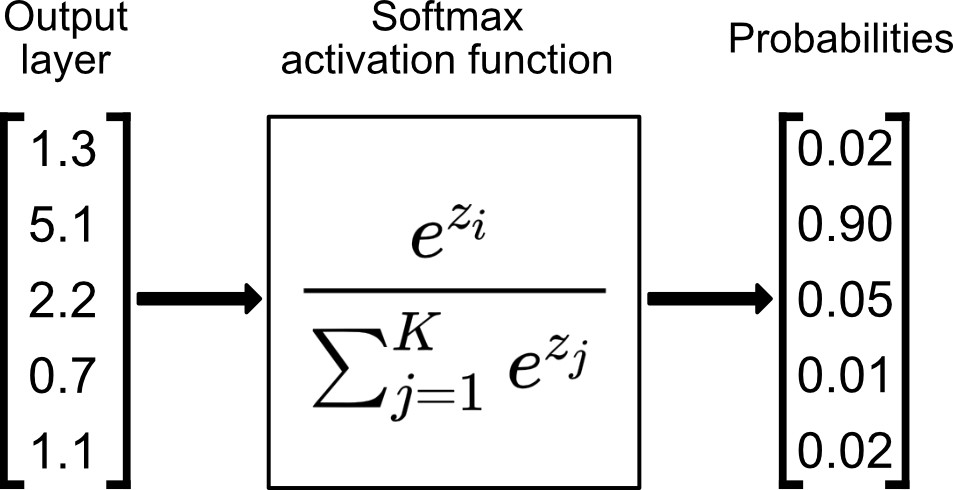

\[\dot{x} = A^T\,\text{softmax}(\beta\, A\, x) - x\]

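- \(Ax\) is the dot product of \(x\) with each row of \(A\) (one row per stored memory).

- \(\beta\) controls how soft/hard the argmax is: larger \(\beta\) makes it harder.

- softmax gives the "probability" of memory \(i\). Call the vector \(p\).

- \(A^T p\) gives the memory (the \(p\)-weighted combination of the rows of \(A\)).

- \(\dot{x} = \text{memory} - x\) has a fixed point at the memory.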
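The steps in the list above can be tried numerically. The following is a minimal sketch, not the lecture's own code: it integrates \(\dot{x} = A^T\,\text{softmax}(\beta A x) - x\) with forward Euler. The three stored patterns in `A`, the value of `beta`, the step size `dt`, and the function names are all illustrative assumptions.

```python
import numpy as np

def softmax(z):
    # Subtract the max for numerical stability; the result is unchanged.
    e = np.exp(z - np.max(z))
    return e / e.sum()

def hopfield_rhs(x, A, beta):
    # p[i] is the "probability" that x matches memory i (row i of A).
    p = softmax(beta * (A @ x))
    # A.T @ p is the p-weighted combination of memories; the dynamics pull x toward it.
    return A.T @ p - x

def simulate(x0, A, beta, dt=0.1, steps=500):
    # Forward Euler integration (an assumed, simple choice of integrator).
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        x = x + dt * hopfield_rhs(x, A, beta)
    return x

# Hypothetical memories: each row of A is one stored pattern.
A = np.array([[ 1.0,  1.0, -1.0, -1.0],
              [-1.0,  1.0,  1.0, -1.0],
              [ 1.0, -1.0,  1.0,  1.0]])

# A partial, noisy cue that most resembles the first memory.
x0 = np.array([0.9, 0.8, -0.2, 0.0])
x_final = simulate(x0, A, beta=4.0)
print(np.round(x_final, 3))
```

With these assumed values the cue has the largest dot product with the first row of `A`, so the state should settle near that row. Lowering `beta` makes the softmax softer, and the fixed point then moves toward a blend of several memories.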