Model Predictive Control

ML in Feedback Sys #21

Prof Sarah Dean

Reminders/etc

- Project midterm update due Friday!

- Scribing feedback to come by next week

-

Upcoming paper presentations starting next week

- RB17 Frank, Wei-Han, Minjae

- DSA+20 Jerry, Wendy, Yueying

- Participation includes attending presentations

- Relevant AI Seminar tomorrow 12:15-1:15 in Gates 122: Brandon Amos, Learning with differentiable and amortized optimization

policy

\(\pi_t:\mathcal S\to\mathcal A\)

observation

\(s_t\)

accumulate

\(\{(s_t, a_t, c_t)\}\)

Safe action in a dynamic world

Goal: select actions \(a_t\) to bring environment to low-cost states

while avoiding unsafe states

action

\(a_{t}\)

\(F\)

\(s\)

Recap: Invariant Sets

- A set \(\mathcal S_\mathrm{inv}\) is invariant under dynamics \(s_{t+1} = F(s_t)\) if for all \( s\in\mathcal S_\mathrm{inv}\), \( F(s)\in\mathcal S_\mathrm{inv}\)

- If \(\mathcal S_\mathrm{inv}\) is invariant for dynamics \(F\), then \(s_0\in \mathcal S_\mathrm{inv} \implies s_t\in\mathcal S_\mathrm{inv}\) for all \(t\).

- Example: sublevel set of Lyapunov function

- \(\{s\mid V(s)\leq c\}\)

Recap: Receding Horizon

time

Do

Plan

Do

Plan

Do

Plan

- For \(t=0,1,\dots\)

- Observe state

- Optimize plan

- Apply first planned action

\(\pi(s_t) = u_0^\star(s_t)\)

$$\min_{u_0,\dots, u_{H-1}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k})$$

\(\text{s.t.}\quad x_0 = s_t,\quad x_{k+1} = F(x_{k}, u_{k})\)

\(x_k\in\mathcal S_\mathrm{safe},\quad u_k\in\mathcal A_\mathrm{safe}\quad~~~\)

Notation: distinguish real states and actions \(s_t\) and \(a_t\) from the planned optimization variables \(x_k\) and \(u_k\).

\([u_0^\star,\dots, u_{H-1}^\star](s_t) = \arg\)

Recap: The MPC Policy

$$\min_{u_0,\dots, u_{H-1}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k})$$

\(\text{s.t.}\quad x_0 = s_t,\quad x_{k+1} = F(x_{k}, u_{k})\)

\(x_k\in\mathcal S_\mathrm{safe},\quad u_k\in\mathcal A_\mathrm{safe}\quad~~~\)

Notation: distinguish real states and actions \(s_t\) and \(a_t\) from the planned optimization variables \(x_k\) and \(u_k\).

Recap: The MPC Policy

\(F\)

\(s\)

\(s_t\)

\(a_t = u_0^\star(s_t)\)

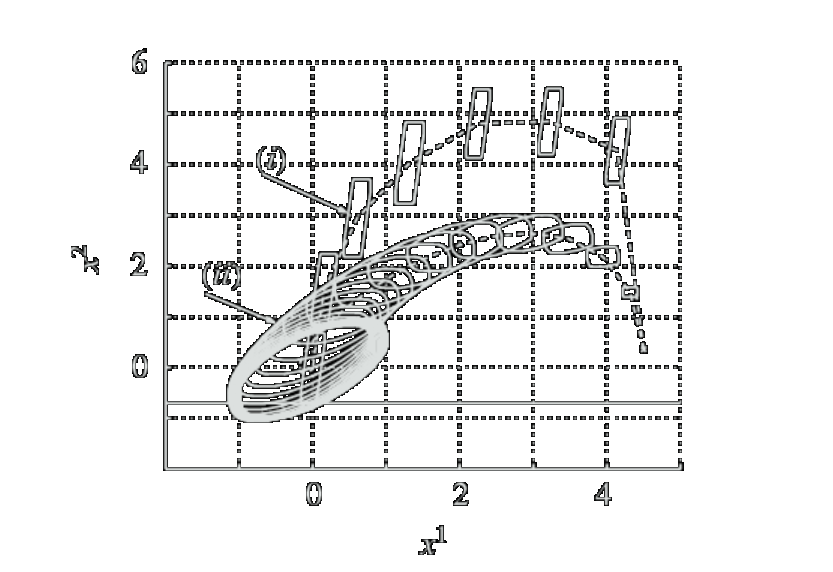

Example: infeasibility

The state is position & velocity \(s=[\theta,\omega]\) with \( s_{t+1} = \begin{bmatrix} 1 & 0.1\\ & 1 \end{bmatrix}s_t + \begin{bmatrix} 0\\ 1 \end{bmatrix}a_t\)

Goal: stay near origin and be energy efficient

- Safety constraint \(|\theta|\leq 1\) and actuation limit \(|a|\leq 0.5\)

- Infeasibility = inability to guarantee safety

- also leads to loss of stability

- States that are initially feasible vs. states that remain feasible

- not different when plan is over \(H=T\)

Infeasibility Problem

- infeasible

- initially feasible

- remain feasible

- Infeasibility = inability to guarantee safety

- also leads to loss of stability

- States that are initially feasible vs. states that remain feasible

- not different when plan is over (\(H=T\))

-

Definition: We call \(\mathcal S_\mathrm{inv}\) a control invariant set for dynamics

\(s_{t+1} = F(s_t, a_t)\) if for all \( s\in\mathcal S_\mathrm{inv}\), there exists an \(a\in\mathcal A_\mathrm{safe}\) such that \( F(s, a)\in\mathcal S_\mathrm{inv}\) -

Definition: The region of attraction for dynamics \(\tilde F(s)\) is the set of initial states \(s_0\) that converge to the origin.

- We consider the closed loop dynamics \(\tilde F(s) = F(s,\pi(s))\)

Infeasibility Problem

$$\min_{u_0,\dots, u_{H-1}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k}) +\textcolor{cyan}{ c_H(x_H)}$$

\(\text{s.t.}\quad x_0 = s,\quad x_{k+1} = F(x_{k}, u_{k})\qquad\qquad\)

\(x_k\in\mathcal S_\mathrm{safe},\quad u_k\in\mathcal A_\mathrm{safe},\quad \textcolor{cyan}{x_H\in\mathcal S_H}\)

- Let \(J(s; u_0,\dots, u_{H-1})\) be the value of the objective for actions \(u_0,\dots, u_{H-1}\)

- Let \(J^\star(s)=J(s; u^\star_0,\dots, u^\star_{H-1})\) be the optimal value.

- Assume that stage cost \(c(s,a)\) is positive definite, i.e. \(c(s,a)>0\) for all \(s,a\neq 0\) and \(c(0,0)=0\).

- Assume that \(0\in\mathcal S_\mathrm{safe}\) and \(0\in\mathcal A_\mathrm{safe}\)

Terminal cost and constraints

- Receding horizon control is short sighted

- Additional terms more closely approximate infinite horizon problem

$$\min_{u_0,\dots, u_{H}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k}) $$

\(\text{s.t.}\quad x_0 = s,\quad x_{k+1} = F(x_{k}, u_{k})\qquad\qquad\)

\(x_k\in\mathcal S_\mathrm{safe},\quad u_k\in\mathcal A_\mathrm{safe},\quad \textcolor{cyan}{x_H=0}\)

- Assume that the origin is a fixed point of the uncontrolled dynamics

- i.e. \(F(0,0)=0\)

- We will prove that

- \(\pi_\mathrm{MPC}\) is recursively feasible

- \(F(s, \pi_\mathrm{MPC}(s))\) is stable within region of attraction

Terminal cost and constraints

- Warm up: consider \(\mathcal S_H = \{0\}\) and \(c_H(0)=0\)

Recursive feasibility: feasible at \(s_t\implies\) feasible at \(s_{t+1}\)

Proof:

- \(s_t\) feasible and solution to optimization problem is \(u^\star_{0}, \dots, u^\star_{H-1}\) with corresponding states \(x^\star_{0}, \dots, x^\star_{H}\)

- After applying \(a_t=u^\star_{0}\), state moves to \(s_{t+1} = F(s_t,a_t)\)

- Notice that \(s_{t+1} = x^\star_1\)

- Claim: \(u^\star_{1}, \dots, u^\star_{H-1}, 0\) is now a feasible solution.

- because \(x_{H}^\star=0\) and \(F(0,0)=0\)

- thus corresponding states \(x^\star_{1}, \dots, x^\star_{H}, 0\) satisfy constraints

Recursive Feasibility

$$\min_{u_0,\dots, u_{H}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k})\qquad\text{s.t.}\quad x_0 = s_t,\quad x_{k+1} = F(x_{k}, u_{k})$$

\(x_k\in\mathcal S_\mathrm{safe},\quad u_k\in\mathcal A_\mathrm{safe},\quad \textcolor{cyan}{x_H=0}\)

Review: Lyapunov Stability

Definition: A Lyapunov function \(V:\mathcal S\to \mathbb R\) for \(F\) is continuous and

- (positive definite) \(V(0)=0\) and \(V(0)>0\) for all \(s\in\mathcal S - \{0\}\)

- (decreasing) \(V(F(s)) - V(s) \leq 0\) for all \(s\in\mathcal S\)

- Optionally, (strict) \(V(F(s)) - V(s) < 0\) for all \(s\in\mathcal S-\{0\}\)

Theorem (1.2, 1.4): Suppose that \(F\) is locally Lipschitz, \(s_{eq}=0\) is a fixed point, and \(V\) is a Lyapunov function for \(F,s_{eq}\). Then, \(s_{eq}=0\) is

- asymptotically stable if \(V\) is strictly decreasing

Proof:

\(J^\star(s)\) is positive definite and strictly decreasing. Therefore, the closed loop dynamics \(F(\cdot, \pi_\mathrm{MPC}(\cdot))\) are asymptotically stable.

- Positive definite:

- if \(s=0\), then optimal actions are \(0\) since \(F(0,0)=0\) and stage cost is positive definite

- if \(s\neq 0\), \(J^\star(s)>0\) since stage cost is positive definite

Stability

- Strictly decreasing: recall \(J^\star (s_t) =\sum_{k=0}^{H-1} c(x^\star_{k}, u^\star_{k}) +c_H(x_H^\star)\)

- \(J^\star(s_{t+1}) \leq\) cost of feasible solution starting at \(x_0=F(s_t, u^\star_{0})\)

- \(=J(s_{t+1}; u^\star_1,\dots, u^\star_{H-1}, 0)=\sum_{k=1}^{H-1} c(x^\star_{k}, u^\star_{k}) + c(x^\star_{H}, 0)+c_H(0)\)

- \(=\sum_{k=0}^{H-1} c(x^\star_{k}, u^\star_{k}) + \cancel{c(x^\star_{H}, 0)}+\cancel{c_H(0)} -c(x^\star_{0}, u^\star_{0}) \)

- \(= J^\star (s) -c(x^\star_{0}, u^\star_{0}) < J^\star (s)\)

- \(J^\star(s_{t+1}) \leq\) cost of feasible solution starting at \(x_0=F(s_t, u^\star_{0})\)

$$\min_{u_0,\dots, u_{H}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k}) +\textcolor{cyan}{ c_H(x_H)}$$

\(\text{s.t.}\quad x_0 = s,\quad x_{k+1} = F(x_{k}, u_{k})\qquad\qquad\)

\(x_k\in\mathcal S_\mathrm{safe},\quad u_k\in\mathcal A_\mathrm{safe},\quad \textcolor{cyan}{x_H\in\mathcal S_H}\)

General terminal cost and constraints

Assumptions:

- The origin is uncontrolled fixed pointed \(F(0,0)=0\)

- The costs are positive definite and constraints contain \(0\)

- The terminal set is contained in safe set and is control invariant

- The terminal cost satisfies $$ c_H(s_{t+1}) - c_H(s_t) \leq -c(s_t, u) $$ for some \(u\) such that \(s_{t+1} = F(s_{t}, u)\in\mathcal S_H\)

Recursive feasibility: feasible at \(s_t\implies\) feasible at \(s_{t+1}\)

Proof:

- \(s_t\) feasible and solution to optimization problem is \(u^\star_{0}, \dots, u^\star_{H-1}\) with corresponding states \(x^\star_{0}, \dots, x^\star_{H}\)

- After applying \(a_t=u^\star_{0}\), state moves to \(s_{t+1} = F(s_t,a_t)\)

- Notice that \(s_{t+1} = x^\star_1\)

- Claim: there exists a \(u\) such that \(u^\star_{1}, \dots, u^\star_{H-1}, u\) is a feasible solution.

- because \(x_{H}^\star\in\mathcal S_H\) and \(\mathcal S_H\) is control invariant

- thus corresponding states \(x^\star_{1}, \dots, x^\star_{H}, F(x^\star_{H}, u)\) satisfy constraints

Recursive Feasibility

Proof:

\(J^\star(s)\) is positive definite and strictly decreasing. Therefore, the closed loop dynamics \(F(\cdot, \pi_\mathrm{MPC}(\cdot))\) are asymptotically stable.

Stability

- Positive definite: same argument as before

- Strictly decreasing: recall \(J^\star (s_t) =\sum_{k=0}^{H-1} c(x^\star_{k}, u^\star_{k}) +c_H(x_H^\star)\)

- \(J^\star(s_{t+1}) \leq\) cost of feasible solution starting at \(x_0=F(s_t, u^\star_{0})\)

- \(=J(s_{t+1}; u^\star_1,\dots, u^\star_{H-1}, u)\)

- \(=\sum_{k=1}^{H-1} c(x^\star_{k}, u^\star_{k}) + c(x^\star_{H}, u)+c_H(F(x^\star_{H}, u))\)

- \(=\sum_{k=0}^{H-1} c(x^\star_{k}, u^\star_{k})+c_H(x^\star_H)+ c(x^\star_{H}, u)+c_H(F(x^\star_{H}, u))-c_H(x^\star_H) -c(x^\star_{0}, u^\star_{0}) \)

- \(\leq J^\star (s) +c(x^\star_{H}, u) - c(x^\star_{H}, u) -c(x^\star_{0}, u^\star_{0}) < J^\star (s)\)

- \(J^\star(s_{t+1}) \leq\) cost of feasible solution starting at \(x_0=F(s_t, u^\star_{0})\)

Terminal cost and constraints for LQR

Based on unconstrained LQR policy where \(P=\mathrm{DARE}(A,B,Q,R)\) $$ K=-(B^\top PB+R)^{-1}B^\top P$$

- Terminal cost as \(c_H(s) = s^\top P s\)

- Terminal set is any invariant set for closed loop $$s\in\mathcal S_H\implies (A+BK)s\in\mathcal S_H$$ which also guarantees safety: $$\mathcal S_H\subseteq \mathcal S_\mathrm{safe},\quad Ks\in\mathcal A_\mathrm{safe}\quad\forall~~s\in\mathcal S_H$$

- ex: sublevel set of \(s^\top P s\)

Constrained LQR Problem

$$ \min ~~\lim_{T\to\infty}\frac{1}{T}\sum_{t=0}^{T} s_t^\top Qs_t+ a_t^\top Ra_t\quad\\ \text{s.t}\quad s_{t+1} = A s_t+ Ba_t \\ G_s s_t\leq b_s,G_a a_t\leq b_a$$

MPC Policy

$$ \min ~~\sum_{k=0}^{H-1} x_k^\top Qx_k + u_k^\top Ru_k +x_H^\top Px_H\quad \\ \text{s.t}\quad x_0=s,~~ x_{k+1} = A x_k+ Bu_k\\G_s x_k\leq b_s,G_a u_k\leq b_a ,x_H\in\mathcal S_H$$

Terminal cost and constraints for LQR

This satisfies the assumptions:

- The origin is uncontrolled fixed pointed \(F(0,0)=0\)

- ✓ \(A\cdot 0+B\cdot 0=0\)

- The costs are positive definite and constraints contain \(0\)

- ✓ \(Q,R,P\) are psd, assume \(b_s,b_a\geq 0\)

- The terminal set is contained in safe set and is control invariant

- ✓ by construction, have \(u=Ks\) guarantees invariance

- The terminal cost satisfies \(c_H(s_{t+1}) - c_H(s_t) \leq -c(s_t, u) \) for some \(u\) such that \(s_{t+1} = F(s_{t}, u)\in\mathcal S_H\)

- Exercise: use the form of \(K\) and the DARE to show that $$((A+BK)s)^\top P(A+BK)s - s^\top Ps = -s^\top Q s$$

- Recall the Bellman Optimality Equation:

- \( \pi^\star(s) = \arg\min_{a\in\mathcal A} c(s, a)+J^\star (F(s,a))\)

- For LQR, this means that

- \(\pi^\star(s) = \arg\min_a s^\top Q s + a^\top R a + (As+Ba)^\top P (As+Ba)\)

- \(\pi^\star(s) = \arg\min_u x_0^\top Q x_0 + u^\top R u + x_1^\top P x_1~~\text{s.t.} ~~x_0=s,~~x_1=Ax_0+Bu\)

-

This is MPC with \(H=1\) and correct terminal cost!

Equivalence for unconstrained

Constrained LQR Problem

$$ \min ~~\lim_{T\to\infty}\frac{1}{T}\sum_{t=0}^{T} s_t^\top Qs_t+ a_t^\top Ra_t\quad\\ \text{s.t}\quad s_{t+1} = A s_t+ Ba_t $$

MPC Policy

$$ \min ~~\sum_{k=0}^{H-1} x_k^\top Qx_k + u_k^\top Ru_k +x_H^\top Px_H\quad \\ \text{s.t}\quad x_0=s,~~ x_{k+1} = A x_k+ Bu_k$$

$$\min_{u_0,\dots, u_{H-1}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k}) + c_H(x_H)$$

\(\text{s.t.}\quad x_0 = s_t,\quad x_{k+1} = F(x_{k}, u_{k})\)

\(x_k\in\mathcal S_\mathrm{safe},~ u_k\in\mathcal A_\mathrm{safe},~x_H\in\mathcal S_H\)

- Terminal constraint not often used (instead: long horizon)

- Soft constraints

- \(x_k+\delta \in\mathcal S_\mathrm{safe}\) and add penalty \(C\|\delta\|_2^2\) to cost

- Accuracy of costs/dynamics vs. ease of optimization

- Sampling based optimization (cross entropy method)

MPC in practice

\(F\)

\(s\)

\(s_t\)

\(a_t = u_0^\star(s_t)\)

$$\min_{u_0,\dots, u_{H-1}} \quad\sum_{k=0}^{H-1} c(x_{k}, u_{k}) + c_H(x_H)$$

\(\text{s.t.}\quad x_0 = s_t,\quad x_{k+1} = F(x_{k}, u_{k})\)

\(x_k\in\mathcal S_\mathrm{safe},~ u_k\in\mathcal A_\mathrm{safe},~x_H\in\mathcal S_H\)

- Disturbances:

- optimize expectation, high probability, or worst-case

- Unknown dynamics/costs

- robust to uncertainty (worst case)

- learn from data

MPC extensions

\(F\)

\(s\)

\(s_t\)

\(a_t = u_0^\star(s_t)\)

Recap

- Recap: MPC

- Feasibility problems

- Terminal sets and costs

- Proof of feasibility and stability

References: Predictive Control by Borrelli, Bemporad, Morari

Reminders

- Project update due Friday

- Upcoming paper presentations:

-

[RB17] Learning model predictive control for iterative tasks

-

[DSA+20] Fairness is not static

-

[FLD21] Algorithmic fairness and the situated dynamics of justice

-