Societal Implications of Technology

Sarah Dean

CS 6006, October 2023

Societal Implications of Tech

- This fall: CS 7340, theme: tech & labor

- Last fall: informal reading group, theme: race & technology

- first run in 2020 (without me)

With Haym Hirsch and Anil Damle

Bias: prejudice, usually in a way considered to be unfair

(mathematical) systematic distortion of a statistical result

Prejudice: preconceived opinion

(legal) harm or injury resulting from some action or judgment

Why hold a CS seminar on this?

- We are trained in CS/math/engineering

- Other disciplines have more expertise on these issues

- CS research calls for understanding societal positioning

- I came to this view due to my research on bias (in ML)

1. Miscalibration in Recommendation

Designing recommendations which optimize engagement leads to over-recommending the most prevalent types (Steck, 2018)

Example: a user's interests are rom-com 80% of the time and horror 20% of the time, and the recommender optimizes for a probable click.

- The chance of a click depends on whether we recommend a rom-com or horror movie:

$$\mathbb P(\mathsf{click})= \mathbb P(\mathsf{click}~\text{and}~\mathsf{romcom}) + \mathbb P(\mathsf{click}~\text{and}~\mathsf{horror}) = 0.8\times \mathbb P(\mathsf{romcom})+0.2\times \underbrace{\mathbb P(\mathsf{horror})}_{1- \mathbb P(\mathsf{romcom})}$$


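Writing \(x=\mathbb P(\mathsf{romcom})\), the recommender solves

$$\max_{0\leq x\leq 1}~0.8\times x + 0.2 \times (1-x)$$

The objective equals \(0.2 + 0.6x\), which increases in \(x\), so the optimum is \(x = 1\): recommend rom-com 100% of the time.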
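The toy model above can be checked numerically. This is a minimal sketch, assuming the two-genre user and the click probabilities 0.8 and 0.2 from the slide; the names `click_prob`, `kl_divergence`, and `user_taste` are hypothetical. Steck (2018) measures miscalibration with a KL divergence between the user's genre mix and the recommended mix; the `eps` clamp here is a simplification, not the paper's exact smoothing.

```python
import math

def click_prob(x, p_romcom=0.8):
    """P(click) in the toy model when a rom-com is recommended with probability x."""
    return p_romcom * x + (1 - p_romcom) * (1 - x)

def kl_divergence(p, q, eps=1e-9):
    """KL(p || q) between the user's genre mix p and the recommended mix q.
    Clamping q at eps (a simplification) keeps the result finite when a genre is never shown."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)

user_taste = [0.8, 0.2]  # [rom-com, horror]

# Grid search over x in [0, 1]; the objective is 0.2 + 0.6x, so it peaks at x = 1.
grid = [i / 100 for i in range(101)]
x_star = max(grid, key=click_prob)
engagement_optimal = [x_star, 1 - x_star]

# A calibrated recommender mirrors the user's own genre mix instead.
calibrated = list(user_taste)

print(x_star, click_prob(x_star), kl_divergence(user_taste, engagement_optimal))
print(click_prob(calibrated[0]), kl_divergence(user_taste, calibrated))
```

In this toy model the engagement-optimal policy earns a click probability of 0.8 but never shows horror (large KL divergence), while the calibrated policy earns 0.68 with zero divergence: calibration trades some expected engagement for matching the user's interests.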

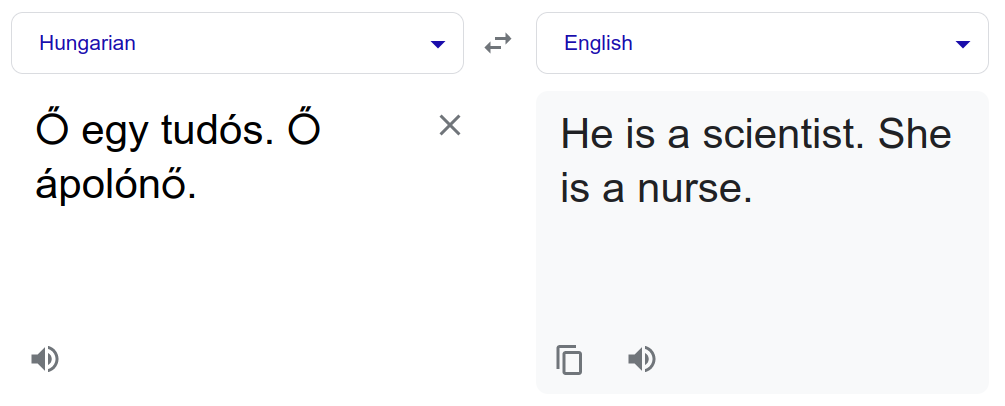

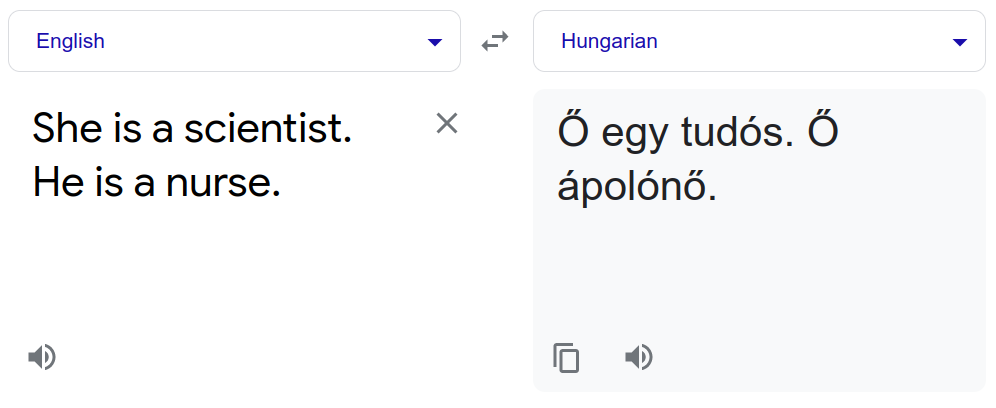

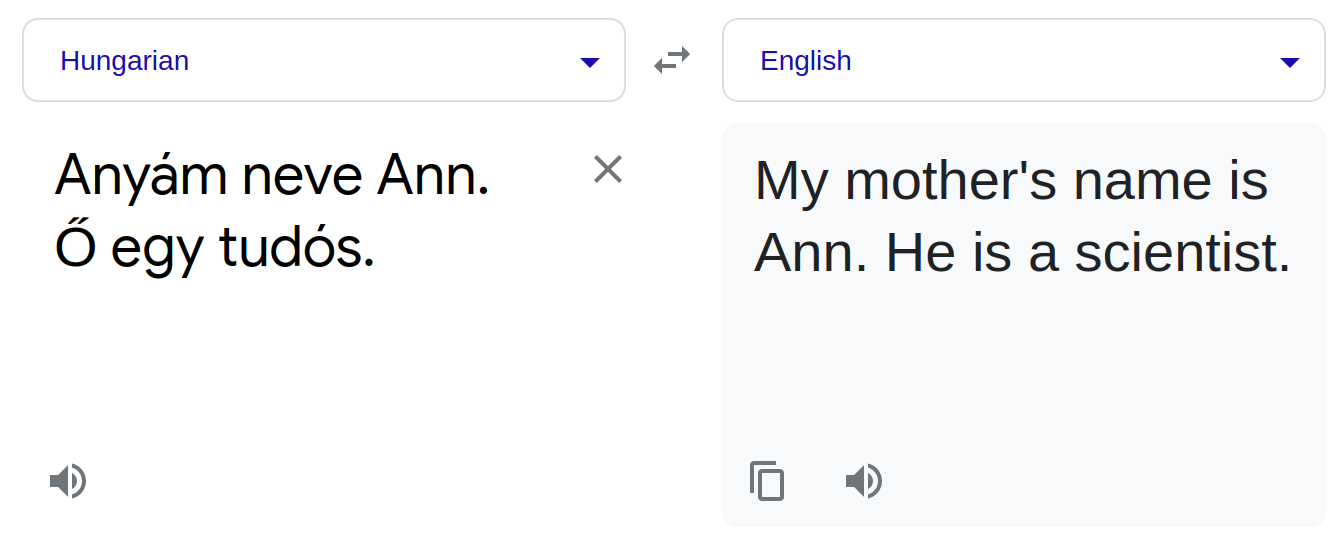

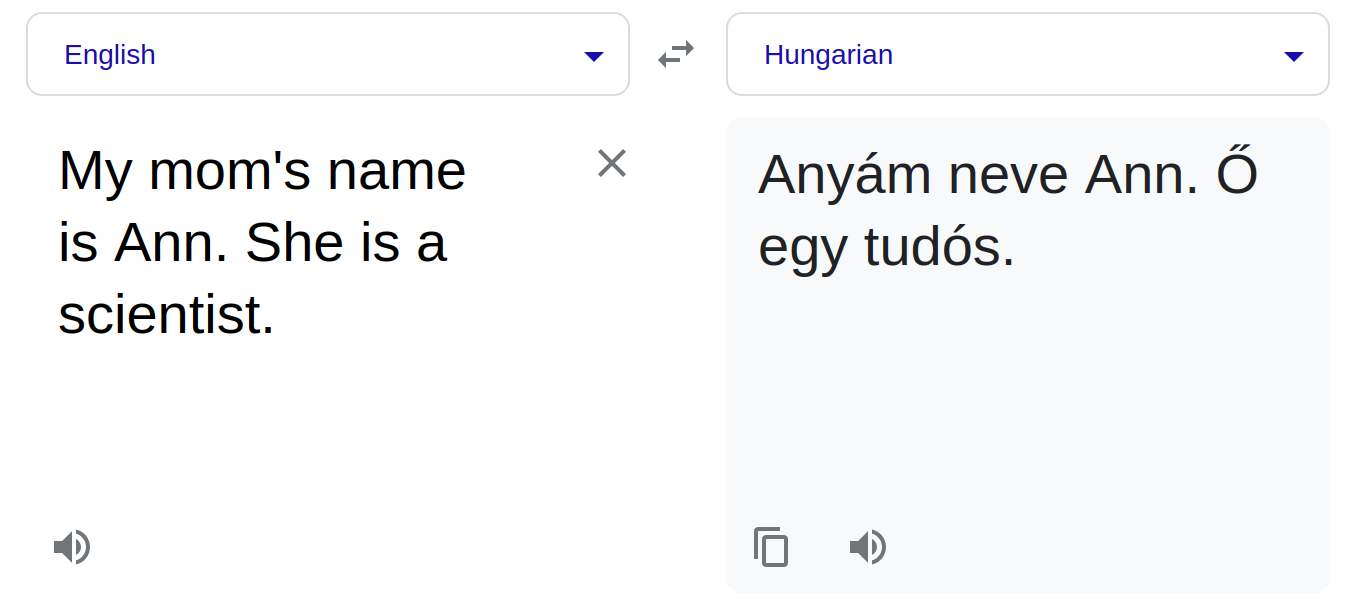

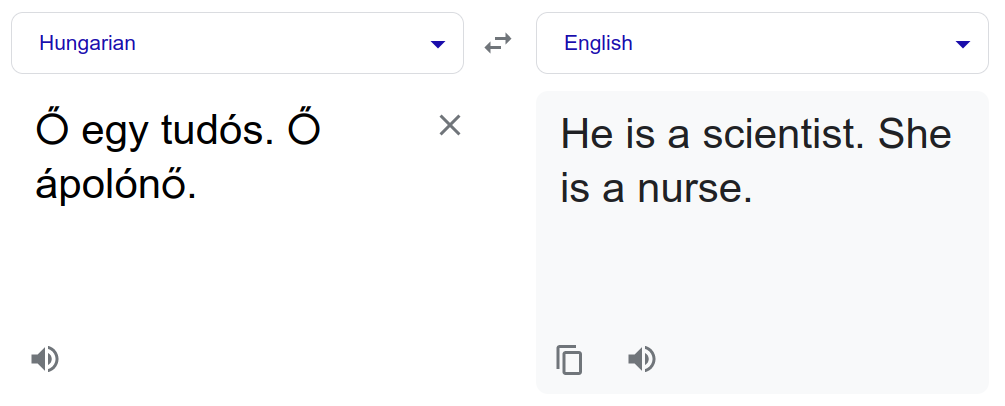

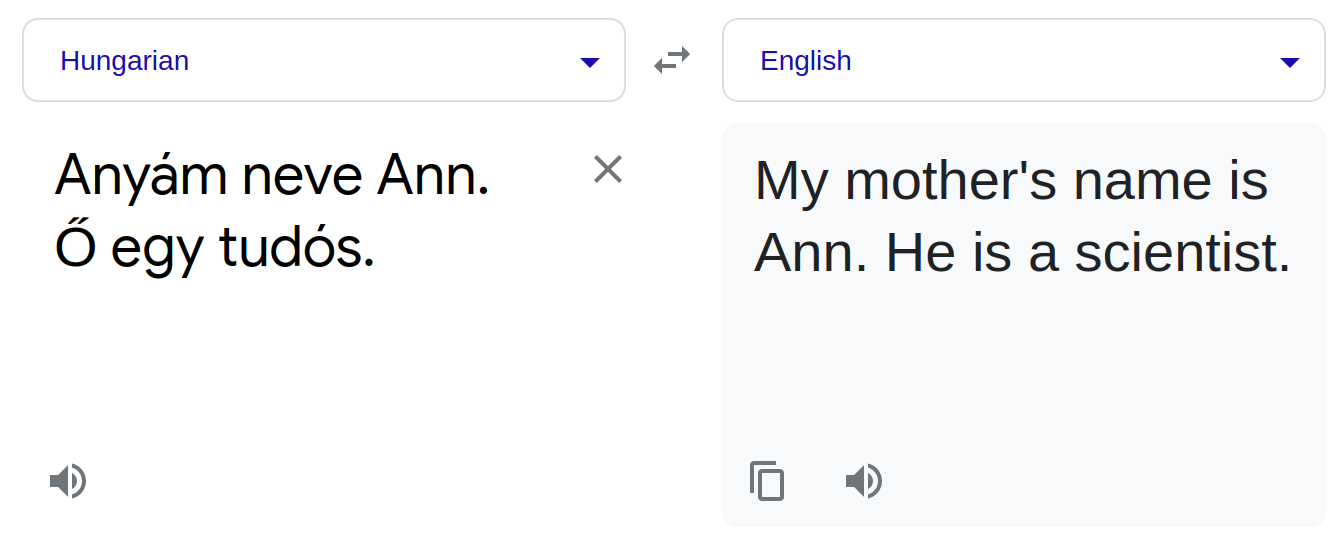

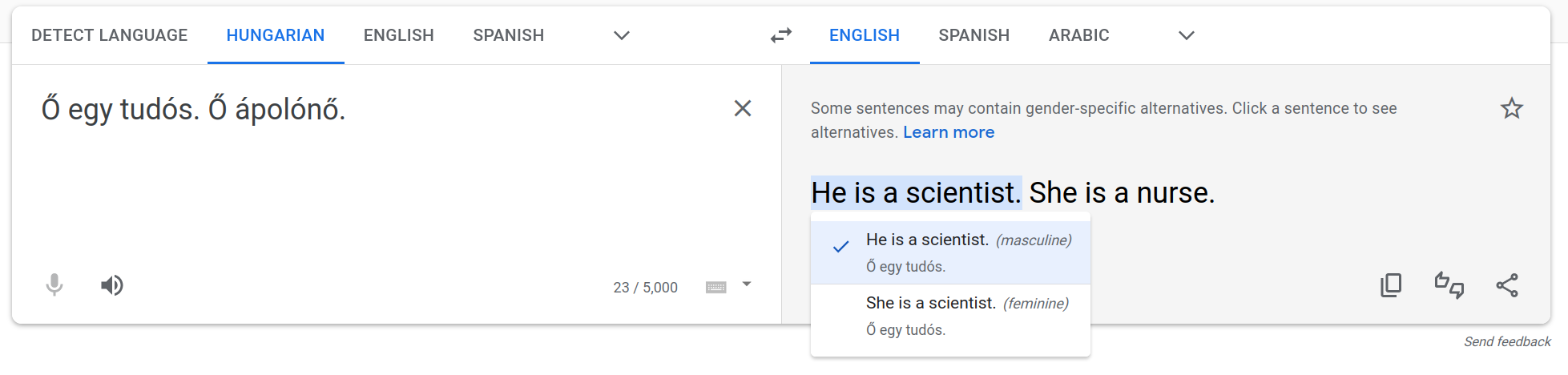

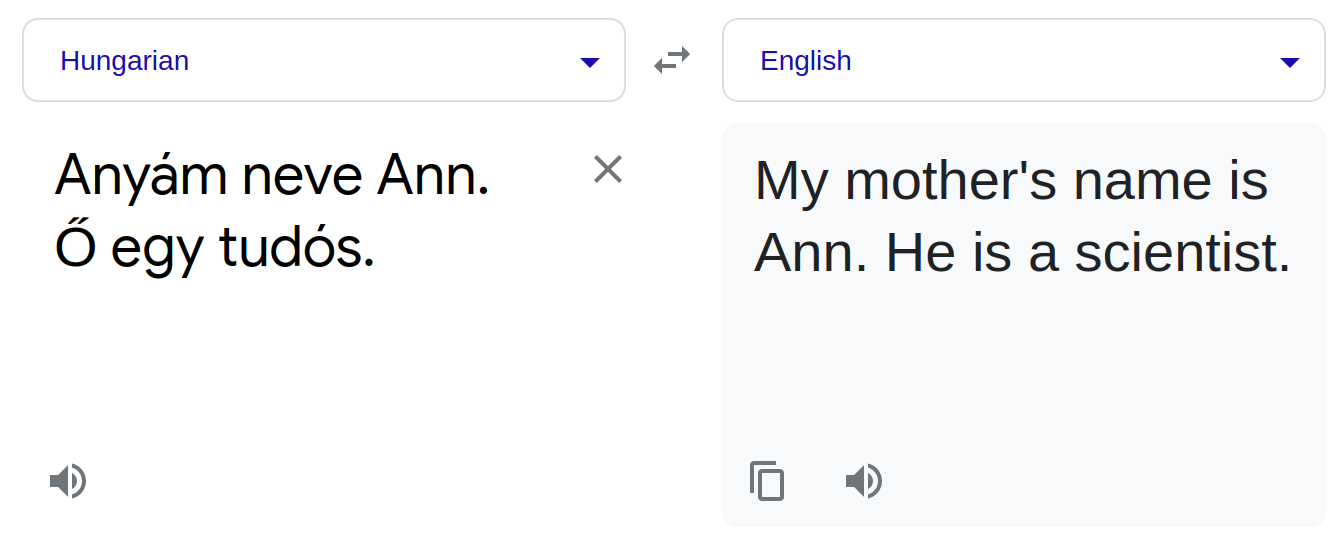

2. Gender Bias in Translation

Data-driven machine translation perpetuates gender bias.


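Machine translation works by choosing the English sentence that maximizes its probability given a Hungarian sentence. The Hungarian pronoun ő is gender-neutral, so on its own it can be translated as either "she" or "he":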

- (A) She \(\leftrightarrow\) Ő

- (B) She is a scientist \(\leftrightarrow\) Ő egy tudós

- (C) My mother's name is Ann. She is a scientist. \(\leftrightarrow\) Anyám neve Ann. Ő egy tudós.

(Figure: examples (A), (B), and (C) placed on a probability scale from 0 to 1.)
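Maximizing probability has the same structure as the click example: an argmax turns a 60/40 belief into a 100/0 output. This is a minimal sketch using invented probabilities for examples (B) and (C); `hypothetical_model`, `argmax_translate`, and `translate_with_alternatives` (with its 0.35 threshold) are hypothetical, not any real system's API. The second function illustrates one UI-style remedy: surfacing every sufficiently likely translation instead of silently picking one.

```python
# Hypothetical conditional probabilities P(English | Hungarian); numbers are invented.
hypothetical_model = {
    "Ő egy tudós.": {
        "He is a scientist.": 0.6,
        "She is a scientist.": 0.4,
    },
    "Anyám neve Ann. Ő egy tudós.": {
        "My mother's name is Ann. He is a scientist.": 0.3,
        "My mother's name is Ann. She is a scientist.": 0.7,
    },
}

def argmax_translate(src):
    """Return only the single most probable translation (mode-seeking decoding)."""
    candidates = hypothetical_model[src]
    return max(candidates, key=candidates.get)

def translate_with_alternatives(src, threshold=0.35):
    """Return every candidate whose probability clears the (hypothetical) threshold,
    most probable first, so an interface can show the ambiguity to the user."""
    candidates = hypothetical_model[src]
    kept = [t for t, p in candidates.items() if p >= threshold]
    return sorted(kept, key=candidates.get, reverse=True)

print(argmax_translate("Ő egy tudós."))
print(translate_with_alternatives("Ő egy tudós."))
print(translate_with_alternatives("Anyám neve Ann. Ő egy tudós."))
```

With context (C) the uncertainty largely resolves and a single translation survives; for the ambiguous sentence (B), argmax always answers "He", whereas the alternative-listing version returns both.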

Naive optimization under uncertainty leads to miscalibration and bias

- A broad set of possible ameliorating approaches: UI, uncertainty reduction

3. Gender Bias in Resume Screening

Data-driven resume screening algorithms repeat pre-existing bias.

(Diagram: a screening model trained on past records labels each resume ✅ or ❌; ✅ resumes are sent to a recruiter.)

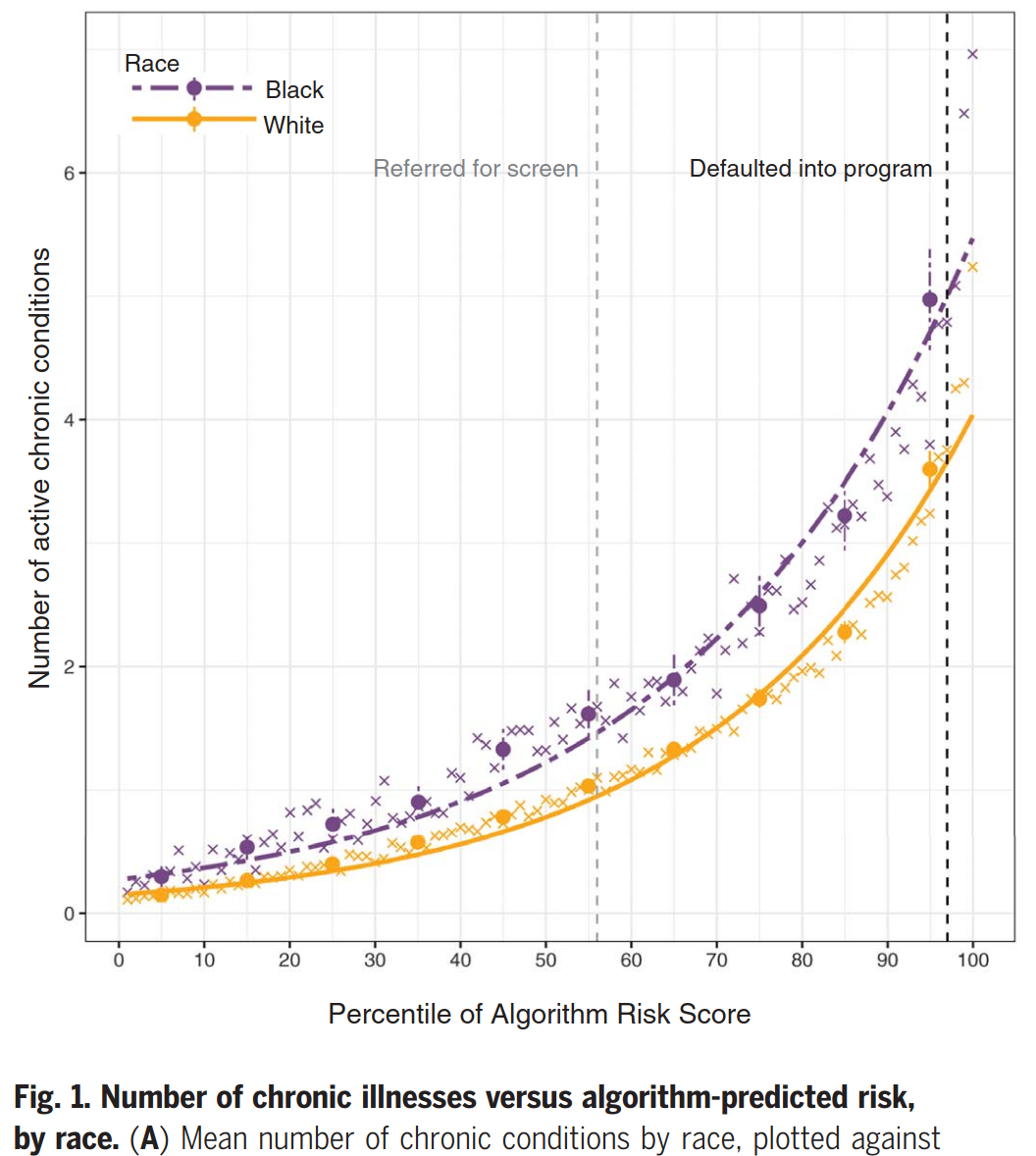
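A screener trained to imitate past decisions inherits whatever bias those decisions contained. This is a minimal sketch, assuming a hypothetical screener that simply estimates the historical hire rate for each (gender, qualified) pair; the records, `fit_screener`, and `screen` are invented for illustration. Real screeners rarely see gender directly, but correlated features can play the same role.

```python
from collections import Counter

# Hypothetical historical records: (gender, qualified, hired). Counts are invented:
# equally qualified women were hired less often in the past.
history = (
    [("man", True, True)] * 9 + [("man", True, False)] * 1
    + [("woman", True, True)] * 4 + [("woman", True, False)] * 6
    + [("man", False, True)] * 1 + [("man", False, False)] * 9
    + [("woman", False, True)] * 1 + [("woman", False, False)] * 9
)

def fit_screener(records):
    """'Train' by estimating P(hired | gender, qualified) from past decisions."""
    hired, total = Counter(), Counter()
    for gender, qualified, was_hired in records:
        total[(gender, qualified)] += 1
        hired[(gender, qualified)] += was_hired  # True counts as 1
    return {key: hired[key] / total[key] for key in total}

def screen(model, gender, qualified, threshold=0.5):
    """Send to recruiter (True) if the predicted hire rate clears the threshold."""
    return model[(gender, qualified)] >= threshold

model = fit_screener(history)
print(screen(model, "man", True), screen(model, "woman", True))  # True False
```

Thresholding also amplifies the pattern: a 0.9 vs 0.4 historical hire rate for qualified candidates becomes an all-or-nothing ✅ vs ❌.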

4. Racial Bias in Healthcare

Risk algorithms used in healthcare under-predict the needs of Black patients compared with White patients (Obermeyer et al., 2019).

(Diagram: medical records are mapped to a risk score, shown alongside one-, two-, and three-dollar-sign icons.)
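Obermeyer et al. (2019) trace the gap to the label the algorithm predicts: future health-care cost, used as a proxy for health need. Because less is spent on Black patients at the same level of need, a cost-trained score ranks them as lower risk. This is a minimal sketch with invented numbers: the 20-patient population, `SPEND_PER_NEED`, and the top-6 enrollment rule are hypothetical, and the "model" is idealized as predicting cost perfectly.

```python
# Hypothetical population: both groups have identical true needs 1..10.
patients = [("White", need) for need in range(1, 11)] + [("Black", need) for need in range(1, 11)]

# Hypothetical spending per unit of need; the lower Black figure stands in for
# unequal access to care. Both numbers are invented for illustration.
SPEND_PER_NEED = {"White": 1000, "Black": 700}

def cost_score(group, need):
    """Idealized cost-trained risk score: exactly the patient's future cost."""
    return SPEND_PER_NEED[group] * need

def need_score(group, need):
    """Oracle score that ranks by true need (not observable in practice)."""
    return need

def enroll_top_k(score, k=6):
    """Enroll the k highest-scoring patients in an extra-care program."""
    return sorted(patients, key=lambda p: score(*p), reverse=True)[:k]

def count_group(enrolled, group):
    return sum(1 for g, _ in enrolled if g == group)

by_need = enroll_top_k(need_score)
by_cost = enroll_top_k(cost_score)
print(count_group(by_need, "Black"), count_group(by_cost, "Black"))  # 3 2
```

Even with a perfect cost predictor, the proxy label shifts enrollment away from Black patients, and the Black patients who are enrolled are sicker (need 9 or more) than the least-sick White patient enrolled (need 7).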

Sources of Bias

- Naive optimization under uncertainty leads to miscalibration and bias

- Pattern recognition (i.e., machine learning) replicates and amplifies bias in the data

(Diagram: data → ✅/❌ decisions.)

What does it mean to build good technology in an imperfect world?

“Technologies are developed and used within a particular social, economic, and political context. They arise out of a social structure, they are grafted on to it, and they may reinforce it or destroy it, often in ways that are neither foreseen nor foreseeable.”

Ursula Franklin, 1989

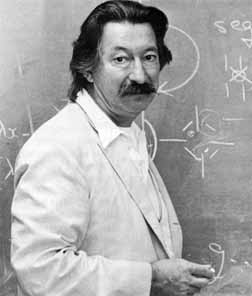

I think the computer has from the beginning been a fundamentally conservative force. It has made possible the saving of institutions pretty much as they were, which otherwise might have had to be changed. [...] If it had not been for the computer [...] it might have been necessary to introduce a social invention, as opposed to the technical invention.

Joseph Weizenbaum, 1985

Words of Wisdom

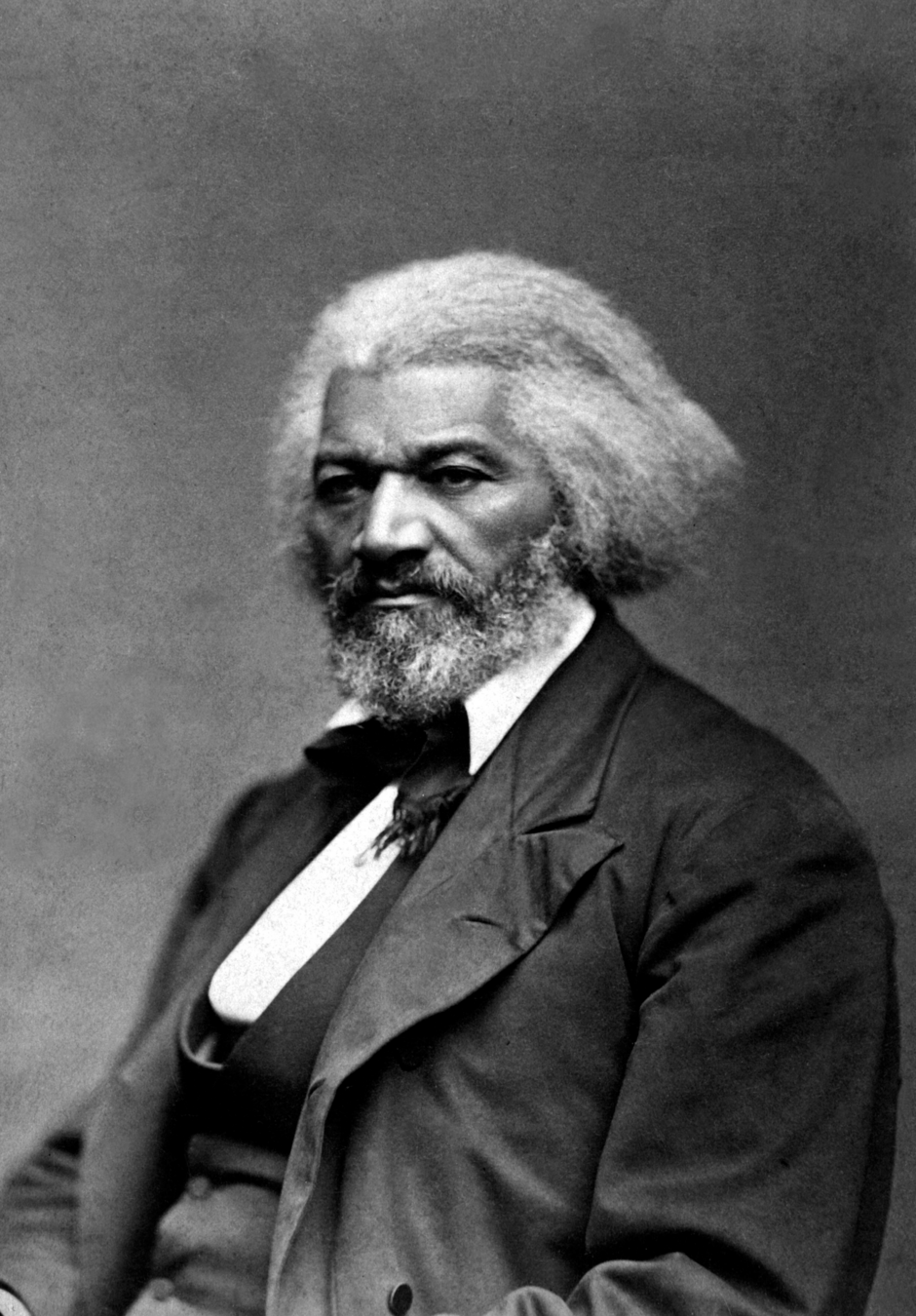

- What to a Slave is the Fourth of July? Fredrick Douglass (1852)

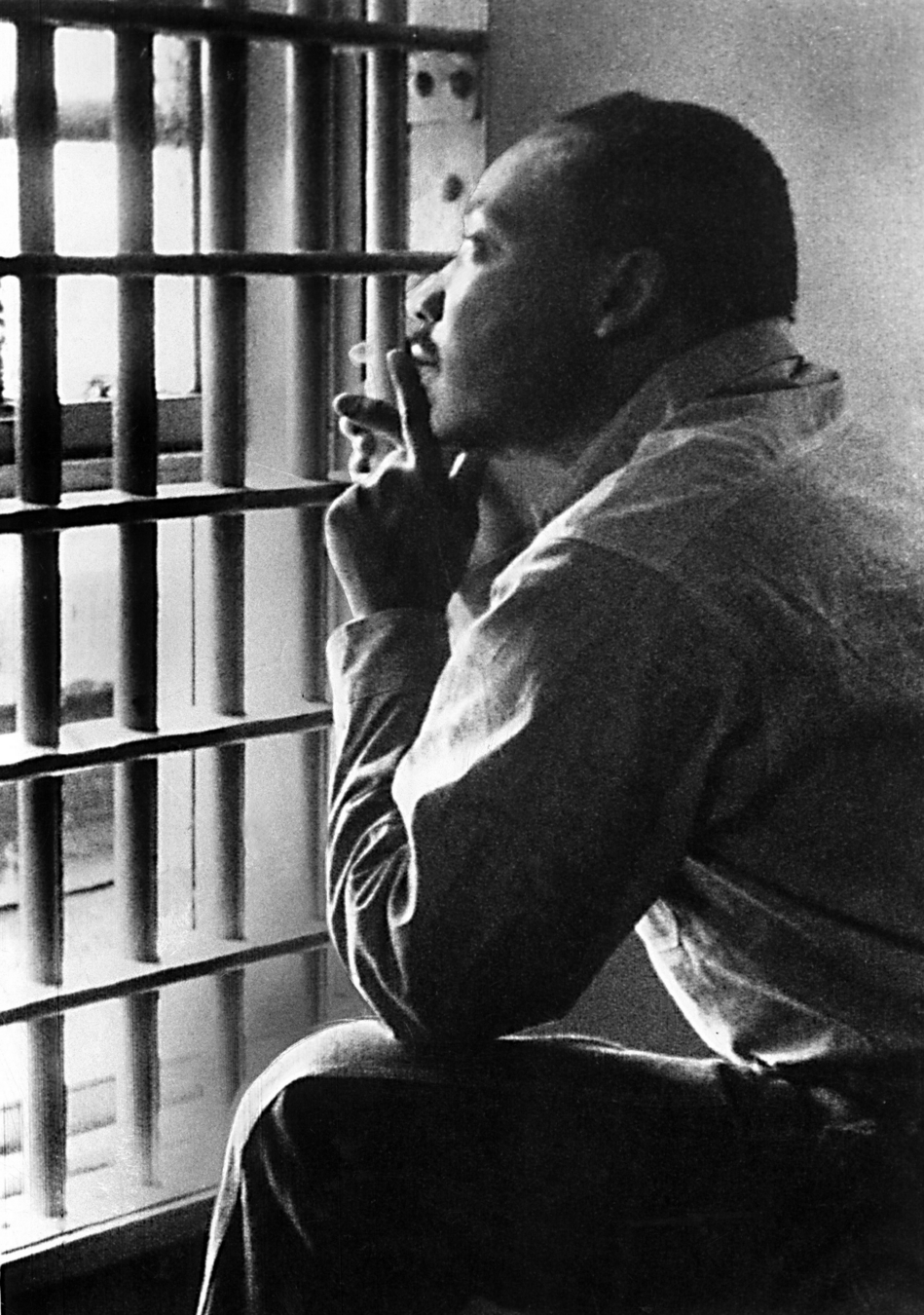

- Letter from a Birmingham Jail Martin Luther King Jr. (1963)

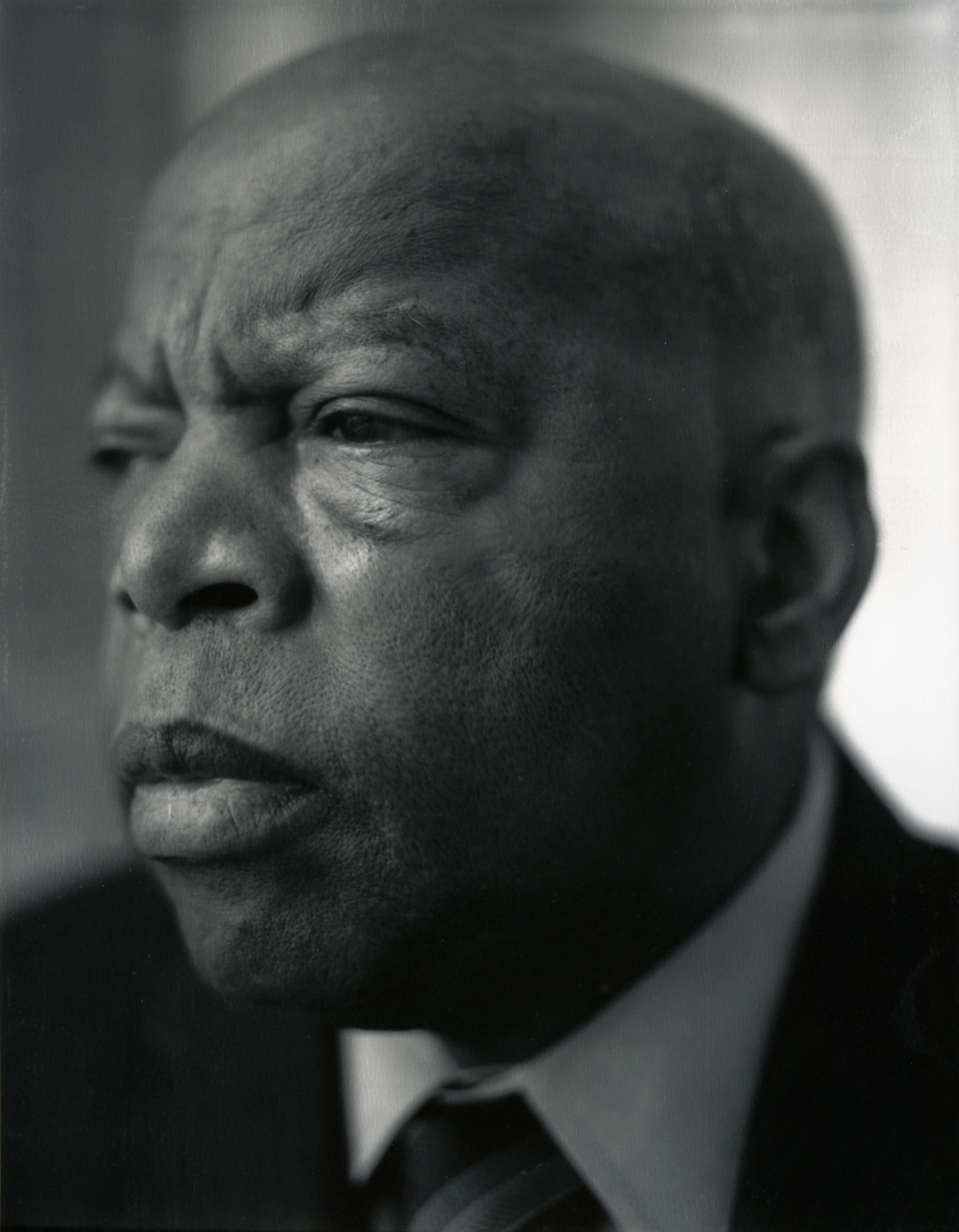

- Together, You Can Redeem the Soul of Our Nation John Lewis (2020)

-

The Fire Next Time James Baldwin (1963)

-

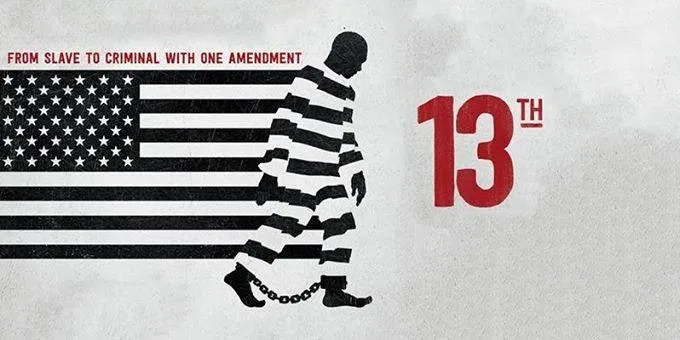

13th Ava DuVernay (2016)

-

I Am Not Your Negro Raoul Peck (2017)

-

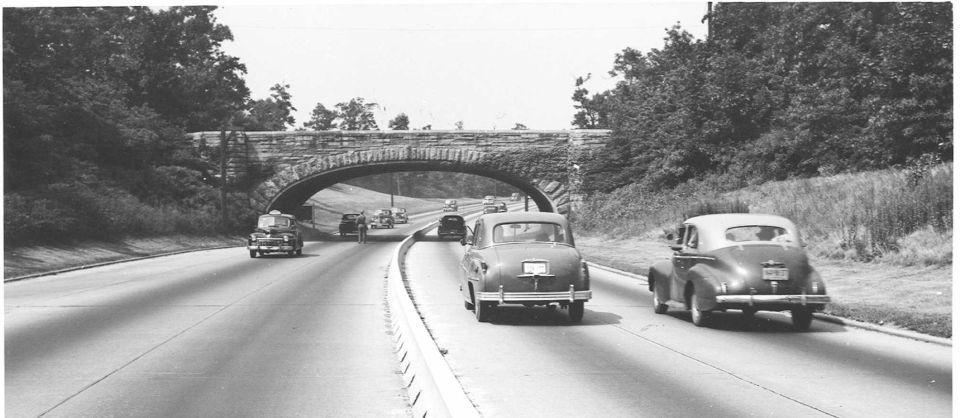

Do Artifacts have Politics Langdon Winner (1980)

-

Bias in Computer Systems Batya Friedman and Helen Nissenbaum (1996)

Race & Technology (2022, 2020)


Tech & Labor (2023)

- Inside the AI Factory: the humans that make tech seem human (2023)

- The secret lives of Google raters (2017)

- How the AI industry profits from catastrophe (2023)

- Revolt of the NYC Delivery Workers (2021)

- Ghost Work, Mary Gray & Siddharth Suri (2019)

- Atlas of AI, Kate Crawford (2021)

- Origin Stories: Plantations, Computers, and Industrial Control, Whittaker (2023)

- The Secret History of Women in Coding, Clive Thompson (2019)

- Gamification and work games: Examining consent and resistance among Uber drivers, Vasudevan & Chan (2022)

- Work with AI and Work for AI: Autonomous Vehicle Safety Drivers’ Lived Experiences, Chu et al. (2023)

- Beyond opening up the black box: Investigating the role of algorithmic systems in Wikipedian organizational culture, Geiger (2017)

- All That’s Happening behind the Scenes: Putting the Spotlight on Volunteer Moderator Labor in Reddit, Li, Hecht, & Chancellor (2022)

- The Civic Labor of Volunteer Moderators Online, Matias (2019)
