Linguistic and social network coevolution: joint analysis of heterogeneous sources of information in Twitter

Sébastien Lerique, Jacobo Levy-Abitbol, Márton Karsai & Éric Fleury

IXXI, École Normale Supérieure de Lyon

Questions

-

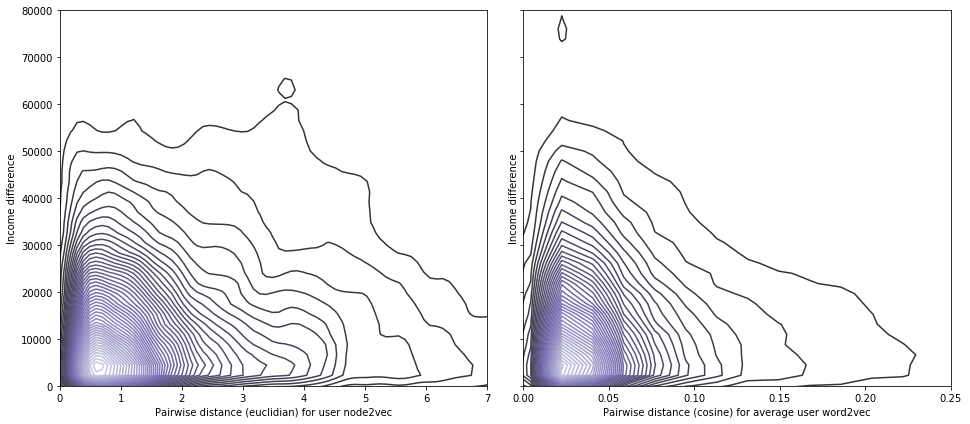

2-way: how much do network structure and feature structure correlate

-

3-way: how well does feature set 1 + network structure predict feature set 2

(and how much does network structure help in predicting) -

Combined: how much can we compress network + features (down to what dimension)

network—feature dependencies

network—feature independence

Use deep learning to create embeddings

Sociolinguistics on 25% of the GMT+1/GMT+2 twittosphere in French

Questions

On Twitter

A framework

Graph convolutional neural networks + Auto-encoders

Current challenges

With great datasets come great computing headaches

How is this framework useful

Speculative questions we want to ask

Graph-convolutional neural networks

\(H^{(l+1)} = \sigma(H^{(l)}W^{(l)})\)

\(H^{(0)} = X\)

\(H^{(L)} = Z\)

\(H^{(l+1)} = \sigma(\color{DarkRed}{\tilde{D}^{-\frac{1}{2}}\tilde{A}\tilde{D}^{-\frac{1}{2}}}H^{(l)}W^{(l)})\)

\(H^{(0)} = X\)

\(H^{(L)} = Z\)

\(\color{DarkGreen}{\tilde{A} = A + I}\)

\(\color{DarkGreen}{\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}}\)

Kipf & Welling (2016)

Neural networks

x

y

green

red

\(H^{(l+1)} = \sigma(H^{(l)}W^{(l)})\)

\(H^{(0)} = X\)

\(H^{(L)} = Z\)

Inspired by colah's blog

Semi-supervised GCN netflix

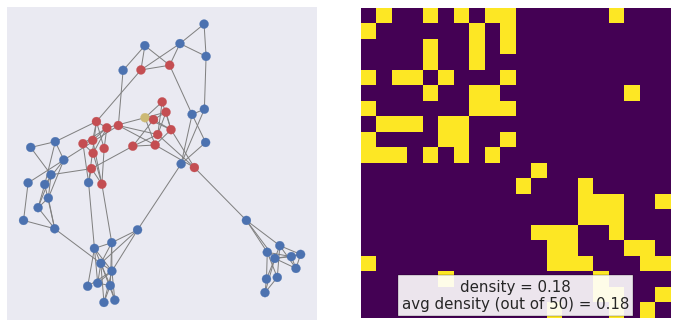

Four well-marked communities of size 10, small noise

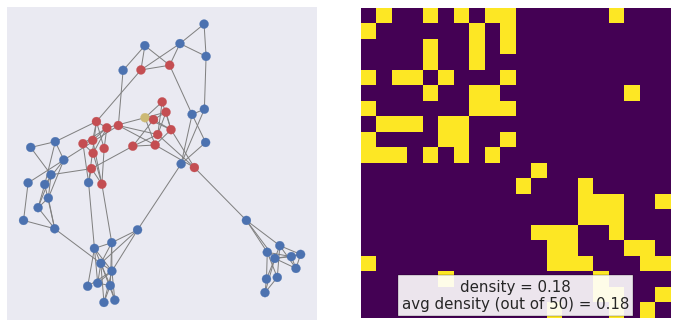

More semi-supervised GCN netflix

Overlapping communities of size 12, small noise

Two feature communities in a near-clique, small noise

Five well-marked communities of size 20, moderate noise

Auto-encoders

From blog.keras.io

- Bottleneck compression → creates embeddings

- Flexible training objectives

- Free encoder/decoder architectures

high dimension

high dimension

low dimension

Example — auto-encoding MNIST digits

MNIST Examples by Josef Steppan (CC-BY-SA 4.0)

60,000 training images

28x28 pixels

784 dims

784 dims

2D

From blog.keras.io

GCN + Auto-encoders = 🎉💖🎉

node features

embedding

GCN

node features

adjacency matrix

Socio-economic status

Language style

Topics

Socio-economic status

Language style

Topics

Compressed & combined representation of nodes + network

Kipf & Welling (2016)

Scaling

node2vec, Grover & Leskovec (2016)

Walk on triangles

Walk outwards

| Dataset | # nodes | # edges |

|---|---|---|

| BlogCatalog | 10K | 333K |

| Flickr | 80K |

5.9M |

| YouTube | 1.1M | 3M |

| 178K | 44K |

✔

👷

👷

👷

Mutual mention network on 25% of the GMT+1/GMT+2 twittosphere in French

Mini-batch sampling

node2vec, Grover & Leskovec (2016)

walk back \(\propto \frac{1}{p}\)

walk out \(\propto \frac{1}{q}\)

walk in triangle \(\propto 1\)

Walk on triangles — p=100, q=100

Walk out — p=1, q=.01

How is this useful

a.k.a., questions we can (will be able to) ask

2-way: how much do network structure and feature structure interact

Combined: how much can we compress network + features

(down to what dimension)

3-way: how well does feature set 1 + network structure predict feature set 2

(and how much does network structure help in predicting)

Disentangled embeddings

Cluster comparisons

Socioeconomic status

Language style

Speculation

Thank you!

Sébastien Lerique, Jacobo Levy-Abitbol, Márton Karsai & Éric Fleury