The

Augmented Developer

Shifting the mindset, upgrading the toolset.

Becoming more human and less machine.

Less Iron, More Man

Shadi Sharaf

Senior Engineer

WordCamp Lisbon

2025

- 👷🏻‍♂️ Senior Engineer, 20+ years in Web dev, 15 in WP

- 🗣️ Speak about Standards, Debugging, Performance, Testing, AI

- ✈️ Travel junkie

- 🍝 Foodie 🍣🍤🥗

- 🧑🏻‍💻 Agencies: XWP, Human Made, The Times.

- 📦 Projects: WP Coding Standards, Stream plugin, and others.

- 🤝 Clients: Enterprise publishers, Universities, Banks, Tech

Who am I?

https://slides.com/shadisharaf

- AI (R)Evolution, and Why it matters

- Jargon Demystified: Buzzwords Explained

- AI Workspace: Editors & Assistants

- Mastering the Conversation: PETs

- Rules and Tools: Advanced Customisation

- The Road Ahead: What's Next

- Q&A

Agenda

AI (R)Evolution

When, What, Why, and Why!

- 1950: "Can machines think?", Alan Turing

- 1956: The Dartmouth Workshop, the birth of AI as a field

- 80's: Rise of Expert Systems: medical applications, etc.

- 90's: Machine Learning (ML) Renaissance: systems learn from data!

- 2010's: Deep Learning Evolution: systems learning to learn!

- 2020's: Generative AI (GenAI/LLMs) Revolution: systems learn to dream!

- ?: Artificial General Intelligence (AGI): systems running systems!

ai-2027.com

AI SCI-FI (or is it?)

What does that mean for us, Engineers?

- 86% of businesses expect AI and technology to transform their operations by 2030.

- AI and technology are expected to displace 9 million jobs...

- ... and create 11 million others...

- ... resulting in a net increase of 2 million jobs directly related to these technologies.

- 39% of existing worker skills are expected to change or become outdated by 2030.

- "Software and Applications Developers" remain among the fastest-growing jobs in absolute numbers.

So? Good news?

– World Economic Forum, Future of Jobs Report 2025 *

* https://www.weforum.org/publications/the-future-of-jobs-report-2025/in-full/2-jobs-outlook/#2-1-total-job-growth-and-loss

Change is the only CONST in life

– Heraclitus

Shifting Mindset

Outdated

- I'm good at my job!

- I'm a Senior engineer!

- My company is stable!

- I have the context!

- I'll wait and see!

- It's a hype! It'll go away!

- AI writes s**t code!

- AI can't code on its own!

Shift

- Document better!

- Communicate clearer!

- Iterate quicker!

- Experiment more!

- Prioritise learning!

- Leverage automation!

- Embrace Testing!

- Architect, delegate, BE THE BOSS!


LLMs, Agents, MCPs, PETs, Rules, Tools,

OH MY!

Jargon demystified!

A pre-trained autocomplete algorithm: trained on massive amounts of text and code, it predicts the next most likely word (token) in a sequence based on the context and its training data.

LLMs: Large Language Models

Systems that augment LLMs with tools (files, service APIs, system tools, etc.) and context (code, docs, data), empowering them to make decisions and execute actions (editing files, running commands, calling APIs) to achieve a certain outcome.

Agents: LLMs with tools
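To make the definition concrete, here is a minimal sketch of the loop most agents run: ask the model what to do, execute the tool it picks, feed the result back, and repeat until it answers. The model is a scripted stand-in (`scripted_llm`) and `read_file` reads from a hard-coded dictionary; both are hypothetical, and real agents add tool schemas, permissions, and error handling.

```python
import json
from typing import Callable

# Hypothetical tool: a real agent would read from disk, call APIs, or run commands.
def read_file(path: str) -> str:
    fake_files = {"README.md": "# Sync plugin\nSyncs posts across sites."}
    return fake_files.get(path, f"error: {path} not found")

TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file}

def scripted_llm(messages: list[dict]) -> dict:
    """Stand-in for a real model: requests one tool call, then answers from its result."""
    tool_results = [m["content"] for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "read_file", "args": {"path": "README.md"}}
    return {"answer": "The README says: " + tool_results[-1].splitlines()[-1]}

def run_agent(task: str, llm: Callable[[list[dict]], dict] = scripted_llm, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        decision = llm(messages)
        if "answer" in decision:
            return decision["answer"]
        # The model picked a tool: run it and feed the result back as new context.
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "assistant", "content": json.dumps(decision)})
        messages.append({"role": "tool", "content": result})
    return "error: step limit reached"

print(run_agent("What does this project do?"))
```

The `max_steps` cap is the simplest guard against an agent that keeps calling tools without ever answering.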

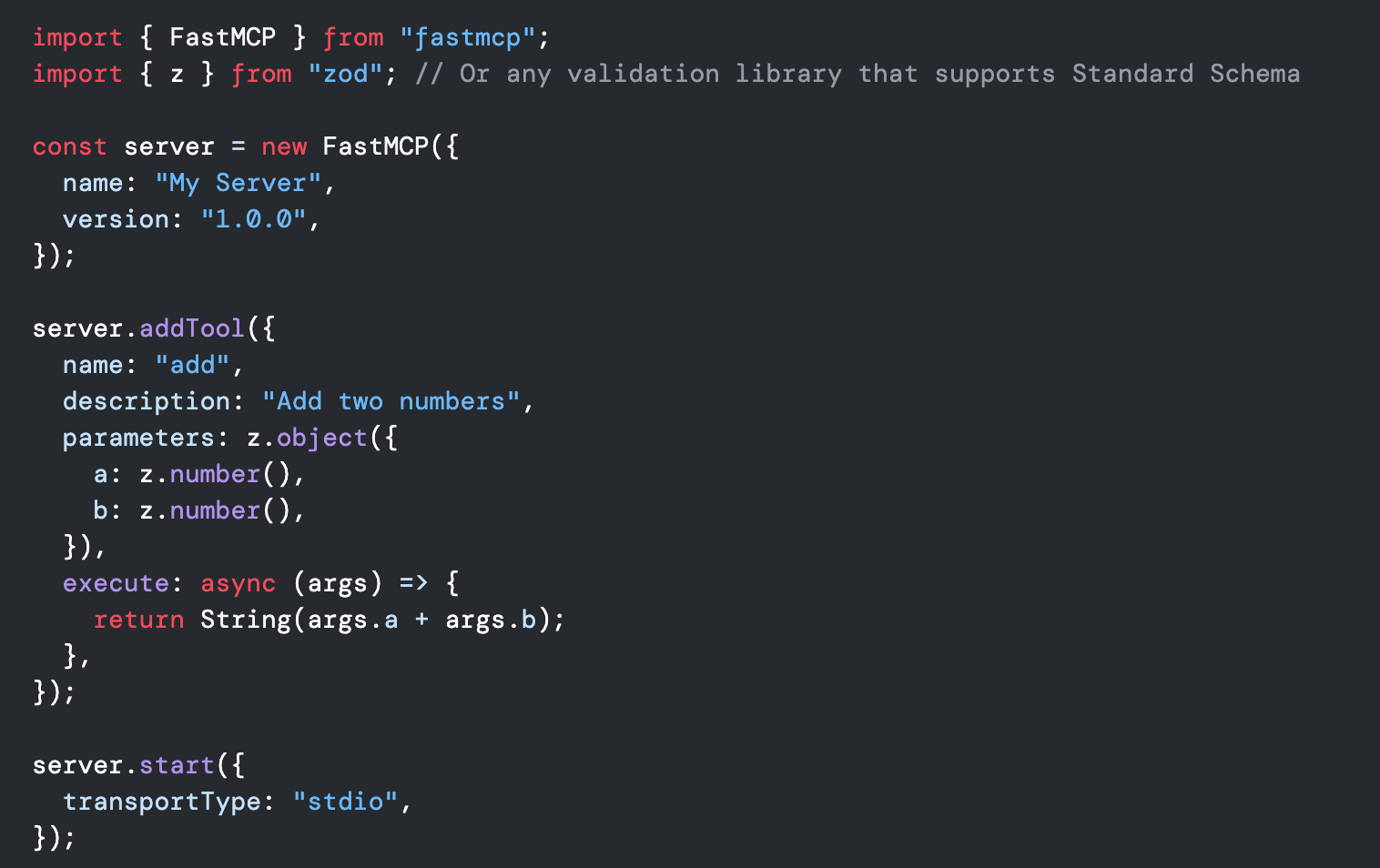

Small applications that extend an agent's functionality, or let it access other systems through APIs or system tools, to fetch data or execute actions.

MCP servers:

Model Context Protocol

Techniques for structuring prompts to improve the AI's context awareness, steer its generation process, and control its output.

PETs: Prompt Engineering Techniques

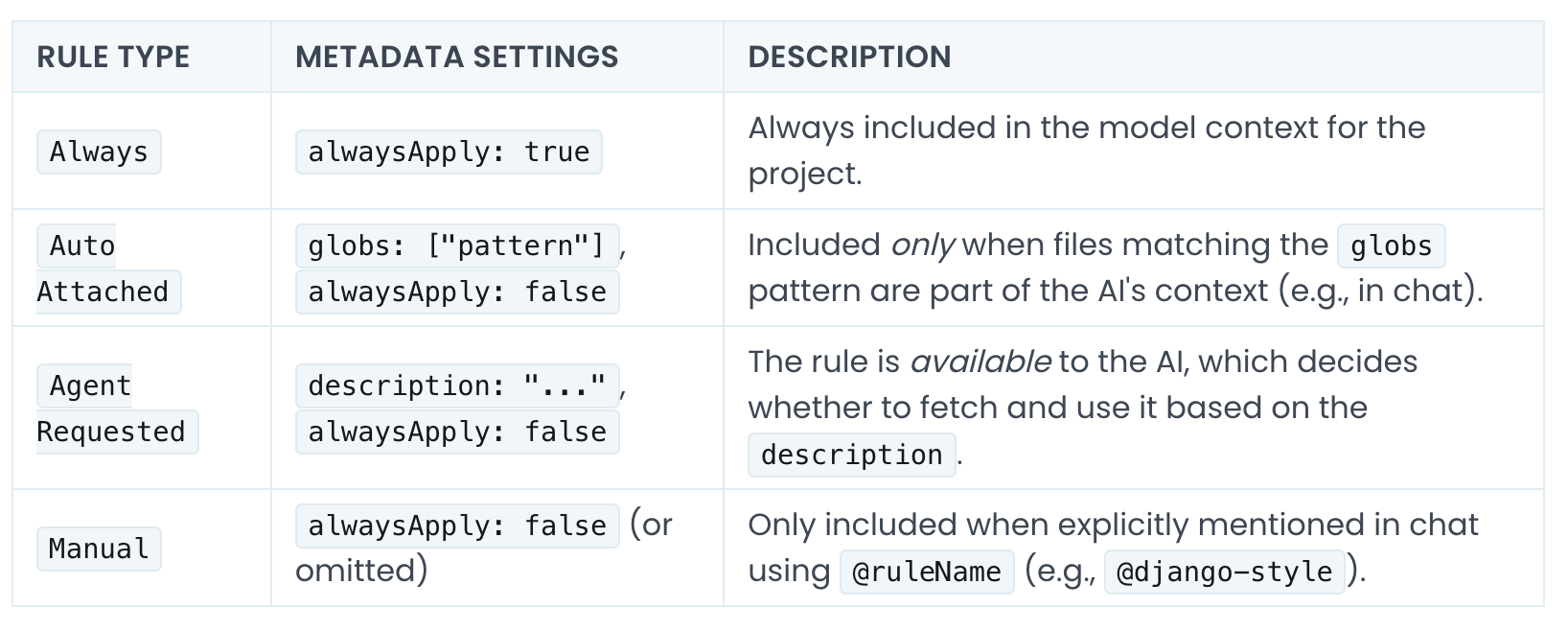

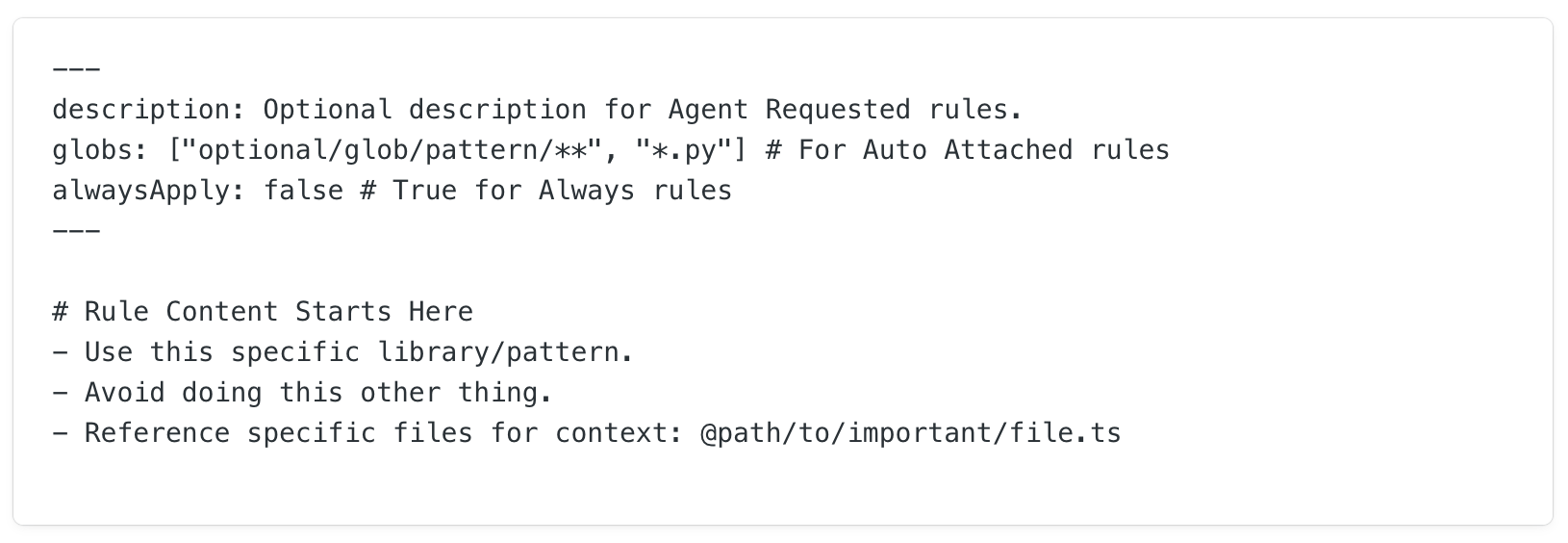

Just like a new member of an engineering team, an LLM can be taught to do things the way your team or product does: think standards, conventions, and documentation for your project and technologies.

Rules: Teaching AI your ways

Tokens are the chunks of text (a whole word, part of a word, or a single character) that a model uses to read input and generate output. Different models use different tokenisation algorithms. Tokens are also the typical unit of cost.

Tokens: LLM Currency
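As a rough illustration of subword tokenisation, the toy tokeniser below splits each word greedily into the longest pieces found in a vocabulary (the longest-match-first idea used by WordPiece, minus its `##` continuation markers). The six-entry `VOCAB` is invented; real tokenisers learn vocabularies with tens of thousands of entries, so actual token counts will differ.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Toy greedy longest-match-first subword tokeniser."""
    tokens: list[str] = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, shrinking until it is in the vocabulary.
            end = len(word)
            while end > start and word[start:end] not in vocab:
                end -= 1
            if end == start:
                # Unknown character: fall back to a single-character token.
                end = start + 1
            tokens.append(word[start:end])
            start = end
    return tokens

VOCAB = {"word", "press", "plug", "in", "s", "the"}  # hypothetical vocabulary
print(tokenize("the wordpress plugins", VOCAB))
```

Three words become six tokens here, which is why pricing and context limits are quoted in tokens rather than words.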

The maximum amount of text, measured in tokens, that a model can consider at once, covering both the input and the generated output.

For long conversations, models and the tools around them typically have ways to prioritise or trim context, which means they can _forget_ earlier data as the conversation grows longer.

Context Length: Attention span
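A minimal sketch of one way a client might keep a long conversation inside the context window: always keep the first message (e.g. the rules), then add the most recent messages until a token budget is used up. Counting one token per word is a deliberate simplification, and the strategy itself is an assumption; real tools may summarise older messages instead of dropping them.

```python
def count_tokens(text: str) -> int:
    # Simplification: roughly one token per whitespace-separated word.
    return len(text.split())

def fit_to_context(messages: list[str], budget: int) -> list[str]:
    """Keep the first message plus as many of the most recent messages as fit in the budget."""
    system, history = messages[0], messages[1:]
    used = count_tokens(system)
    kept: list[str] = []
    for message in reversed(history):
        cost = count_tokens(message)
        if used + cost > budget:
            break  # everything older than this message is "forgotten"
        kept.append(message)
        used += cost
    return [system] + list(reversed(kept))

conversation = [
    "Follow WordPress coding standards.",  # 4 "tokens"
    "Add a settings page.",                # 4
    "Now add a REST endpoint.",            # 5
    "Write tests for it.",                 # 4
]
print(fit_to_context(conversation, budget=13))
```

With a budget of 13, the oldest request ("Add a settings page.") is the one that falls out of view.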

A parameter that controls how much randomness the model uses when choosing between possible next words: the colder (lower), the more deterministic and repetitive the output; the hotter (higher), the more creative and random.

Typically between 0 and 2, depending on the provider.

Temperature: Probability control
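Under the hood, temperature typically divides the model's raw next-token scores (logits) before they are turned into probabilities with a softmax. The sketch below uses three made-up logits to show the effect: low temperatures concentrate probability on the top candidate, high temperatures flatten the distribution.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        # Providers usually treat 0 as greedy (always pick the top token) rather than dividing by it.
        raise ValueError("temperature must be > 0 in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

The ranking of candidates never changes; only how strongly the top one dominates does.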

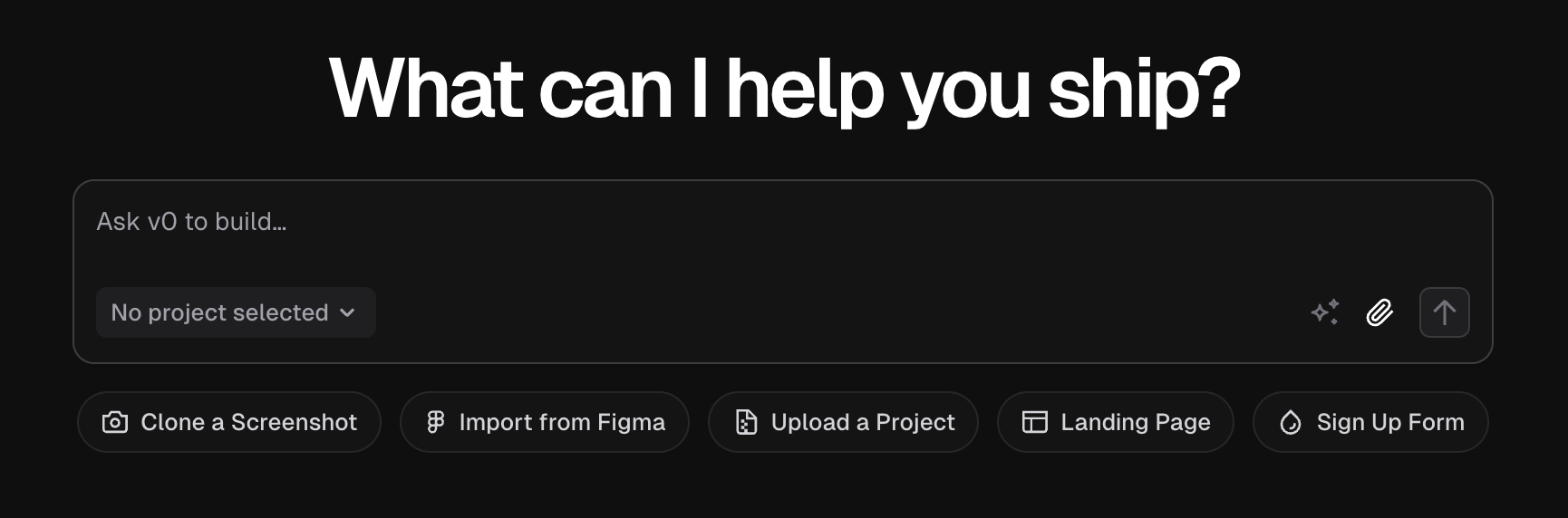

AI Workspace

Editors, Services, Platforms

Touch points

1. Chat interface

- Web platforms

- Mobile/Desktop clients

- Terminals

- Browser extensions

2. Editors

- Editor extensions

- AI IDEs

3. Services / Tools

- Low/No-code platforms

Chat interface

Web/Mobile:

- OpenAI's ChatGPT

- Anthropic's Claude

- Google Gemini

- DeepSeek

- Perplexity

CLI clients

- Aider

- Continue

- Claude Code

- Codex

Terminals

- Warp / iTerm

Editors

VS Code extensions:

- GitHub Copilot

- Cline

- Continue

- Amazon Q

- Microsoft's IntelliCode

Editor forks

- Cursor

- Windsurf (Codeium)

- Trae (ByteDance)

Services / Tools

UX AI

- Figma

- UXPilot

- Galileo

Prototyping AI

- Vercel's v0

- Bolt (OS)

- Lovable

- Replit

AI mates

- Devin

- Wingman

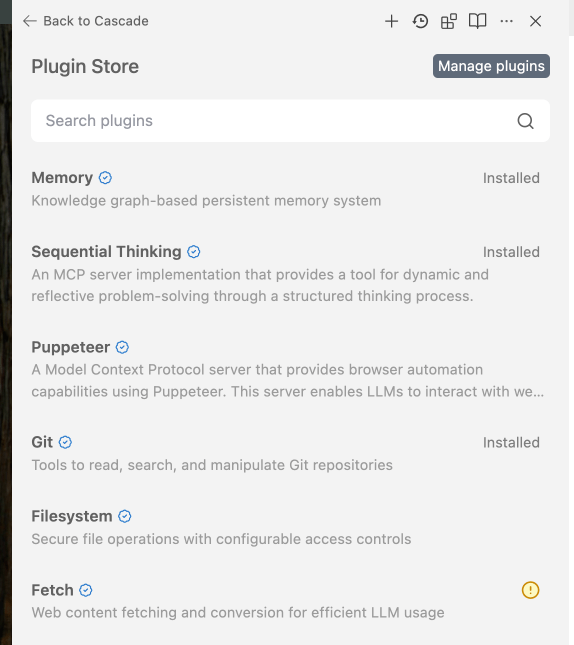

Tools / MCP Servers

Giving LLMs powers.

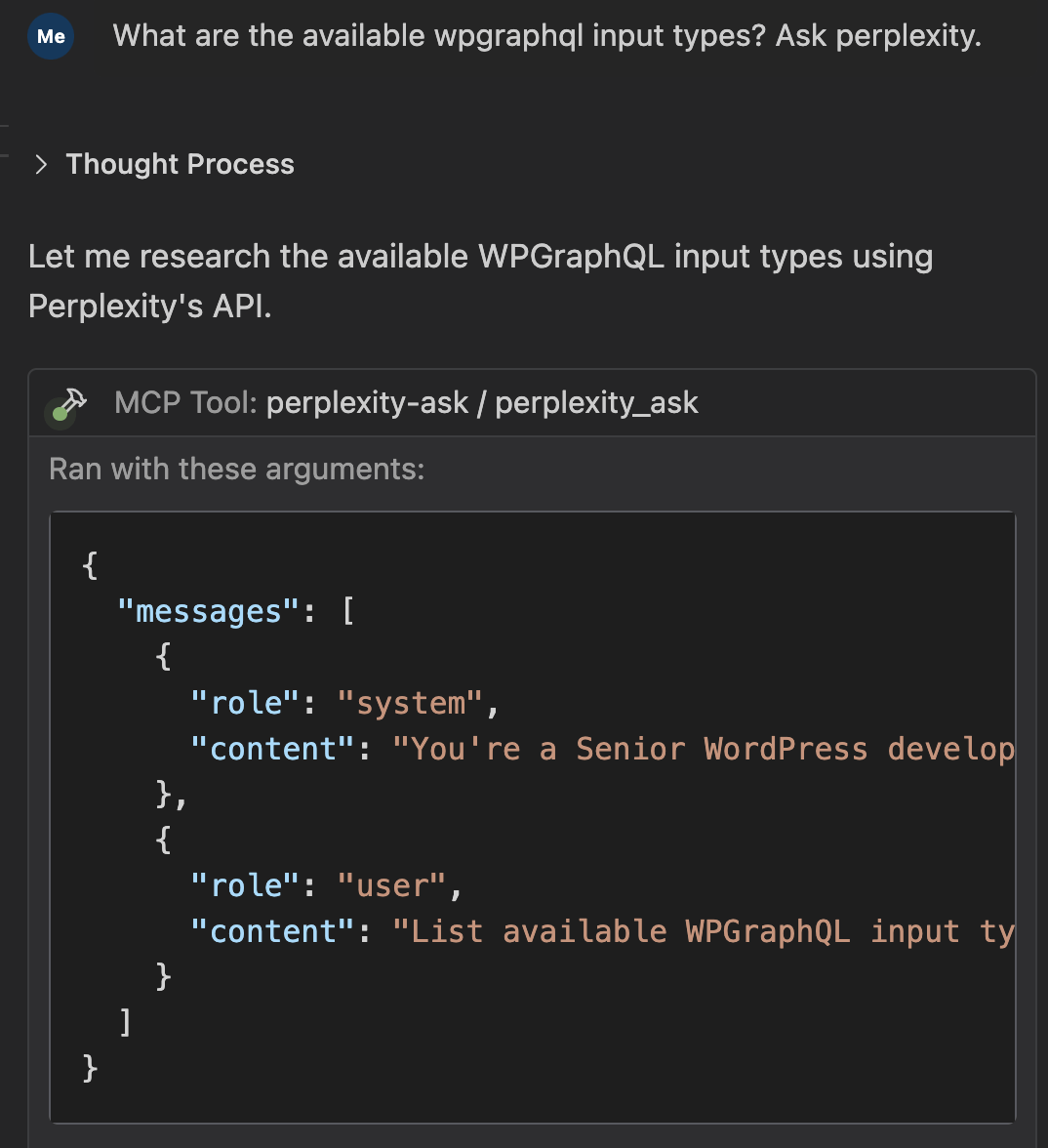

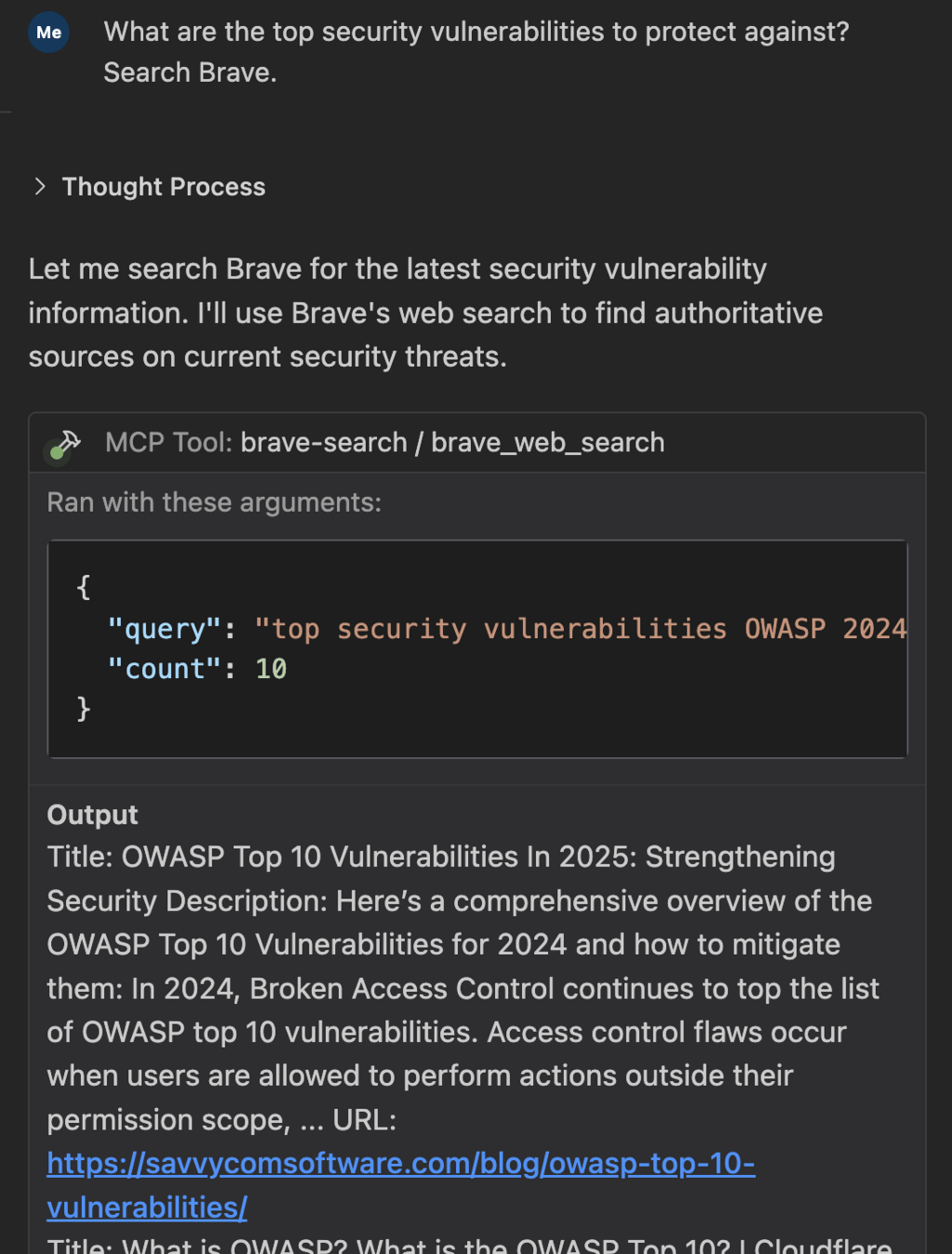

MCP

Model Context Protocol

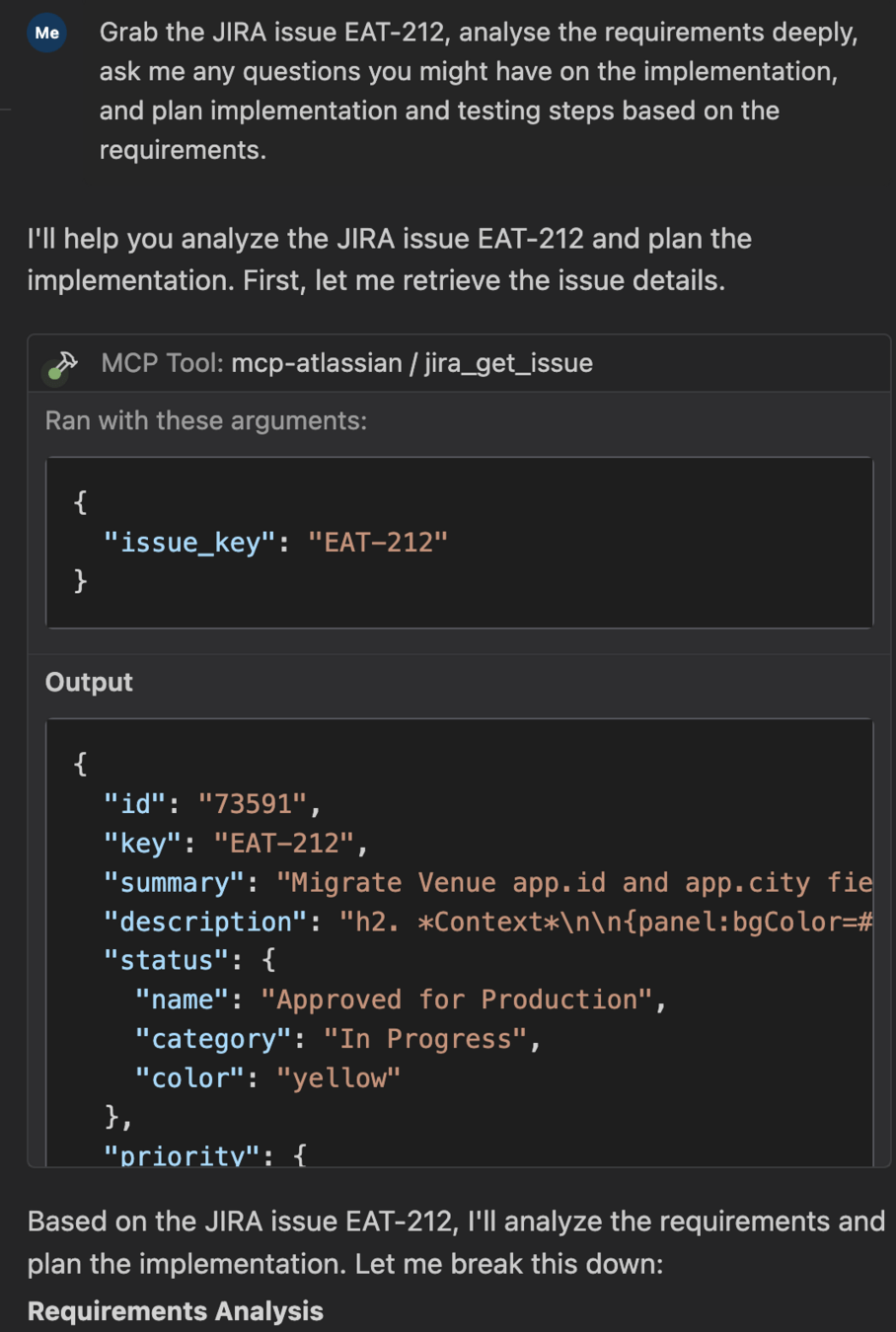

What are examples of MCP servers I can use? 🤔

- Search
  - Perplexity
  - Brave Search
- Services
  - JIRA
  - GitHub
  - Figma
  - ...
- Servers
  - GraphQL
  - MySQL
  - ElasticSearch
- Automation/Testing/Debugging
  - Playwright
  - Puppeteer
  - Browser dev tools!

MCP Examples

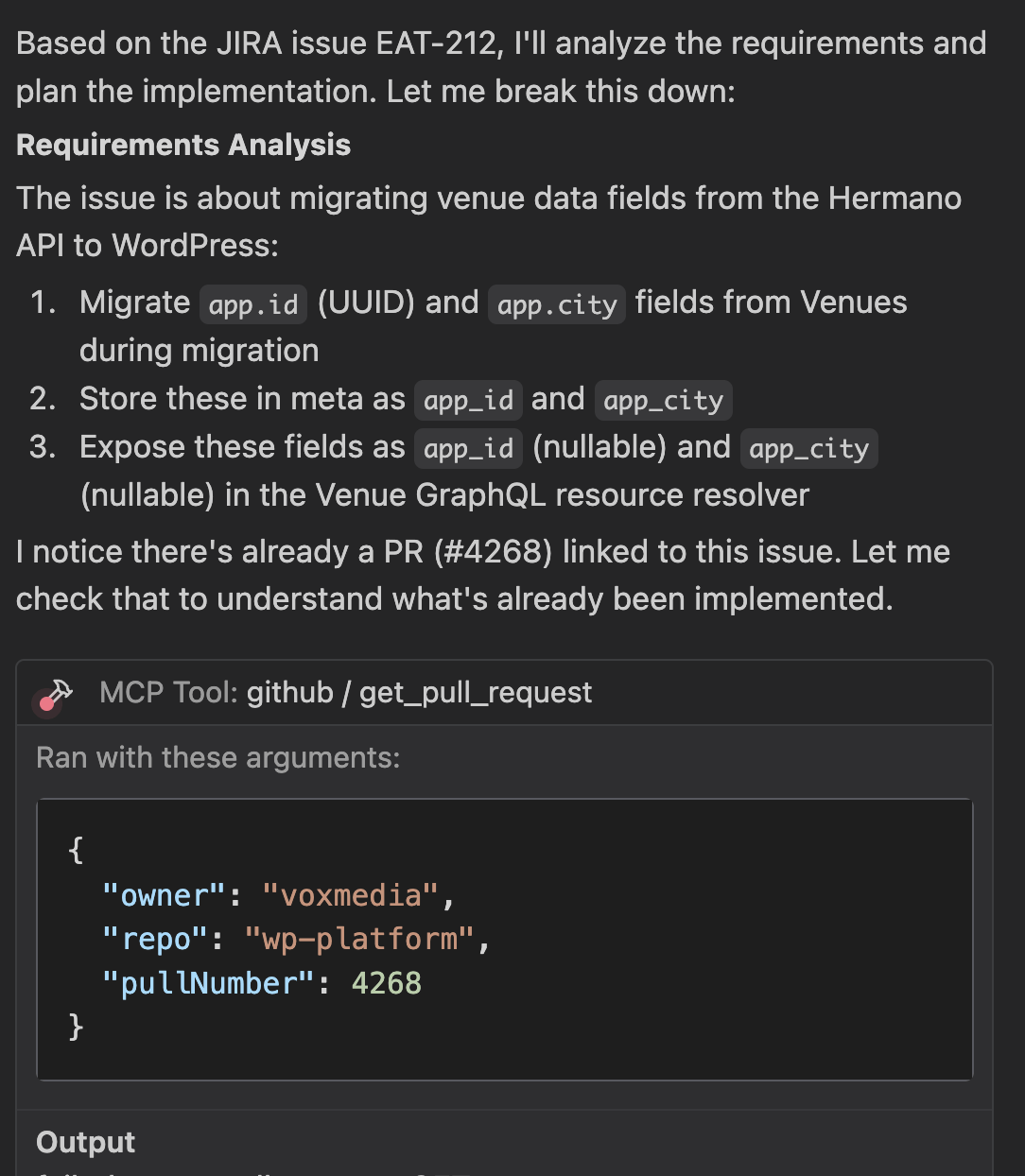

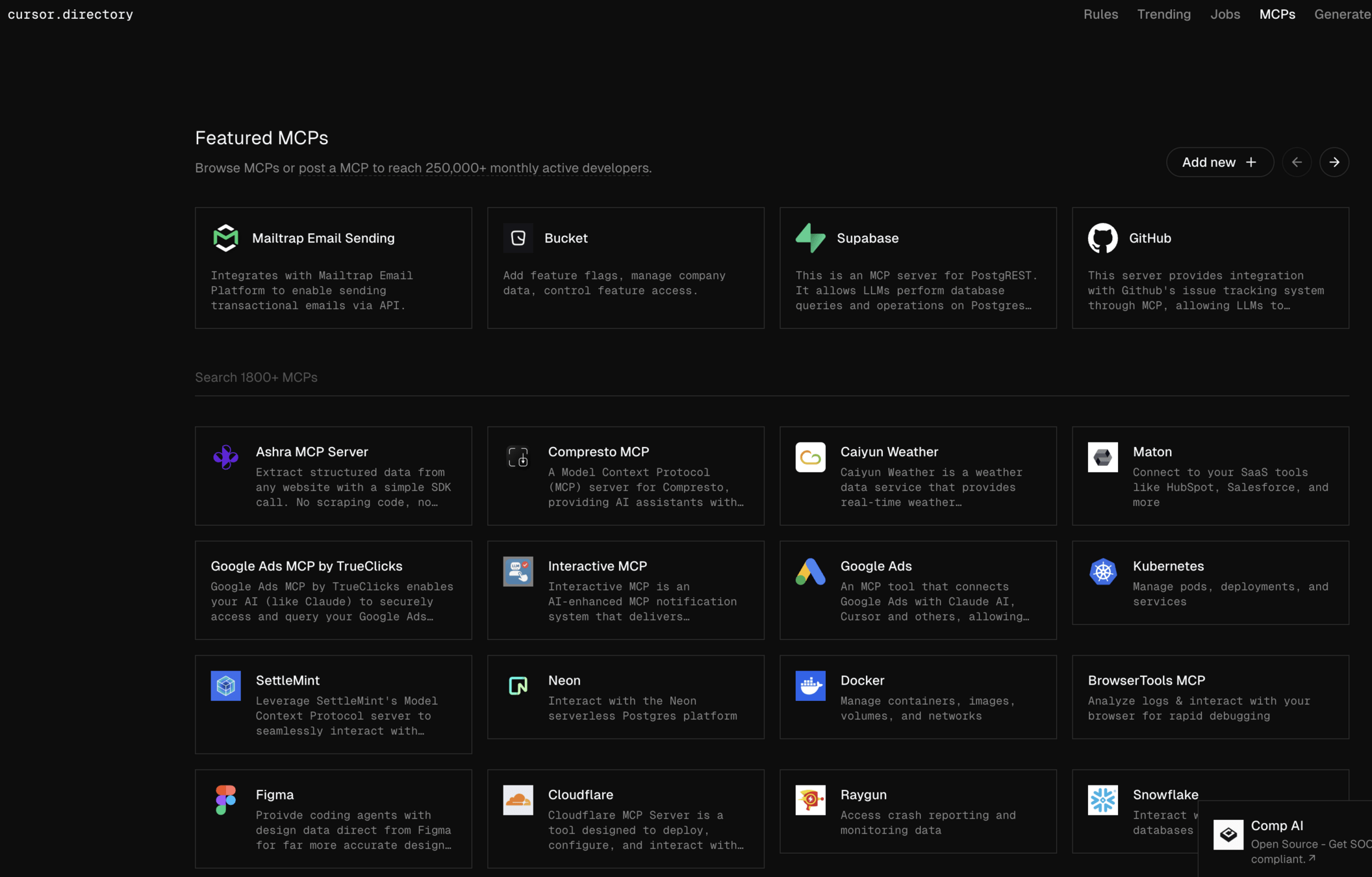

How to discover more MCPs? 😳

- Glama's MCP Directory

- Trae's MCP.so

- Cursor's MCP directory

- PulseMCP

- Google!

- Your editor!

MCP Directories

Can I write my own MCP server? 🤔
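Yes. MCP messages are JSON-RPC 2.0, and a tools-only server mostly answers `tools/list` (which tools exist) and `tools/call` (run one). The sketch below handles just those two methods for a hypothetical `word_count` tool; it skips the `initialize` handshake and the transport (stdio or HTTP), so for a real server you would normally start from one of the official MCP SDKs.

```python
import json

# A hypothetical tool exposed by this sketch.
TOOLS = {
    "word_count": {
        "description": "Count the words in a piece of text.",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "handler": lambda args: str(len(args["text"].split())),
    }
}

def handle(request: dict) -> dict:
    """Answer one JSON-RPC 2.0 request using the MCP tools/list and tools/call shapes."""
    method, req_id = request.get("method"), request.get("id")
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = tool["handler"](params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}]}}
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": -32601, "message": "Method not found"}}

call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "word_count", "arguments": {"text": "less iron more man"}}}
print(json.dumps(handle(call)))
```

The `inputSchema` is plain JSON Schema; it is what lets the agent work out which arguments to send.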

Rules

Whipping LLMs into obedience.

- Global rules

- Project rules

- Glob rules

- Custom rules

Rules: How?
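Glob rules attach guidance only when matching files are in play. The sketch below is loosely modelled on editor rule files that are either always applied or matched by file patterns; the `Rule` class, rule names, and patterns are hypothetical. Note that Python's `fnmatch` lets `*` match `/`, which is looser than most editors' glob handling.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

@dataclass
class Rule:
    name: str
    always_apply: bool = False
    globs: tuple[str, ...] = ()  # file patterns that activate this rule

# Hypothetical rule set for a WordPress plugin project.
RULES = [
    Rule("project-overview", always_apply=True),
    Rule("php-standards", globs=("*.php",)),
    Rule("react-components", globs=("src/blocks/*.tsx", "src/blocks/*.jsx")),
]

def rules_for(path: str) -> list[str]:
    """Names of the rules that would be added to the context when editing `path`."""
    return [
        r.name for r in RULES
        if r.always_apply or any(fnmatch(path, g) for g in r.globs)
    ]

print(rules_for("includes/class-sync.php"))
print(rules_for("src/blocks/edit.tsx"))
```

Scoping rules this way keeps React conventions out of the context while the AI is editing PHP, and vice versa.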

- File structures and Naming conventions

- Requirements (Security, Performance, Accessibility)

- Framework-specific rules (WordPress, React, etc.)

- Design patterns

- Testing approaches

- Documentation style

- ...

- Reasoning methodology

- Prompt Engineering Techniques

Rules: Why?

Hey Gemini, read our engineering guidelines at xx and create concise, simple, and comprehensive MDC rules for the project, in this format: xx.

How can I write my own rules? 🤔

Directories

- cursor.directory

- google: awesome cursor rules! 😳

Generators

- cursor.directory

- 10xrules.ai

- cursorpractice.com

Rules: Examples and generators

- Split: Use different rule files for different technologies/frameworks

- Concise: Keep rules short so they don't crowd out the rest of the context

- Examples: Link to example implementations where possible

- Project context: Provide basic project context and functionality

- Rinse and repeat: Try different rules, stick with what works

Tips

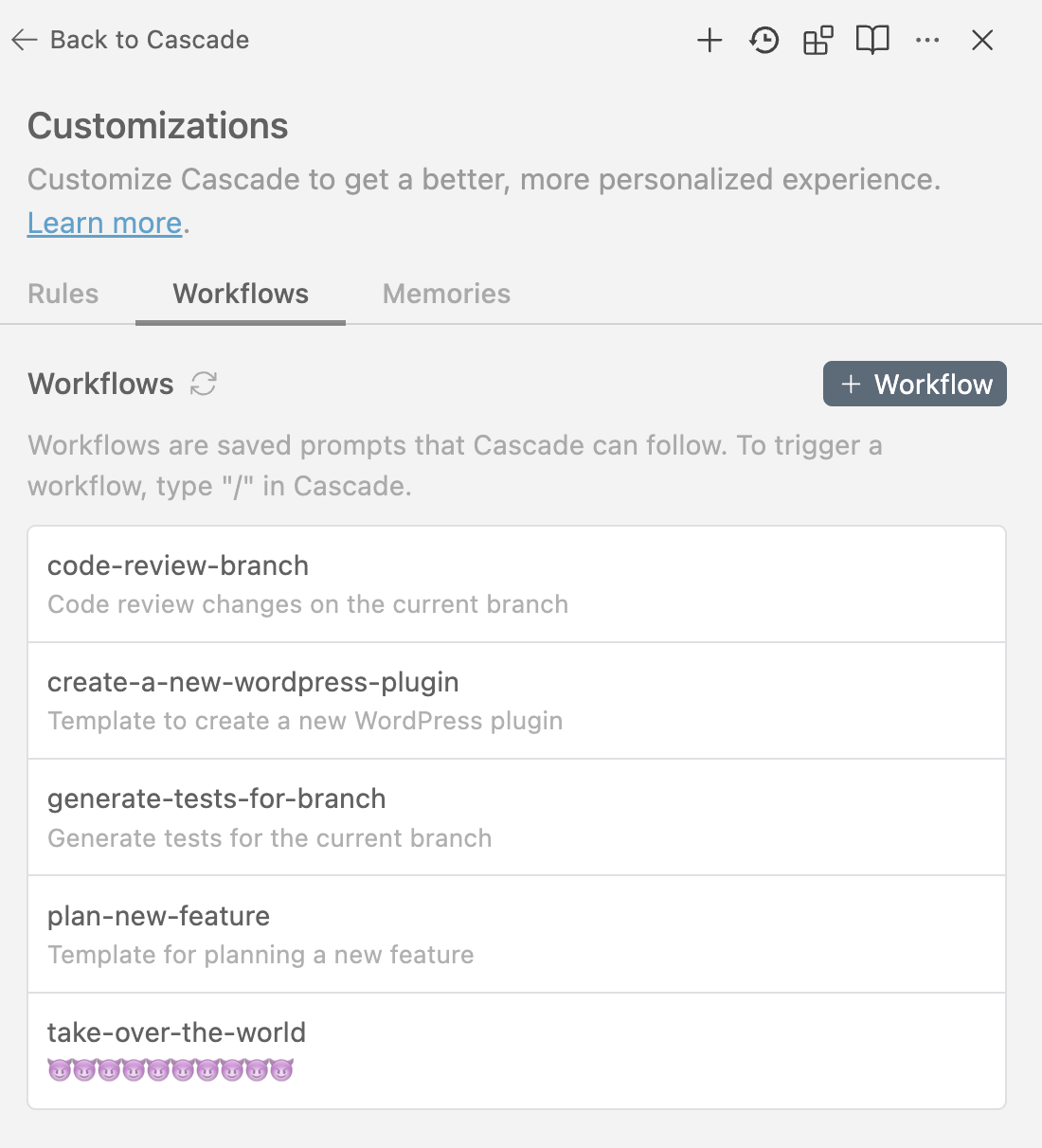

DRY for prompts!
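In practice, DRY prompts can be as simple as keeping the recurring pieces (persona, project context, reasoning instructions) in one place and composing them per task. The text below reuses the persona and context from the prompt examples in this deck; `build_prompt` and its flags are hypothetical names for this sketch.

```python
# Reusable prompt building blocks.
PERSONA = ("You are an experienced software engineer focused on security and performance. "
           "You're fluent in PHP, JS, WordPress, and React.")
CONTEXT = ("You are working on a commercial WordPress plugin for syncing data "
           "across sites in a Multisite installation.")
COT = "Let's think step by step."

def build_prompt(requirement: str, *, context: bool = True, cot: bool = False) -> str:
    """Compose a prompt from shared blocks instead of retyping them for every task."""
    parts = [PERSONA]
    if context:
        parts.append(CONTEXT)
    parts.append(f"The requirement is: {requirement}")
    if cot:
        parts.append(COT)
    return "\n\n".join(parts)

print(build_prompt("Add a sync log screen.", cot=True))
```

Changing the persona once then updates every prompt that uses it, which is the same idea as moving it into a global rule.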

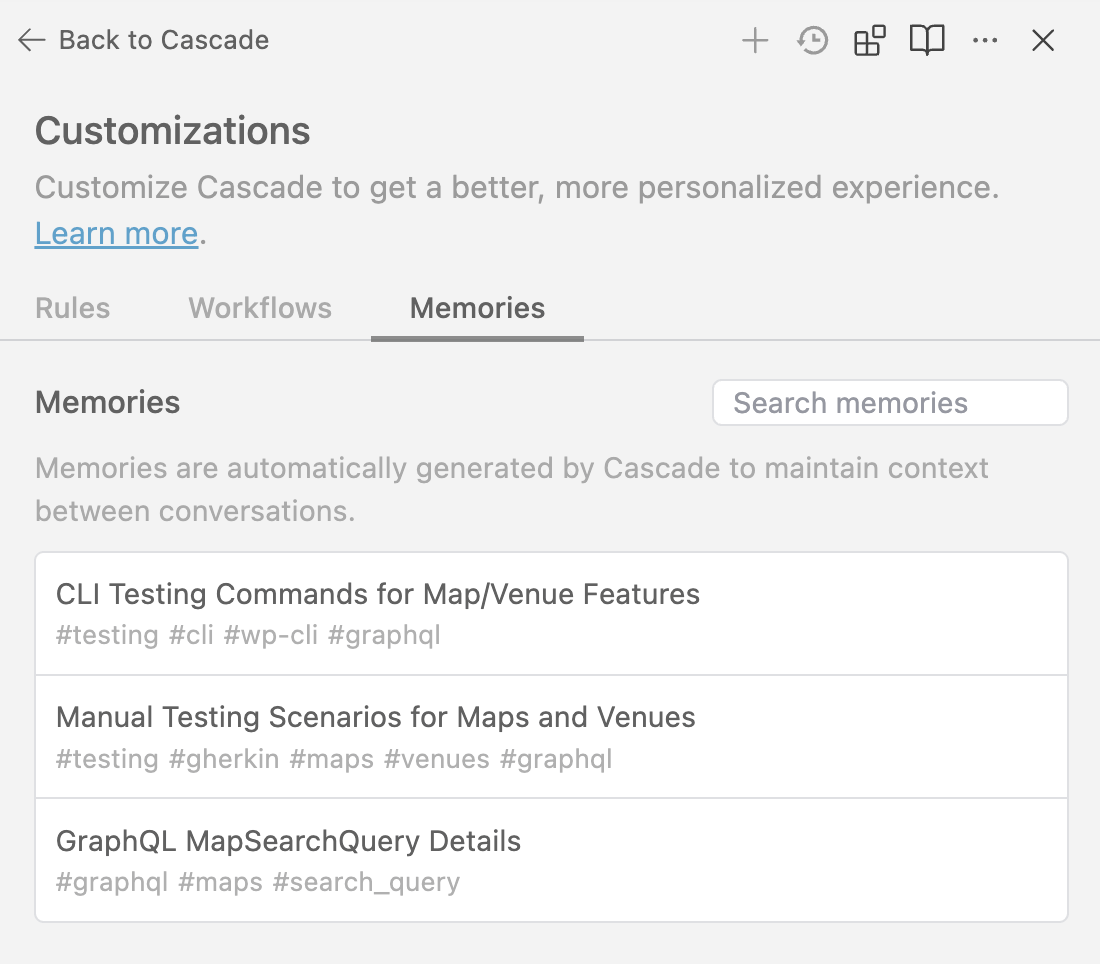

Workflows

Memory

- Built-in memory

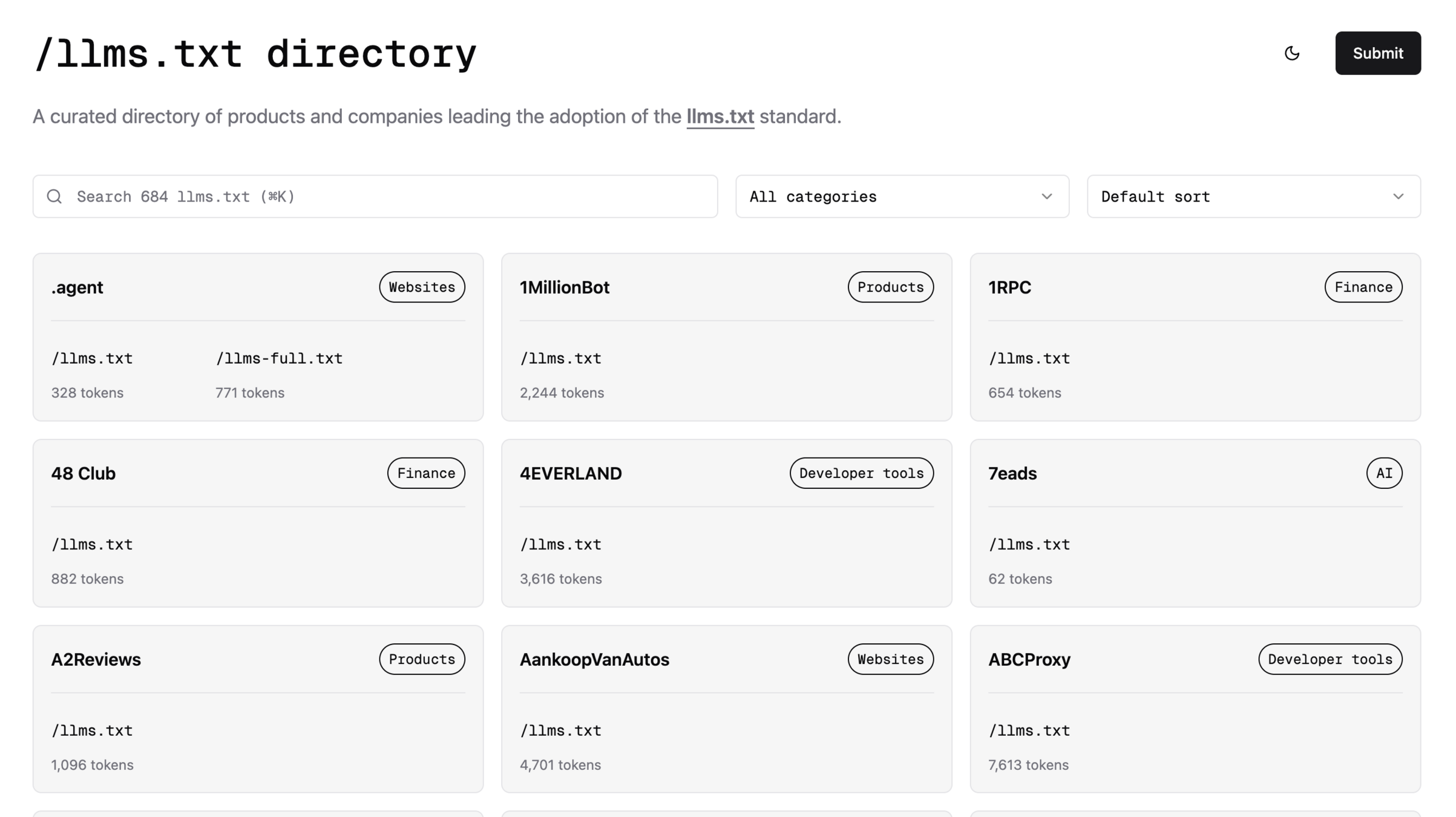

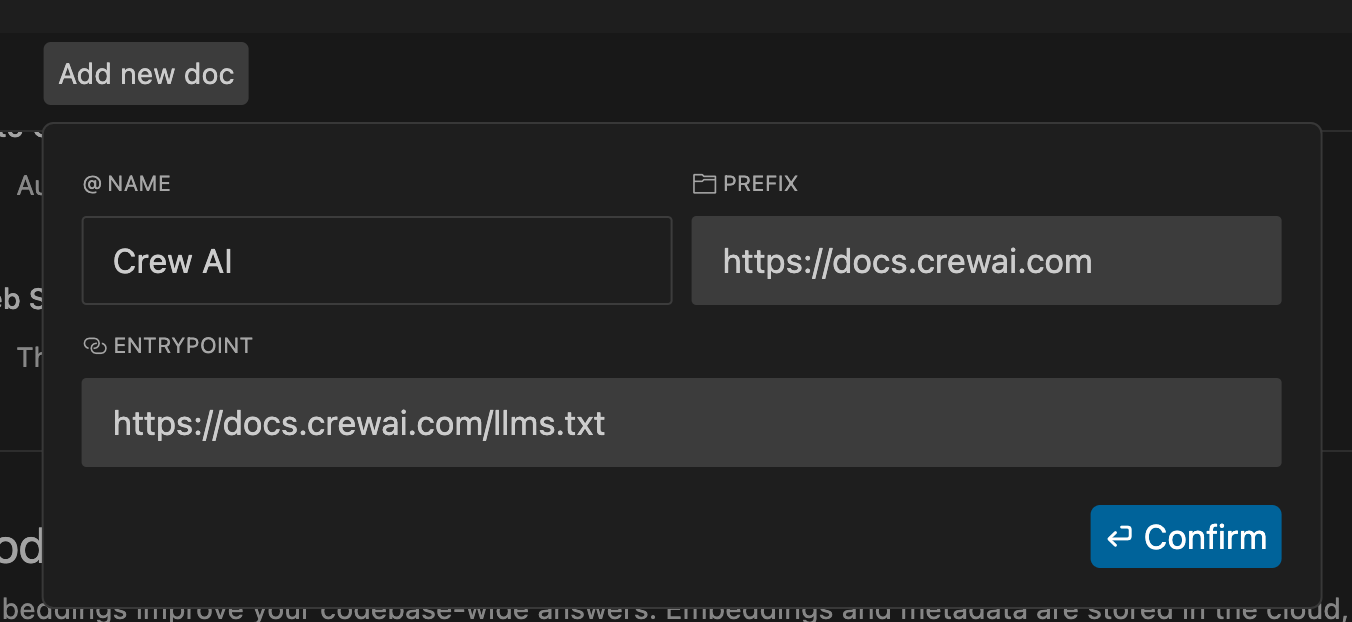

Documentation

- llms.txt

Knowledge / Docs
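`llms.txt` is a proposed convention: a Markdown file at the site root with an H1 project name, a blockquote summary, and H2 sections listing links for LLMs to read. The sketch below renders that shape from Python data; the project name, summary, and URL are placeholders.

```python
def build_llms_txt(name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt-style file: H1 title, blockquote summary, H2 link lists."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for section, links in sections.items():
        lines.append(f"## {section}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines)

doc = build_llms_txt(
    "Sync Plugin",  # hypothetical project
    "Syncs posts across sites in a WordPress Multisite network.",
    {"Docs": [("Architecture", "https://example.com/docs/architecture.md", "Main components")]},
)
print(doc)
```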

Prompt Engineering

How to talk to AI

Prompt engineering is the art and science of designing and optimising prompts to guide AI models, particularly LLMs, towards generating the desired responses.

Prompt Engineering

Zero-shot:

Parse a customer's pizza order into valid JSON:

"I want a small pizza with cheese, tomato sauce, and pepperoni."

1. Zero-shot, multi-shot

Output 1:

{"size": "small", "type": "pizza", "ingredients": ["cheese", "tomato sauce", "pepperoni"]}


Output 2:

{"size": "small", "sauce": "tomato sauce", "toppings": ["cheese", "pepperoni"]}

Multi-shot:

Parse a customer's pizza order into valid JSON:

"I want a small pizza with cheese, tomato sauce, and pepperoni."

Example

{"size": "small", "sauce": "{SAUCE}", "toppings": ["TOPPING 1", "TOPPING 2", ...]}

1. Zero-shot, multi-shot
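A small sketch of how the same prompt becomes zero-, one-, or few-shot depending on how many worked examples are prepended, plus a check that a reply actually matches the schema the examples set. The example order and the `few_shot_prompt` / `matches_schema` helpers are hypothetical.

```python
import json

def few_shot_prompt(task: str, examples: list[tuple[str, dict]], query: str) -> str:
    """Zero-shot when `examples` is empty; one- or few-shot otherwise."""
    parts = [task]
    for order, output in examples:
        parts.append(f'Input: "{order}"\nOutput: {json.dumps(output)}')
    parts.append(f'Input: "{query}"\nOutput:')
    return "\n\n".join(parts)

def matches_schema(raw: str, keys: set[str]) -> bool:
    """Check that a model reply parses as JSON and uses exactly the expected keys."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and set(data) == keys

prompt = few_shot_prompt(
    "Parse a customer's pizza order into valid JSON.",
    [("A large pizza with tomato sauce and mushrooms.",  # hypothetical example order
      {"size": "large", "sauce": "tomato sauce", "toppings": ["mushrooms"]})],
    "I want a small pizza with cheese, tomato sauce, and pepperoni.",
)
print(prompt)

# The two zero-shot outputs from the slides: only the second fits the example's schema.
expected = {"size", "sauce", "toppings"}
output_1 = '{"size": "small", "type": "pizza", "ingredients": ["cheese", "tomato sauce", "pepperoni"]}'
output_2 = '{"size": "small", "sauce": "tomato sauce", "toppings": ["cheese", "pepperoni"]}'
print(matches_schema(output_1, expected), matches_schema(output_2, expected))
```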

You are an experienced software engineer focused on security and performance. You're fluent in PHP, JS, WordPress, and React. You're patient, thorough, and you ask questions to clarify any points when needed.

The requirement is:

2. Role prompting

You are an experienced software engineer focused on security and performance. You're fluent in PHP, JS, WordPress, and React. You're patient, thorough, and you ask questions to clarify any points when needed.

You are working on a commercial WordPress plugin for syncing data across sites in a Multisite installation.

The requirement is:

3. Contextual prompting

You are an experienced software engineer focused on security and performance. You're fluent in PHP, JS, WordPress, and React. You're patient, thorough, and you ask questions to clarify any points when needed.

You are working on a commercial WordPress plugin for syncing data across sites in a Multisite installation.

The requirement is: X

Let's think step by step.

4. Chain of Thought (CoT)

You are an experienced software engineer focused on security and performance. You're fluent in PHP, JS, WordPress, and React. You're patient, thorough, and you ask questions to clarify any points when needed.

You are working on a commercial WordPress plugin for syncing data across sites in a Multisite installation.

The requirement is: X

Let's think step by step. Break down the problem into smaller points:

Point 1:

Point 2:

Analysis: a) Maintainability, b) Security, c) Performance

Solutions: a) Solution 1 (pros/cons), b) Solution 2 (pros/cons)

4. CoT + single-shot

Three experienced software engineers focused on security and performance. They're fluent in PHP, JS, WordPress, and React. They're patient, thorough, and they ask questions to clarify any points when needed. They discuss the following requirement, trying to solve it step by step, and make sure the result is correct.

The requirement is: X

5. Tree of Thought (ToT)

Three experienced software engineers focused on security and performance. They're fluent in PHP, JS, WordPress, and React. They're patient, thorough, and they ask questions to clarify any points when needed. They discuss the following requirement with a panel discussion, trying to solve it step by step, and make sure the result is correct and avoid penalty.

The requirement is: X

6. Panel GPT (ToT)
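The Tree of Thought and Panel GPT prompts above ask the model to simulate exploring several lines of reasoning in one reply. The original Tree of Thoughts technique runs that exploration as an explicit search: propose candidate next steps, score them, keep the best few, repeat. The sketch below shows only the search skeleton, with toy stand-ins for both model calls (`propose` and `score` are hypothetical, and the puzzle is invented): reach 10 from 1 using `+3` and `*2` in three steps.

```python
from typing import Optional

# Toy stand-ins for the two LLM calls: propose() would ask the model for candidate
# next steps, score() would ask it to rate how promising a partial solution looks.
OPS = {"+3": lambda v: v + 3, "*2": lambda v: v * 2}

def propose(value: int) -> list[tuple[str, int]]:
    return [(name, op(value)) for name, op in OPS.items()]

def score(value: int, target: int) -> int:
    return -abs(target - value)  # closer to the target is better

def tree_of_thought(start: int, target: int, depth: int, beam: int) -> Optional[list[str]]:
    """Breadth-first search over 'thoughts', keeping only the `beam` best states per level."""
    frontier: list[tuple[int, list[str]]] = [(start, [])]
    for _ in range(depth):
        candidates = [
            (new_value, steps + [name])
            for value, steps in frontier
            for name, new_value in propose(value)
        ]
        for value, steps in candidates:
            if value == target:
                return steps
        candidates.sort(key=lambda c: score(c[0], target), reverse=True)
        frontier = candidates[:beam]
    return None

print(tree_of_thought(1, 10, depth=3, beam=1))  # → None
print(tree_of_thought(1, 10, depth=3, beam=2))  # → ['+3', '+3', '+3']
```

With a beam of 1 the search commits to the single best-looking step (1 → 4 → 8) and misses the answer; keeping two branches alive finds it.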

How do I use any of that?

- Develop your persona(s) and add them to a global rule in your editor.

- Persist your working steps in global rules too (step-by-step thinking/CoT, one- or few-shot examples of how the AI should tackle your requests).

- Make a workflow / custom rule for techniques like ToT for analysis queries.

- Keep good project documentation for contextual awareness.

- Implement validation loops, so the model tests its own work and iterates until the desired outcome is reached (tests, CLI commands).

Tips
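A validation loop in its simplest form: generate, run a check, and feed the failure output into the next attempt. Here `fake_model` and `run_tests` are hypothetical stand-ins; in a real setup the check would be your test suite or a CLI command (linters, type checkers), and the model call would go through your editor or API.

```python
from typing import Callable

def validation_loop(
    generate: Callable[[str], str],
    check: Callable[[str], tuple[bool, str]],
    task: str,
    max_attempts: int = 3,
) -> tuple[str, int]:
    """Generate, validate, and feed failures back into the next attempt."""
    prompt = task
    for attempt in range(1, max_attempts + 1):
        candidate = generate(prompt)
        ok, feedback = check(candidate)
        if ok:
            return candidate, attempt
        # Append the failure (e.g. test output) so the next attempt can fix it.
        prompt = f"{task}\n\nYour previous attempt failed:\n{feedback}\nPlease fix it."
    raise RuntimeError(f"No passing candidate after {max_attempts} attempts")

# Hypothetical "model" that only gets it right once it sees feedback.
def fake_model(prompt: str) -> str:
    if "failed" in prompt:
        return "def add(a, b):\n    return a + b"
    return "def add(a, b):\n    return a - b"

# Hypothetical "test suite" for the generated function.
def run_tests(source: str) -> tuple[bool, str]:
    namespace: dict = {}
    exec(source, namespace)  # acceptable only because the source is our own fixture
    result = namespace["add"](2, 3)
    return result == 5, f"add(2, 3) returned {result}, expected 5"

code, attempts = validation_loop(fake_model, run_tests, "Write add(a, b).")
print(attempts)
```

The `max_attempts` cap matters: without it, a model that never passes would loop forever and keep spending tokens.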

Resources

Tips

Okay, what's next?

- Experiment: Try, track, train; it's an ongoing process.

- MDCs: Teach your AI how to work.

- MCPs: Level-up your AI with tools (reasoning, APIs, etc).

- Context: AI is useless without context (project, standards, task).

- PETs: Learn AI Language, bring its best.

- Plan: ALWAYS plan before execution, take the time.

- Prompts: Keep inventory of prompts (code review, planning, test).

- Test: Write tests, they're cheaper now!

- Review: ALWAYS review the code.

Final tips

- ☑️ Choose an editor and model

- ☑️ Set up MCPs (search, reasoning, services)

- ☑️ Set up your rules and prompts (standards/code styles, workflows)

- ☑️ Document your project (history, codebase, roadmap, etc)

- ☑️ Keep inventory of prompts (planning, code review, testing, etc)

- ☑️ Learn and experiment with PETs

- ☑️ Keep up to date (newsletters)

Checklist

Thank You!

Questions?

Shadi Sharaf

/shadyvb