Lecture 12: Non-parametric Models

Shen Shen

May 2, 2025

11am, Room 10-250

Intro to Machine Learning

Outline

- Non-parametric models overview

- Supervised learning non-parametric

- \(k\)-nearest neighbor

- Decision Tree (read a tree, BuildTree, Bagging)

- Unsupervised learning non-parametric

- \(k\)-means clustering

Predictive performance and beyond

There’s more to machine learning than predictive performance

“The choices were made to simplify the exposition and implementation: methods need to be transparent to be adopted as part of the election process and to inspire public confidence. [An alternative] might be more efficient, but because of its complexity would likely meet resistance from elections officials and voting rights groups.” —Philip Stark, 2008

Even if all we care about is vanilla predictive performance, we should still care about non-parametric models:

- they are usually fast to implement and train, and often very effective.

- they are often a good baseline (especially when the data doesn't involve complex structures such as images or language).

Non-parametric models

- there are still parameters to be learned to build a hypothesis/model.

- the model/hypothesis just does not have a fixed parameterization (e.g. even the number of parameters can change).

\(k\)-nearest neighbor

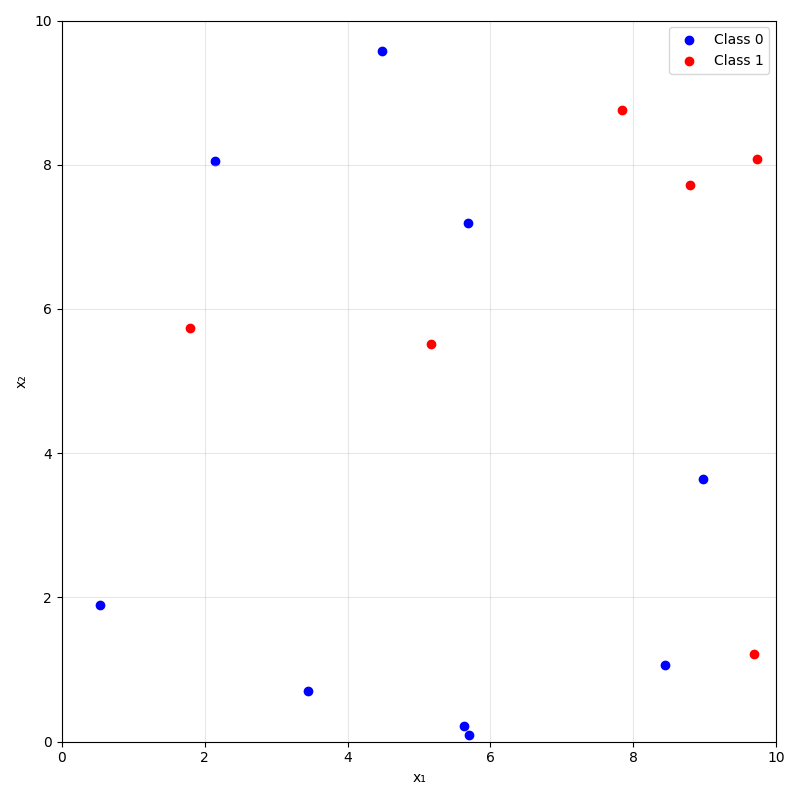

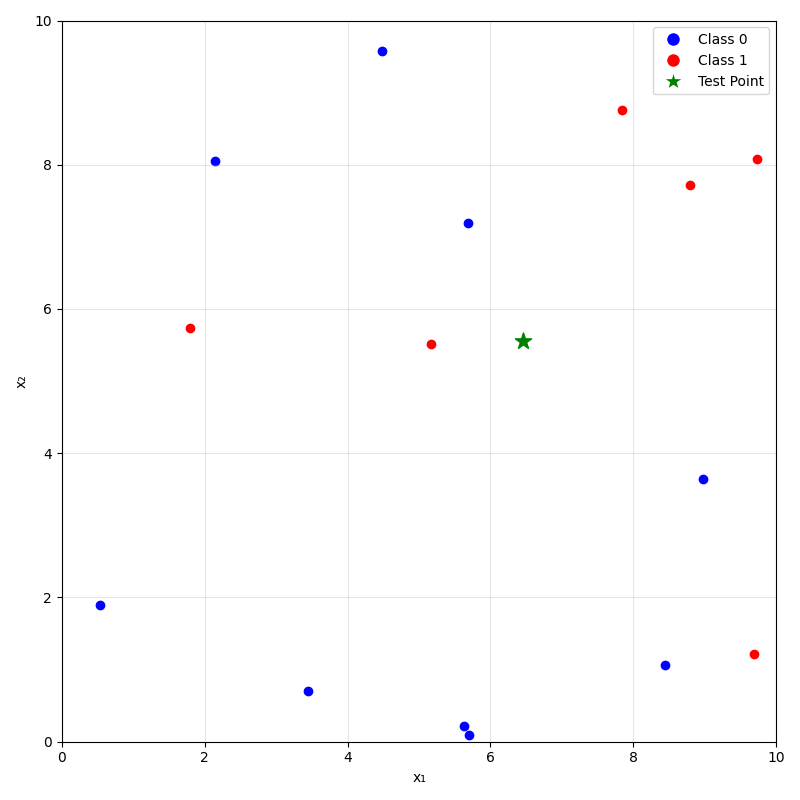

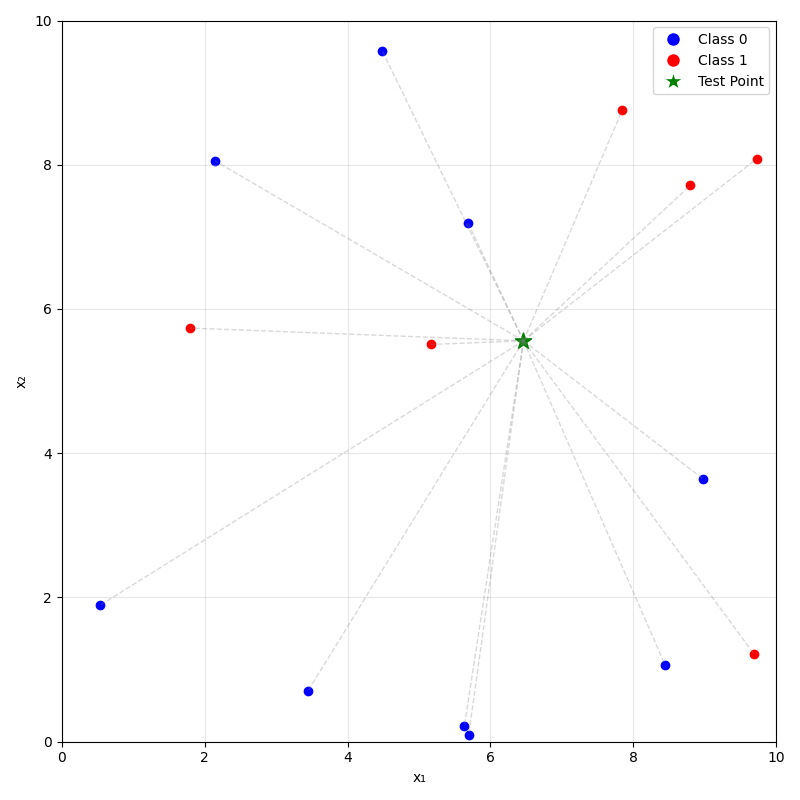

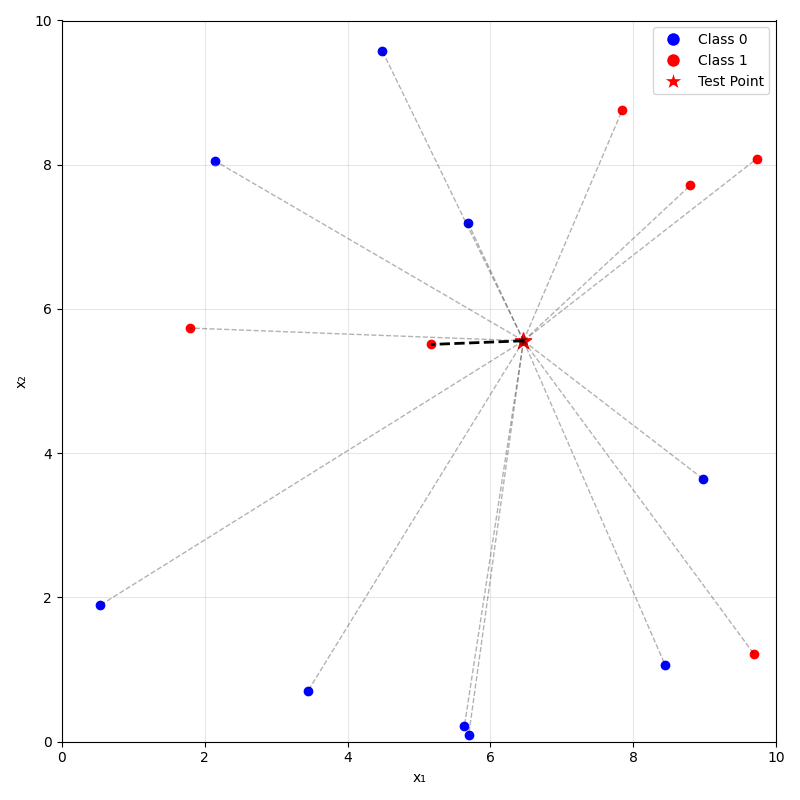

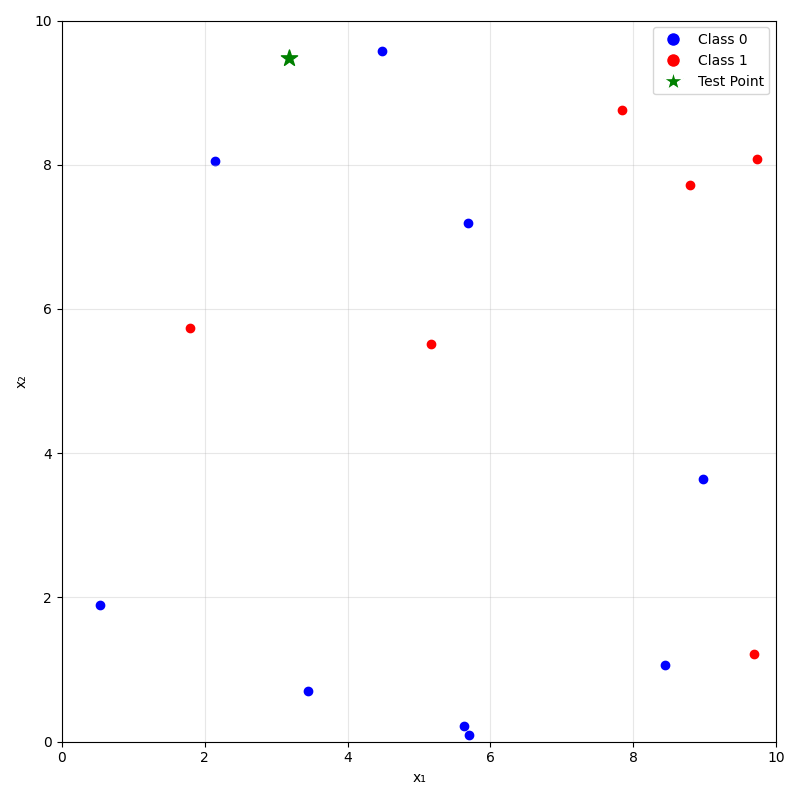

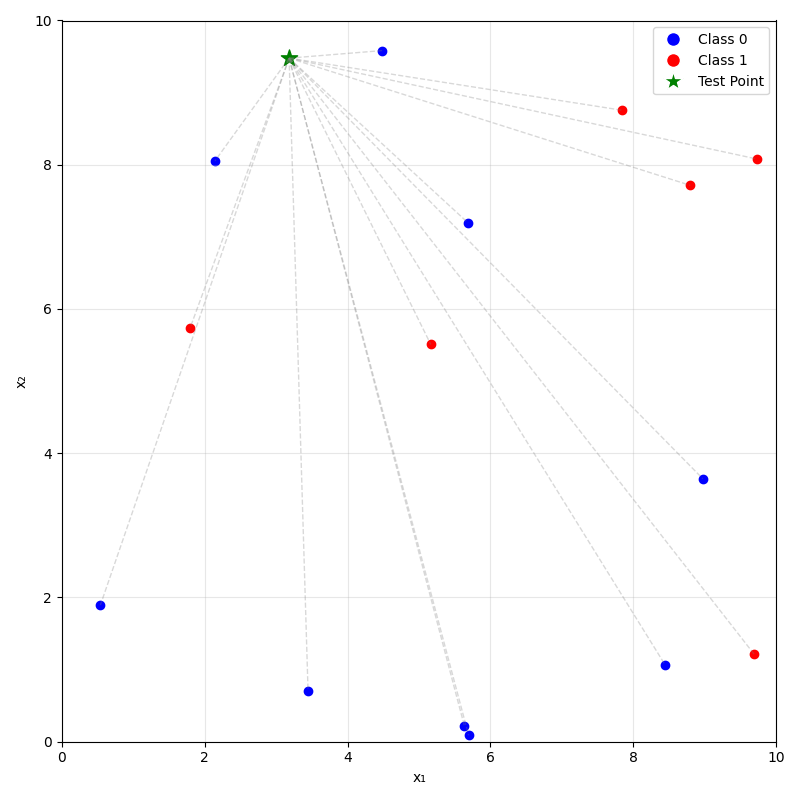

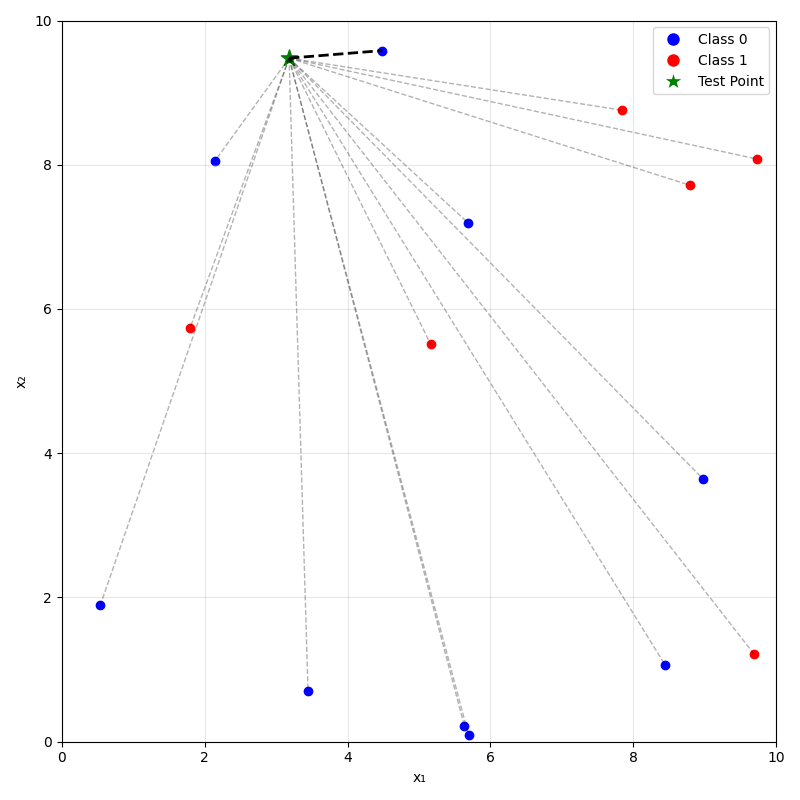

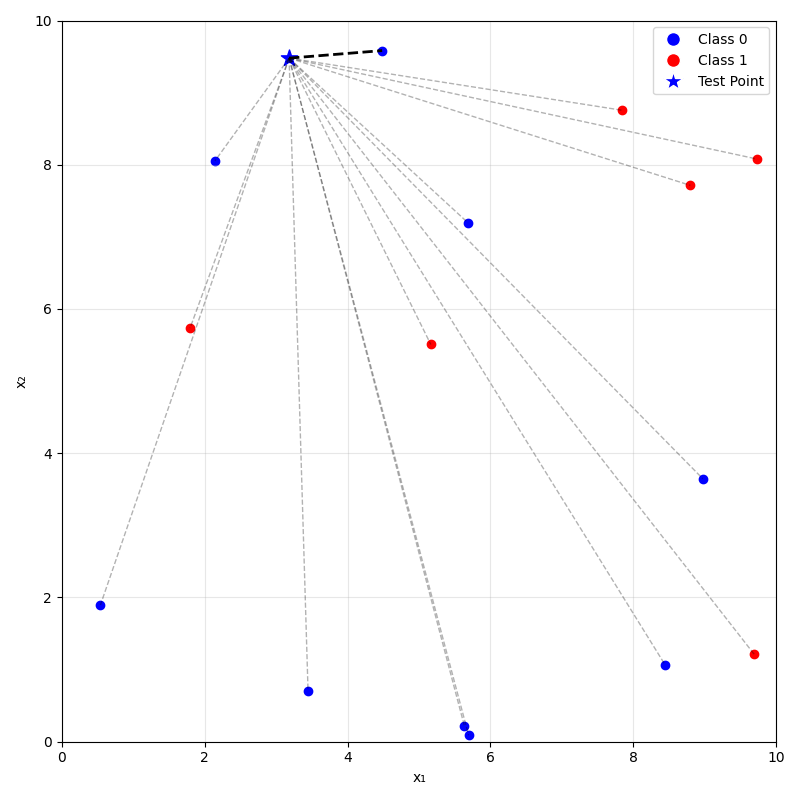

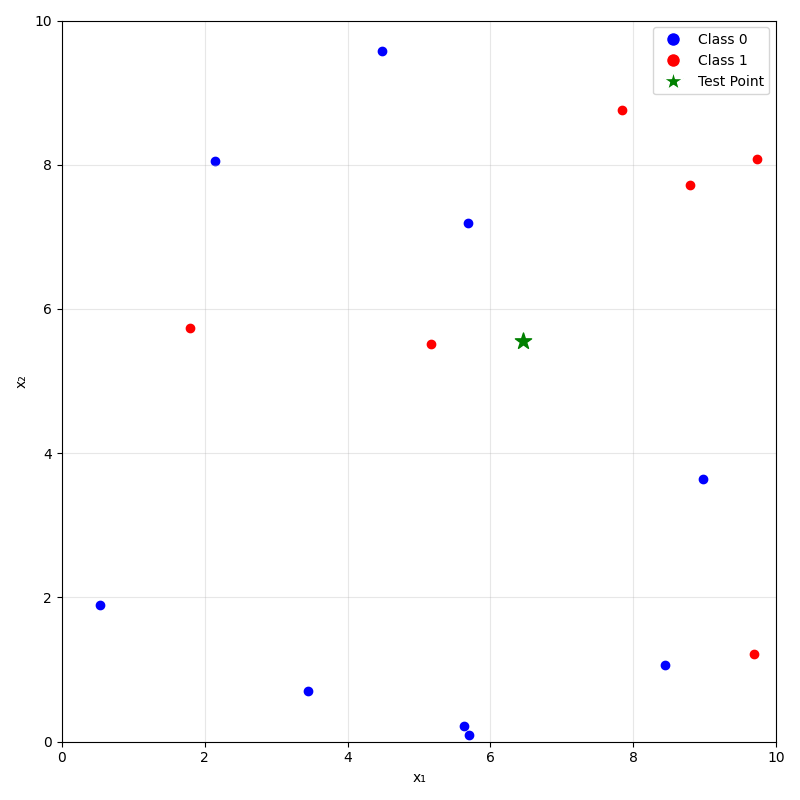

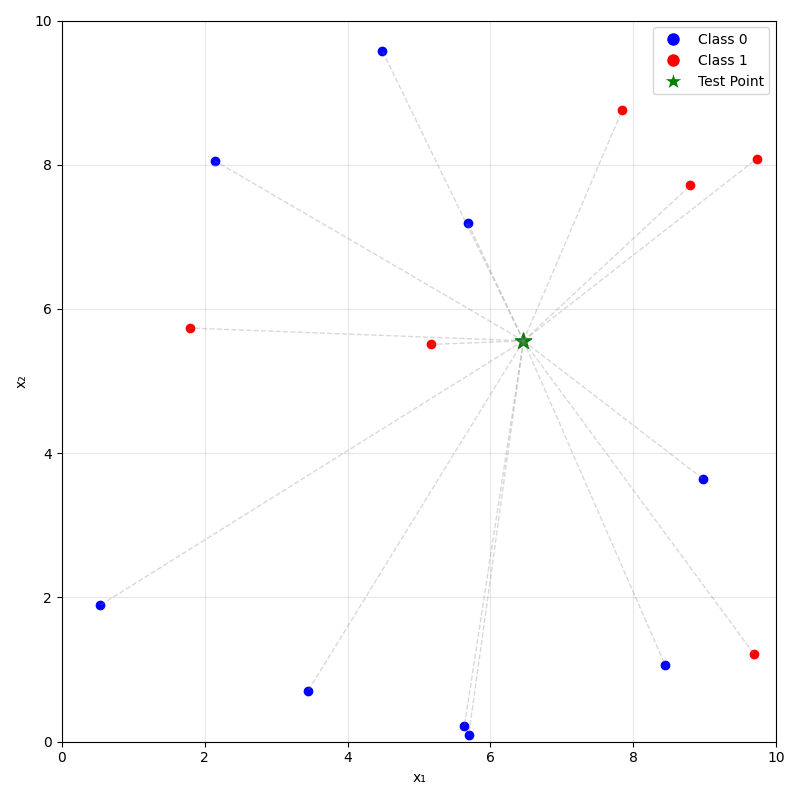

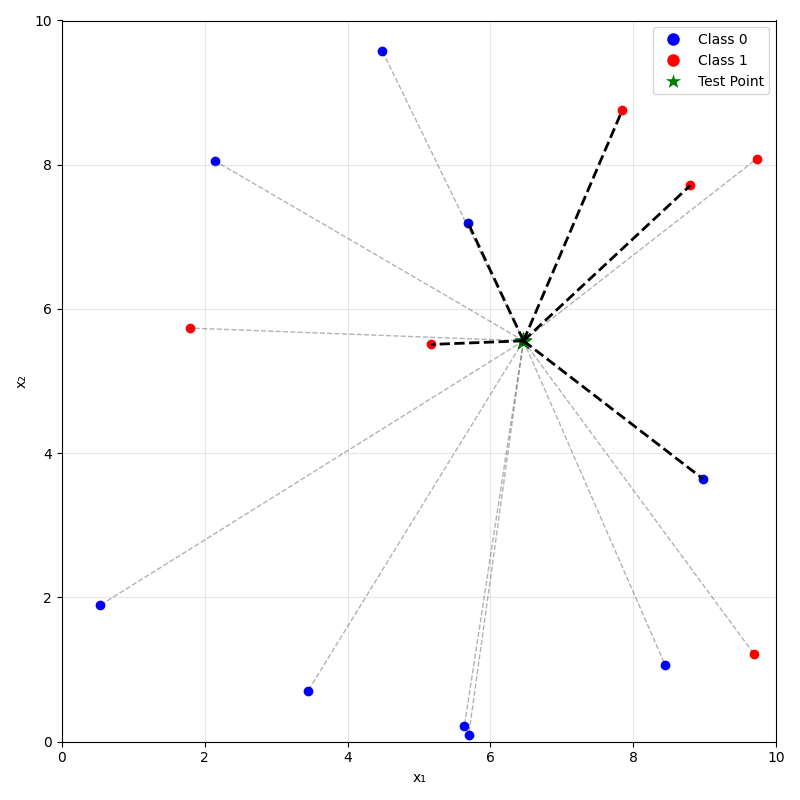

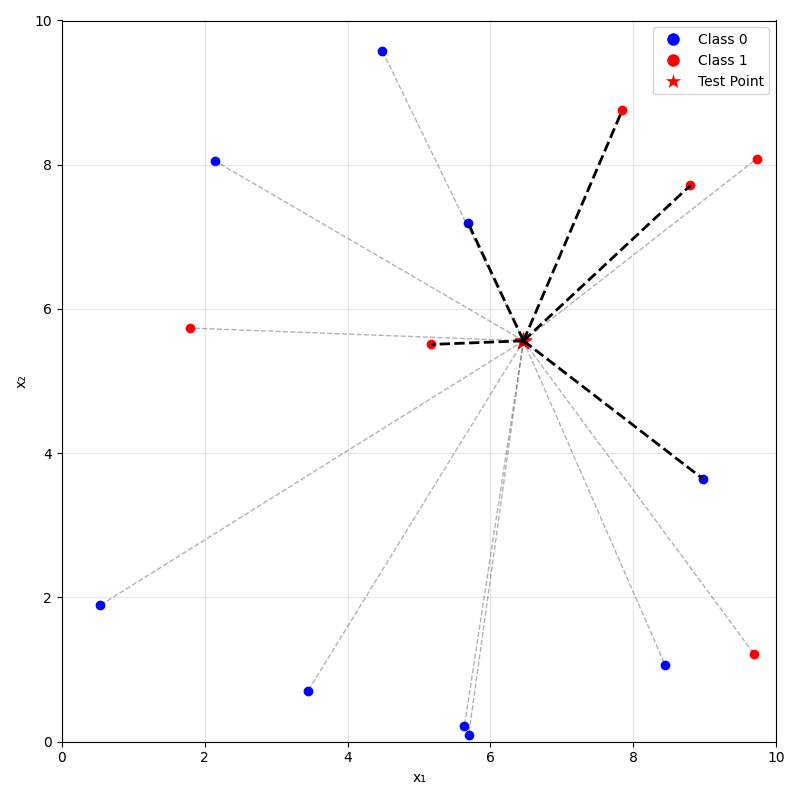

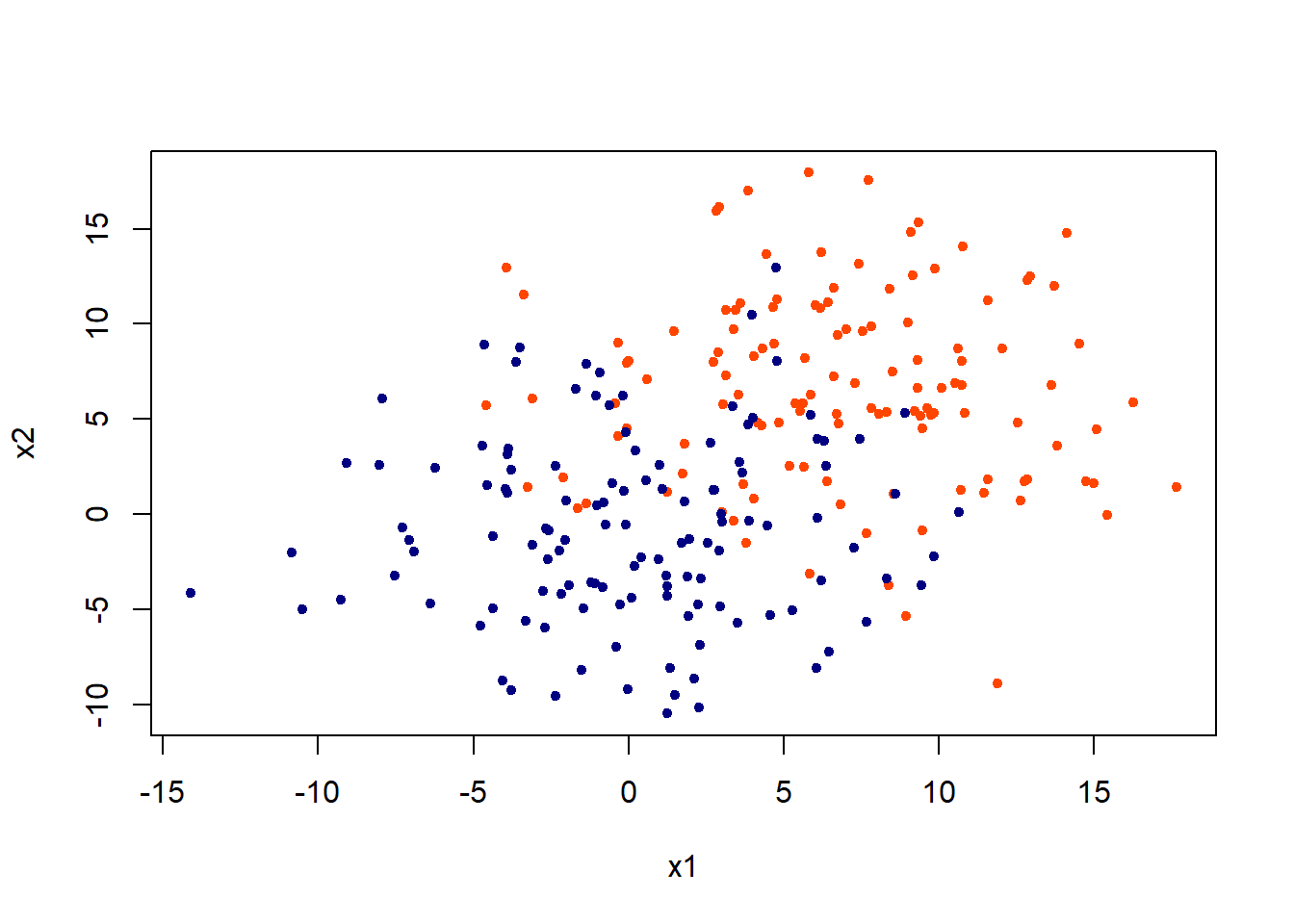

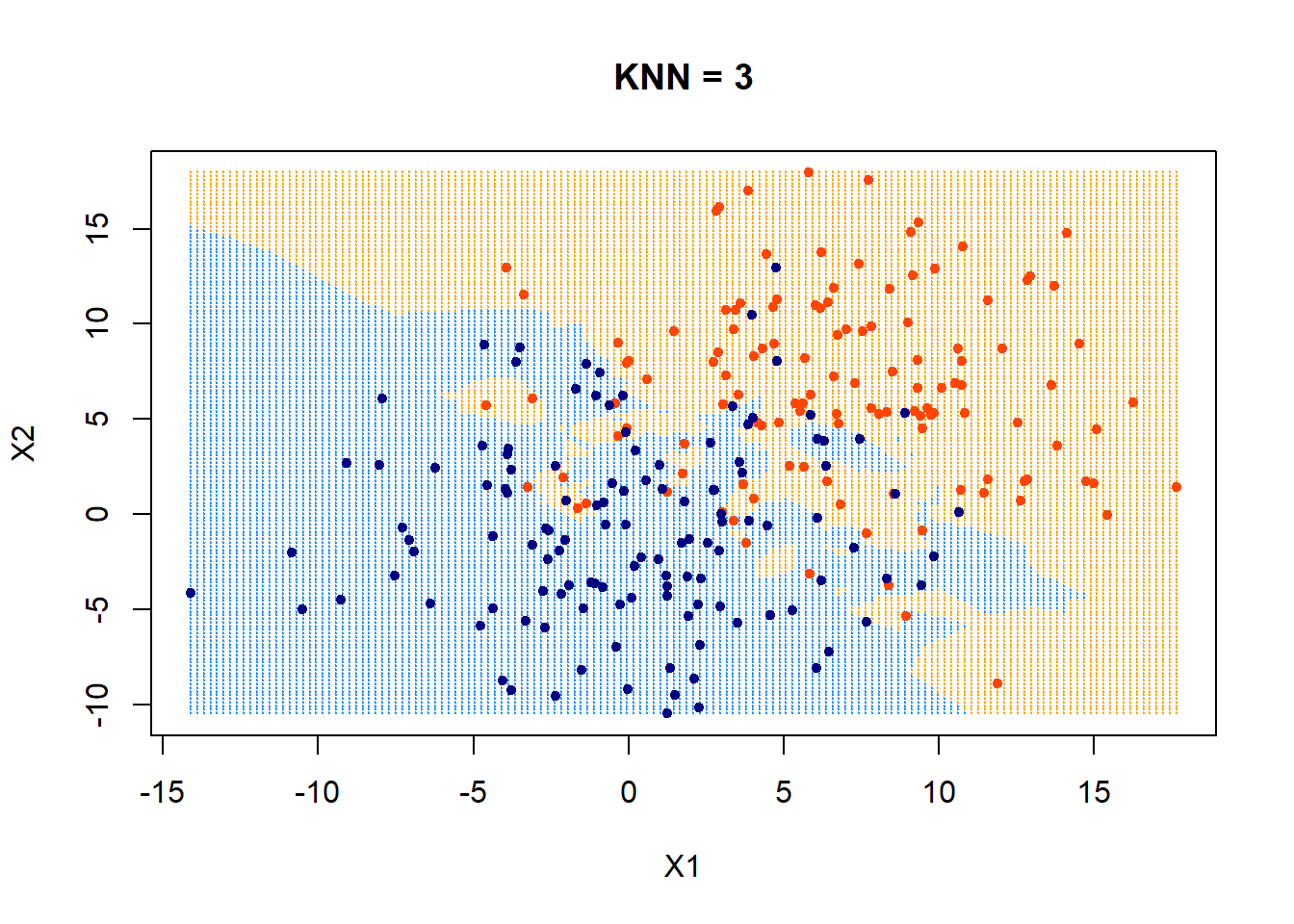

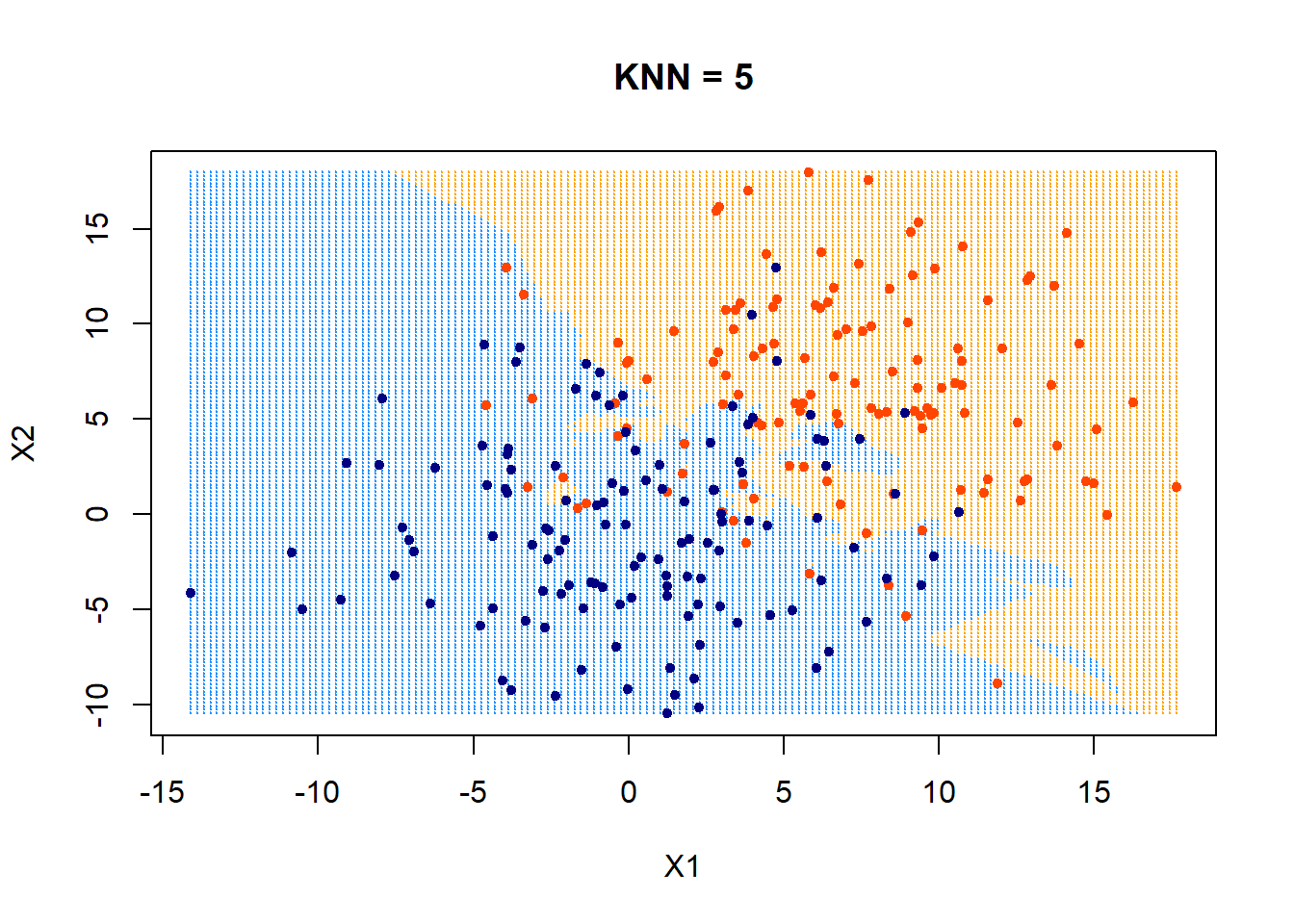

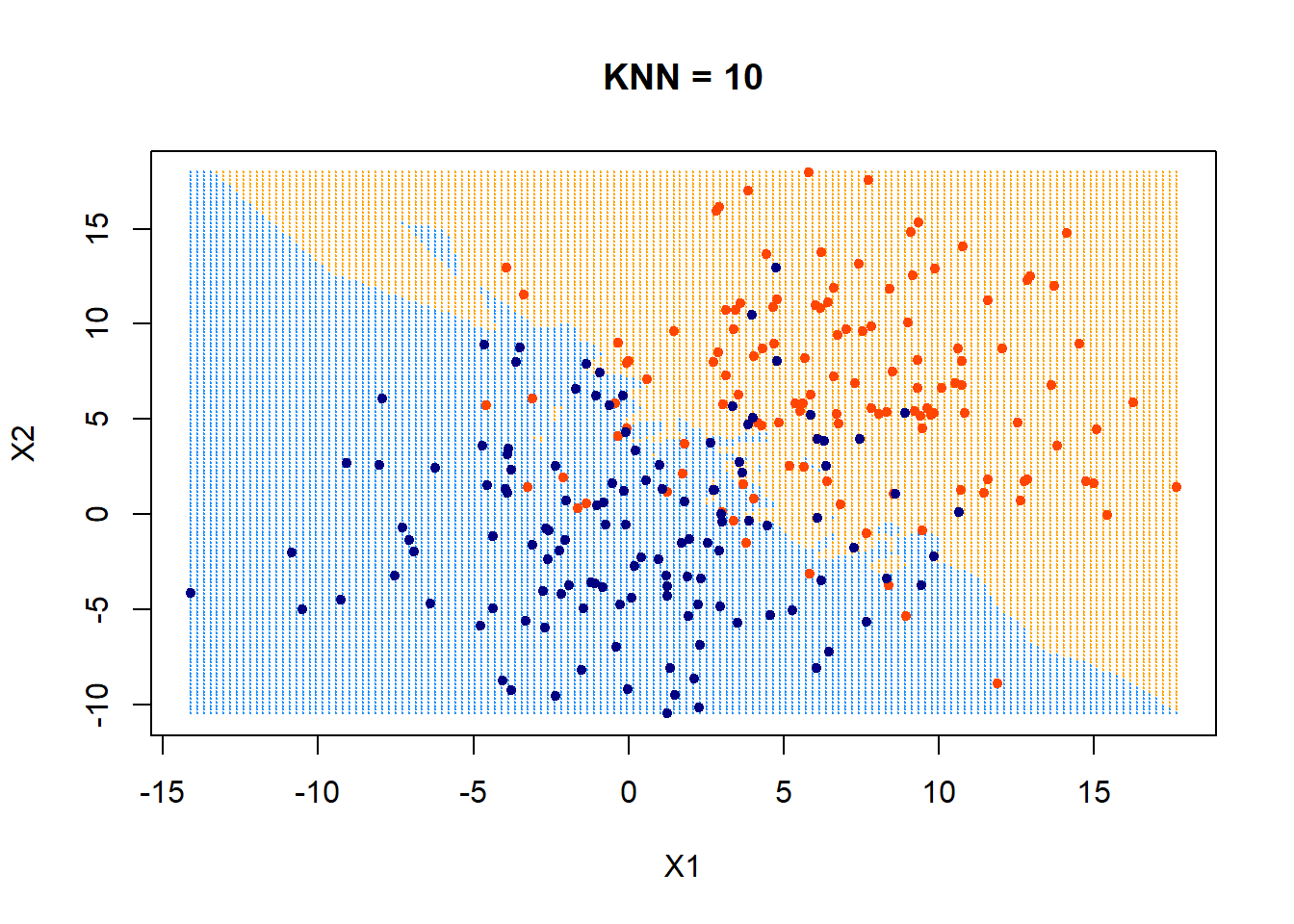

Training: none (or rather: memorize the entire training data)

Predicting (inference, testing):

- for a new data point \(x_{\text{new}}\) do:

- find the \(k\) nearest points from the training data to \(x_{\text{new}}\)

- For classification: predict label \(\hat{y}_{\text{new}}\) by taking a majority vote of the \(k\) neighbors' labels

- For regression: predict label \(\hat{y}_{\text{new}}\) by taking an average over the \(k\) neighbors' labels

Choices to make:

- Hyper-parameter: \(k\)

- Distance metric (typically Euclidean or Manhattan distance)

- A tie-breaking scheme (typically at random)
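The prediction procedure above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function names are hypothetical, the distance is Euclidean, and ties in the vote are broken deterministically (the label encountered first among the nearest neighbors wins) instead of at random.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Euclidean distance between two points given as sequences of numbers."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def k_nearest(train_x, x_new, k, dist=euclidean):
    """Indices of the k training points closest to x_new."""
    order = sorted(range(len(train_x)), key=lambda i: dist(train_x[i], x_new))
    return order[:k]

def knn_classify(train_x, train_y, x_new, k, dist=euclidean):
    """Majority vote over the labels of the k nearest neighbors."""
    neighbors = k_nearest(train_x, x_new, k, dist)
    votes = Counter(train_y[i] for i in neighbors)
    # Assumption: on a tied vote, the label seen first among the neighbors wins.
    return votes.most_common(1)[0][0]

def knn_regress(train_x, train_y, x_new, k, dist=euclidean):
    """Average of the labels of the k nearest neighbors."""
    neighbors = k_nearest(train_x, x_new, k, dist)
    return sum(train_y[i] for i in neighbors) / len(neighbors)

# Hypothetical toy data: three points near the origin, two far away.
train_x = [(0, 0), (0, 1), (1, 0), (5, 5), (6, 5)]
class_labels = [-1, -1, -1, 1, 1]
reg_labels = [1.0, 2.0, 3.0, 10.0, 12.0]

print(knn_classify(train_x, class_labels, (0.2, 0.1), k=3))
print(knn_regress(train_x, reg_labels, (0.2, 0.1), k=3))
```

Note that "training" here is only storing `train_x` and the labels; all the work happens at prediction time.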

\(k=1\)

\(k=5\)

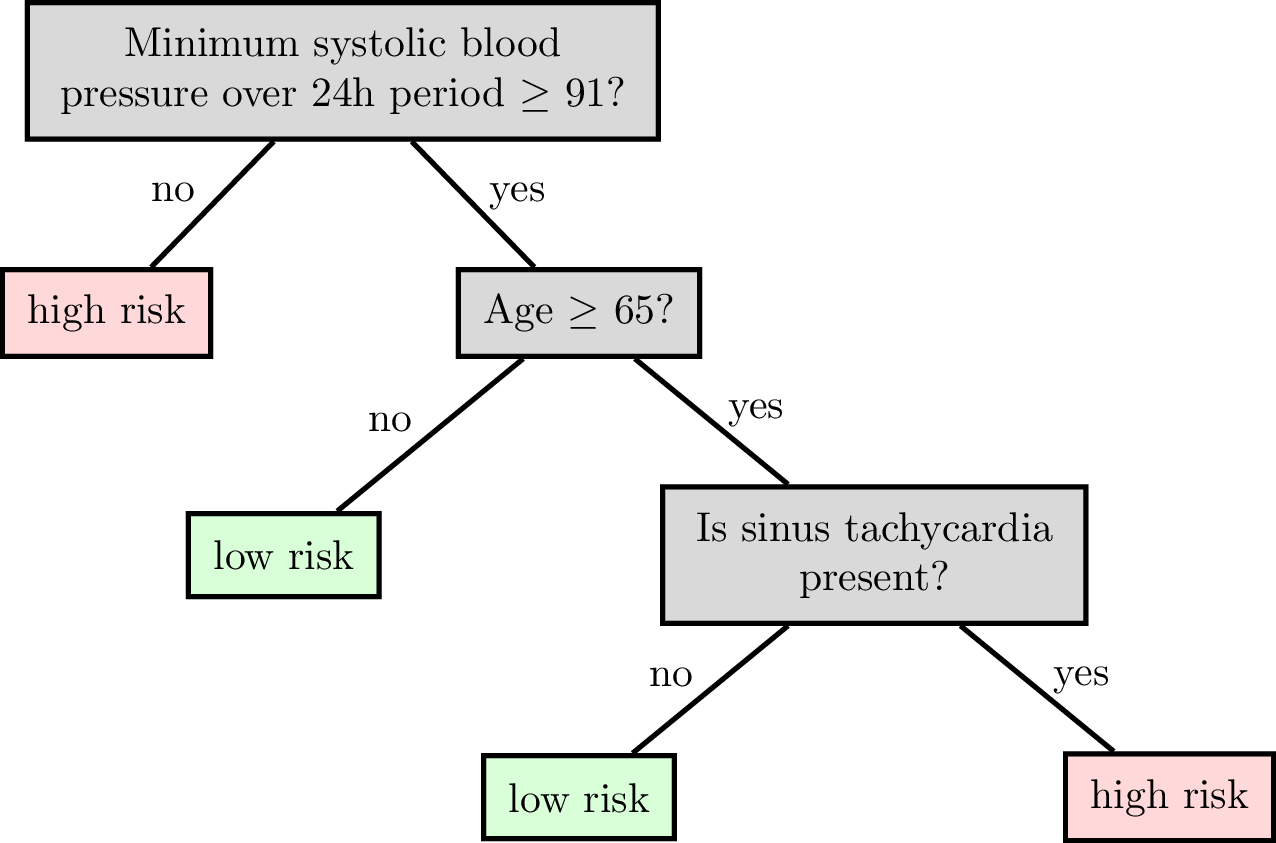

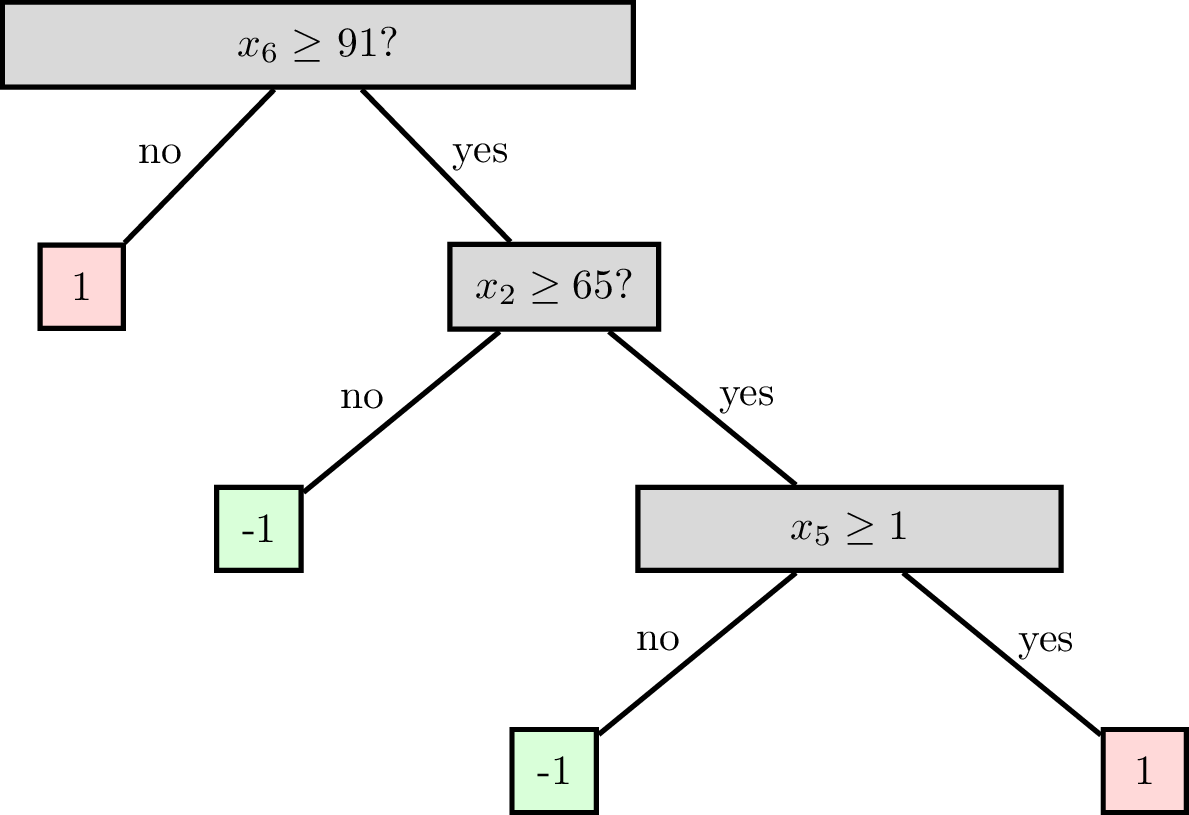

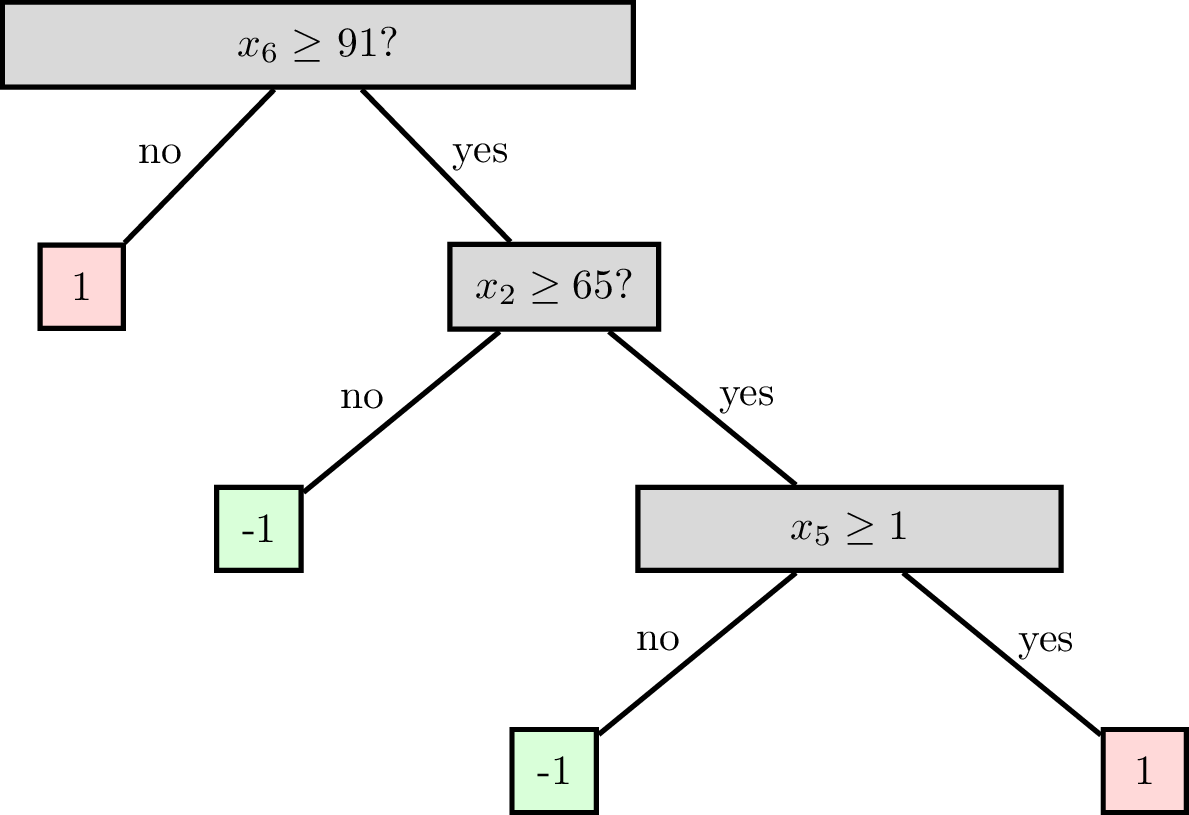

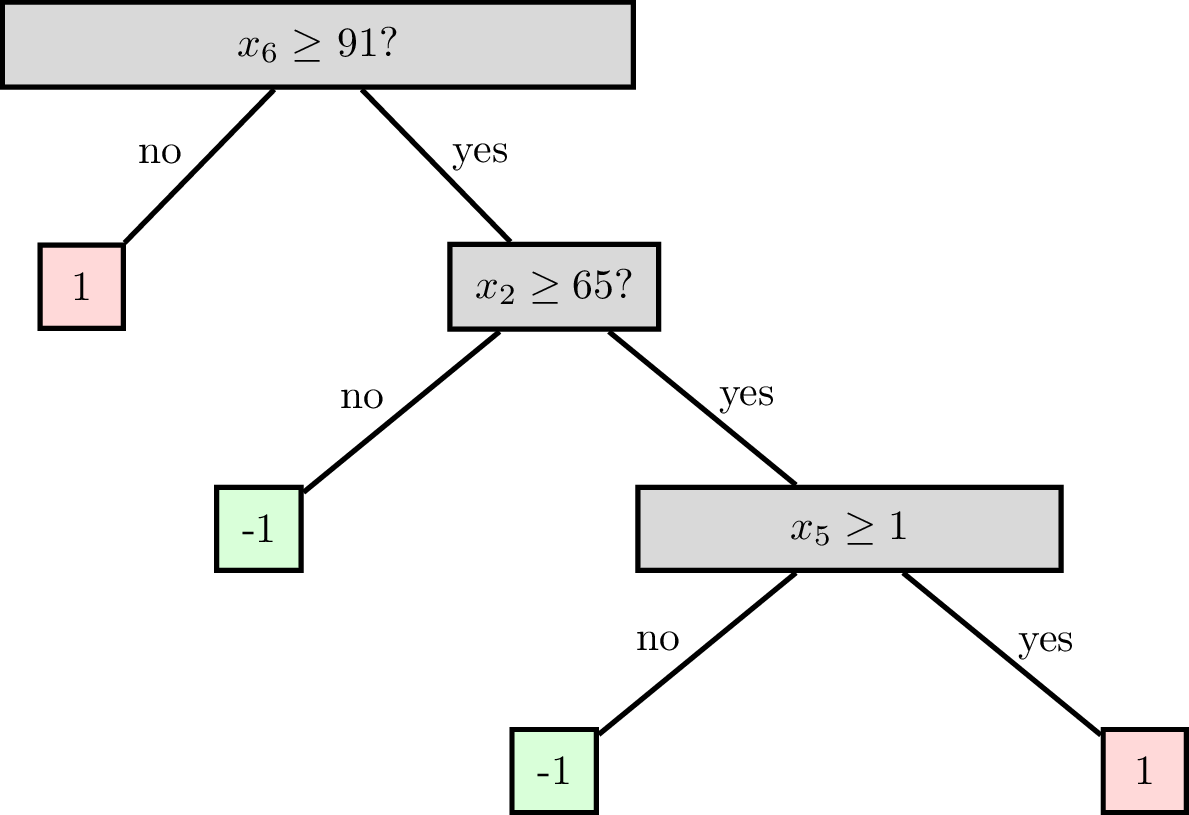

Reading a classification decision tree

features:

\(x_1\) : date

\(x_2\) : age

\(x_3\) : height

\(x_4\) : weight

\(x_5\) : sinus tachycardia?

\(x_6\) : min systolic bp, 24h

\(x_7\) : latest diastolic bp

labels \(y\) :

1: high risk

-1: low risk

Decision tree terminology

- Node (root)

- Node (internal)

- Leaf (terminal)

- Split dimension

- Split value

A node can be specified by `Node(split_dim, split_value, left_child, right_child)`

A leaf can be specified by `Leaf(leaf_value)`
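To make the `Node` / `Leaf` description concrete, here is a small Python sketch of the two records and of how a tree is read at prediction time. The field names follow the terminology above; the convention that the left child holds points with \(x_j < s\) and the right child holds points with \(x_j \geq s\) is taken from the BuildTree pseudocode later in the lecture. The example tree and its numbers are hypothetical, and feature indices are 0-based in the code.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Leaf:
    leaf_value: float          # prediction returned for every point reaching this leaf

@dataclass
class Node:
    split_dim: int             # index j of the feature being tested (0-based here)
    split_value: float         # threshold s
    left_child: "Tree"         # followed when x[split_dim] <  split_value
    right_child: "Tree"        # followed when x[split_dim] >= split_value

Tree = Union[Node, Leaf]

def predict(tree, x):
    """Walk from the root to a leaf and return that leaf's value."""
    while isinstance(tree, Node):
        if x[tree.split_dim] >= tree.split_value:
            tree = tree.right_child
        else:
            tree = tree.left_child
    return tree.leaf_value

# Hypothetical tree over (temperature, precipitation), not the one in the figures.
tree = Node(1, 0.3,
            Node(0, 20.0, Leaf(3.0), Leaf(5.0)),
            Leaf(0.0))

print(predict(tree, (25.0, 0.0)))   # low precipitation, warm
print(predict(tree, (10.0, 0.5)))   # high precipitation
```

Each leaf corresponds to one axis-aligned box in feature space, since every test on the path compares a single feature with a threshold.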

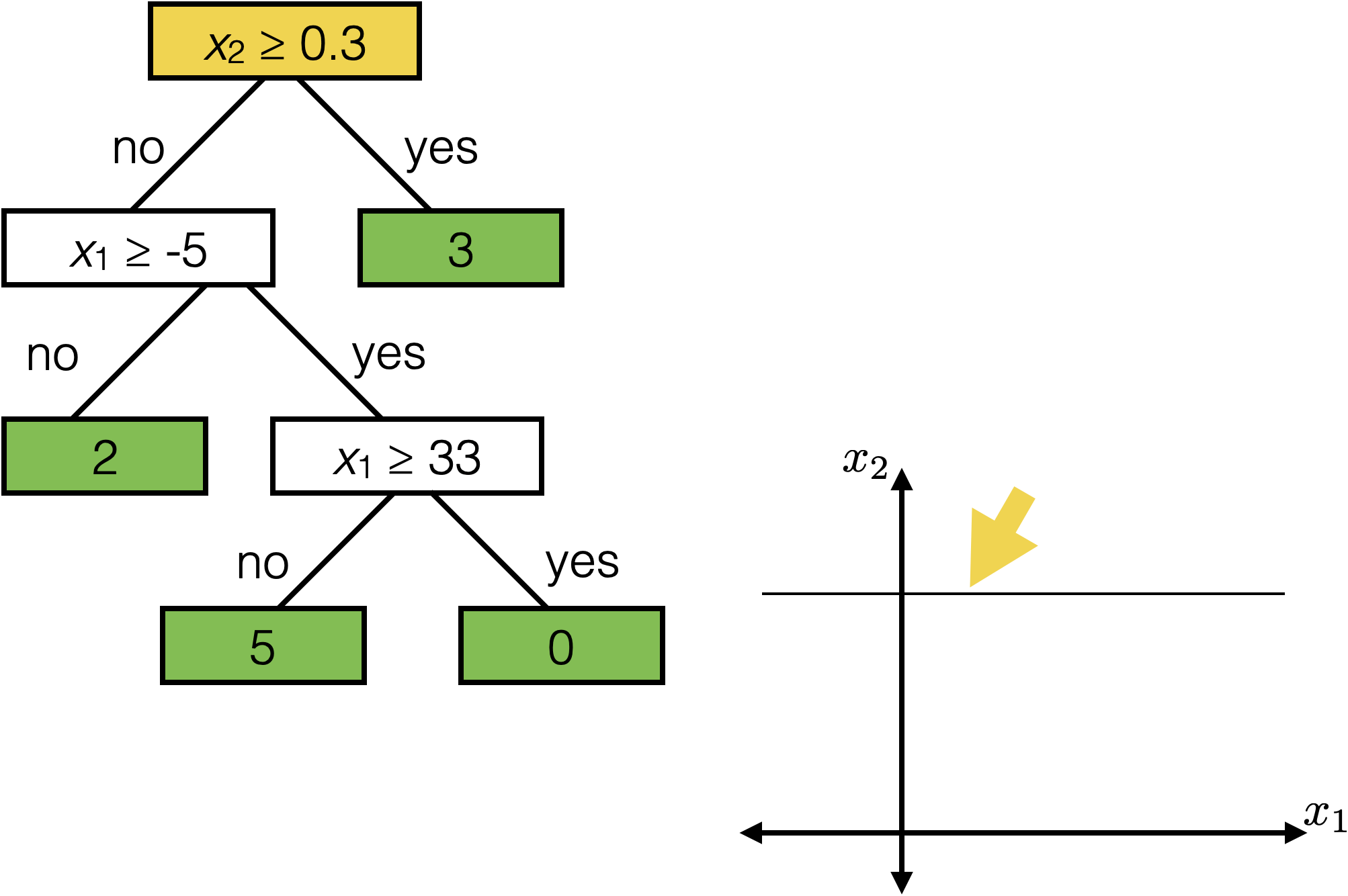

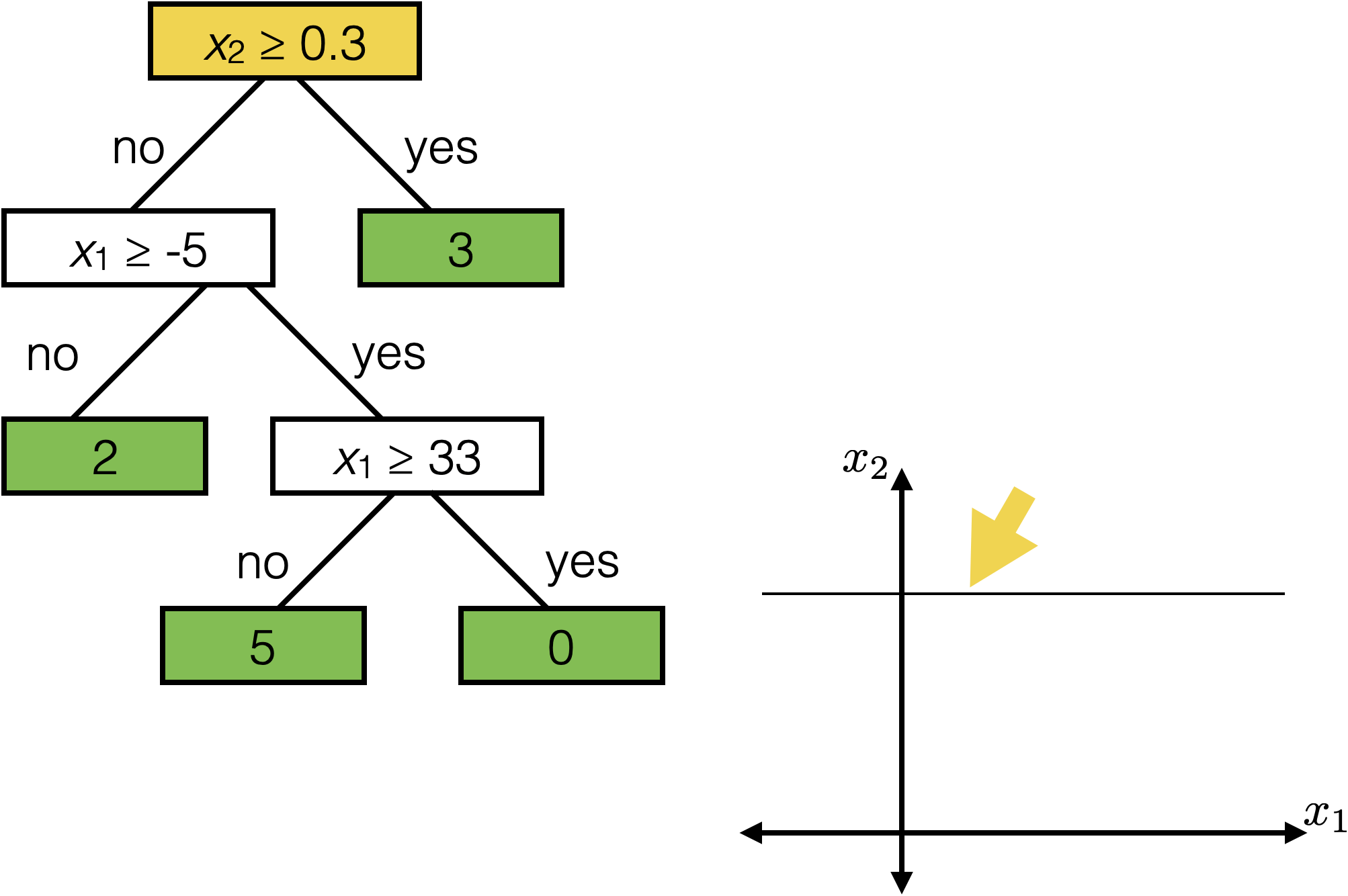

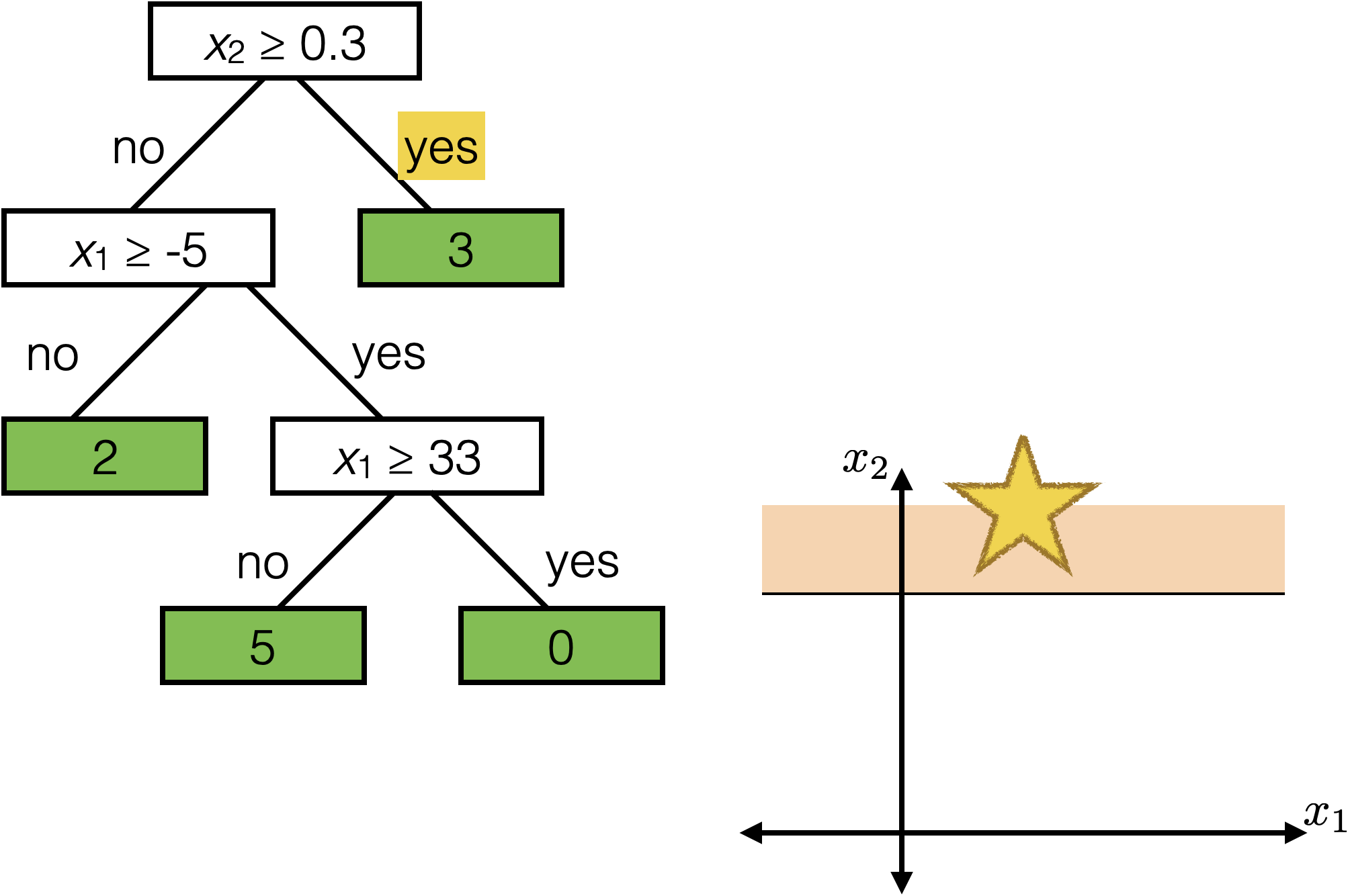

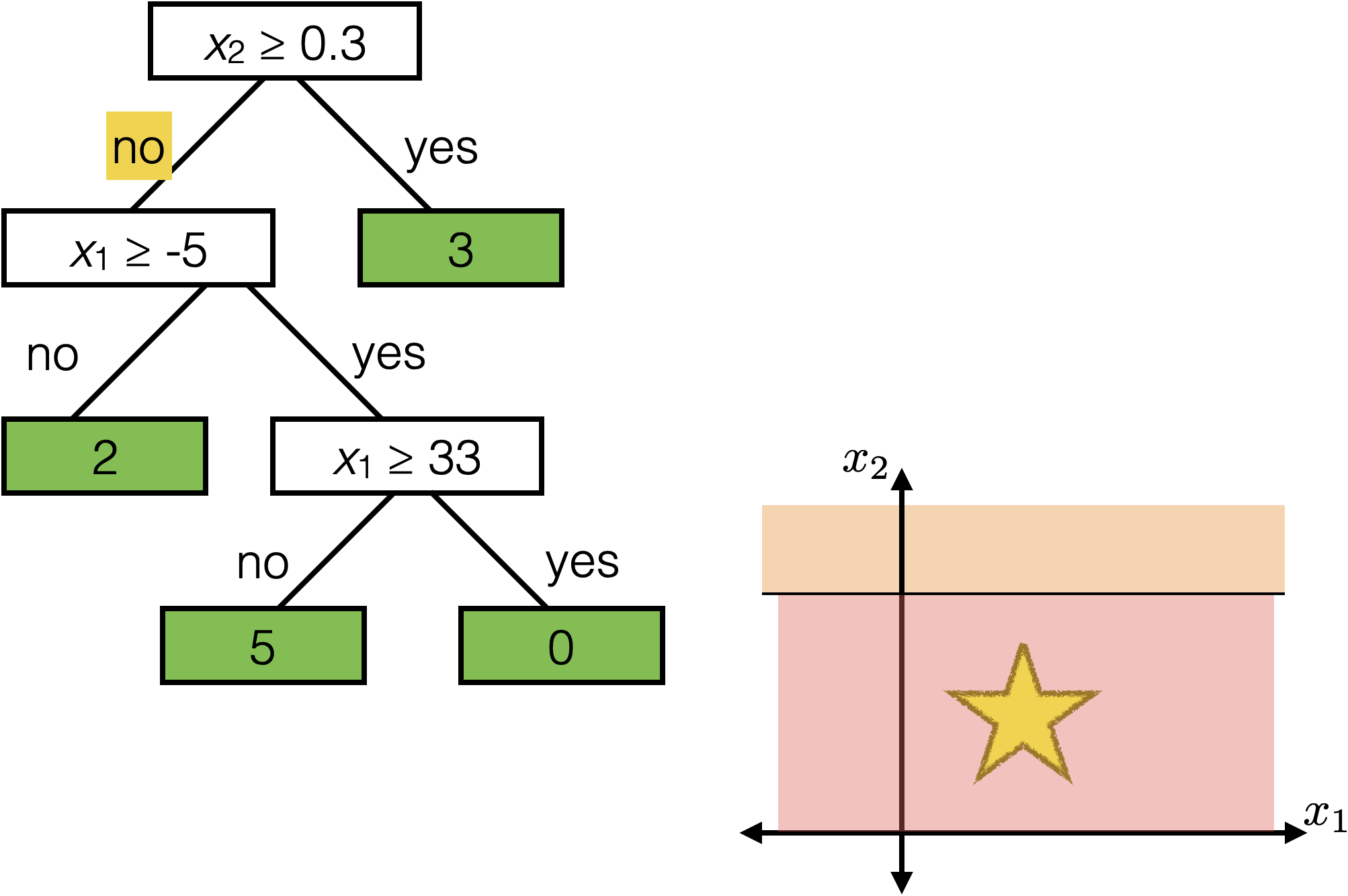

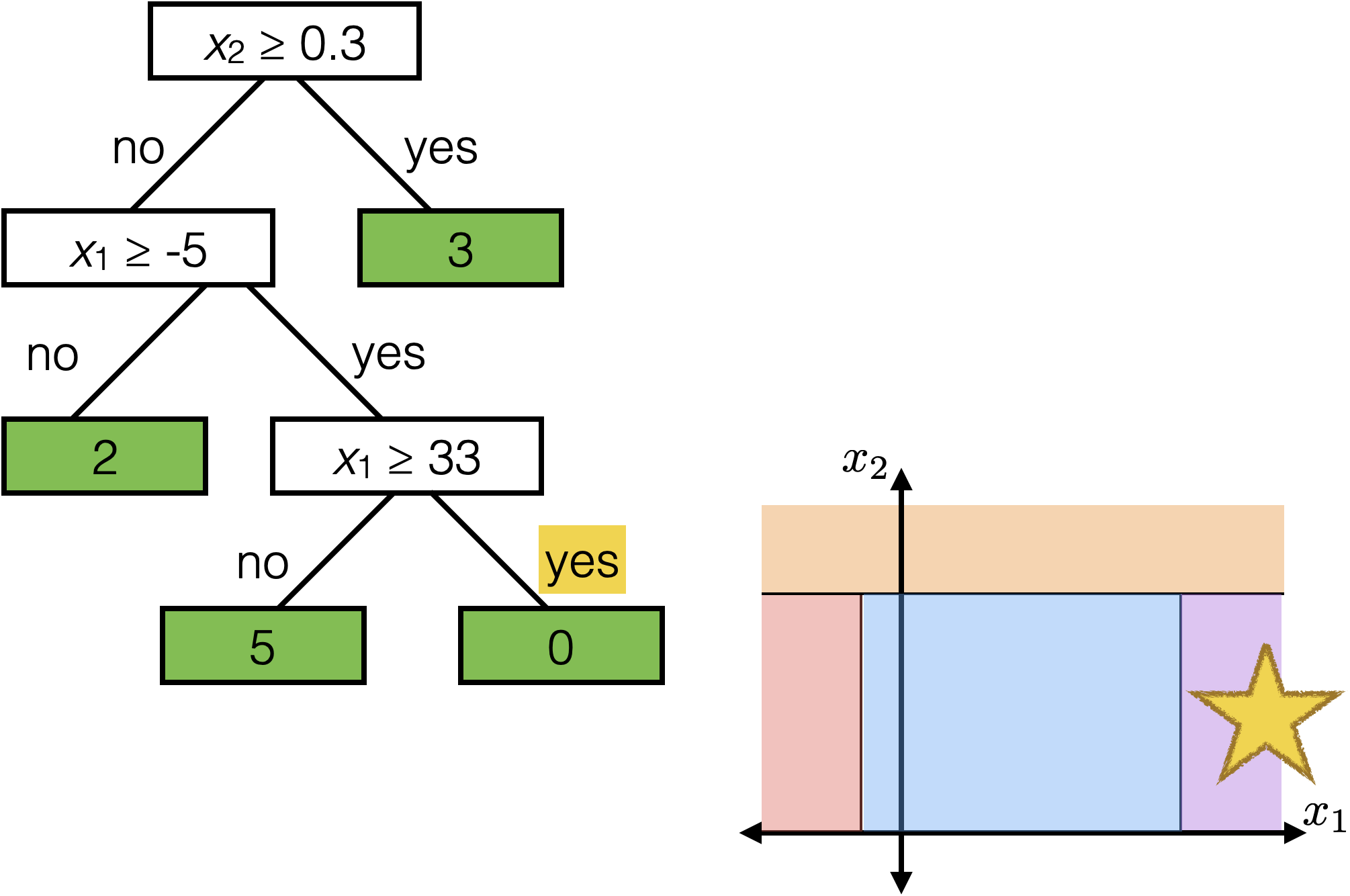

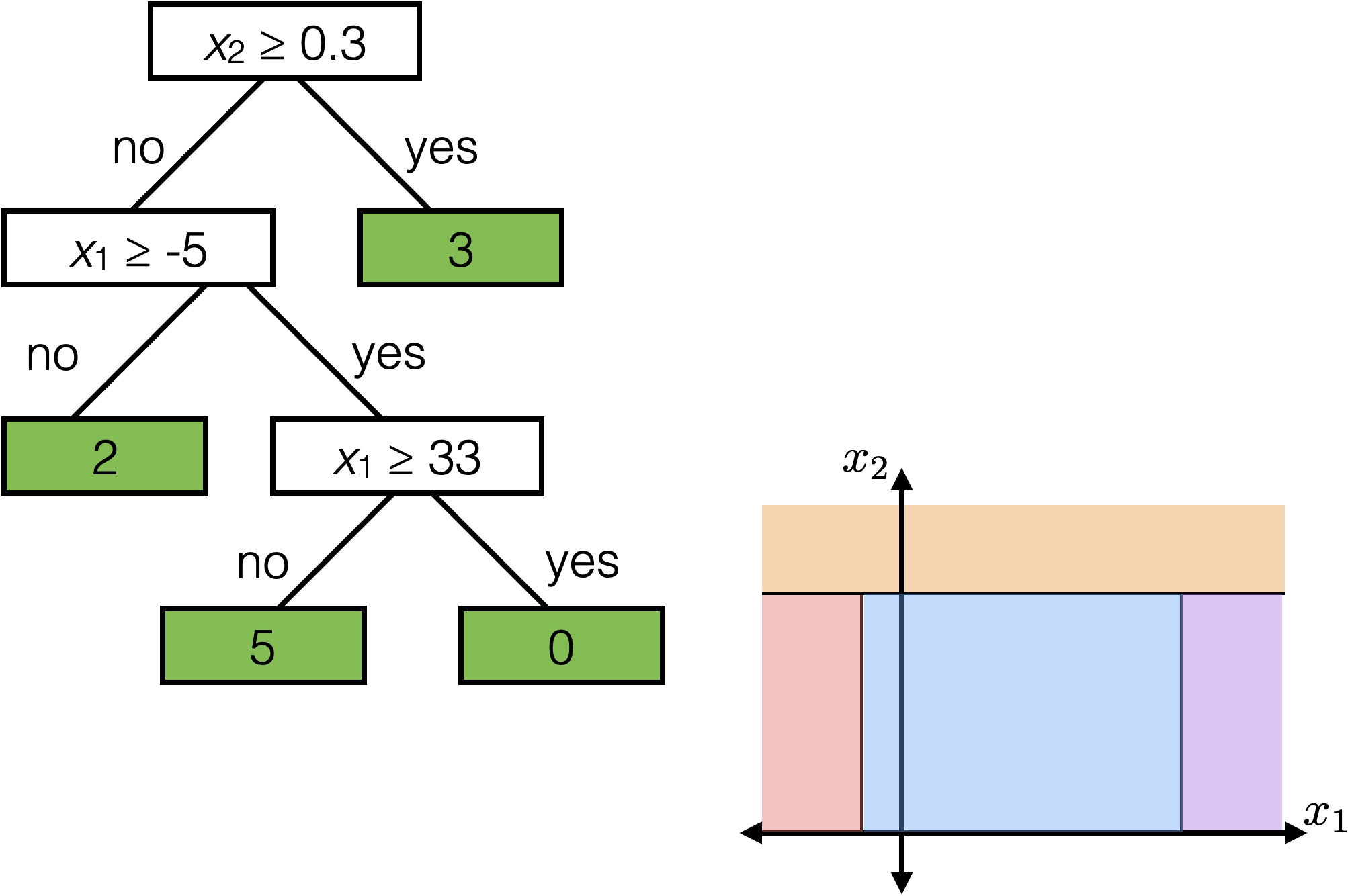

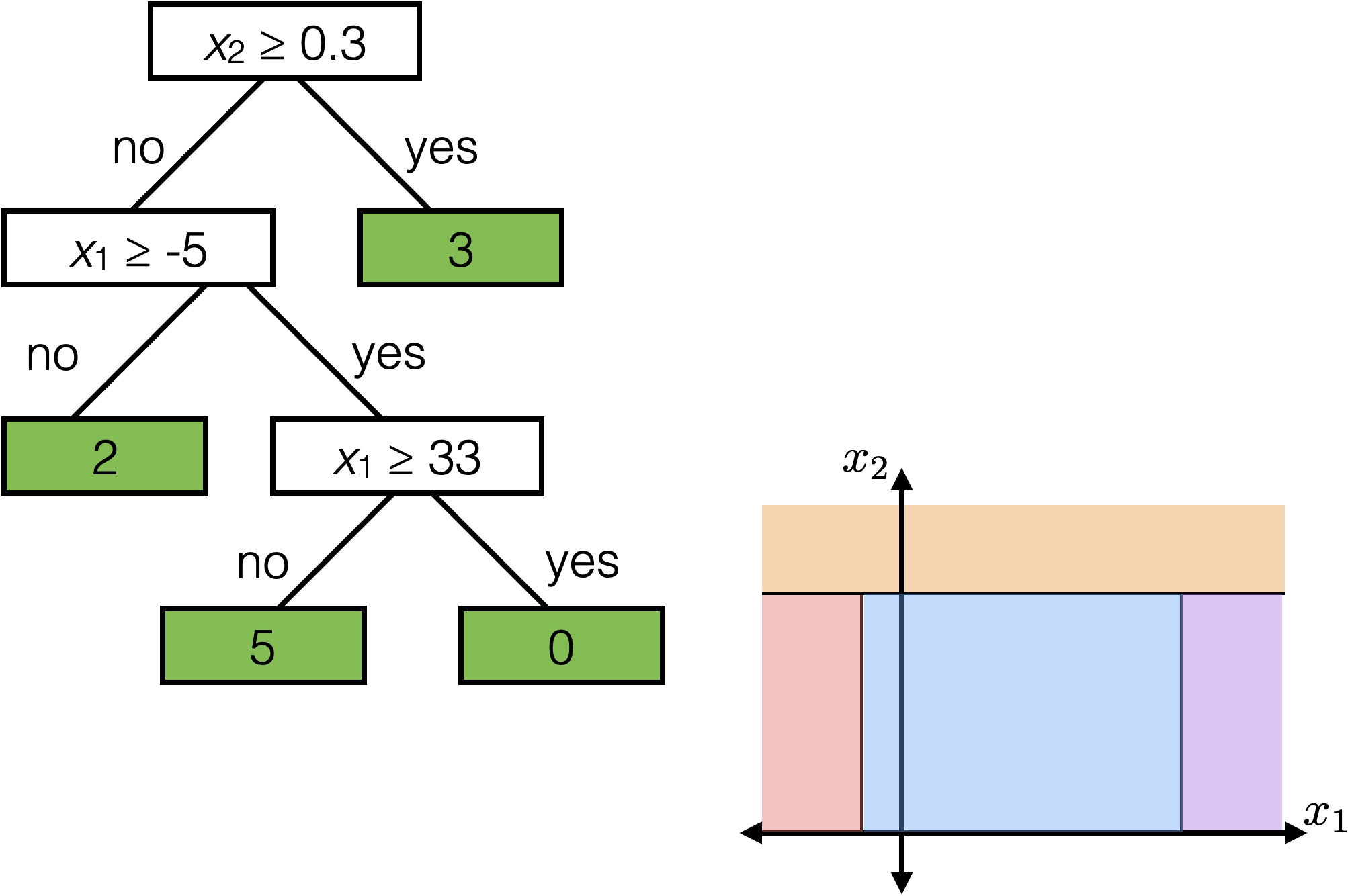

features:

- \(x_1\): temperature (deg C)

- \(x_2\): precipitation (cm/hr)

label: km run

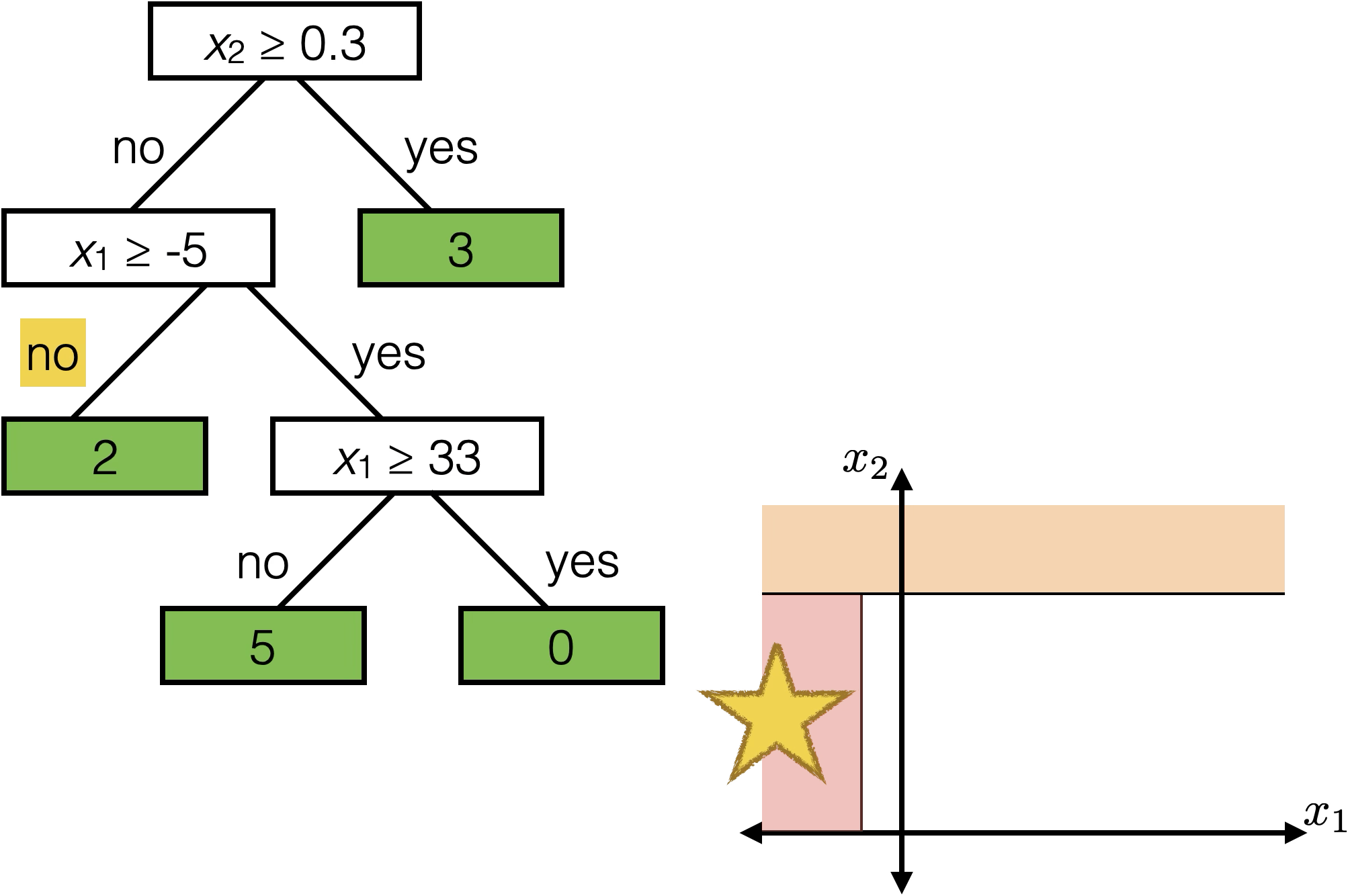

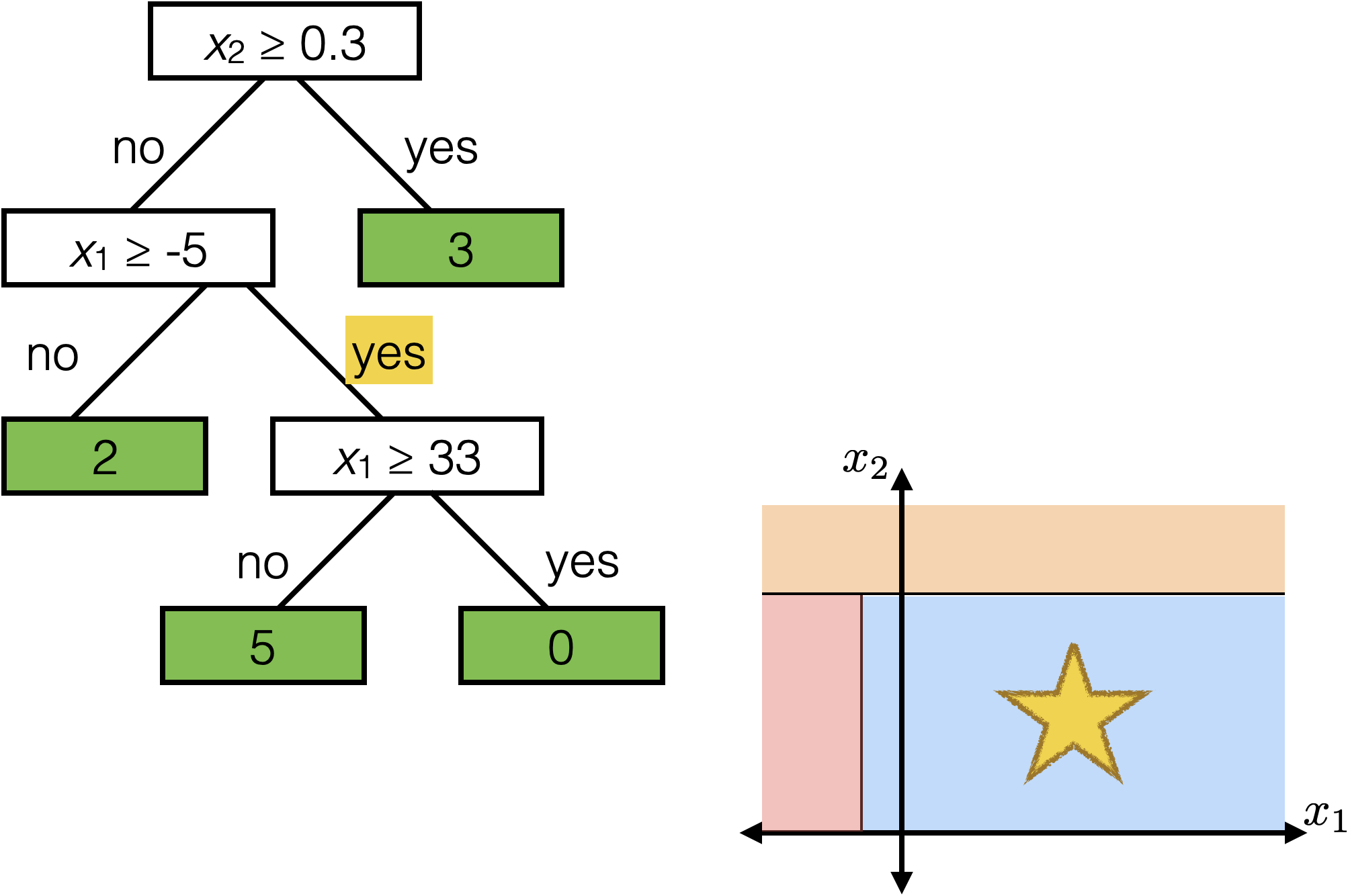

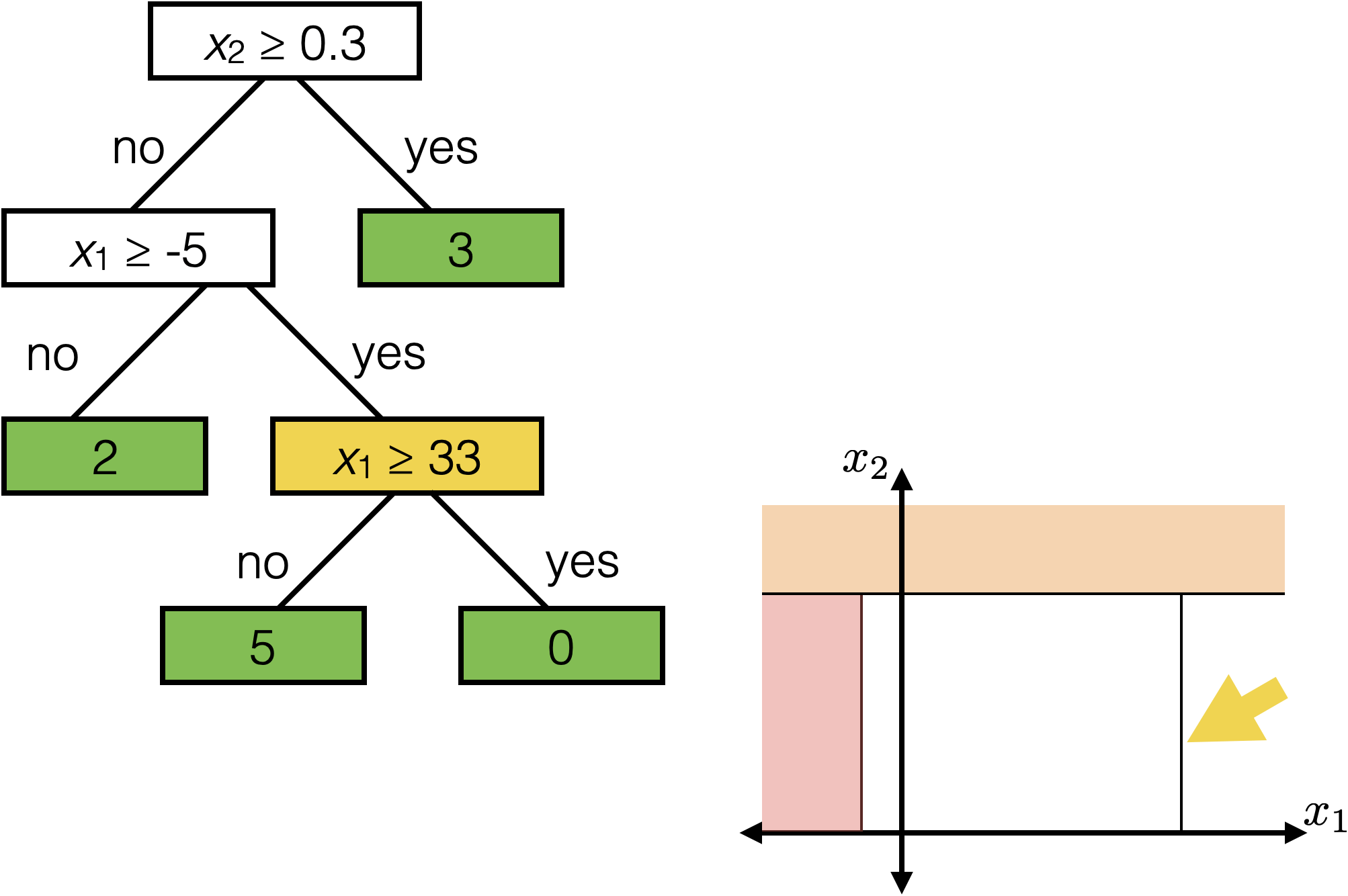

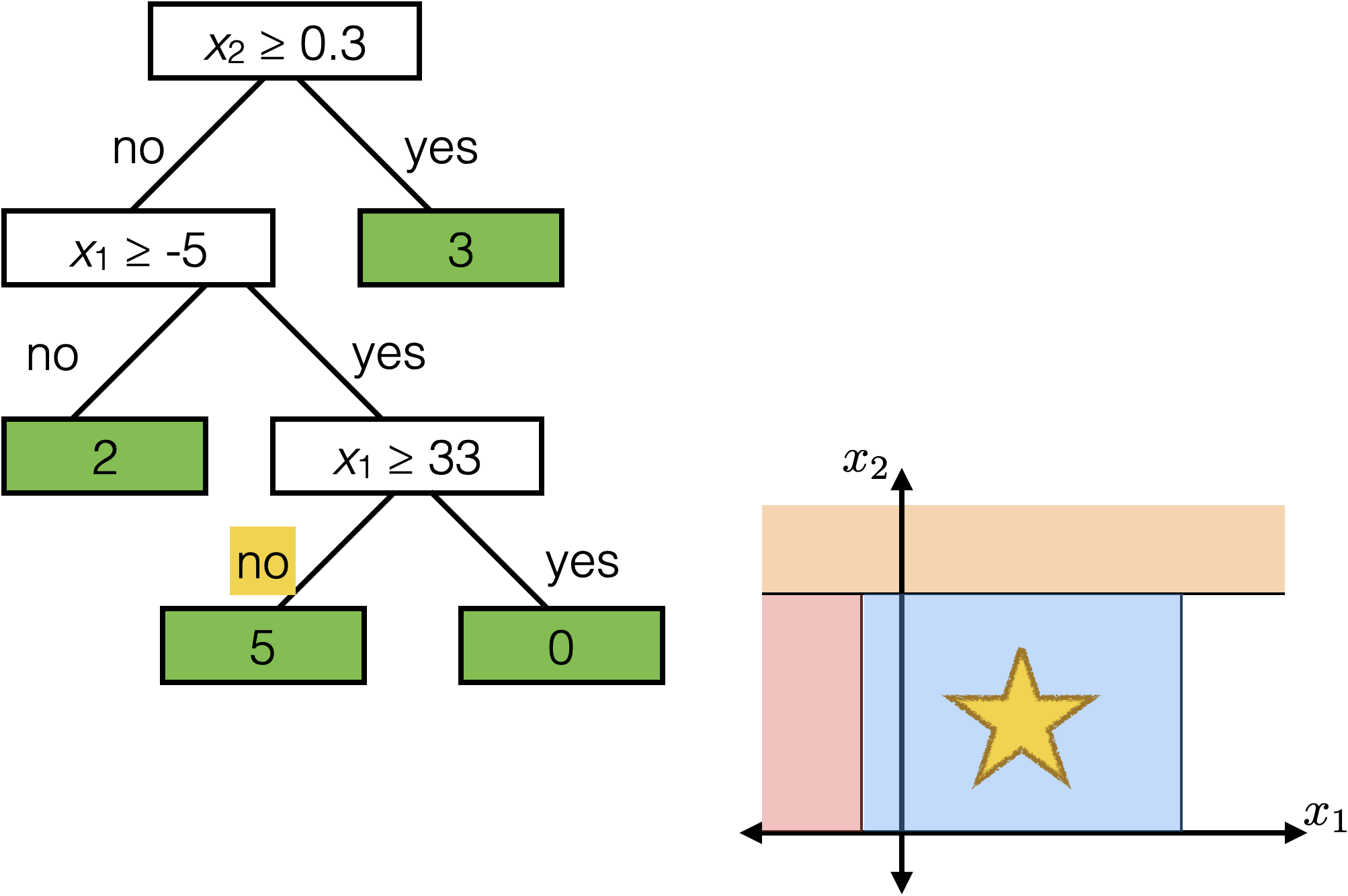

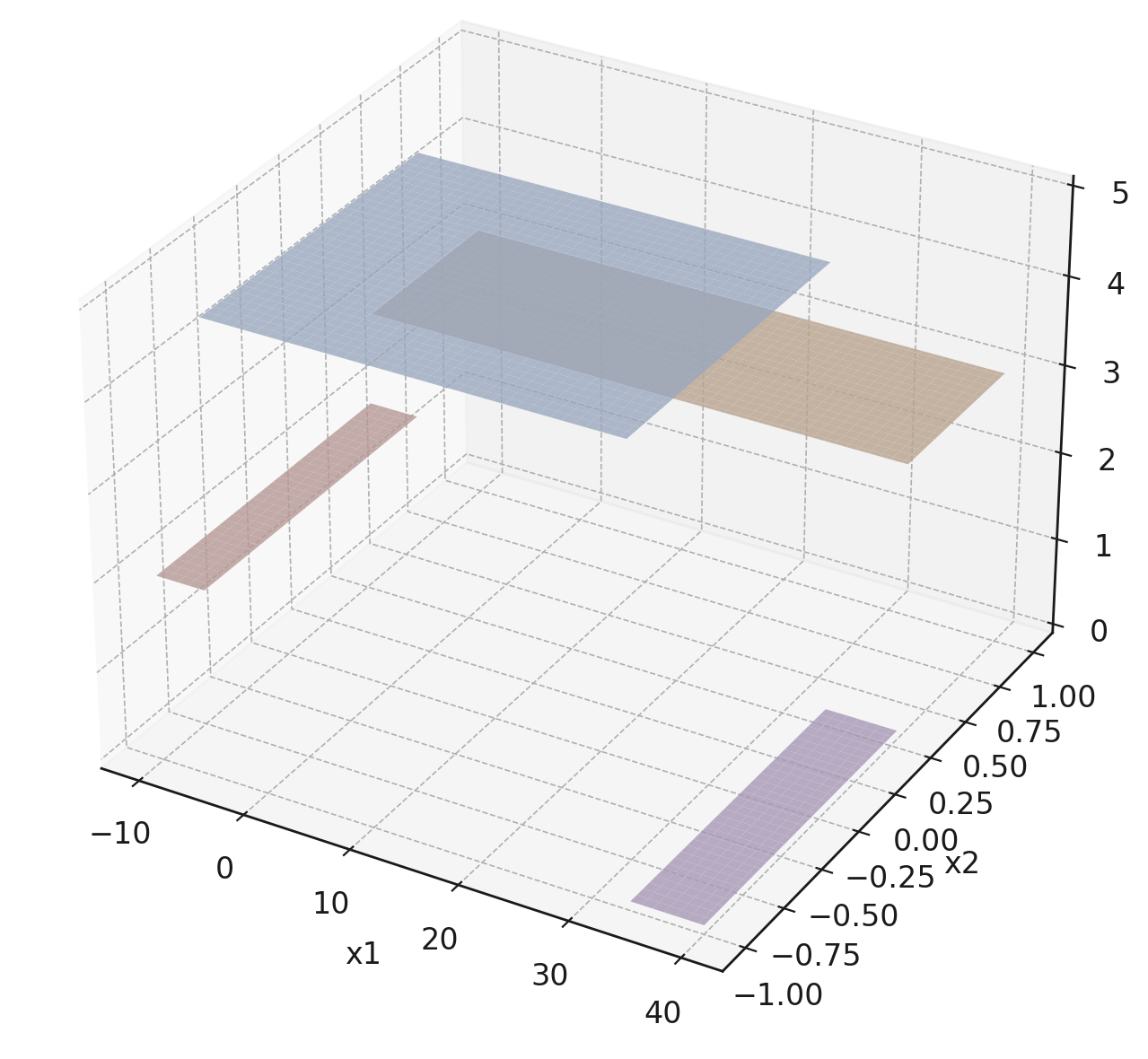

Reading a regression decision tree

The same prediction applies to an axis-aligned ‘box’ or ‘volume’ in the feature space

(Figure: a regression tree and the corresponding partition of the temperature (\(x_1\)) / precipitation (\(x_2\)) plane. Splits shown: \(x_2 \geq 0.3\), \(x_1 \geq -5\), \(x_1 \geq 33\). Leaf values (km run): 2, 3, 0, 5.)

BuildTree for regression

\(\operatorname{BuildTree}(I, k, \mathcal{D})\)

- \(I\): set of indices.

- \(k\): hyper-parameter, largest leaf size (i.e. the maximum number of training data points that can "flow" into a leaf).

1. if \(|I| > k\)

2. \(\quad\) for each split dim \(j\) and split value \(s\)

3. \(\quad\quad\) Set \(I_{j, s}^{+}=\left\{i \in I \mid x_j^{(i)} \geq s\right\}\)

4. \(\quad\quad\) Set \(I_{j, s}^{-}=\left\{i \in I \mid x_j^{(i)}<s\right\}\)

5. \(\quad\quad\) Set \(\hat{y}_{j, s}^{+}=\) average \(_{i \in I_{j, s}^{+}} y^{(i)}\)

6. \(\quad\quad\) Set \(\hat{y}_{j, s}^{-}=\) average \(_{i \in I_{j, s}^{-}} y^{(i)}\)

7. \(\quad\quad\) Set \(E_{j, s}=\sum_{i \in I_{j, s}^{+}}\left(y^{(i)}-\hat{y}_{j, s}^{+}\right)^2+\sum_{i \in I_{j, s}^{-}}\left(y^{(i)}-\hat{y}_{j, s}^{-}\right)^2\)

8. \(\quad\) Set \(\left(j^*, s^*\right)=\arg \min _{j, s} E_{j, s}\)

9. else

10. \(\quad\) Set \(\hat{y}=\) average \(_{i \in I} y^{(i)}\)

11. \(\quad\) return \(\operatorname{Leaf}\)(leaf_value=\(\hat{y})\)

12. return \(\operatorname{Node}\left(j^*, s^*, \operatorname{BuildTree}\left(I_{j^*, s^*}^{-}, k, \mathcal{D}\right), \operatorname{BuildTree}\left(I_{j^*, s^*}^{+}, k, \mathcal{D}\right)\right)\)

- Choose \(k=2\)

- \(\operatorname{BuildTree}(\{1,2,3\}, 2, \mathcal{D})\)

- Line 1 is true, since \(|I| = 3 > 2\)

- For this fixed \((j, s)\)

- \(I_{j, s}^{+} = \{2,3\}\)

- \(I_{j, s}^{-} = \{1\}\)

- \(\hat{y}_{j, s}^{+} = 5\)

- \(\hat{y}_{j, s}^{-} = 0\)

- \(E_{j, s} =0\)

- Line 2: it suffices to consider a finite number of \((j, s)\) combinations (those that split in between data points).

- Line 8: picks the "best" among these finite choices of \((j, s)\) combos (random tie-breaking).

Suppose line 8 selects this split, say \((j^*,s^*) = (1, 1.7)\).

Line 12 recursion: the root node tests \(x_1 \geq 1.7\).

- \(\operatorname{BuildTree}\left(I_{j^*, s^*}^{-}, k, \mathcal{D}\right)\) with \(I_{j^*, s^*}^{-} = \{1\}\): line 1 is false (\(1 \leq k\)), so lines 10-11 return a leaf with value 0.

- \(\operatorname{BuildTree}\left(I_{j^*, s^*}^{+}, k, \mathcal{D}\right)\) with \(I_{j^*, s^*}^{+} = \{2,3\}\): line 1 is false (\(2 \leq k\)), so lines 10-11 return a leaf with value 5.

Resulting tree: root \(x_1 \geq 1.7\), with leaf 0 for the "no" branch and leaf 5 for the "yes" branch.
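The walk-through above can be reproduced with a short Python sketch of BuildTree for regression. Several details are assumptions made for illustration: indices are 0-based, candidate split values are the midpoints between consecutive distinct feature values, ties in line 8 are broken by taking the first minimum rather than at random, and a leaf is returned if no split is possible (all points identical). The three data points are hypothetical values chosen so that the best split lands at 1.7, as in the example.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Leaf:
    leaf_value: float

@dataclass
class Node:
    split_dim: int
    split_value: float
    left_child: "Tree"    # points with x[split_dim] <  split_value
    right_child: "Tree"   # points with x[split_dim] >= split_value

Tree = Union[Node, Leaf]

def sse(ys):
    """Sum of squared errors around the mean (one of the two sums in line 7)."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)

def candidate_splits(I, X):
    """Finite set of (j, s): midpoints between consecutive distinct values of feature j."""
    for j in range(len(X[I[0]])):
        values = sorted({X[i][j] for i in I})
        for lo, hi in zip(values, values[1:]):
            yield j, (lo + hi) / 2

def build_tree(I, k, X, Y):
    splits = list(candidate_splits(I, X)) if len(I) > k else []   # line 1
    if splits:
        best = None
        for j, s in splits:                                       # line 2
            I_plus = [i for i in I if X[i][j] >= s]               # line 3
            I_minus = [i for i in I if X[i][j] < s]               # line 4
            E = sse([Y[i] for i in I_plus]) + sse([Y[i] for i in I_minus])  # lines 5-7
            if best is None or E < best[0]:                       # line 8 (first minimum)
                best = (E, j, s, I_minus, I_plus)
        _, j, s, I_minus, I_plus = best
        return Node(j, s, build_tree(I_minus, k, X, Y), build_tree(I_plus, k, X, Y))  # line 12
    return Leaf(sum(Y[i] for i in I) / len(I))                    # lines 10-11

# Hypothetical data: one feature, three points, labels 0, 5, 5.
X = [(1.0,), (2.4,), (3.0,)]
Y = [0.0, 5.0, 5.0]
tree = build_tree([0, 1, 2], k=2, X=X, Y=Y)
print(tree)
```

With these values the root splits feature 0 at 1.7, and the two recursive calls return leaves 0 and 5 because neither child has more than \(k=2\) points.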

BuildTree for classification

\(\operatorname{BuildTree}(I, k, \mathcal{D})\)

1. if \(|I| > k\)

2. \(\quad\) for each split dim \(j\) and split value \(s\)

3. \(\quad\quad\) Set \(I_{j, s}^{+}=\left\{i \in I \mid x_j^{(i)} \geq s\right\}\)

4. \(\quad\quad\) Set \(I_{j, s}^{-}=\left\{i \in I \mid x_j^{(i)}<s\right\}\)

5. \(\quad\quad\) Set \(\hat{y}_{j, s}^{+}=\) majority \(_{i \in I_{j, s}^{+}} y^{(i)}\)

6. \(\quad\quad\) Set \(\hat{y}_{j, s}^{-}=\) majority \(_{i \in I_{j, s}^{-}} y^{(i)}\)

7. \(\quad\quad\) Set \(E_{j, s} = \frac{\left|I_{j, s}^{-}\right|}{|I|} \cdot H\left(I_{j, s}^{-}\right)+\frac{\left|I_{j, s}^{+}\right|}{|I|} \cdot H\left(I_{j, s}^{+}\right)\)

8. \(\quad\) Set \(\left(j^*, s^*\right)=\arg \min _{j, s} E_{j, s}\)

9. else

10. \(\quad\) Set \(\hat{y}=\) majority \(_{i \in I} y^{(i)}\)

11. \(\quad\) return \(\operatorname{Leaf}\)(leaf_value=\(\hat{y})\)

12. return \(\operatorname{Node}\left(j^*, s^*, \operatorname{BuildTree}\left(I_{j^*, s^*}^{-}, k, \mathcal{D}\right), \operatorname{BuildTree}\left(I_{j^*, s^*}^{+}, k, \mathcal{D}\right)\right)\)

Differences from the regression version:

- Lines 5, 6, 10: majority vote as the (intermediate) prediction

- Line 7: weighted average entropy (WAE) as performance metric

For classification

entropy \(H:=-\sum_{\text{class } c} \hat{P}_c \log _2 \hat{P}_c\), where \(\hat{P}_c\) is the empirical probability of class \(c\) (a class with \(\hat{P}_c = 0\) contributes 0)

e.g.: \(c\) iterates over 3 classes

- \(\hat{P}_c = \left(\frac{4}{6}, \frac{1}{6}, \frac{1}{6}\right)\): \(H= -[\frac{4}{6} \log _2\left(\frac{4}{6}\right)+\frac{1}{6} \log _2\left(\frac{1}{6}\right)+\frac{1}{6} \log _2\left(\frac{1}{6}\right)] \approx 1.252\)

- \(\hat{P}_c = \left(\frac{3}{6}, \frac{3}{6}, 0\right)\): \(H= -[\frac{3}{6} \log _2\left(\frac{3}{6}\right)+\frac{3}{6} \log _2\left(\frac{3}{6}\right)+ 0] = 1\)

- \(\hat{P}_c = \left(\frac{6}{6}, 0, 0\right)\): \(H= -[\frac{6}{6} \log _2\left(\frac{6}{6}\right)+ 0+ 0] = 0\)
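The three entropy values can be checked numerically. The sketch below is a direct transcription of the definition of \(H\), skipping classes with zero empirical probability; the function name is hypothetical.

```python
import math

def entropy(probs):
    """Entropy in bits of a list of empirical class probabilities."""
    # Classes with probability 0 are skipped, i.e. they contribute 0.
    return sum(-p * math.log2(p) for p in probs if p > 0)

for probs in ([4/6, 1/6, 1/6], [3/6, 3/6, 0], [6/6, 0, 0]):
    print(probs, round(entropy(probs), 3))
```

The entropy is largest when the classes are evenly mixed and drops to 0 when a single class is present, which is why a low value indicates a "pure" set of labels.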

Example: line 7 for two candidate splits, with \(|I| = 6\)

First split:

\(E_{j,s}= \frac{4}{6} \cdot H\left(I_{j, s}^{-}\right)+\frac{2}{6} \cdot H\left(I_{j, s}^{+}\right)\)

- \(\frac{4}{6}\): fraction of points to the left of the split; \(\frac{2}{6}\): fraction of points to the right of the split

- \(H\left(I_{j, s}^{-}\right) = -[\frac{3}{4} \log _2\left(\frac{3}{4}\right)+\frac{1}{4} \log _2\left(\frac{1}{4}\right)+0] \approx 0.811\)

- \(H\left(I_{j, s}^{+}\right) = -[\frac{1}{2} \log _2\left(\frac{1}{2}\right)+\frac{1}{2} \log _2\left(\frac{1}{2}\right)+0] = 1\)

- for this split choice, line 7 gives \(E_{j, s}\approx \frac{4}{6}\cdot 0.811+\frac{2}{6}\cdot 1 \approx 0.874\)

Second split:

\(E_{j,s}= \frac{5}{6} \cdot H\left(I_{j, s}^{-}\right)+\frac{1}{6} \cdot H\left(I_{j, s}^{+}\right)\)

- \(H\left(I_{j, s}^{-}\right) = -[\frac{4}{5} \log _2\left(\frac{4}{5}\right)+\frac{1}{5} \log _2\left(\frac{1}{5}\right)+0] \approx 0.722\)

- \(H\left(I_{j, s}^{+}\right) = -[1 \log _2\left(1\right)+0+0] = 0\)

- for this split choice, line 7 gives \(E_{j, s}\approx \frac{5}{6}\cdot 0.722+\frac{1}{6}\cdot 0 \approx 0.602\)

Line 8: set \((j^*, s^*)\) to the better \((j, s)\), here the second split (\(E_{j, s}\approx 0.602\) versus \(E_{j, s}\approx 0.874\)).
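The comparison of the two splits can be verified with a small sketch of the weighted average entropy. The class names `"a"`, `"b"`, `"c"` and the exact assignment of labels to each side are hypothetical; only the counts (3 and 1 versus 1 and 1 for the first split, 4 and 1 versus a single point for the second) are taken from the example above.

```python
import math
from collections import Counter

def label_entropy(labels):
    """Entropy in bits of the empirical class distribution of a list of labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def weighted_average_entropy(left_labels, right_labels):
    """E_{j,s}: entropies of the two sides, weighted by the fraction of points on each side."""
    n = len(left_labels) + len(right_labels)
    return (len(left_labels) / n * label_entropy(left_labels)
            + len(right_labels) / n * label_entropy(right_labels))

# Hypothetical labels consistent with the counts in the example.
first = weighted_average_entropy(["a", "a", "a", "b"], ["a", "c"])
second = weighted_average_entropy(["a", "a", "a", "a", "b"], ["c"])

print(round(first, 3), round(second, 3))
best = min([("first", first), ("second", second)], key=lambda pair: pair[1])
print(best[0])
```

Taking the minimum over all candidate splits is what line 8 of BuildTree does; here only two candidates are compared.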

Ensemble

- One of multiple ways to make and use an ensemble

- Bagging = Bootstrap aggregating

Bagging

Training data \(\mathcal{D}_n\)

\(\left(x^{(1)}, y^{(1)}\right)\)

\(\left(x^{(2)}, y^{(2)}\right)\)

\(\left(x^{(3)}, y^{(3)}\right)\)

\(\left(x^{(4)}, y^{(4)}\right)\)

\(\left(x^{(5)}, y^{(5)}\right)\)

\(\tilde{\mathcal{D}}_n^{(1)}, \tilde{\mathcal{D}}_n^{(2)}, \ldots, \tilde{\mathcal{D}}_n^{(B)}\): \(B\) bootstrap data sets, each consisting of \(n\) pairs \(\left(\tilde{x}^{(i)}, \tilde{y}^{(i)}\right)\) (here \(n=5\))
- Training data \(\mathcal{D}_n\)

- For \(b=1, \ldots, B\)

- Draw a new "data set" \(\tilde{\mathcal{D}}_n^{(b)}\) of size \(n\) by sampling with replacement from \(\mathcal{D}_n\)

- Train a predictor \(\hat{f}^{(b)}\) on \(\tilde{\mathcal{D}}_n^{(b)}\)

- Return

- For regression: \(\hat{f}_{\text {bag }}(x)=\frac{1}{B} \sum_{b=1}^B \hat{f}^{(b)}(x)\)

- For classification: predict the class that receives the most votes among the \(B\) classifiers.
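The bagging procedure above can be sketched as follows. The function names are hypothetical, and the base learner (`train_1nn`, a 1-nearest-neighbor predictor on one-dimensional inputs) is only a stand-in chosen to keep the example short; in the lecture's setting the base learner would typically be a decision tree. The seed is fixed so that runs are repeatable.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) points from data, with replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]

def bagging_fit(data, train, B, seed=0):
    """Train B predictors, each on its own bootstrap data set."""
    rng = random.Random(seed)
    return [train(bootstrap_sample(data, rng)) for _ in range(B)]

def bagging_predict_regression(predictors, x):
    """Average the B predictions."""
    return sum(f(x) for f in predictors) / len(predictors)

def bagging_predict_classification(predictors, x):
    """Return the class with the most votes among the B predictors."""
    votes = Counter(f(x) for f in predictors)
    return votes.most_common(1)[0][0]

def train_1nn(sample):
    """Hypothetical base learner: predict the label of the nearest sampled point."""
    def predict(x):
        return min(sample, key=lambda pair: abs(pair[0] - x))[1]
    return predict

# Hypothetical 1-D regression data as (x, y) pairs.
data = [(0.0, 2.0), (1.0, 2.5), (2.0, 3.0), (3.0, 3.5), (4.0, 4.0)]
predictors = bagging_fit(data, train_1nn, B=25)
print(bagging_predict_regression(predictors, 1.2))
```

Because each bootstrap data set repeats some points and omits others, the \(B\) predictors differ from one another, and averaging (or voting) smooths out their individual quirks.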

\(k\)-means clustering

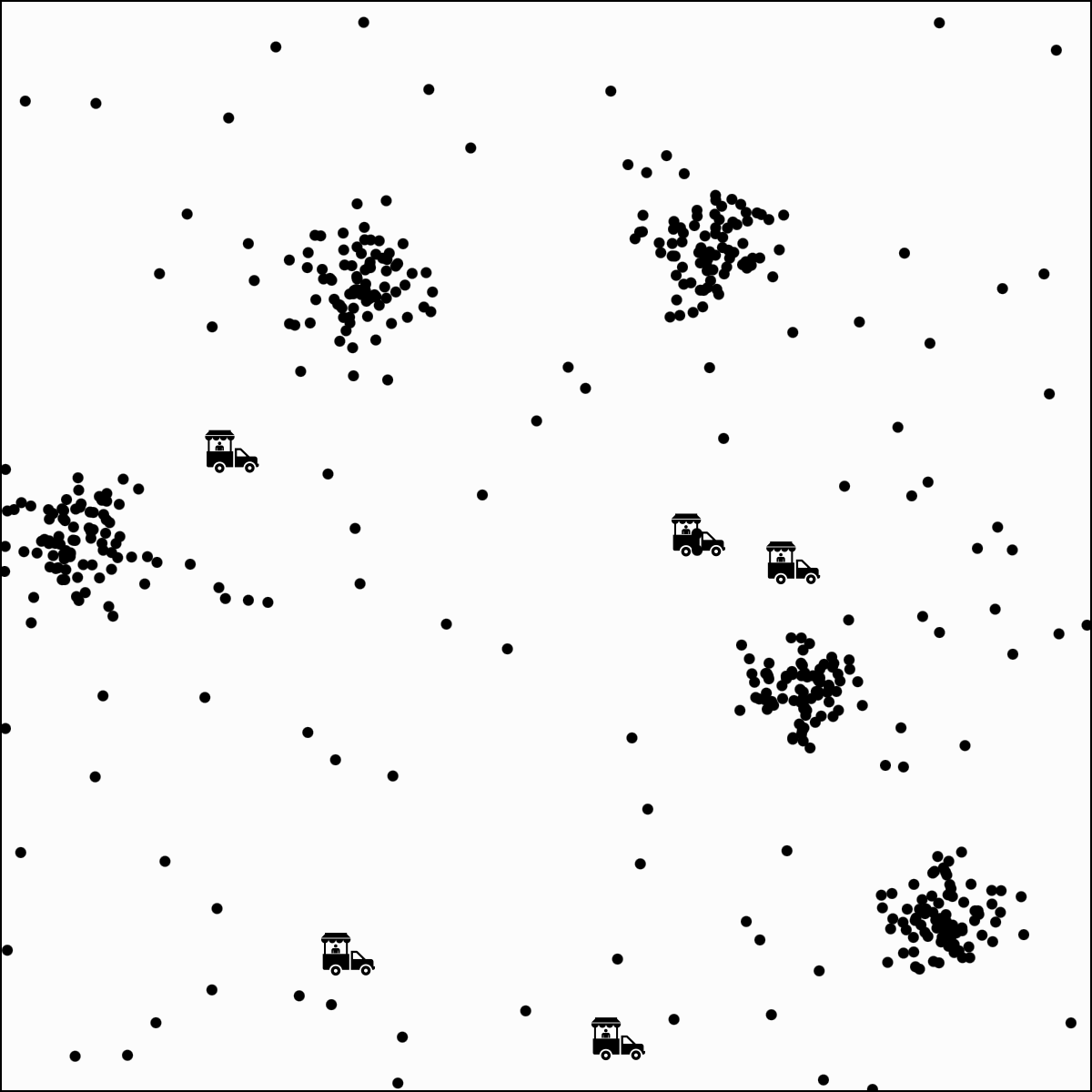

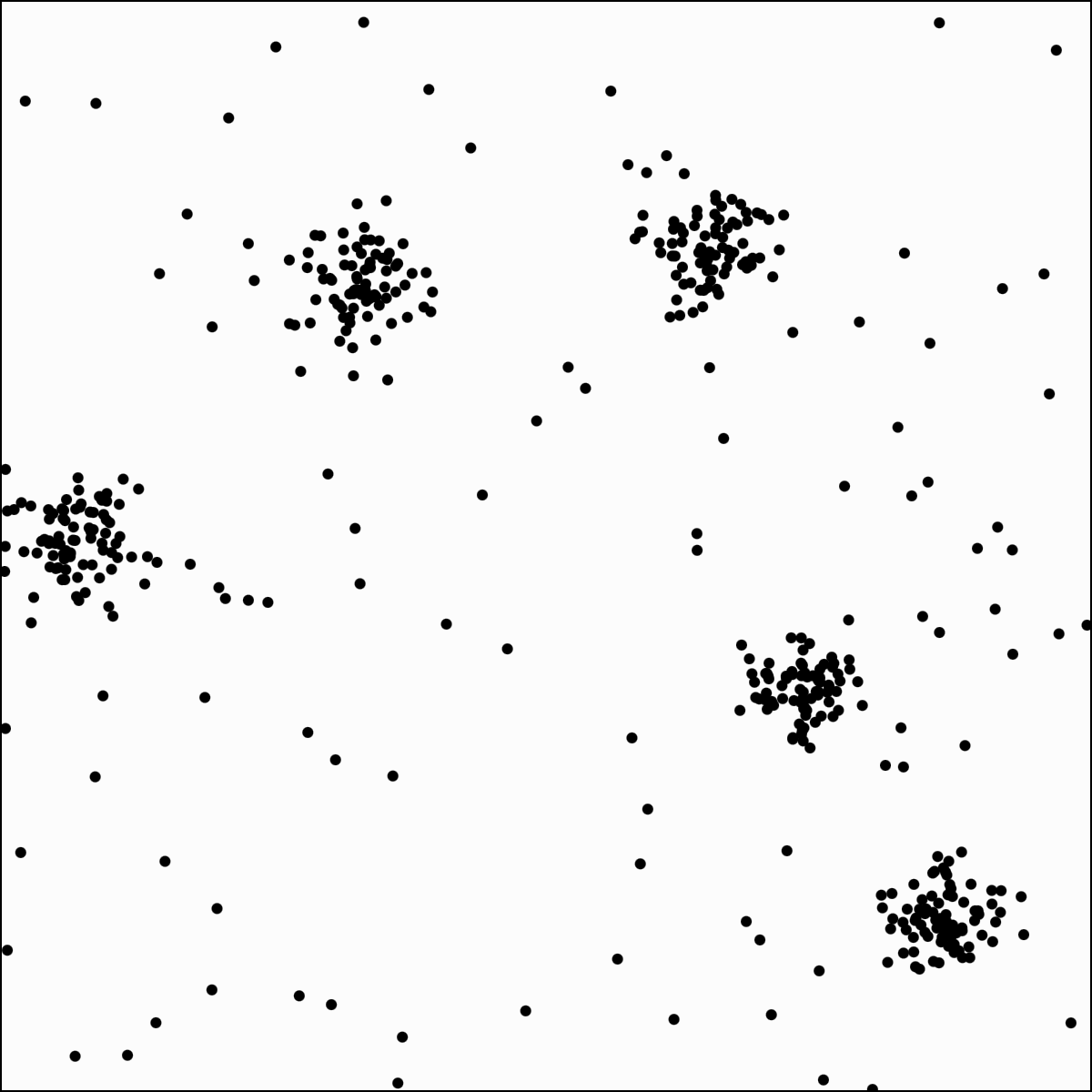

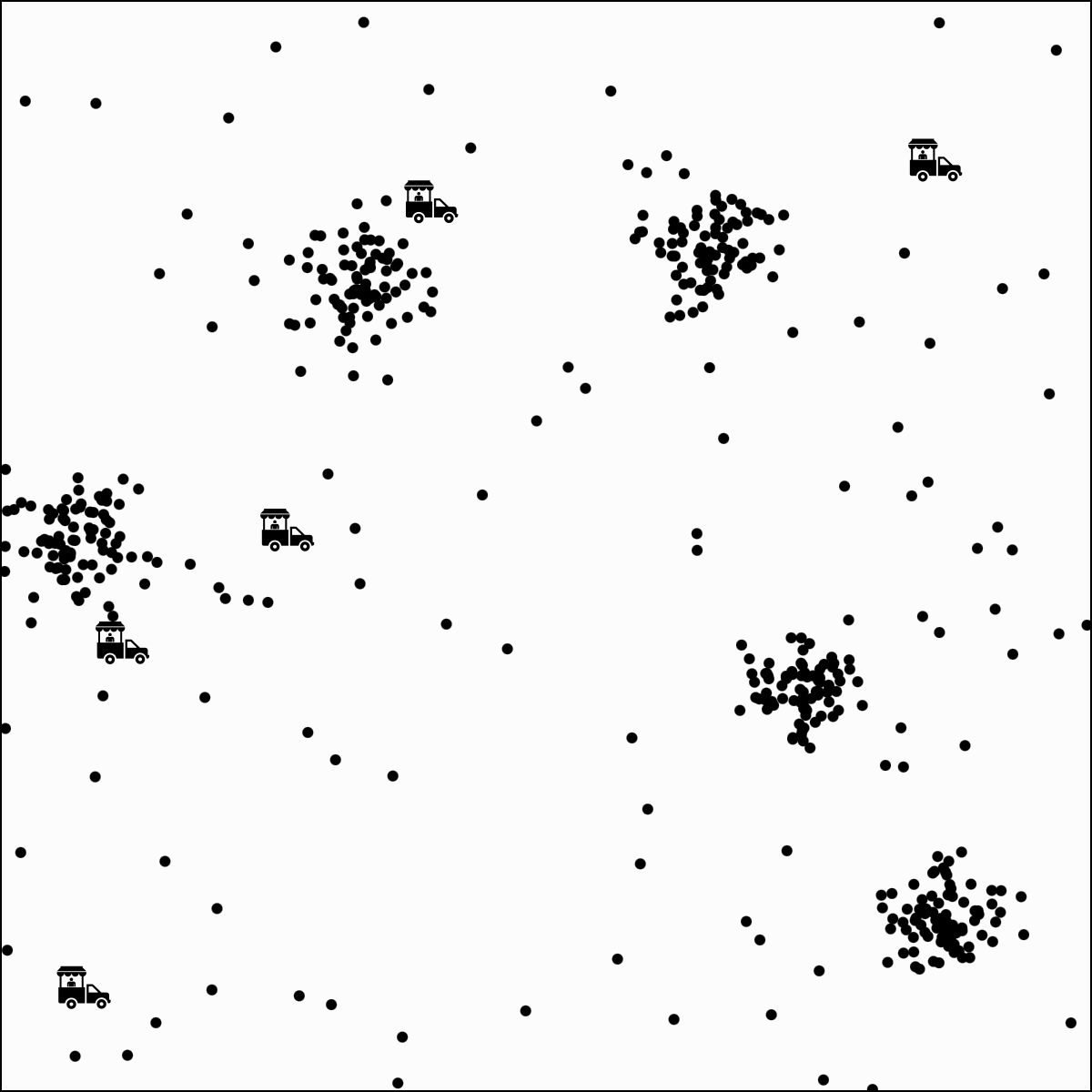

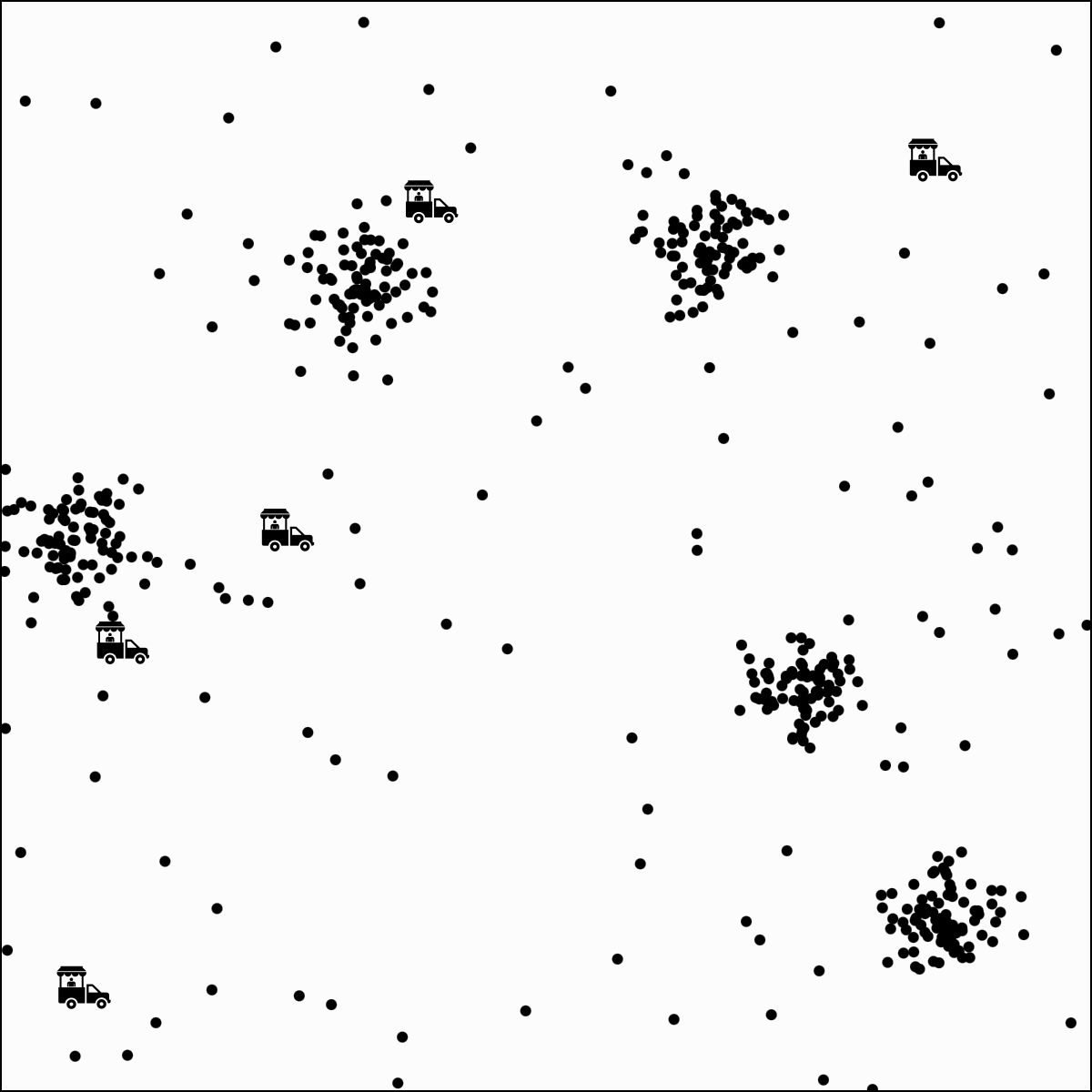

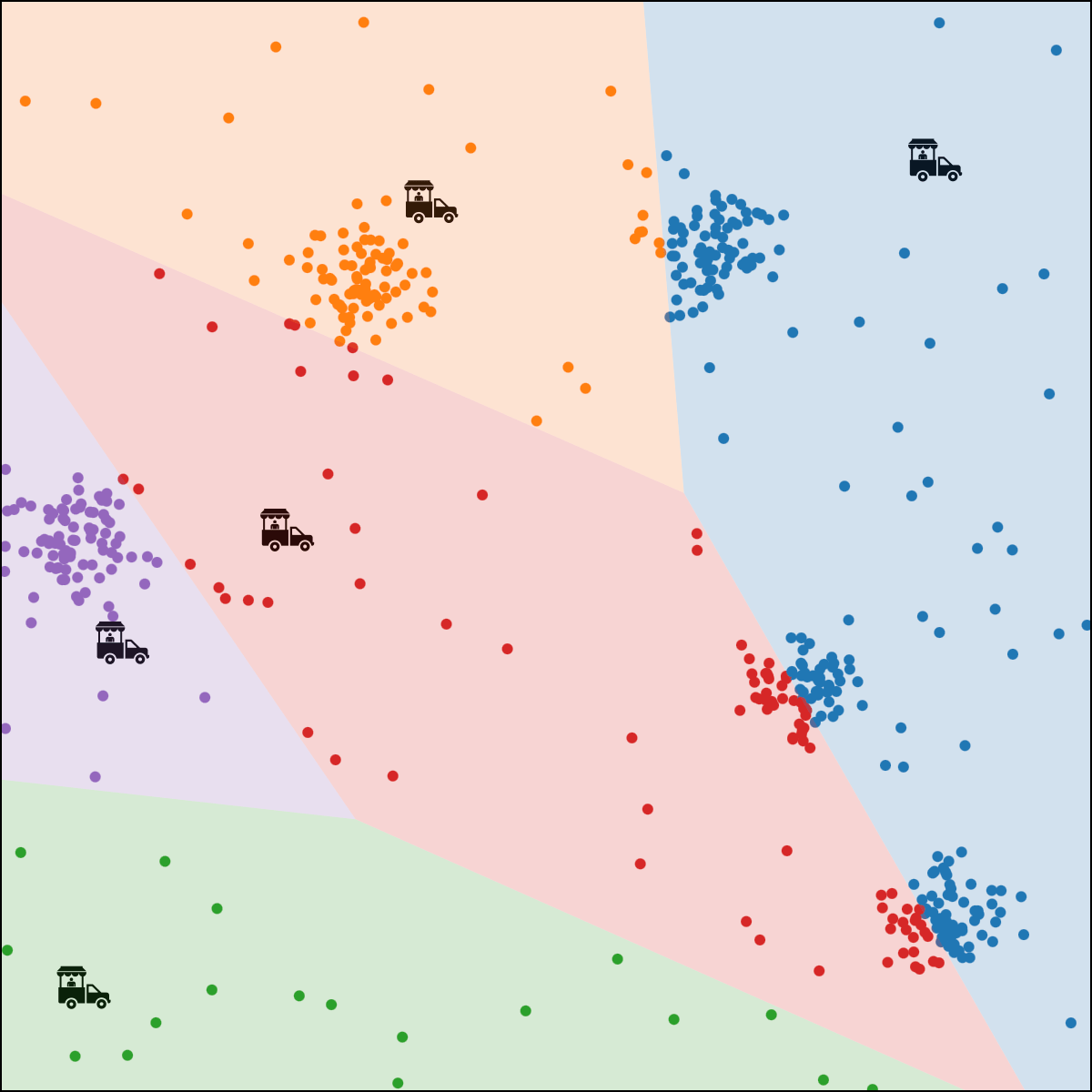

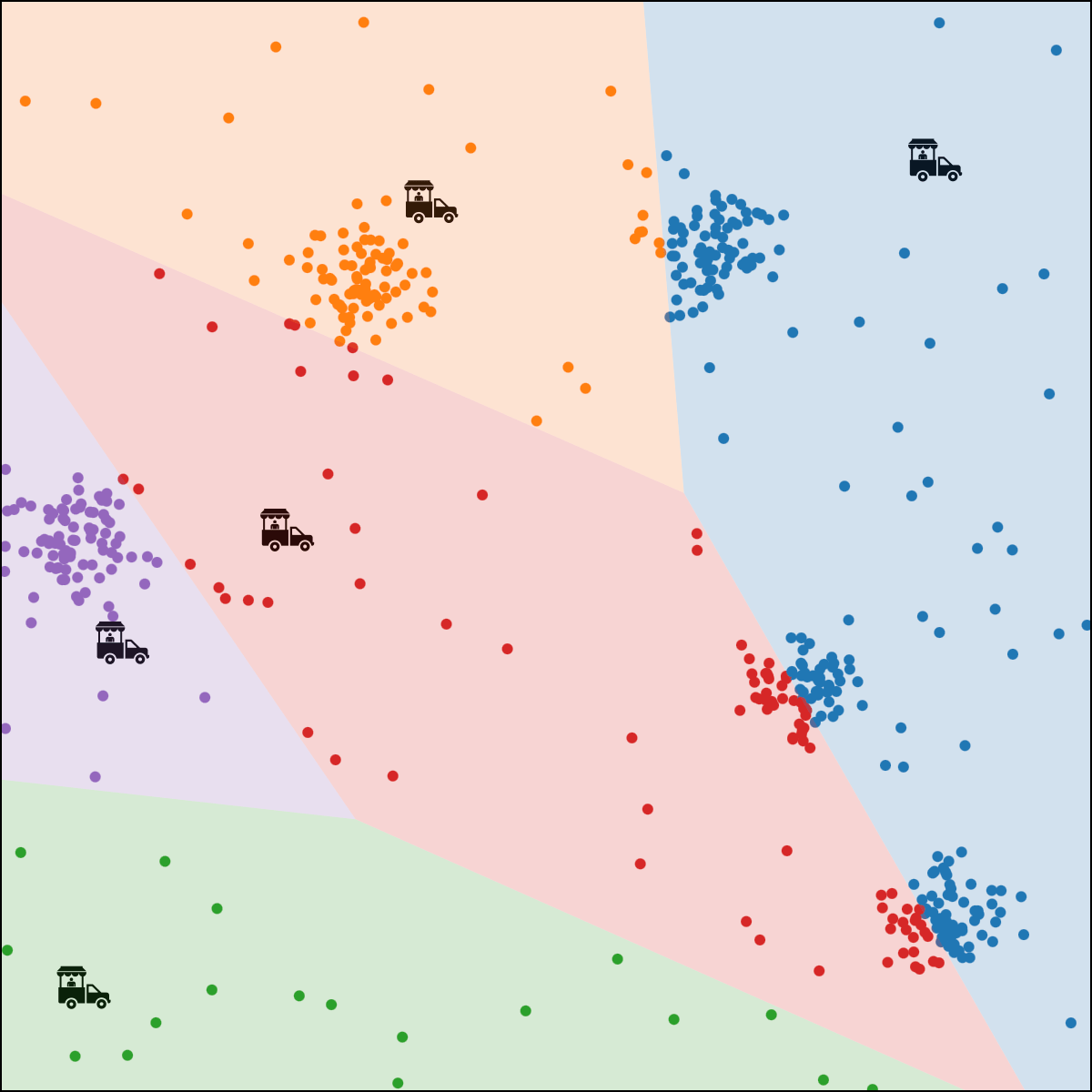

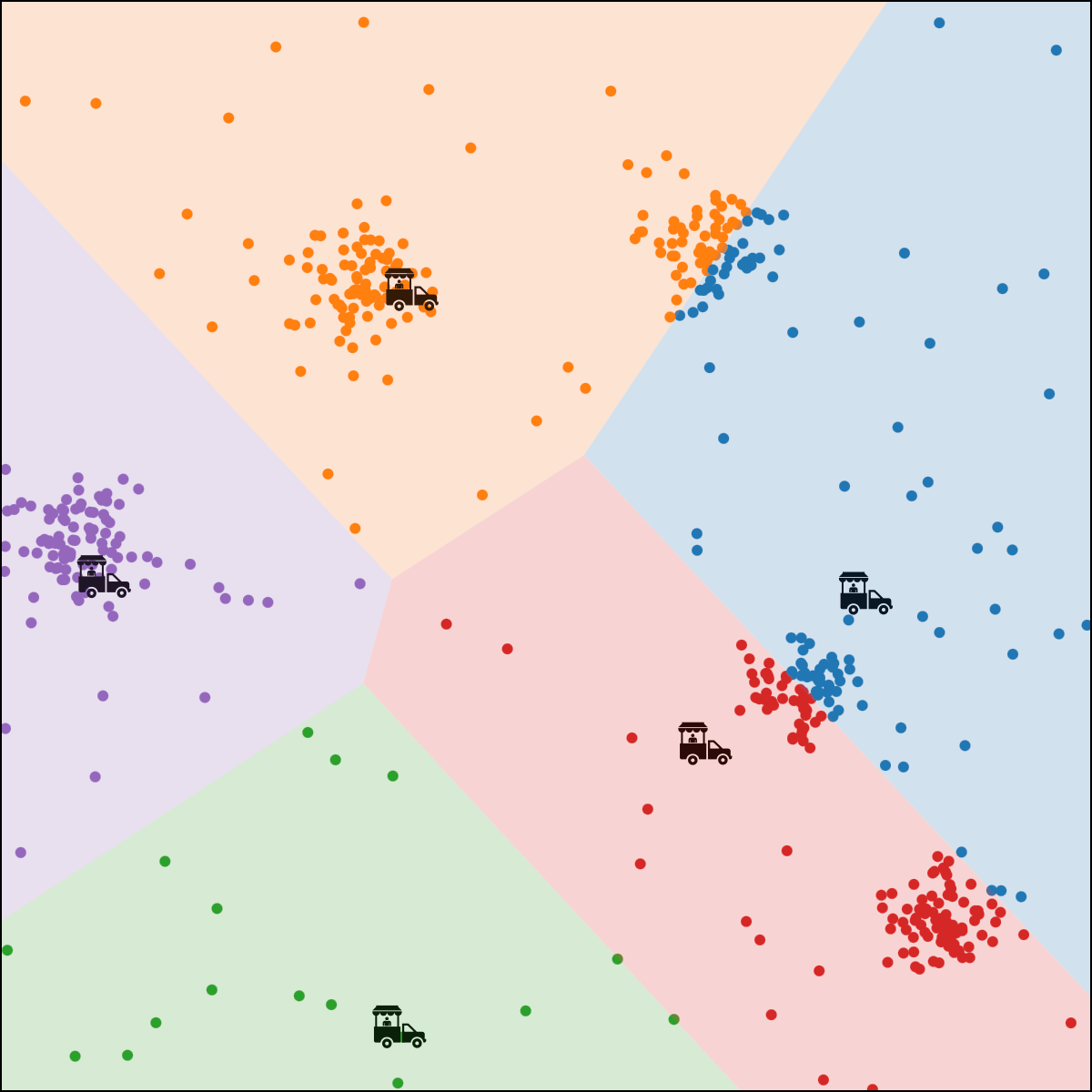

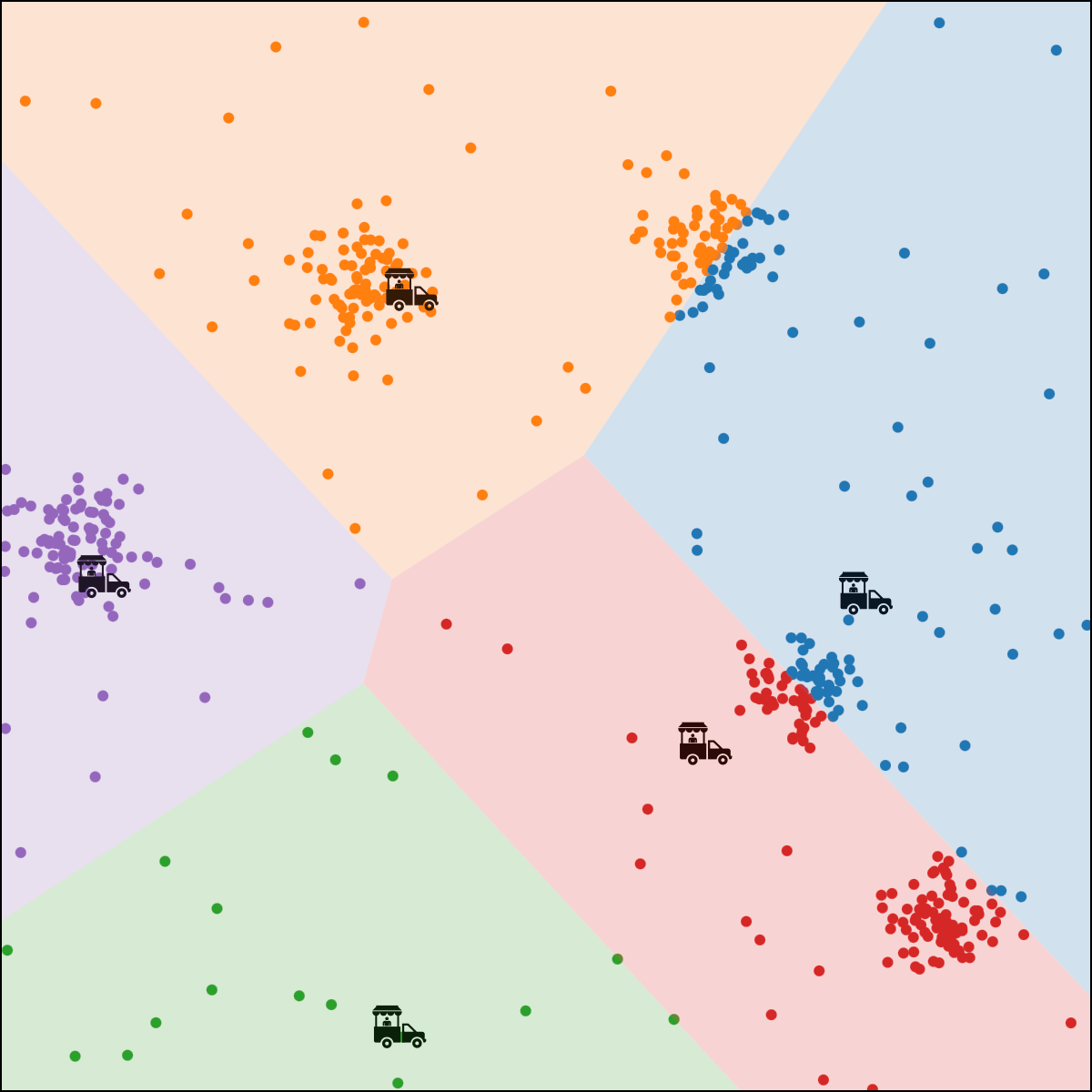

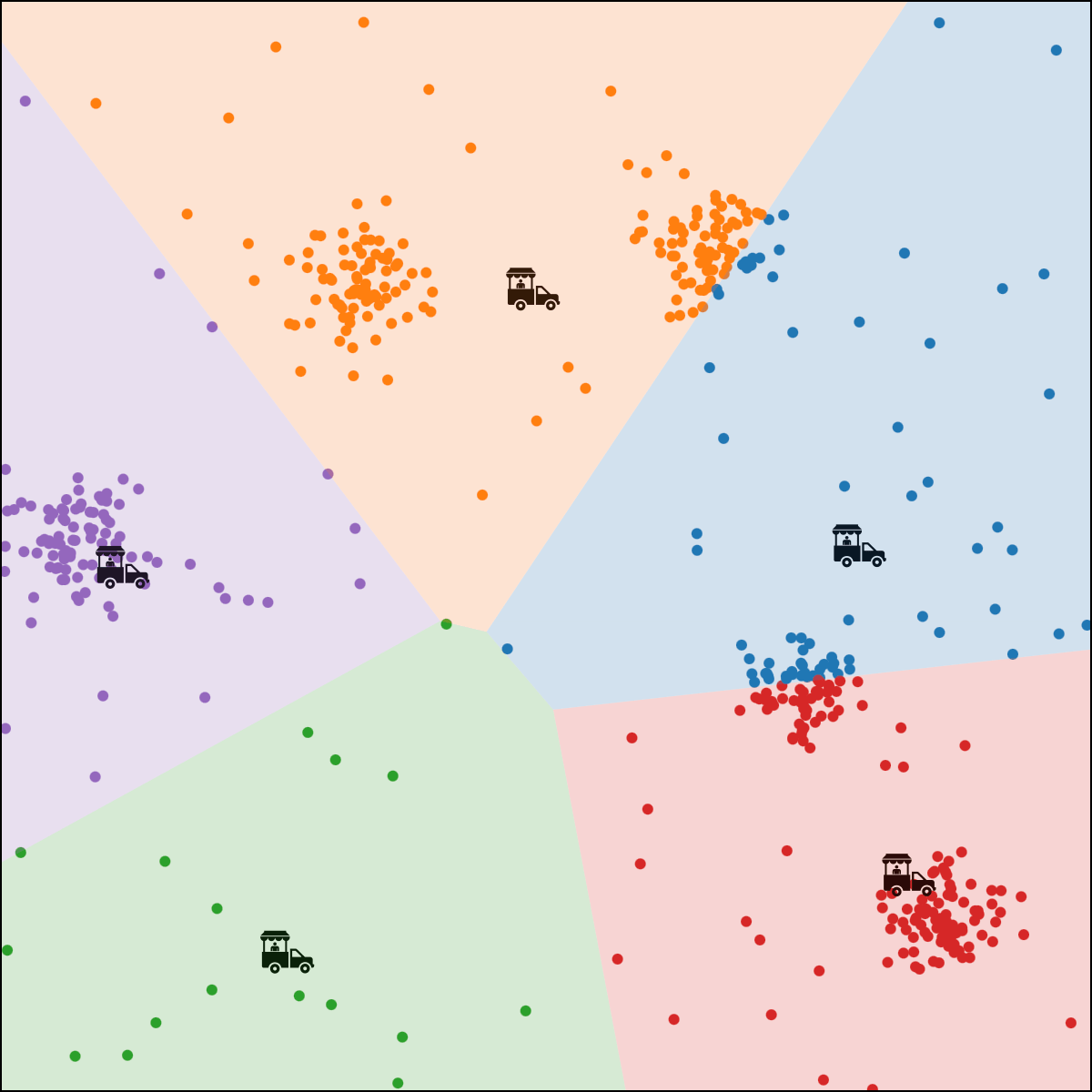

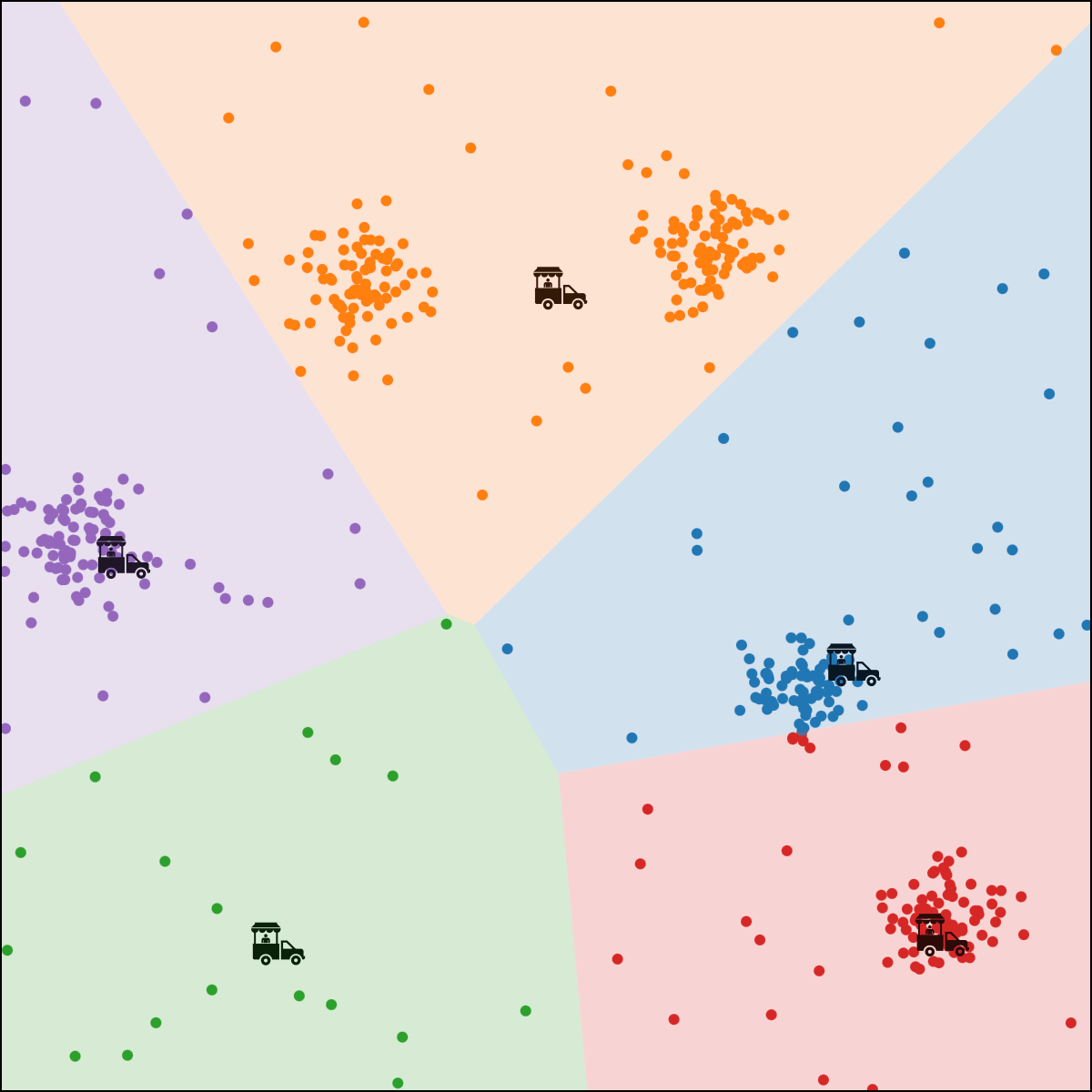

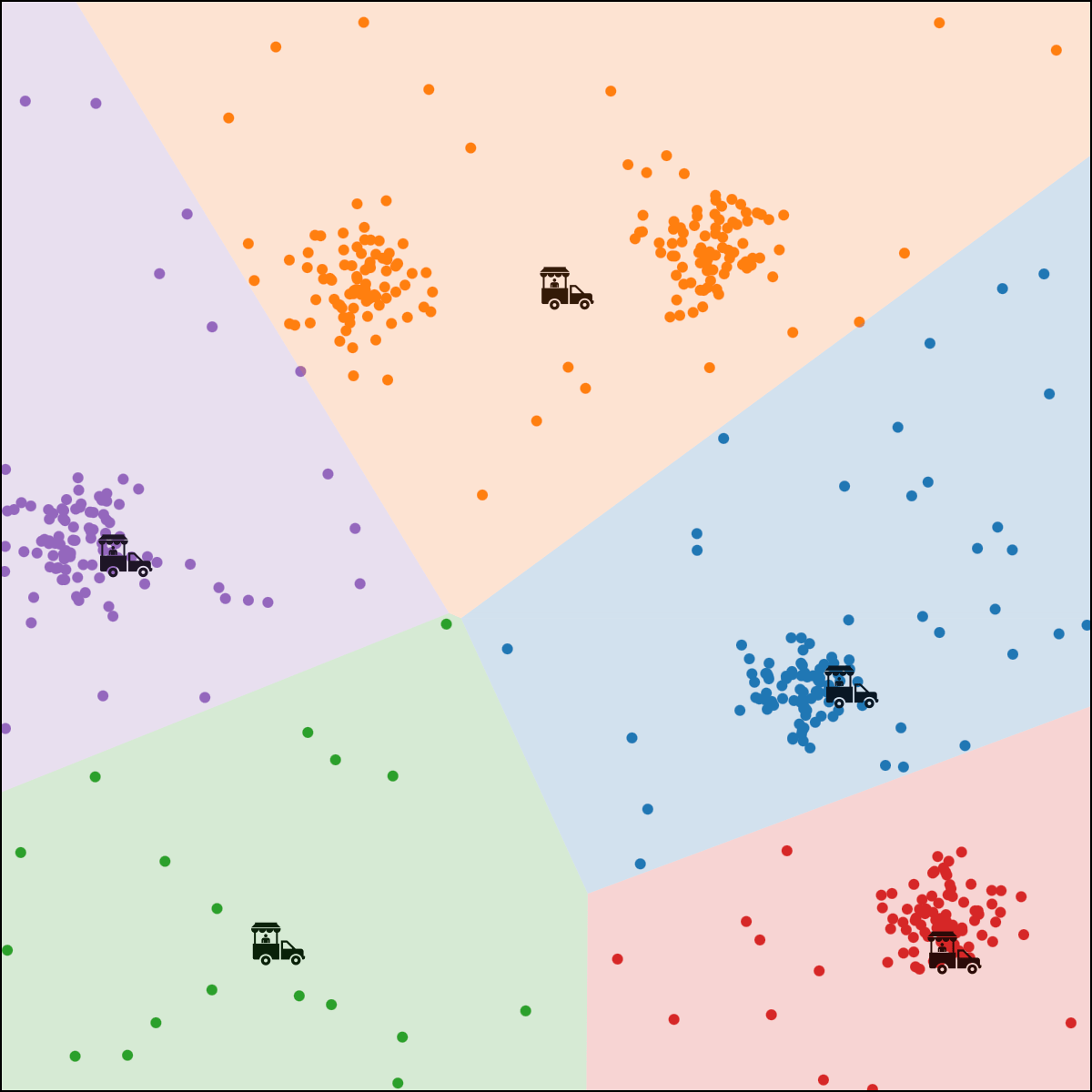

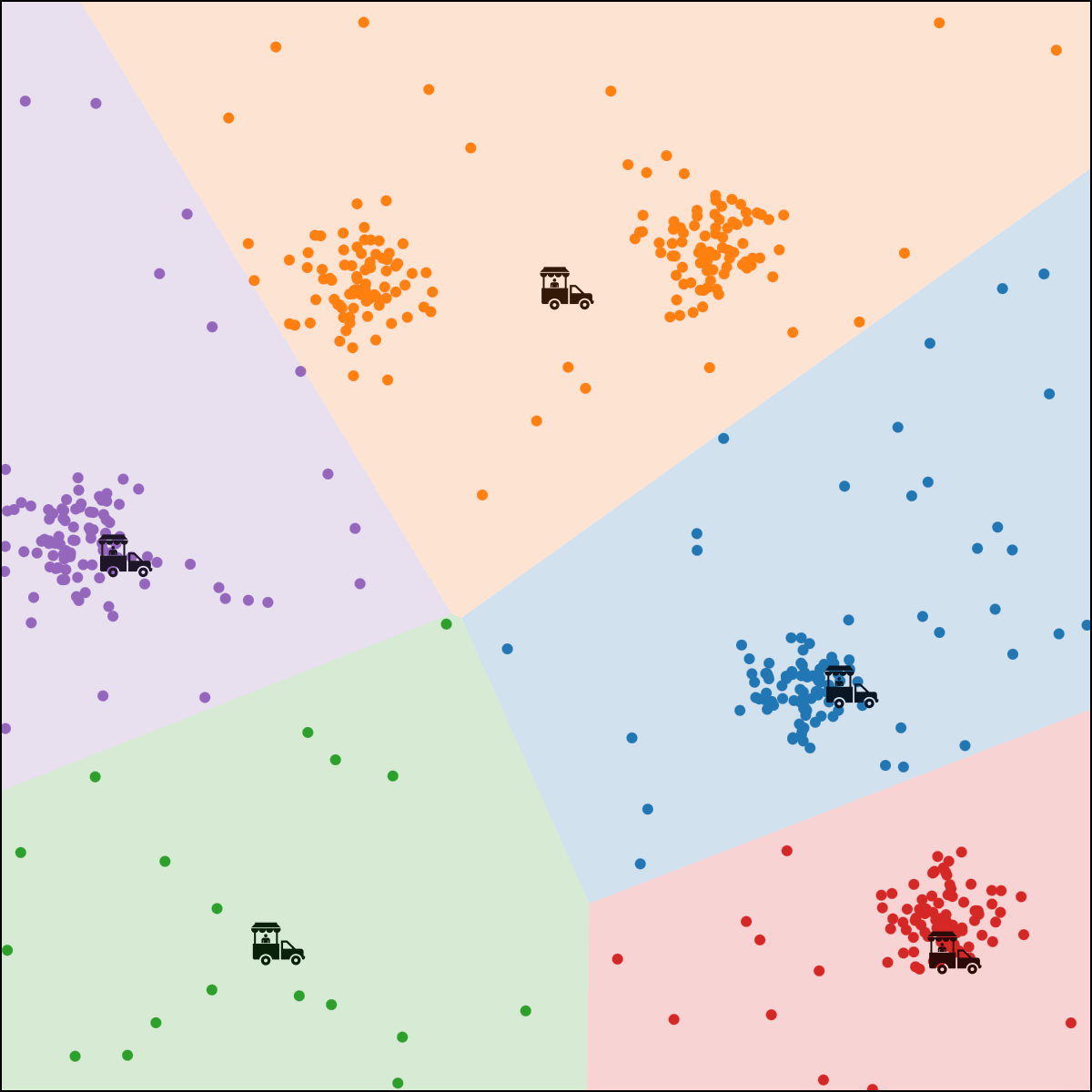

- \(x_1\): longitude, \(x_2\): latitude

- Person \(i\) location \(x^{(i)}\)

Food-truck placement

- \(x_1\): longitude, \(x_2\): latitude

- Person \(i\) location \(x^{(i)}\)

- Q: where should I have \(k\) food trucks park?

Food-truck placement

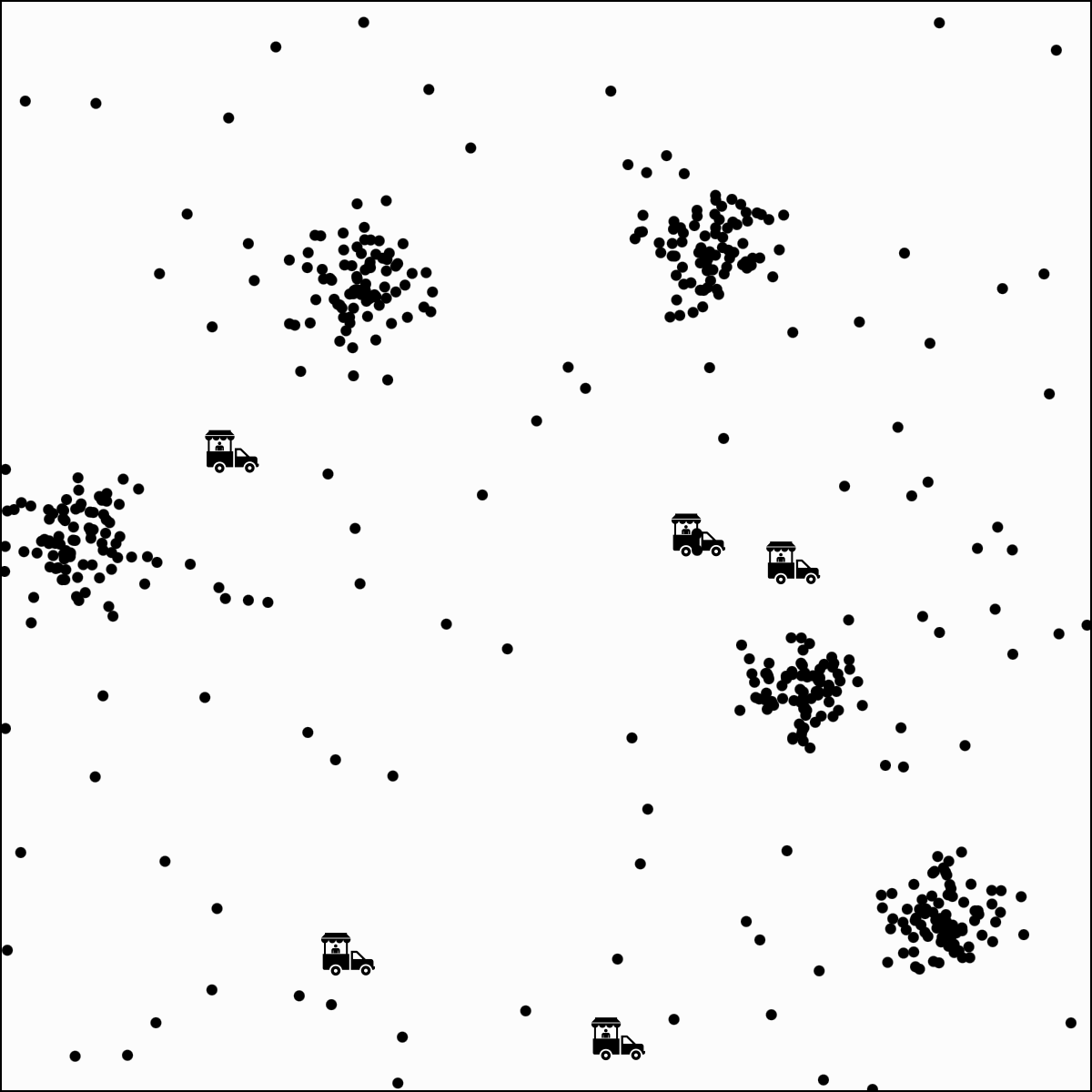

- \(x_1\): longitude, \(x_2\): latitude

- Person \(i\) location \(x^{(i)}\)

- Q: where should I have \(k\) food trucks park?

- Food truck \(j\) location \(\mu^{(j)}\)

Food-truck placement

- \(x_1\): longitude, \(x_2\): latitude

- Person \(i\) location \(x^{(i)}\)

- Q: where should I have \(k\) food trucks park?

- Food truck \(j\) location \(\mu^{(j)}\)

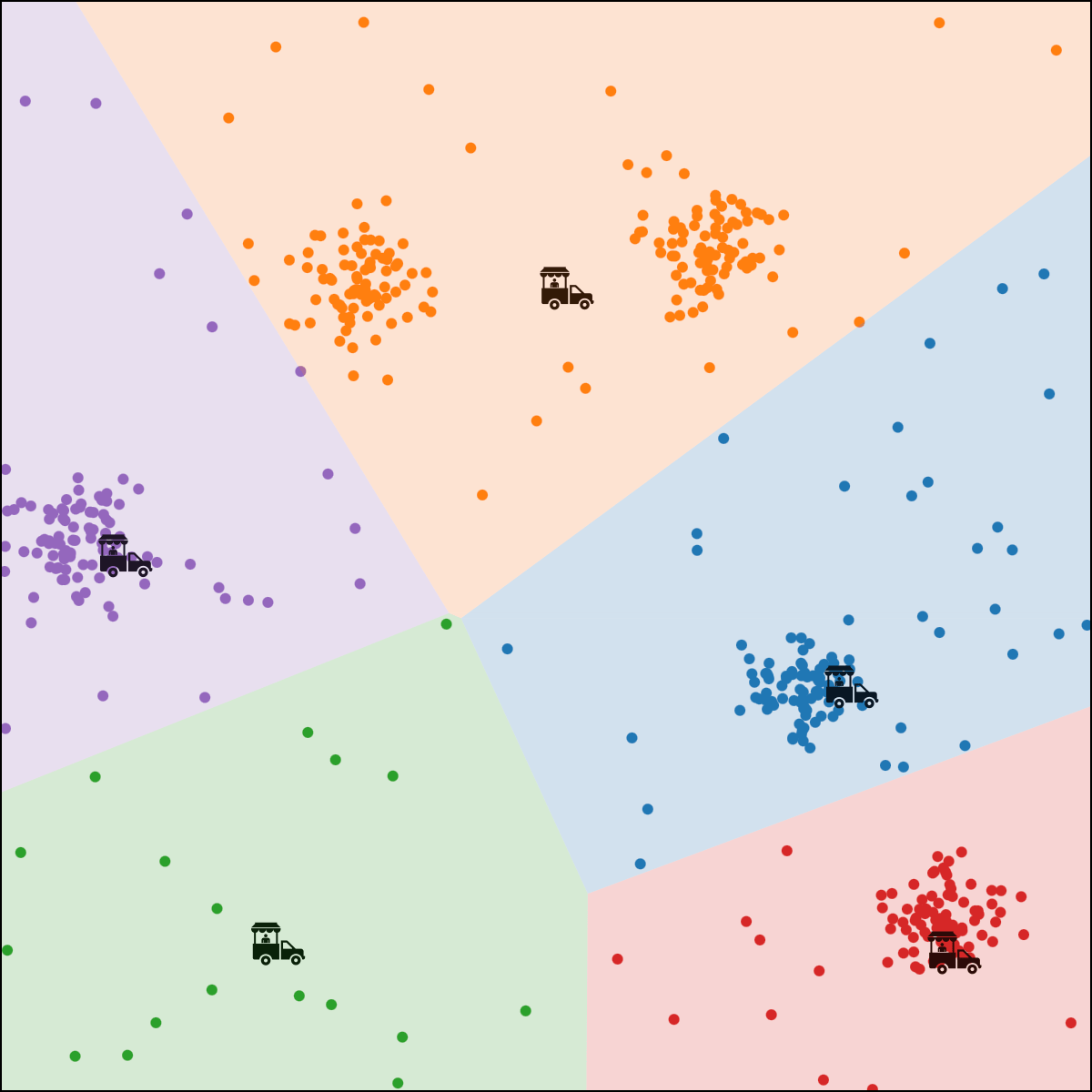

- Loss if \(i\) walks to truck \(j\) : \(\left\|x^{(i)}-\mu^{(j)}\right\|_2^2\)

Food-truck placement

- \(x_1\): longitude, \(x_2\): latitude

- Person \(i\) location \(x^{(i)}\)

- Q: where should I have \(k\) food trucks park?

- Food truck \(j\) location \(\mu^{(j)}\)

- Loss if \(i\) walks to truck \(j\) : \(\left\|x^{(i)}-\mu^{(j)}\right\|_2^2\)

- Index of the truck where person \(i\) walks: \(y^{(i)}\)

- Person \(i\) overall loss:

\(\sum_{j=1}^k \mathbf{1}\left\{y^{(i)}=j\right\}\left\|x^{(i)}-\mu^{(j)}\right\|_2^2\)

Food-truck placement

indicator function, 1 if person \(i\) is assigned to truck \(j,\) otherwise 0.

\( \sum_{j=1}^k \mathbf{1}\left\{y^{(i)}=j\right\}\left\|x^{(i)}-\mu^{(j)}\right\|_2^2\)

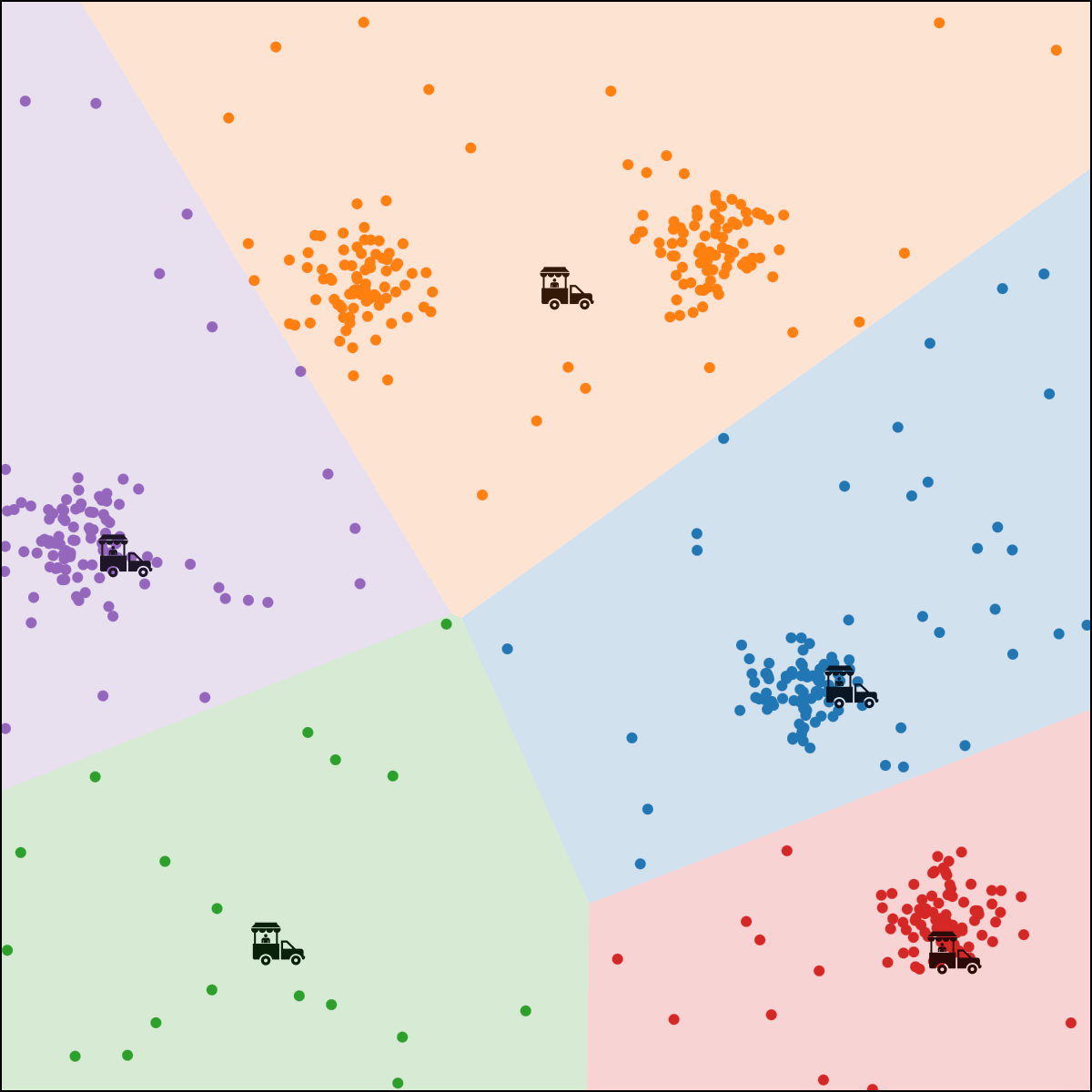

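\(k\)-means objective

\(\sum_{i=1}^n \sum_{j=1}^k \mathbf{1}\left\{y^{(i)}=j\right\}\left\|x^{(i)}-\mu^{(j)}\right\|_2^2\)

- \(\sum_{i=1}^n\) enumerates over data; \(\sum_{j=1}^k\) enumerates over clusters

- what we learn: the cluster memberships \(y^{(i)}\) and the cluster centroid locations \(\mu^{(j)}\)

- can switch the order: \(= \sum_{j=1}^k \sum_{i=1}^n \mathbf{1}\left\{y^{(i)}=j\right\}\left\|x^{(i)}-\mu^{(j)}\right\|_2^2\)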
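As a sanity check on the objective, the sketch below evaluates it in two ways: literally as the double sum with the indicator, and in the equivalent shortcut form where each point only contributes its squared distance to its own centroid. The function names and the toy data are hypothetical, and cluster indices are 0-based.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def kmeans_objective_double_sum(X, y, mu):
    """Literal double sum: the indicator keeps only the term with j == y[i]."""
    return sum(
        (1 if y[i] == j else 0) * sq_dist(X[i], mu[j])
        for i in range(len(X))
        for j in range(len(mu))
    )

def kmeans_objective(X, y, mu):
    """Same value: each point pays the squared distance to its assigned centroid."""
    return sum(sq_dist(X[i], mu[y[i]]) for i in range(len(X)))

# Hypothetical example: three people, two trucks.
X = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0)]
y = [0, 0, 1]
mu = [(0.0, 1.0), (10.0, 0.0)]

print(kmeans_objective_double_sum(X, y, mu), kmeans_objective(X, y, mu))
```

Swapping the order of the two sums does not change the value, since the same set of terms is added up either way.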

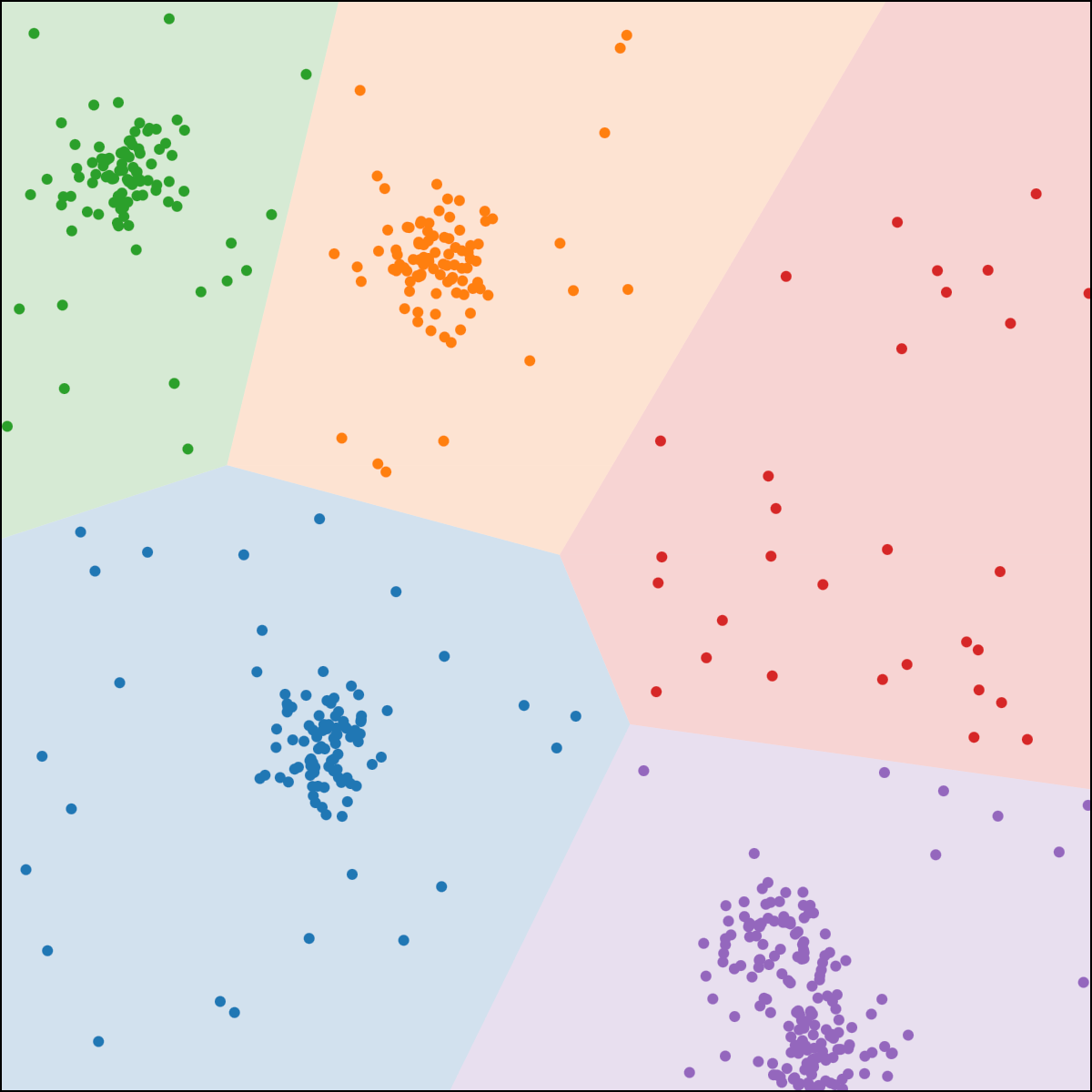

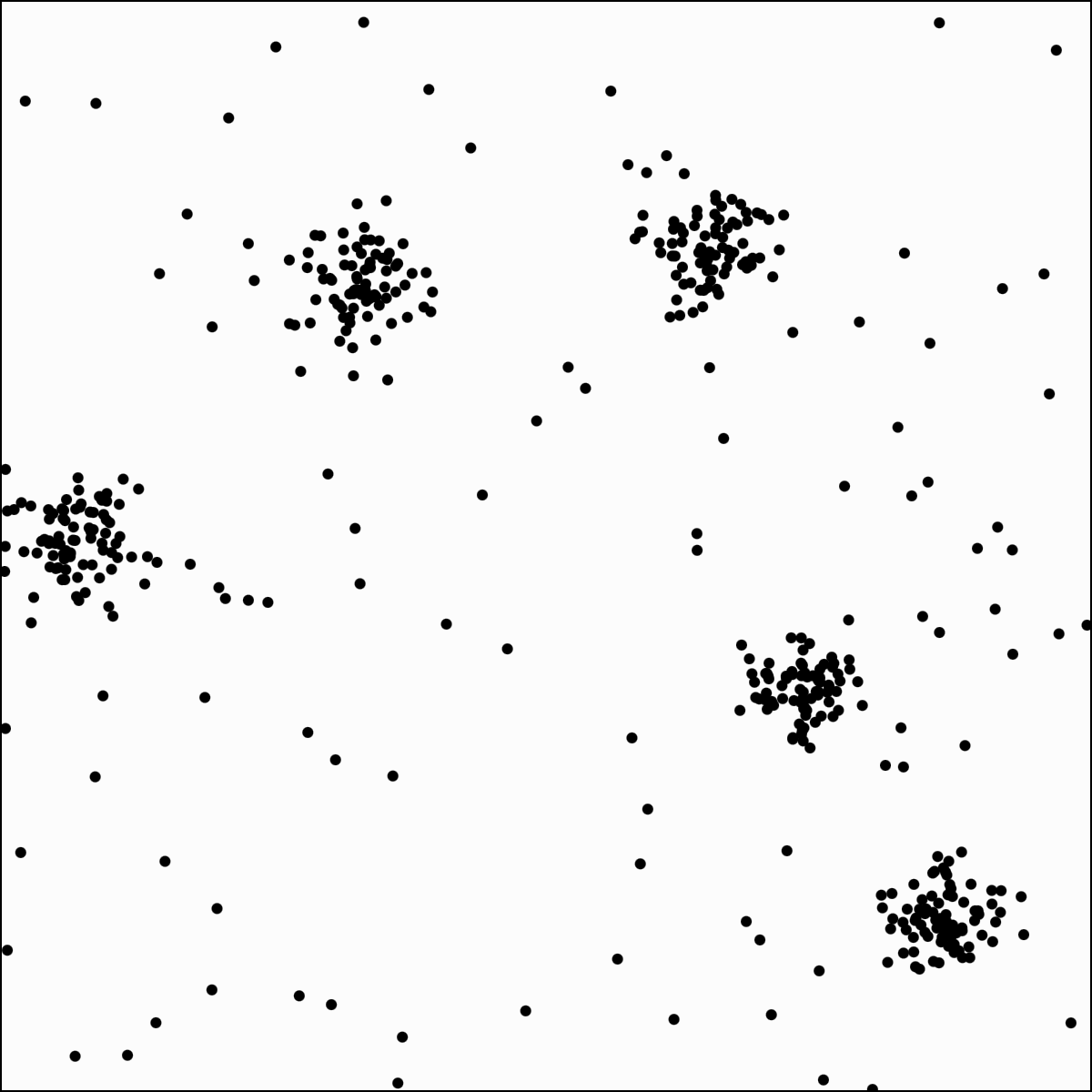

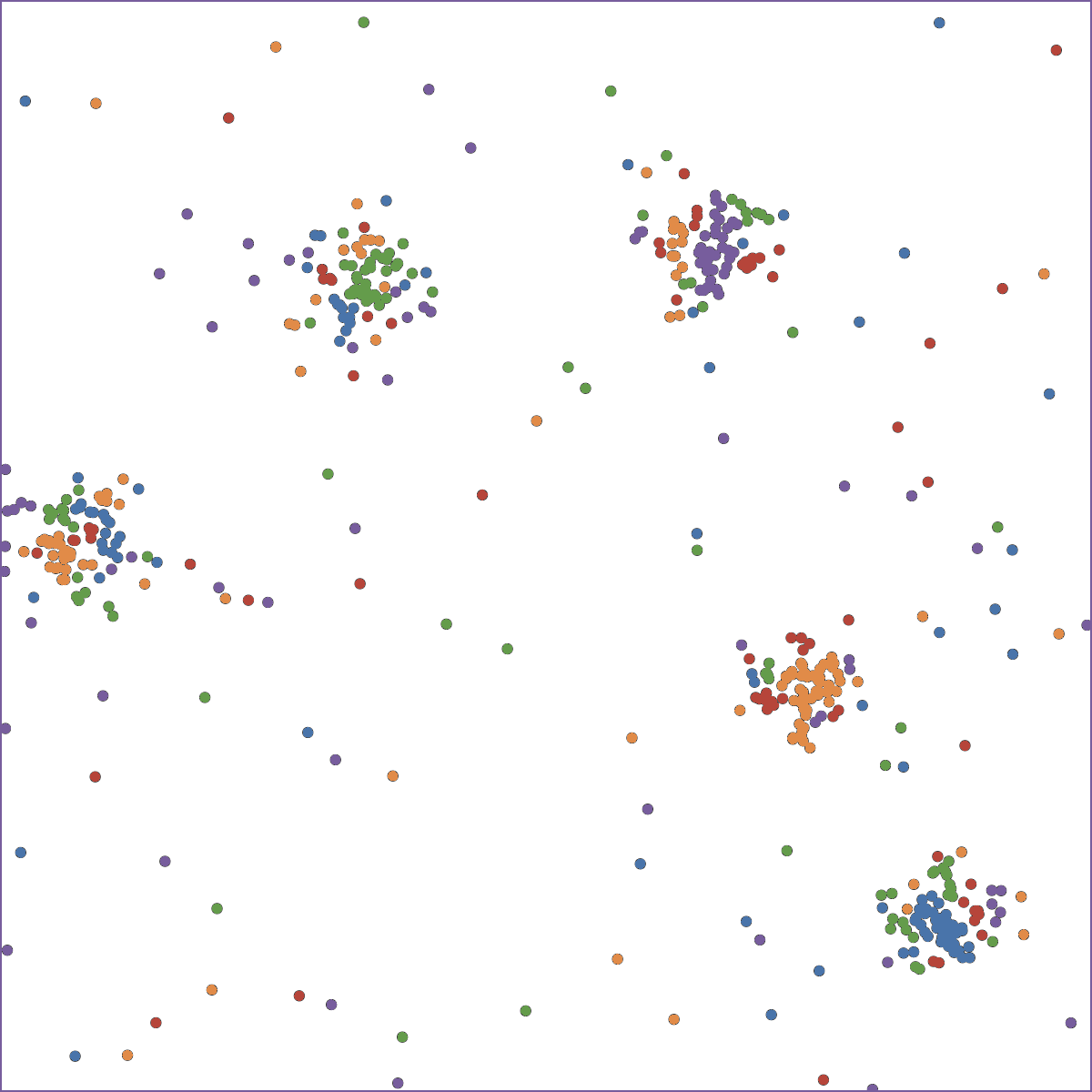

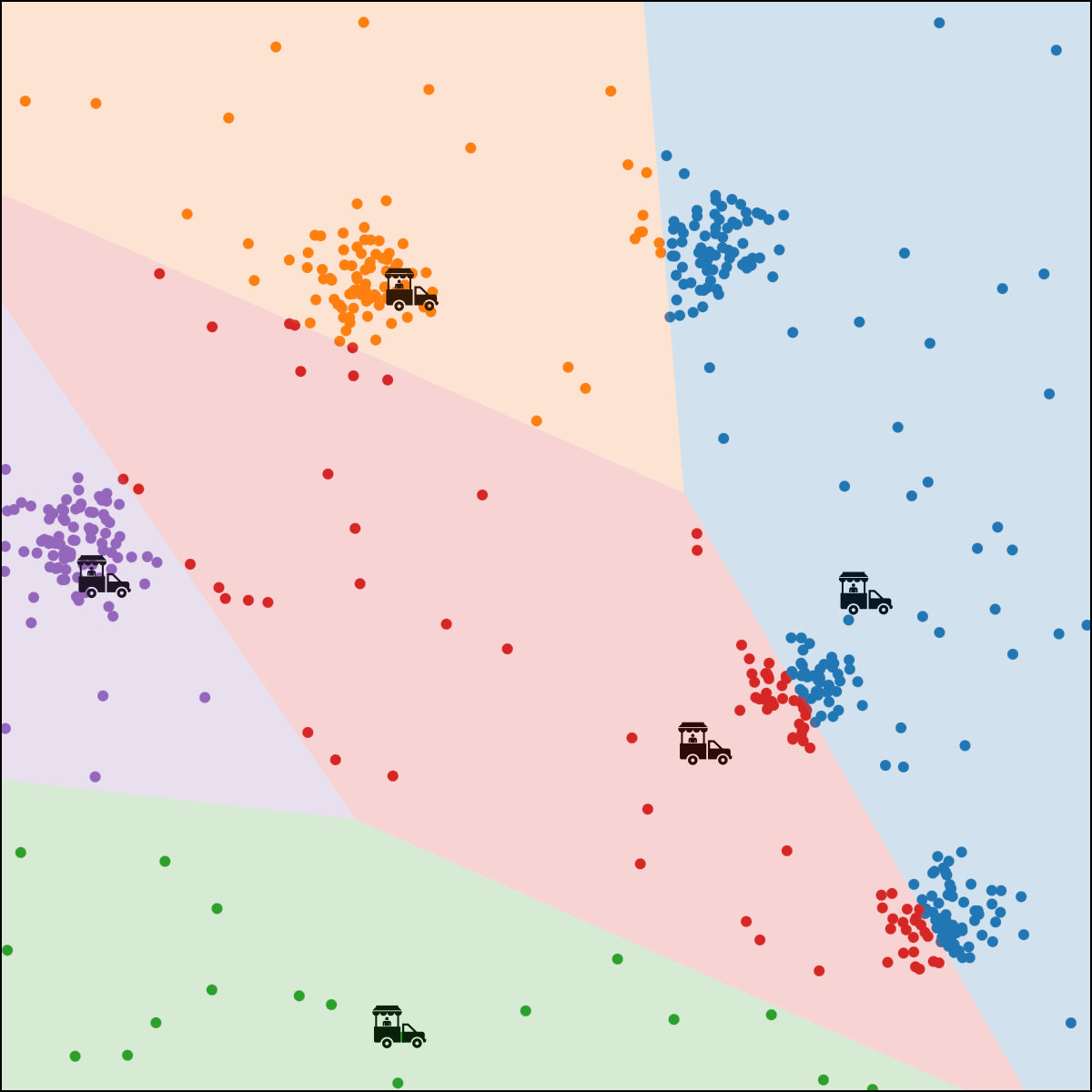

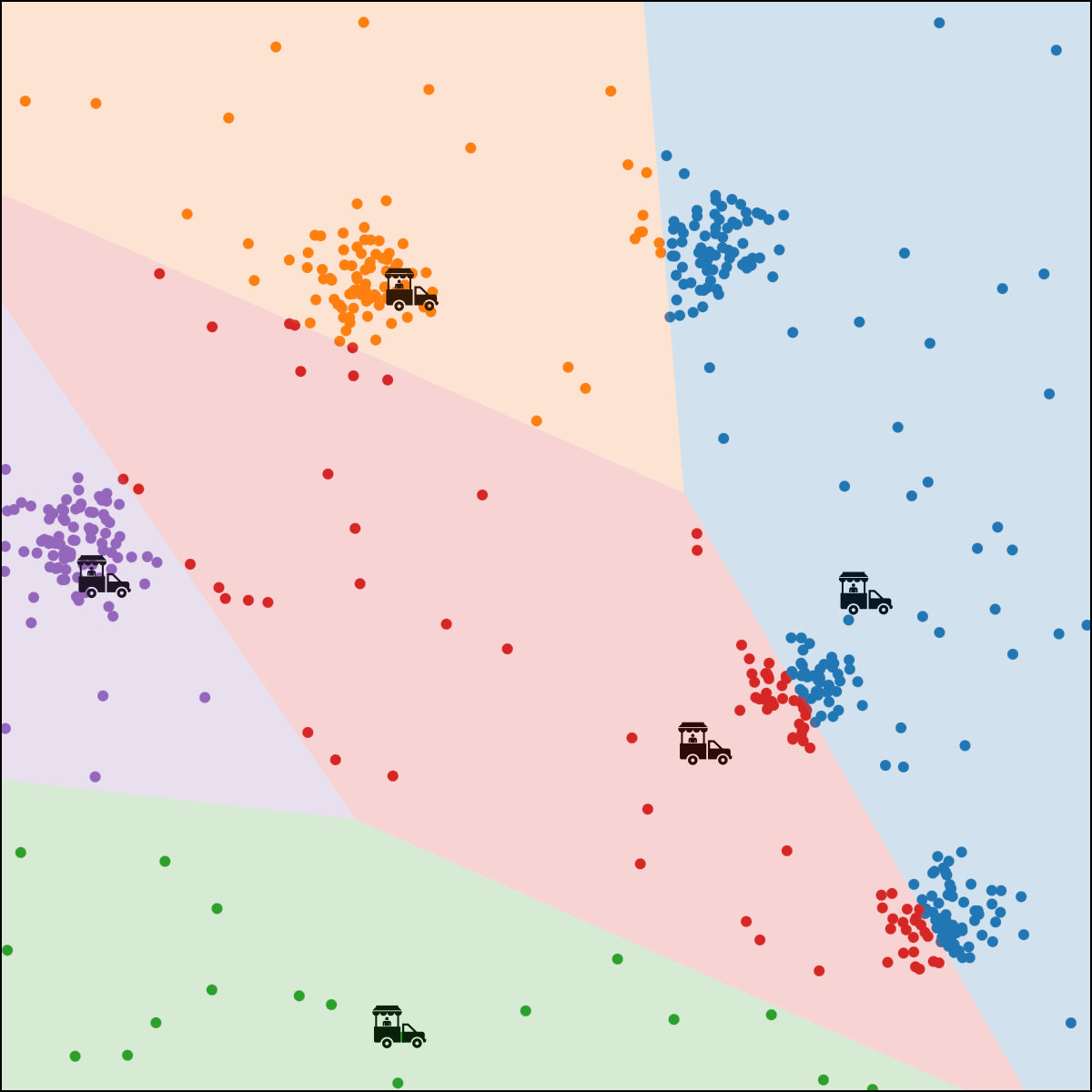

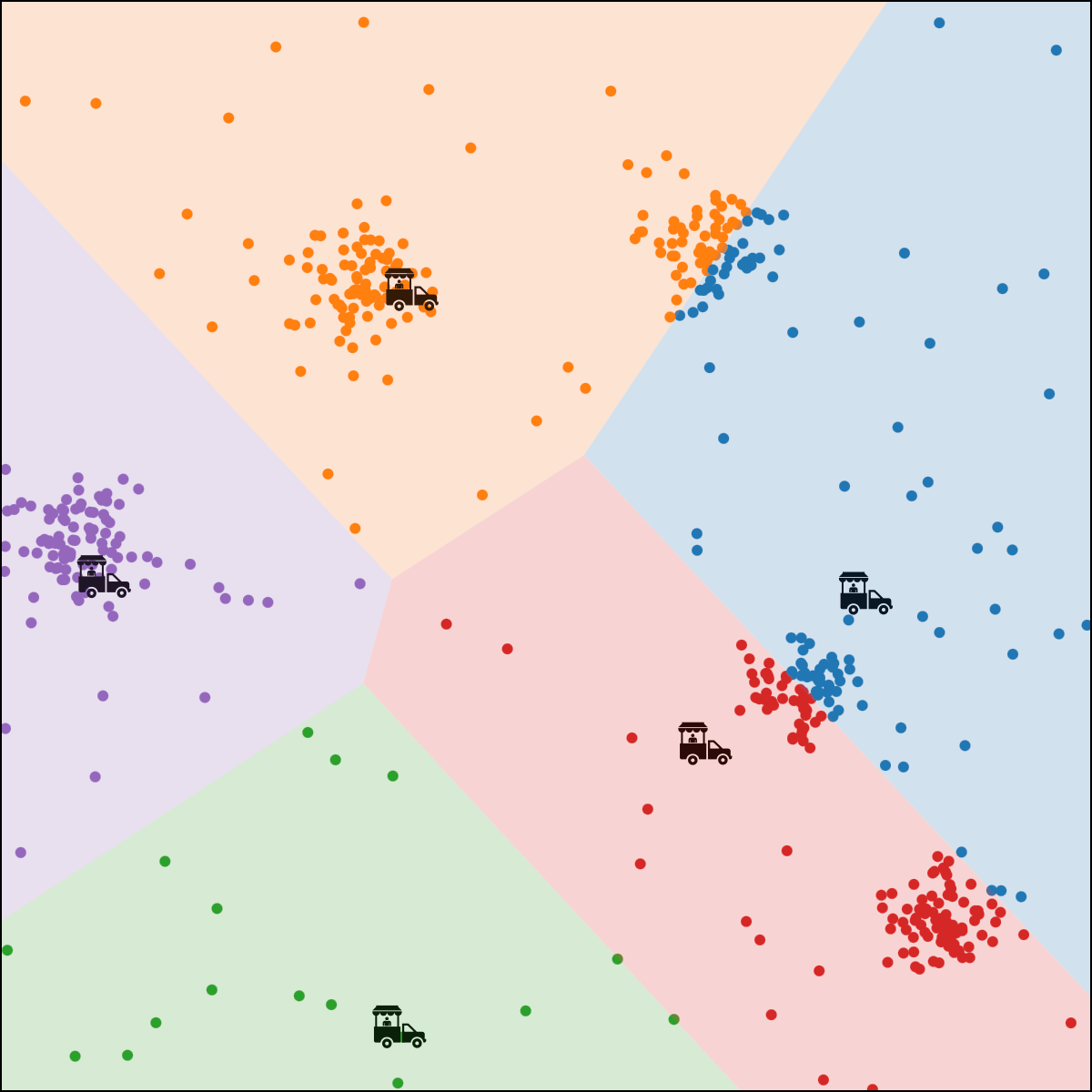

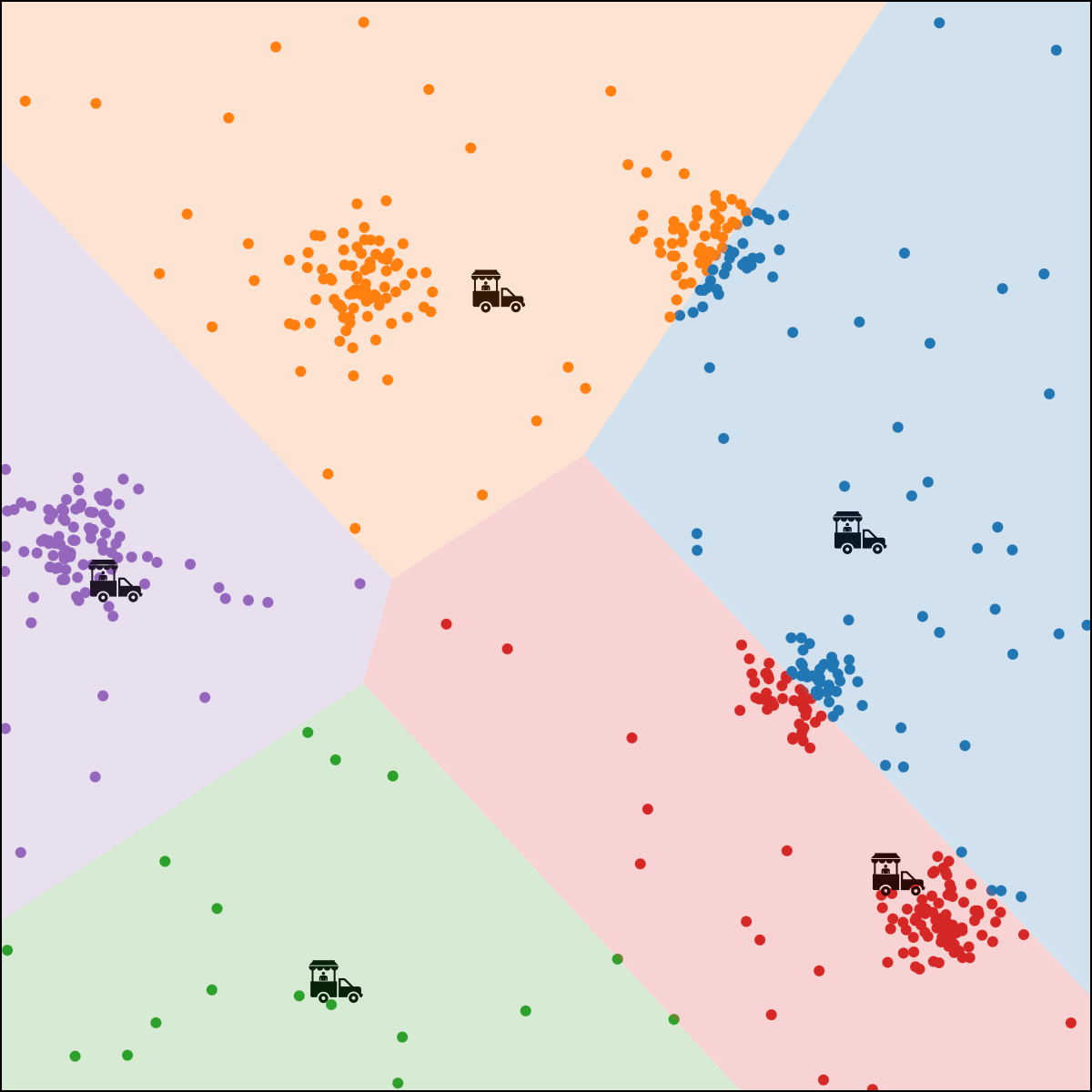

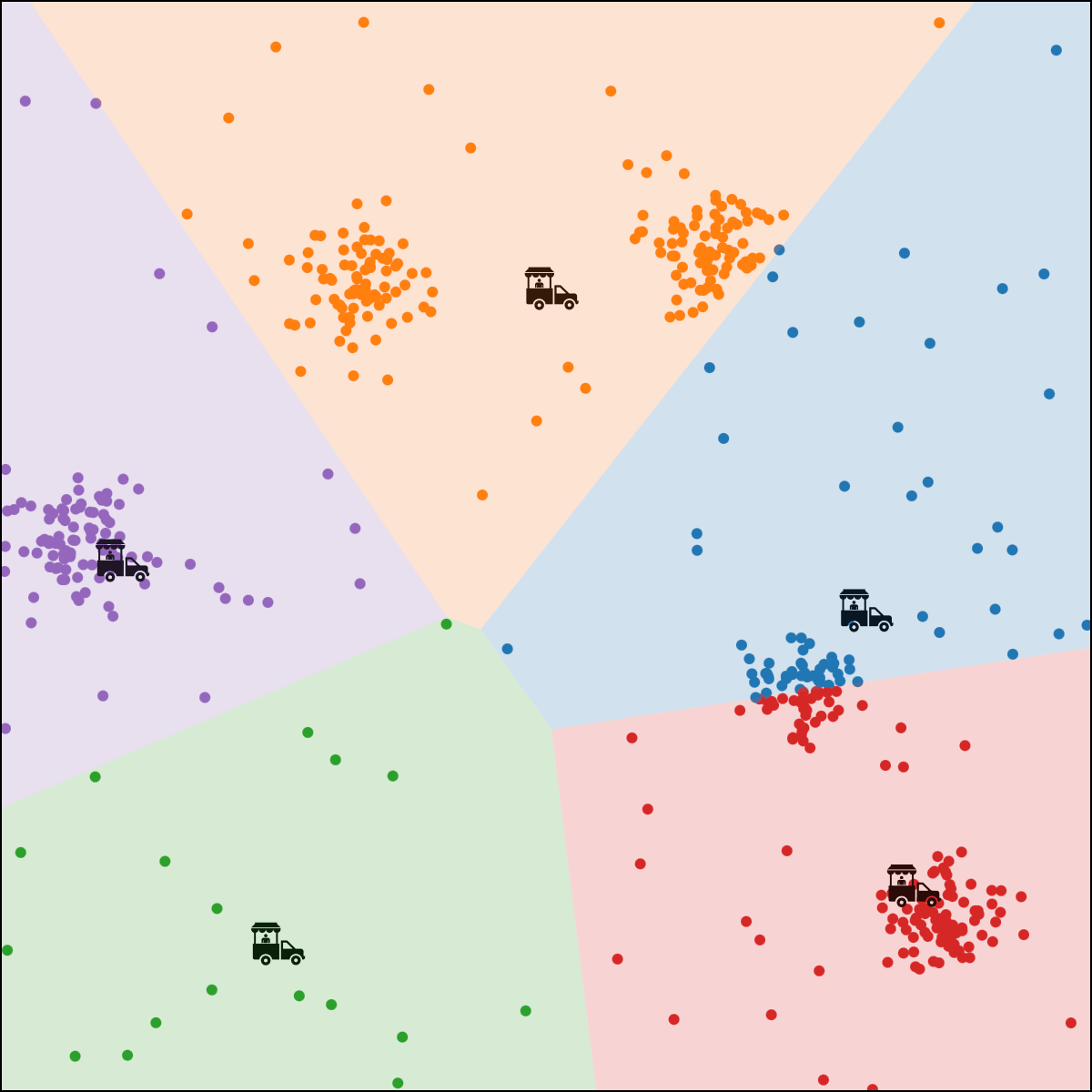

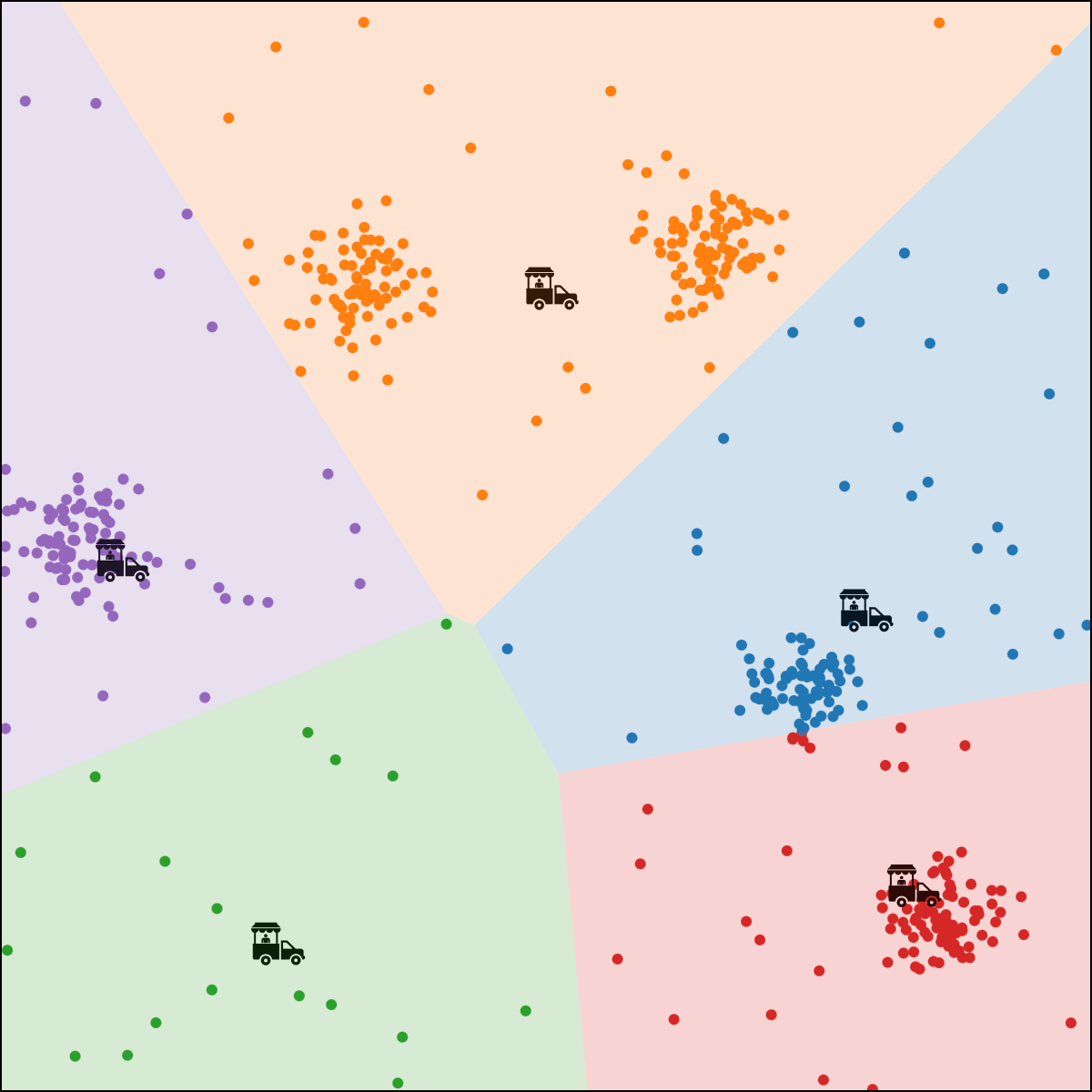

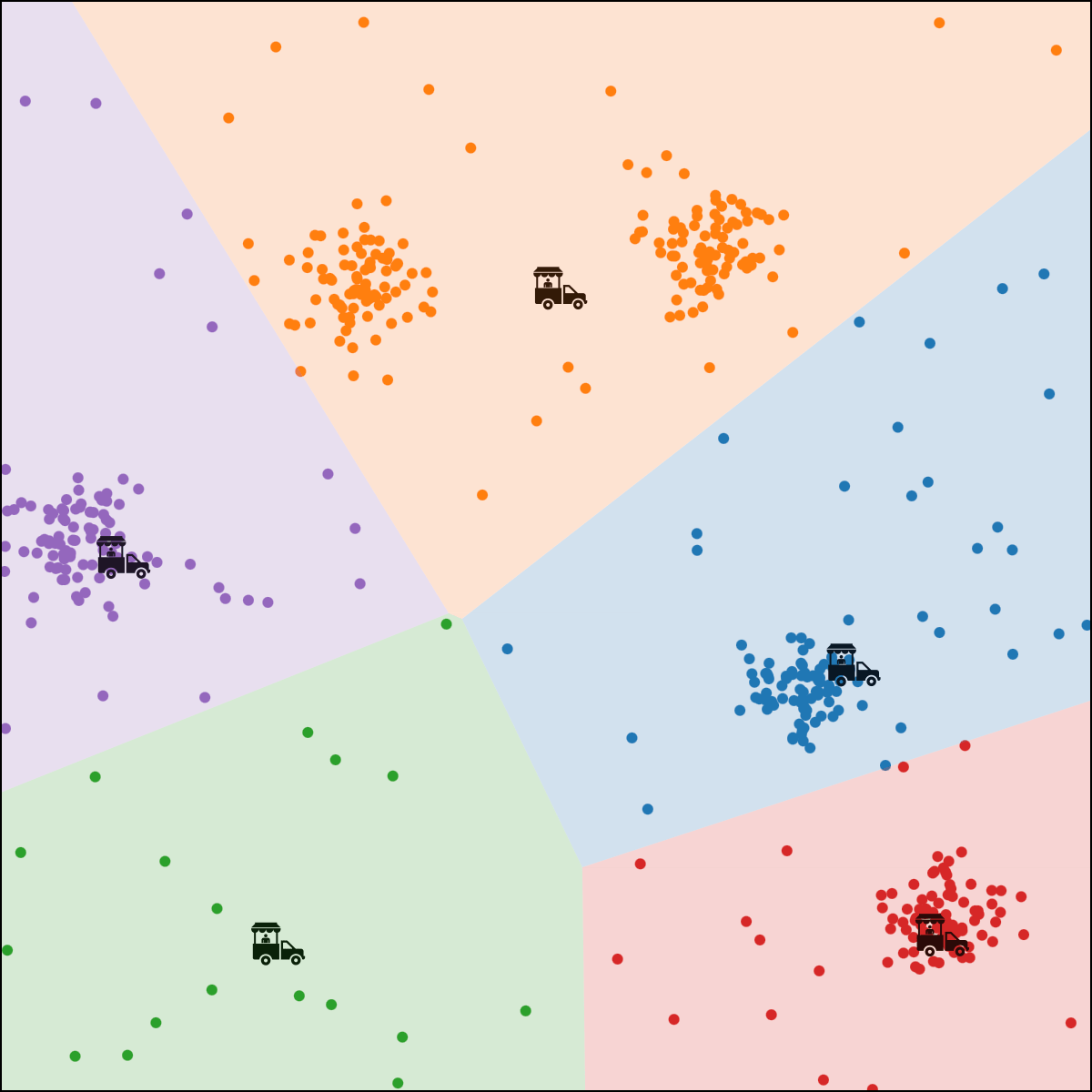

K-MEANS\((k, \tau, \left\{x^{(i)}\right\}_{i=1}^n)\)

1 \(\mu, y=\) random initialization

2 for \(t=1\) to \(\tau\)

3 \(\quad y_{\text {old }} = y\)

4 \(\quad\) for \(i=1\) to \(n\)

5 \(\quad \quad y^{(i)}=\arg \min _j\left\|x^{(i)}-\mu^{(j)}\right\|^2\)

6 \(\quad\) for \(j=1\) to \(k\)

7 \(\quad \quad \mu^{(j)}=\frac{1}{N_j} \sum_{i=1}^n \mathbf{1}\left(y^{(i)}=j\right) x^{(i)}\)

8 \(\quad\) if \(y==y_{\text {old }}\)

9 \(\quad \quad\) break

10 return \(\mu, y\)

Here \(N_j=\sum_{i=1}^n \mathbf{1}\left(y^{(i)}=j\right)\) is the number of points currently assigned to cluster \(j\).
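A minimal Python sketch of the K-MEANS pseudocode follows. Some choices are assumptions rather than part of the pseudocode: the means are initialized to \(k\) distinct data points chosen at random (the assignments start out empty), a cluster that receives no points keeps its previous mean, ties in the assignment step go to the lowest cluster index, and a fixed seed makes the run repeatable. Cluster indices are 0-based.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def k_means(k, tau, X, seed=0):
    rng = random.Random(seed)
    mu = [tuple(x) for x in rng.sample(X, k)]      # line 1 (assumed initialization)
    y = [None] * len(X)
    for _ in range(tau):                           # line 2
        y_old = list(y)                            # line 3
        for i, x in enumerate(X):                  # lines 4-5: assign to nearest mean
            y[i] = min(range(k), key=lambda j: sq_dist(x, mu[j]))
        for j in range(k):                         # lines 6-7: recompute each mean
            members = [X[i] for i in range(len(X)) if y[i] == j]
            if members:                            # assumption: empty cluster keeps its mean
                mu[j] = tuple(sum(c) / len(members) for c in zip(*members))
        if y == y_old:                             # lines 8-9: assignments stopped changing
            break
    return mu, y                                   # line 10

# Hypothetical data: two well-separated groups of three people each.
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
     (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
mu, y = k_means(k=2, tau=20, X=X)
print(mu, y)
```

Each pass alternates between the two updates in the pseudocode: fix the truck locations and let every person pick the nearest truck, then fix the assignments and move every truck to the mean of its customers.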

K-MEANS\((k, \tau, \left\{x^{(i)}\right\}_{i=1}^n)\)

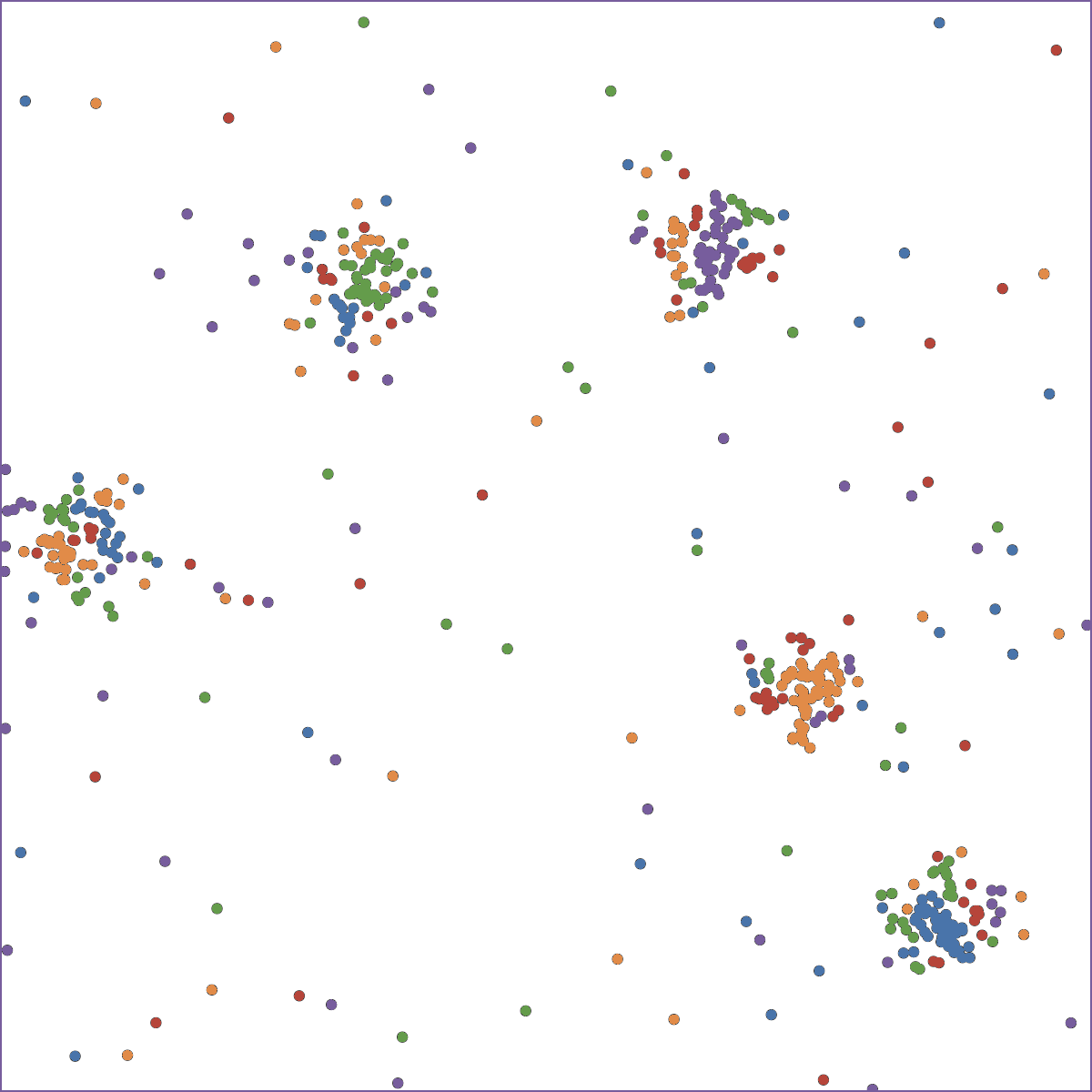

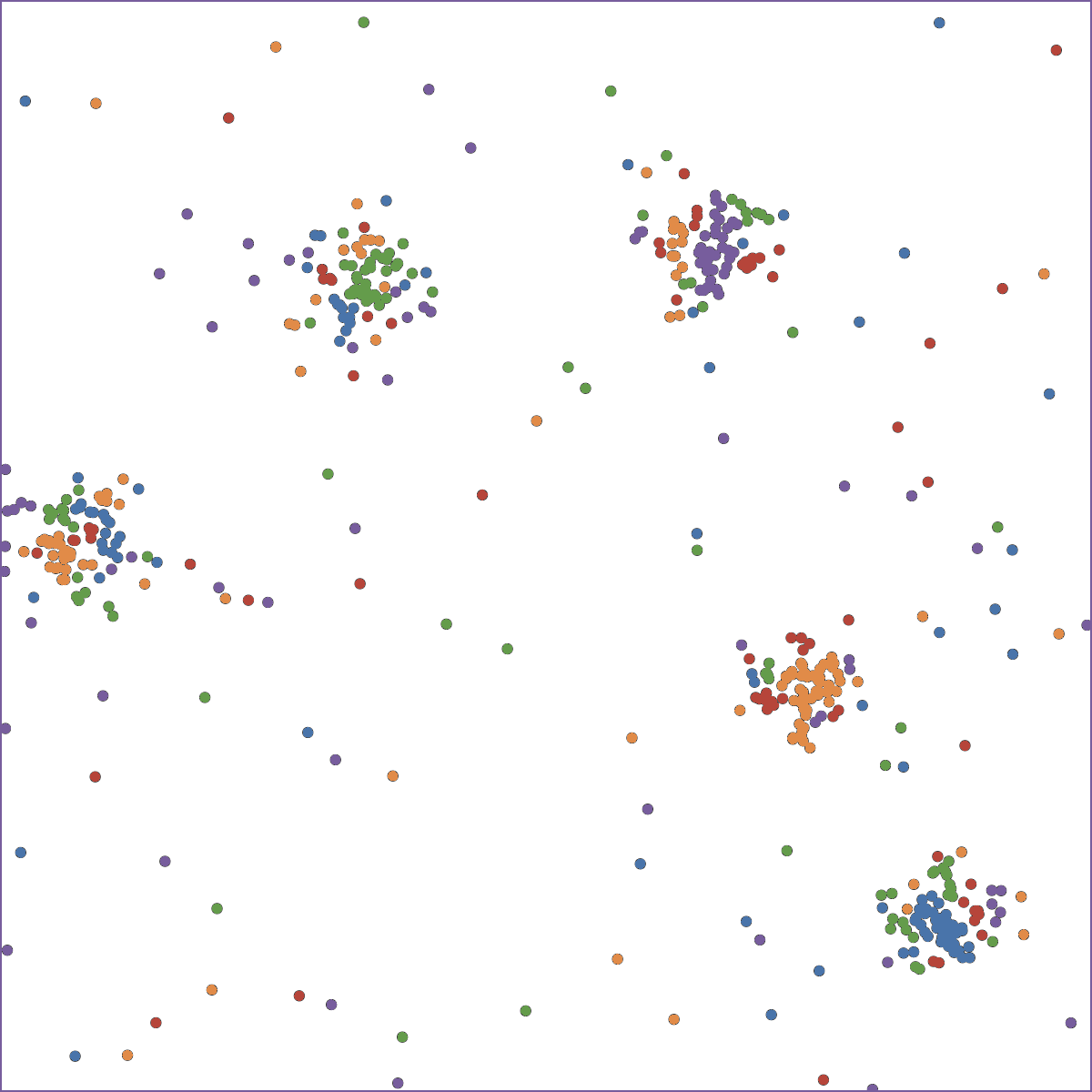

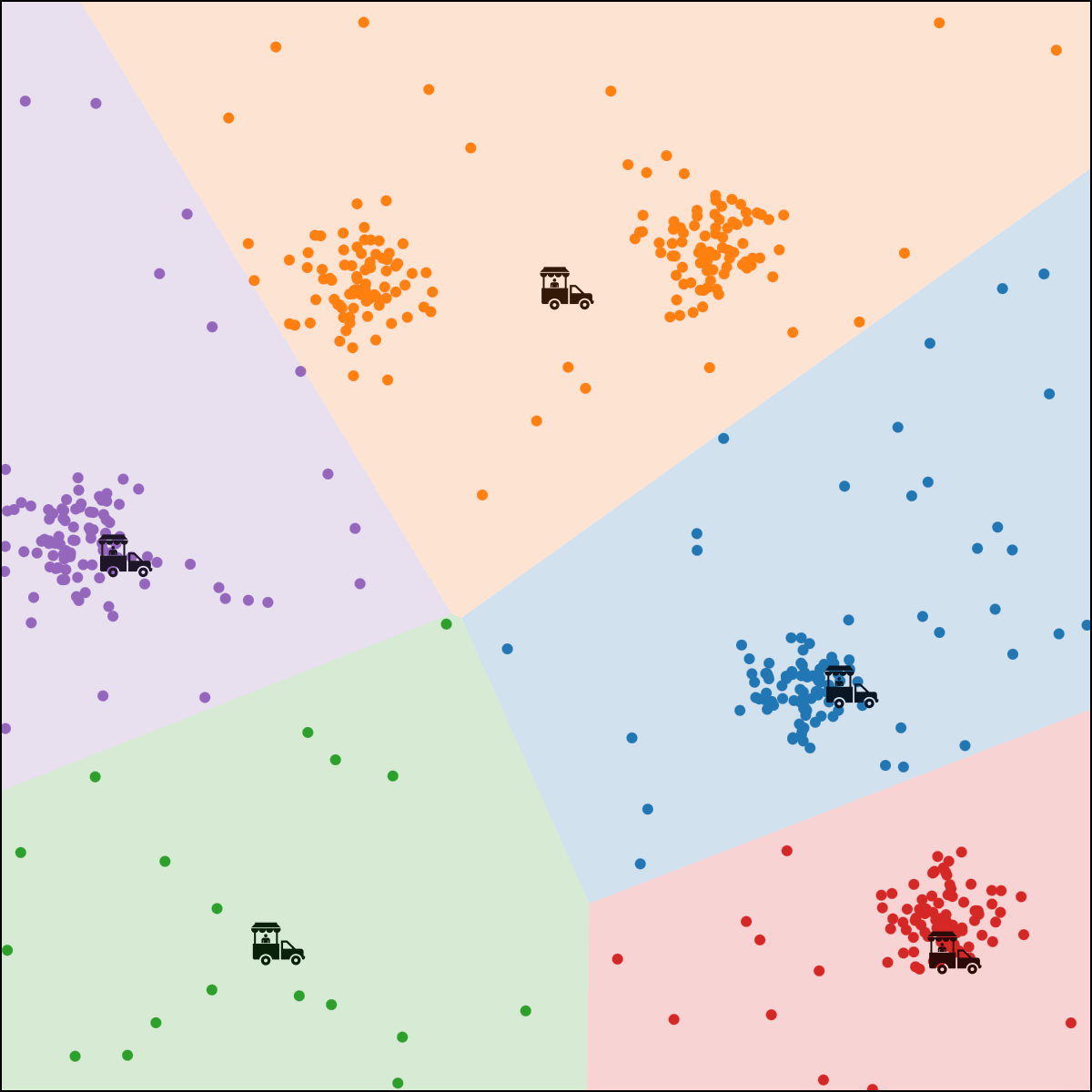

4 for \(i=1\) to \(n\)

5 \(\quad y^{(i)}=\arg \min _j\left\|x^{(i)}-\mu^{(j)}\right\|^2\)

Assignment step: each person \(i\) gets assigned to the nearest food truck \(j\) (color-coded).
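A single pass of the assignment step (lines 4-5) might look like the sketch below. The names `assign`, `people`, and `trucks` and the coordinates are hypothetical, chosen to match the food-truck picture; ties go to the lowest-indexed truck here, which is a choice the pseudocode does not specify.

```python
def assign(xs, mus):
    """For each point, return the index of its nearest centroid."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    # min() scans centroids in index order, so ties go to the lowest index.
    return [min(range(len(mus)), key=lambda j: sq_dist(x, mus[j])) for x in xs]


people = [(0.0, 0.0), (1.0, 1.0), (9.0, 9.0)]
trucks = [(0.0, 1.0), (10.0, 10.0)]
print(assign(people, trucks))  # [0, 0, 1]
```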

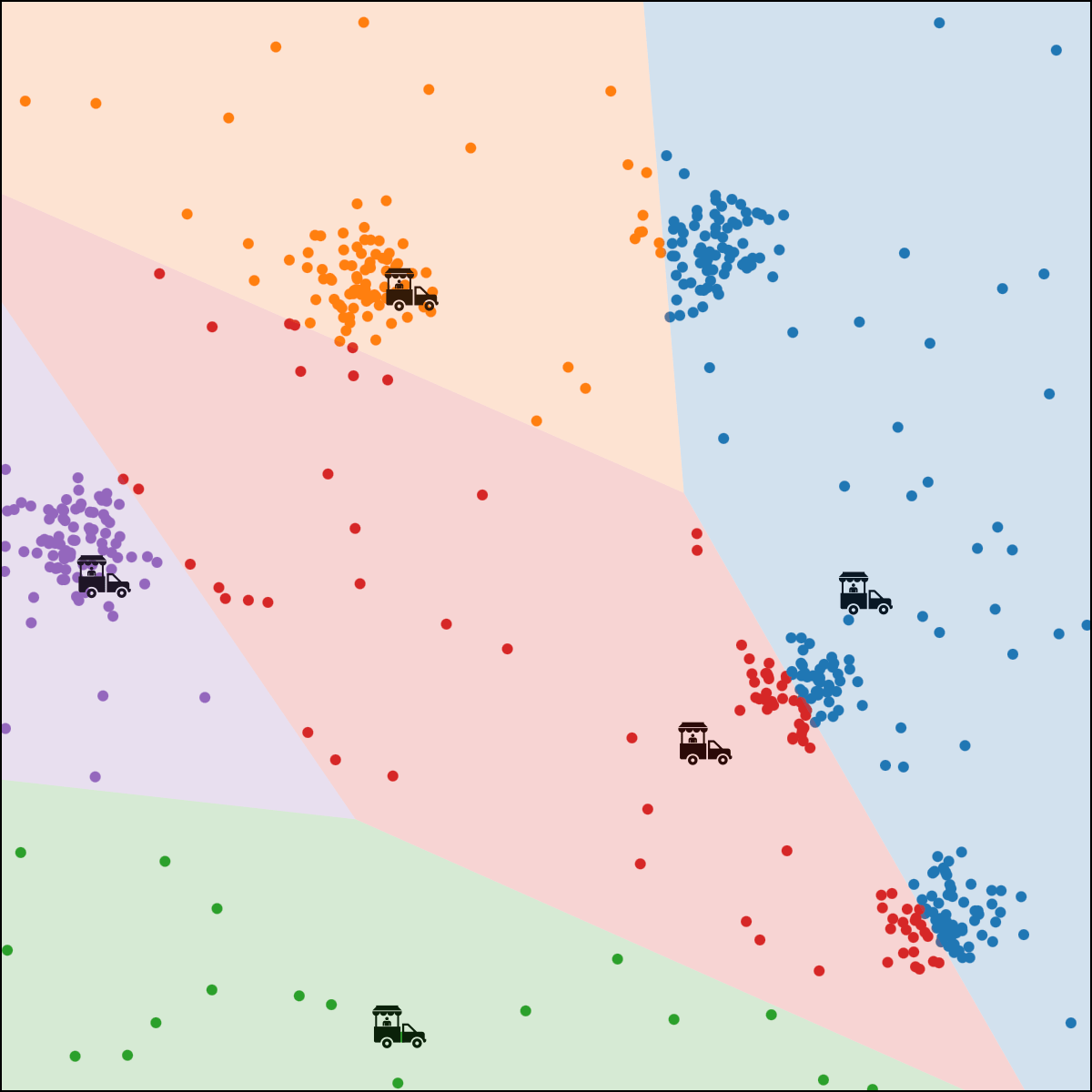

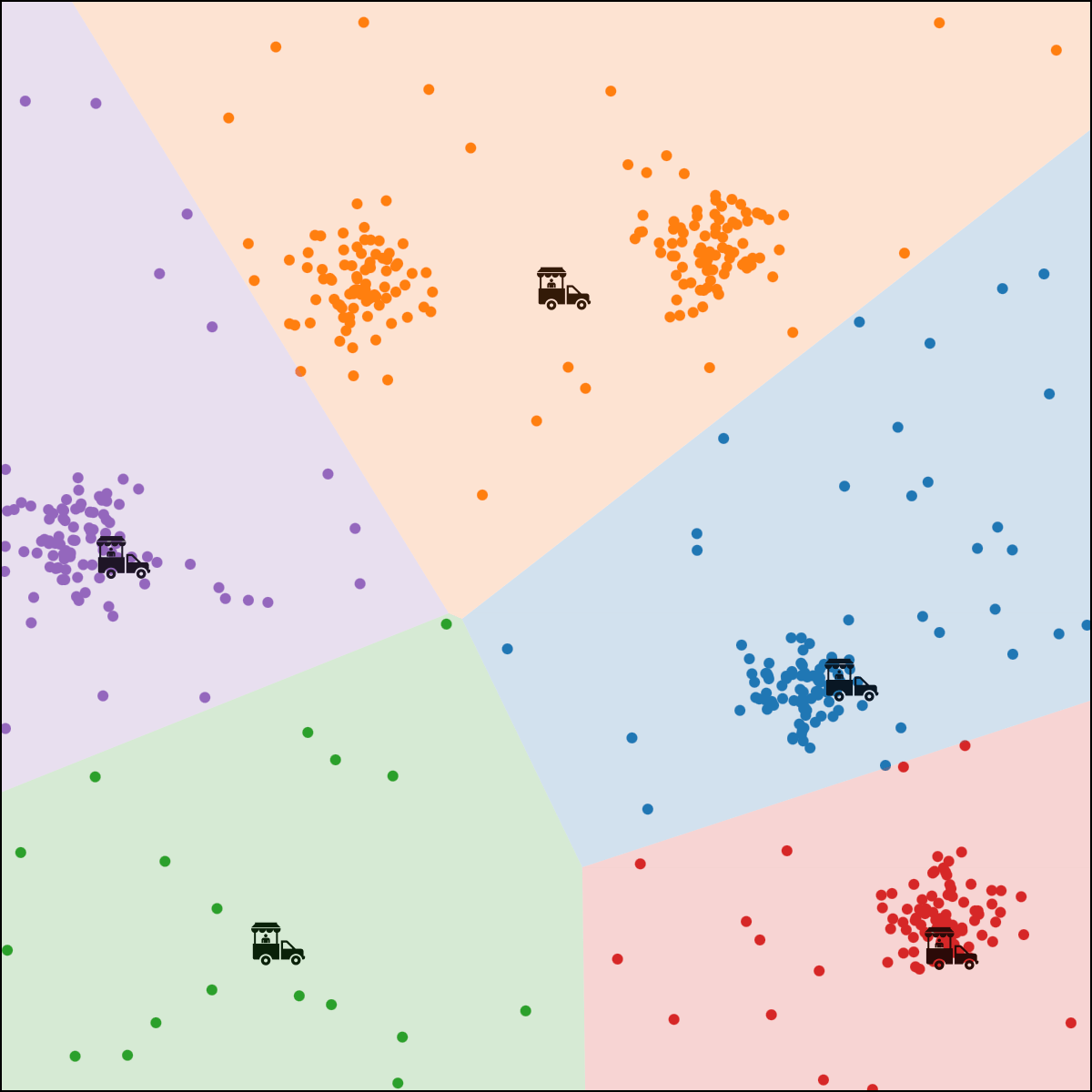

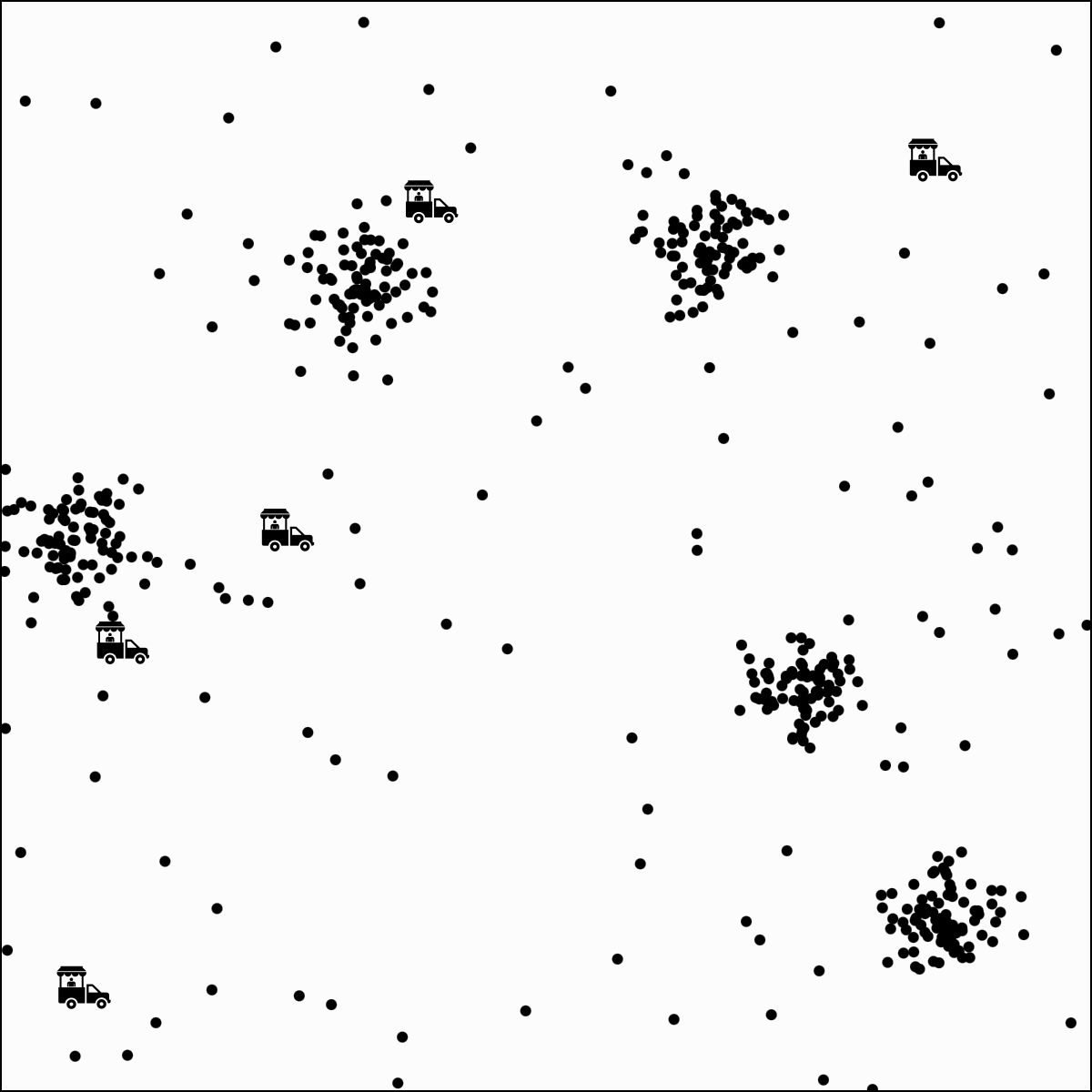

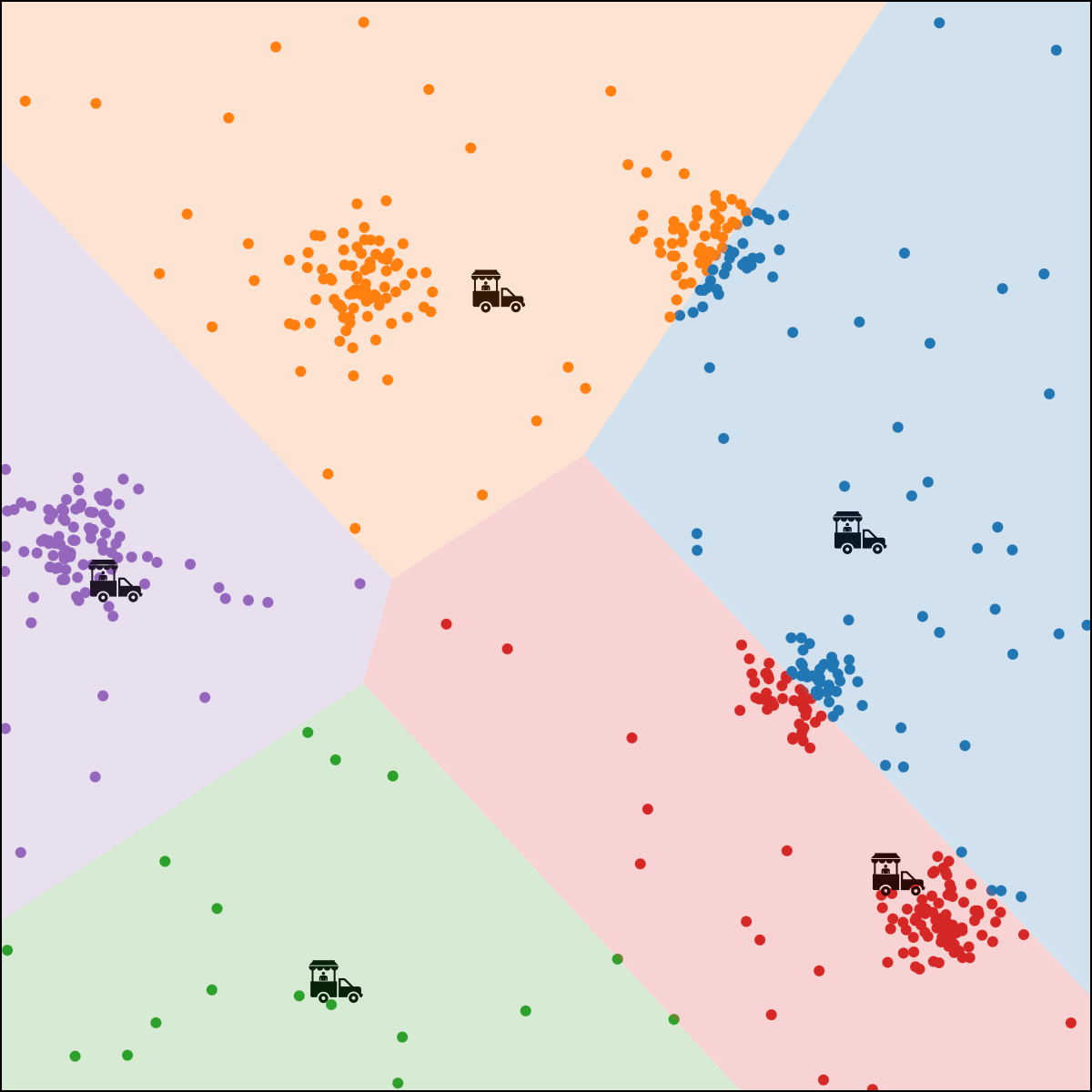

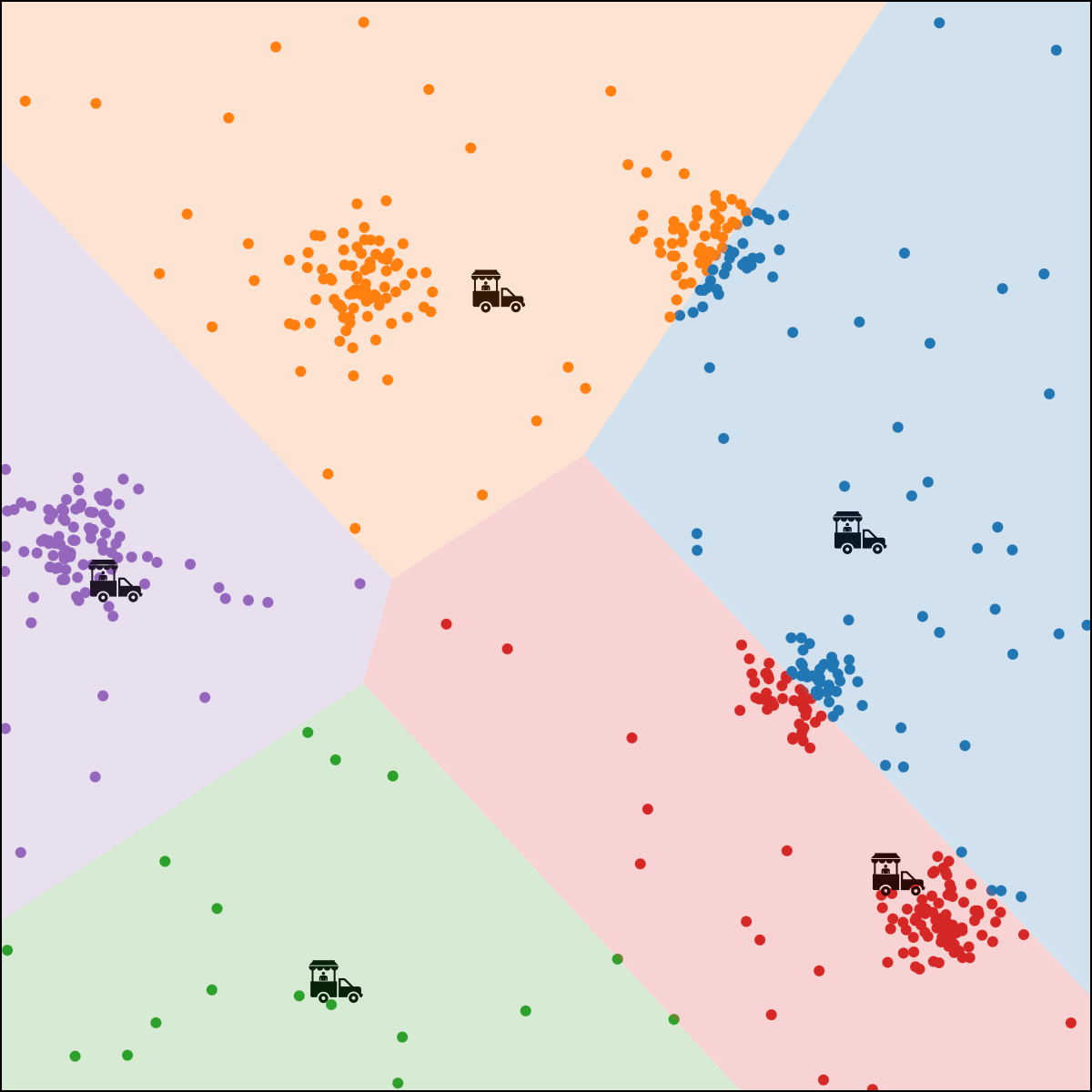

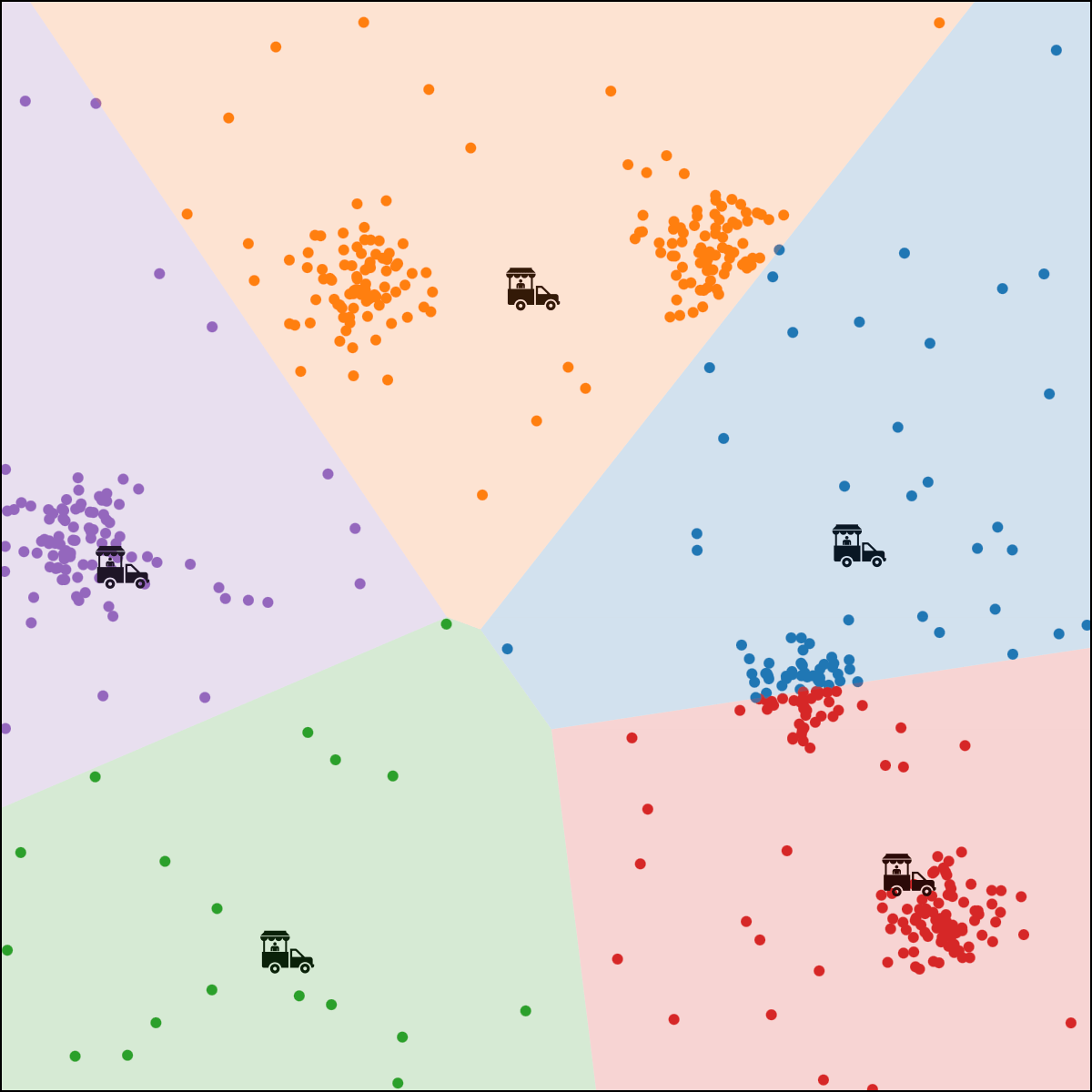

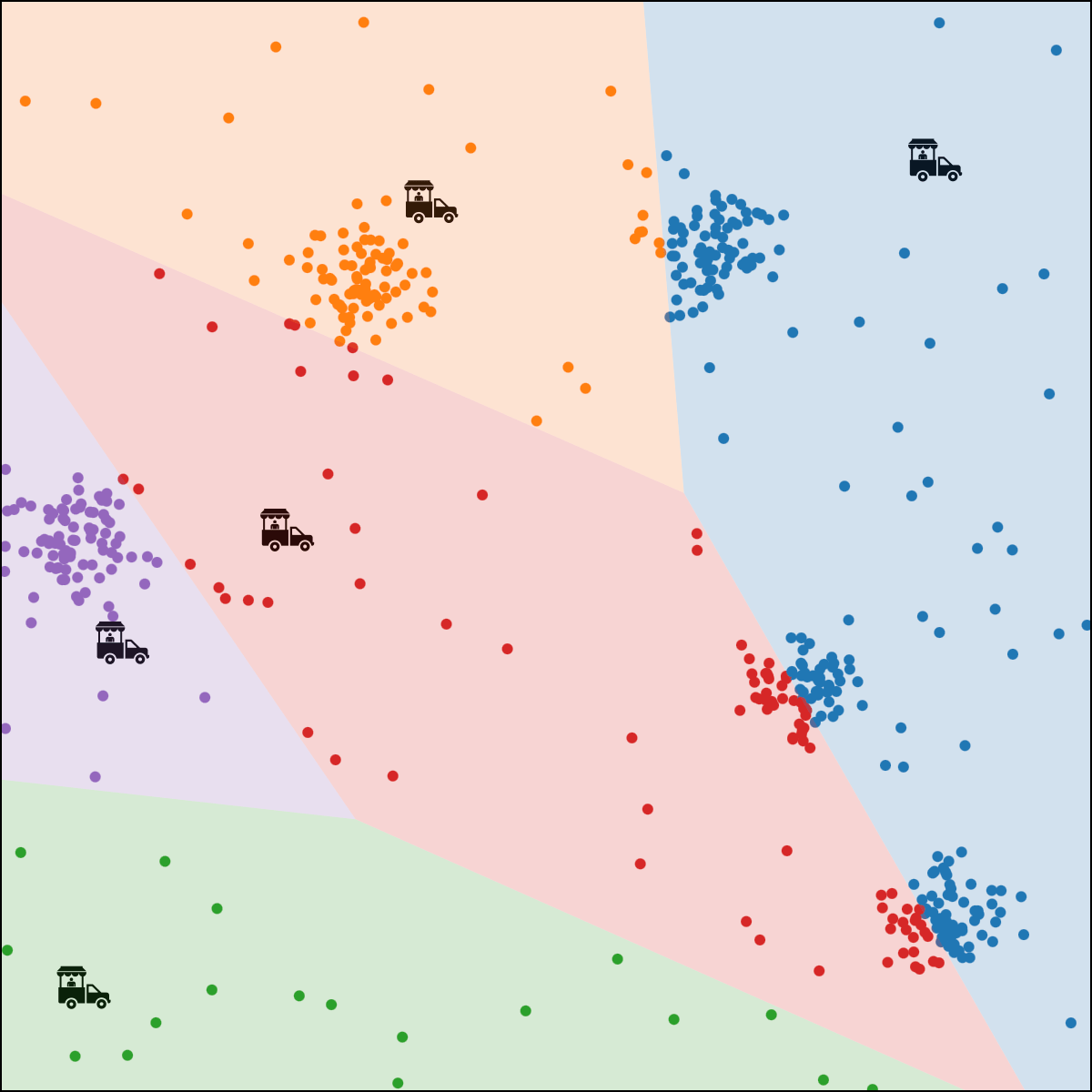

K-MEANS\((k, \tau, \left\{x^{(i)}\right\}_{i=1}^n)\)

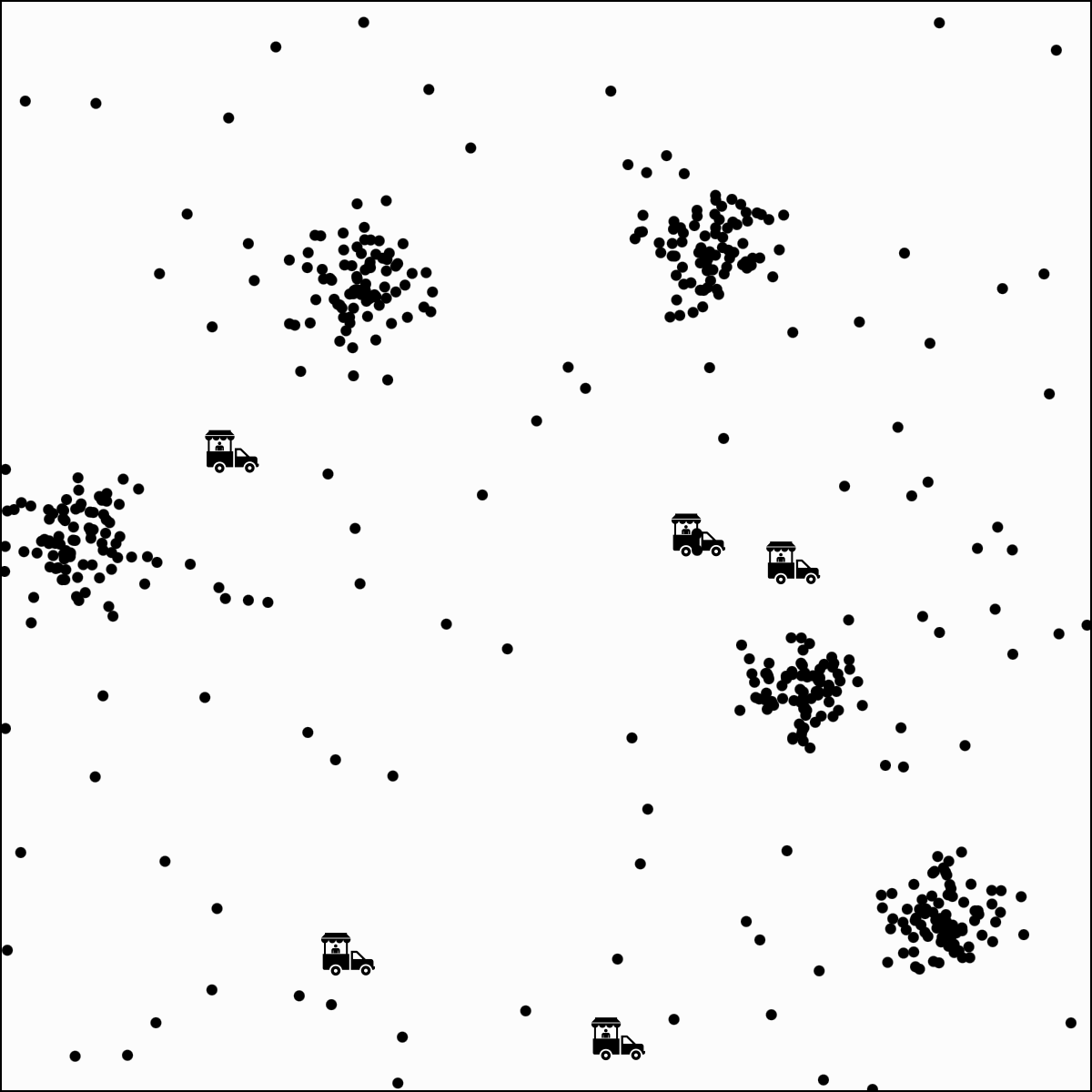

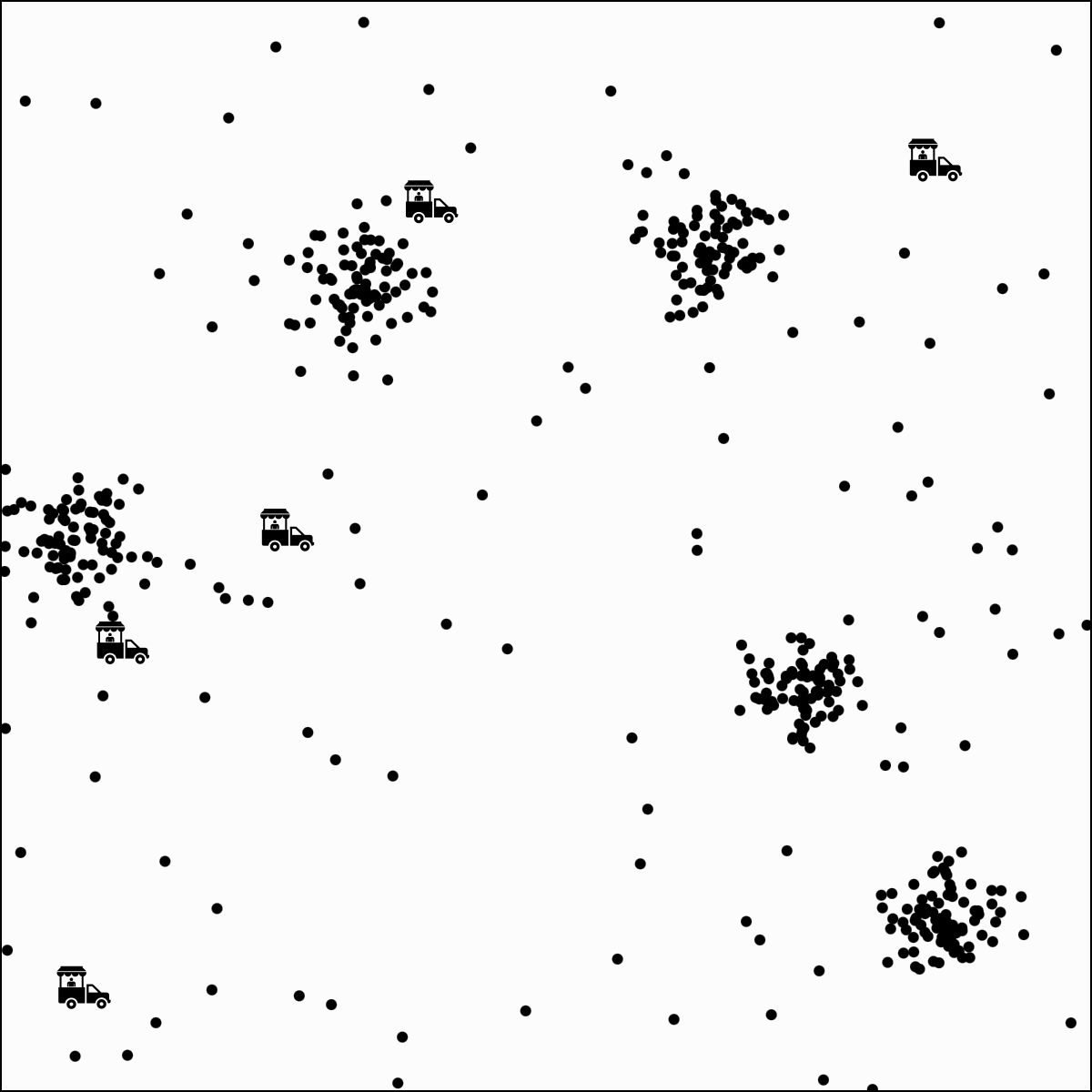

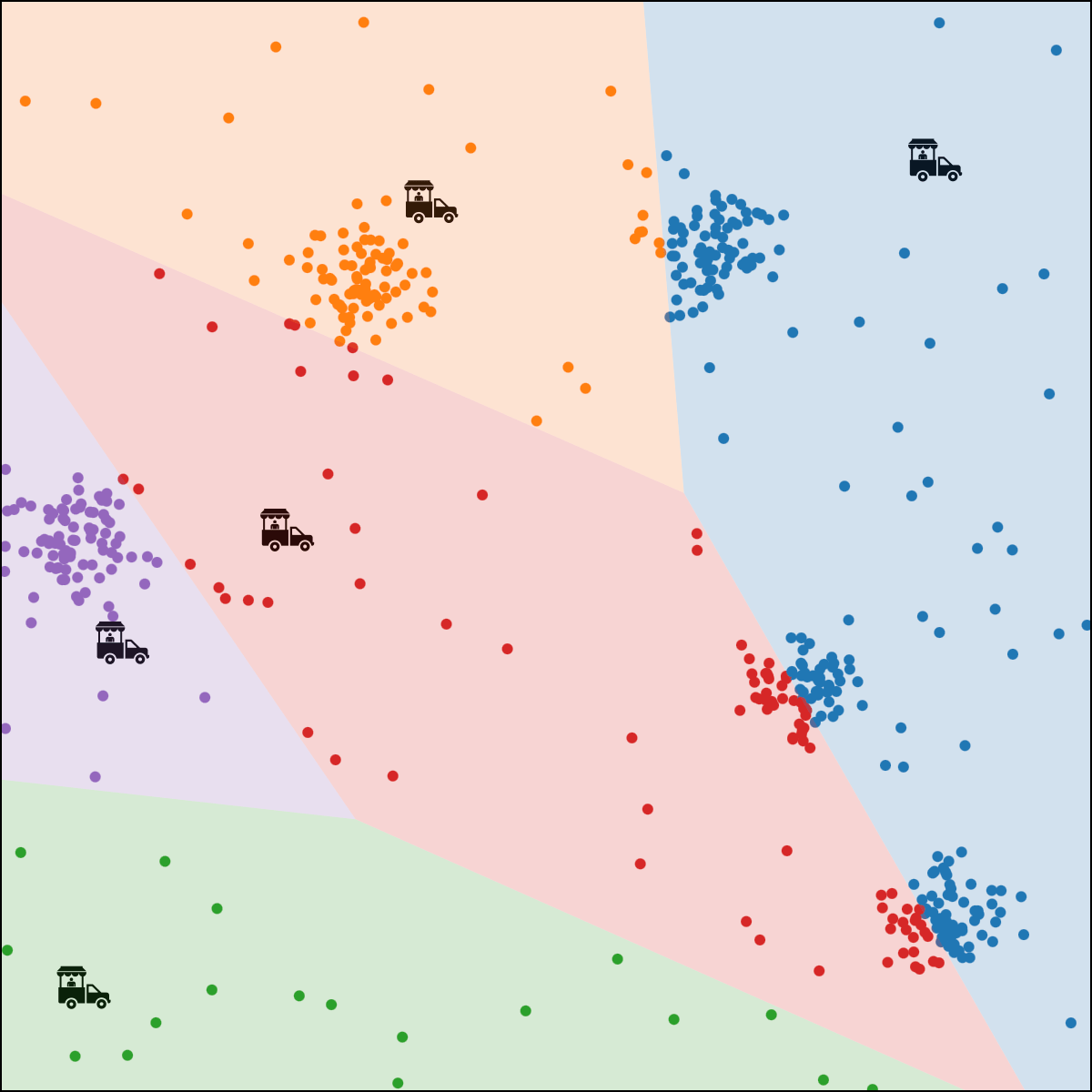

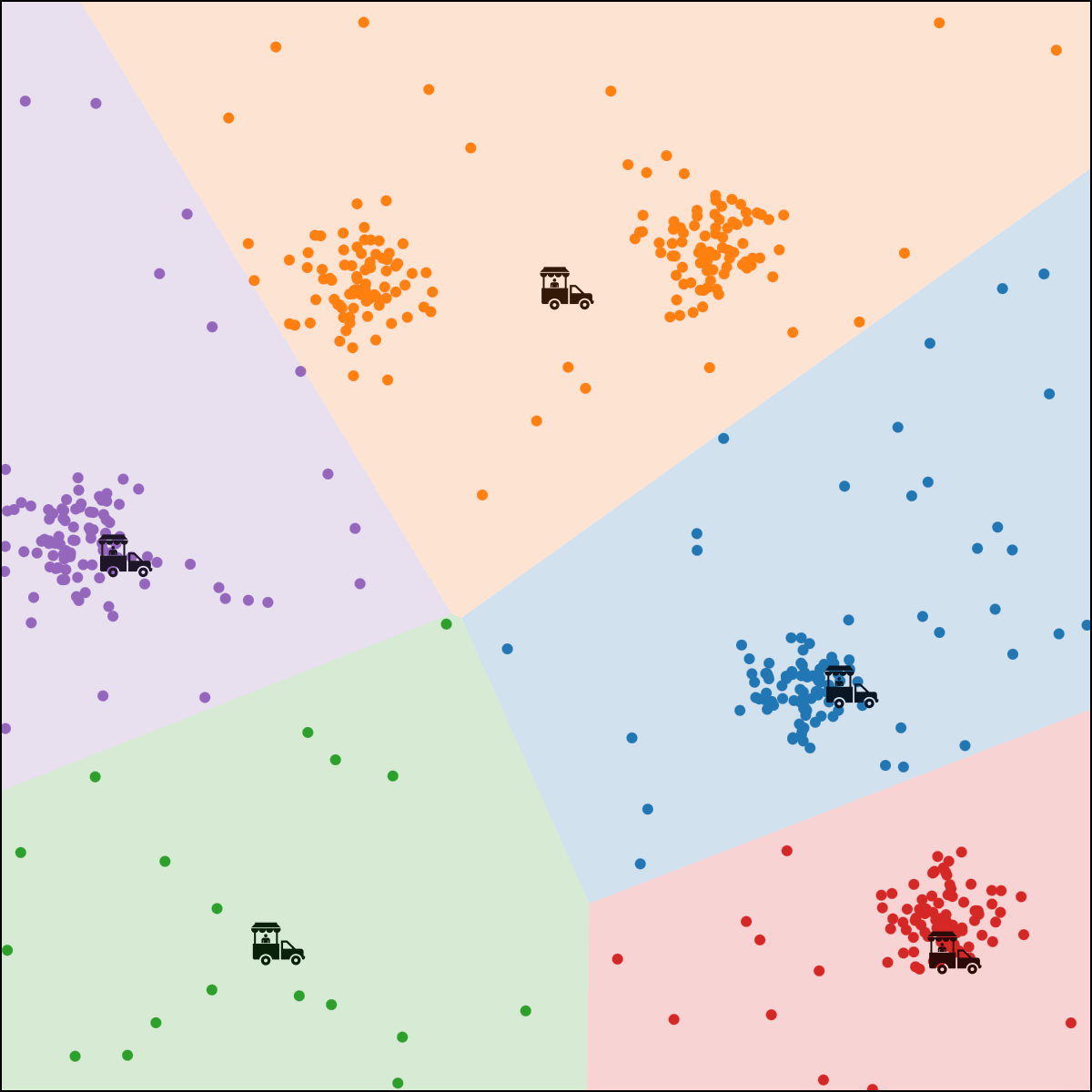

6 for \(j=1\) to \(k\)

7 \(\quad \mu^{(j)}=\frac{1}{N_j} \sum_{i=1}^n \mathbf{1}\left\{y^{(i)}=j\right\} x^{(i)}\), where \(N_j = \sum_{i=1}^n \mathbf{1}\left\{y^{(i)}=j\right\}\) is the number of people assigned to food truck \(j\)

Update step: food truck \(j\) gets moved to the "central" location (the mean) of all the people assigned to it.
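The update step (lines 6-7) can be sketched in the same spirit. The function name `update` and the toy coordinates are hypothetical. The pseudocode does not say what to do when \(N_j = 0\); keeping the old centroid in that case is an assumption made here to avoid dividing by zero.

```python
def update(xs, ys, mus):
    """Move each centroid to the mean of the points currently assigned to it."""
    new_mus = []
    for j, old in enumerate(mus):
        members = [x for x, y in zip(xs, ys) if y == j]
        n_j = len(members)  # N_j in the pseudocode
        if n_j == 0:
            new_mus.append(old)  # assumption: an empty cluster keeps its centroid
        else:
            new_mus.append(tuple(sum(c) / n_j for c in zip(*members)))
    return new_mus


people = [(0.0, 0.0), (2.0, 2.0), (9.0, 9.0)]
labels = [0, 0, 1]
trucks = [(5.0, 5.0), (6.0, 6.0), (7.0, 7.0)]
print(update(people, labels, trucks))  # [(1.0, 1.0), (9.0, 9.0), (7.0, 7.0)]
```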

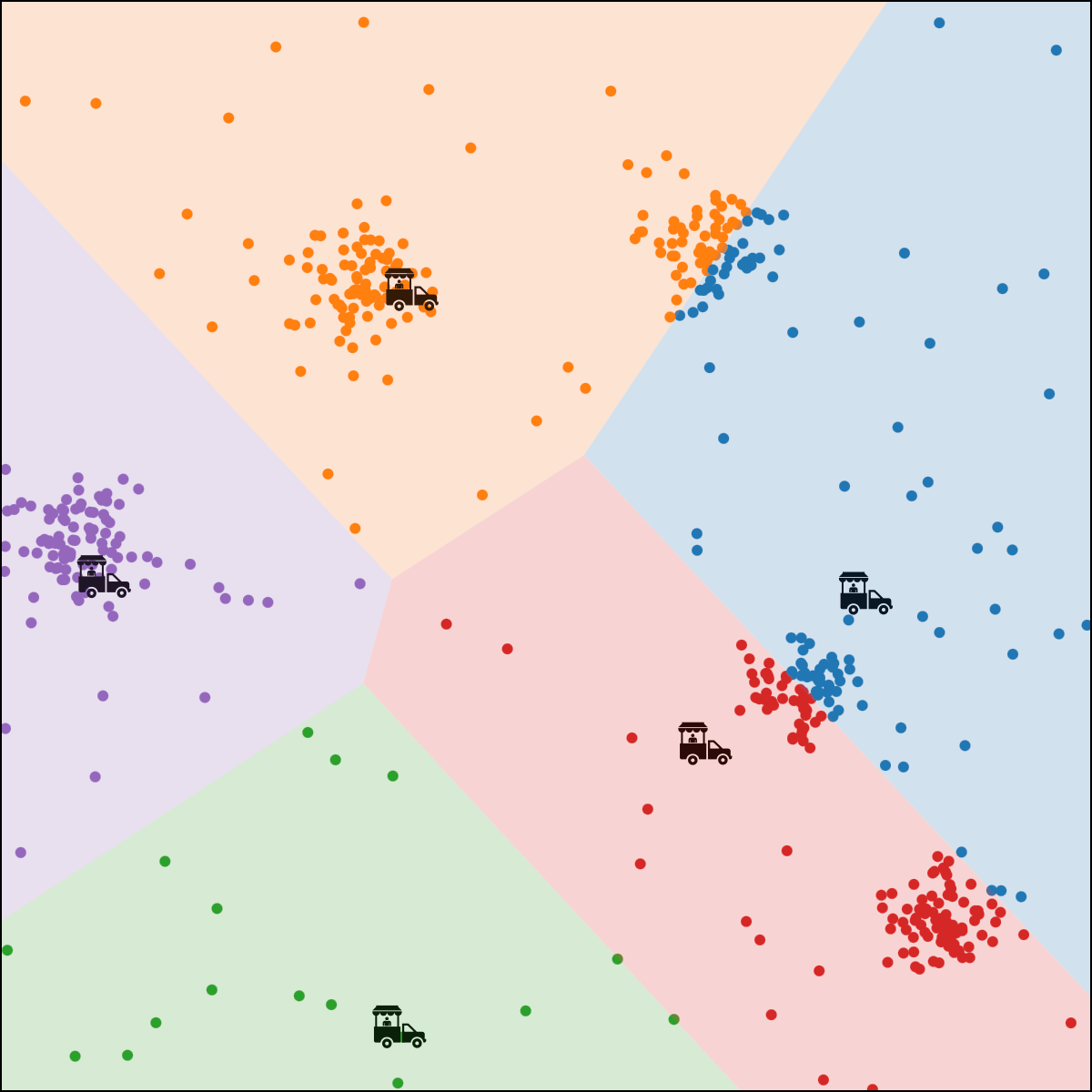

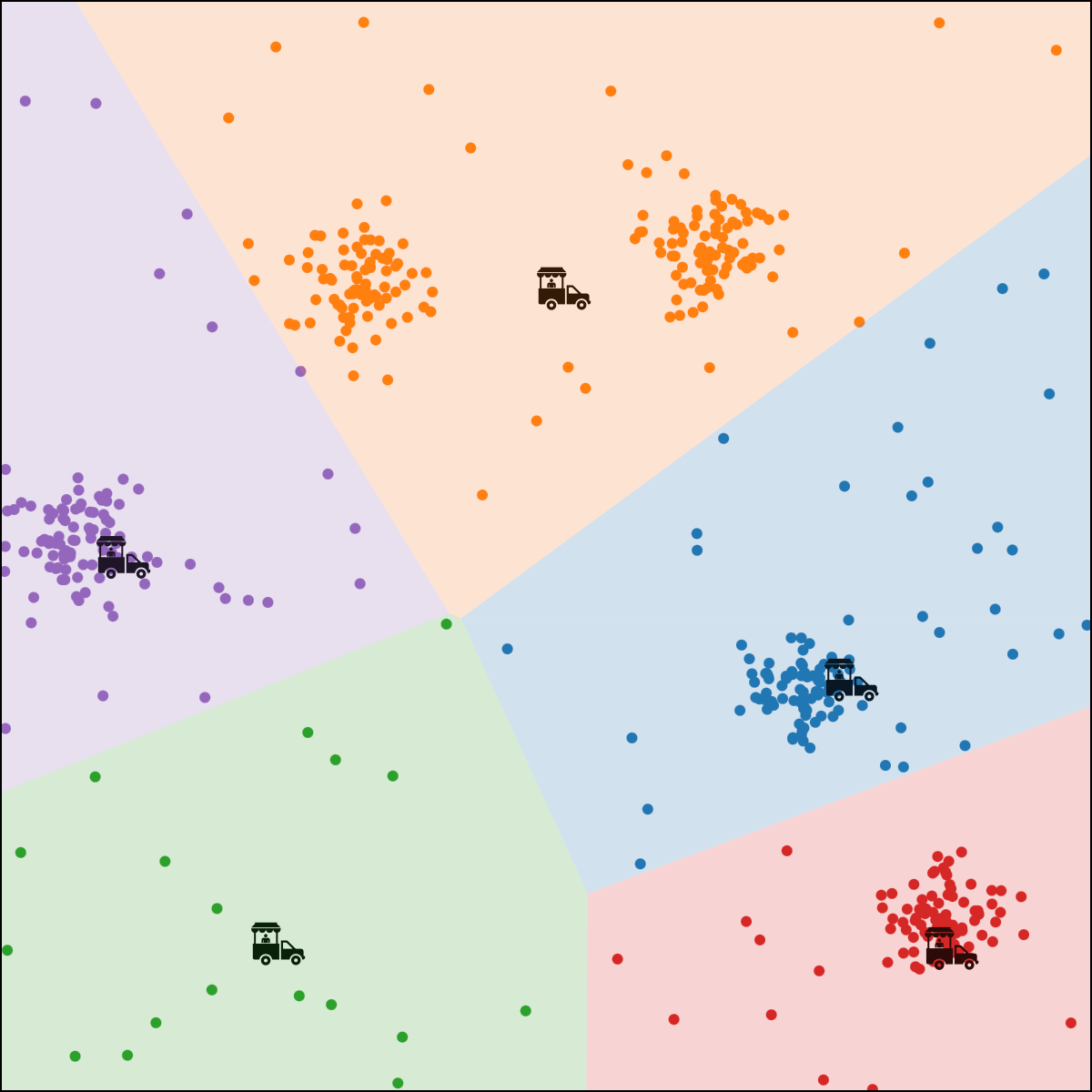

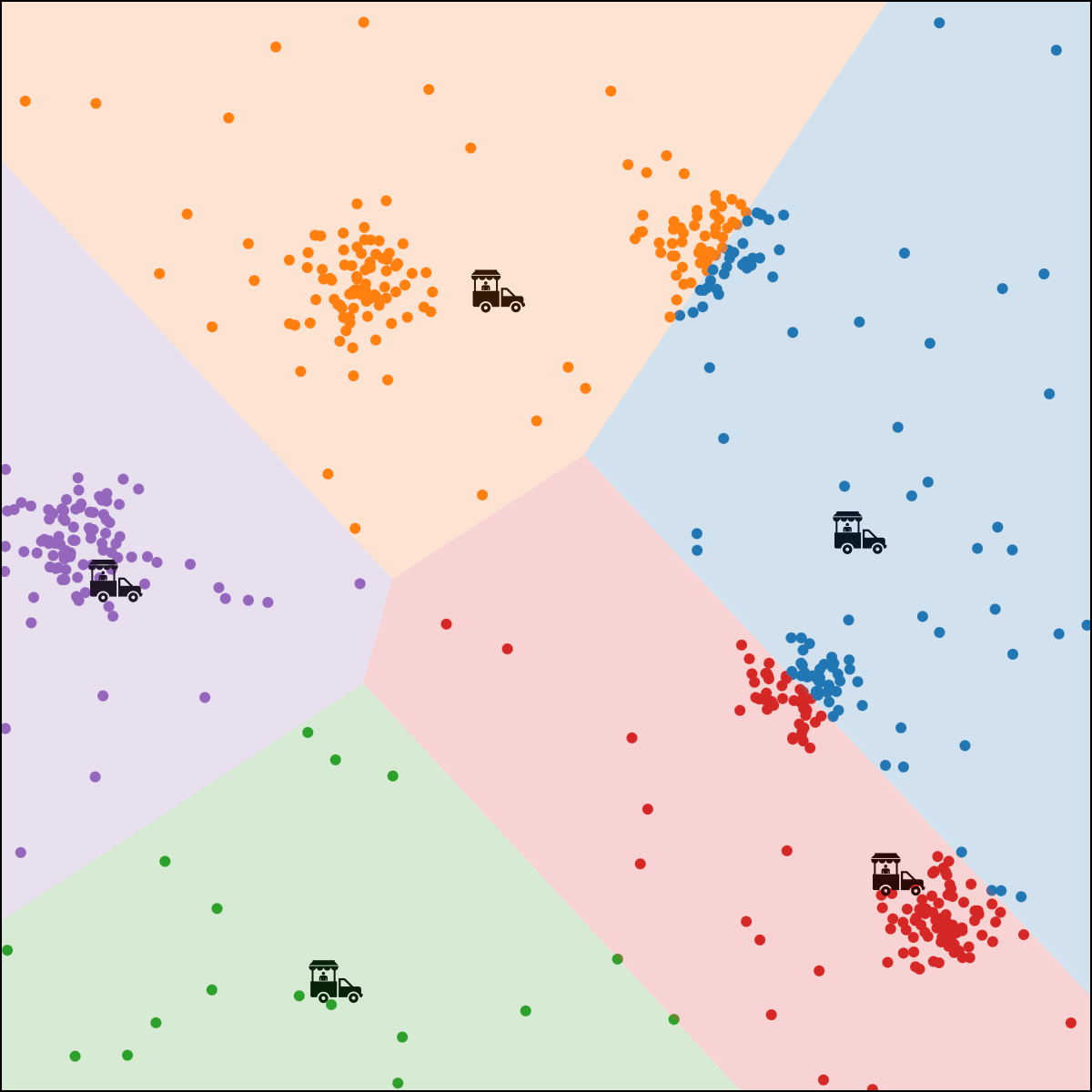

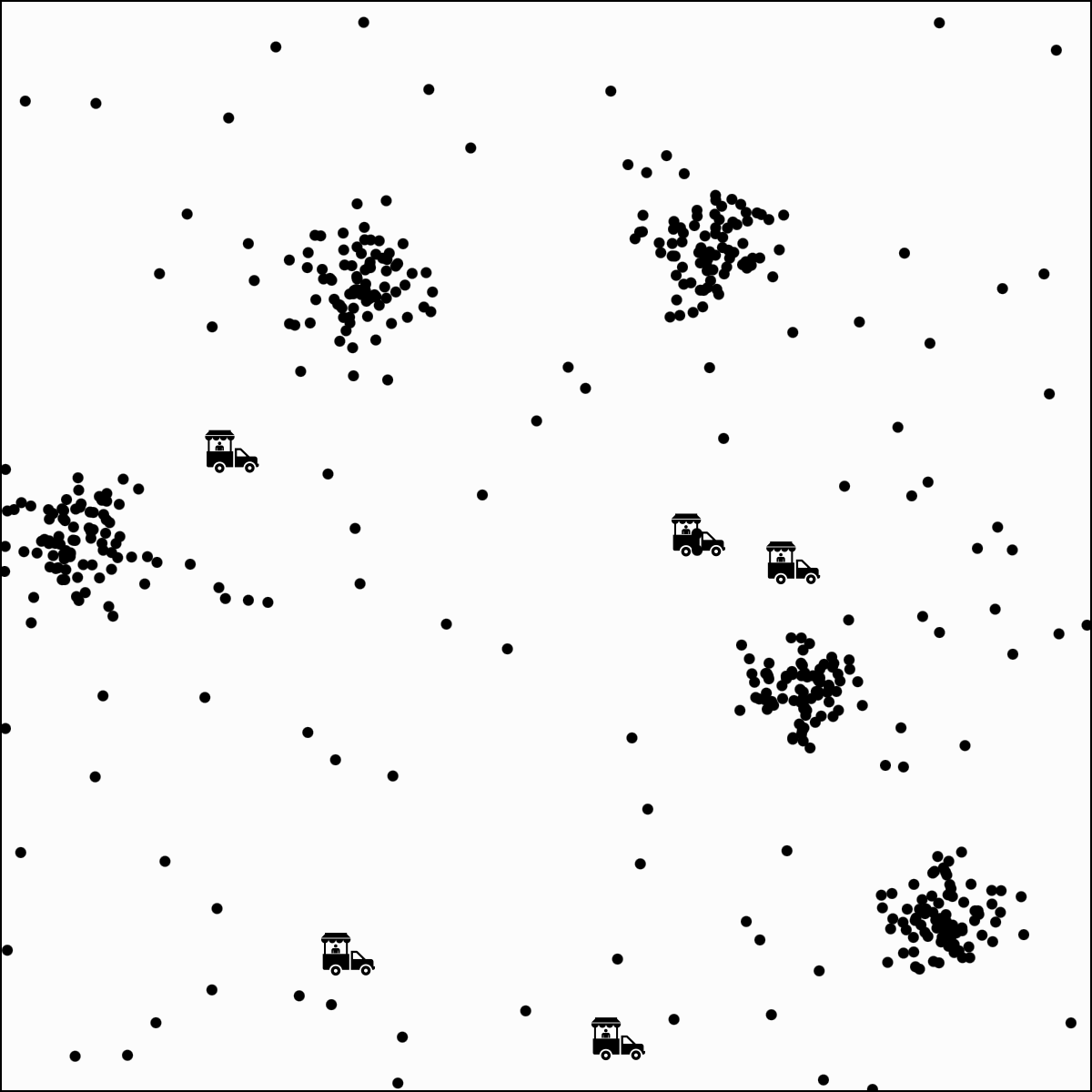

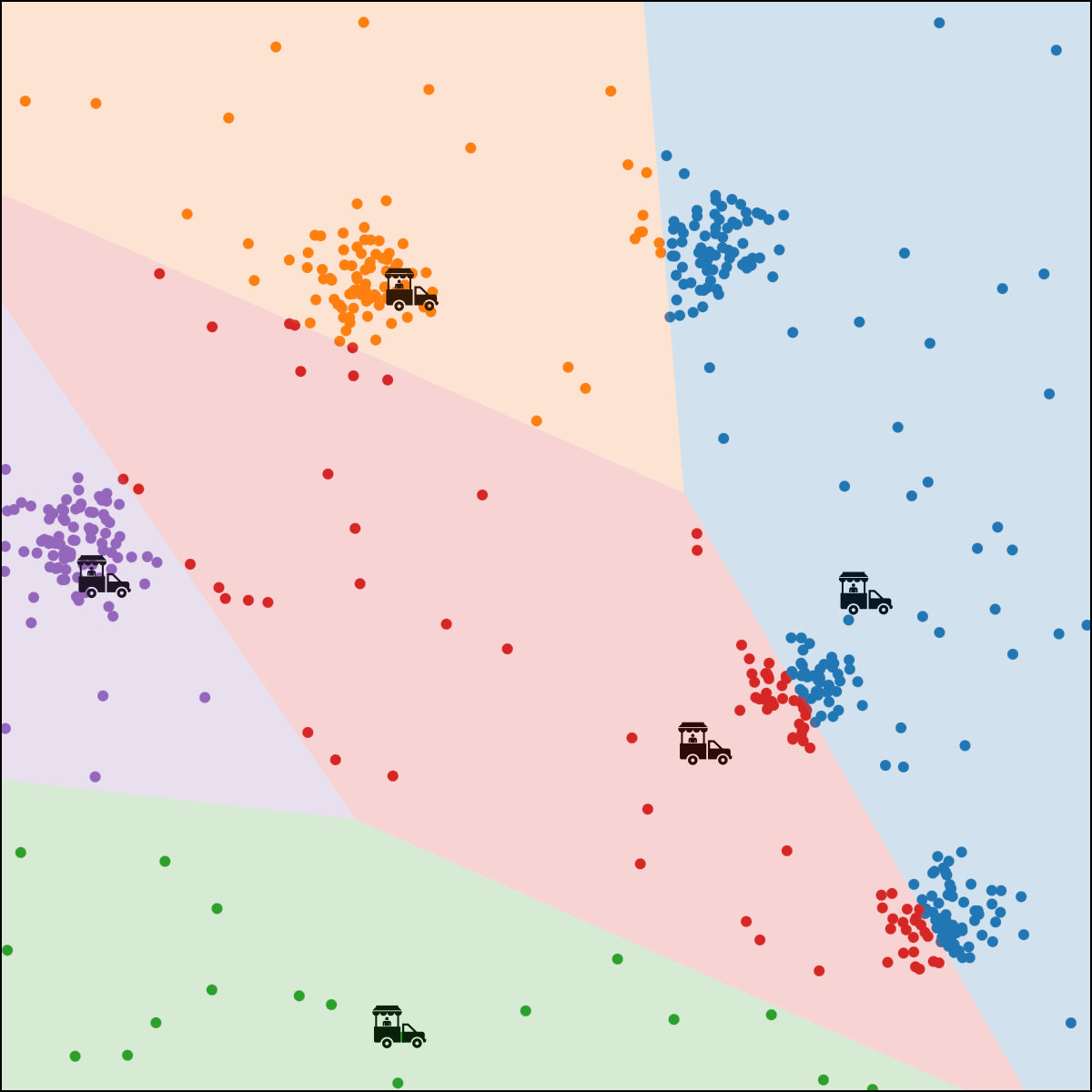

Summary

-

One really important class of ML models is called “non-parametric”.

-

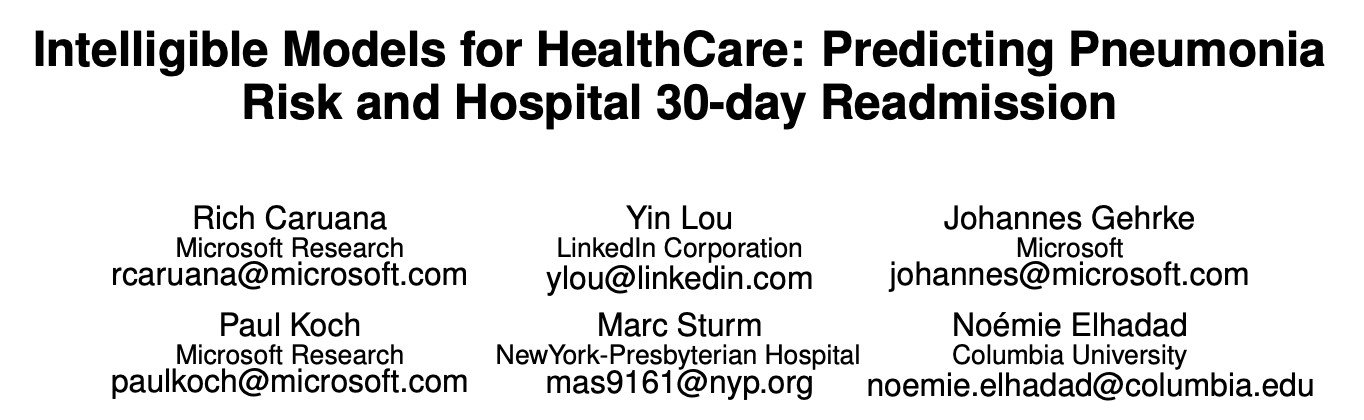

Decision trees are kind of like creating a flow chart. These hypotheses are the most human-understandable of any we have worked with.We regularize by first growing trees that are very big and then “pruning” them.

-

Ensembles: sometimes it’s useful to come up with a lot of simple hypotheses and then let them “vote” to make a prediction for a new example.

-

Nearest neighbor remembers all the training data for prediction. Depends crucially on our notion of “closest” (standardize data is important). Can do fancier things (weighted kNN). Less good in high dimensions (computationally expensive).
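To illustrate why standardizing matters for nearest neighbor, here is a small sketch with made-up data in which the second feature has a much larger numeric range than the first. The helper names `standardize` and `knn_predict`, the labels, and all numbers are hypothetical; the point is only that the notion of “closest” changes once both features are put on a comparable scale.

```python
from collections import Counter
from statistics import mean, pstdev


def standardize(rows):
    """Scale each feature to zero mean and unit standard deviation."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # guard against constant features
    scaled = [tuple((v - m) / s for v, m, s in zip(r, mus, sds)) for r in rows]
    return scaled, mus, sds


def knn_predict(train_x, train_y, query, k):
    """Majority vote among the k training points closest to the query."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    order = sorted(range(len(train_x)), key=lambda i: sq_dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


train_x = [(1.0, 1000.0), (1.1, 5000.0), (5.0, 1200.0), (5.1, 4800.0)]
train_y = ["a", "a", "b", "b"]
query = (1.0, 4800.0)

raw_pred = knn_predict(train_x, train_y, query, k=1)

scaled_x, mus, sds = standardize(train_x)
scaled_query = tuple((v - m) / s for v, m, s in zip(query, mus, sds))
scaled_pred = knn_predict(scaled_x, train_y, scaled_query, k=1)

print(raw_pred, scaled_pred)  # b a
```

On the raw data the large-range feature dominates the distance, so the query's nearest neighbor is a “b” point; after standardization the first feature counts as well and the nearest neighbor is an “a” point.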

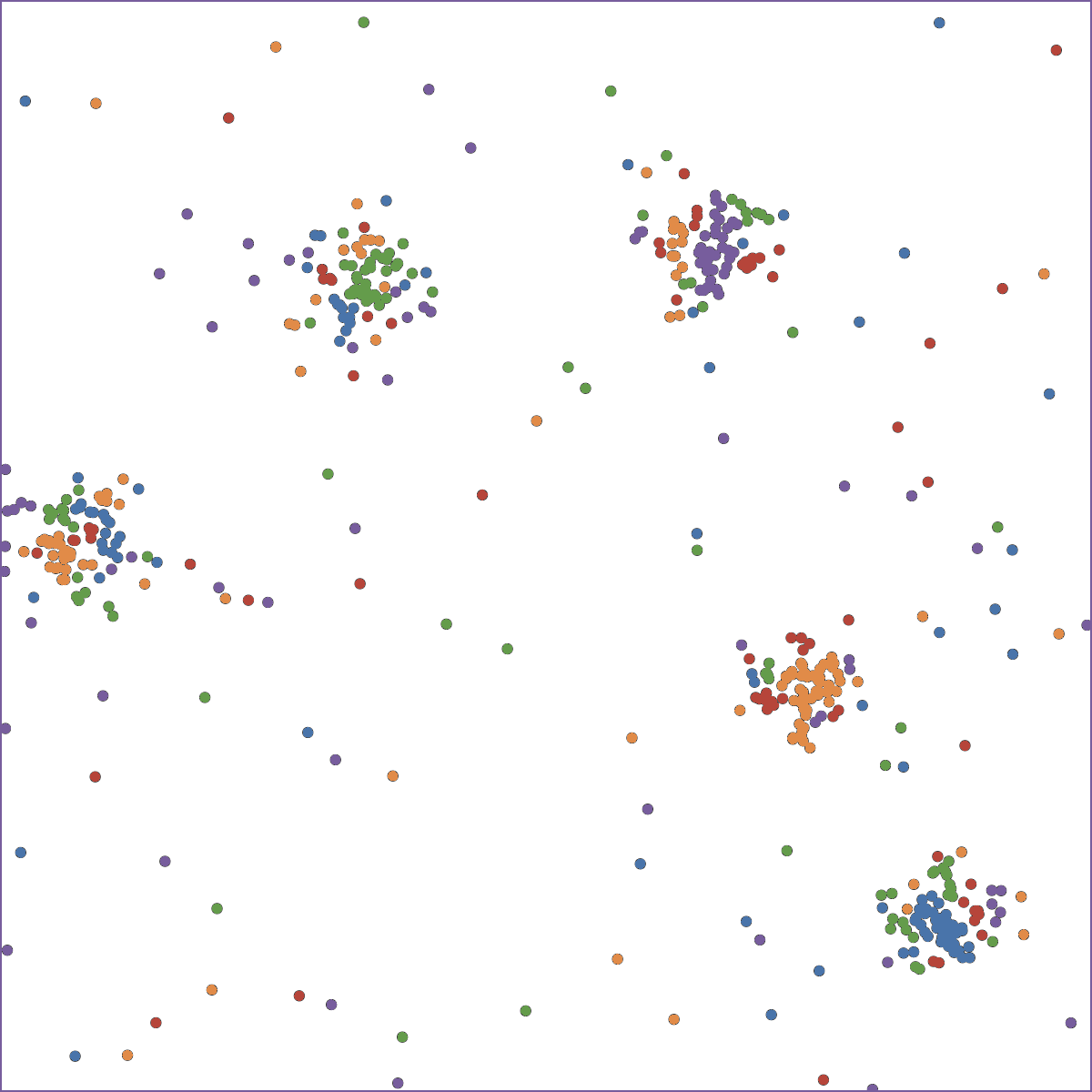

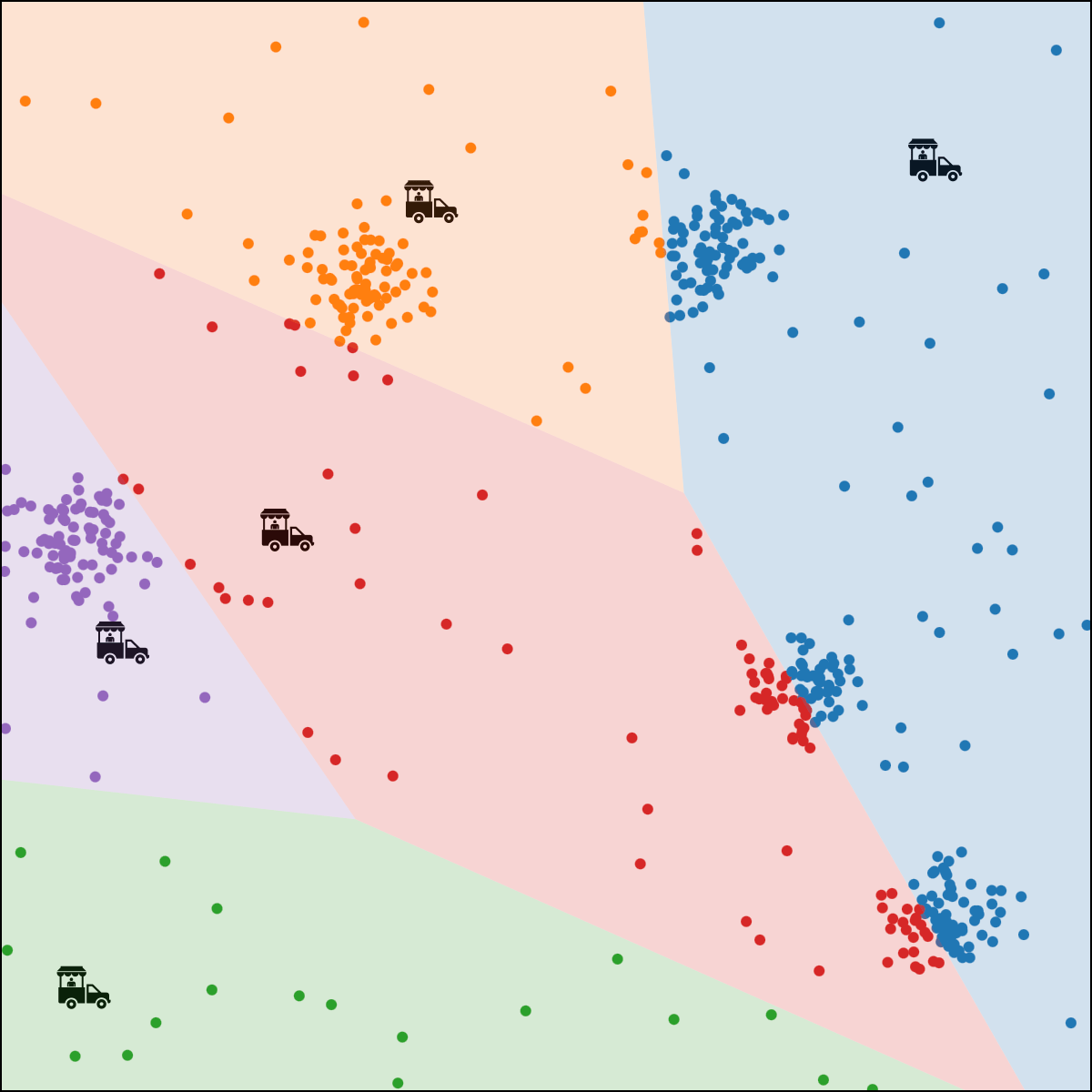

- Clustering is an important kind of unsupervised learning in which we try to divide the x’s into a finite set of groups that are in some sense similar.

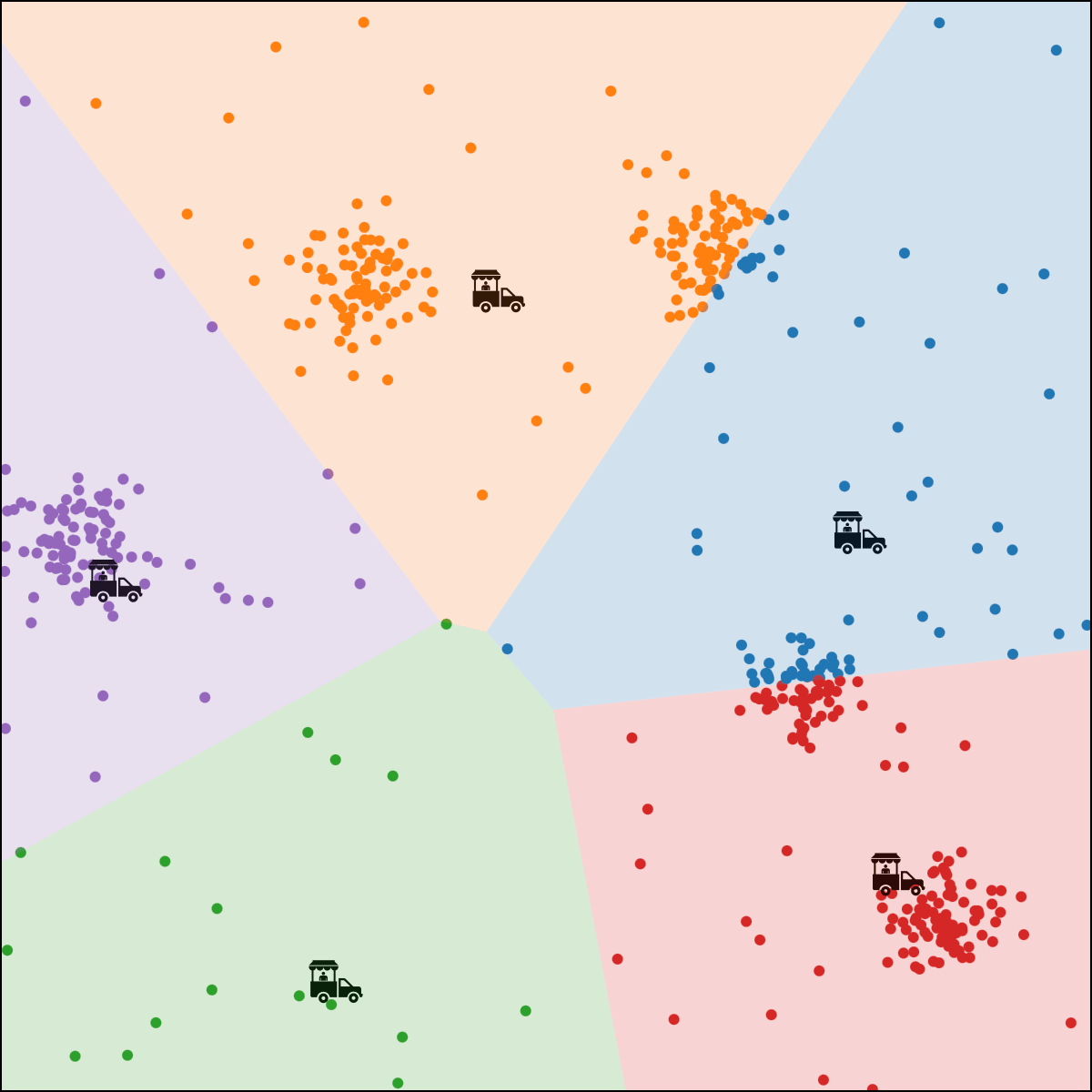

- A widely used clustering objective is the k-means objective. It also requires a distance metric on the x’s.

- There’s a convenient special-purpose method for finding a local optimum: the k-means algorithm.

- The solution obtained by the k-means algorithm is sensitive to initialization.

- The solution obtained by the k-means algorithm is sensitive to the number of clusters chosen.
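Both sensitivities can be seen on a four-point toy example. The sketch below is a hypothetical helper, `run_kmeans`, that takes the initial centroids explicitly (instead of drawing them at random) so that the effect of initialization is reproducible; the data and the starting centroids are made up for illustration.

```python
def sq_dist(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b))


def run_kmeans(xs, init_mus, max_iters=100):
    """k-means from given initial centroids; returns centroids, labels, objective."""
    mus = [tuple(m) for m in init_mus]
    ys = None
    for _ in range(max_iters):
        new_ys = [min(range(len(mus)), key=lambda j: sq_dist(x, mus[j])) for x in xs]
        if new_ys == ys:  # assignments unchanged: converged
            break
        ys = new_ys
        for j in range(len(mus)):
            members = [x for x, y in zip(xs, ys) if y == j]
            if members:  # assumption: an empty cluster keeps its centroid
                mus[j] = tuple(sum(c) / len(members) for c in zip(*members))
    objective = sum(sq_dist(x, mus[y]) for x, y in zip(xs, ys))
    return mus, ys, objective


# Corners of a wide rectangle: the natural 2-clustering is left side vs right side.
xs = [(0.0, 0.0), (0.0, 1.0), (4.0, 0.0), (4.0, 1.0)]

_, ys_good, obj_good = run_kmeans(xs, [(0.0, 0.0), (4.0, 0.0)])
_, ys_bad, obj_bad = run_kmeans(xs, [(2.0, 0.0), (2.0, 1.0)])
print(ys_good, obj_good)  # [0, 0, 1, 1] 1.0
print(ys_bad, obj_bad)    # [0, 1, 0, 1] 16.0

# Changing k changes the answer too: more clusters can only lower the best objective.
_, _, obj_k1 = run_kmeans(xs, [(0.0, 0.0)])
_, _, obj_k4 = run_kmeans(xs, xs)
print(obj_k1, obj_k4)     # 17.0 0.0
```

The second initialization gets stuck grouping bottom versus top, a local optimum with a much larger objective. In practice one common remedy is to run the algorithm from several initializations and keep the run with the lowest objective. The objective alone cannot choose \(k\), since it reaches zero once every point has its own cluster.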

Thanks!

We'd love to hear your thoughts.