smoia

@SteMoia

s.moia.research@gmail.com

Faculty of Psychology and Neuroscience, Maastricht University, Maastricht, The Netherlands; Open Science Special Interest Group (OHBM); physiopy (https://github.com/physiopy)

Quality assessment of published research

An Open and Reproducible Science perspective

Marseille, 11.10.25

I have no financial interests or relationship to disclose with regard to the subject matter of this presentation.

Disclaimers

1. I am an "open" scientist: I am biased toward the core tenets of Open Science as better practices.

2. "Quality" is a fairly subjective idea.

3. It's easy to take the moral high ground. Don't. We are not here to chastise; we are here to discuss ways to improve our science.

4. For this reason, I assume that all issues arise in good faith.

5. I also assume that there is far more subjectivity in the scientific method than we like to admit.

This is a new chapter


Take home #0

This is a take home message

What do we use papers for?

- Formulate hypotheses

- Formulate methods

- "Validate" results

- Start or continue an offline (field-wide) discussion

1. Metadata heuristics

The "good old heuristics"

- Journal (Impact Factor)

- Number of citations

(Self-)Citations

- Google Scholar: 57 citations

- Semantic Scholar: 40 citations

- Non self-citations: 20 citations
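The gap between raw and non-self citation counts is something you can check yourself. Below is a minimal sketch, assuming (hypothetically) that you have the author lists of the cited paper and of each citing paper, and that a citation counts as a self-citation whenever the two papers share at least one author. This is one common definition, not the only one; `Paper`, `is_self_citation` and `count_citations` are illustrative names, not part of any bibliometric tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    # Hypothetical minimal record: a title plus the set of author names.
    title: str
    authors: frozenset[str]


def is_self_citation(cited: Paper, citing: Paper) -> bool:
    # Assumption: a citation is a self-citation if the two papers
    # share at least one author name (exact string match).
    return not cited.authors.isdisjoint(citing.authors)


def count_citations(cited: Paper, citing_papers: list[Paper]) -> tuple[int, int]:
    # Returns (all citations, citations without any shared author).
    total = len(citing_papers)
    non_self = sum(1 for p in citing_papers if not is_self_citation(cited, p))
    return total, non_self


# Toy example with made-up papers and authors.
target = Paper("Target study", frozenset({"A. Author", "B. Author"}))
citing = [
    Paper("Follow-up from the same lab", frozenset({"A. Author", "C. Author"})),
    Paper("Independent replication", frozenset({"D. Author"})),
    Paper("Review", frozenset({"E. Author", "F. Author"})),
]
total, non_self = count_citations(target, citing)
print(f"{total} citations, {non_self} non-self ({non_self / total:.0%})")
# prints: 3 citations, 2 non-self (67%)
```

Exact name matching is naive: real bibliographic data needs author disambiguation (e.g. via ORCID iDs), and a different definition of self-citation (e.g. only the first or last author) will give a different count.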

Resource bias

Even if high-impact-factor journals published higher-quality papers, if their article processing charges (APCs) are high, selecting papers by journal would bias your selection toward authors with the funds to pay them.

Journals and impact factors

| Title | Journal | Year | Citations |

|---|---|---|---|

| Pluripotency of mesenchymal stem cells derived from adult | Nature | 2002 | 4512 |

| Hydroxychloroquine and azithromycin as a treatment of COVID-19: results of an open-label non-randomized clinical trial | International Journal of Antimicrobial Agents | 2020 | 3189 |

| 6-month consequences of COVID-19 in patients discharged from hospital: a cohort study | Lancet | 2021 | 3029 |

| Primary Prevention of Cardiovascular Disease with a Mediterranean Diet | New England Journal of Medicine | 2013 | 2671 |

| A specific amyloid-β protein assembly in the brain impairs memory | Nature | 2008 | 2384 |

| Predictive Validity of a Medication Adherence Measure in an Outpatient Setting | The Journal of Clinical Hypertension | 2008 | 2145 |

| MicroRNA signatures of tumor-derived exosomes as diagnostic biomarkers of ovarian cancer | Gynecologic Oncology | 2008 | 1940 |

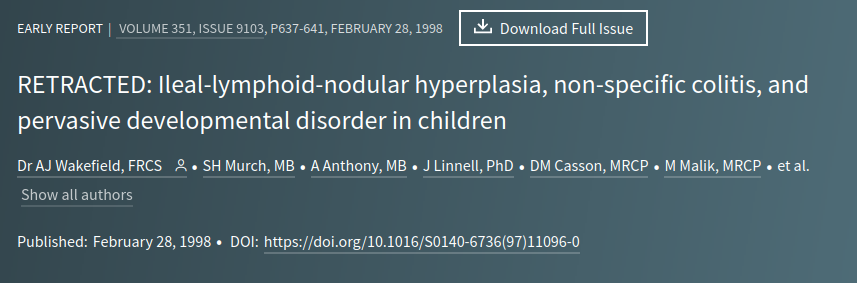

| Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children | Lancet | 1998 | 1741 |

| Recent progress in processing and properties of ZnO | Progress in Materials Science | 2004 | 1636 |

| Visfatin: A protein secreted by visceral fat that mimics the effects of insulin | Science | 2005 | 1564 |

https://retractionwatch.com/the-retraction-watch-leaderboard/top-10-most-highly-cited-retracted-papers/

Take home #1

Heuristics may be easy to apply, but they carry biases.

Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.¹

1. Goodhart, 1975

Goodhart's Law: when a measure becomes a target, it ceases to be a good measure.

2. An "Open Science" definition of "quality"

What can a "good-quality manuscript" mean?

- Can I validate it?

- How easily can I implement it?

- Is the methodology solid?

- Can I reproduce it by just reading it?

- Can I reproduce it through its available deliverables?

- How much time will I have to invest to implement it?

Good-quality manuscript: trustworthy and/or implementable, i.e. easily reproducible/replicable

3. Reproducibility and replicability

The reproducibility issue

2010s:

- Failed attempts to reproduce core concepts of social psychology and biomedical research

- Studies on systematic biases

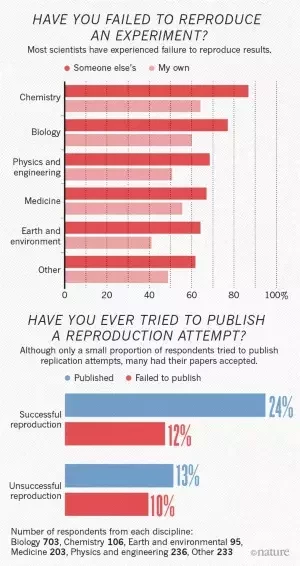

2016: Nature survey¹: more than 70% of researchers had failed to reproduce another scientist's results, and more than 50% had failed to reproduce their own

1. Baker, 2016 (Nature)

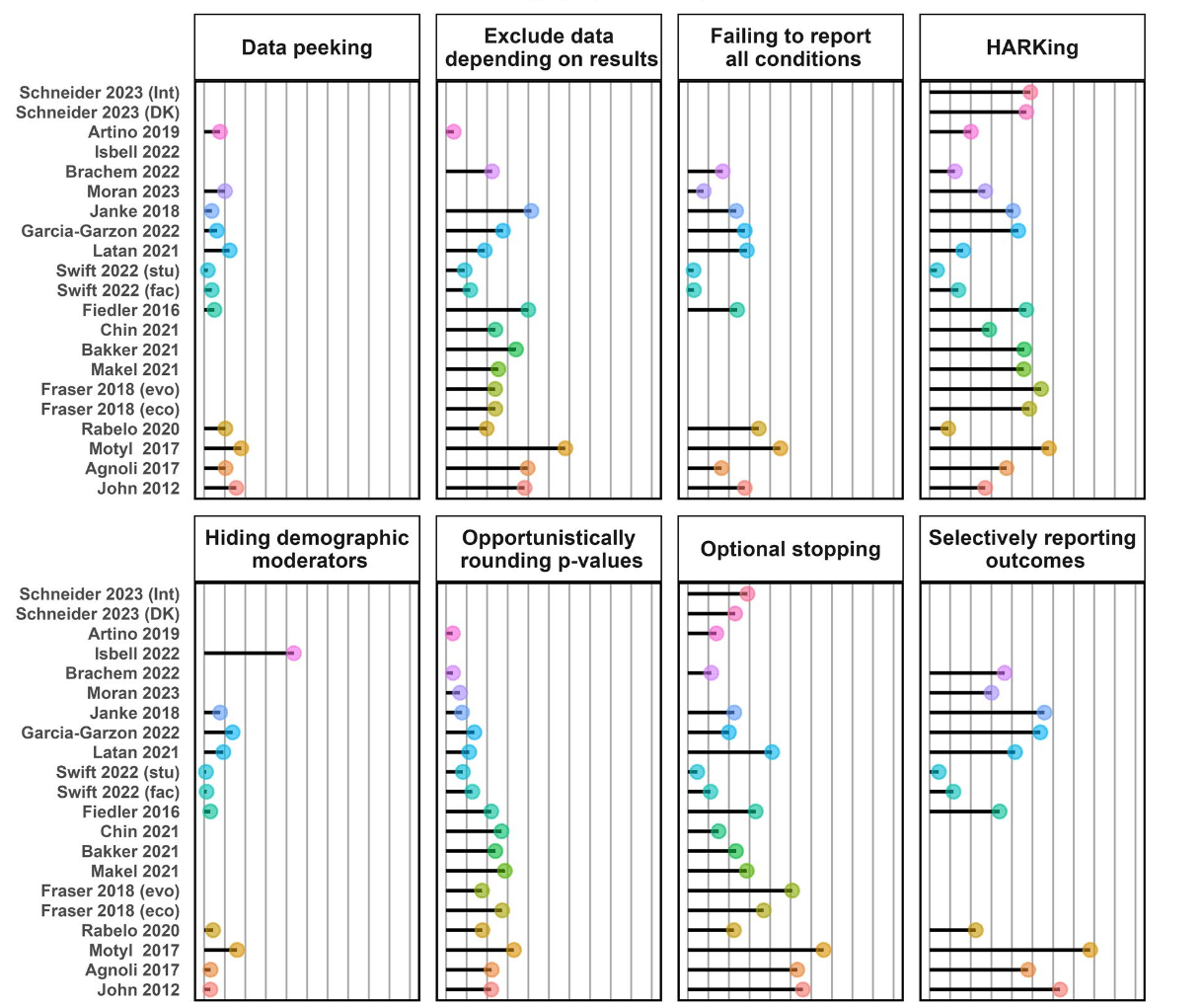

Systematic bias

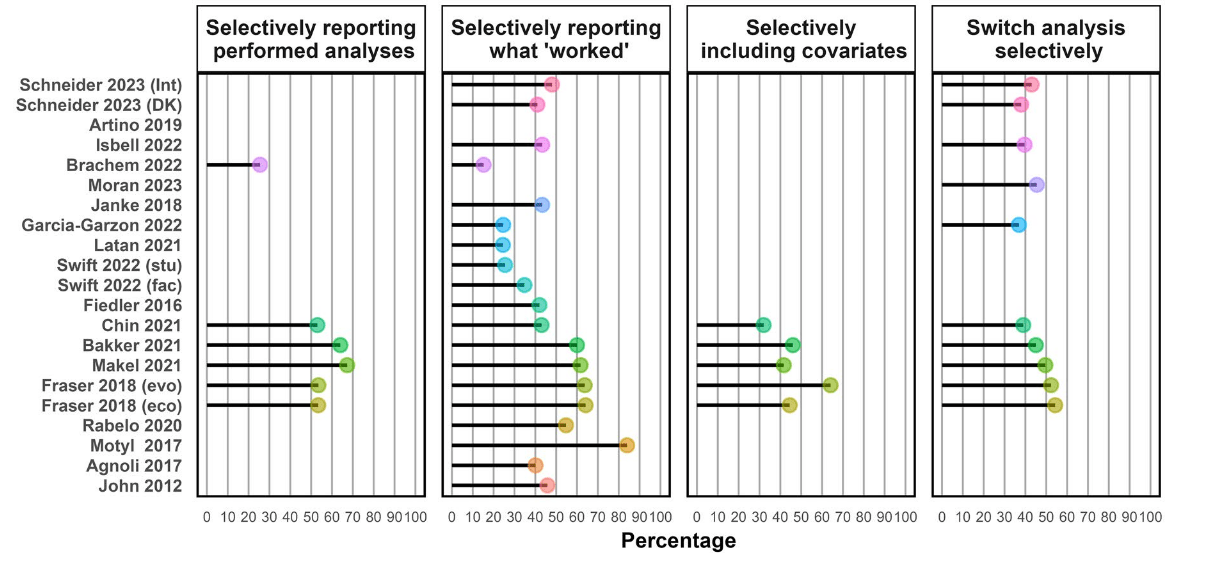

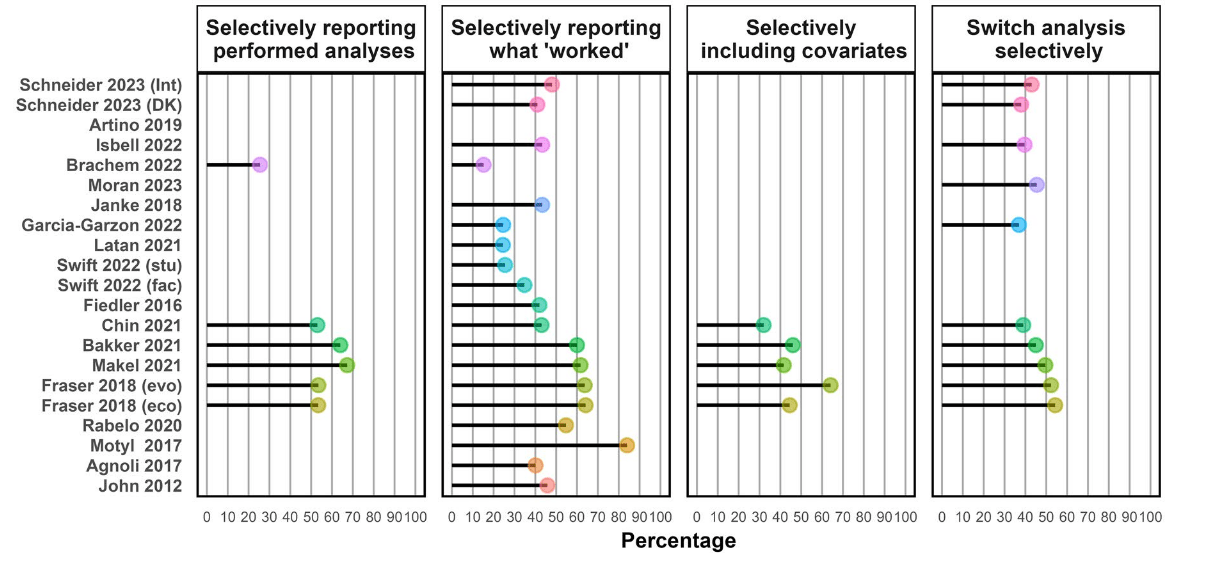

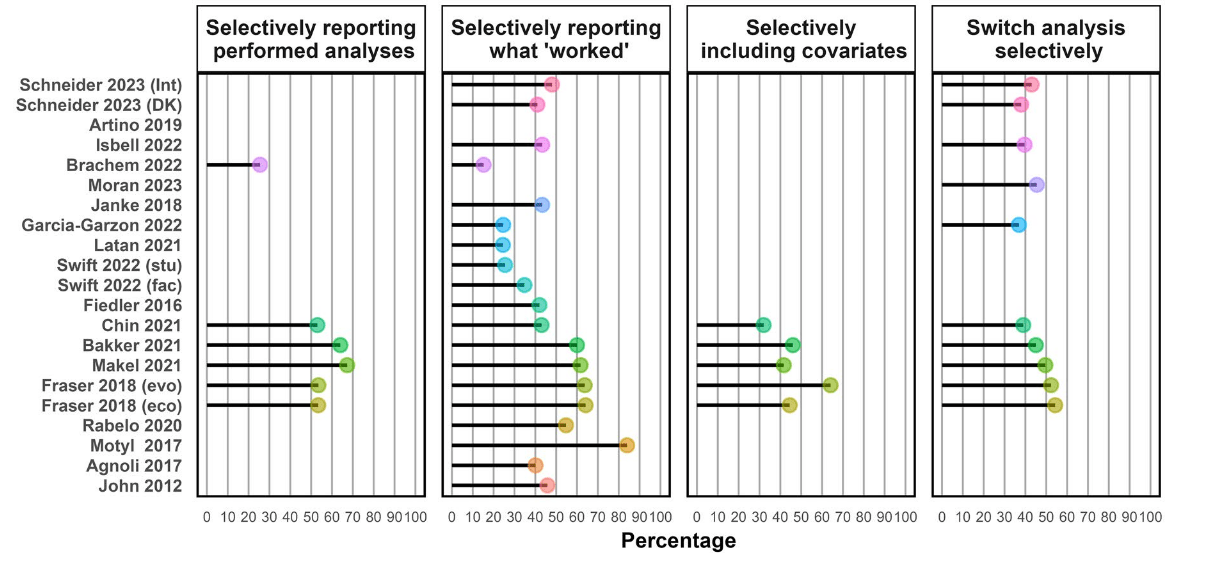

Lakens et al., 2024 (EBT)

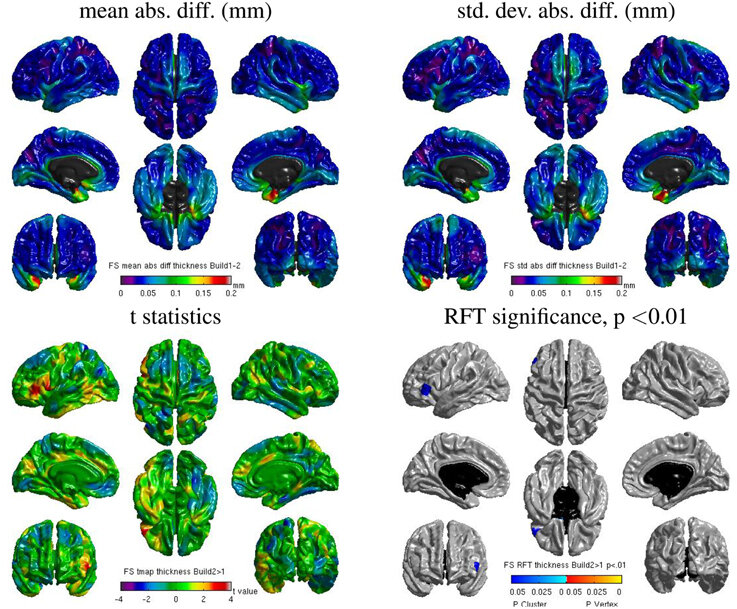

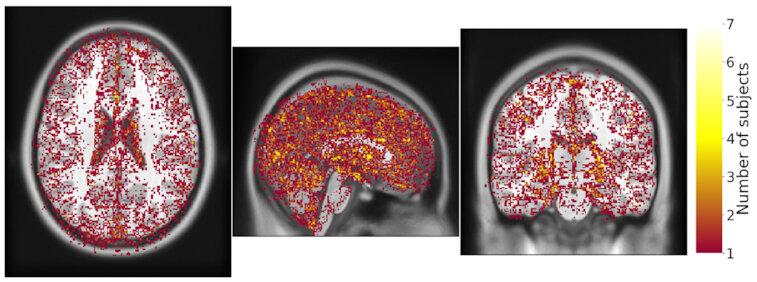

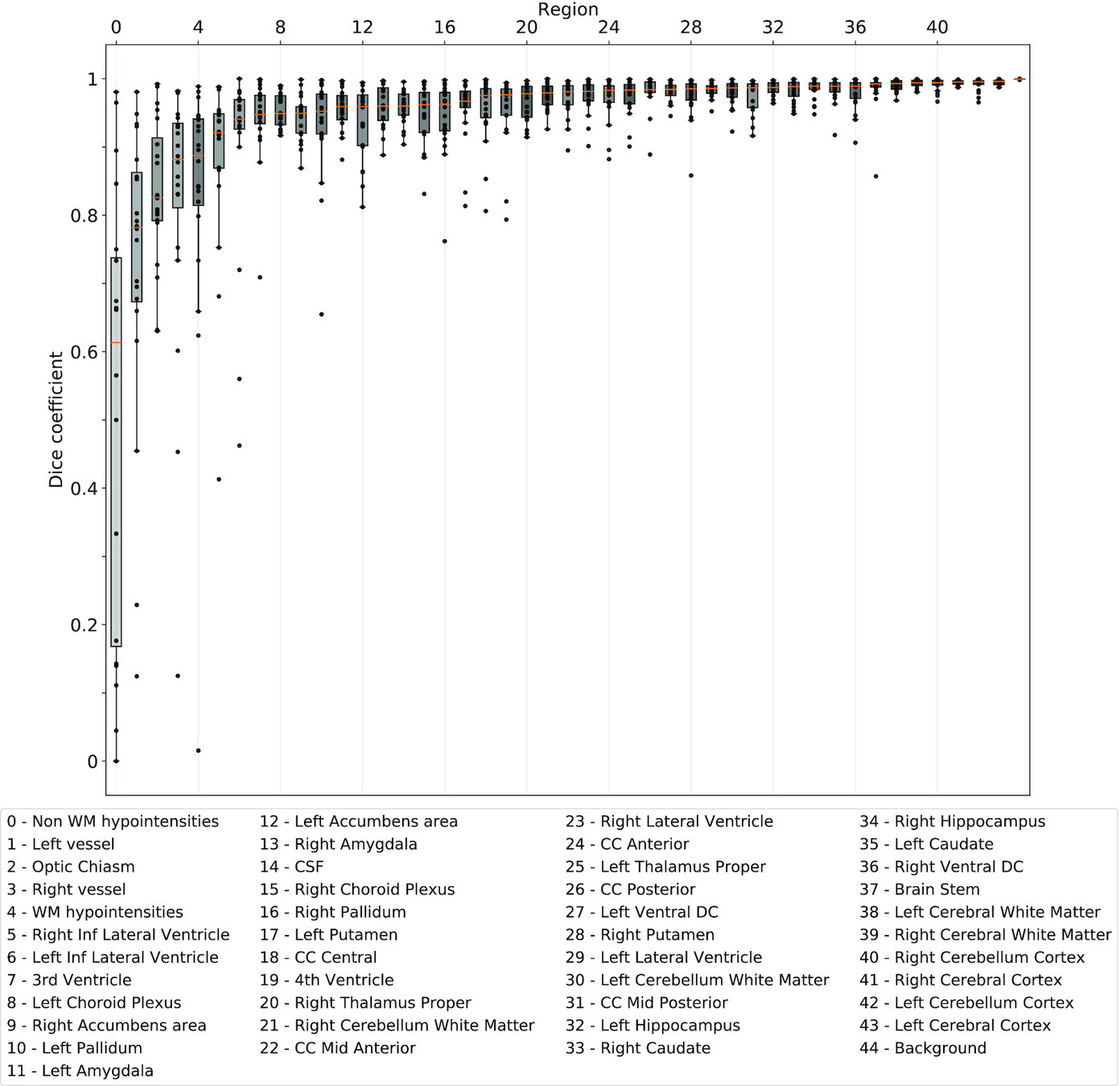

Really reproducible?

Same hardware, two FreeSurfer builds (different glibc versions): differences in estimated cortical thickness.¹

Same hardware, same FSL version, two glibc versions: differences in estimated tissue segmentation.²

Same hardware, two FreeSurfer builds (two glibc versions): differences in estimated parcellation.²

1. Glatard et al., 2015 (Front. Neuroinform.)
2. Ali et al., 2021 (GigaScience)

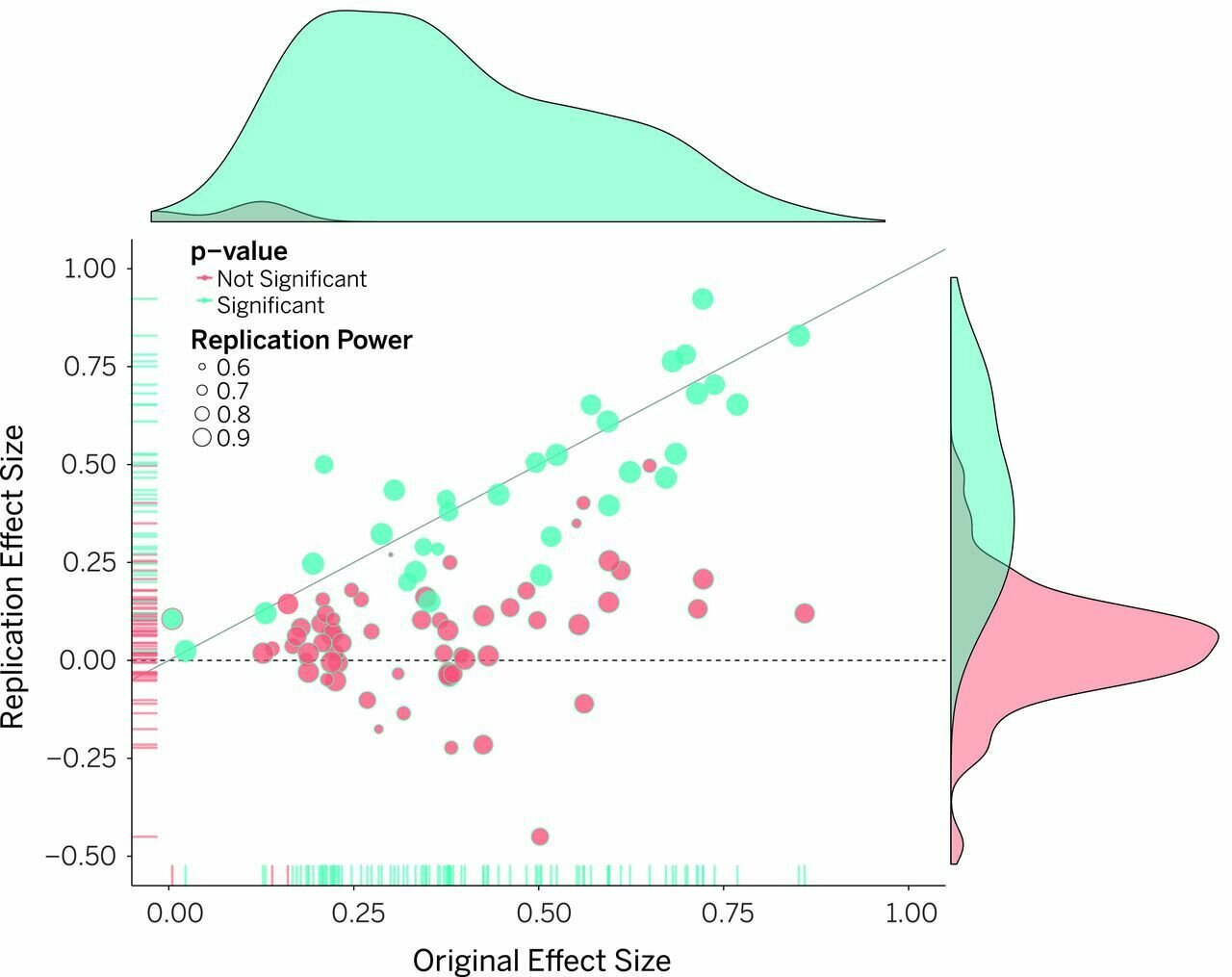

Really robust?

Not generalisable?

Aarts et al., 2015 (Science)

What does failure to generalise tell us about hypotheses and scientific facts?

Reproducibility checklists

https://fairerdata.github.io/FAIRER-Aware-REPRODUCIBILITY-Assessment/

What to look for to assess replicability and reproducibility

- Extremely well-documented methods

- Open Standard Operating Procedures (SOPs)

- Open code

- Open running environment (e.g. containers)

- Open data (at least first derivatives)
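The glibc examples above show that even the system C library can change results, so it can help to record the running environment next to every output, even when the analysis runs in a container. A minimal sketch using only the Python standard library; the file name `environment.json`, the helper names and the chosen fields are hypothetical choices, not a standard:

```python
import json
import platform
import sys
from datetime import datetime, timezone
from pathlib import Path


def describe_environment() -> dict[str, str]:
    # platform.libc_ver() returns (lib, version), e.g. ("glibc", "2.35") on most
    # Linux systems; it may return empty strings where it cannot tell (e.g. macOS).
    libc_name, libc_version = platform.libc_ver()
    return {
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "python": sys.version.split()[0],
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "libc": f"{libc_name} {libc_version}".strip(),
    }


def save_environment(path: Path = Path("environment.json")) -> dict[str, str]:
    # Hypothetical helper: write the description next to the analysis outputs.
    info = describe_environment()
    path.write_text(json.dumps(info, indent=2))
    return info
```

Calling `save_environment()` at the start of an analysis leaves a small record that can be shared with the data. It does not capture the versions of the analysis tools themselves (e.g. FreeSurfer or FSL); in practice you would add those, or point to the exact container image used.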

Standard Operating Procedures

https://github.com/TheAxonLab/hcph-sops

License your work

A work without a licence is not really public: by default, copyright reserves all rights, so others cannot legally reuse it (paradox!)

There are many (open source) licences to pick from, and not only for code.

www.choosealicense.com

The licence should be in the first commit you make.

Personal picks for science: Apache 2.0 and CC-BY-ND-4.0

(consider LGPL-3.0 and CC-BY-4.0 too)

Take home #2

Do not take reproducibility for granted, and replicability even less so.

Instead, look for ways to reproduce a study yourself (or to gain insight into its "success").

(caveat: it will take time)

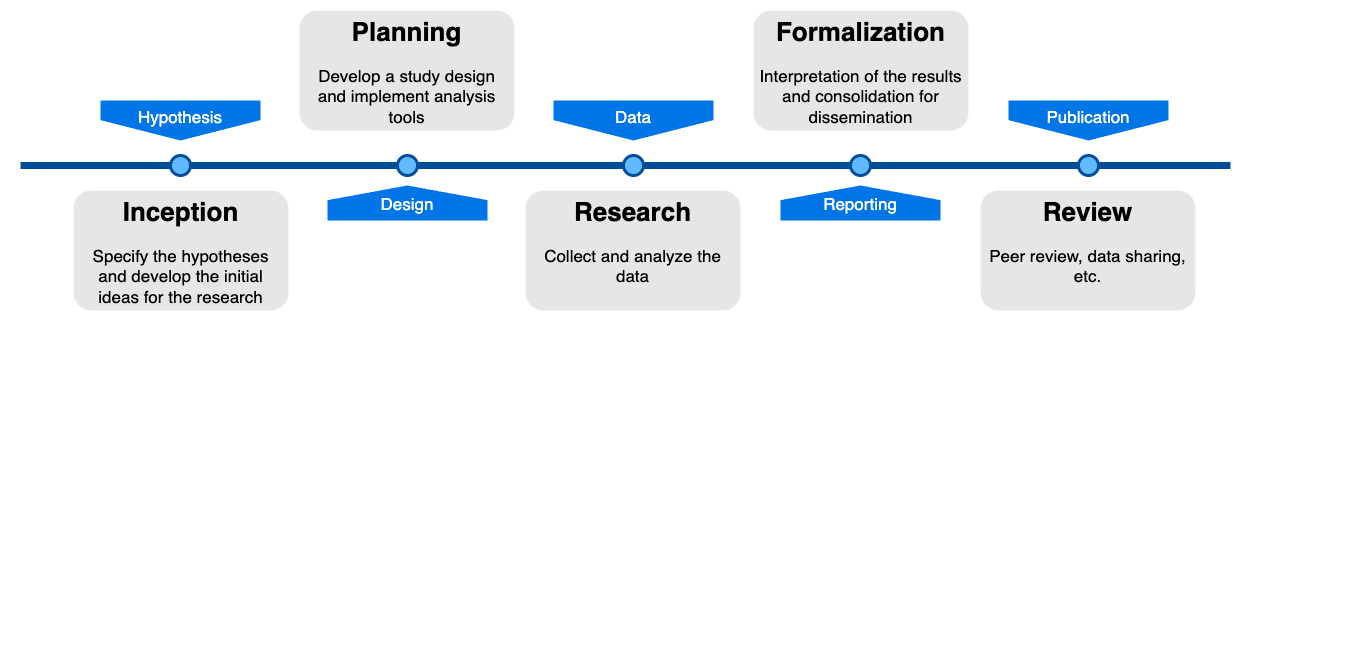

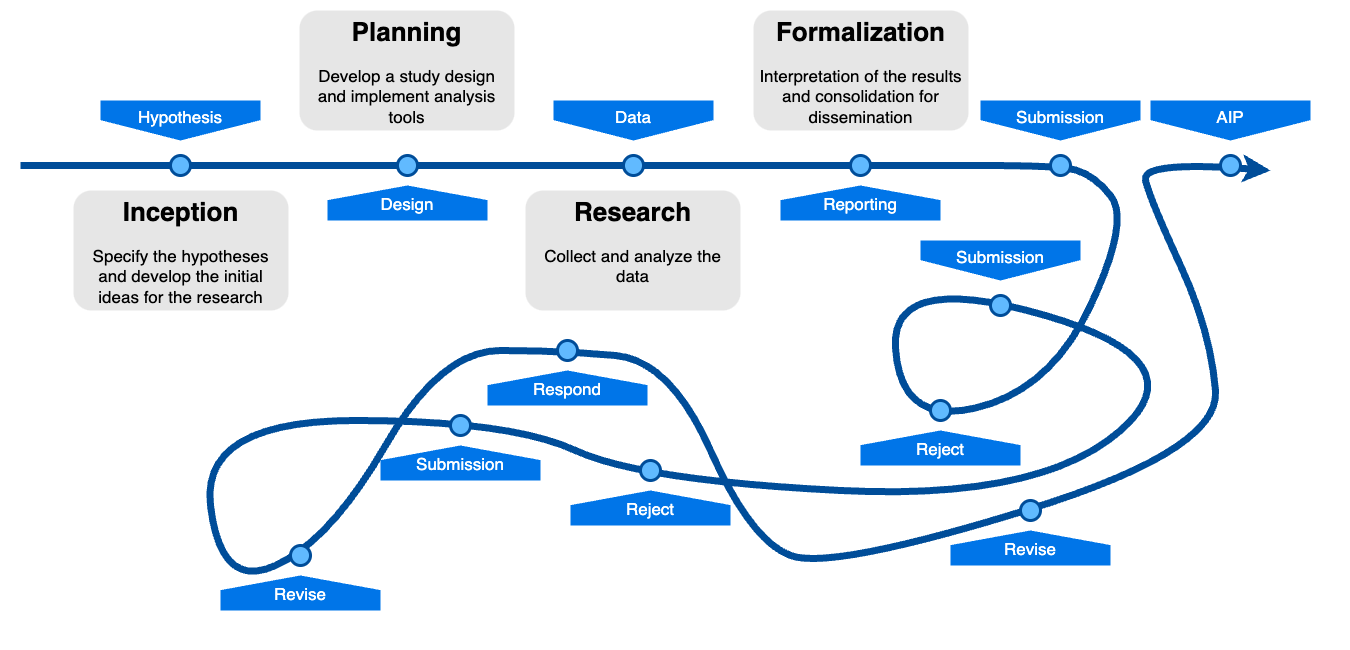

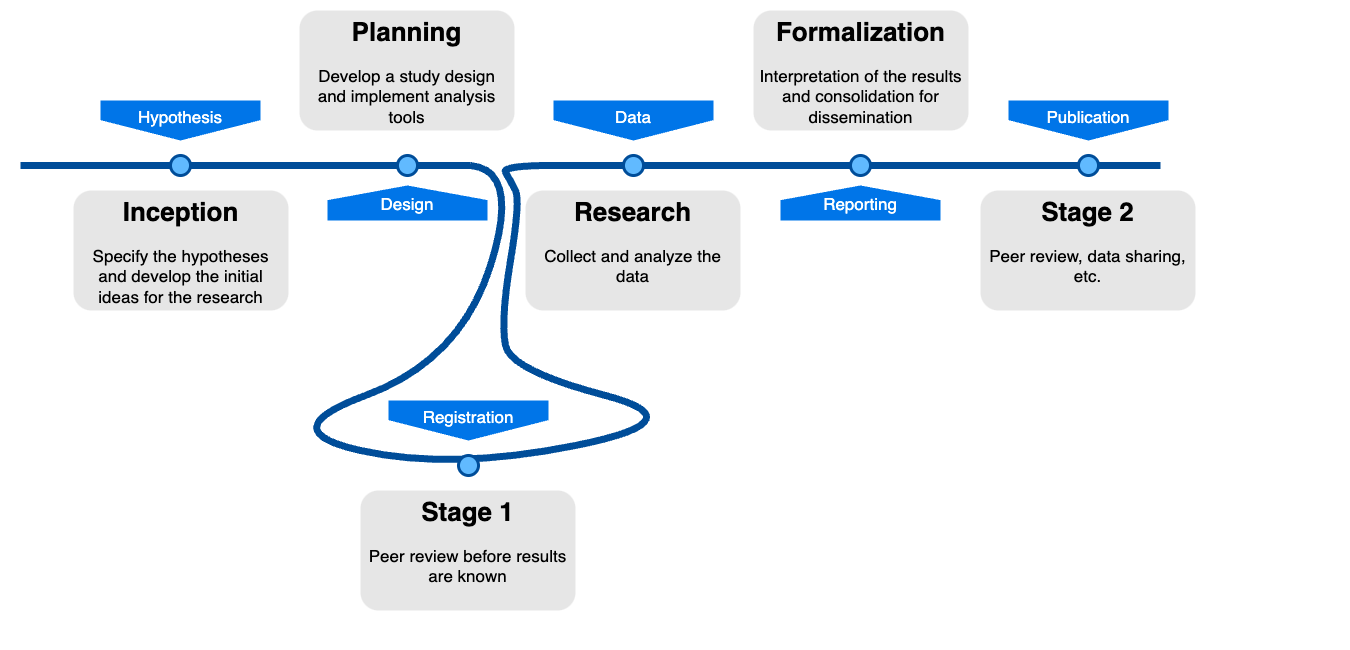

4. Another "good quality" indicator: Registered Reports

Registered Reports

Images courtesy of Oscar Esteban (CC-BY-4.0)

Ethics committee, internal project assessment, ...

Re-learn scientific process

Currently not adapted to short projects

Guarantees a publication!

Registered Reports

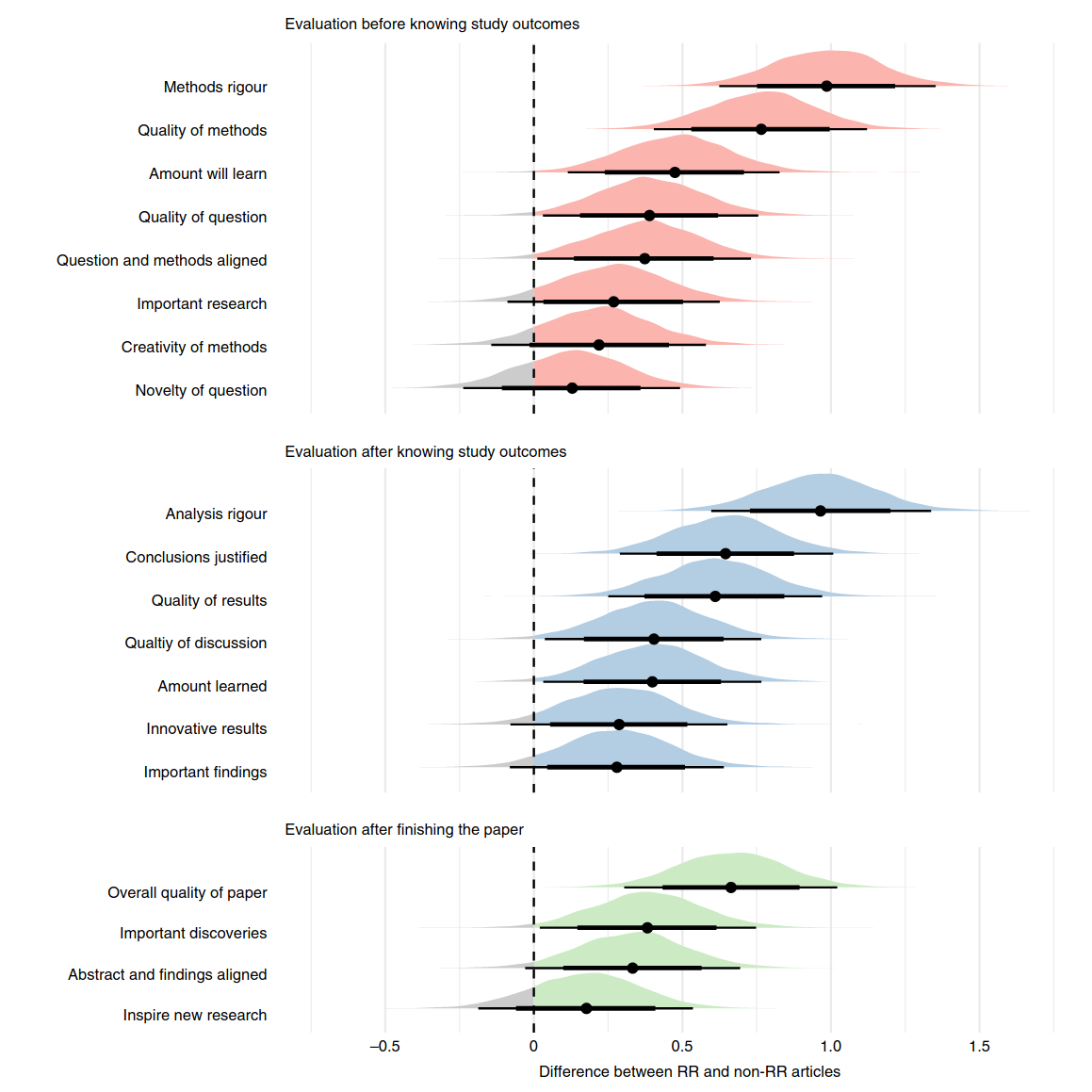

Registered Reports Quality

Soderberg et al., 2021 (Nat. Hum. Behav.)

In a blinded comparison, registered reports were rated as higher quality than equivalent non-registered papers (86 papers: 29 RRs and 57 comparison papers; 353 reviewers).

Take home #3

Look for registered reports in your field: chances are their quality will be higher than that of non-registered papers.

The next time you start a (long) project, consider a registered report.

Shout-out: Registered abstracts session today at 15:00 (Auditorium 900)

The risks of non-replicable science

- Erratum

- Retraction

- Misinformation

- Loss of public trust

- Impact

Erratum

Retraction

We are retracting this article due to concerns with Figure 5. In Figure 5A, there is a concern that the first and second lanes of the HIF-2α panel show the same data, [...], despite all being labeled as unique data. [...] We believe that the overall conclusions of the paper remain valid, but we are retracting the work due to these underlying concerns about the figure. Confirmatory experimentation has now been performed and the results can be found in a preprint article posted on bioRxiv [...]

Last take home message:

What you do in your scientific work has an impact on society.

It's not about you.

Remember that.

That's all folks!

Find the presentation at:

slides.com/smoia/esmrmb2025/scroll

Any questions [/opinions/objections/...]?
