RankSEG: A Consistent Ranking-based Framework for Segmentation

(Joint with Chunlin Li)

Ben Dai

The Chinese University of Hong Kong

Evolution of Consistency

Ronald Fisher (1890 - 1962)

A statistic is said to be a consistent estimate of any parameter, if when calculated from an indefinitely large sample it tends to be accurately equal to that parameter.

- Fisher (1925) Theory of Statistical Estimation

Evolution of Consistency

Ronald Fisher (1890 - 1962)

A statistic is said to be a consistent estimate of any parameter, if when calculated from an indefinitely large sample it tends to be accurately equal to that parameter.

- Fisher (1925) Theory of Statistical Estimation

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency

Evolution of Consistency

Ronald Fisher (1890 - 1962)

Fisher, R. A. (1922). On the mathematical foundations of theoretical statistics.

Consistency -- A statistic satisfies the criterion of consistency, if, when it is calculated from the whole population, it is equal to the required parameter.

- Fisher (1922)

Evolution of Consistency

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency (PC)

\( \hat{\theta} = \hat{\theta}(X_1, \cdots, X_n) \)

Evolution of Consistency

Fisher / Fisherian

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency (PC)

\( \hat{\theta} = \hat{\theta}(X_1, \cdots, X_n) \)

\( \hat{\theta}= \hat{\theta}(F_n) \)

Fisher / Fisherian

Evolution of Consistency

Fisher / Fisherian

\( \hat{\theta}(F_n) \xrightarrow{\mathbb{P}} \theta(F), \quad n \to \infty \)

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency (PC)

\( \hat{\theta} = \hat{\theta}(X_1, \cdots, X_n) \)

\( \hat{\theta}= \hat{\theta}(F_n) \)

Fisher / Fisherian

Evolution of Consistency

Fisher / Fisherian

\( \hat{\theta}(F_n) \xrightarrow{\mathbb{P}} \theta(F), \quad n \to \infty \)

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency (PC)

\( \hat{\theta} = \hat{\theta}(X_1, \cdots, X_n) \)

\( \hat{\theta}= \hat{\theta}(F_n) \)

\(\theta(F) = \theta_0\)

Fisher / Fisherian

Evolution of Consistency

Fisher / Fisherian

\( \hat{\theta}(F_n) \xrightarrow{\mathbb{P}} \theta(F), \quad n \to \infty \)

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency (PC)

\( \hat{\theta} = \hat{\theta}(X_1, \cdots, X_n) \)

\( \hat{\theta}= \hat{\theta}(F_n) \)

\(\theta(F) = \theta_0\)

Fisher Consistency (FC)

Fisher / Fisherian

Evolution of Consistency

Fisher / Fisherian

\( \hat{\theta}(F_n) \xrightarrow{\mathbb{P}} \theta(F), \quad n \to \infty \)

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency (PC)

\( \hat{\theta} = \hat{\theta}(X_1, \cdots, X_n) \)

\( \hat{\theta}= \hat{\theta}(F_n) \)

\(\theta(F) = \theta_0\)

Fisher Consistency (FC)

Gerow, K. (1989): In fact, for many years, Fisher took his two definitions to be describing the same thing... Fisher 34 years to polish the definitions of consistency to their present form.

Evolution of Consistency

Fisher / Fisherian

\( \hat{\theta}(F_n) \xrightarrow{\mathbb{P}} \theta(F), \quad n \to \infty \)

\( \hat{\theta}(X_1, \cdots, X_n) \xrightarrow{\mathbb{P}} \theta_0, \quad n \to \infty \)

Probability Consistency (PC)

\( \hat{\theta} = \hat{\theta}(X_1, \cdots, X_n) \)

\( \hat{\theta}= \hat{\theta}(F_n) \)

\(\theta(F) = \theta_0\)

Glivenko–Cantelli theorem (1933);

Fisher Consistency (FC)

Fisher / Fisherian

Evolution of Consistency: PC and FC

Hodges and Le Cam example is PC but not FC!

CR, Rao. (1962) Apparent Anomalies and Irregularities in Maximum Likelihood Estimation

Evolution of Consistency: PC and FC

CR, Rao. (1962) Apparent Anomalies and Irregularities in Maximum Likelihood Estimation

With continuous functionals; FC iff PC.

FC

-

Strength

-

FC almost implies PC (with continuous functionals)

- Distribution-free

- Practical and fundamentally true

-

FC provides a deterministic equality

- Easy to use

- Directly motivate a consistent method via the FC equality

-

FC almost implies PC (with continuous functionals)

FC

-

Strength

-

FC almost implies PC (with continuous functionals)

- Distribution-free

- Practical and fundamentally true

-

FC provides a deterministic equality

- Easy to use

- Directly motivate a consistent method via the FC equality

-

FC almost implies PC (with continuous functionals)

-

Weakness

-

Not all estimators can be easily expressed in the form of an empirical cdf.

-

iid assumptions

-

FC

-

Strength

-

FC almost implies PC (with continuous functionals)

- Distribution-free

- Practical and fundamentally true

-

FC provides a deterministic equality

- Easy to use

- Directly motivate a consistent method via the FC equality

-

FC almost implies PC (with continuous functionals)

-

Weakness

-

Not all estimators can be easily expressed in the form of an empirical cdf.

-

iid assumptions

-

- MLE and ERM are functionals of an empirical cdf

- ML often requires iid assumptions

- FC has become the minimal requirement for ML methods.

FC in ML

Classification

- Data: \( \mathbf{X} \in \mathbb{R}^d \to Y \in \{0,1\} \)

- Decision function: \( \delta(\mathbf{X}): \mathbb{R}^d \to \{0,1\} \)

- Evaluation:

$$ Acc( \delta) = \mathbb{E}\big( \mathbb{I}( Y = \delta(\mathbf{X}) )\big) $$

FC in ML

Classification

- Data: \( \mathbf{X} \in \mathbb{R}^d \to Y \in \{0,1\} \)

- Decision function: \( \delta(\mathbf{X}): \mathbb{R}^d \to \{0,1\} \)

- Evaluation:

$$ Acc( \delta) = \mathbb{E}\big( \mathbb{I}( Y = \delta(\mathbf{X}) )\big) $$

Due to the computational difficulty, direct optimization is often infeasible.

FC in ML

Classification

- Data: \( \mathbf{X} \in \mathbb{R}^d \to Y \in \{0,1\} \)

- Decision function: \( \delta(\mathbf{X}): \mathbb{R}^d \to \{0,1\} \)

- Evaluation:

$$ Acc( \delta) = \mathbb{E}\big( \mathbb{I}( Y = \delta(\mathbf{X}) )\big) $$

Consistency. What ensures that your estimator achieves good accuracy?

FC in ML

Classification

- Data: \( \mathbf{X} \in \mathbb{R}^d \to Y \in \{0,1\} \)

- Decision function: \( \delta(\mathbf{X}): \mathbb{R}^d \to \{0,1\} \)

- Evaluation:

$$ Acc( \delta) = \mathbb{E}\big( \mathbb{I}( Y = \delta(\mathbf{X}) )\big) $$

$$Acc \big ( \hat{\delta}(F_n) \big) \xrightarrow{\mathbb{P}} Acc \big( \delta(F) \big)$$

Consistency. What ensures that your estimator achieves good accuracy?

Suppose we develop a classifier as a functional of empirical cdf, that is \( \hat{\delta}(F_n) \).

FC in ML

Classification

- Data: \( \mathbf{X} \in \mathbb{R}^d \to Y \in \{0,1\} \)

- Decision function: \( \delta(\mathbf{X}): \mathbb{R}^d \to \{0,1\} \)

- Evaluation:

$$ Acc( \delta) = \mathbb{E}\big( \mathbb{I}( Y = \delta(\mathbf{X}) )\big) $$

$$Acc \big ( \hat{\delta}(F_n) \big) \xrightarrow{\mathbb{P}} Acc \big( \delta(F) \big) = \max_{\delta} Acc(\delta)$$

FC

Consistency. What ensures that your estimator achieves good accuracy?

Suppose we develop a classifier as a functional of empirical cdf, that is \( \hat{\delta}(F_n) \).

FC in ML

Suppose we develop a classifier as a functional of empirical cdf, that is \( \hat{\delta}(F_n) \).

$$Acc \big ( \hat{\delta}(F_n) \big) \xrightarrow{\mathbb{P}} Acc \big( \delta(F) \big) = \max_{\delta} Acc(\delta)$$

FC

How can we develop a FC method?

Classification

- Data: \( \mathbf{X} \in \mathbb{R}^d \to Y \in \{0,1\} \)

- Decision function: \( \delta(\mathbf{X}): \mathbb{R}^d \to \{0,1\} \)

- Evaluation:

$$ Acc( \delta) = \mathbb{E}\big( \mathbb{I}( Y = \delta(\mathbf{X}) )\big) $$

Consistency. What ensures that your estimator achieves good accuracy?

How to develop a FC method

Approach 1 (Plug-in rule).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) $$

$$ \delta^* = \delta^*(F) $$

How to develop a FC method

Approach 1 (Plug-in rule).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) $$

$$ \delta^* = \delta^*(F) \to \delta^*(F_n)$$

How to develop a FC method

Approach 1 (Plug-in rule).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) \ \ \to \ \delta^*(\mathbf{x}) = \mathbb{I}( p(\mathbf{x}) \geq 0.5 ) $$

$$ p(\mathbf{x}) = \mathbb{P}(Y=1|\mathbf{X}=\mathbf{x})$$

- Obtain the probabilistic form of the Bayes rule (optimal decision)

- Re-write the Bayes rule as \( \delta(F) \)

How to develop a FC method

Approach 1 (Plug-in rule).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) \ \ \to \ \delta^*(\mathbf{x}) = \mathbb{I}( p(\mathbf{x}) \geq 0.5 ) $$

$$ p(\mathbf{x}) = \mathbb{P}(Y=1|\mathbf{X}=\mathbf{x})$$

- Replace \(F\) by \(F_n\):

$$ \hat{\delta}(\mathbf{x}) = \mathbb{I}( \hat{p}_n(\mathbf{x}) \geq 0.5 ) $$

- Obtain the probabilistic form of the Bayes rule (optimal decision)

- Re-write the Bayes rule as \( \delta(F) \)

How to develop a FC method

Approach 1 (Plug-in rule).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) \ \ \to \ \delta^*(\mathbf{x}) = \mathbb{I}( p(\mathbf{x}) \geq 0.5 ) $$

$$ p(\mathbf{x}) = \mathbb{P}(Y=1|\mathbf{X}=\mathbf{x})$$

- Replace \(F\) by \(F_n\):

$$ \hat{\delta}(\mathbf{x}) = \mathbb{I}( \hat{p}_n(\mathbf{x}) \geq 0.5 ) $$

Plug-in rule method:

- Step 1. Estimate \( \hat{p}_n \) from MLE

- Step 2. Plug-in \( \hat{p}_n \) in \( \hat{\delta}(\mathbf{x}) \)

- Obtain the probabilistic form of the Bayes rule (optimal decision)

- Re-write the Bayes rule as \( \delta(F) \)

How to develop a FC method

Approach 2 (ERM via a surrogate loss).

- Obtain \( \hat{f} \) from a ERM with a surrogate loss (\( \hat{\delta} = \mathbb{I}(\hat{f} \geq 0) \))

$$Acc \big ( \hat{\delta}(F_n) \big) \xrightarrow{\mathbb{P}} Acc \big( \delta(F) \big) = \max_{\delta} Acc(\delta)$$

$$ \hat{f}_{\phi} = \argmin_f \mathbb{E}_n \phi\big(Y f(\mathbf{X}) \big) $$

How to develop a FC method

Approach 2 (ERM via a surrogate loss).

- Obtain \( \hat{f} \) from a ERM with a surrogate loss (\( \hat{\delta} = \mathbb{I}(\hat{f} \geq 0) \))

$$ \hat{f}_{\phi} = \argmin_f \mathbb{E}_n \phi\big(Y f(\mathbf{X}) \big) $$

$$ f_\phi = \argmin_f \mathbb{E} \phi\big(Y f(\mathbf{X}) \big)$$

$$Acc \big ( \hat{\delta}(F_n) \big) \xrightarrow{\mathbb{P}} Acc \big( \delta(F) \big) = \max_{\delta} Acc(\delta)$$

How to develop a FC method

Approach 2 (ERM via a surrogate loss).

- Obtain \( \hat{f} \) from a ERM with a surrogate loss (\( \hat{\delta} = \mathbb{I}(\hat{f} \geq 0) \))

$$ \hat{f}_{\phi} = \argmin_f \mathbb{E}_n \phi\big(Y f(\mathbf{X}) \big) $$

$$ f_\phi = \argmin_f \mathbb{E} \phi\big(Y f(\mathbf{X}) \big)$$

$$Acc \big ( \hat{\delta}(F_n) \big) \xrightarrow{\mathbb{P}} Acc \big( \delta(F) \big) = \max_{\delta} Acc(\delta)$$

FC leads to conditions for "consistent" surrogate losses

How to develop a FC method

Approach 2 (ERM via a surrogate loss).

- Obtain \( \hat{f} \) from a ERM with a surrogate loss (\( \hat{\delta} = \mathbb{I}(\hat{f} \geq 0) \))

$$ \hat{f}_{\phi} = \argmin_f \mathbb{E}_n \phi\big(Y f(\mathbf{X}) \big) $$

$$ f_\phi = \argmin_f \mathbb{E} \phi\big(Y f(\mathbf{X}) \big)$$

$$Acc \big ( \hat{\delta}(F_n) \big) \xrightarrow{\mathbb{P}} Acc \big( \delta(F) \big) = \max_{\delta} Acc(\delta)$$

FC leads to conditions for "consistent" surrogate losses

Theorem. (Bartlett et al. (2006); informal) Let \(\phi\) be convex. \(\phi\) is "consistent" iff it is differentiable at 0 and \( \phi'(0) < 0 \).

Convex loss. Zhang (2004), Lugosi and Vayatis (2004), Steinwart (2005)

Non-convex loss. Mason et al. (1999), Shen et al. (2003)

Segmentation

Medical image segmentation

Autonomous vehicles

Agriculture

Segmentation

Input

output

Input: \(\mathbf{X} \in \mathbb{R}^d\)

Outcome: \(\mathbf{Y} \in \{0,1\}^d\)

Segmentation function:

- \( \pmb{\delta}: \mathbb{R}^d \to \{0,1\}^d\)

- \( \pmb{\delta}(\mathbf{X}) = ( \delta_1(\mathbf{X}), \cdots, \delta_d(\mathbf{X}) )^\intercal \)

Predicted segmentation set:

- \( I(\pmb{\delta}(\mathbf{X})) = \{j: \delta_j(\mathbf{X}) = 1 \}\)

Segmentation

Input

output

Input: \(\mathbf{X} \in \mathbb{R}^d\)

Outcome: \(\mathbf{Y} \in \{0,1\}^d\)

Segmentation function:

- \( \pmb{\delta}: \mathbb{R}^d \to \{0,1\}^d\)

- \( \pmb{\delta}(\mathbf{X}) = ( \delta_1(\mathbf{X}), \cdots, \delta_d(\mathbf{X}) )^\intercal \)

Predicted segmentation set:

- \( I(\pmb{\delta}(\mathbf{X})) = \{j: \delta_j(\mathbf{X}) = 1 \}\)

Segmentation

Input

output

$$ Y_j | \mathbf{X}=\mathbf{x} \sim \text{Bern}\big(p_j(\mathbf{x})\big)$$

$$ p_j(\mathbf{x}) := \mathbb{P}(Y_j = 1 | \mathbf{X} = \mathbf{x})$$

Probabilistic model:

The Dice and IoU metrics are introduced and widely used in practice:

Evaluation

IoU

The Dice and IoU metrics are introduced and widely used in practice:

Evaluation

Goal: learn segmentation function \( \pmb{\delta} \) maximizing Dice / IoU

Dice

- Given training data \( \{\mathbf{x}_i, \mathbf{y}_i\} _{i=1, \cdots, n}\), most existing methods characterize segmentation as a classification problem:

Existing frameworks

- Given training data \( \{\mathbf{x}_i, \mathbf{y}_i\} _{i=1, \cdots, n}\), most existing methods characterize segmentation as a classification problem:

Existing frameworks

Classification-based loss

CE + Focal

CE

CE

We aim to leverage the principles of FC to develop an consistent segmentation method.

FC in Segmentation

Recall (Plug-in rule in classification).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) $$

$$ \delta^* = \delta^*(F) \to \delta^*(F_n)$$

We aim to leverage the principles of FC to develop an consistent segmentation method.

FC in Segmentation

Recall (Plug-in rule in segmentation).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) $$

$$ \delta^* = \delta^*(F) \to \delta^*(F_n)$$

$$ \pmb{\delta}^* = \text{argmax}_{\pmb{\delta}} \ \text{Dice}_\gamma ( \pmb{\delta})$$

Bayes segmentation rule

We aim to leverage the principles of FC to develop an consistent segmentation method.

FC in Segmentation

Recall (Plug-in rule in segmentation).

$$ \delta^* = \argmax_{\delta} \ Acc(\delta) $$

$$ \delta^* = \delta^*(F) \to \delta^*(F_n)$$

$$ \pmb{\delta}^* = \text{argmax}_{\pmb{\delta}} \ \text{Dice}_\gamma ( \pmb{\delta})$$

Bayes segmentation rule

What form would the Bayes segmentation rule take?

Theorem 1 (Dai and Li, 2023). A segmentation rule \(\pmb{\delta}^*\) is a global maximizer of \(\text{Dice}_\gamma(\pmb{\delta})\) if and only if it satisfies that

\( \tau^*(\mathbf{x}) \) is called optimal segmentation volume, defined as

where \(J_\tau(\mathbf{x})\) is the index set of the \(\tau\)-largest probabilities, \(\Gamma(\mathbf{x}) = \sum_{j=1}^d {B}_{j}(\mathbf{x})\), and \( {\Gamma}_{- j}(\mathbf{x}) = \sum_{j' \neq j} {B}_{j'}(\mathbf{x})\) are Poisson-binomial random variables.

Bayes segmentation rule

Bayes Segmentation Rule

The Dice measure is separable w.r.t. \(j\)

Bayes Segmentation Rule

Bayes Segmentation Rule

Theorem 1 (Dai and Li, 2023). A segmentation rule \(\pmb{\delta}^*\) is a global maximizer of \(\text{Dice}_\gamma(\pmb{\delta})\) if and only if it satisfies that

\( \tau^*(\mathbf{x}) \) is called optimal segmentation volume, defined as

where \(J_\tau(\mathbf{x})\) is the index set of the \(\tau\)-largest probabilities, \(\Gamma(\mathbf{x}) = \sum_{j=1}^d {B}_{j}(\mathbf{x})\), and \( {\Gamma}_{- j}(\mathbf{x}) = \sum_{j' \neq j} {B}_{j'}(\mathbf{x})\) are Poisson-binomial random variables.

Bayes segmentation rule

Theorem 1 (Dai and Li, 2023). A segmentation rule \(\pmb{\delta}^*\) is a global maximizer of \(\text{Dice}_\gamma(\pmb{\delta})\) if and only if it satisfies that

\( \tau^*(\mathbf{x}) \) is called optimal segmentation volume, defined as

Obs: both the Bayes segmentation rule \(\pmb{\delta}^*(\mathbf{x})\) and the optimal volume function \(\tau^*(\mathbf{x})\) are achievable when the conditional probability \(\mathbf{p}(\mathbf{x}) = ( p_1(\mathbf{x}), \cdots, p_d(\mathbf{x}) )^\intercal\) is well-estimated

Bayes segmentation rule

Theorem 1 (Dai and Li, 2023). A segmentation rule \(\pmb{\delta}^*\) is a global maximizer of \(\text{Dice}_\gamma(\pmb{\delta})\) if and only if it satisfies that

\( \tau^*(\mathbf{x}) \) is called optimal segmentation volume, defined as

where \(J_\tau(\mathbf{x})\) is the index set of the \(\tau\)-largest probabilities, \(\Gamma(\mathbf{x}) = \sum_{j=1}^d {B}_{j}(\mathbf{x})\), and \( {\Gamma}_{- j}(\mathbf{x}) = \sum_{j' \neq j} {B}_{j'}(\mathbf{x})\) are Poisson-binomial random variables.

RankDice inspired by Thm 1 (plug-in rule)

-

Ranking the conditional probability \(p_j(\mathbf{x})\)

Plug-in rule

Theorem 1 (Dai and Li, 2023+). A segmentation rule \(\pmb{\delta}^*\) is a global maximizer of \(\text{Dice}_\gamma(\pmb{\delta})\) if and only if it satisfies that

\( \tau^*(\mathbf{x}) \) is called optimal segmentation volume, defined as

RankDice inspired by Thm 1

-

Ranking the conditional probability \(p_j(\mathbf{x})\)

-

searching for the optimal volume of the segmented features \(\tau(\mathbf{x})\)

Plug-in rule

RankDice

RankDice

RankDice

RankDice

- Fast evaluation of Poisson-binomial r.v.

- Quick search for \(\tau \in \{0,1,\cdots, d\}\)

Note that (6) can be rewritten as:

RankDice: Algo

In practice, the DFT–CF method is generally recommended for computing. The RF1 method can also been used when n < 1000, because there is not much difference in computing time from the DFT–CF method. The RNA method is recommended when n > 2000 and the cdf needs to be evaluated many times. As shown in the numerical study, the RNA method can approximate the cdf well, when n is large, and is more computationally efficient.

Hong. (2013) On computing the distribution function for the Poisson binomial distribution

RankDice: Algo

In practice, the DFT–CF method is generally recommended for computing. The RF1 method can also been used when n < 1000, because there is not much difference in computing time from the DFT–CF method. The RNA method is recommended when n > 2000 and the cdf needs to be evaluated many times. As shown in the numerical study, the RNA method can approximate the cdf well, when n is large, and is more computationally efficient.

Hong. (2013) On computing the distribution function for the Poisson binomial distribution

RankDice: Algo

- Fast evaluation of Poisson-binomial r.v.

- Quick search for \(\tau \in \{0,1,\cdots, d\}\)

Lemma 3 (Dai and Li, 2023). If \(\sum_{s=1}^{\tau} \widehat{q}_{j_s}(\mathbf{x}) \geq (\tau + \gamma + d) \widehat{q}_{j_{\tau+1}}(\mathbf{x})\), then \(\bar{\pi}_\tau(\mathbf{x}) \geq \bar{\pi}_{\tau'}(\mathbf{x})\) for all \(\tau' >\tau\)

Early stop!

RankDice: Algo - early stopping

RankDice: T-RNA

RankDice: T-RNA

It is unnecessary to compute all \(\mathbb{P}(\widehat{\Gamma}_{-j}(\mathbf{x}) = l)\) and \(\mathbb{P}(\widehat{\Gamma}(\mathbf{x}) = l)\) for \(l=1, \cdots, d\), since they are negligibly close to zero when \(l\) is too small or too large.

RankDice: T-RNA

Truncation!

RankDice: T-RNA

\(\widehat{\sigma}^2(\mathbf{x}) = \sum_{j=1}^d \widehat{q}_j(\mathbf{x}) (1 - \widehat{q}_j(\mathbf{x})) \to \infty \quad \text{as } d \to \infty \)

\(o_P(1)\)

RankDice: T-RNA

RankDice: BA Algo

RankDice: BA Algo

RankDice: BA Algo

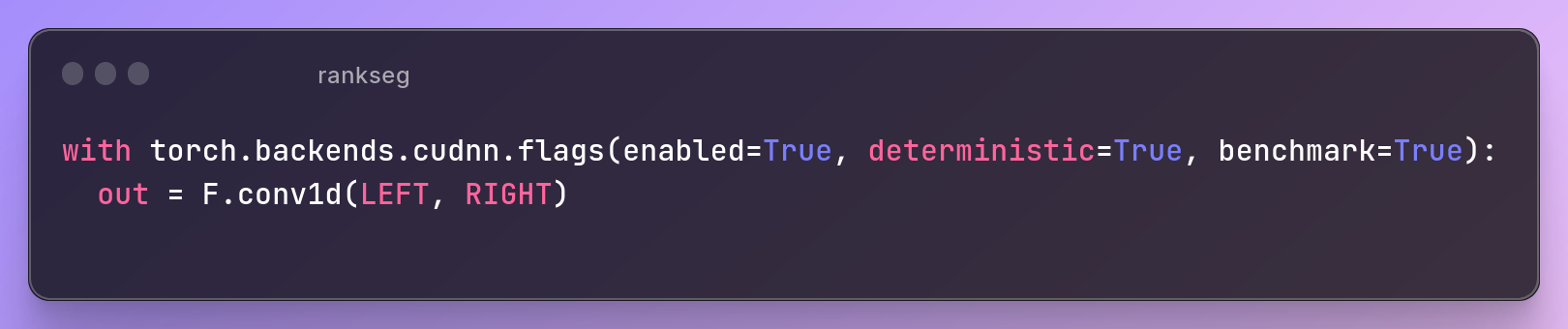

GPU via CUDA

\(o_P(1)\)

RankDice: BA Algo

RankDice: Algo

Fisher consistency or Classification-Calibration

(Lin, 2004, Zhang, 2004, Bartlett et al 2006)

Classification

Segmentation

RankDice: Theory

RankDice: Theory

RankDice: Theory

RankDice: Theory

RankDice: Theory

- Three segmentation benchmark: VOC, CityScapes, Kvasir

RankDice: Experiments

- Three segmentation benchmark: VOC, CityScapes, Kvasir

RankDice: Experiments

Source: Visual Object Classes Challenge 2012 (VOC2012)

- Three segmentation benchmark: VOC, CityScapes, Kvasir

RankDice: Experiments

Source: Visual Object Classes Challenge 2012 (VOC2012)

Source: The Cityscapes Dataset: Semantic Understanding of Urban Street Scenes

- Three segmentation benchmark: VOC, CityScapes, Kvasir

RankDice: Experiments

Source: Visual Object Classes Challenge 2012 (VOC2012)

Source: The Cityscapes Dataset: Semantic Understanding of Urban Street Scenes

Jha et al (2020) Kvasir-seg: A segmented polyp dataset

RankDice: Experiments

-

Three segmentation benchmarks: VOC, CityScapes, Kvasir

- Standard benchmarks, NOT cherry-picks

- Three commonly used DL models: DeepLab-V3+, PSPNet, FCN

RankDice: Experiments

-

Three segmentation benchmarks: VOC, CityScapes, Kvasir

- Standard benchmarks, NOT cherry-picks

- Three commonly used DL models: DeepLab-V3+, PSPNet, FCN

DeepLab: Chen et al (2018) Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

RankDice: Experiments

-

Three segmentation benchmarks: VOC, CityScapes, Kvasir

- Standard benchmarks, NOT cherry-picks

- Three commonly used DL models: DeepLab-V3+, PSPNet, FCN

DeepLab: Chen et al (2018) Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

PSPNet: Zhao et al (2017) Pyramid Scene Parsing Network

RankDice: Experiments

-

Three segmentation benchmarks: VOC, CityScapes, Kvasir

- Standard benchmarks, NOT cherry-picks

- Three commonly used DL models: DeepLab-V3+, PSPNet, FCN

DeepLab: Chen et al (2018) Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

PSPNet: Zhao et al (2017) Pyramid Scene Parsing Network

FCN: Long, et al. (2015) Fully convolutional networks for semantic segmentation

RankDice: Experiments

-

Three segmentation benchmarks: VOC, CityScapes, Kvasir

- Standard benchmarks, NOT cherry-picks

- Three commonly used DL models: DeepLab-V3+, PSPNet, FCN

- The proposed framework VS. the existing frameworks

- Based on the SAME trained neural networks

- No implementation tricks

- Open-Source python module and codes

- All trained neural networks available for download

RankDice: Experiments

-

Three segmentation benchmarks: VOC, CityScapes, Kvasir

- Standard benchmarks, NOT cherry-picks

- Three commonly used DL models: DeepLab-V3+, PSPNet, FCN

- The proposed framework VS. the existing frameworks

- Based on the SAME trained neural networks

- No implementation tricks

- Open-Source python module and codes

- All trained neural networks available for download

RankDice: Experiments

RankDice: Experiments

RankDice: Experiments

RankDice: Experiments

RankDice: Experiments

RankDice: Experiments

The optimal threshold is NOT 0.5, and it is adaptive over different images/inputs

RankDice: Experiments

The optimal threshold is NOT fixed, and it is adaptive over different images/inputs

RankDice: Experiments

Long, et al. (2015) Fully convolutional networks for semantic segmentation

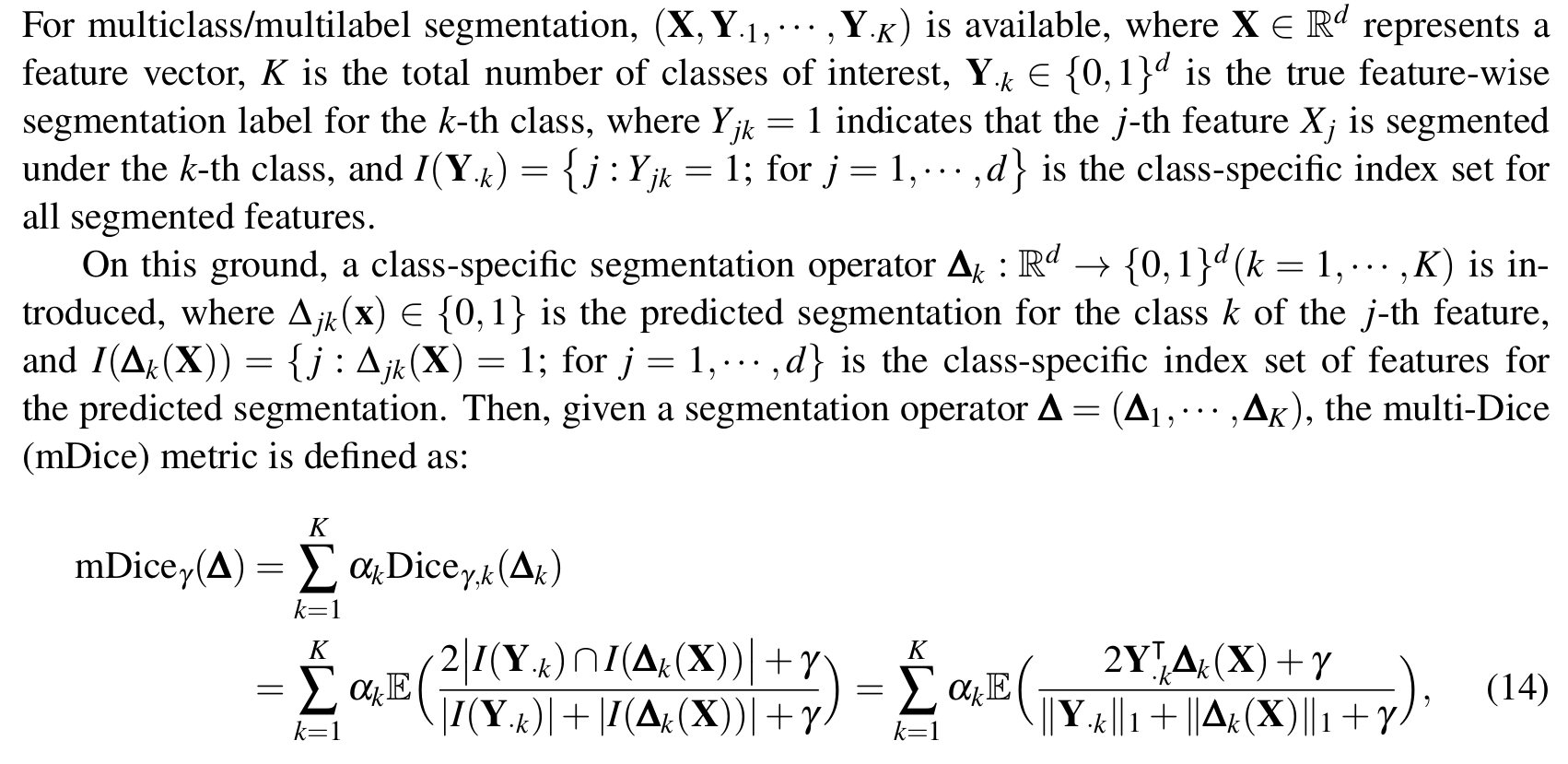

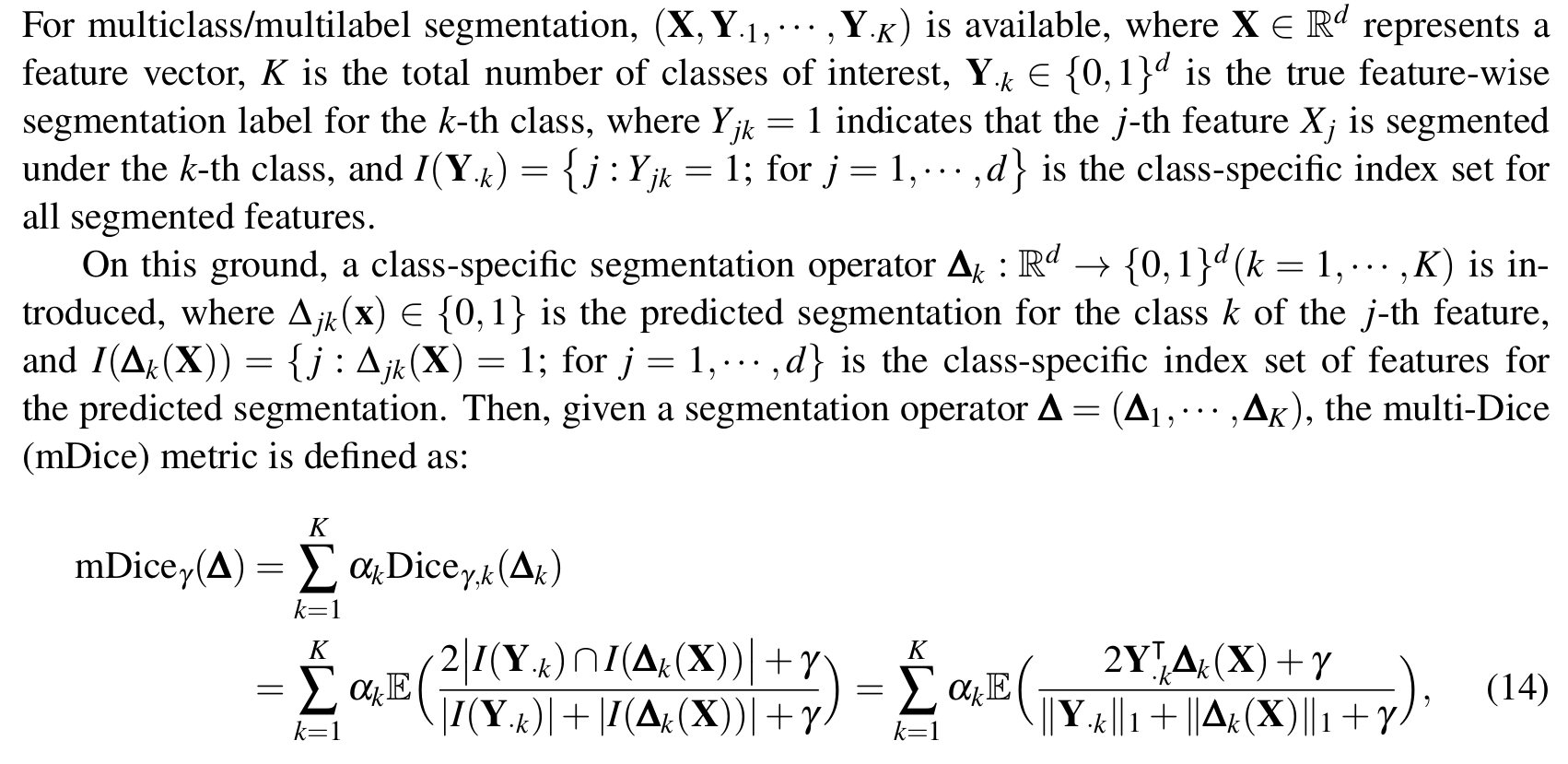

mRankDice

- Probabilistic model: multiclass or multilabel

- Decision rule: overlapping / non-overlapping

mRankDice

mRankDice

mRankDice

mRankDice

mRankDice

mRankDice

- mRankDice: extension and challenge

- RankIoU

- Simulation

- Probability calibration

- ....

More results

-

To our best knowledge, the proposed ranking-based segmentation framework RankDice, is the first consistent segmentation framework with respect to the Dice metric.

-

Three numerical algorithms with GPU parallel execution are developed to implement the proposed framework in large-scale and high-dimensional segmentation.

-

We establish a theoretical foundation of segmentation with respect to the Dice metric, such as the Bayes rule, Dice-calibration, and a convergence rate of the excess risk for the proposed RankDice framework, and indicate inconsistent results for the existing methods.

-

Our experiments suggest that the improvement of RankDice over the existing frameworks is significant.

Contribution

Thank you!

If you like RankDice please star 🌟 our Github repository, thank you for your support!