Comment récupérer des données sur le web automatiquement à l’aide du scraping, même lorsque je débute en Python

Pourquoi s'intéresser à l'extraction automatique de données du web

(ou web scraping) ?

- Exercice de programmation motivant

- Projet de recherche

- Data journalisme

- Etre alerté d'une offre intéressante

- Etude de marché et observer la concurrence

- Automatiser sa recherche de mots clés

- Exporter le contenu d'un site en vue de sa migration

CAS D'USAGE

Ai-je le droit de récupérer des données sur le web automatiquement ?

- "Terms & Conditions" ou "Conditions d'utilisation"

- "Privacy Policy" ou "Politique de confidentialité"

- robots.txt

Vérifications d'usage

Rechercher les termes

- Scraper ou Scraping

- Récupération automatique

- Crawler ou Crawling

- Bot ou Robot

- Spider

- Program ou Programme

Conditions d'utilisation

Garder les données de personnes privées hors du contexte de votre automatisation

- Pas de numéros de téléphone privés

- Pas d'adresse emails privées

- Pas d'adresses de privés

- Pas d'identités

N'oubliez pas le RGPD

robots.txt

# robots.txt de developpez.com

Sitemap: https://www.developpez.com/sitemap.xml

Sitemap: https://www.developpez.com/sitemap2.xml.gz

User-agent: *

Disallow: /ws/

Disallow: /telechargements/

Disallow: /redirect/

Disallow: /actu/partager/robots.txt

# robots.txt de oreilly.com

User-agent: *

Disallow: /images/

Disallow: /graphics/

Disallow: /admin/

Disallow: /promos/

Disallow: /ddp/

Disallow: /dpp/

Disallow: /programming/free/files/

Disallow: /design/free/files/

Disallow: /iot/free/files/

Disallow: /data/free/files/

Disallow: /webops-perf/free/files/

Disallow: /web-platform/free/files/

Disallow: /cs/

Disallow: /test/

Disallow: /*/?ar

Disallow: /*/?orpq

Disallow: /*/?discount=learn

Disallow: /self-registration/*

User-agent: 008

Disallow: /

#ITOPS-10158

Sitemap: https://www.oreilly.com/book-sitemap.xml

#ITOPS-8392

Sitemap: https://www.oreilly.com/radar/sitemap.xml

Sitemap: https://www.oreilly.com/content/sitemap.xml

#ITOPS-10157

Sitemap: https://www.oreilly.com/video-sitemap.xml

Analyser automatiquement un robots.txt

# is_allowed.py

from urllib import robotparser

robot_parser = robotparser.RobotFileParser()

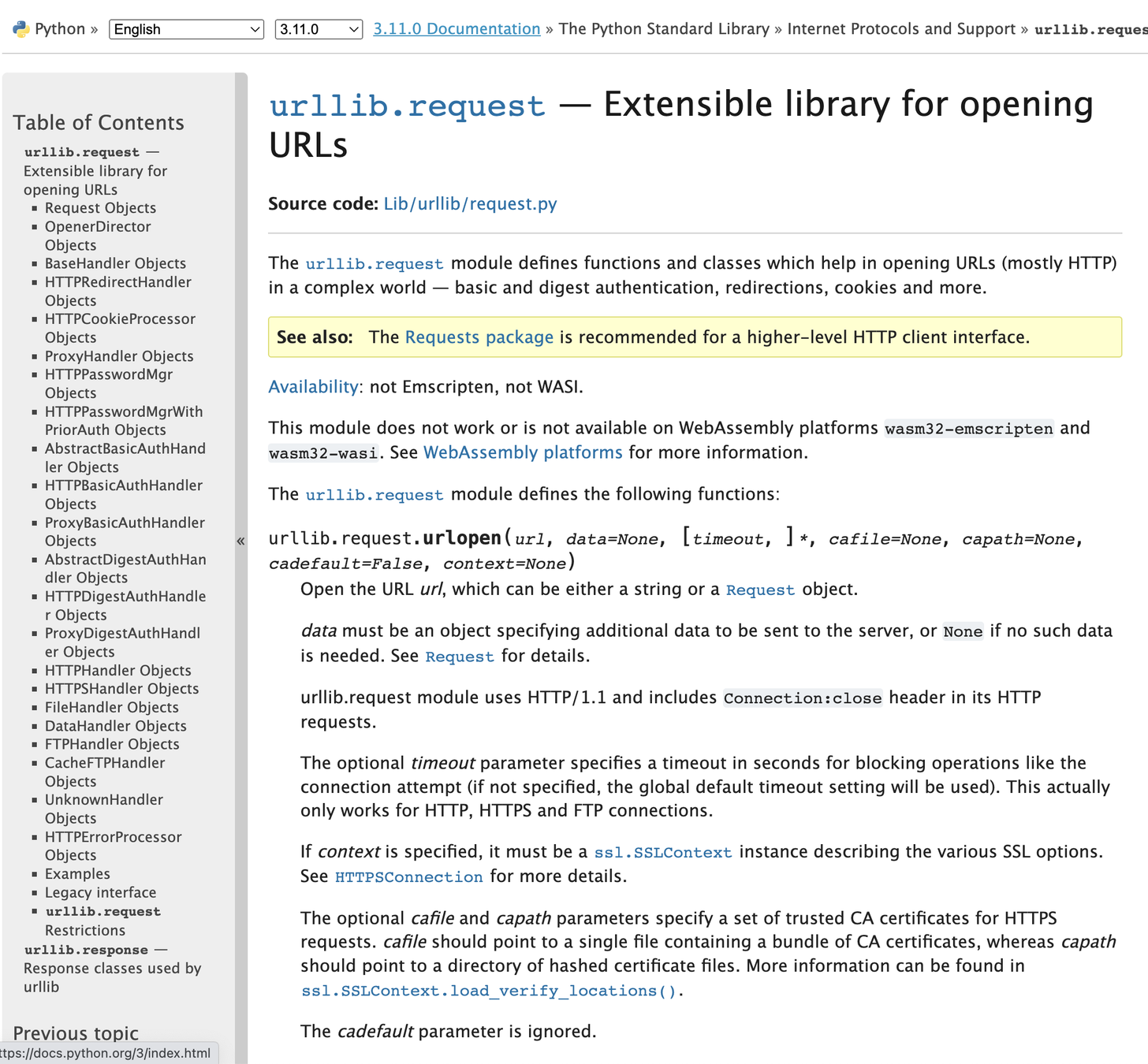

La bibliothèque standard avant tout

# is_allowed.py - pipenv install typer

from urllib import robotparser

from urllib.parse import urlparse

import typer

def prepare_robots_parser(robot_parser, robot_url):

"""Prépare l'analyseur de fichier robots.txt à l'analyse."""

robot_parser.set_url(robot_url)

robot_parser.read()

def is_allowed(robot_parser, url, user_agent='*'):

"""Détermine si une url peut être scrapée."""

return robot_parser.can_fetch(user_agent, url)

def main(robot_url: str, url: str, user_agent: str = "*"):

"""Point d'entrée principal du script."""

# TODO: valider robot_url et url

robot_parser = robotparser.RobotFileParser()

prepare_robots_parser(robot_parser, robot_url)

if is_allowed(robot_parser, url, user_agent):

print(f"L'accès à l'url {url} est autorisé")

else:

print(f"L'accès à l'url {url} n'est pas autorisé")

if __name__ == "__main__":

typer.run(main)

$ python -m is_allowed https://www.developpez.com/robots.txt https://www.developpez.com

L'accès à l'url https://www.developpez.com est autorisé

$$ python -m is_allowed [OPTIONS] ROBOT_URL URL

Quels outils pour faire du scraping web ?

-

Phase 1 L'extraction

- urllib, requests, scrapy, etc.

-

Phase 2 La transformation

- BeautifulSoup, lxml, parsel, scrapy, etc.

-

Phase 3 L'enregistrement ou loading

- json, xlsx, csv, sqlite3, postgresql, mongodb, etc.

Le web scraping: un processus en 3 Phases

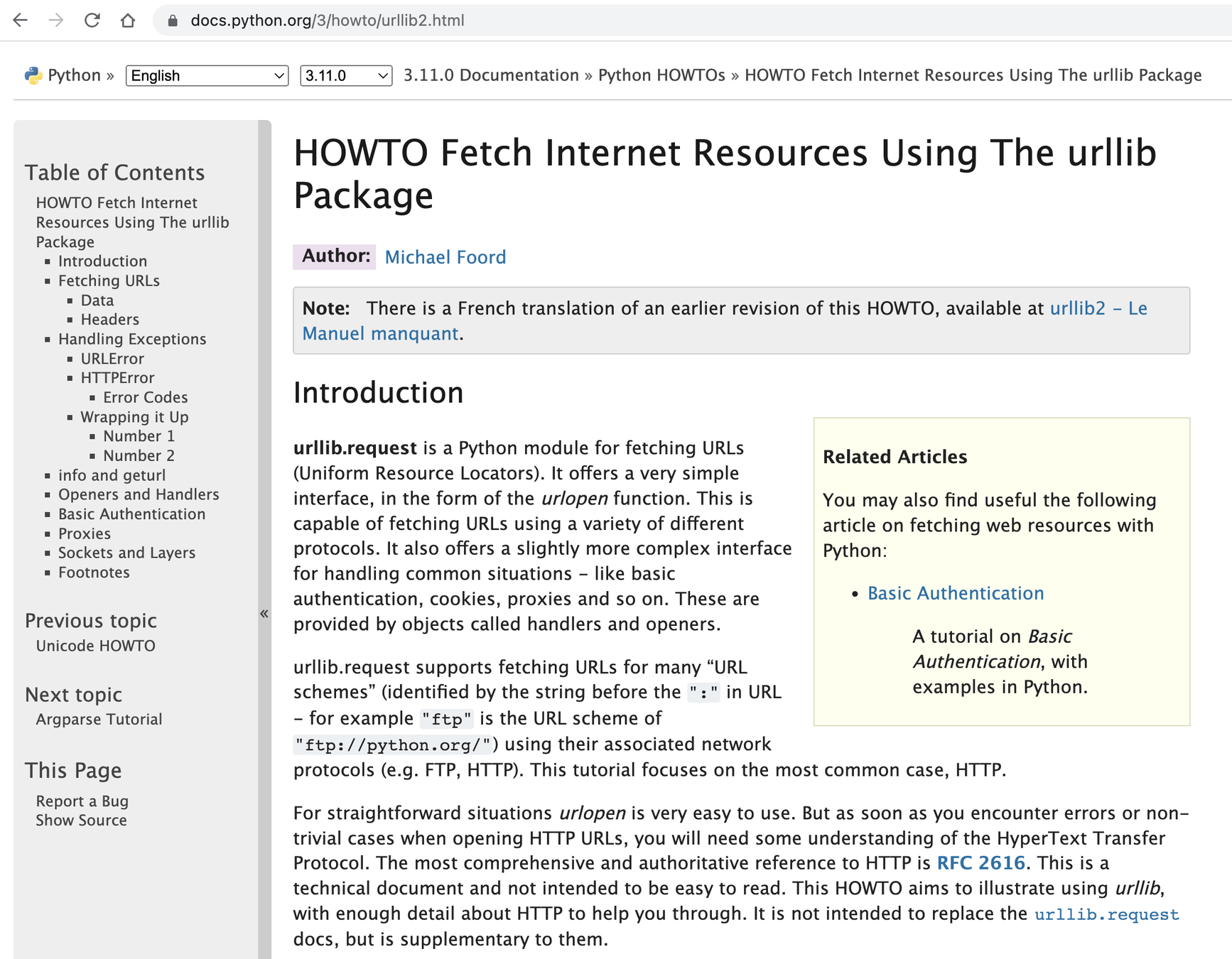

- urllib.request.urlopen()

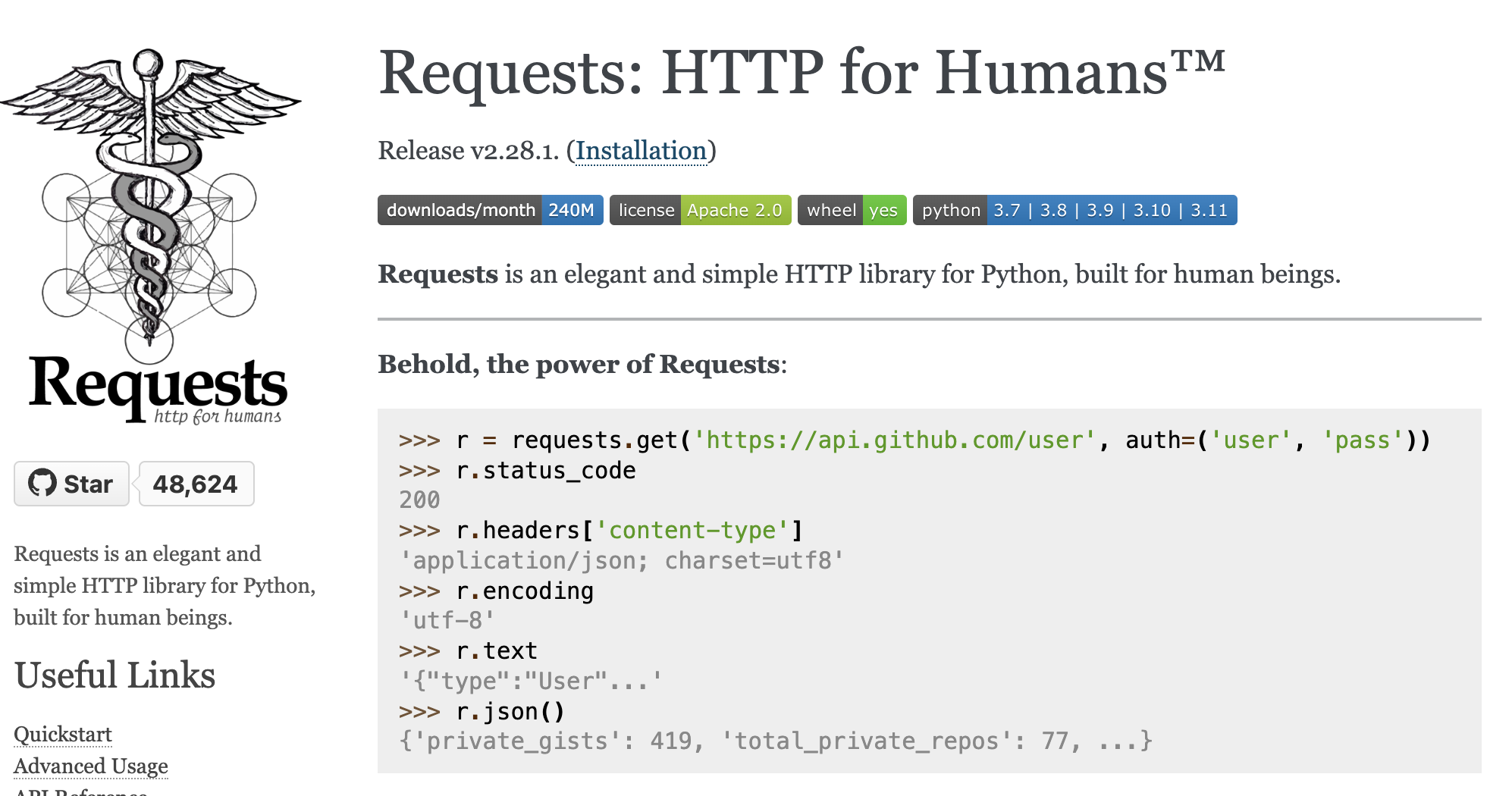

- requests

- Scrapy (phases 1, 2 et 3)

Phase 1 Télécharger les données

# exemple_urllib.py:

# adapté de pymotw.com/3/urllib.request/

from urllib import requesturllib.request, la bibliothèque standard avant tout

# exemple_urllib.py

# adapté de pymotw.com/3/urllib.request/

from urllib import request

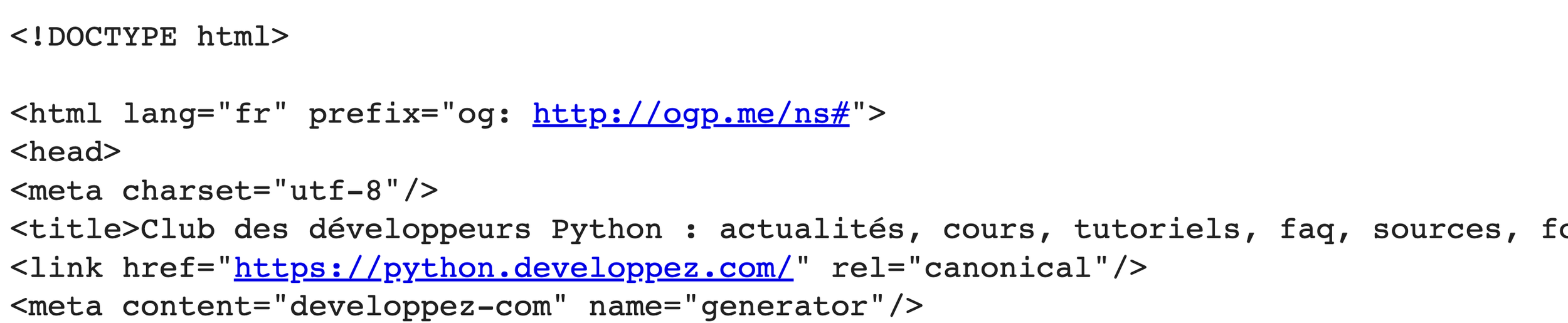

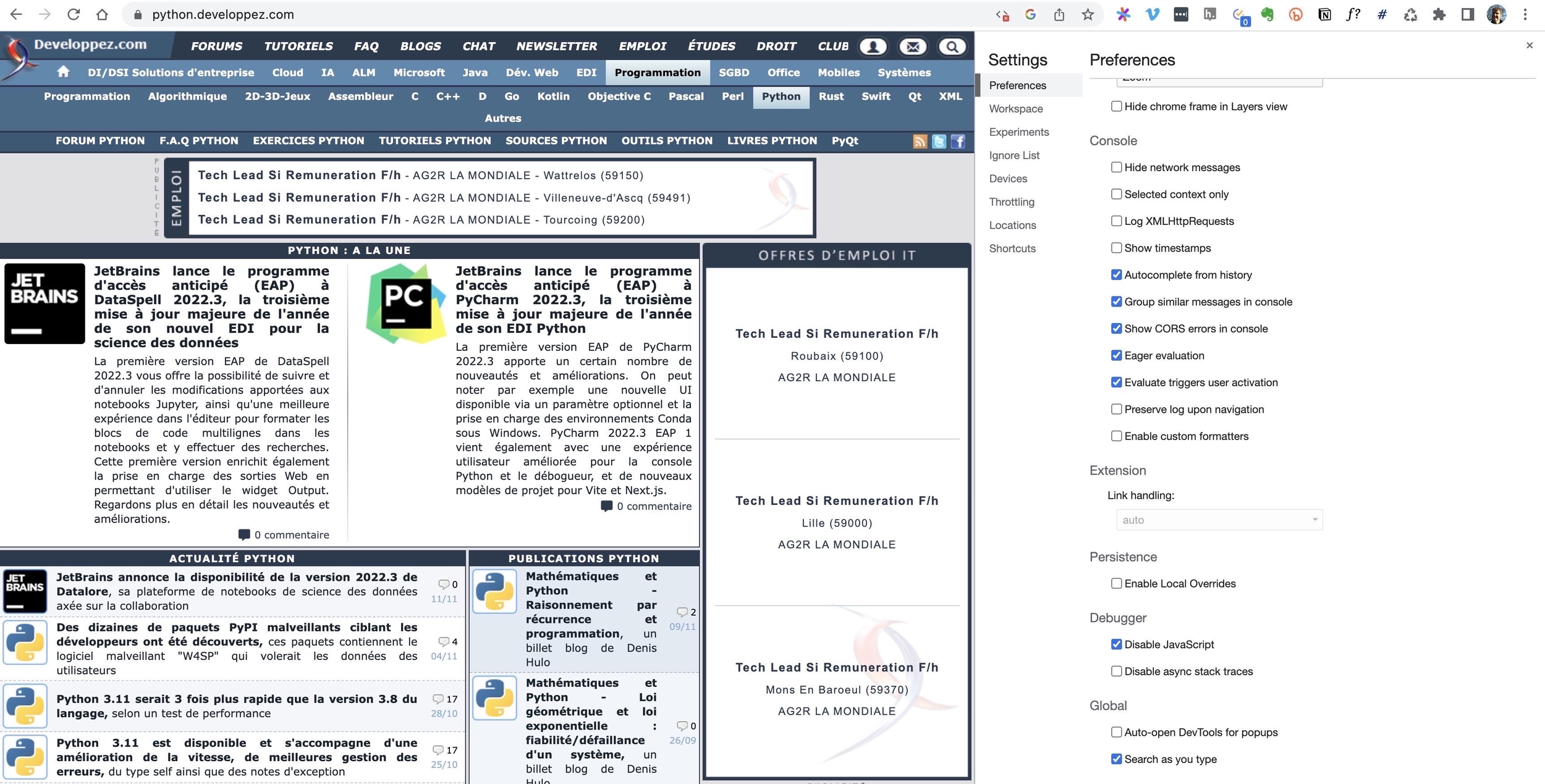

response = request.urlopen('https://python.developpez.com/')# exemple_urllib.py

# adapté de pymotw.com/3/urllib.request/

from urllib import request

response = request.urlopen('https://python.developpez.com/')

print('RESPONSE:', response)

print('URL :', response.geturl())

headers = response.info()

print('DATE :', headers['date'])

print('HEADERS :')

print('-' * 80)

print(headers)

data = response.read().decode('iso-8859-1')

print('LENGTH :', len(data))

print('DATA :')

print('-' * 80)

print(data)RESPONSE: <http.client.HTTPResponse object at 0x1019ae6e0>

URL : https://python.developpez.com/

DATE : Thu, 01 Dec 2022 09:18:37 GMT

HEADERS :

--------------------------------------------------------------------------------

Date: Thu, 01 Dec 2022 09:18:37 GMT

Server: Apache/2.4.38 (Debian)

Referrer-Policy: unsafe-url

X-Powered-By: PHP/5.6.40

Set-Cookie: PHPSESSID=t6clh8bnj31joh6rv1ub6pva34; path=/

Expires: Thu, 19 Nov 1981 08:52:00 GMT

Cache-Control: no-store, no-cache, must-revalidate, post-check=0, pre-check=0

Pragma: no-cache

Connection: close

Transfer-Encoding: chunked

Content-Type: text/html, charset=iso-8859-1

LENGTH : 105343

DATA :

--------------------------------------------------------------------------------

<!DOCTYPE html>

<html lang="fr" prefix="og: http://ogp.me/ns#">

<head>

<meta charset="iso-8859-1">

<title>Club des développeurs Python : actualités, cours, tutoriels, faq, sources, forum</title>

<link rel="canonical" href="https://python.developpez.com/">

<meta name="generator" content="developpez-com">

<meta name="description" content="Club des développeurs Python : actualités, cours, tutoriels, faq, sources, forum">

<meta property="og:type" content="website" />

...suite du code html de la page...$ python -m exemple_urllib

# exemple_urllib.py

# adapté de pymotw.com/3/urllib.request/

from urllib import request

response = request.urlopen('https://python.developpez.com/')

print('RESPONSE:', response)

print('URL :', response.geturl())

headers = response.info()

print('DATE :', headers['date'])

print('HEADERS :')

print('-' * 80)

print(headers)

data = response.read().decode('iso-8859-1')

print('LENGTH :', len(data))

print('DATA :')

print('-' * 80)

print(data)L'encodage est un problème récurrent lorsqu'on scrape le monde francophone

# exemple_urllib.py

# adapté de pymotw.com/3/urllib.request/

from urllib import request

response = request.urlopen('https://python.developpez.com/')

print('RESPONSE:', response)

print('URL :', response.geturl())

headers = response.info()

print('DATE :', headers['date'])

print('HEADERS :')

print('-' * 80)

print(headers)

data = response.read().decode('utf-8')

print('LENGTH :', len(data))

print('DATA :')

print('-' * 80)

print(data)L'encodage est un problème récurrent lorsqu'on scrape le monde francophone

Traceback (most recent call last):

File "/opt/homebrew/Cellar/python@3.10/3.10.8/Frameworks/Python.framework/Versions/3.10/lib/python3.10/runpy.py", line 196, in _run_module_as_main

return _run_code(code, main_globals, None,

File "/opt/homebrew/Cellar/python@3.10/3.10.8/Frameworks/Python.framework/Versions/3.10/lib/python3.10/runpy.py", line 86, in _run_code

exec(code, run_globals)

File "/Users/thierry/dev/wepynaires/2022/django-de-zero/exemple.py", line 16, in <module>

data = response.read().decode('utf-8')

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xe9 in position 118: invalid continuation byteTout ne se passe pas toujours comme on veut

Détecter automatiquement l'encodage

import re

from urllib import request

response = request.urlopen('https://python.developpez.com/')

headers = response.info()

charset = (

re.search(

r"charset=(?P<encoding>\S*)",

headers['Content-Type'],

re.IGNORECASE

)

.group("encoding")

)Rechercher l'encodage dans les en-têtes HTTP

import re

from urllib import request

response = request.urlopen('https://python.developpez.com/')

raw_data = response.read()

charset = (

re.search(

b"<meta[^>]+charset=['\"](?P<encoding>.*?)['\"]",

raw_data,

re.IGNORECASE

)

.group("encoding")

.decode()

)Rechercher l'encodage dans la page elle-même

- Guide officiel des expressions rationnelles https://docs.python.org/3/howto/regex.html

- regex101 pour s'entraîner https://regex101.com/

Pas à l'aise avec les expressions rationnelles ou regex ?

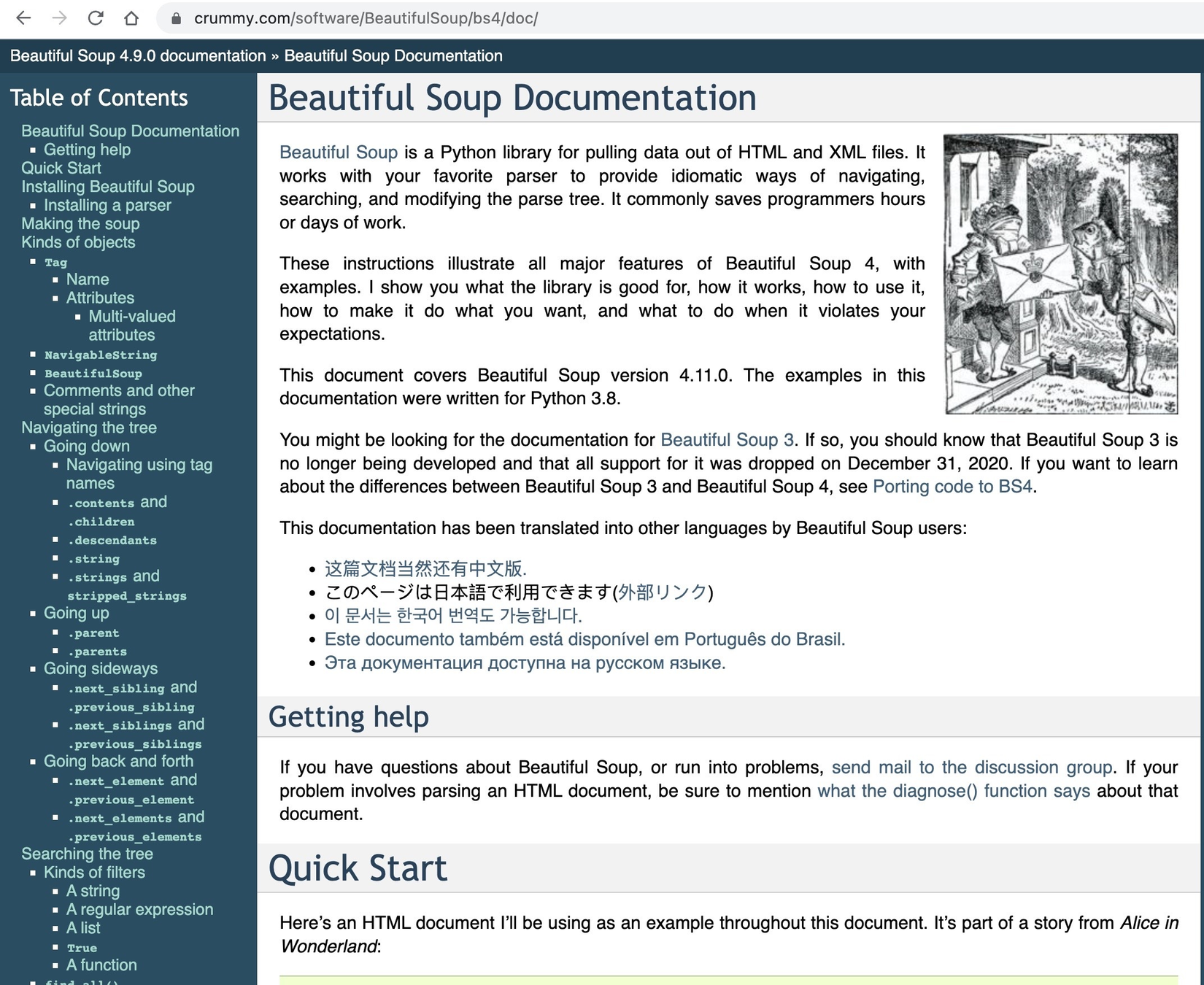

BeautifulSoup détecte automatiquement l'encodage

$ pipenv install beautifulsoup4

Installing beautifulsoup4...

Pipfile.lock (d52e37) out of date, updating to (242488)...

Locking [packages] dependencies...

Locking [dev-packages] dependencies...

Updated Pipfile.lock (2e0b0e7b9add9dc3f24d00f495ee7dc1e5687672aee16a577ed934e972242488)!

Installing dependencies from Pipfile.lock (242488)...from urllib import request

from bs4 import BeautifulSoup

response = request.urlopen('https://python.developpez.com/')

raw_data = response.read()

# html.parser peut être remplacé par lxml

# de meilleures performances d'analyse

soup = BeautifulSoup(raw_content, "'html.parser'")

print(soup)BeautifulSoup détecte automatiquement l'encodage

Gérer les erreurs avec urllib.request

bit.ly/howto-urllib

from urllib.request import urlopen

from urllib.error import URLError, HTTPError

def get_page(url)

"""Récupère le contenu d'une page et retoure une

urllib.response."""

try:

response = urlopen("https://placepython.fr")

except HTTPError as e:

print("Le serveur n'a pas pu satisfaire la requête.")

print(f"Le code d'erreur est: {e.code}")

except URLError as e:

print("Nous ne sommes pas parvenus à joindre le serveur.")

print(f"Raison de l'échec: {e.reason}")

else:

return responseL'encodage est un problème récurrent lorsqu'on scrape le monde francophone

Récupérer tous les liens d'une pages avec la bibliothèque standard

import re

from urllib.request import urlopen, urljoin

def get_page(url, encoding="utf8"):

"""Récupère une page web avec l'encodage spécifié et

retourne le code html de cette page."""

return urlopen(url).read().decode(encoding)

def extract_links(page):

"""Extrait les liens d'une page HTML et retourne une liste

des urls."""

return re.findall(

'<a[^>]+href=["\'](.*?)["\']', page, re.IGNORECASE

)

def main():

"""Point d'entrée du script."""

url = "https://python.developpez.com"

developpez = get_page(url, encoding="iso-8859-1")

links = extract_links(developpez)

for link in links:

print(urljoin(url, link))

if __name__ == '__main__':

main()La récupération des données se fait avec urllib.request

import re

from urllib.request import urlopen, urljoin

def get_page(url, encoding="utf8"):

"""Récupère une page web avec l'encodage spécifié et

retourne le code html de cette page."""

return urlopen(url).read().decode(encoding)

def extract_links(page):

"""Extrait les liens d'une page HTML et retourne une liste

des urls."""

return re.findall(

'<a[^>]+href=["\'](.*?)["\']', page, re.IGNORECASE

)

def main():

"""Point d'entrée du script."""

url = "https://python.developpez.com"

developpez = get_page(url, encoding="iso-8859-1")

links = extract_links(developpez)

for link in links:

print(urljoin(url, link))

if __name__ == '__main__':

main()L'extraction des liens a été faite avec re

https://python.developpez.com

http://www.developpez.net/forums/login.php?do=lostpw

https://www.developpez.net/forums/inscription/

https://www.developpez.com

https://www.developpez.com

https://www.developpez.net/forums/

https://general.developpez.com/cours/

https://general.developpez.com/faq/

https://www.developpez.net/forums/blogs/

https://chat.developpez.com/

https://www.developpez.com/newsletter/

https://emploi.developpez.com/

https://etudes.developpez.com/

https://droit.developpez.com/

https://club.developpez.com/

https://www.developpez.com

https://solutions-entreprise.developpez.com

https://solutions-entreprise.developpez.com

https://abbyy.developpez.com

https://big-data.developpez.com

https://bpm.developpez.com

https://business-intelligence.developpez.com

https://data-science.developpez.com

https://solutions-entreprise.developpez.com/erp-pgi/presentation-erp-pgi/

https://crm.developpez.com

https://sas.developpez.com

https://sap.developpez.com

https://www.developpez.net/forums/f1664/systemes/windows/windows-serveur/biztalk-server/

https://talend.developpez.com

https://droit.developpez.com

https://onlyoffice.developpez.com

https://cloud-computing.developpez.com

https://cloud-computing.developpez.com

https://oracle.developpez.com

https://windows-azure.developpez.com

https://ibmcloud.developpez.com

https://intelligence-artificielle.developpez.com

https://intelligence-artificielle.developpez.com

https://alm.developpez.com

https://alm.developpez.com

https://agile.developpez.com

https://merise.developpez.com

https://uml.developpez.com

https://microsoft.developpez.com

https://microsoft.developpez.com

https://dotnet.developpez.com

https://office.developpez.com

https://visualstudio.developpez.com

https://windows.developpez.com

https://dotnet.developpez.com/aspnet/

https://typescript.developpez.com

https://dotnet.developpez.com/csharp/

https://dotnet.developpez.com/vbnet/

https://windows-azure.developpez.com

https://java.developpez.com

https://java.developpez.com

https://javaweb.developpez.com

https://spring.developpez.com

https://android.developpez.com

https://eclipse.developpez.com

https://netbeans.developpez.com

https://web.developpez.com

https://web.developpez.com

https://ajax.developpez.com

https://apache.developpez.com

https://asp.developpez.com

https://css.developpez.com

https://dart.developpez.com

https://flash.developpez.com

https://javascript.developpez.com

https://nodejs.developpez.com

https://php.developpez.com

https://ruby.developpez.com

https://typescript.developpez.com

https://web-semantique.developpez.com

https://webmarketing.developpez.com

https://xhtml.developpez.com

https://edi.developpez.com

https://edi.developpez.com

https://4d.developpez.com

https://delphi.developpez.com

https://eclipse.developpez.com

https://jetbrains.developpez.com

https://labview.developpez.com

https://netbeans.developpez.com

https://matlab.developpez.com

https://scilab.developpez.comEt pour récupérer des images ?

import re

from urllib.request import urlopen, urljoin

def get_page(url, encoding="utf8"):

"""Récupère une page web avec l'encodage spécifié et

retourne le code html de cette page."""

return urlopen(url).read().decode('iso-8859-1')

def extract_image_links(page):

"""Extrait les liens d'images d'une page HTML et retourne une

liste des urls."""

return re.findall('<img[^>]+src=["\'](.*?)["\']', page, re.IGNORECASE)

def main():

"""Point d'entrée du script."""

url = "https://python.developpez.com"

developpez = get_page(url, encoding="iso-8859-1")

links = extract_image_links(developpez)

for link in links:

print(urljoin(url, link))

if __name__ == '__main__':

main()

Même usage de urllib.request et re

https://python.developpez.com/template/images/logo-dvp-h55.png

https://www.developpez.com/images/logos/90x90/jetbrains.png?1611920537

https://www.developpez.com/images/logos/90x90/pycharm.png?1553595023

https://www.developpez.com/images/logos/jetbrains.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/programmation.png

https://www.developpez.com/images/logos/jeux.png

https://www.developpez.com/images/logos/programmation.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/programmation.png

https://www.developpez.com/images/logos/jetbrains.png

https://www.developpez.com/images/logos/programmation.png

https://www.developpez.com/images/logos/securite2.png

https://www.developpez.com/images/logos/jetbrains.png

https://www.developpez.com/images/logos/pycharm.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/jetbrains.png

https://www.developpez.com/images/logos/jetbrains.png

https://www.developpez.com/images/logos/python.png

https://www.developpez.com/images/logos/python.png

En apprendre plus sur urllib.request ?

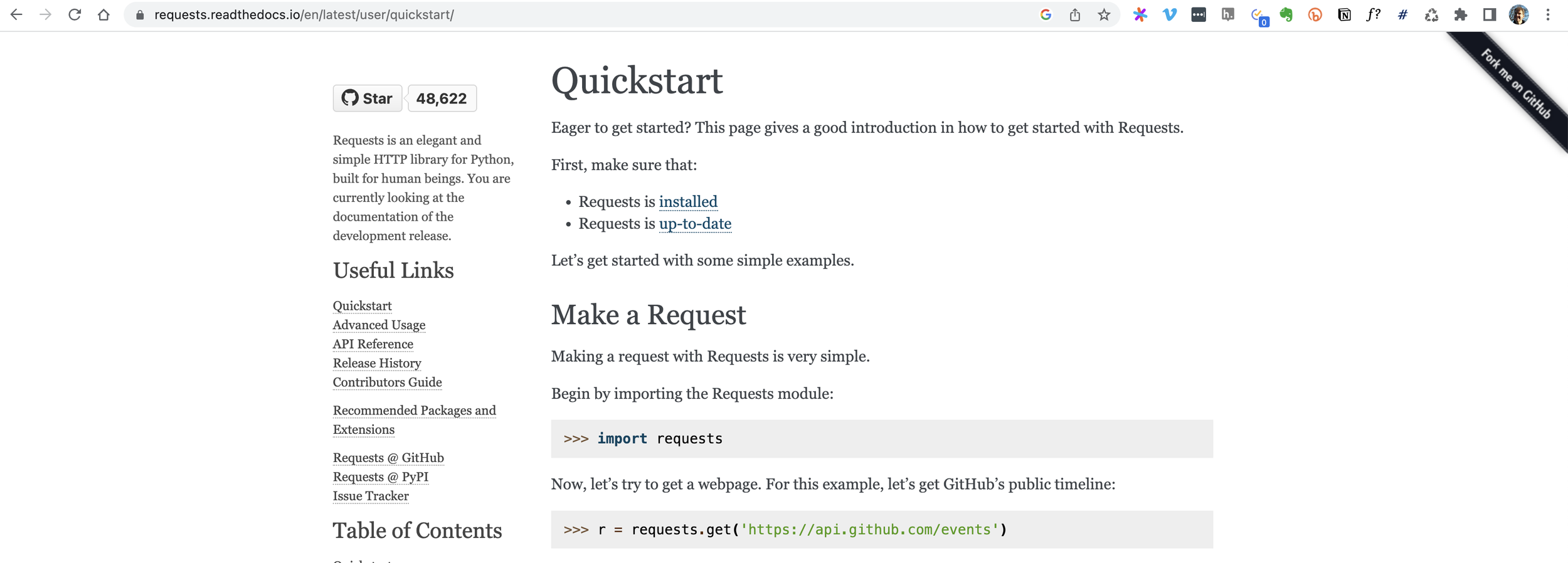

Récupérer des données avec la bibliothèque requests

$ pipenv install requests

Des requêtes HTTP légèrement simplifiées

import requests

def get_page(url, params=None):

"""Récupère une page sur le web et retourne une

request.Response."""

try:

response = requests.get(url, params=params)

response.raise_for_status()

except requests.exceptions.ConnectionError:

...

except requests.exceptions.URLRequired:

...

except requests.exceptions.HTTPError:

...

except requests.exceptions.RequestException:

# Tout le reste

...

else:

return response

response.content ou response.text ?

Lorsque requests se trompe dans le décodage

import requests

response = requests.get("https://www.developpez.com")

print(response.encoding)

print("-" * len(response.encoding))

for header, value in response.headers.items():

print(f"{header}: {value}")ISO-8859-1

----------

Date: Fri, 02 Dec 2022 12:54:23 GMT

Server: Apache/2.4.38 (Debian)

Referrer-Policy: unsafe-url

...

Connection: Keep-Alive

Transfer-Encoding: chunked

Content-Type: text/html, charset=iso-8859-1ici, requests a tout juste: on pourrait utilise requests.text

from pprint import pprint

import requests

response = requests.get("https://books.toscrape.com")

print(response.encoding)

print("-" * len(response.encoding))

for header, value in response.headers.items():

print(f"{header}: {value}")ISO-8859-1

----------

Date: Fri, 02 Dec 2022 13:02:10 GMT

Content-Type: text/html

Content-Length: 51294

Connection: keep-alive

Last-Modified: Thu, 26 May 2022 21:15:15 GMT

ETag: "628fede3-c85e"

Accept-Ranges: bytes

Strict-Transport-Security: max-age=0; includeSubDomains; preloadici, requests se trompe:

ne pas utiliser requests.text !

from bs4 import BeautifulSoup

# La fonction vue précédemment

from .http_tools import get_page

response = get_page("https://developpez.com")

if response:

# ATTENTION: response.content, PAS response.text

soup = BeautifulSoup(response.content, "html.parser")

Utilisation de BeautifulSoup pour détecter automatiquement de l'encodage à partir de response.content

$ pipenv install beautifulsoup4

from urllib import request

from bs4 import UnicodeDammit

import lxml.html

def decode_html(html_bytes):

"""Cherche à décoder des bytes de html à partir des

déclarations dans le code et d'heuristiques."""

converted = UnicodeDammit(html_bytes)

if not converted.unicode_markup:

raise UnicodeDecodeError (

"Echec de la détection de l'encodage. "

"Les jeux de caractères sont %s",

", ".join(converted.tried_encodings)

)

return converted.unicode_markup

response = request.urlopen("https://www.developpez.com")

html_text = decode_html(response.read())

# Permet d'utiliser directement p.ex. lxml qui

# n'est pas aussi doué dans le décodage

html = lxml.html.fromstring(html_text)Utiliser BeautifulSoup QUE pour détecter l'encodage ?

Phase 2

La transformation

- BeautifulSoup

- lxml

- Parsel

- Scrapy

Disable JavaScript

Un web sans javascript

Analyser le html avec BeautifulSoup

from bs4 import BeautifulSoup

...

def get_page(url):

...

return response

def parse_html(response):

"""Analyse et transforme la réponse reçue de la page

interrogée."""

soup = BeautifulSoup(response.content, "html.parser")

...$ pipenv install beautifulsoup4

BeautifulSoup:

find et find_all

soup.find('p', id="first-section") # retourne un objet unique

soup.find_all('p', class_="carousel") # retourne une liste

soup.find('img', src='pythons.jpg')

soup.find('img', src=re.compile('\.gif$'))

soup.find_all('p', text="python")

soup.find_all('p', text=re.compile('python'))

for tag in soup.find_all(re.compile('h')):

print(tag.name)

# affiche html, head, hr, h1, h2, hr

# Tous les éléments qui contiennent "Python"

soup.find_all(True, text=re.compile("python"))

BeautifulSoup:

select et select_one

# Source: documentation de BeautifulSoup

# bit.ly/bs4-select

soup.select("title")

# [<title>The Dormouse's story</title>]

soup.select("p:nth-of-type(3)")

# [<p class="story">...</p>]

soup.select("head > title")

# [<title>The Dormouse's story</title>]

soup.select("p > a")

# [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>,

# <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>,

# <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]

soup.select("p > a:nth-of-type(2)")

# [<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>]

soup.select("p > #link1")

# [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>]

soup.select("body > a")

# []

soup.select('a[href]')

# [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>,

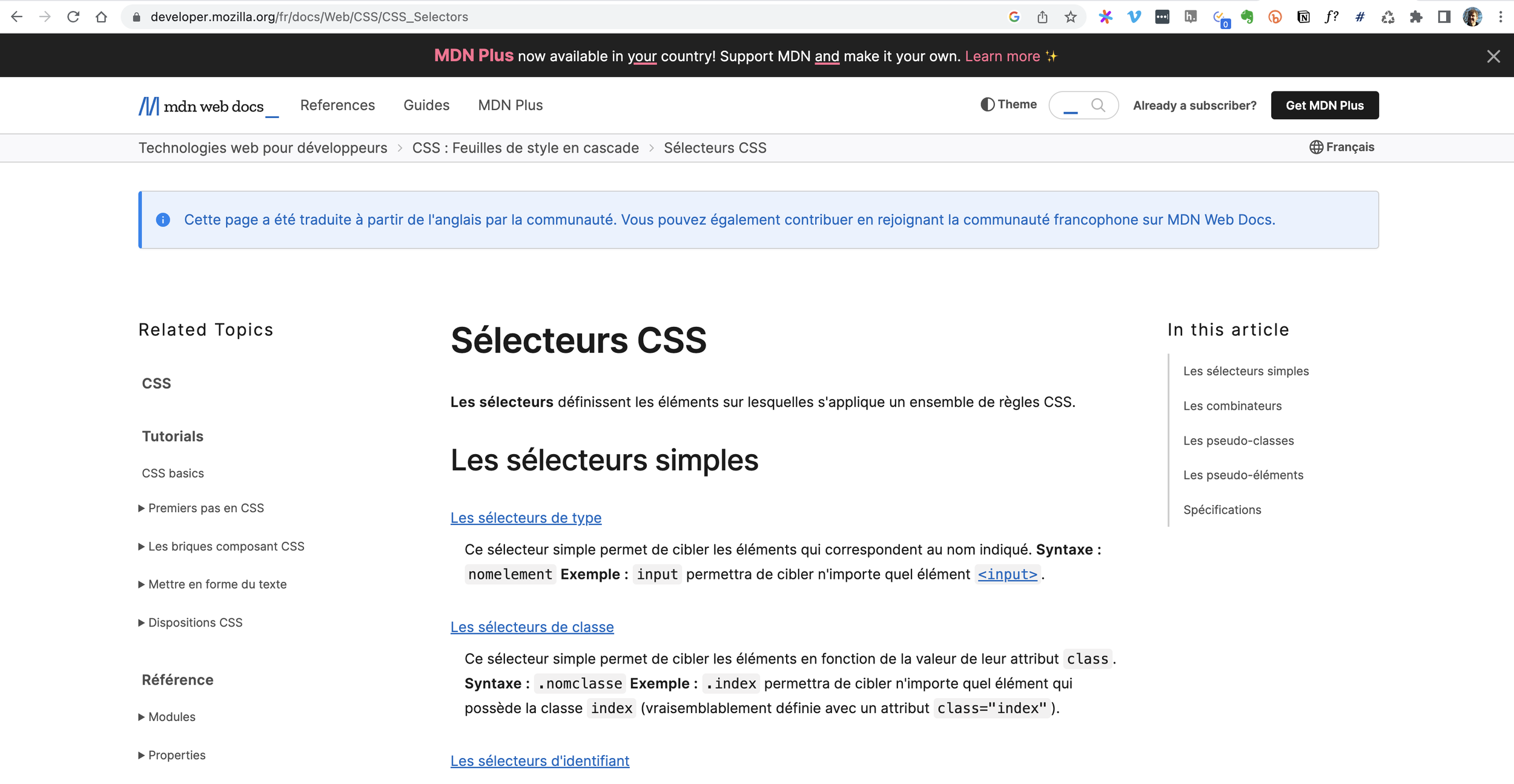

# <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>,

# <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]La puissance des sélecteurs CSS

MDN le guide de survie des selecteurs CSS

https://mzl.la/3VqdZzN

L'arme fatale de la récupération de données

Utiliser XPath pour récupérer ses éléments ?

lxml ou Parsel ou Scrapy

Parsel:

un wrapper de lxml à la base des sélecteurs de Scrapy

$ pipenv install parsel

https://parsel.readthedocs.io/

<!-- https://parsel.readthedocs.org/en/latest/_static/selectors-sample1.html -->

<html>

<head>

<base href='http://example.com/' />

<title>Example website</title>

</head>

<body>

<div id='images'>

<a href='image1.html'>Name: My image 1 <br /><img src='image1_thumb.jpg'/></a>

<a href='image2.html'>Name: My image 2 <br /><img src='image2_thumb.jpg'/></a>

<a href='image3.html'>Name: My image 3 <br /><img src='image3_thumb.jpg'/></a>

<a href='image4.html'>Name: My image 4 <br /><img src='image4_thumb.jpg'/></a>

<a href='image5.html'>Name: My image 5 <br /><img src='image5_thumb.jpg'/></a>

</div>

</body>

</html>Le support CSS et XPath à la base de Scrapy

from bs4 import UnicodeDammit

import requests

from parsel import Selector

def decode_html(html_bytes):

...

url = 'https://parsel.readthedocs.org/en/latest/_static/selectors-sample1.html'

response = requests.get(url)

selector = Selector(text=decode_html(response.content))parsel.Selector offre une interface de haut niveau à XPath et aux sélecteurs css

>>> selector.xpath('//title/text()')

[<Selector xpath='//title/text()' data='Example website'>]

>>> selector.css('title::text')

[<Selector xpath='descendant-or-self::title/text()' data='Example website'>]

>>> selector.xpath('//title/text()').getall()

['Example website']

>>> selector.xpath('//title/text()').get()

'Example website'

>>> selector.css('title::text').get()

'Example website'

>>> selector.css('img').xpath('@src').getall()

['image1_thumb.jpg',

'image2_thumb.jpg',

'image3_thumb.jpg',

'image4_thumb.jpg',

'image5_thumb.jpg']

>>> selector.xpath('//div[@id="images"]/a/text()').get()

'Name: My image 1 '

>>> selector.xpath('//div[@id="not-exists"]/text()').get() is None

True

>>> [img.attrib['src'] for img in selector.css('img')]

['image1_thumb.jpg',

'image2_thumb.jpg',

'image3_thumb.jpg',

'image4_thumb.jpg',

'image5_thumb.jpg']

>>> selector.css('img').attrib['src']

'image1_thumb.jpg'

>>> selector.xpath('//base/@href').get()

'http://example.com/'

>>> selector.css('base::attr(href)').get()

'http://example.com/'

>>> selector.css('base').attrib['href']

'http://example.com/'

>>> selector.xpath('//a[contains(@href, "image")]/@href').getall()

['image1.html',

'image2.html',

'image3.html',

'image4.html',

'image5.html']

>>> selector.css('a[href*=image]::attr(href)').getall()

['image1.html',

'image2.html',

'image3.html',

'image4.html',

'image5.html']

>>> selector.xpath('//a[contains(@href, "image")]/img/@src').getall()

['image1_thumb.jpg',

'image2_thumb.jpg',

'image3_thumb.jpg',

'image4_thumb.jpg',

'image5_thumb.jpg']

>>> selector.css('a[href*=image] img::attr(src)').getall()

['image1_thumb.jpg',

'image2_thumb.jpg',

'image3_thumb.jpg',

'image4_thumb.jpg',

'image5_thumb.jpg']

Le mélange des sélecteurs css et de XPath offre des possibilités très expressives

Investissez dans XPath !

Utile avec lxml, Parsel, Scrapy et même avec Selenium pour vos tests Django :)

Scrapy: un framework complet

Le Django de la récupération automatique de données

Pour les petits scripts rapides

- urllib.request et Parsel

Pour les récupérations de données plus avancées

- Scrapy

- Scrapy-playwright (si le site contient du js)

- Scrapy-selenium (si le site contient du js)

Quand utiliser quoi ?

$ scrapy startproject monprojet

$ tree monprojet # windows: tree /F

monprojet

├── monprojet

│ ├── __init__.py

│ ├── items.py

│ ├── middlewares.py

│ ├── pipelines.py

│ ├── settings.py

│ └── spiders

│ └── __init__.py

└── scrapy.cfg

2 directories, 7 files$ pipenv install scrapy

BOT_NAME = 'monprojet'

SPIDER_MODULES = ['monprojet.spiders']

NEWSPIDER_MODULE = 'monprojet.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'monprojet (+https://placepython.fr)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

CONCURRENT_REQUESTS = 1monprojet/settings.py

$ scrapy shell 'https://quotes.toscrape.com/page/1/'

...

>>> response.css('title')

[<Selector xpath='descendant-or-self::title' data='<title>Quotes to Scrape</title>'>]

>>> response.css('title::text').getall()

['Quotes to Scrape']

>>> response.css('noelement')[0].get()

Traceback (most recent call last):

...

IndexError: list index out of range

>>> response.xpath('//title')

[<Selector xpath='//title' data='<title>Quotes to Scrape</title>'>]

>>> response.xpath('//title/text()').get()

'Quotes to Scrape'Se faire la main à l'aide du shell de Scrapy

On se retrouve avec les sélecteurs Parsel

les sélecteurs de response sont un fin wrapper

$ scrapy startproject monprojet

$ tree monprojet # windows: tree /F

monprojet

├── monprojet

│ ├── __init__.py

│ ├── items.py

│ ├── middlewares.py

│ ├── pipelines.py

│ ├── settings.py

│ └── spiders

│ └── __init__.py

└── scrapy.cfg

2 directories, 7 filesCréer des scripts de récupérations des données avec les spiders

import scrapy

class QuotesSpider(scrapy.Spider):

"""Spider responsable de récupérer des citations sur

quotes.toscrape.com. Source: https://docs.scrapy.org/"""

name = "quotes"

def start_requests(self):

urls = [

'https://quotes.toscrape.com/page/1/',

'https://quotes.toscrape.com/page/2/',

]

for url in urls:

yield scrapy.Request(url=url, callback=self.parse)

# Alternativement, au lieu de définir start_requests

start_urls = [

'https://quotes.toscrape.com/page/1/',

'https://quotes.toscrape.com/page/2/',

]

def parse(self, response):

# Faire quelque chose avec la réponse

...

Créer notre premier Spider pour scraper des données avec Scrapy

monprojet/spiders/quotes_spider.py

class QuotesSpider(scrapy.Spider):

...

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').get(),

'author': quote.css('small.author::text').get(),

'tags': quote.css('div.tags a.tag::text').getall(),

}Extraire des données dans notre spider

$ scrapy crawl quotes -O quotes.json

$ scrapy crawl quotes -o quotes.json

$ scrapy crawl quotes -o quotes.jsonl # ou .jl

$ scrapy crawl quotes -o quotes.csv

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'https://quotes.toscrape.com/page/1/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').get(),

'author': quote.css('small.author::text').get(),

'tags': quote.css('div.tags a.tag::text').getall(),

}

next_page = response.css('li.next a::attr(href)').get()

if next_page is not None:

next_page = response.urljoin(next_page)

yield scrapy.Request(next_page, callback=self.parse)Suivre des liens avec notre spider

scrapy.Request ne supporte pas les liens internes

class QuotesSpider(scrapy.Spider):

...

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').get(),

'author': quote.css('span small::text').get(),

'tags': quote.css('div.tags a.tag::text').getall(),

}

next_page = response.css('li.next a').get()

if next_page is not None:

yield response.follow(next_page, callback=self.parse)Simplification avec response.follow()

Pas nécessaire d'extraire le href ni d'utiliser urljoin

Stocker ses données en base ?

Créer des pipelines !

Hors scope pour ce WePynaire :)

Que faire si notre page contient des éléments chargés dynamiquement pas Javascript ?

- Selenium

- selenium

- scrapy-playwright

- Playwright

- pytest-playwright

- scrapy-playwright

- Splash

- scrapy-spash

- Puppeteer

- pyppeteer (maintenu ?)

Les options

avec javascript

Scrapy-selenium

monprojet

├── chromedriver

├── geckodriver

├── monprojet

│ ├── __init__.py

│ ├── items.py

│ ├── middlewares.py

│ ├── pipelines.py

│ ├── settings.py

│ └── spiders

│ └── __init__.py

└── scrapy.cfg$ pipenv install scrapy-selenium

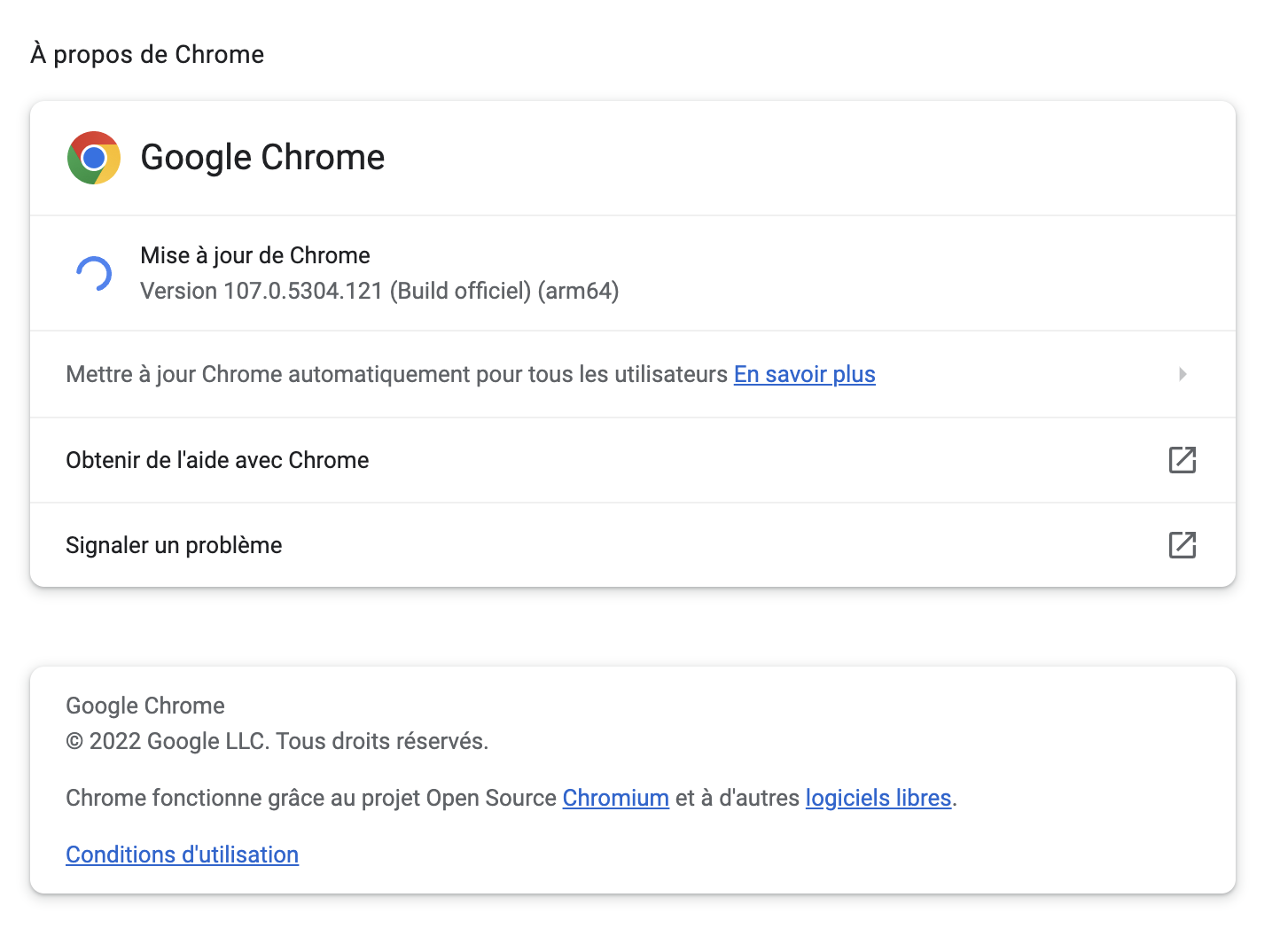

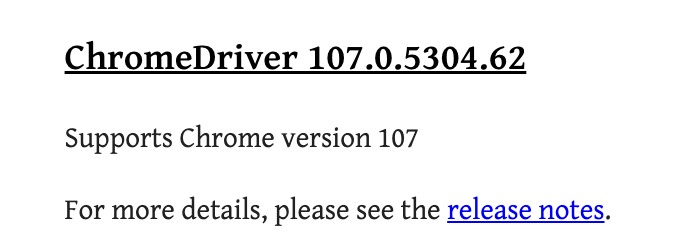

$ pipenv install scrapy-selenium

+ les drivers de navigateurs

from shutil import which

# SELENIUM_DRIVER_NAME = 'firefox'

SELENIUM_DRIVER_NAME = 'chrome'

# SELENIUM_DRIVER_EXECUTABLE_PATH = which('geckodriver')

SELENIUM_DRIVER_EXECUTABLE_PATH = which('chromedriver')

SELENIUM_DRIVER_ARGUMENTS=['--headless']

DOWNLOADER_MIDDLEWARES = {

'scrapy_selenium.SeleniumMiddleware': 800

}Configuration

import scrapy

from scrapy_selenium import SeleniumRequest

class QuotesSpider(scrapy.Spider):

name = 'quotes'

def start_requests(self):

url = 'https://quotes.toscrape.com/js/'

yield SeleniumRequest(

url=url,

callback=self.parse,

wait_time=10

)

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').get(),

'author': quote.css('span small::text').get(),

'tags': quote.css('div.tags a.tag::text').getall(),

}

Mise à jour de notre spider

import scrapy

from scrapy_selenium import SeleniumRequest

class QuotesSpider(scrapy.Spider):

name = 'quotes'

def start_requests(self):

url = 'https://quotes.toscrape.com/js/'

yield SeleniumRequest(

url=url,

callback=self.parse,

wait_time=10

)

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').get(),

'author': quote.css('span small::text').get(),

'tags': quote.css('div.tags a.tag::text').getall(),

}

Mise à jour de notre spider

Scrapy-playwright

# settings.py

playwright_handler = (

"scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler"

)

DOWNLOAD_HANDLERS = {

"http": playwright_handler,

"https": playwright_handler,

}

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"$ pipenv install scrapy-playwright

$ playwright install

import scrapy

class QuotesSpider(scrapy.Spider):

name = 'quotes'

def start_requests(self):

url = 'https://quotes.toscrape.com/js/'

yield scrapy.Request(url, meta={'playwright': True})

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').get(),

'author': quote.css('span small::text').get(),

'tags': quote.css('div.tags a.tag::text').getall(),

}