By: Matt Bajor

- Infrastructure Automation Engineer on 10ft Pole

- Rally Software, Boulder CO

- Originally from New Hampshire

Currently living in Denver

- IT Helpdesk -> SA -> Webmaster -> DevOps -> Autom8

Intro to Unikernels

The first part of the presentation will be an introduction to unikernels and their benefits, a comparison to our existing 'stacks', as well as a brief overview of the current ecosystem.

Deploying LING to EC2

The second part of the presentation will be done in live demo mode. We will be building, packaging, and deploying an Erlang application (an overly simple webserver) as a LING unikernel on EC2. This will involve creating an AMI and provisioning an instance.

TL;DR:

This presentation consists of two parts:

Part 1: Intro to Unikernels

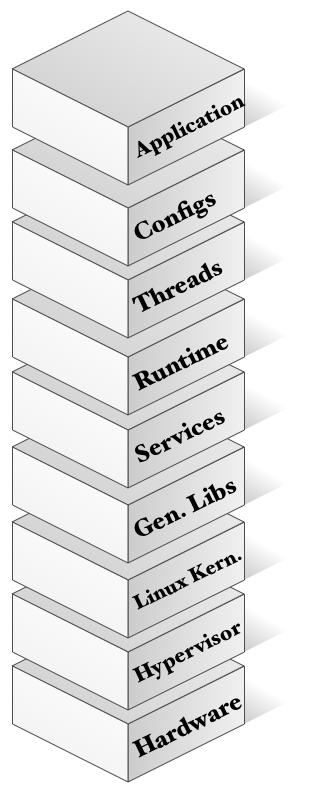

What do our existing environments look like?

Our current stack is:

- Designed to support a wide array of hardware, even floppy drives: `brw-rw---- 1 root floppy 2, 0 May 1 15:19 /dev/fd0`

- Designed to support a vast array of applications (POSIX).

- Designed to maintain backwards compatibility with existing, iron-based systems.

- Sort of a forklift from the '90s (move it to the cloud, Jim!).

- Essentially thicker than it needs to be.

- Ripe for a refactor!

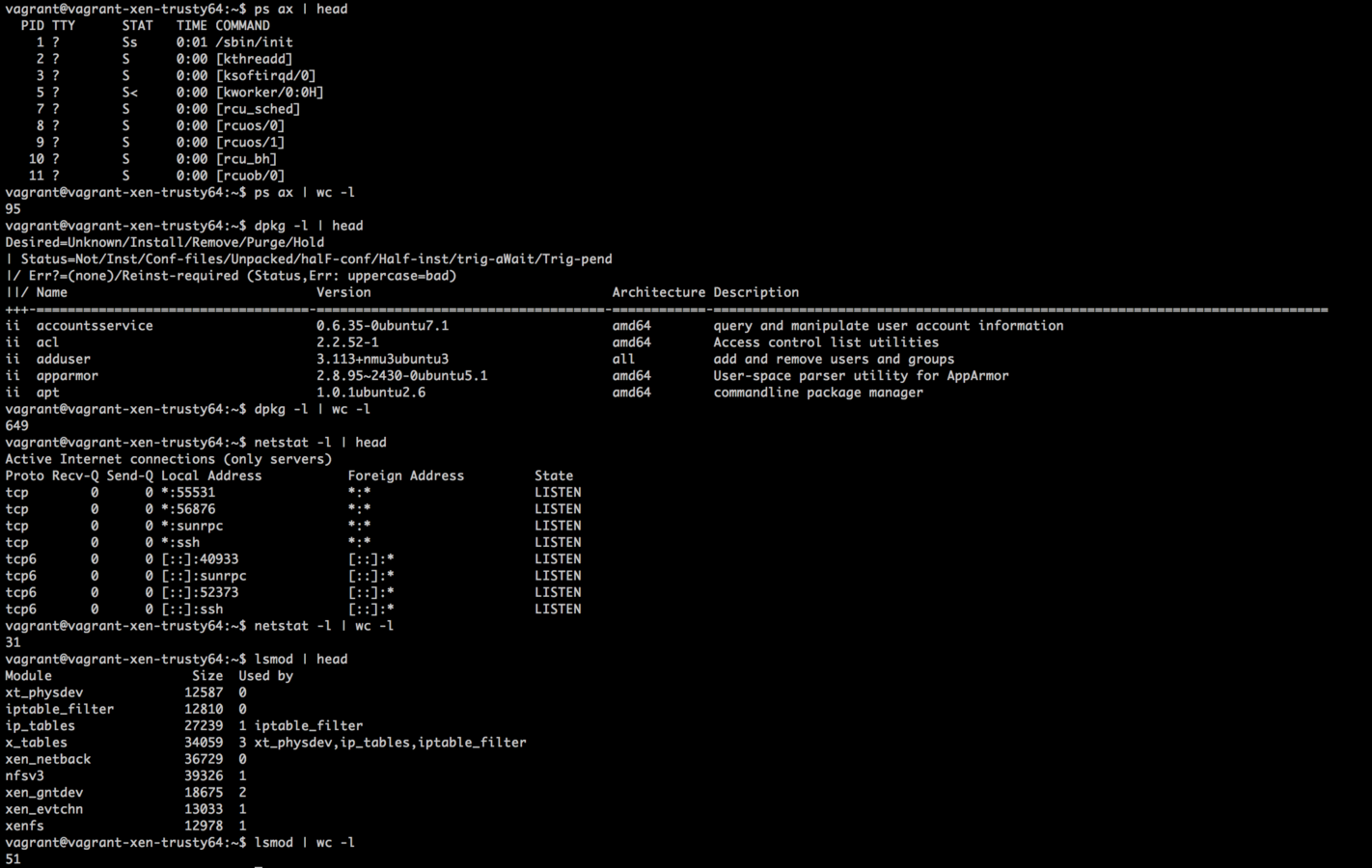

Look at all of the things!

But we get all that stuff for free, right?

(Thanks RMS!)

No.

- Staffing and running ops teams is tricky.

- Ops is required because:

  - Linux is a black box to a lot of devs.

  - Linux is very generalized, with support for lots of stuff, much of which is no longer needed.

  - There are tons of things running that a feature team does not know about, care about, or use explicitly.

  - Maintaining all of these things takes a lot of (risky) work.

- Security teams are also expensive:

  - Auditing 3rd-party code is a daunting task.

  - Let's only use code (explicitly) if we need it.

  - Limit exposure to vulnerabilities:

    - no shell == no Shellshock

    - no libopenssl == no Heartbleed
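The "no shell == no Shellshock" argument is really a set intersection: a vulnerability only applies if the component it lives in actually ships in the image. A minimal Python sketch, assuming a hand-written vulnerability-to-component table and two made-up image manifests (none of these names come from a real scanner):

```python
from __future__ import annotations

# Hypothetical mapping from a vulnerability to the component it lives in.
# Illustrative only; a real audit would query a CVE database or scanner.
VULNERABILITIES = {
    "Shellshock": "bash",
    "Heartbleed": "openssl",
}


def exposed_to(manifest: set[str]) -> set[str]:
    """Return the vulnerabilities whose affected component ships in the image."""
    return {vuln for vuln, component in VULNERABILITIES.items() if component in manifest}


# Hypothetical manifests: a general-purpose Linux VM vs. a LING unikernel.
linux_vm = {"kernel", "bash", "openssl", "sshd", "python", "kmod", "erlang-vm", "app"}
ling_unikernel = {"ling-runtime", "app"}

if __name__ == "__main__":
    print(sorted(exposed_to(linux_vm)))        # ['Heartbleed', 'Shellshock']
    print(sorted(exposed_to(ling_unikernel)))  # []
```

Nothing clever is happening here: the smaller image is safer simply because fewer components can match.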

So what do we really need?

The things we are actually using:

- Virtualized Disk I/O Drivers.

- Virtualized Network I/O Drivers.

- Virtualized CPU / RAM Access.

- The runtime env (Erlang VM / JVM / HHVM).

- Explicitly included libraries that provide functionality our app requires.

- External, network-based services.

- Our application.

Not:

- Tape drive drivers

- Python

- libpango

- SSH (we're immutable, right?)

- kmod

- **Multi-user support**

  - It's only the app running, bro!

Keeping these things around does cost $$$.

So let's only deploy and maintain what we are actively using.

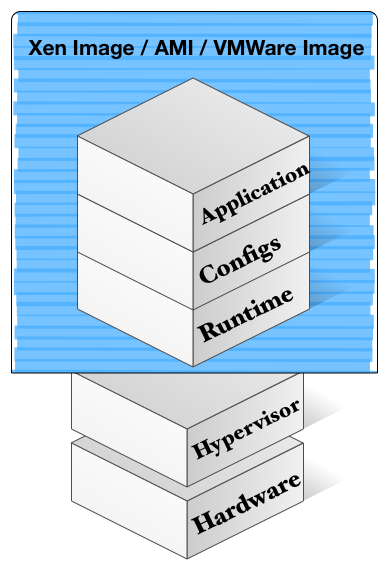

What is a unikernel?

A unikernel is...

An application compiled with everything it requires to operate including:

- application code

- required app libraries

- configuration

in addition to

- the runtime (JVM / BEAM)

- required system libraries*

- a RAMdisk and PV kernel

and packaged as an artifact that can be run against a hypervisor (H/V).

*In the form of a Library OS

More specifically, an Erlang (LING) unikernel contains:

- Your application's compiled Erlang bytecode.

- Your application's compiled dependencies.

- A version of the Erlang runtime (LING).

- Any explicitly included parts of Erlang's stdlib, such as:

  - mnesia

  - inets

  - ssl

- A block device if the app requires it (mnesia).*

- Any configuration information the app needs.

*goofs is a flat (think S3), Plan 9-based filesystem that can be used for non-persistent block storage, e.g. mnesia tables.
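Working out which parts of the stdlib go into the image is a transitive-closure problem: start from what the app declares and follow dependencies until nothing new shows up. A minimal Python sketch, assuming a simplified, hand-written dependency table and a hypothetical demo app called `webserver`; the edges are illustrative rather than read from OTP's `.app` files, and this is not how `railing` itself is implemented:

```python
from __future__ import annotations

from collections import deque

# Simplified, illustrative dependency table (not copied from OTP .app files).
DEPENDS_ON = {
    "webserver": ["inets", "mnesia"],  # hypothetical demo app
    "inets": ["kernel", "stdlib"],
    "mnesia": ["kernel", "stdlib"],
    "ssl": ["crypto", "public_key", "kernel", "stdlib"],
    "public_key": ["crypto", "kernel", "stdlib"],
    "crypto": ["kernel", "stdlib"],
    "stdlib": ["kernel"],
    "kernel": [],
}


def required_apps(root: str) -> set[str]:
    """Breadth-first walk returning every application reachable from root."""
    seen = {root}
    queue = deque([root])
    while queue:
        app = queue.popleft()
        for dep in DEPENDS_ON.get(app, []):
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return seen


if __name__ == "__main__":
    needed = required_apps("webserver")
    print(sorted(needed))                    # ['inets', 'kernel', 'mnesia', 'stdlib', 'webserver']
    print(sorted(set(DEPENDS_ON) - needed))  # ['crypto', 'public_key', 'ssl']
```

Because the demo app never asks for `ssl`, nothing from the `ssl`/`crypto` chain ends up in the image.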

and then...

- Is packaged using LING's `railing` tool:

  - The output is a Xen image.

  - This artifact can be run against an internal Xen server, but we're going to package it further.

- Is packaged using Amazon's EC2 tools:

  - This process outputs an (environment-specific) AMI.

- Is deployed to EC2:

  - Manually, for now.

There are two main types of unikernels:

- Purpose built (like an ASIC)

- Generalized (POSIX support)

Specialized & purpose built

- These true unikernels were built to use every modern feature they can, at the expense of backwards compatibility. They are leaner, meaner, and (in general) more performant than the second type of unikernel.

- Examples include:

  - LING

  - HaLVM

  - MirageOS

  - Clive

- MirageOS's OCaml compiler will even optimize your code based on the instance's configuration!

Generalized 'fat' unikernels

- These unikernels are much fatter and target running single process instances of unmodified Linux applications.

- Examples include:

  - OSv

  - Rump Kernels

- They are not true 'unikernels', since they support more than just the specific compiled application being deployed.

I like the specialized ones

We have been moving our 1990s workloads around, from mainframes to servers to clouds to containers, without changing the architecture much. Now that we have something that works (cloud-like platforms), let's invest in making applications that fully use the potential of our fancy-ass platforms.

But how is that possible?

Paravirtualization

Paravirtualization (the type of virtualization Xen provides) exposes the hardware to guests through simplified virtual devices whose interface stays the same no matter what physical hardware sits underneath.

This means guests no longer need drivers for specific hardware; they only need to support the virtualized hardware interfaces, which remain consistent.
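The payoff is that guest code is written once, against one small virtual-device interface, instead of against every physical NIC model. A minimal Python sketch of that idea; `VirtualNetDevice`, `LoopbackNetDevice` and `echo_once` are hypothetical names for illustration, not Xen's actual netfront/netback API:

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from collections import deque


class VirtualNetDevice(ABC):
    """The single (hypothetical) interface guest code is written against."""

    @abstractmethod
    def send(self, frame: bytes) -> None:
        """Hand a frame to the hypervisor."""

    @abstractmethod
    def receive(self) -> bytes | None:
        """Return the next pending frame, or None if there is none."""


class LoopbackNetDevice(VirtualNetDevice):
    """Toy backend that echoes frames back. It stands in for whatever the
    hypervisor does with the real NIC, which the guest never sees."""

    def __init__(self) -> None:
        self._frames: deque[bytes] = deque()

    def send(self, frame: bytes) -> None:
        self._frames.append(frame)

    def receive(self) -> bytes | None:
        return self._frames.popleft() if self._frames else None


def echo_once(dev: VirtualNetDevice) -> bytes | None:
    """Guest 'application' logic: it only knows the virtual interface."""
    frame = dev.receive()
    if frame is not None:
        dev.send(frame)
    return frame
```

Swapping the backend changes nothing in `echo_once`, which is the property that lets a unikernel drop hardware-specific drivers.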

Network Interfaces

We no longer need to co-locate applications on the same hardware for them to communicate. Almost all inter-process communication in an SOA happens over the network, allowing each part of the architecture to run in isolation.

...oh yeah, and dropping backwards compatibility

In order to reap the benefits that a true unikernel architecture offers, the application must be designed (or redesigned) to run in such an environment. This lack of general POSIX support is why a true unikernel approach can be so much leaner and less complex than existing Linux-based stacks.

Luckily, with languages like Java and Erlang, most of this can be done at the runtime level, requiring minimal application code changes, provided your app already supports running in an ephemeral SOA environment.

Just like your app!

Specifically, why is this better?

In three words:

- Efficiency

- Security

- Complexity

Efficiency

- Images go from GB to MB (or even KB!) reducing the overall storage footprint of a given application.

- We no longer need a full OS stack for each app making the images very, very small.

- Since there is no unnecessary duplication of services, the RAM and CPU footprint of a running app is significantly reduced as well.

- With startup times in the hundreds of milliseconds, it is possible to run a zero-footprint cloud. This means that no services are running when there are no requests to be served. When a request is received, a unikernel is spun up to handle it, hangs out for a bit to handle any others that may come in, and then shuts down within a few seconds.
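The scale-to-zero behavior can be sketched as a small simulation: boot on a request when nothing is running, stay up while requests keep arriving, and shut down after an idle timeout. A minimal Python sketch; the 0.3 s boot time and 5 s idle timeout are assumed values picked to match "hundreds of milliseconds" and "a few seconds", not measurements, and it assumes one instance can absorb every request while it is up:

```python
from __future__ import annotations

BOOT_SECONDS = 0.3  # assumed: "startup times in the hundreds of milliseconds"
IDLE_TIMEOUT = 5.0  # assumed: "shuts down within a few seconds"


def simulate(arrivals: list[float]) -> tuple[int, float]:
    """Return (cold boots, total seconds an instance was running) for the
    given request arrival times, with at most one instance at a time."""
    boots = 0
    running = 0.0
    started_at: float | None = None
    ready_at = 0.0
    shutdown_at = 0.0

    for t in sorted(arrivals):
        if started_at is not None and t > shutdown_at:
            # The instance sat idle long enough to shut down before t.
            running += shutdown_at - started_at
            started_at = None
        if started_at is None:
            # Cold start: boot a fresh unikernel for this request.
            boots += 1
            started_at = t
            ready_at = t + BOOT_SECONDS
        # The request is served once the instance is ready; the idle timer restarts then.
        shutdown_at = max(t, ready_at) + IDLE_TIMEOUT

    if started_at is not None:
        running += shutdown_at - started_at
    return boots, running
```

For example, `simulate([0.0, 1.0, 2.0, 60.0])` boots twice and keeps an instance up for about 12.3 of the roughly 65 seconds covered, where an always-on server would run the whole time.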

Security

- The attack surface of a running application is significantly reduced when you remove the operating system. An attacker must now rely on attack vectors in your application code and included libraries instead of the OS, which is far more common across deployments.

- We are now out of the script kiddie land and into the land of professional hackers.

- The security patch problem becomes much smaller:

  - Not every single app server is running Bash, etc.

- There are no tools on the running instance to help in the attack. An attacker must bring along their own compiler, libraries to support it, and a shell to run it.

- Type safety/checking makes it even more secure (OCaml).

Complexity

- Removing the OS removes the bulk of complexity from a system.

- The majority of the remaining complexity is moved to build/compile time where there is no risk of an outage.

- The team that built the app knows its exact requirements and how to meet them:

  - No more black-box Linux stack.

- All development teams can now code against the same interface (virtualized drivers) creating re-usable libraries that can be shared across teams.

It's also the next logical step from containerization

Docker and other containerization techniques were a drastic improvement in efficiency compared to traditional VMs, but they are not the end-all be-all. Currently, the shared nature of containers and their reliance on shared system libraries and kernels creates a few problems. Unikernels attempt to address the major faults of containers. Also, there's security...

What are some of the challenges when adopting unikernels?

Feature teams have more responsibility..

- No OS maintained by another team.

- Deeper knowledge of what libraries are included and how to patch them.

- More ownership through all environments.

- On-call rotations.

..so give them a DevOps type

- Easier to staff DevOps on a feature team.

- More fun, less stress, working as a team to deliver a feature from dev to prod.

- Transfers opsy type knowledge to the rest of the team.

- Builds tools to support the feature/app.

- Is part of a larger operations group.*

Configuration management is way different...

Current best practices* dictate building a single artifact that is then moved through environments that have different configurations. Once configuration is compiled into the binary, this flow no longer works.

Luckily, this configuration check happens in the build phase, which is much less risky than changing production systems!

...but much more powerful

The major benefit comes from using a compiler that supports compiling in configuration information (like OCaml): the compiler sees exactly what is being set up, checks that it can compile, and removes anything left unconfigured, further reducing the code actually deployed and thus the security footprint. This also creates a unique footprint for each instance. When was the last time Puppet removed packages that were not in use?

* 12 Factor Apps
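A rough Python analogy of compiling the configuration in: the build validates the config up front and only bakes in the modules the config actually turns on, so a bad config fails the build rather than production, and anything left unconfigured never ships. Everything here (`FEATURES`, the module names, `build_image`, `BuildError`) is hypothetical; it is not how MirageOS or LING do it:

```python
from __future__ import annotations

# Hypothetical feature table: config key -> modules that feature needs.
FEATURES = {
    "http_port": ["http_listener"],
    "tls_cert": ["tls", "x509"],
    "db_path": ["storage"],
}
CORE_MODULES = ["app_main"]
REQUIRED_KEYS = {"http_port"}


class BuildError(Exception):
    """Raised at build time, long before anything reaches production."""


def build_image(config: dict[str, object]) -> list[str]:
    """Return the sorted list of modules to bake into the image for this config."""
    unknown = set(config) - set(FEATURES)
    if unknown:
        raise BuildError(f"unknown config keys: {sorted(unknown)}")
    missing = REQUIRED_KEYS - set(config)
    if missing:
        raise BuildError(f"missing required config: {sorted(missing)}")
    modules = set(CORE_MODULES)
    for key in config:
        modules.update(FEATURES[key])  # only configured features are included
    return sorted(modules)
```

`build_image({"http_port": 8080})` yields `['app_main', 'http_listener']`: TLS and storage code is simply absent. Since the module list depends on the config, each environment gets its own artifact, which is why the single-artifact flow stops working.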

Cya, backwards compatibility!

This next evolution in architecture will not be a fork-lift. We have done that from mainframes to iron to the cloud. It is now time to re-architect our applications to make better and more efficient use of our limited resources.

Immutable infra is different.

Adopting the mentality that a running system will never change, only be replaced, is a hard shift in thinking. It requires working out where the app needs persistence and providing it, which can be more difficult than just writing to local disk.
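In code, the mindset amounts to treating a running instance as a frozen value: a change never edits it in place, it produces a new instance and the old one is retired. A minimal Python sketch using a frozen dataclass; `Instance`, `launch`, `roll_out` and the image names are hypothetical:

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass, replace

_next_id = itertools.count(1)  # hypothetical instance-id generator


@dataclass(frozen=True)
class Instance:
    instance_id: int
    image: str           # e.g. the AMI the instance was launched from
    config_version: int


def launch(image: str, config_version: int) -> Instance:
    return Instance(next(_next_id), image, config_version)


def roll_out(fleet: list[Instance], new_image: str) -> tuple[list[Instance], list[Instance]]:
    """Build a replacement for every instance from new_image.
    Returns (new fleet, old instances to terminate); nothing is edited in place."""
    new_fleet = [replace(old, instance_id=next(_next_id), image=new_image) for old in fleet]
    return new_fleet, list(fleet)
```

Anything that has to survive a roll-out (mnesia tables, uploads) therefore has to live outside the instance, which is the persistence question raised here.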

...but if we can do it

- Development teams will have a much better understanding of the full application they are developing.

- Operations teams can focus on tool building and escalations.

- Business units will have a better understanding of the real cost of software development.

- Companies can horizontally scale feature teams as each team is self-sustainable.

- Infrastructure Engineering / Ops will not be the blocking resource!

What's out there right now?

(In alphabetical order)

- Name: ClickOS

- Description: A MiniOS-based unikernel that targets networking use cases, specifically running the Click modular router. The tiny, fast virtual machines created by this project boot in about 30 milliseconds and can drive 10 Gb/s NICs for almost all packet sizes.

- Homepage: http://cnp.neclab.eu/clickos/

- Project URL: https://github.com/cnplab/clickos

- Language support: Click (Network Devices)

- Hypervisor support: Xen

- License: BSD 3-Clause (http://cnp.neclab.eu/license)

- Name: Clive

- Description: Clive is a research project that takes a modified Go compiler and couples it with a runtime kernel that will allow the final artifact to be run against a hypervisor or bare metal blade. This is still certainly a research project, but it is a very, very interesting project.

- Homepage: http://lsub.org/ls/clive.html

- Project URL: git://git.lsub.org/go.git

- Language support: Go

- Hypervisor support: Unclear, but it looks like they are targeting bare metal (which suggests it should also run under KVM and Xen)

- License: MIT (http://opensource.org/licenses/MIT)

- Name: Microsoft Drawbridge

- Description: Drawbridge is a research prototype of a new form of virtualization for Microsoft application sandboxing. Drawbridge combines two core technologies: First, a picoprocess, which is a process-based isolation container with a minimal kernel API surface. Second, a library OS, which is a version of Windows enlightened to run efficiently within a picoprocess.

- Homepage: http://research.microsoft.com/en-us/projects/drawbridge/

- Project URL: No code available at this time

- Language support: Windoze

- Hypervisor support: Windoze

- License: No license specified, but probably not BSD

- Name: HaLVM - The Haskell Lightweight Virtual Machine

- Description: The Haskell Lightweight Virtual Machine (HaLVM) is a port of the Glasgow Haskell Compiler toolsuite that enables developers to write high-level, lightweight virtual machines that can run directly on the Xen hypervisor. When connected with the appropriate libraries, the HaLVM can, for example, operate as a network appliance.

- Homepage: https://galois.com/project/halvm/

- Project URL: https://github.com/galoisinc/halvm

- Language support: Haskell

- Hypervisor support: Xen

- License: BSD (https://github.com/GaloisInc/HaLVM/blob/master/LICENSE)

- Name: LING (aka Erlang on Xen)

- Description: LING is an Erlang unikernel runtime. It is more or less compatible with BEAM and almost as performant, but its main feature is that it runs directly against Xen's paravirtualized drivers, allowing you to run standard Erlang/OTP code as a unikernel directly on a hypervisor such as Xen or in a public cloud such as Amazon's EC2.

- Homepage: http://erlangonxen.org/

- Project URL: https://github.com/cloudozer/ling

- Language support: Erlang

- Hypervisor support: Xen, EC2

- License: Sleepycat (a copyleft license) https://github.com/cloudozer/ling/blob/master/LICENSE

- Name: MiniOS

- Description: MiniOS is a kernel provided by Xen that is meant to be included with other projects to enable them to run on the Xen hypervisor. Two projects based on MiniOS include ClickOS and MirageOS.

- Homepage: http://wiki.xen.org/wiki/Mini-OS

- Project URL: http://xenbits.xen.org/gitweb/?p=mini-os.git;a=summary

- Language support: C

- Hypervisor support: Xen

- License: No declared license

- Name: MirageOS

- Description: Mirage OS is a library operating system that constructs unikernels for secure, high-performance network applications across a variety of cloud computing and mobile platforms. Code can be developed on a normal OS such as Linux or MacOS X, and then compiled into a fully-standalone, specialised unikernel that runs under the Xen hypervisor.

- Homepage: http://openmirage.org/

- Project URL: https://github.com/mirage/mirage-platform

- Language support: OCaml

- Hypervisor support: Xen

- License: ISC, LGPLv2

- Name: OSv

- Description: OSv is a new open-source operating system for virtual machines. OSv was designed from the ground up to execute a single application on top of a hypervisor. OSv has new APIs for new applications, but also runs unmodified Linux applications (most of Linux's ABI is supported) and in particular can run an unmodified JVM, and applications built on top of one.

- Homepage: http://osv.io/

- Project URL: https://github.com/cloudius-systems/osv

- Language support: Lots including the JVM (OSv runs unmodified Linux applications)

- Hypervisor support: EC2, GCE

- License: 3-clause BSD

- Name: Rump Kernels

- Description: Rump kernels provide free, portable, componentized, kernel quality drivers such as file systems, POSIX system call handlers, PCI device drivers, a SCSI protocol stack, virtio and a TCP/IP stack. These drivers may be integrated into existing systems, or run as standalone unikernels on cloud hypervisors and embedded systems.

- Homepage: http://rumpkernel.org/

- Project URL: https://github.com/rumpkernel/wiki/wiki/Repo

- Language support: POSIX baby!

- Hypervisor support: Xen, bare metal, KVM, Genode OS Framework

- License: 2-clause BSD

..and many more to come!

Part 2: Building and deploying a LING unikernel to EC2

What are we going to be doing exactly?

- Write (paste) an Erlang app in IntelliJ.

- Compile and run the app in IntelliJ to test it.

- Package the app as a Xen image with LING's railing.

- Run the Xen image on Xen in VirtualBox to test.

- Package the Xen image as an AMI.

- Upload and register the AMI.

- Deploy an EC2 instance from AMI.

- ...

- Profit.
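The "register the AMI" and "deploy an instance" steps could be scripted with boto3's EC2 client instead of the GUI. A minimal sketch, assuming the LING Xen image has already been written to an EBS volume and snapshotted, and that the AMI boots through a PV-GRUB kernel image (AKI); the snapshot id, AKI id, root device name, architecture and instance type are placeholders or assumptions to check against the AWS docs for your region:

```python
from __future__ import annotations

from typing import Any, Protocol


class EC2Client(Protocol):
    """The two methods of a boto3 EC2 client (boto3.client("ec2")) used here."""

    def register_image(self, **kwargs: Any) -> dict[str, Any]: ...

    def run_instances(self, **kwargs: Any) -> dict[str, Any]: ...


def register_ling_ami(ec2: EC2Client, name: str, snapshot_id: str,
                      pvgrub_kernel_id: str, root_device: str = "/dev/sda1") -> str:
    """Register a paravirtual AMI whose root EBS snapshot holds the LING image
    and that boots through a PV-GRUB kernel (AKI). Returns the new AMI id."""
    response = ec2.register_image(
        Name=name,
        Architecture="x86_64",            # assumption: 64-bit LING build
        VirtualizationType="paravirtual",
        KernelId=pvgrub_kernel_id,        # region-specific PV-GRUB AKI
        RootDeviceName=root_device,       # assumption: must match the PV-GRUB variant
        BlockDeviceMappings=[{
            "DeviceName": root_device,
            "Ebs": {"SnapshotId": snapshot_id, "DeleteOnTermination": True},
        }],
    )
    return response["ImageId"]


def launch_instance(ec2: EC2Client, image_id: str, instance_type: str = "t1.micro") -> str:
    """Start one instance from the AMI; PV AMIs need a PV-capable instance type."""
    response = ec2.run_instances(ImageId=image_id, InstanceType=instance_type,
                                 MinCount=1, MaxCount=1)
    return response["Instances"][0]["InstanceId"]
```

The client is passed in so the functions can be exercised against a stub; against AWS you would pass `boto3.client("ec2", region_name=...)` with credentials configured.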

And what will we be using to do it?

- Erlang/OTP 17

- IntelliJ IDEA & Erlang/OTP Plugin

- railing: LING's packaging utility

- rebar: Basho's Erlang build tool

- Vagrant (to run Ubuntu)

- Ubuntu (to run Xen)

- Xen (to run our LING artifact)

- AWS CLI tools

- AWS GUI

Thank you!

Interesting Links

05/01/2015

- My Github

- OSv ACEU14 (presentation)

- Unikernels: Rise of the Virtual Library Operating System

- OSv: Optimizing the Operating System for Virtual Machines

- Asplos Mirage Whitepaper

- PV-GRUB (The kernel loader we use with LING)

- 7 Unikernel projects to take on Docker

- OCaml Labs Mirage Page

- Rise of the unikernel (excellent presentation)