Train a Generative Model of your Training Data,

Use it to fight Distribution Shift in Optimal Control

Terry Suh, RLG Short Talk 2022/03/24

Optimal Control and Policy Search

In its most general statement: Find parameters of my policy to optimize some cost.

- Note this formalism is super broad. Trajectory optimization, dynamic policies, all included.

Typical discrete-time finite horizon optimal control (policy search)
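A standard way to write this problem is sketched here. The notation is assumed for illustration and is not taken from the talk: policy parameters theta, policy pi_theta, dynamics f, stage cost c_t, horizon T, and initial-state distribution rho_0.

```latex
\min_{\theta} \; \mathbb{E}_{x_0 \sim \rho_0} \left[ \sum_{t=0}^{T} c_t(x_t, u_t) \right]
\quad \text{s.t.} \quad x_{t+1} = f(x_t, u_t), \qquad u_t = \pi_\theta(x_t, t).
```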

Optimal Control with Distribution Risk

Given some reference distribution p(x,u), penalize how much we deviate away from the distribution.

Note the addition of a new term that penalizes the negative log-likelihood (tries to maximize log-likelihood). What does this mean?

In English: try to minimize my cumulative cost, but also try to maximize the likelihood that the points in my trajectory were drawn from the distribution p.
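One way to write the objective described in words above, as a sketch. The weight beta > 0 and the stage cost c_t are assumed notation, and (x_t, u_t) is the trajectory generated by the policy with parameters theta.

```latex
\min_{\theta} \;
\underbrace{\sum_{t=0}^{T} c_t(x_t, u_t)}_{\text{cumulative cost}}
\; \underbrace{- \, \beta \sum_{t=0}^{T} \log p(x_t, u_t)}_{\text{distribution risk}}
```

Making beta larger trades cumulative cost for staying in regions where p assigns high likelihood.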

Motivating Log-Likelihood: Gaussian to LQR

For people with a weak grasp of probability and inference (like me; somehow my weakness has become my research), let's motivate this better.

Case 1. p(z) is a Gaussian distribution centered at zero.

You can recover LQR cost by setting things this way.

(Can also set up LQ Trajectory tracking by modifying mean of Gaussian)
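A short worked version of the Gaussian case, assuming z = (x, u) and a block-diagonal precision matrix built from weight matrices Q and R (these symbols are the usual LQR notation, assumed here):

```latex
p(z) = \mathcal{N}(z; 0, \Sigma), \quad
\Sigma^{-1} = \begin{bmatrix} Q & 0 \\ 0 & R \end{bmatrix}
\;\Longrightarrow\;
-\log p(x, u) = \tfrac{1}{2} \left( x^\top Q x + u^\top R u \right)
+ \tfrac{1}{2} \log \det (2 \pi \Sigma).
```

The last term does not depend on (x, u), so minimizing the negative log-likelihood is the same as minimizing the quadratic LQR stage cost. Replacing the zero mean with a reference point at each time step gives the corresponding quadratic tracking cost.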

Motivating Log-Likelihood: Uniform

Case 2. p(x,u) is a uniform distribution over some set

This is the classic "indicator function" in optimization.
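A worked version of the uniform case, assuming the set S has finite, positive volume (S and I_S are notation introduced here):

```latex
p(z) = \begin{cases} 1 / \mathrm{vol}(S), & z \in S \\ 0, & z \notin S \end{cases}
\;\Longrightarrow\;
-\log p(z) = \log \mathrm{vol}(S) + I_S(z), \qquad
I_S(z) = \begin{cases} 0, & z \in S \\ +\infty, & z \notin S. \end{cases}
```

Up to a constant, the distribution-risk term is therefore the hard constraint that every (x_t, u_t) stays inside S.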

Cross Entropy and KL Divergence on Occupation Measures

Consider the occupation measure of policy pi at time t, i.e. the distribution of (x_t, u_t) induced by running the policy.

Cross-Entropy

KL-Divergence

Entropy
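The relation between these three quantities can be written out as follows. The symbol mu_t^pi for the occupation measure is assumed notation, and both mu_t^pi and p are assumed to have densities.

```latex
\underbrace{-\,\mathbb{E}_{z \sim \mu_t^\pi} \left[ \log p(z) \right]}_{\text{cross-entropy } H(\mu_t^\pi,\, p)}
=
\underbrace{\mathbb{E}_{z \sim \mu_t^\pi} \left[ \log \frac{\mu_t^\pi(z)}{p(z)} \right]}_{\text{KL divergence } D_{\mathrm{KL}}(\mu_t^\pi \,\|\, p)}
+
\underbrace{\left( -\,\mathbb{E}_{z \sim \mu_t^\pi} \left[ \log \mu_t^\pi(z) \right] \right)}_{\text{entropy } H(\mu_t^\pi)}
```

In expectation over trajectories, the negative log-likelihood penalty at time t is the cross-entropy on the left.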

How do we solve this problem?

Value

Distribution Risk

Several Challenges

- How can we sample effectively from p? Do we know the distribution?

- How can I characterize the distribution p? Which class of models (e.g. GMM / VAE) do I use?

- We know how to get the gradient of value w.r.t. parameters. What about distribution risk?

Gradient Computations

Estimating the policy gradient in this setup:

NOTE: the blue parts are derivatives (Jacobians) of my trajectory w.r.t. the policy parameters; we can obtain these through sensitivity analysis (autodiff), etc.

The red part can be characterized as the SCORE of my distribution, i.e. the gradient of log p with respect to (x,u).

As long as I have a good estimate of the score of my distribution, I can compute the policy gradient of the distribution-risk problem WITHOUT having to compute the cost log p.

Score Matching

But how do we estimate the score? Is it easier than estimating the distribution itself?

One of the tricks we all love: integration by parts.

Hutchinson estimator for trace

Now we can autodiff and train to solve this problem.
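After integration by parts, the score matching objective takes the standard form E[ 0.5 * ||s(z)||^2 + tr(ds/dz) ], and the Hutchinson estimator replaces the trace with an average of v^T (ds/dz) v over random probes v with E[v v^T] = I. The sketch below is a minimal NumPy illustration with hypothetical function names. It uses a central finite difference as a stand-in for the Jacobian-vector product that an autodiff framework would provide, which is an assumption made to keep the example dependency-light.

```python
import numpy as np


def hutchinson_divergence(score_fn, z, rng, num_probes=64, eps=1e-4):
    """Estimate tr(d score / d z) at z as the mean of v^T J v over random probes.

    J v is approximated by a central finite difference (stand-in for autodiff).
    """
    total = 0.0
    for _ in range(num_probes):
        v = rng.choice([-1.0, 1.0], size=z.shape)  # Rademacher probe
        jv = (score_fn(z + eps * v) - score_fn(z - eps * v)) / (2.0 * eps)
        total += v @ jv
    return total / num_probes


def score_matching_loss(score_fn, data, rng, num_probes=8):
    """Monte Carlo estimate of E[0.5*||s(z)||^2 + tr(ds/dz)] over the data."""
    terms = [
        0.5 * np.sum(score_fn(z) ** 2)
        + hutchinson_divergence(score_fn, z, rng, num_probes)
        for z in data
    ]
    return float(np.mean(terms))


# Usage: data from a zero-mean Gaussian with variances (1, 4).
rng = np.random.default_rng(0)
data = rng.normal(size=(200, 2)) * np.array([1.0, 2.0])
true_score = lambda z: -z / np.array([1.0, 4.0])  # score of the data distribution
wrong_score = lambda z: -z                        # score of a unit Gaussian
loss_true = score_matching_loss(true_score, data, rng)
loss_wrong = score_matching_loss(wrong_score, data, rng)
```

The loss never evaluates the density or its normalizing constant, and the candidate that matches the data's score gets the lower loss. In practice the score would be a parametric model trained by gradient descent on this loss.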

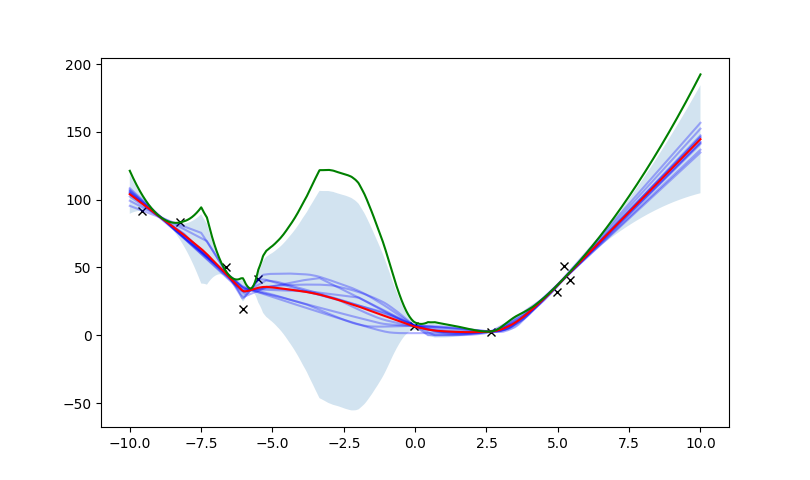

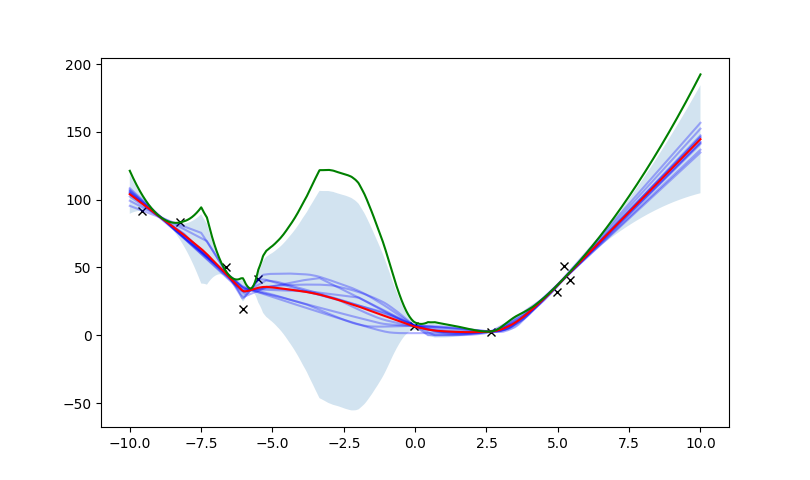

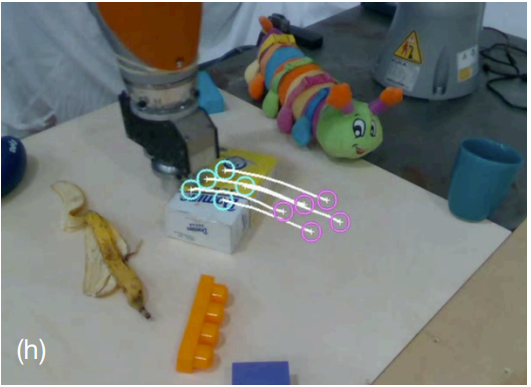

Example Case: Model-Based Offline Policy Optimization

Setting of Model-Based Offline Policy Optimization (Batch-RL):

1. First learn the dynamics of the system from data.

2. Run policy optimization on the learned dynamics.

Key challenge: Distribution Shift

The policy optimizer can exploit errors in the learned dynamics in the out-of-distribution regime, which results in very bad policy transfer.

Previous Solutions:

Train Ensembles of dynamics where alpha is drawn from a finite set of distributions.

In addition to original cost, penalize quantified uncertainty (e.g. variance among ensembles).

Assumptions:

- High Variance among Ensembles implies OOD

- Ensembles can be trained successfully? (Glen might have complaints)
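The ensemble-based penalty described above can be sketched as follows. This is a minimal illustration and not any specific published method: the function names are hypothetical, and each ensemble member is assumed to be a callable mapping (x, u) to a predicted next state.

```python
import numpy as np


def ensemble_penalty(models, x, u):
    """Disagreement among members: total variance of next-state predictions."""
    preds = np.stack([f(x, u) for f in models])  # shape (num_models, state_dim)
    return float(preds.var(axis=0).sum())


def penalized_cost(cost, models, x, u, weight=1.0):
    """Original stage cost plus a weighted uncertainty penalty."""
    return cost(x, u) + weight * ensemble_penalty(models, x, u)
```

The penalty is zero wherever all members agree, which is why the approach relies on the assumption that disagreement is high out of distribution.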

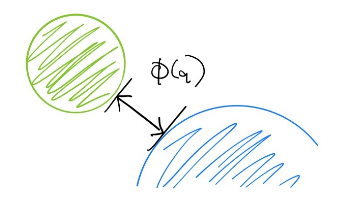

Proposed Method: Using the Training Distribution's Probability Directly

Proposed Solution

Directly use our distribution risk

where p is obtained from our empirical distribution (training set).

Penalize horizontally as opposed to vertically

Some Benefits:

- Bypasses ensembles, which is good since ensemble variance is a sketchy uncertainty quantifier.

- Minimal overhead; cheaper than ensembles.
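To illustrate what the score of the training distribution looks like, the sketch below uses the Gaussian-smoothed empirical distribution (1/N) sum_i N(z; z_i, sigma^2 I), whose score has a closed form. This nonparametric stand-in is an assumption for illustration; it is not the learned score model, and the function name is hypothetical.

```python
import numpy as np


def smoothed_empirical_score(z, data, sigma):
    """Score of (1/N) sum_i N(z; z_i, sigma^2 I), the Gaussian-smoothed training set.

    The result is a softmax-weighted average of the vectors (z_i - z) / sigma^2.
    """
    diffs = data - z                                   # rows are z_i - z
    logits = -np.sum(diffs ** 2, axis=1) / (2.0 * sigma ** 2)
    weights = np.exp(logits - logits.max())            # numerically stable softmax
    weights /= weights.sum()
    return weights @ diffs / sigma ** 2
```

The score points from the query point back toward nearby training data, so following it reduces the distribution risk without any ensemble of dynamics models.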

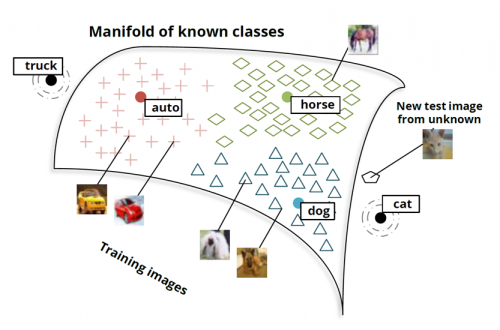

Implicit Constraints on Data

What if we have some implicit constraints on data?

"The Manifold Hypothesis"

Rigid-Body Structure

Non-penetration Constraint

Wouldn't it be nice to be able to constrain our dynamics to obey these constraints? What prevents these structures from being horribly violated after multiple rollouts?

Distribution Risk as Imposition of Implicit Constraints

Claim: Let the data lie on an embedded submanifold M of the ambient space (x,u).

Consider the distance (in the ambient space metric) between any point (x,u) in the ambient space and the manifold M.

Then, the negative log likelihood acts as a soft constraint penalizing deviation away from the submanifold M.

The Strict Case and Randomized Smoothing

If we were actually able to train p(x,u) in the limit of infinite data, we would have:

So again, this corresponds to the case of requiring strict feasibility.

But such score models would be extremely hard to train. To get around this issue, score-based models utilize randomized smoothing:

Gaussian Smoothing leads to Quadratic Penalty

To build some intuition, let's think of a simple case where p(x,u) is uniformly distributed on some manifold M.

The smoothed distribution is likely in the form of

Then, the negative log-likelihood is the penalty term of the constraint.
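A sketch of why Gaussian smoothing yields a quadratic penalty. The symbols sigma (smoothing standard deviation) and d(z, M) (distance from z = (x, u) to M) are assumed notation. The form below is exact when M is an affine subspace and boundary effects are ignored, and should be read as an approximation for small sigma otherwise.

```latex
p_\sigma(z) \;\propto\; \exp\!\left( -\frac{d(z, M)^2}{2 \sigma^2} \right)
\;\Longrightarrow\;
-\log p_\sigma(z) = \frac{1}{2 \sigma^2} \, d(z, M)^2 + \text{const}.
```

The penalty weight 1 / (2 sigma^2) grows as sigma shrinks, so the limit of no smoothing approaches the strict-feasibility case.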

The general case is surprisingly hard to reason about!

Summary

Optimal Control with Distribution Risk

Score-Based Generative Modeling

A powerful combination for tackling optimization with data-driven components:

- Ability to explicitly account for distribution shift.

- Ability to impose implicit constraints in data.