Robustifying Smoothened Dynamics

Policy Search through Contact Dynamics

Original problem formulation:

Find a deterministic policy that maximizes the sum of rewards (equivalently, minimizes the sum of costs).
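Written out, a minimal version of this problem looks like the following. The notation is assumed here, not taken from the slides: discrete time, horizon $T$, dynamics $f$, reward $r$, and a policy $\pi_\theta$ with parameters $\theta$.

$$
\max_{\theta} \; V(\theta) = \sum_{t=0}^{T-1} r(x_t, u_t)
\quad \text{s.t.} \quad x_{t+1} = f(x_t, u_t), \;\; u_t = \pi_\theta(x_t), \;\; x_0 \text{ given}.
$$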

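Challenges of Gradient-based Optimization

1. Flatness: the objective is constant over large regions of parameter space (e.g., when no contact is made), so the gradient is zero and gives no search direction.

2. Stiffness: near contact, the objective changes very sharply or discontinuously, so gradients are huge and uninformative about the landscape at larger scales.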
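A minimal sketch of flatness and stiffness on a hypothetical 1D push task (our own toy, not from the slides): a pusher at position `theta` meets a box surface at 0, a stiff penalty of slope `k` turns penetration into box displacement, and the displacement saturates at 1. A central finite difference stands in for an exact gradient.

```python
import numpy as np

def value(theta, k=1e3):
    """Hypothetical 1D push task: zero before contact, slope k after, saturated at 1."""
    return np.minimum(k * np.maximum(theta, 0.0), 1.0)

def fd_grad(f, theta, h=1e-6):
    # Central finite difference as a stand-in for an exact (autodiff) gradient.
    return (f(theta + h) - f(theta - h)) / (2.0 * h)

for theta in (-0.5, 0.0005, 0.5):
    # -0.5: flat (no contact); 0.0005: stiff (slope k); 0.5: flat again (saturated).
    print(f"theta={theta:+.4f}  V={value(theta):.3f}  dV/dtheta={fd_grad(value, theta):.1f}")
```

Away from the thin contact band the gradient is exactly zero; inside it, the gradient equals the stiffness `k`, so neither regime tells the optimizer much about where to go.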

Perhaps unknowingly, many RL formulations benefit from optimizing a more relaxed, stochastic objective through:

Randomization of initial conditions

Randomization of domain parameters

Randomization of the policy (stochastic policies)
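Writing $V(\theta; \cdot)$ for the total reward of parameters $\theta$ under a given initial state, domain parameters, or noise sequence (notation assumed here, not taken from the slides), these relaxed objectives can be written as:

$$
\begin{aligned}
\text{Initial conditions:} \quad & \mathbb{E}_{x_0 \sim \rho}\big[V(\theta; x_0)\big] \\
\text{Domain parameters:} \quad & \mathbb{E}_{p \sim P}\big[V(\theta; p)\big] \\
\text{Stochastic policy:} \quad & \mathbb{E}_{w_{0:T-1}}\big[V(\theta; w_{0:T-1})\big], \quad u_t = \pi_\theta(x_t) + w_t, \;\; w_t \sim \mathcal{N}(0, \sigma^2 I)
\end{aligned}
$$

Each is an average of the deterministic objective over a distribution, which is why it can be smoother than the deterministic objective itself.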

Policy Randomization

Domain Randomization

Initial Condition Randomization

Randomization potentially alleviates flatness and stiffness
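A minimal sketch of how randomization removes flatness, assuming Gaussian perturbations of the parameter as a stand-in for policy randomization, on a hypothetical 1D push task that is exactly flat 5 cm before contact. The zeroth-order Monte Carlo estimator evaluates only function values, yet returns a nonzero gradient of the smoothed objective $\mathbb{E}_w[V(\theta + w)]$, which we check against a closed form for this toy.

```python
import math
import numpy as np

def value(theta, k=1e3):
    # Hypothetical 1D push task: flat before contact (theta <= 0), slope k, saturates at 1.
    return np.minimum(k * np.maximum(theta, 0.0), 1.0)

def smoothed_grad(theta, sigma=0.1, n=10_000, seed=0):
    """Zeroth-order Monte Carlo gradient of E_w[V(theta + w)] with w ~ N(0, sigma^2).

    Subtracting the baseline V(theta) keeps the estimator unbiased (E[w] = 0)
    while reducing its variance.
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(0.0, sigma, size=n)
    return np.mean((value(theta + w) - value(theta)) * w) / sigma**2

def std_normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def exact_smoothed_grad(theta, sigma=0.1, k=1e3):
    # For this V, d/dtheta E[V(theta + w)] = k * P(-theta < w < 1/k - theta).
    return k * (std_normal_cdf((1.0 / k - theta) / sigma) - std_normal_cdf(-theta / sigma))

theta = -0.05   # 5 cm before contact, where the unsmoothed gradient is exactly 0
print(smoothed_grad(theta), exact_smoothed_grad(theta))
```

The noise scale `sigma` controls how far the estimator can "see"; it is a hypothetical choice here.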

Randomized smoothing also encodes robustness

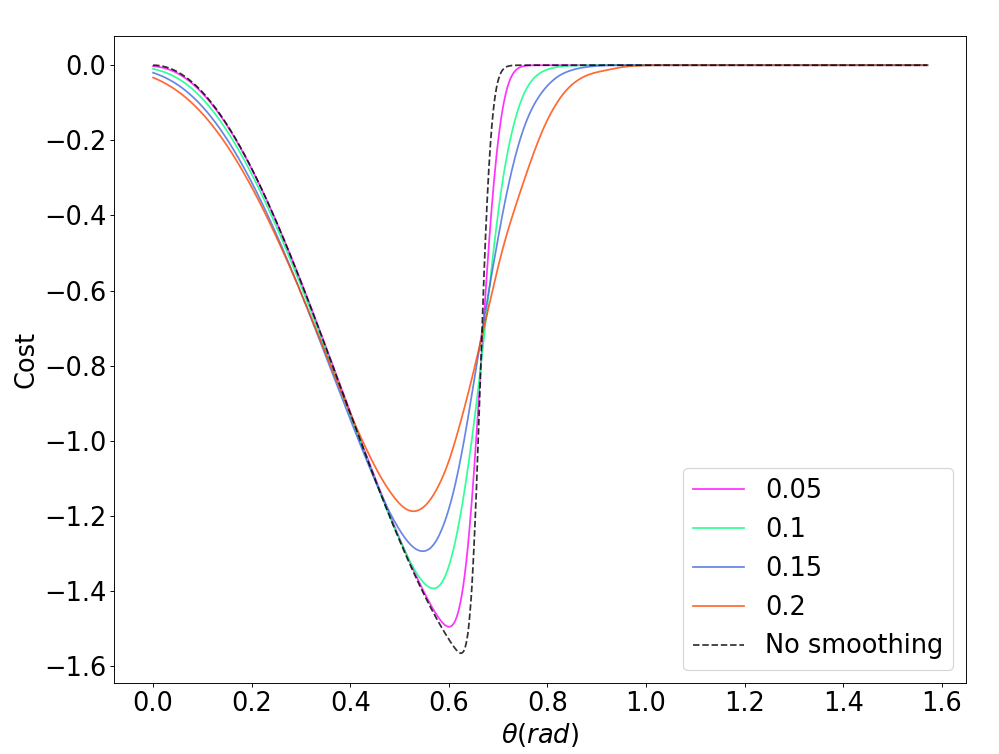

Figure panels (labels only): rate of bias; suboptimality gap (bias); suboptimality and stiffness; robustness and suboptimality.

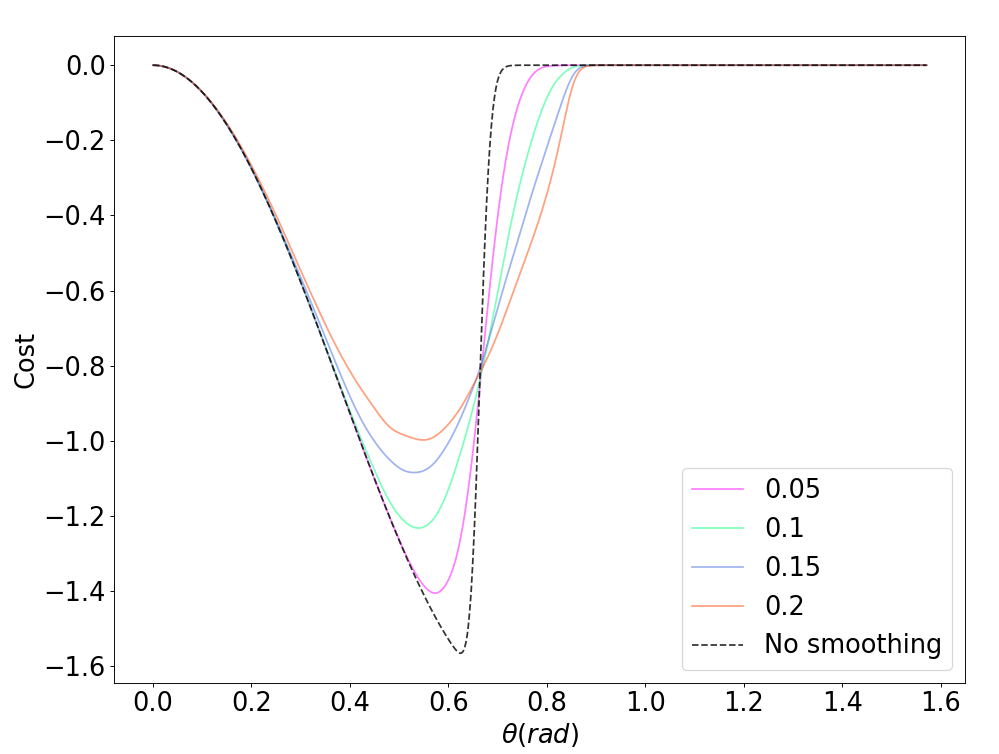

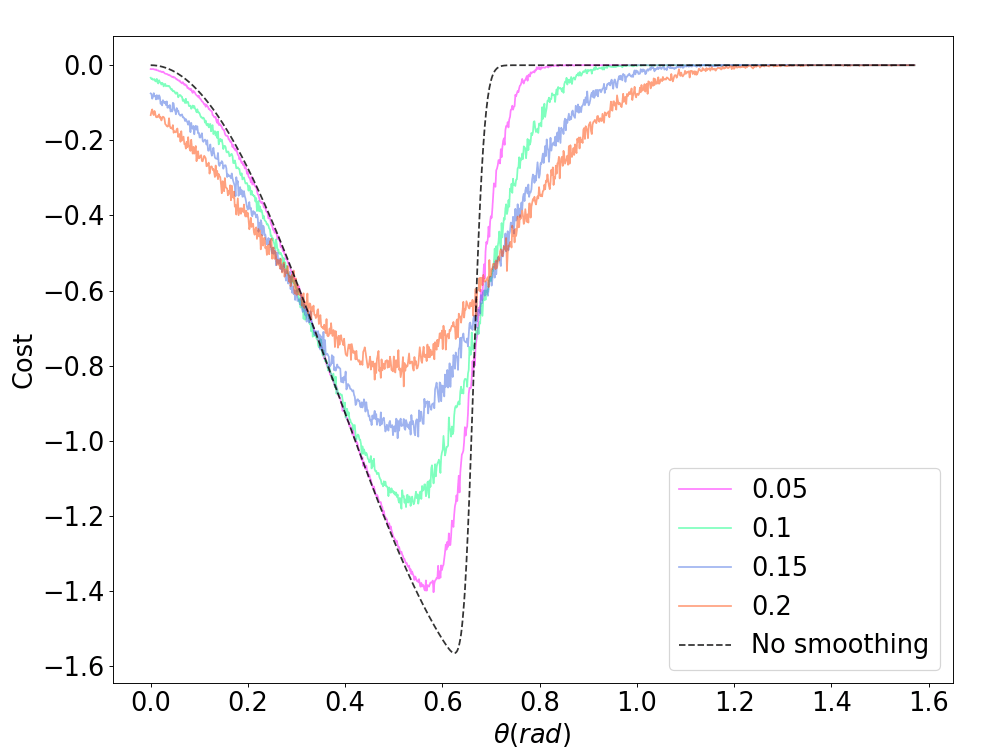
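A minimal sketch of the bias/robustness trade-off, on a hypothetical "cliff" objective (our own toy): the reward equals `theta` until a contact event at `theta = 1` drops it to 0. Gaussian parameter noise of scale `sigma` plays the role of randomization, and for this objective the smoothed value has a closed form. The smoothed optimum backs away from the edge, giving up some nominal reward (the suboptimality gap, or bias) in exchange for a much higher expected reward under perturbation (robustness).

```python
import math

def value(theta):
    # Hypothetical cliff task: reward grows with theta until contact at theta = 1 wipes it out.
    return theta if theta < 1.0 else 0.0

def smoothed_value(theta, sigma):
    """Closed form of E_w[value(theta + w)] for w ~ N(0, sigma^2)."""
    z = (1.0 - theta) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return theta * cdf - sigma * pdf

sigma = 0.1
grid = [i / 1000 for i in range(1501)]             # theta in [0, 1.5]
theta_det = max(grid, key=value)                    # deterministic optimum: right at the edge
theta_rs = max(grid, key=lambda t: smoothed_value(t, sigma))  # randomized-smoothing optimum

print("optima:", theta_det, theta_rs)
print("suboptimality gap:", value(theta_det) - value(theta_rs))
print("expected reward under noise:",
      smoothed_value(theta_det, sigma), smoothed_value(theta_rs, sigma))
```

Larger `sigma` moves the smoothed optimum further from the edge: more bias, more robustness.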

Pitfalls of Randomized Smoothing

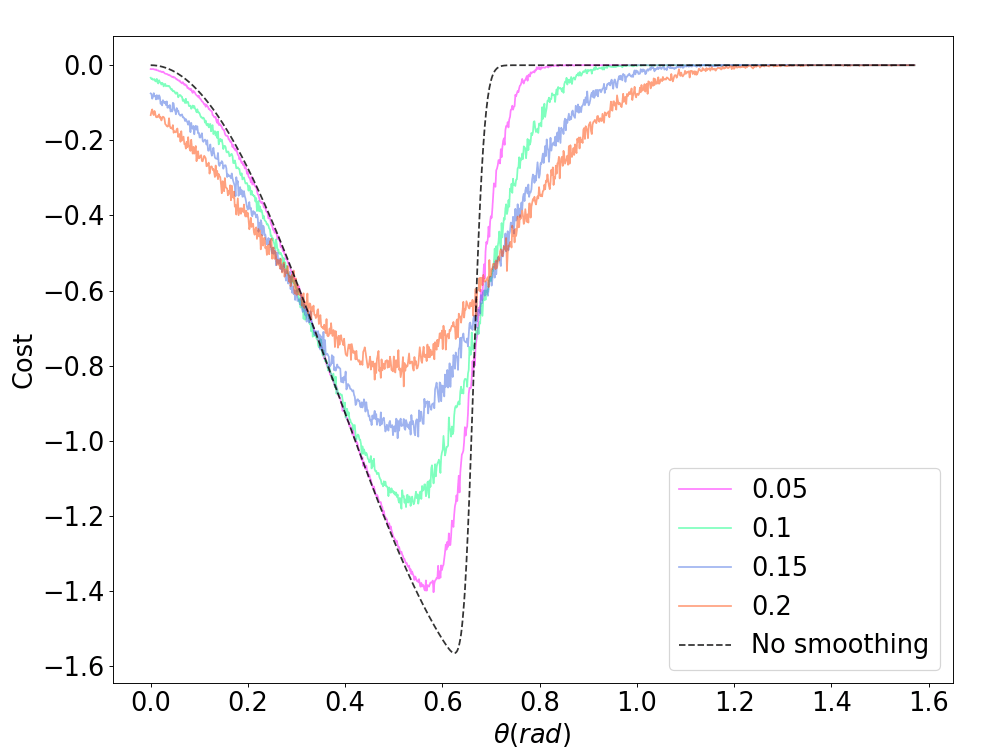

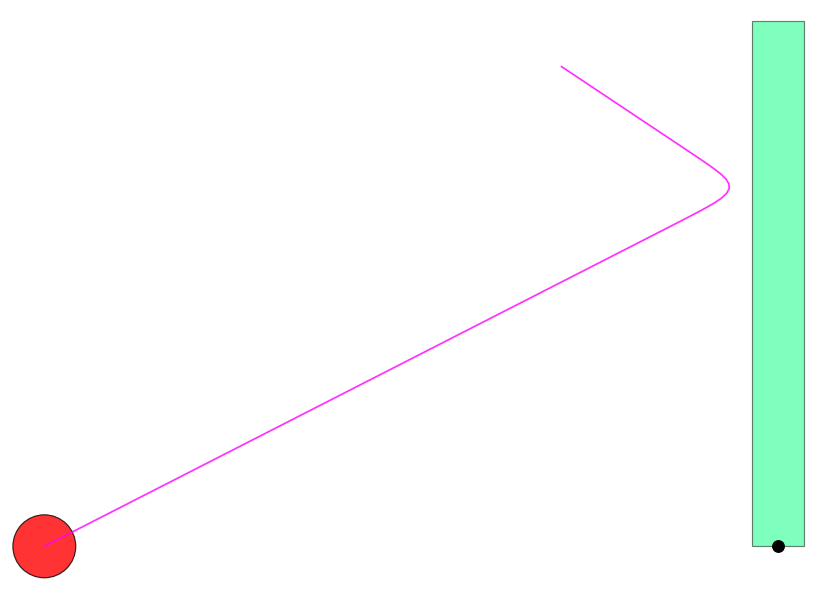

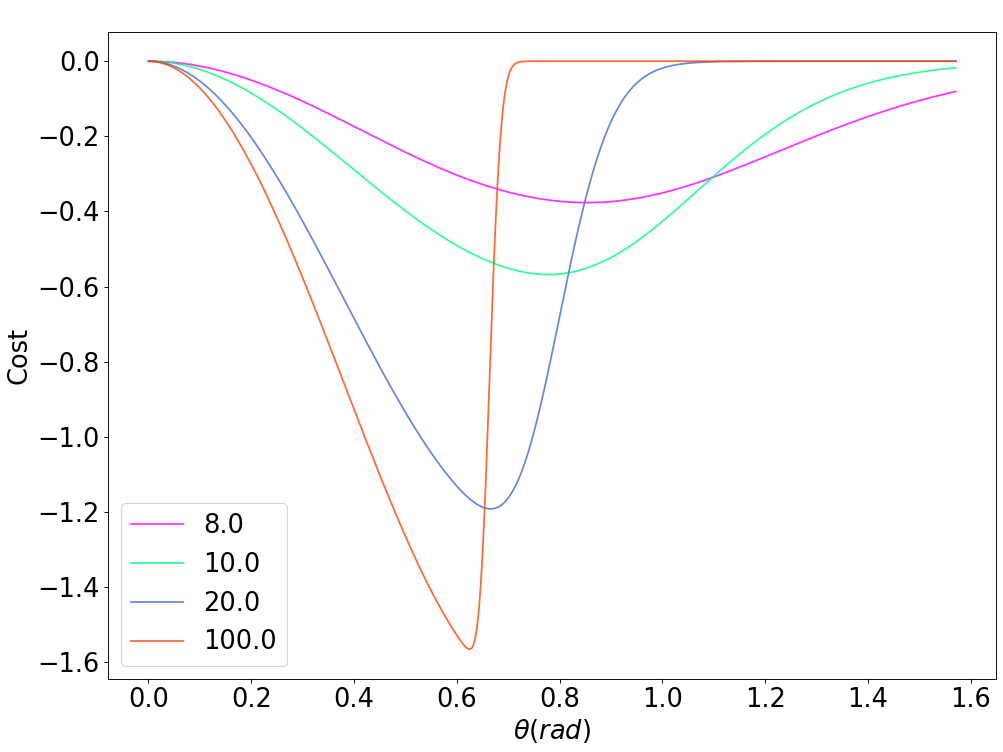

Challenge 1. Sample-Inefficient Exploration

Getting out of flatness requires lots of samples: the perturbations must eventually land in a non-flat region before the gradient estimate carries any signal.
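A minimal sketch of why exploration out of a flat region is sample-hungry, reusing a hypothetical 1D push task that is flat until `theta > 0`. Starting 0.5 away from contact with Gaussian noise `sigma = 0.15` (both hypothetical), a single perturbed sample reaches the non-flat region with probability of about 4e-4, so a budget of 100 samples usually sees no contact at all, and the zeroth-order gradient estimate is then exactly zero.

```python
import math
import numpy as np

def value(theta, k=1e3):
    # Hypothetical 1D push task: nonzero only once theta > 0.
    return np.minimum(k * np.maximum(theta, 0.0), 1.0)

theta, sigma, n = -0.5, 0.15, 100      # far from contact, modest noise and sample budget

# Probability that one perturbed sample theta + w reaches the non-flat region (theta + w > 0).
p_hit = 0.5 * math.erfc(-theta / (sigma * math.sqrt(2.0)))
print(f"P(hit) = {p_hit:.2e}, expected samples per hit ~ {1.0 / p_hit:,.0f}")

rng = np.random.default_rng(0)
w = rng.normal(0.0, sigma, size=n)
hits = int(np.sum(theta + w > 0.0))
# Zeroth-order estimate of the smoothed gradient; every term is 0 unless a sample hits.
grad = np.mean((value(theta + w) - value(theta)) * w) / sigma**2
print(hits, grad)
```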

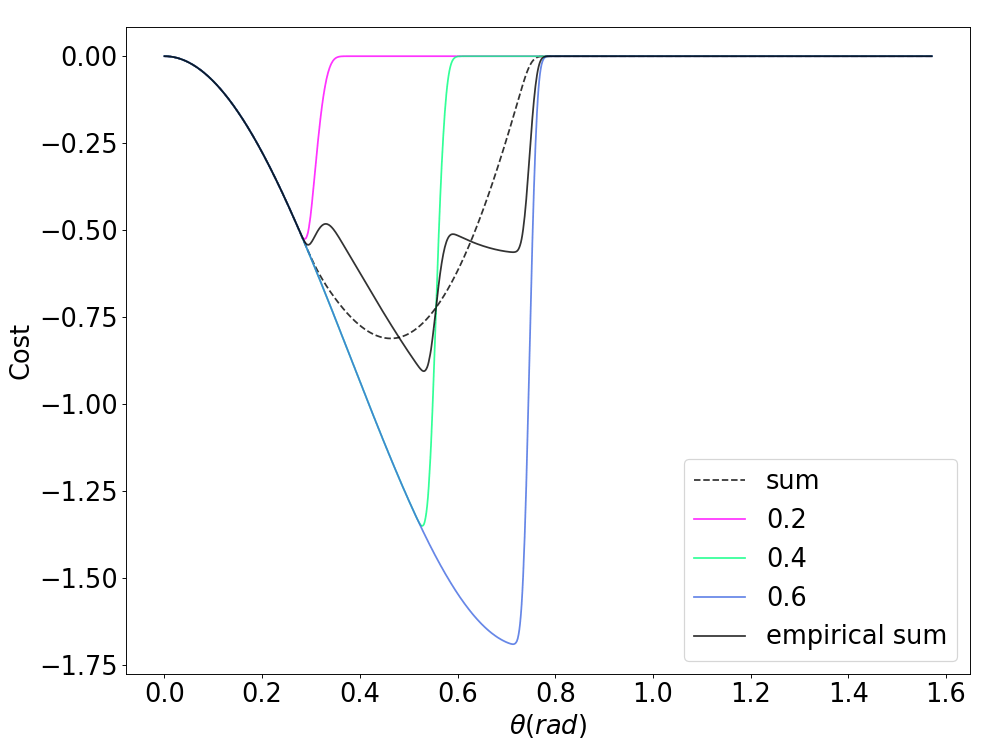

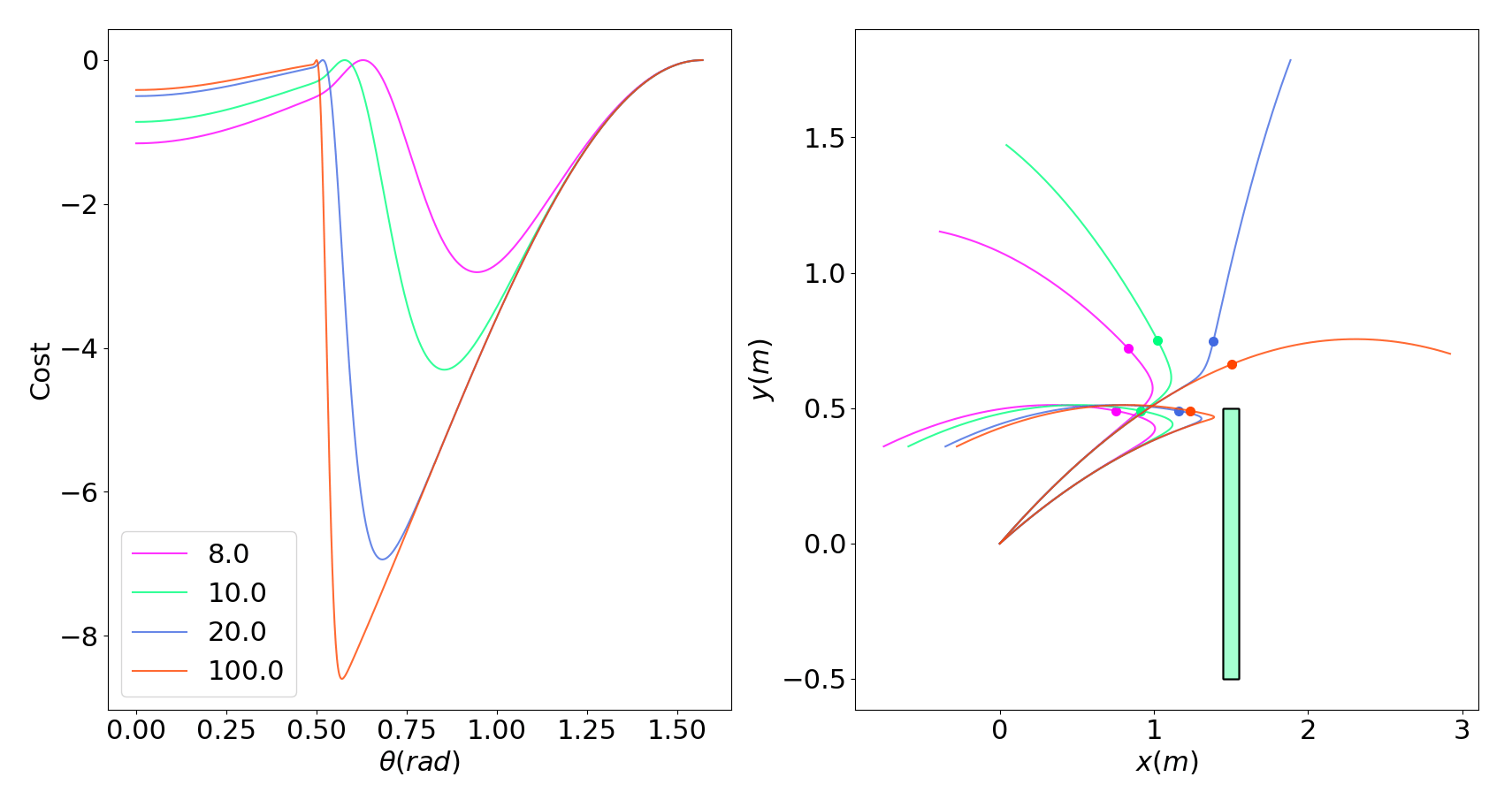

Challenge 2. Empirical Bias from Stiff Underlying Landscape

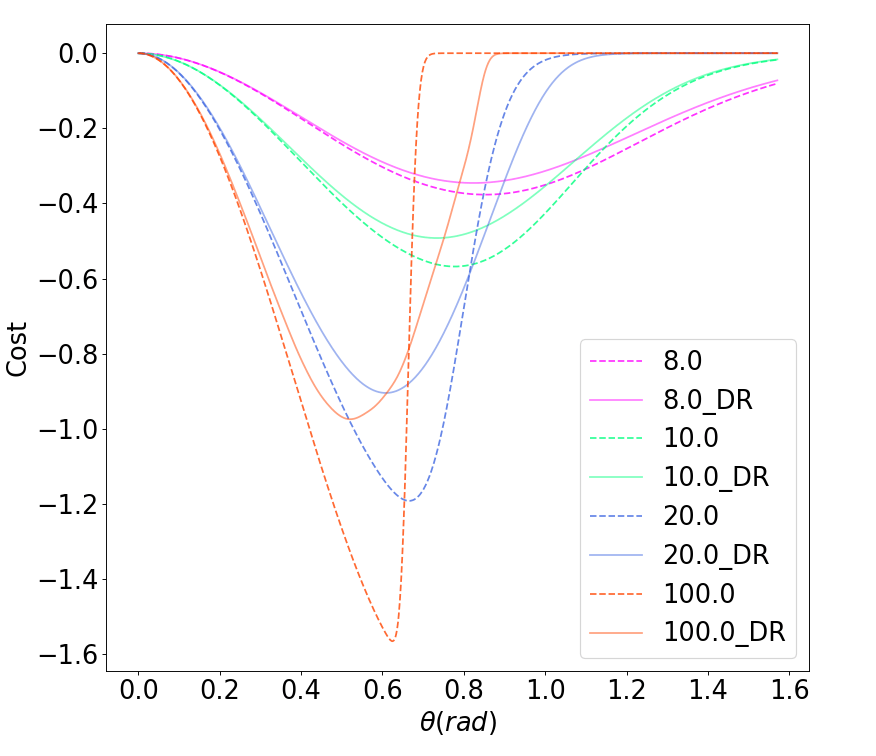

Example: Domain Randomization

Averaging the gradients of the individual sampled domains does not recover the direction of the true gradient of the randomized objective!
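A minimal sketch of this empirical bias, assuming a hypothetical stiff task whose reward ramps from 0 to 1 within `1/k` once `theta` passes a contact location `p`, with domain randomization `p ~ N(0, sigma^2)`. Each sampled domain's exact gradient is `k` inside a very thin band and 0 elsewhere, so with any practical number of samples their average is 0, while the gradient of the randomized objective is about 0.4. Deterministic quantile samples replace random draws so the result is reproducible.

```python
from statistics import NormalDist

k = 1e6                          # very stiff contact (hypothetical)
sigma, n, theta = 1.0, 1000, 0.0
dist = NormalDist(0.0, sigma)

# Domain randomization over the contact location p, using deterministic quantile samples.
ps = [dist.inv_cdf((i + 0.5) / n) for i in range(n)]

def grad_value(theta, p):
    # Exact per-domain derivative of value(theta, p) = clip(k * (theta - p), 0, 1):
    # k inside the thin ramp, 0 everywhere else.
    return k if 0.0 < theta - p < 1.0 / k else 0.0

# First-order estimate: average of the per-domain gradients.
fo_grad = sum(grad_value(theta, p) for p in ps) / n

# True gradient of E_p[value(theta, p)] = k * P(theta - 1/k < p < theta).
true_grad = k * (dist.cdf(theta) - dist.cdf(theta - 1.0 / k))

print(fo_grad, true_grad)
```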

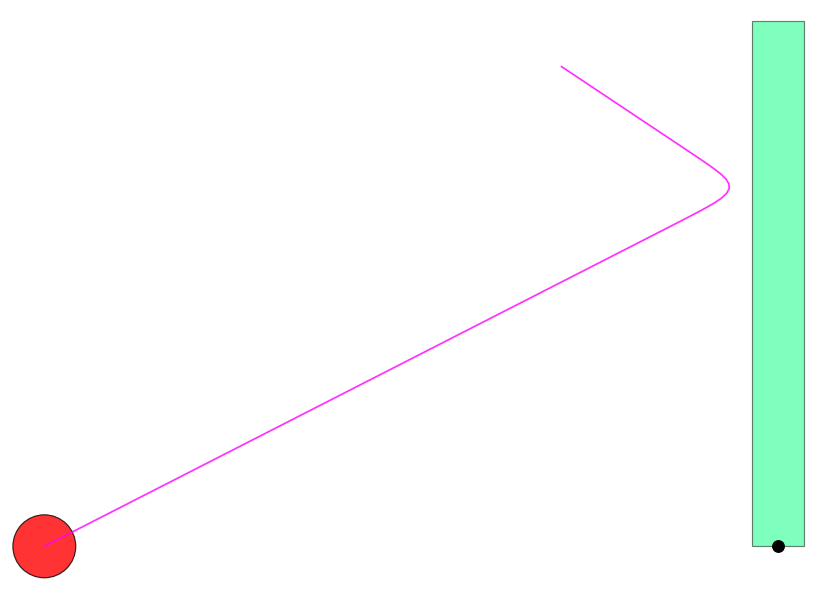

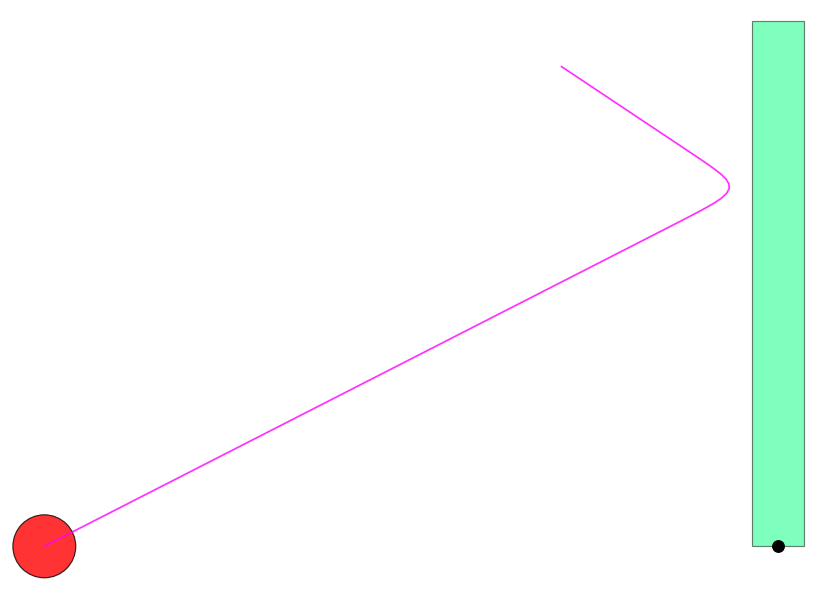

What causes stiffness?

Started off close, ended up far!

Rewards are tied to states and trajectories.

Stiff dynamics cause nearby starting points to produce vastly different state trajectories. If these trajectories differ along directions the reward depends on, the difference translates directly into a stiff value landscape.
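A minimal, entirely hypothetical illustration: a unit point mass dropped straight down next to a ledge at `x = 0`, with contact modeled as a stiff penalty spring plus damper. Two initial positions 2 mm apart end up at heights that differ by about 1, so a reward tied to the final height jumps across `x0 = 0`.

```python
def ground_height(x):
    # Hypothetical ledge at x = 0: a table of height 1 on the right, the floor (0) on the left.
    return 1.0 if x >= 0.0 else 0.0

def drop(x0, y0=2.0, k=1e4, c=200.0, g=9.81, dt=1e-3, steps=2000):
    """Drop a unit point mass straight down from (x0, y0) and return its final height.

    Contact is a stiff penalty spring (k) with a damper (c) active only while penetrating.
    Integration is semi-implicit Euler; x stays fixed because no horizontal force acts.
    """
    y, vy = y0, 0.0
    h = ground_height(x0)
    for _ in range(steps):
        pen = h - y                          # penetration depth (> 0 means in contact)
        acc = -g
        if pen > 0.0:
            acc += k * pen - c * vy          # spring pushes up, damper resists motion
        vy += dt * acc
        y += dt * vy
    return y

for x0 in (-1e-3, 1e-3):
    # Reward tied to the final state: here, simply the final height.
    print(f"x0 = {x0:+.0e}  final height = {drop(x0):.3f}")
```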

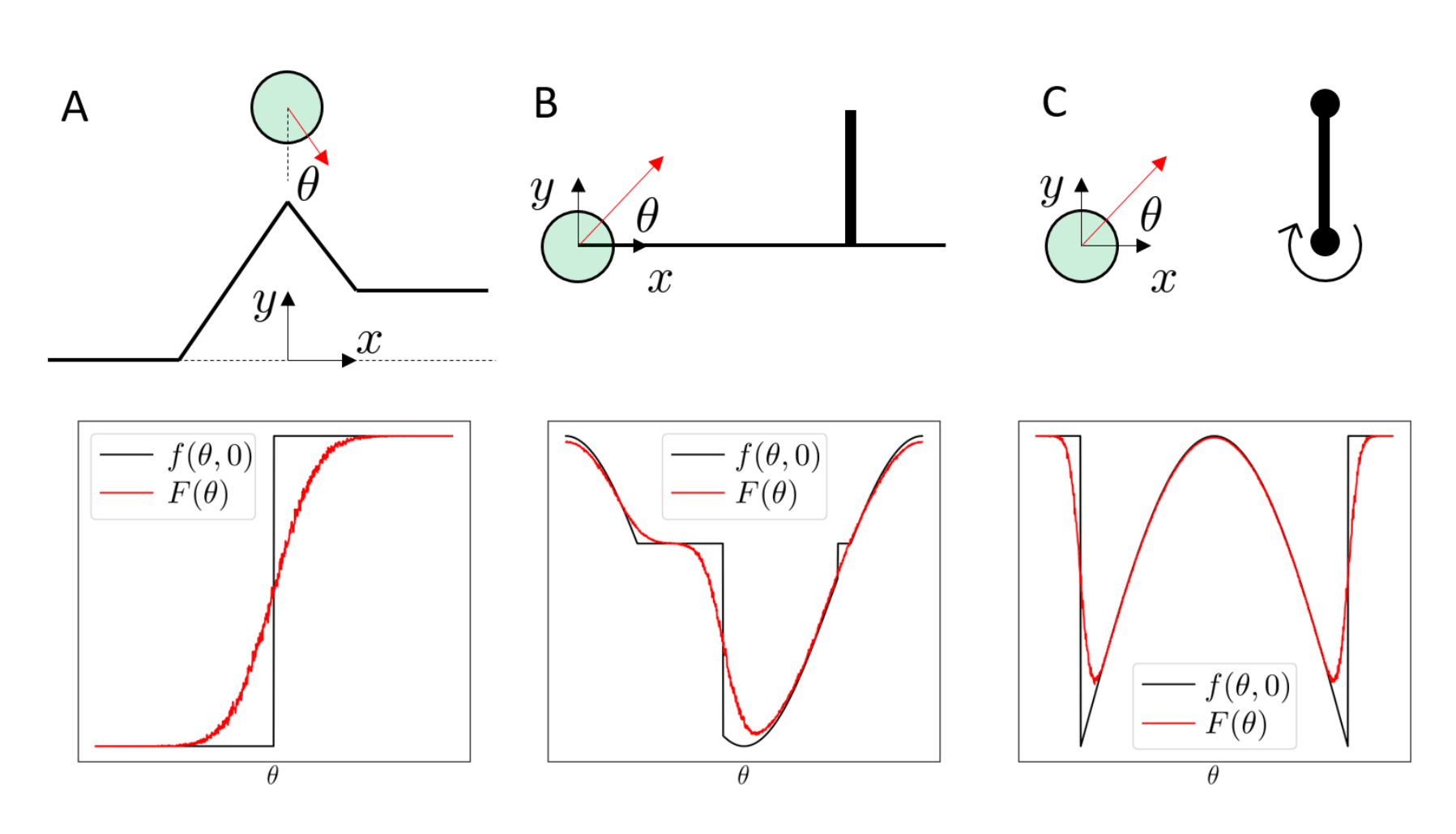

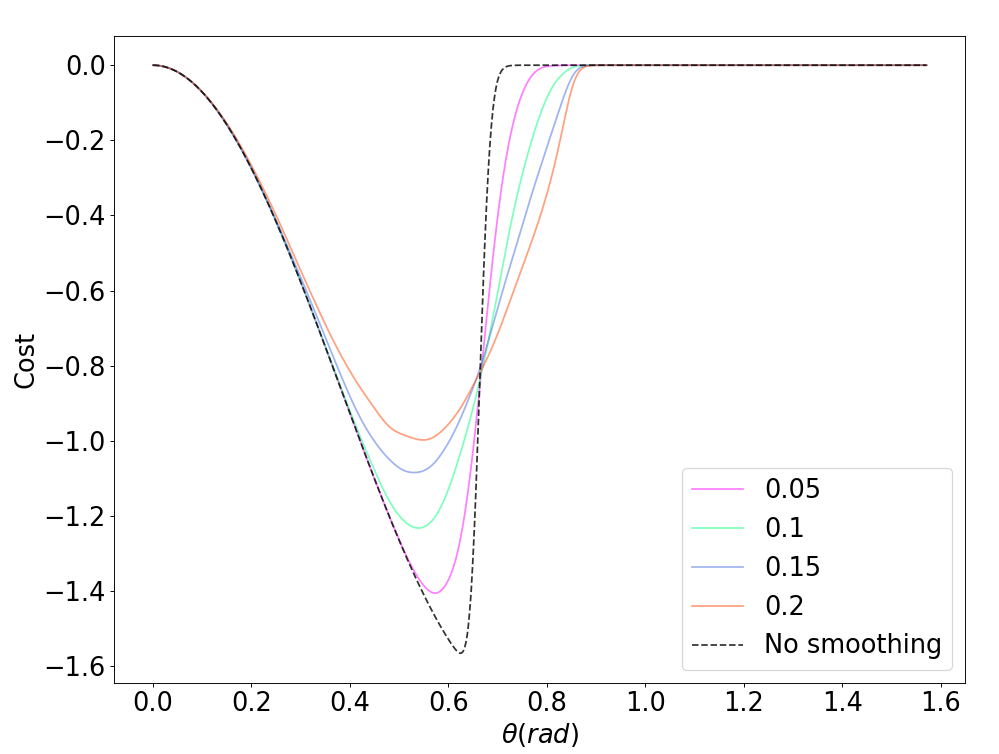

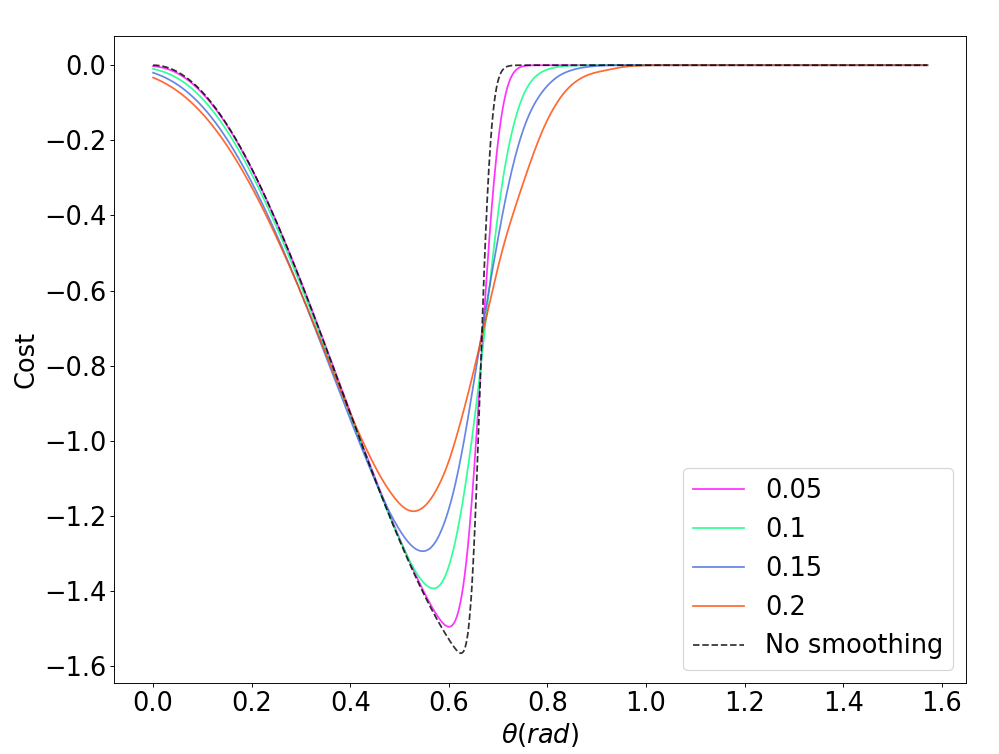

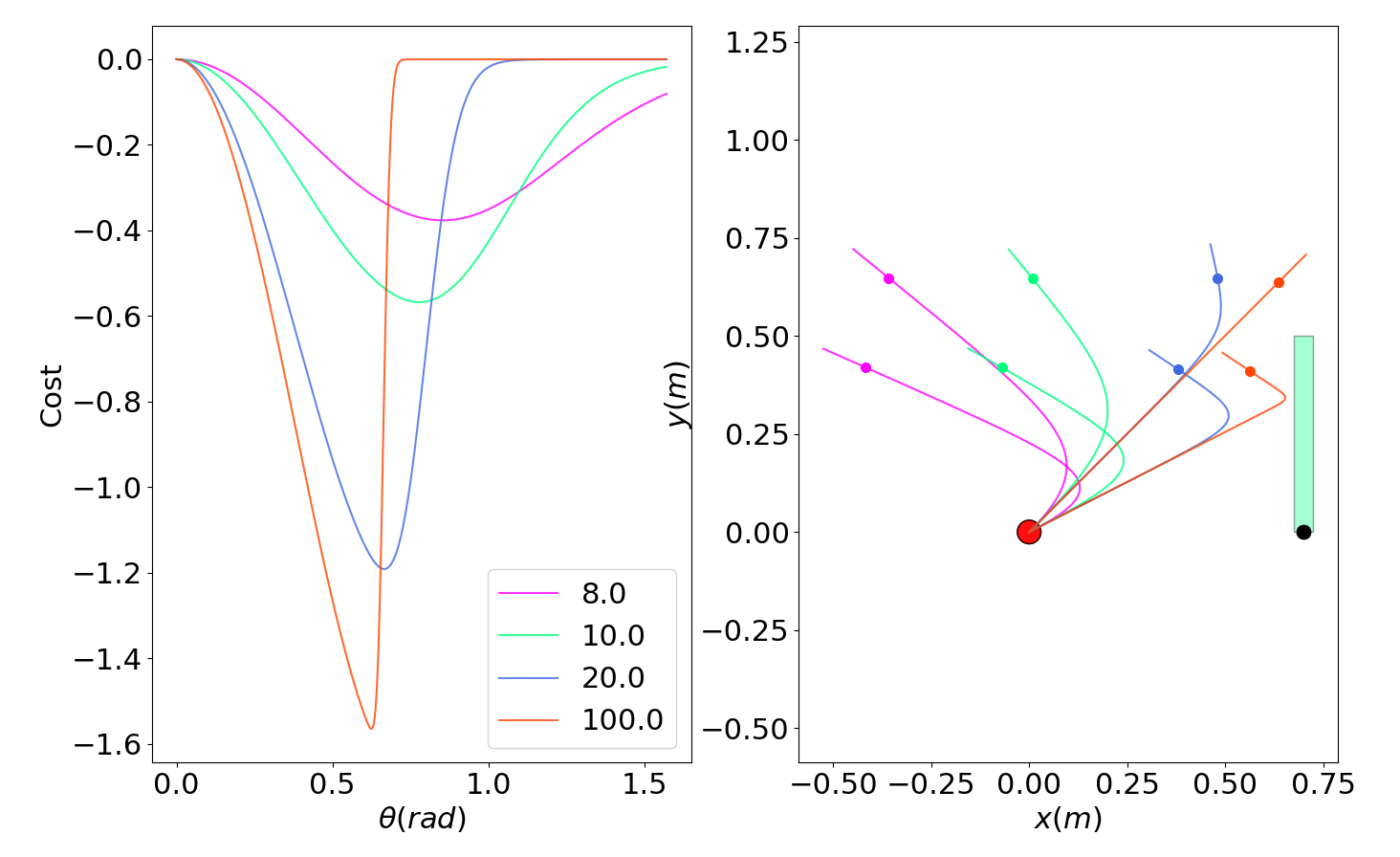

Dynamic smoothing alleviates stiffness / flatness

Dynamic smoothing alleviates flatness and stiffness without Monte Carlo (MC) sampling.
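A minimal sketch of one possible dynamic smoothing scheme. The slides do not specify which one is used, so we swap in a softplus-smoothed penalty force as an assumption. Replacing `max(phi, 0)` with a softplus of width `kappa` gives a small force, and a nonzero gradient, even across a gap (force-from-a-distance), and the gradient comes from a single closed-form evaluation rather than from sampling. `K` and `kappa` are hypothetical values.

```python
import numpy as np

K = 100.0      # contact stiffness (hypothetical)

def hard_force(phi):
    # Penalty contact: force only when penetration phi > 0 (phi < 0 means a gap).
    return K * np.maximum(phi, 0.0)

def hard_grad(phi):
    return K * (phi > 0.0)

def smooth_force(phi, kappa=0.01):
    # Softplus replaces max(., 0): a small force acts even across a gap.
    return K * kappa * np.logaddexp(0.0, phi / kappa)

def smooth_grad(phi, kappa=0.01):
    # d/dphi of smooth_force: K * sigmoid(phi / kappa), in closed form (no sampling).
    return K / (1.0 + np.exp(-phi / kappa))

phi = -0.05   # a 5 cm gap: the hard model is flat here
print(hard_force(phi), hard_grad(phi))
print(smooth_force(phi), smooth_grad(phi))
```

Shrinking `kappa` recovers the hard model; growing it spreads the force further from contact.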

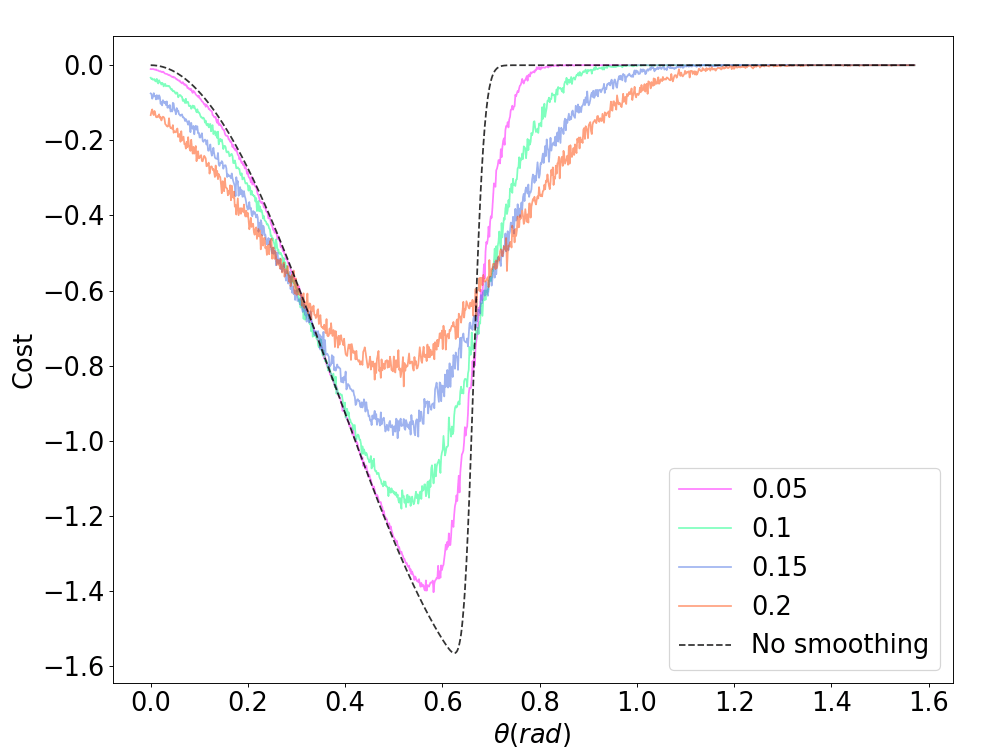

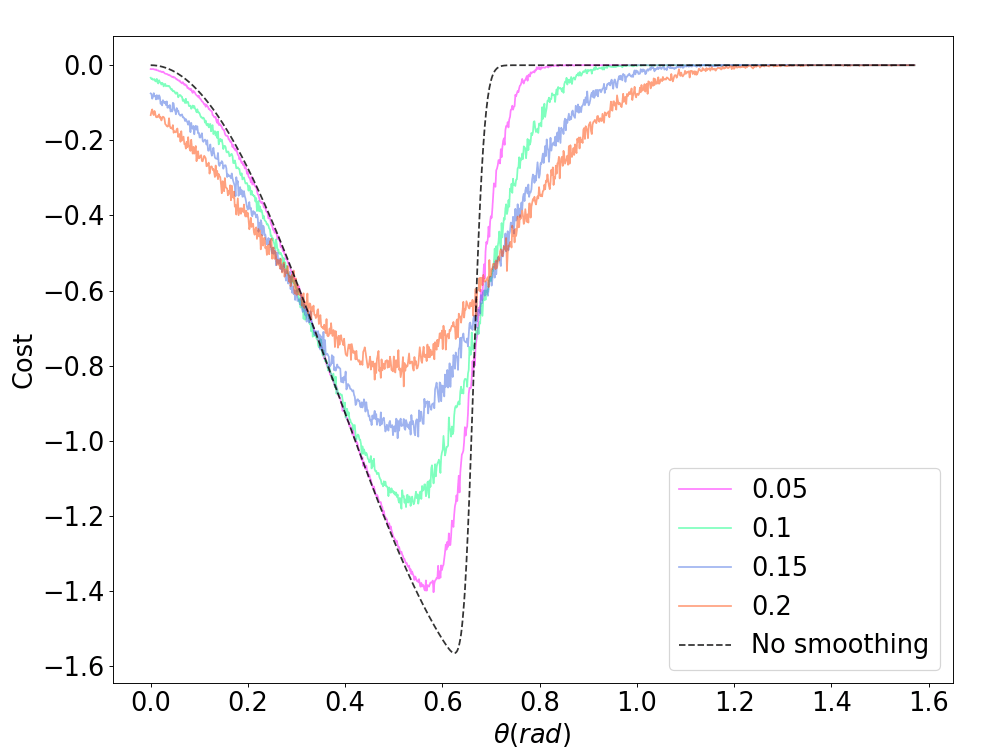

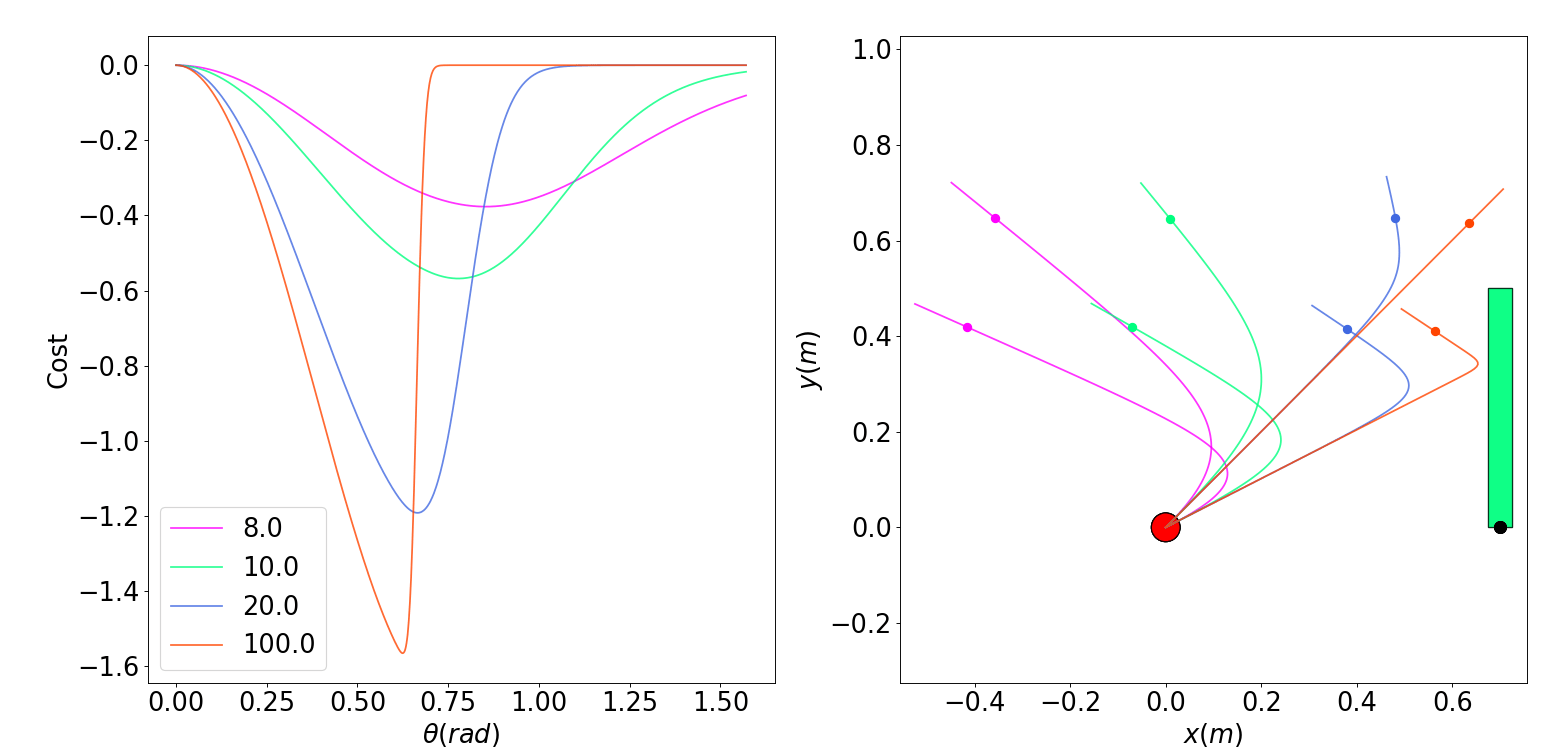

Harmful bias of dynamic smoothing

However, dynamic smoothing can develop harmful bias.

What kind of cases cause smoothing to be badly biased?

Dynamic Smoothing

Randomized Value Smoothing

Randomized smoothing (RS) solutions become suboptimal gracefully.

Dynamic smoothing solutions fail catastrophically.

Dynamic Smoothing alleviates Flatness

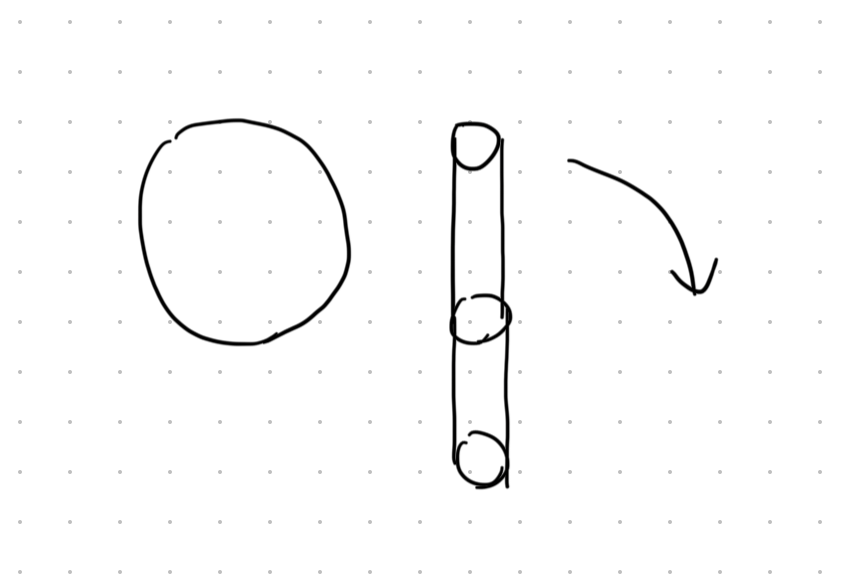

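Contact-Averse Tasks: dynamic smoothing has a "beneficial" bias. Because the smoothed model assumes force-from-a-distance, the solution keeps a margin from contact.

Contact-Seeking Tasks: dynamic smoothing has a "catastrophic" bias. The solution may rely on force-from-a-distance, which does not result in contact (and hence produces no force) under the real dynamics.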
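A minimal sketch of both kinds of bias, assuming the same kind of softplus-smoothed penalty force (a stand-in, not necessarily the scheme used in the talk) with hypothetical `K` and `KAPPA`. Planning a push of 0.5 with the smooth model lands at a negative gap, where the real (hard) contact force is 0. Planning to keep the force below 0.01 lands far from contact, which is only conservative under the real model.

```python
import math

K, KAPPA = 100.0, 0.05   # hypothetical stiffness and smoothing width

def hard_force(phi):
    # Real contact model: force only under penetration (phi > 0).
    return K * max(phi, 0.0)

def smooth_force(phi):
    # Softplus-smoothed contact force (force-from-a-distance).
    return K * KAPPA * math.log1p(math.exp(phi / KAPPA))

def phi_for_smooth_force(f):
    # Invert smooth_force: the gap/penetration at which the smooth model yields force f > 0.
    return KAPPA * math.log(math.expm1(f / (K * KAPPA)))

# Contact-seeking: "apply a push of 0.5", planned with the smooth model.
phi_seek = phi_for_smooth_force(0.5)
print(phi_seek, hard_force(phi_seek))      # negative gap, so the real force is 0

# Contact-averse: "keep the contact force below 0.01", planned with the smooth model.
phi_avoid = phi_for_smooth_force(0.01)
print(phi_avoid, hard_force(phi_avoid))    # well clear of contact; the real model only needs phi <= 0
```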

A Combined Solution

| | Randomized Value Smoothing | Dynamic Smoothing |
|---|---|---|
| Strengths | Solutions are more robust, often develop "beneficial" bias. | Sample-efficient method for smoothing. |
| Weaknesses | Sample-inefficient in exploration. Suffers from empirical bias when the underlying landscape is stiff. | Develops bad bias in contact-seeking tasks, finds non-robust solutions. |

Domain Randomization w/ Dynamic Smoothing

Stochastic smoothing schemes suffer less from empirical bias when the underlying dynamics are smooth.

We can strategically design the domain randomization distribution so that solutions are robust to the bias introduced by dynamic smoothing.
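A minimal sketch of the combination, assuming a hypothetical sigmoid of width `kappa` as the dynamically smoothed contact outcome and domain randomization over the contact location `p ~ N(0, sigma^2)`. With a smooth underlying model, averaging per-domain gradients agrees with differentiating the domain-randomized objective, and the estimate is about 0.39 instead of the 0 a very stiff model yields. This is the gradient of the smoothed-model objective, so it still carries whatever bias the smoothing introduces. Quantile samples keep the output reproducible.

```python
import math
from statistics import NormalDist

sigma, n, theta, kappa = 1.0, 1000, 0.0, 0.1
dist = NormalDist(0.0, sigma)
ps = [dist.inv_cdf((i + 0.5) / n) for i in range(n)]   # deterministic quantile samples of p

def smooth_value(theta, p):
    # Hypothetical smoothed contact outcome: a sigmoid of width kappa instead of a stiff ramp.
    return 1.0 / (1.0 + math.exp(-(theta - p) / kappa))

def smooth_grad(theta, p):
    # Exact derivative of smooth_value with respect to theta.
    s = smooth_value(theta, p)
    return s * (1.0 - s) / kappa

def dr_objective(theta):
    # Domain-randomized objective, estimated with the same samples.
    return sum(smooth_value(theta, p) for p in ps) / n

fo_grad = sum(smooth_grad(theta, p) for p in ps) / n     # first-order estimate
h = 1e-5
fd_grad = (dr_objective(theta + h) - dr_objective(theta - h)) / (2 * h)
print(fo_grad, fd_grad)
```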

Domain randomization (DR) suggests that the dynamics model need not be highly accurate, as long as we randomize over it.

So why not choose an inaccurate but smooth contact model to leverage this?