Efficient Global Planning for

Contact-Rich Manipulation

Hyung Ju Terry Suh, MIT

Talk @KAIST

2024-02-08

Chapter 1. Introduction

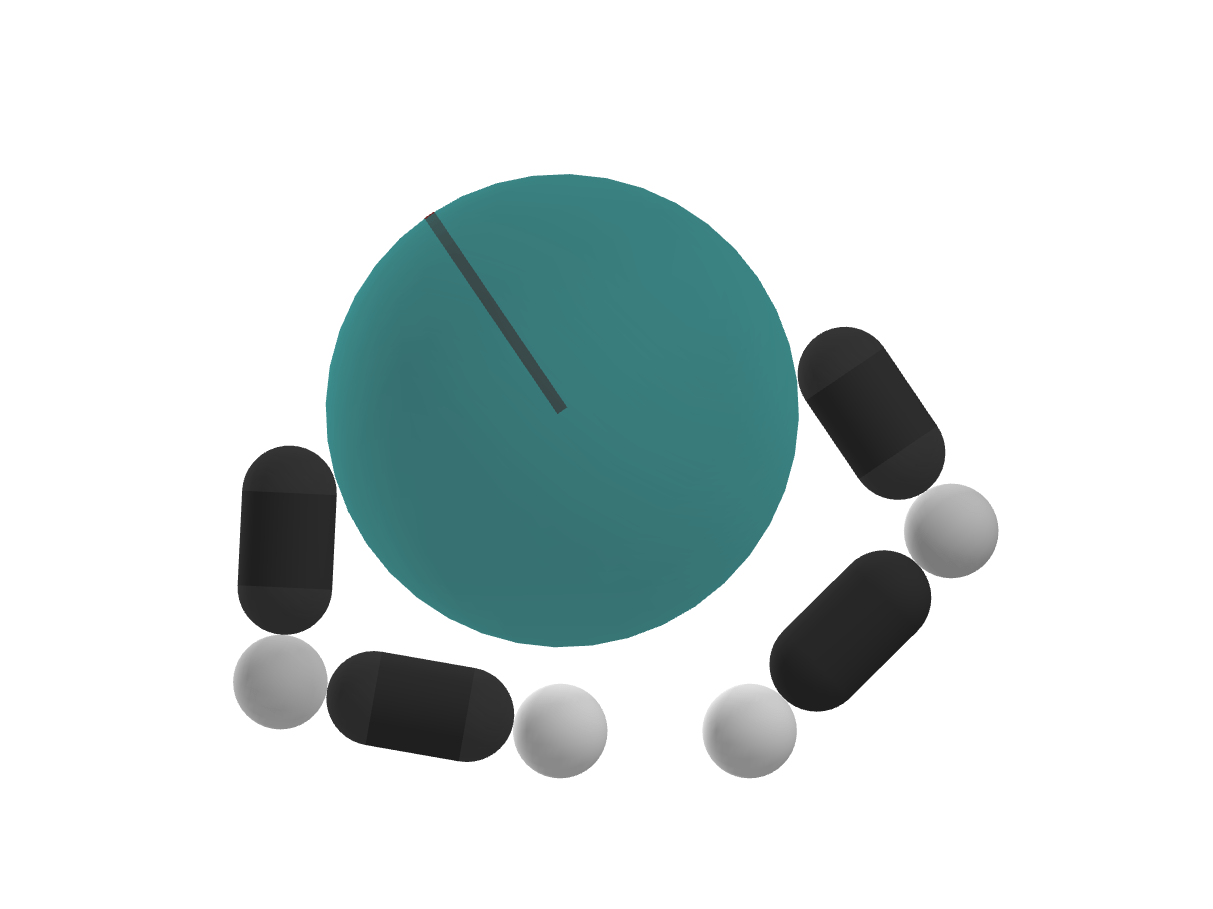

What is manipulation?

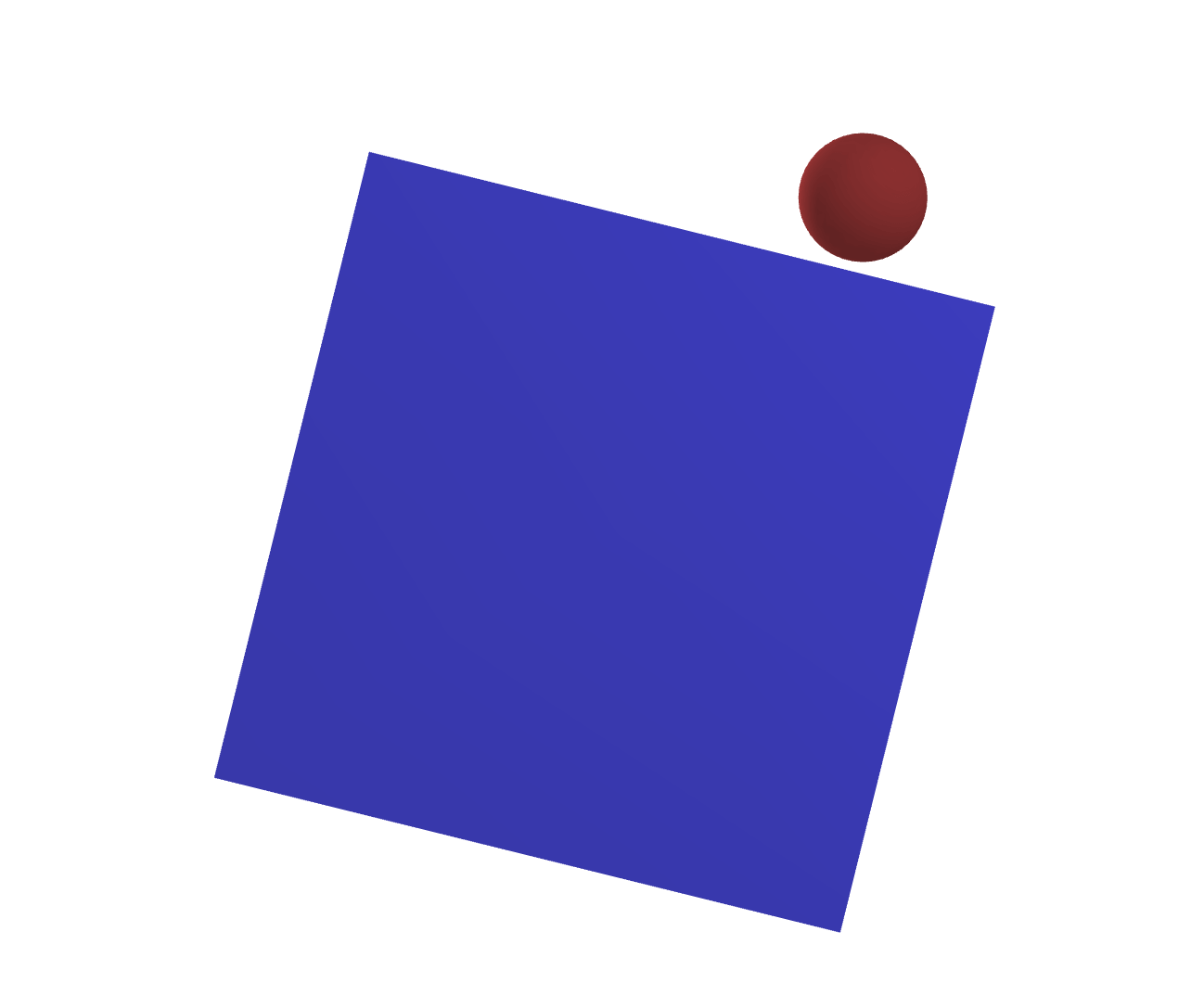

Rigid-body manipulation: Move an object from pose A to pose B.

A

B

How hard could this be? Just pick and place!

Beyond pick & place

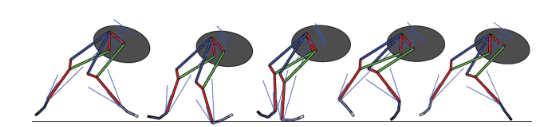

[DRPTSE 2016]

[ZH 2022]

[SKPMAT 2022]

[JTCCG 2014]

[PSYT 2022]

[ST 2020]

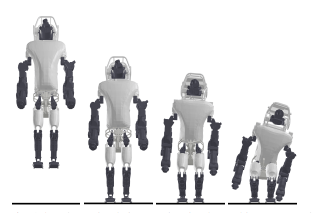

How have we been making progress?

Some amazing progress has been made with RL / IL.

Reinforcement Learning

Imitation Learning

[OpenAI 2018]

[CFDXCBS 2023]


But how do we generalize and scale to the complexity of manipulation?

Generalization vs. Specialization

Specialization

Generalization

RL / IL are specialists!

- Extremely good at solving one task

- Long turnaround time for new skill acquisition

Fixed object + Few Goals

- What if we want different goals?

- What if the object shape changes?

- What if I have a different hand?

- What if I have a different environment?

How do we generalize?

Collect more data?

Secret Ingredient 1. Newton's Laws for Manipulation

Models Generalize!

An Example of Generalization in Manipulation

How can we build "Newton's Laws" for contact-rich manipulation?

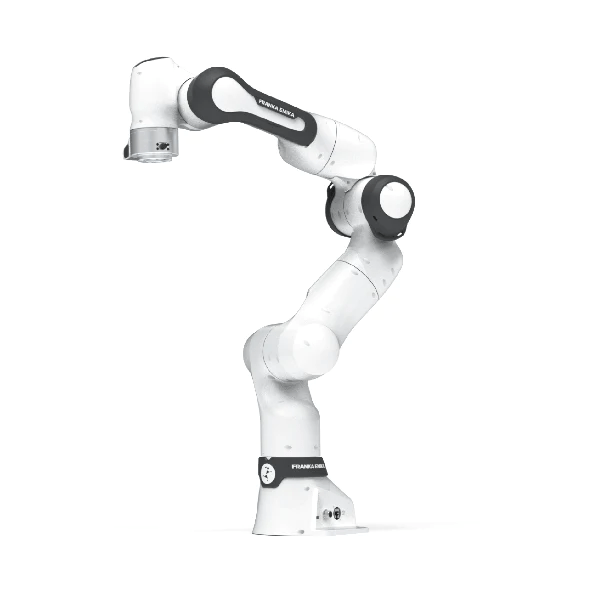

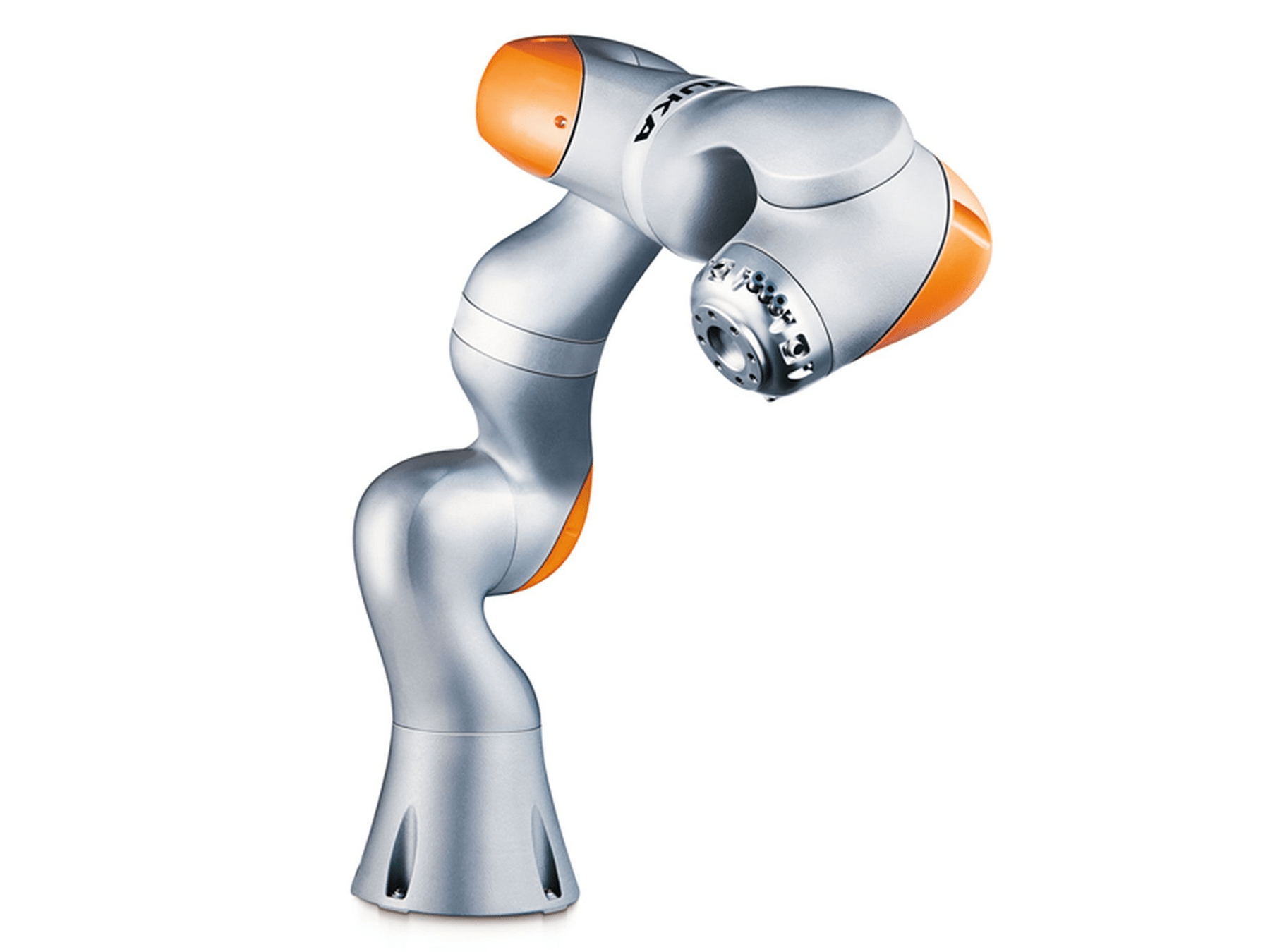

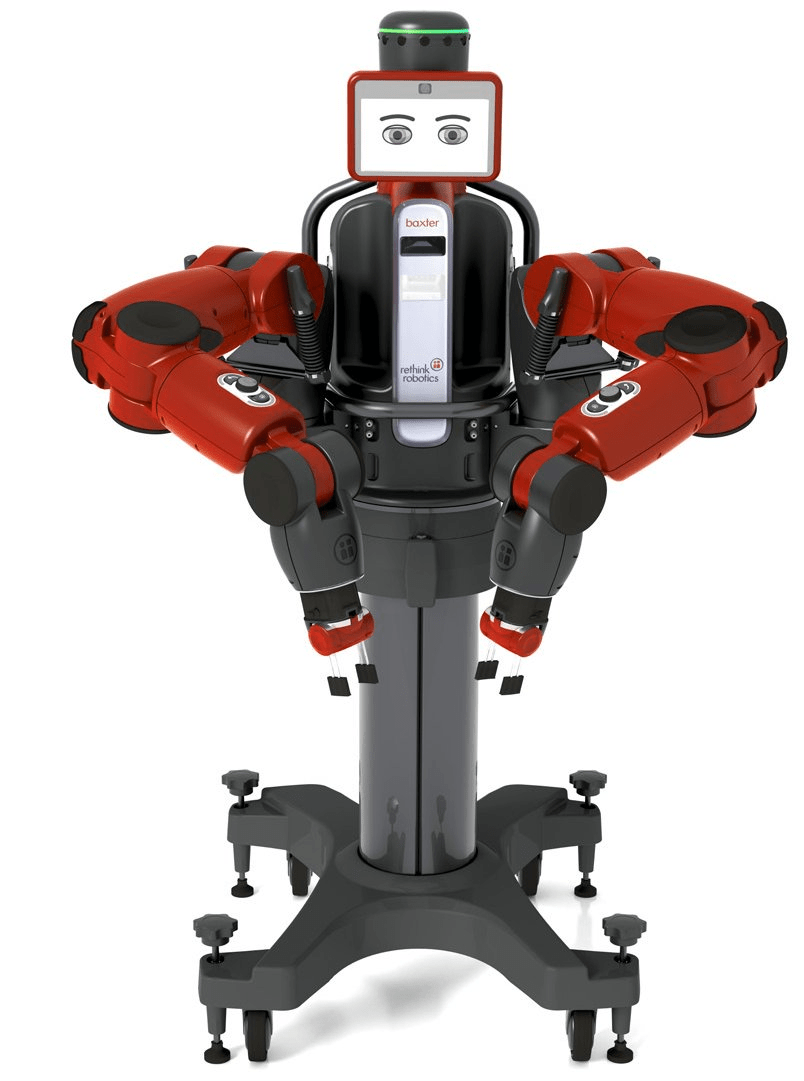

Differential Inverse Kinematics:

As long as you have the manipulator Jacobian, the same control strategy works for every arm.
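To make the Jacobian-based strategy concrete, here is a minimal sketch of differential inverse kinematics for a hypothetical planar two-link arm (the link lengths, gain, and time step are assumptions for illustration). The desired end-effector velocity is mapped to joint velocities through the Jacobian pseudoinverse; moving to a different arm would only change `forward_kinematics` and `jacobian`.

```python
import numpy as np

def forward_kinematics(theta, l1=1.0, l2=0.7):
    """End-effector (x, y) of a hypothetical planar two-link arm."""
    t1, t2 = theta
    return np.array([
        l1 * np.cos(t1) + l2 * np.cos(t1 + t2),
        l1 * np.sin(t1) + l2 * np.sin(t1 + t2),
    ])

def jacobian(theta, l1=1.0, l2=0.7):
    """Manipulator Jacobian d(x, y) / d(theta1, theta2)."""
    t1, t2 = theta
    return np.array([
        [-l1 * np.sin(t1) - l2 * np.sin(t1 + t2), -l2 * np.sin(t1 + t2)],
        [ l1 * np.cos(t1) + l2 * np.cos(t1 + t2),  l2 * np.cos(t1 + t2)],
    ])

def diff_ik_step(theta, x_goal, gain=1.0, dt=0.05):
    """One differential IK step: desired end-effector velocity -> joint velocities."""
    v_desired = gain * (x_goal - forward_kinematics(theta))
    theta_dot = np.linalg.pinv(jacobian(theta)) @ v_desired
    return theta + dt * theta_dot

theta = np.array([0.3, 0.8])
x_goal = np.array([0.9, 0.9])
for _ in range(500):
    theta = diff_ik_step(theta, x_goal)
print(np.round(forward_kinematics(theta), 3))
```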

Secret Ingredient 2. Efficient Search for Manipulation


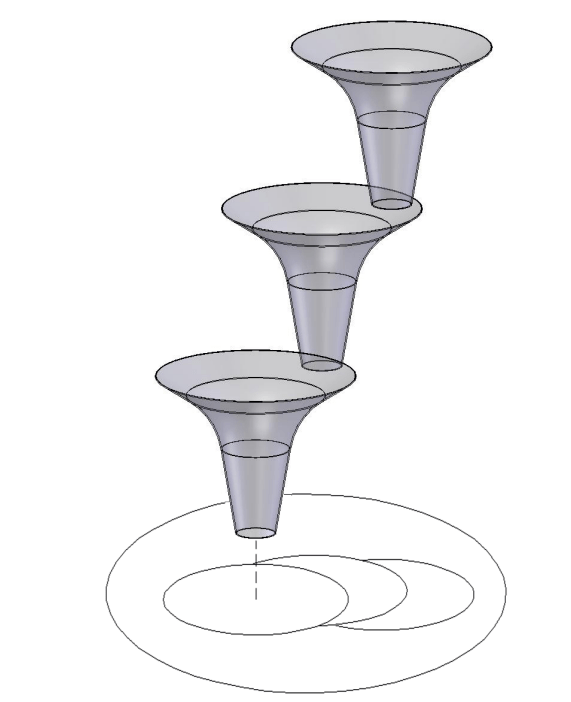

Search allows for combinatorial generalization.

Inference

Offline Computation

Search

Online Computation

Specialization

Generalization

RL / IL

Search

Not Mutually Exclusive!

They beautifully help each other.

MuZero

[DeepMind 2019]

Search allows for better exploration and data generation for inference.

Feedback Motion Planning

[Tedrake 2009]

Inference allows for hierarchies that can narrow down the search space.


Story of how we've built this capability

What about different goals, shapes, environments, and tasks?

Newton's Laws for Manipulation

Efficient Search

How do we generalize?

Status Quo

Keep collecting data.

We're willing to spend a huge amount of offline time, but inference time (online) will be short.

Give me a small amount of online time (on the order of a minute), and I'll give you the answer.

Our Proposal.

Overview of the Talk

Chapter 1. Introduction

Chapter 2. What makes contact hard?

Chapter 3. What makes RL so good?

Chapter 4. Bringing lessons from RL

Chapter 5. Efficient Global Search

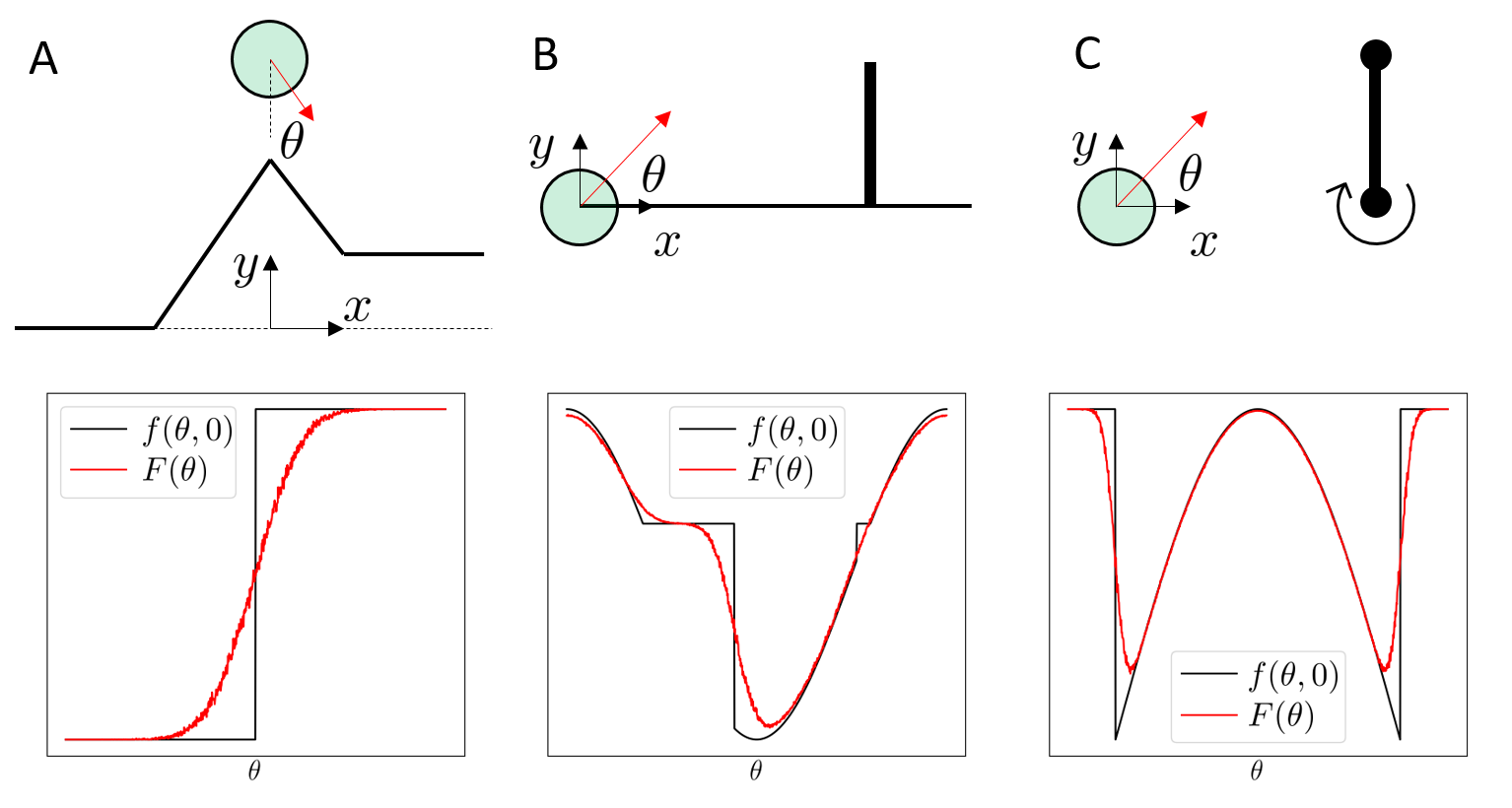

Chapter 2. What makes contact hard?

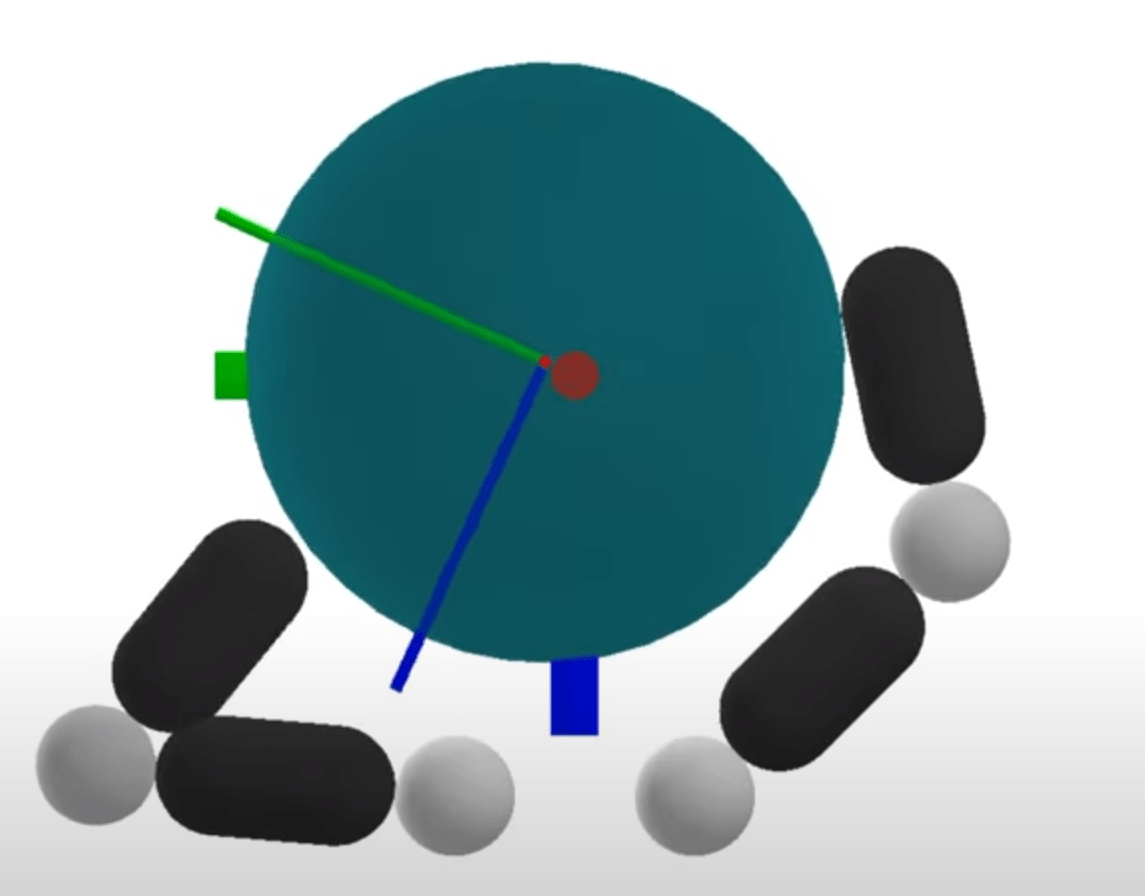

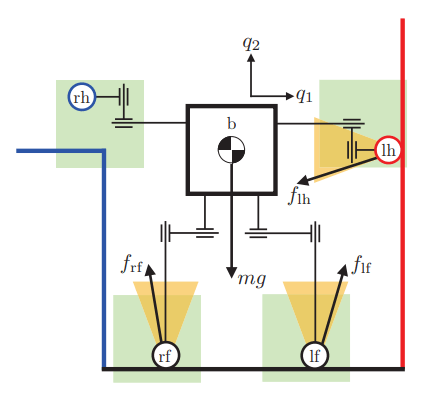

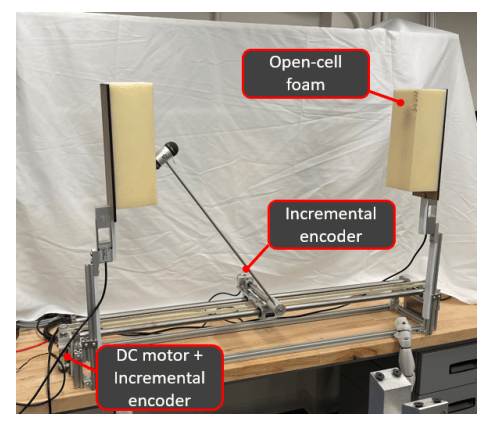

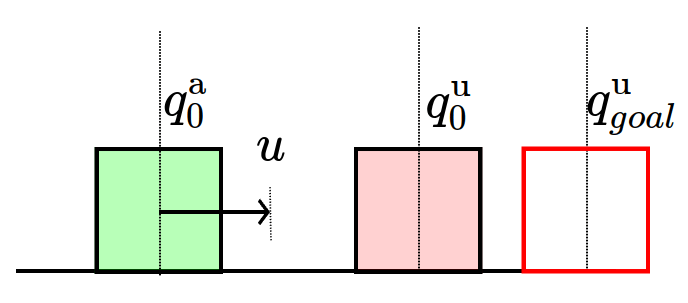

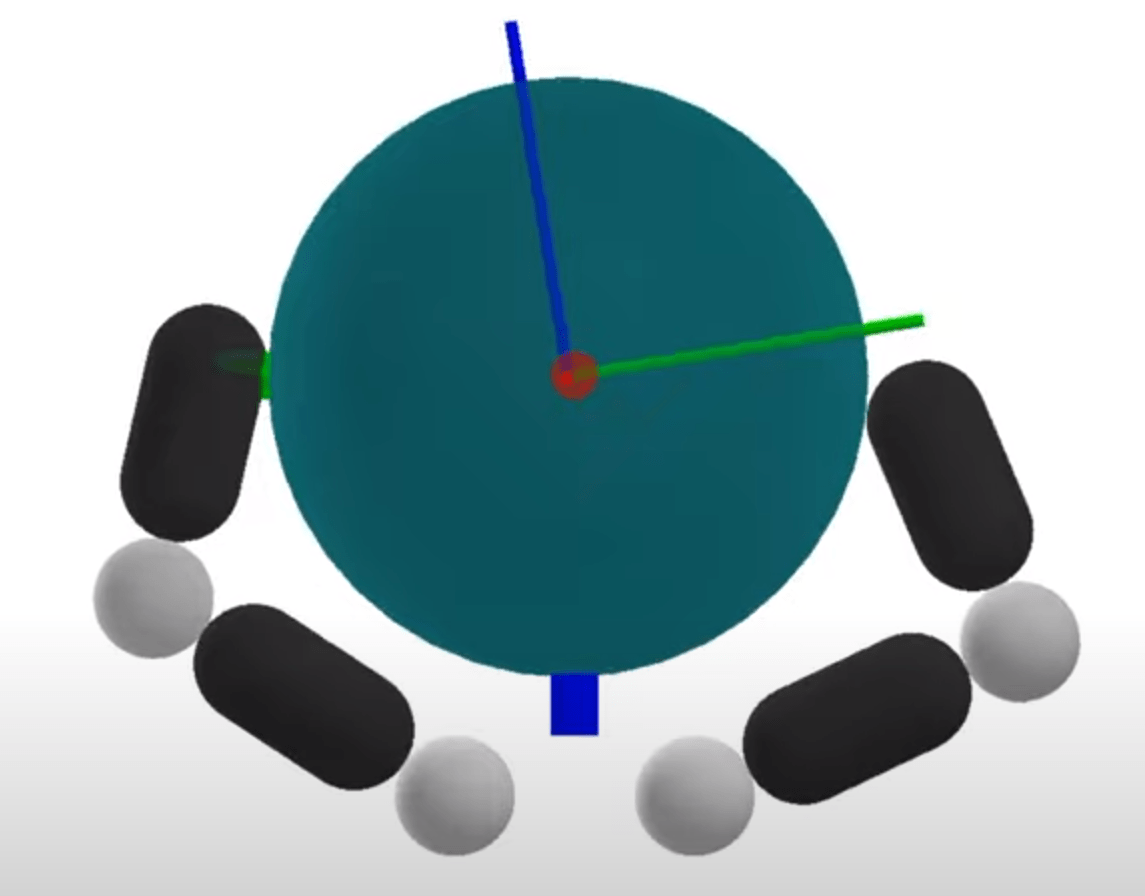

Planning through Contact: Problem Statement

System configuration as $q$

- Actuated degrees-of-freedom (Robot)

- Unactuated degrees-of-freedom (Object)

Robot commands as $u$

System Dynamics: $q_{t+1} = f(q_t, u_t)$

We will assume quasistatic dynamics: velocities are very small in magnitude.

Find a sequence of actions and configurations that drives the object to the goal configuration.

Solution Desiderata

Efficient Global Planning for

Highly Contact-Rich Systems

Fast Solution Time

Beyond Local Solutions

Contact Scalability

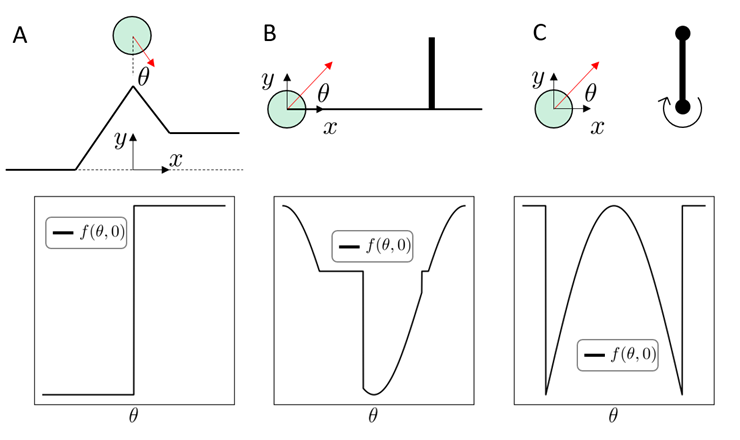

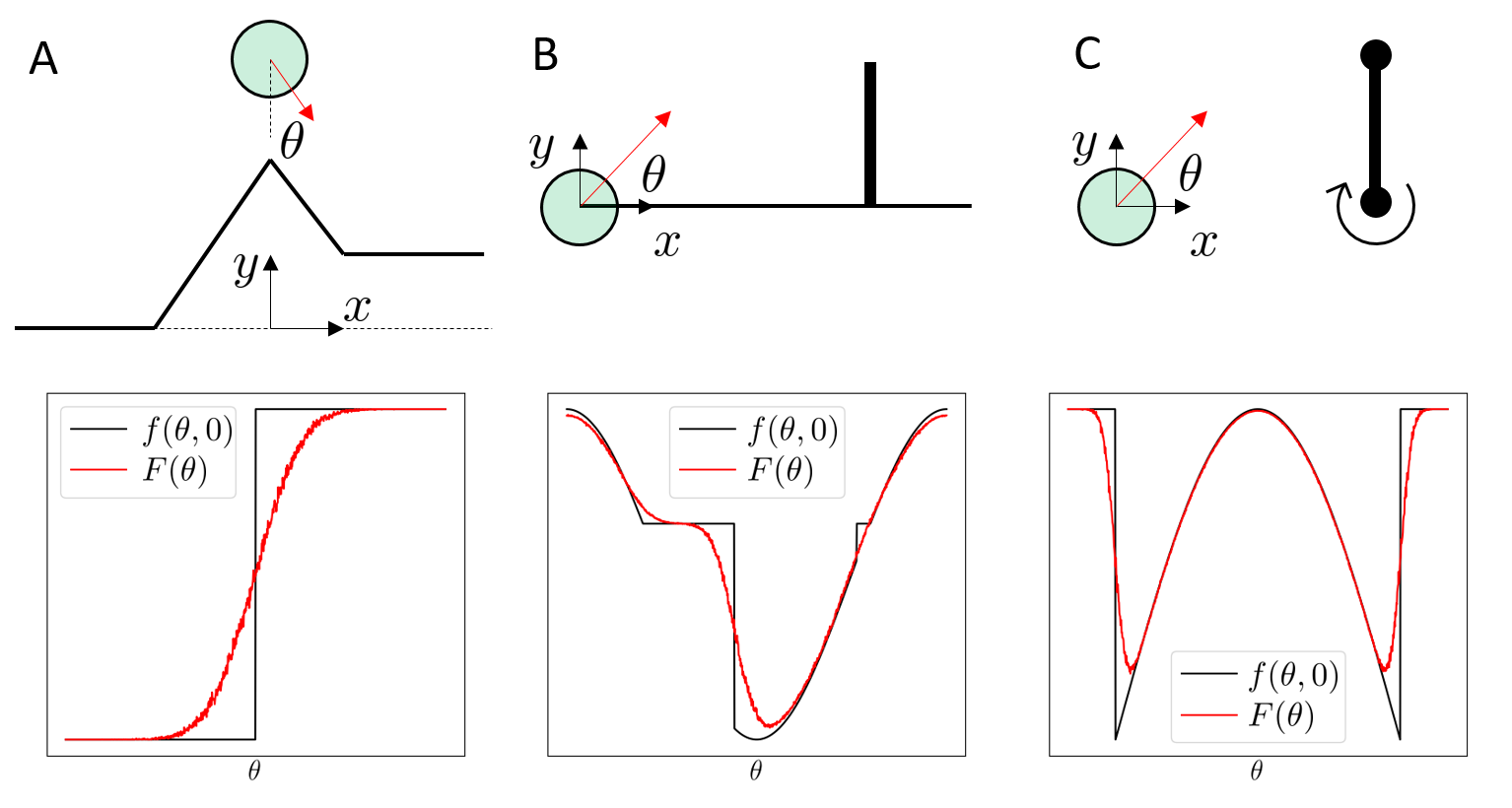

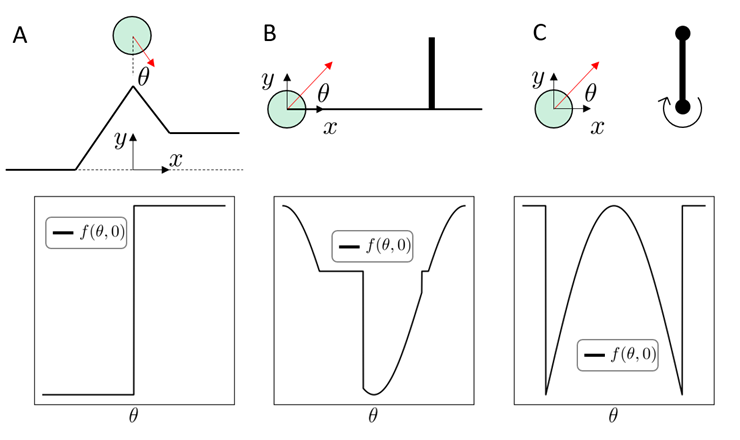

Why is this problem difficult?

To get some intuition, let's simplify the problem a bit!

Simplifying the Problem

Original Problem

Simplified Problem

- Horizon 1

- Penalty on Terminal Constraint

Equivalent to $\min_{u} \lVert f(q_0, u) - q_{\mathrm{goal}} \rVert^2$, where $q_{t+1} = f(q_t, u_t)$ are the dynamics.

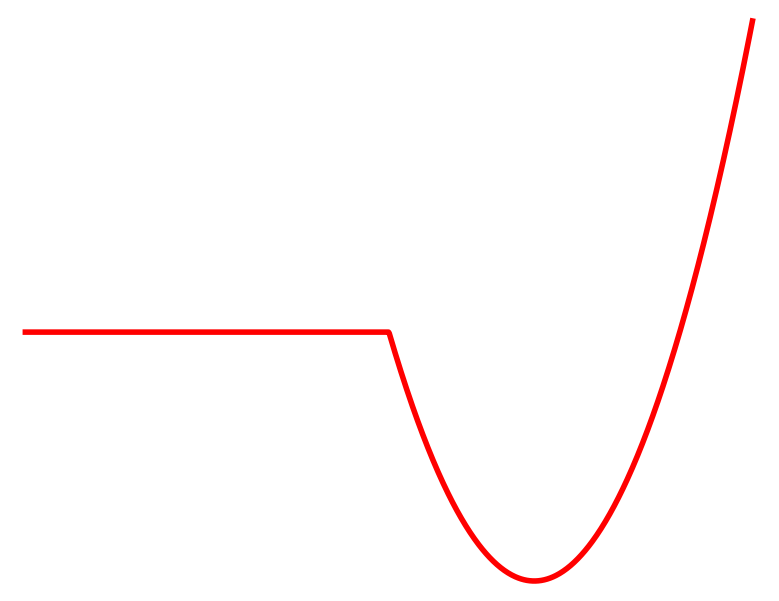

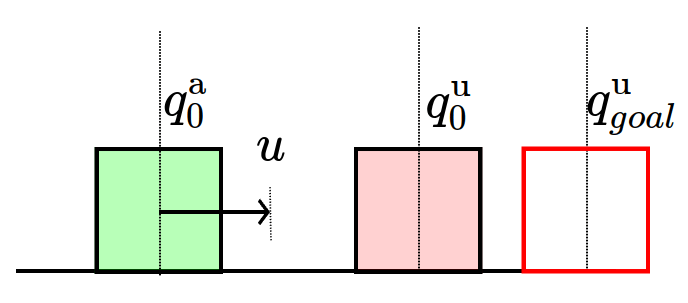

Toy Problem

Simplified Problem

Given some initial and goal configuration, which action minimizes distance to the goal configuration?

Toy Problem

Simplified Problem

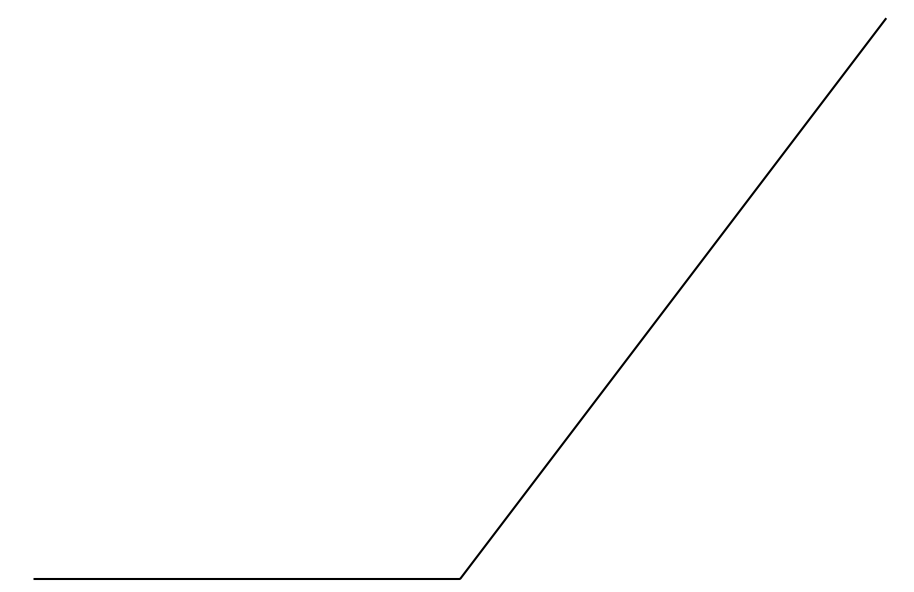

Consider simple gradient descent,

Toy Problem

Simplified Problem

Consider simple gradient descent,

Dynamics of the system

No Contact

Contact

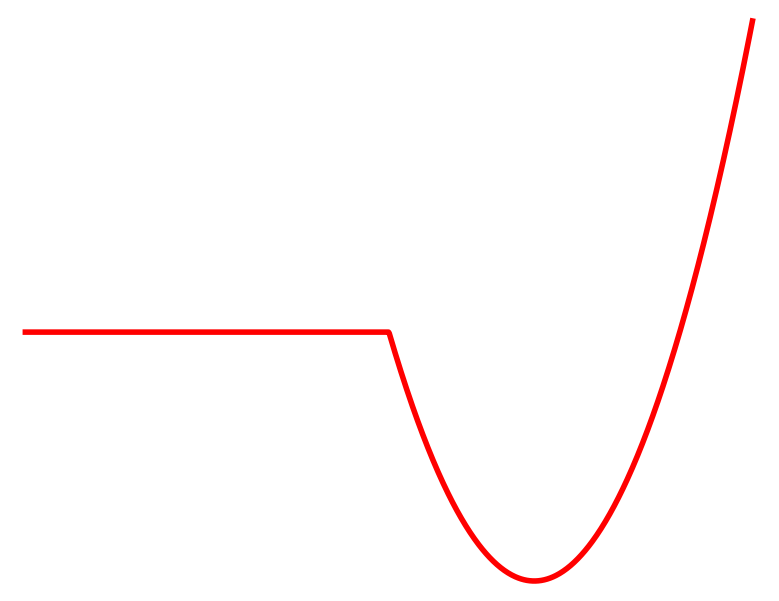

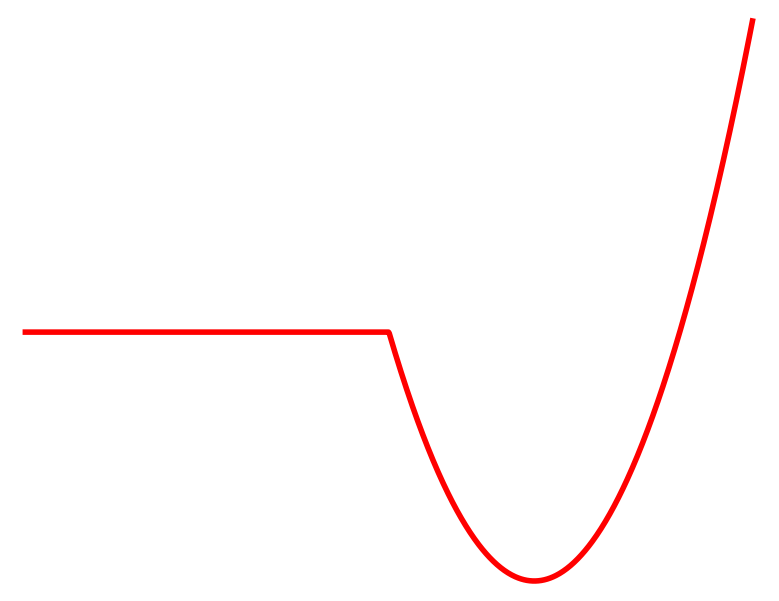

Toy Problem

Simplified Problem

Consider simple gradient descent,

Dynamics of the system

No Contact

Contact

The gradient is zero if there is no contact!

The gradient is zero if there is no contact!

Toy Problem

Simplified Problem

Consider simple gradient descent,

Dynamics of the system

No Contact

Contact

The gradient is zero if there is no contact!

The gradient is zero if there is no contact!

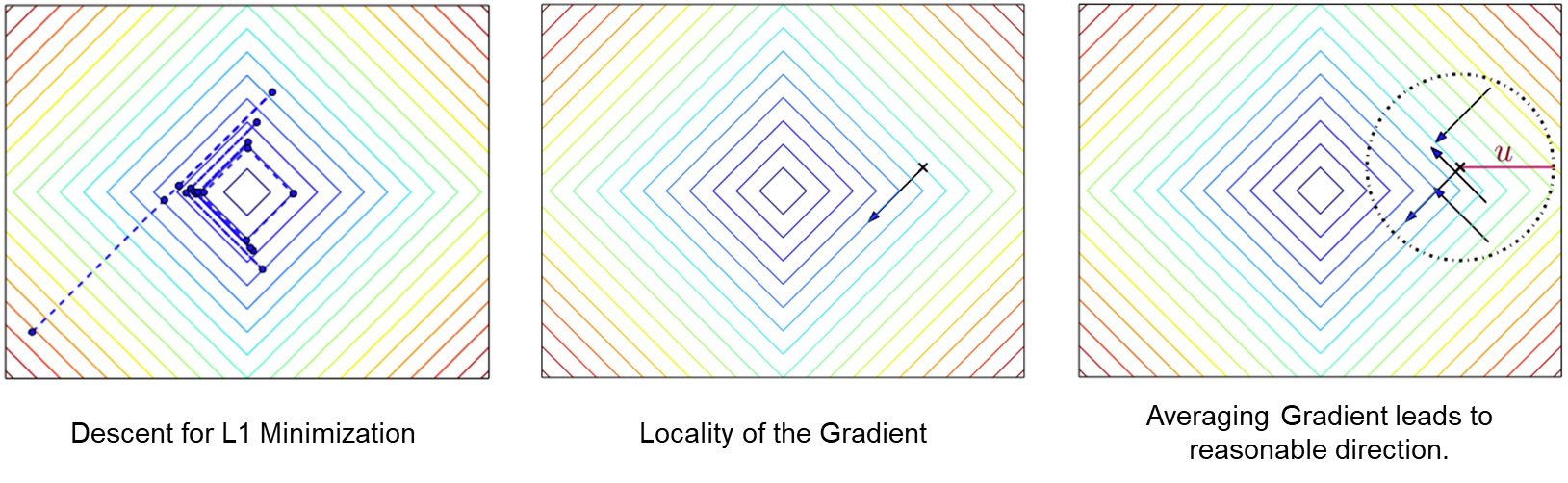

Local gradient-based methods easily get stuck due to the flat / non-smooth nature of the landscape
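The zero-gradient problem can be reproduced in a few lines. This sketch assumes a hypothetical 1D quasistatic pushing toy: a pusher commanded to position `u` moves a box (whose contact face starts at `X_BOX`) only if it passes it, so the box ends at `max(X_BOX, u)`. Gradient descent on the squared distance to `GOAL` converges when it starts in contact and never moves when it starts out of contact.

```python
X_BOX = 0.0   # hypothetical initial position of the box's contact face
GOAL = 1.0    # hypothetical goal position of the box

def dynamics(u):
    """Quasistatic 1D pushing: the box moves only if the pusher command u passes it."""
    return max(X_BOX, u)

def cost(u):
    return (dynamics(u) - GOAL) ** 2

def grad_cost(u):
    """Exact gradient; d(dynamics)/du is 1 in contact and 0 out of contact."""
    in_contact = u > X_BOX
    return 2.0 * (dynamics(u) - GOAL) * (1.0 if in_contact else 0.0)

def gradient_descent(u0, step=0.1, iters=200):
    u = u0
    for _ in range(iters):
        u -= step * grad_cost(u)
    return u

print(gradient_descent(0.5))   # starts in contact: converges to the goal
print(gradient_descent(-1.0))  # starts out of contact: gradient is zero, never moves
```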

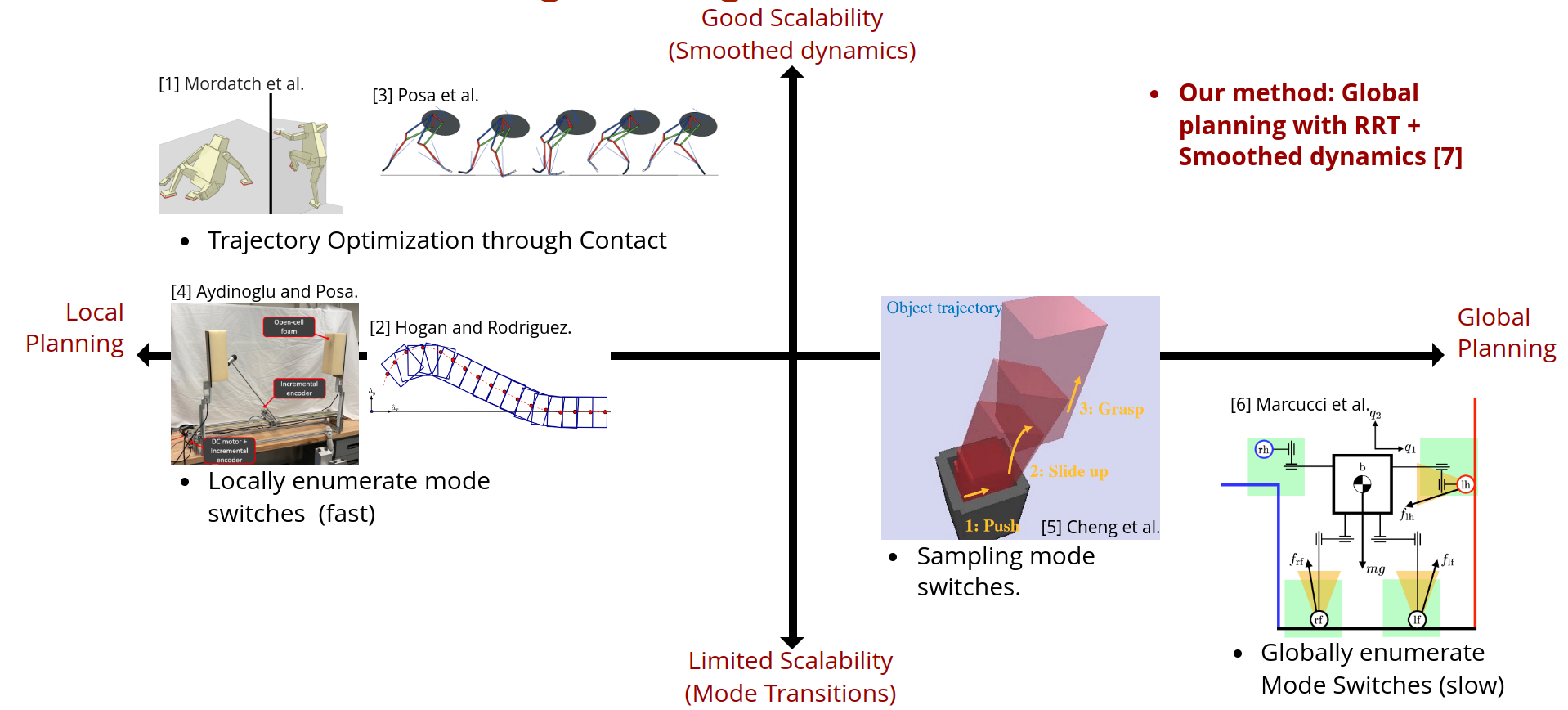

Previous Approaches to Tackling the Problem

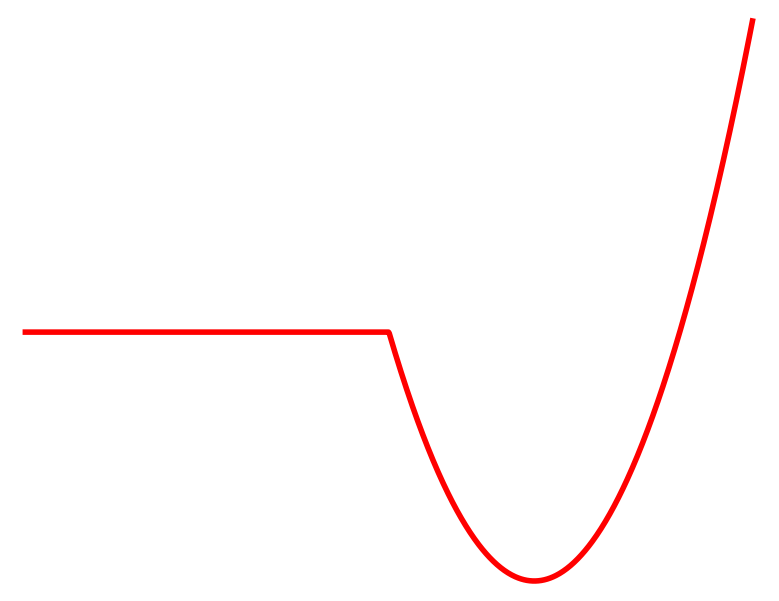

Why not search more globally, for a locally optimal action within each contact mode?

In the no-contact mode, run gradient descent.

In the contact mode, run gradient descent.

Contact

No Contact

Cost

Mixed Integer Programming

Mode Enumeration

Active Set Approach

[MDGBT 2017]

[HR 2016]

[CHHM 2022]

[AP 2022]
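On the same hypothetical 1D pushing toy, mode enumeration can be sketched as follows: each mode fixes a smooth dynamics model and a feasible interval for the input, a local problem is solved per mode (here with projected gradient descent, an assumed choice), and the best result is kept. With two modes this is cheap; the total work grows with the number of modes.

```python
X_BOX, GOAL = 0.0, 1.0  # hypothetical box position and goal, as in the 1D pushing toy

# Each contact mode: (smooth dynamics valid in that mode, its derivative, feasible interval for u).
MODES = {
    "no_contact": (lambda u: X_BOX, lambda u: 0.0, (-10.0, X_BOX)),
    "contact":    (lambda u: u,     lambda u: 1.0, (X_BOX, 10.0)),
}

def solve_in_mode(f, df, bounds, u0, step=0.1, iters=200):
    """Projected gradient descent on (f(u) - GOAL)^2, restricted to the mode's interval."""
    lo, hi = bounds
    u = min(max(u0, lo), hi)
    for _ in range(iters):
        g = 2.0 * (f(u) - GOAL) * df(u)
        u = min(max(u - step * g, lo), hi)
    return u, (f(u) - GOAL) ** 2

def mode_enumeration(u0):
    """Solve one local problem per mode and keep the lowest-cost result."""
    results = {name: solve_in_mode(f, df, b, u0) for name, (f, df, b) in MODES.items()}
    best = min(results, key=lambda name: results[name][1])
    return best, results[best]

print(mode_enumeration(-1.0))
```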

Problems with Mode Enumeration

System

Number of Modes

No Contact

Sticking Contact

Sliding Contact

Number of potential active contacts

The number of modes scales terribly with system complexity: with several modes per contact (no contact, sticking, sliding), the number of mode combinations grows exponentially in the number of potential active contacts.

Mixed Integer Approaches

Efficient Global Planning for

Highly Contact-Rich Systems

Fast Solution Time

Beyond Local Solutions

Contact Scalability

Chapter 3. What makes RL so good?

Reinforcement Learning

Efficient Global Planning for

Highly Contact-Rich Systems

Fast Solution Time

Beyond Local Solutions

Contact Scalability

Why is RL so good?

Why are model-based planning methods not doing as well?

How does RL power through these problems?

Reinforcement Learning fundamentally considers a stochastic objective

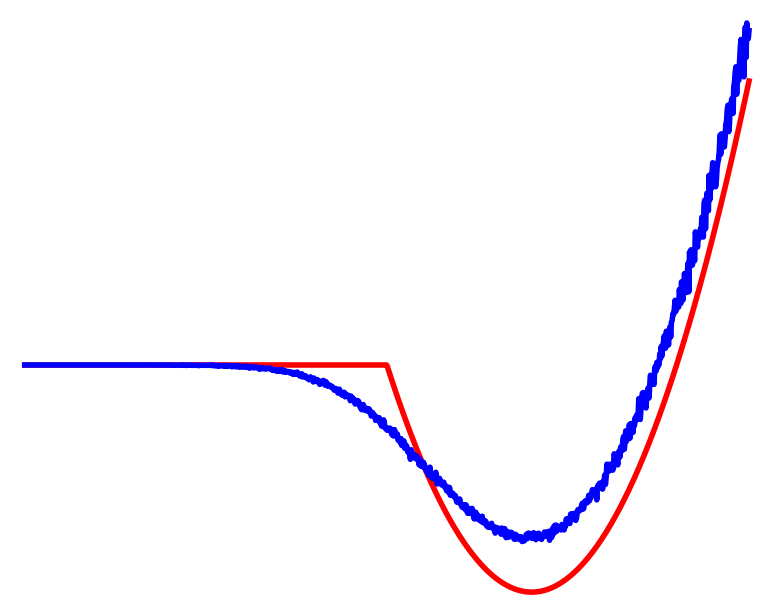

Previous Formulations

Reinforcement Learning

Contact

No Contact

Cost

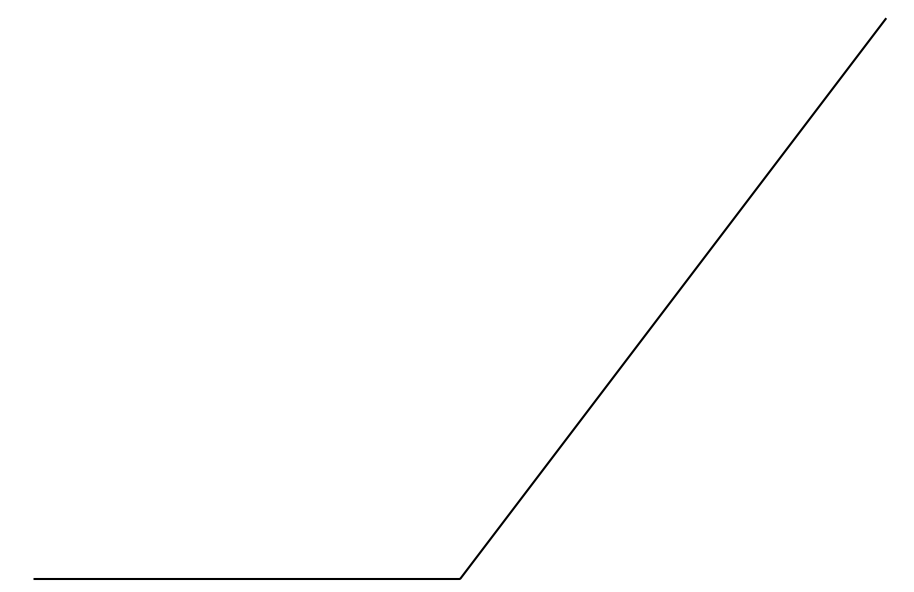

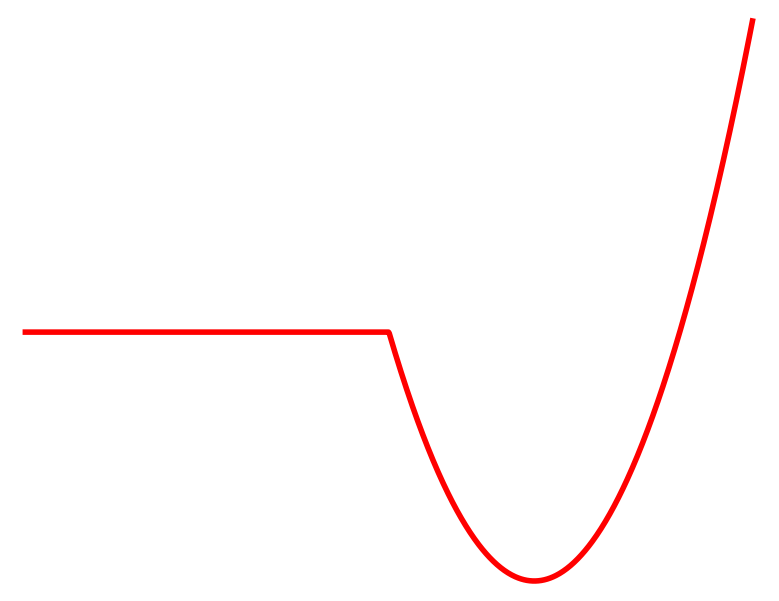


Contact

No Contact

Averaged

Randomized smoothing regularizes landscapes.

Cost

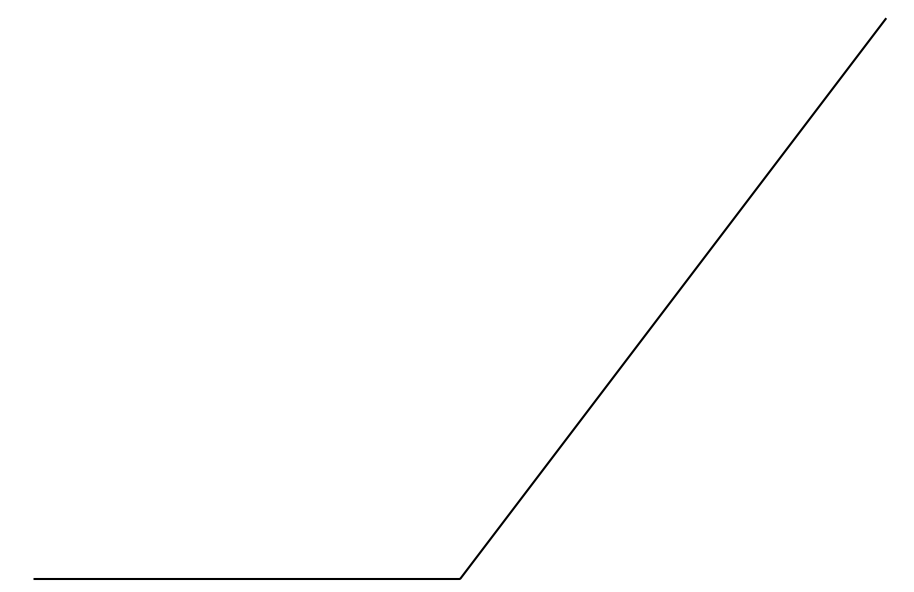


Some samples end up in contact, some samples do not!

No explicit consideration of contact modes is needed.
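Randomized smoothing removes the flat region of the toy problem without reasoning about modes. This sketch (same hypothetical 1D pushing toy; the noise scale `SIGMA`, step size, and sample counts are assumptions) estimates the gradient of the smoothed cost with a zeroth-order estimator: samples that happen to land in contact pull the action toward the box even when the unperturbed action is out of contact. Note that the smoothed optimum is biased away from the exact solution.

```python
import numpy as np

X_BOX, GOAL = 0.0, 1.0   # hypothetical box position and goal (1D pushing toy)
SIGMA = 1.0              # assumed std of the Gaussian smoothing noise
rng = np.random.default_rng(0)

def cost(u):
    """Vectorized cost: the box moves to max(X_BOX, u); penalize distance to GOAL."""
    return (np.maximum(X_BOX, u) - GOAL) ** 2

def smoothed_grad(u, n=1000):
    """Zeroth-order (score-function) estimate of d/du E_w[cost(u + w)], w ~ N(0, SIGMA^2).
    Subtracting cost(u) as a baseline reduces variance without adding bias."""
    w = rng.normal(0.0, SIGMA, size=n)
    return np.mean((cost(u + w) - cost(u)) * w) / SIGMA**2

u = -1.0  # out of contact: the exact gradient here is zero
print("smoothed gradient at u=-1:", smoothed_grad(u, n=100_000))
for _ in range(200):
    u -= 0.5 * smoothed_grad(u)
print("final u:", u)
```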


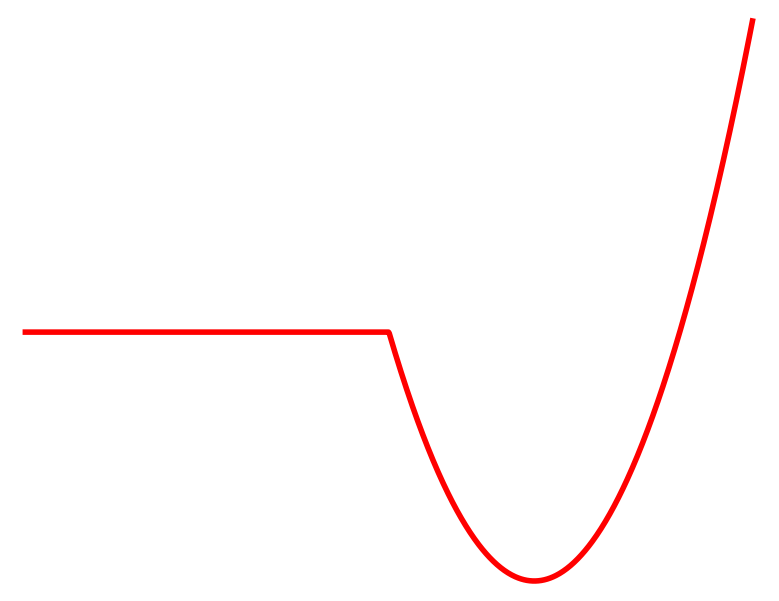

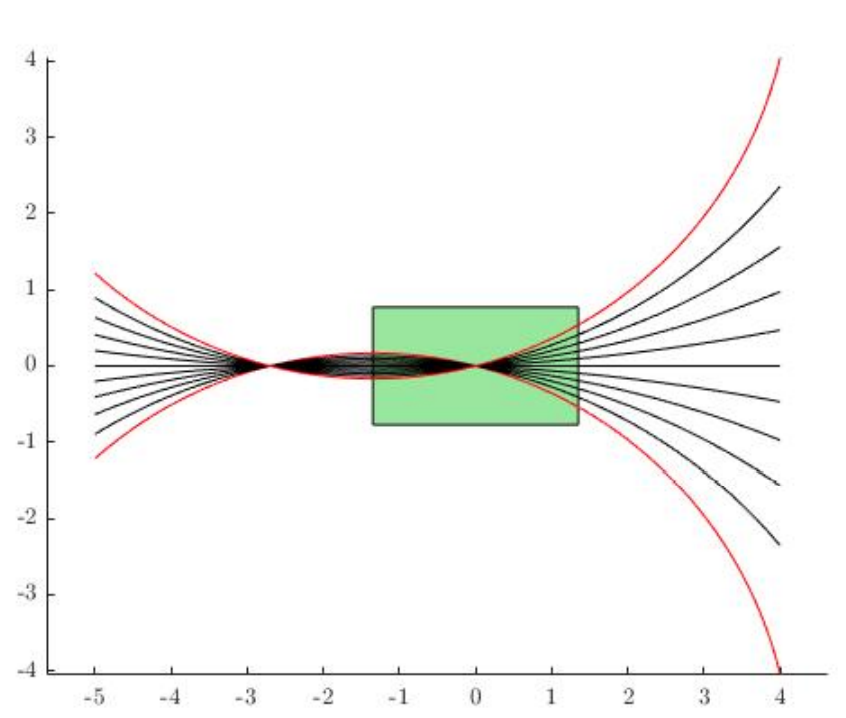

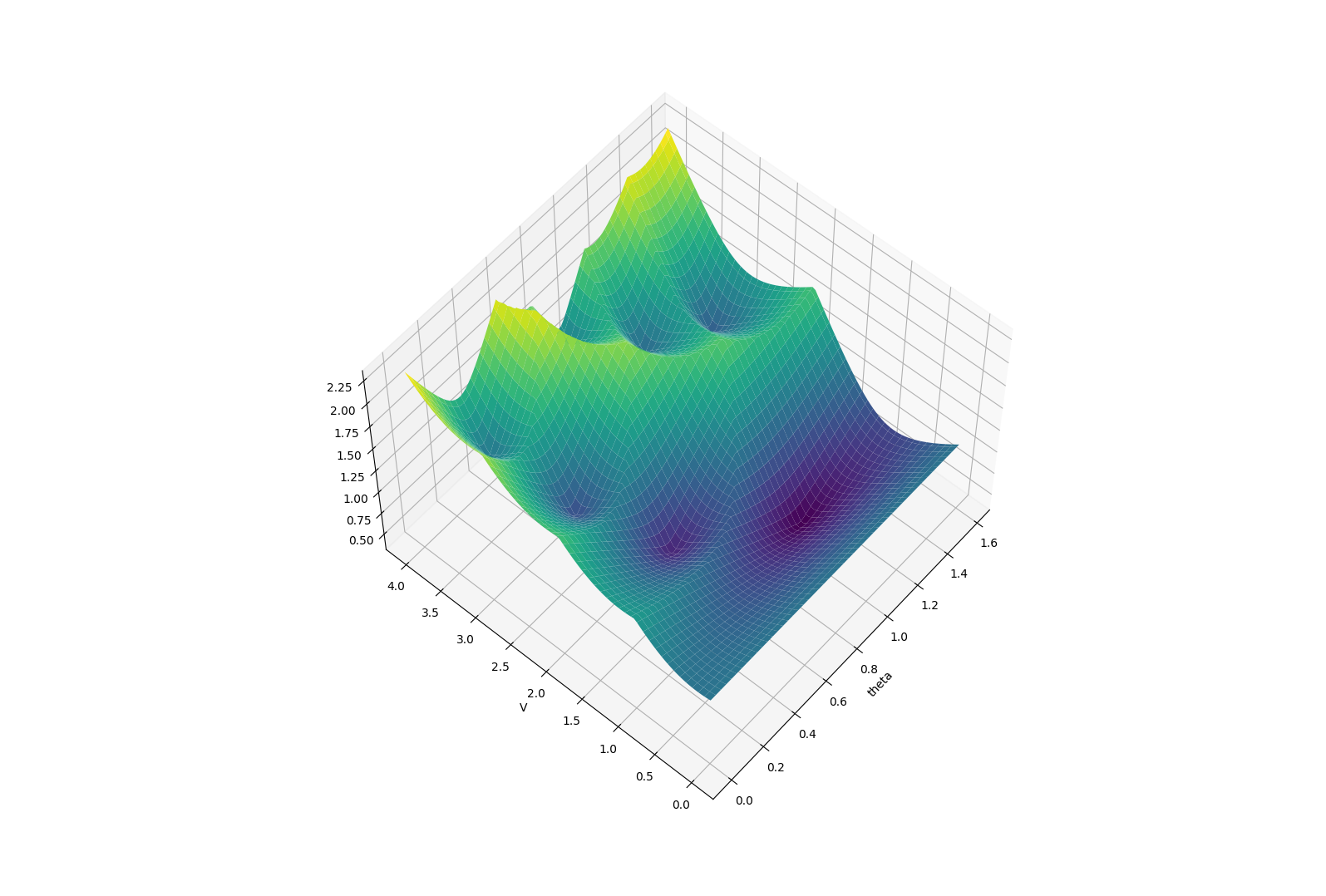

Original Problem

Long-horizon problems involving contact can have terrible landscapes.

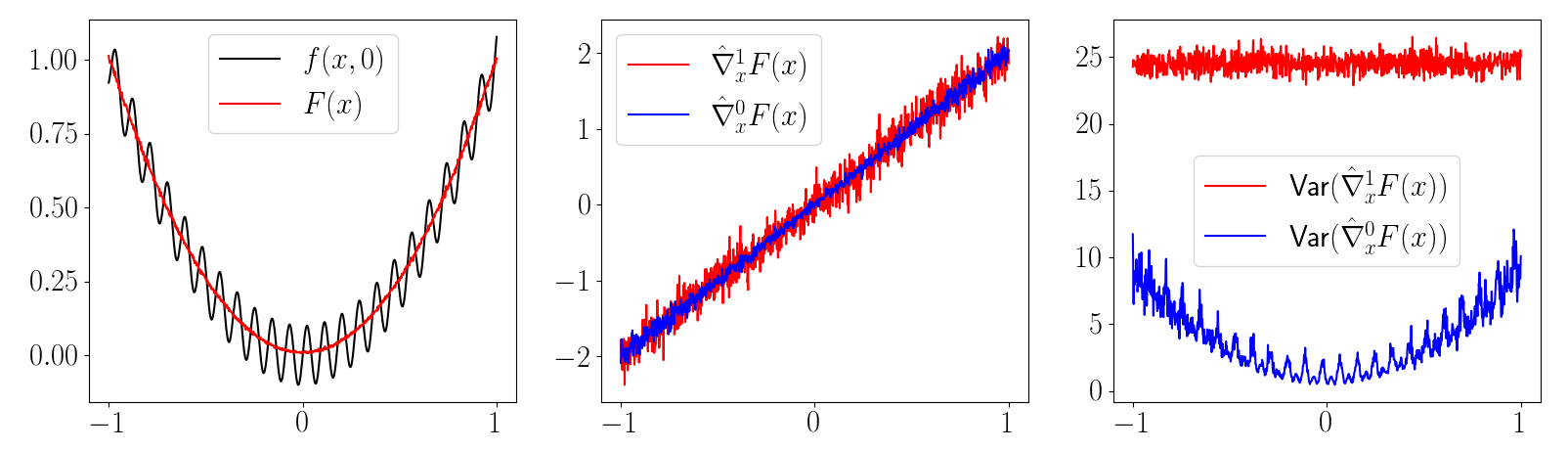

[SSZT 2022]

Smoothing of Value Functions.

Smooth Surrogate

The benefits of smoothing are much more pronounced in the value smoothing case.

A beautiful story: noise sometimes regularizes the problem and acts as a helpful bias.

Computation of Gradients

But how do we take gradients through a stochastic objective?

First-Order Gradient Estimator

Zeroth-Order Gradient Estimator

Reparametrization Gradient

Gradient Sampling

REINFORCE

Score Function Gradient

Likelihood Ratio Gradient

Stein Gradient Estimator
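For reference, a minimal sketch of the two estimator families for Gaussian noise $w \sim \mathcal{N}(0, \sigma^2 I)$, applied to the smoothed objective $F(x) = \mathbb{E}_w[f(x + w)]$. The test function, noise scale, and sample count are assumptions chosen so the true gradient ($2x$) is known in closed form. The zeroth-order version subtracts $f(x)$ as a baseline, a common variance-reduction choice that keeps it unbiased because $\mathbb{E}[w] = 0$.

```python
import numpy as np

def first_order_estimate(grad_f, x, sigma, n, rng):
    """Reparametrization / first-order estimate: average of sampled gradients."""
    w = rng.normal(0.0, sigma, size=(n, x.size))
    return np.mean([grad_f(x + wi) for wi in w], axis=0)

def zeroth_order_estimate(f, x, sigma, n, rng):
    """Score-function / REINFORCE estimate for Gaussian noise: E[(f(x + w) - f(x)) w] / sigma^2."""
    w = rng.normal(0.0, sigma, size=(n, x.size))
    values = np.array([f(x + wi) for wi in w]) - f(x)   # baseline f(x) reduces variance
    return (values[:, None] * w).mean(axis=0) / sigma**2

# Smooth test function: F(x) = |x|^2 + d sigma^2, so the true gradient is 2x.
f = lambda x: np.sum(x**2)
grad_f = lambda x: 2.0 * x

rng = np.random.default_rng(0)
x = np.array([1.0, -2.0])
g1 = first_order_estimate(grad_f, x, sigma=0.1, n=10_000, rng=rng)
g0 = zeroth_order_estimate(f, x, sigma=0.1, n=10_000, rng=rng)
print(g1, g0)  # both approximate 2x = [2, -4]; g0 is noisier
```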

Common Lesson in Stochastic Optimization

Analytic Expression

First-Order Gradient Estimator

Zeroth-Order Gradient Estimator

- Requires differentiability over dynamics, reward, policy.

- Generally lower variance.

- Only requires zeroth-order oracle (value of f)

- High variance.

Structure Requirements

Performance / Efficiency

Possible in only a few cases

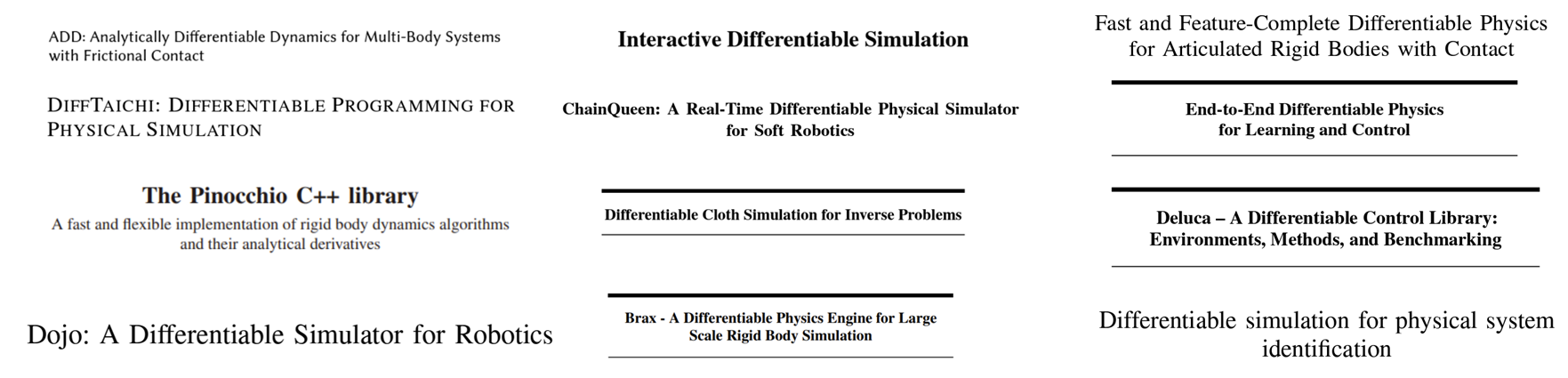

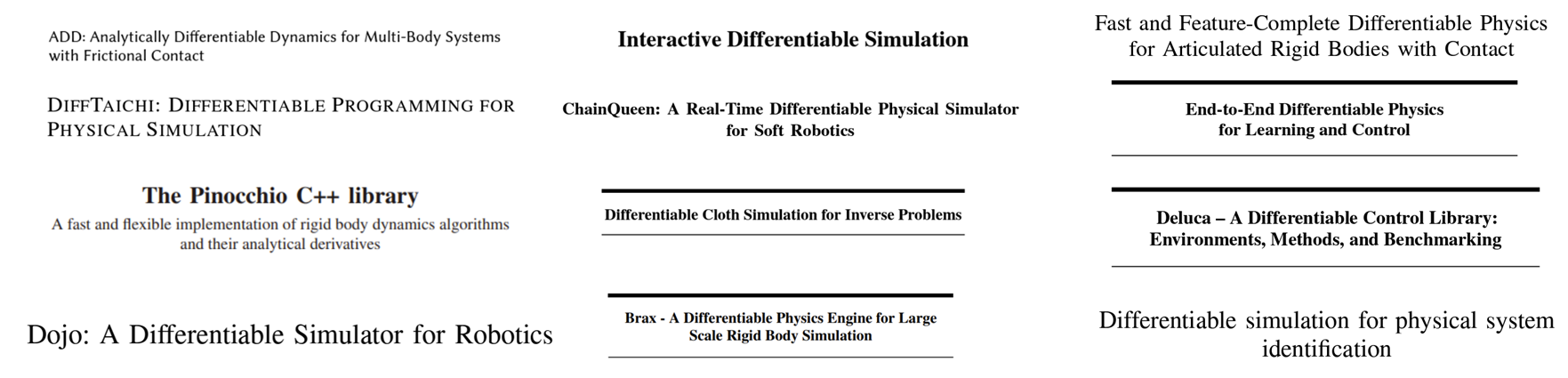

First-Order Policy Search with Differentiable Simulation

Policy Gradient Methods in RL (REINFORCE / TRPO / PPO)

- Requires differentiability over dynamics, reward, policy.

- Generally lower variance.

- Only requires zeroth-order oracle (value of f)

- High variance.

Structure Requirements

Performance / Efficiency

It turns out there is an important question hidden here about the utility of differentiable simulators.

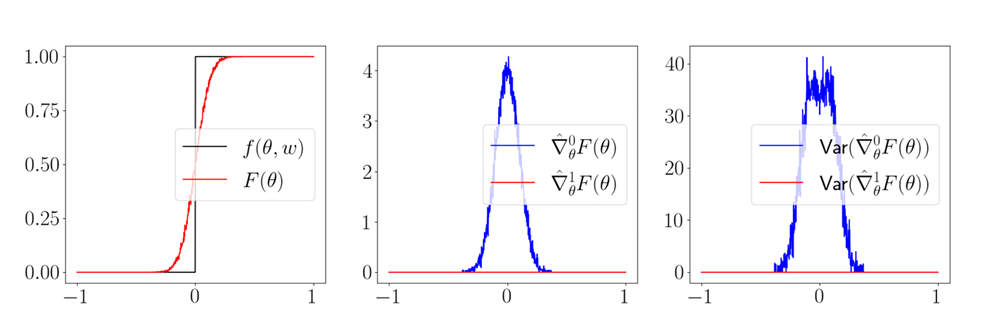

Do Differentiable Simulators Give Better Policy Gradients?

This is a very important question for RL, as differentiable simulators promise lower variance, faster convergence rates, and better sample efficiency.

What do we mean by better?

Bias

Variance

Common lesson from stochastic optimization:

1. Both are unbiased under sufficient regularity conditions

2. First-order generally has less variance than zeroth order.

We show two cases where the commonly accepted wisdom is not true.

Pathologies of Differentiable RL


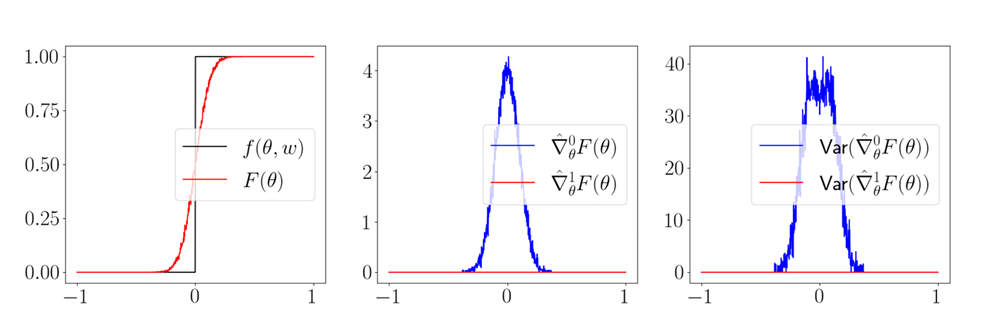

1st Pathology: First-Order Estimators CAN be biased.

2nd Pathology: First-Order Estimators can have MORE variance than zeroth-order.

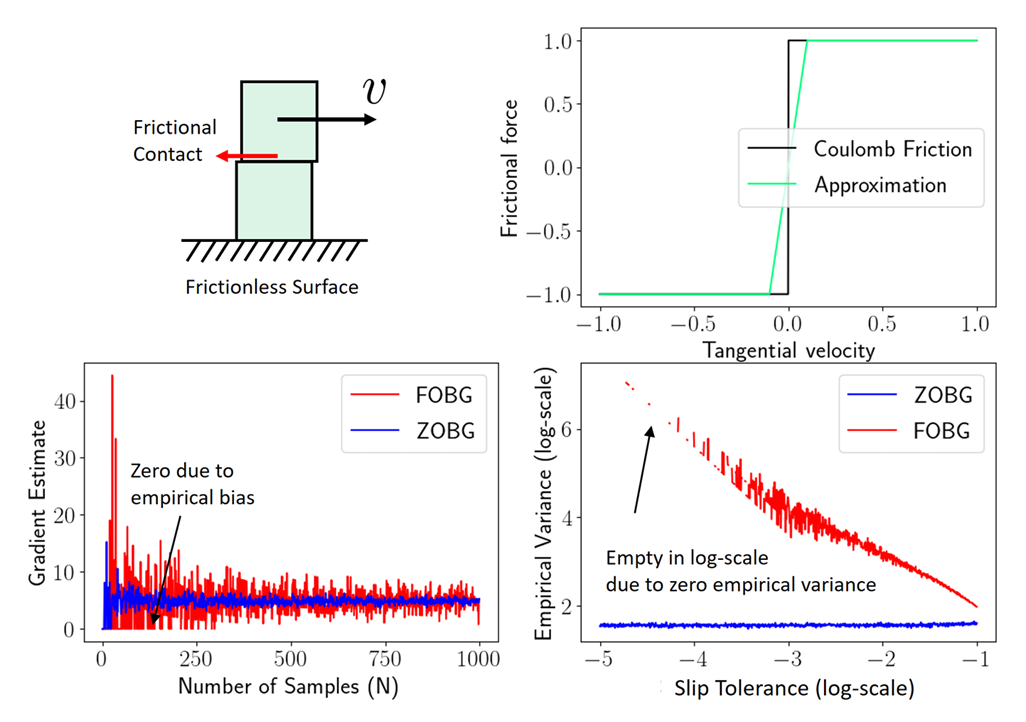

Bias from Discontinuities

1st Pathology: First-Order Estimators CAN be biased.

Note that the empirical variance of the first-order estimator is also zero!

Empirical Bias

Happens for near-discontinuities as well!

Scales with Gradient

Scales with Function Value

Scales with dimension of decision variables.
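A minimal sketch of the first pathology, assuming a unit step as a stand-in for a contact discontinuity and Gaussian noise with an assumed scale `SIGMA`: every sampled first-order gradient is zero, so the estimator reports zero with zero empirical variance, while the smoothed objective $\Phi(x/\sigma)$ has a strictly positive gradient that the zeroth-order estimator recovers.

```python
import math
import numpy as np

SIGMA = 0.5
rng = np.random.default_rng(0)

def f(z):
    """Step function: a stand-in for a contact discontinuity."""
    return np.where(z > 0.0, 1.0, 0.0)

def grad_f(z):
    """Pointwise derivative of the step: zero wherever it exists (everywhere except z = 0)."""
    return np.zeros_like(z)

x = 0.1
w = rng.normal(0.0, SIGMA, size=10_000)
first_order = grad_f(x + w)                            # per-sample first-order gradients
zeroth_order = (f(x + w) - f(x)) * w / SIGMA**2        # per-sample zeroth-order (with baseline)

true_grad = math.exp(-0.5 * (x / SIGMA) ** 2) / (SIGMA * math.sqrt(2 * math.pi))  # d/dx Phi(x/sigma)
print(f"true gradient {true_grad:.3f}")
print(f"first-order  mean {first_order.mean():.3f}  var {first_order.var():.3f}")
print(f"zeroth-order mean {zeroth_order.mean():.3f}  var {zeroth_order.var():.3f}")
```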

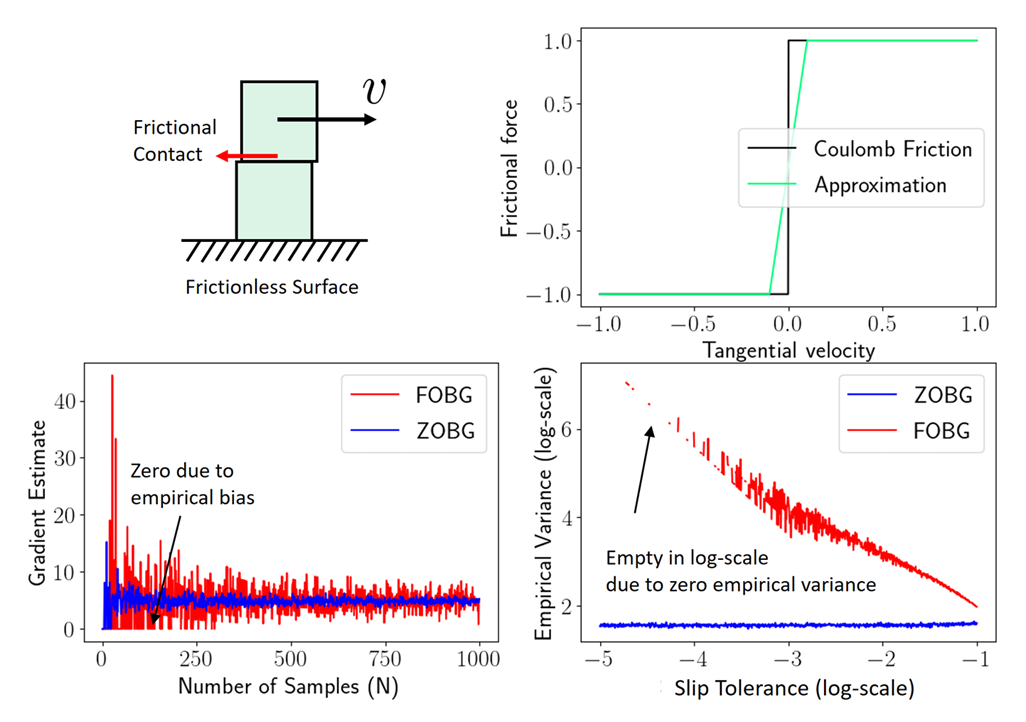

Variance of First-Order Estimators

2nd Pathology: First-order Estimators CAN have more variance than zeroth-order ones.

High-Variance Events

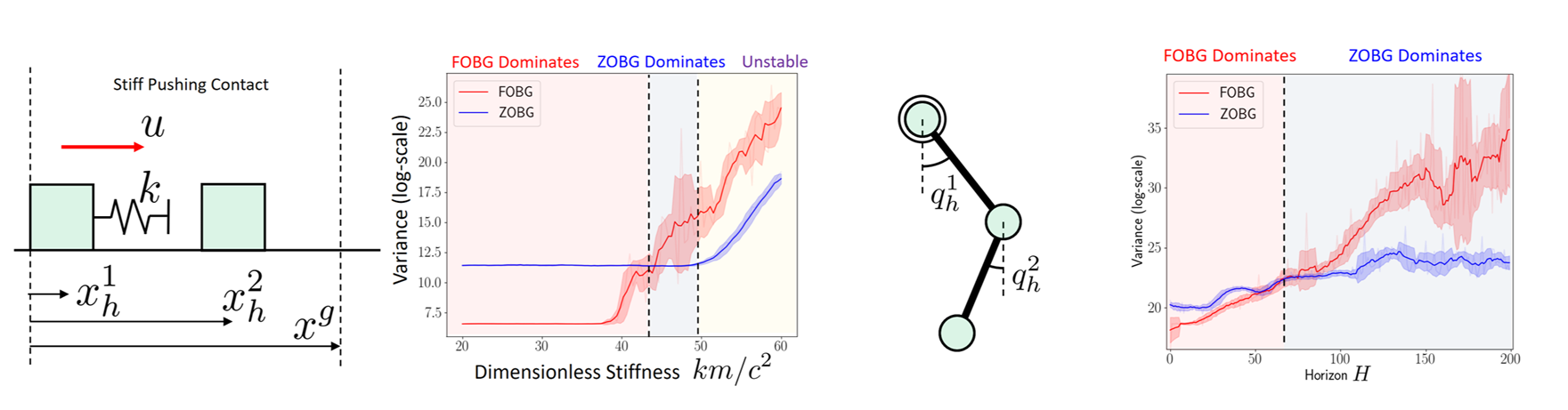

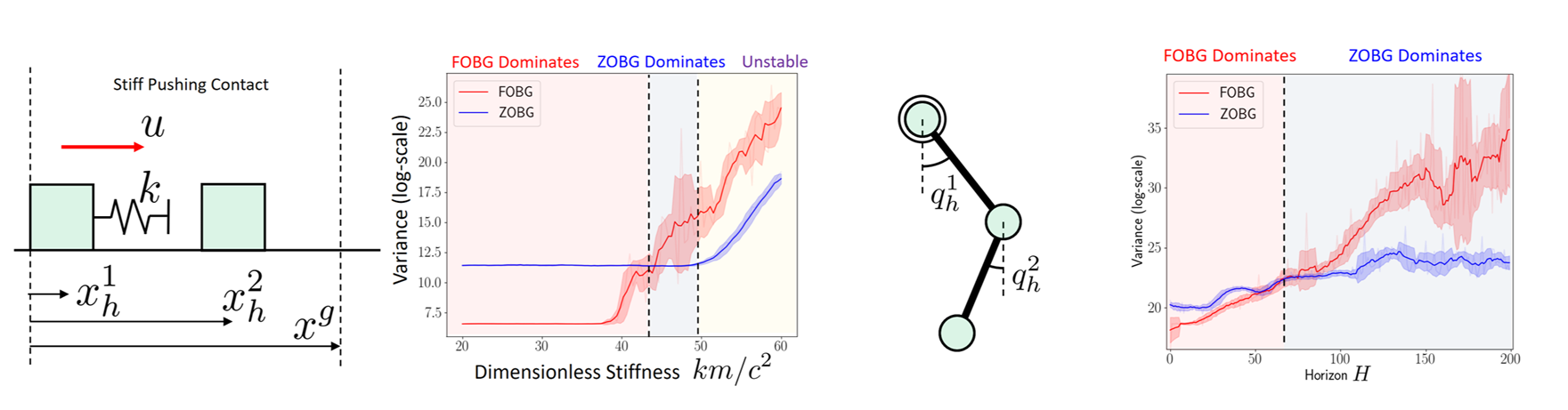

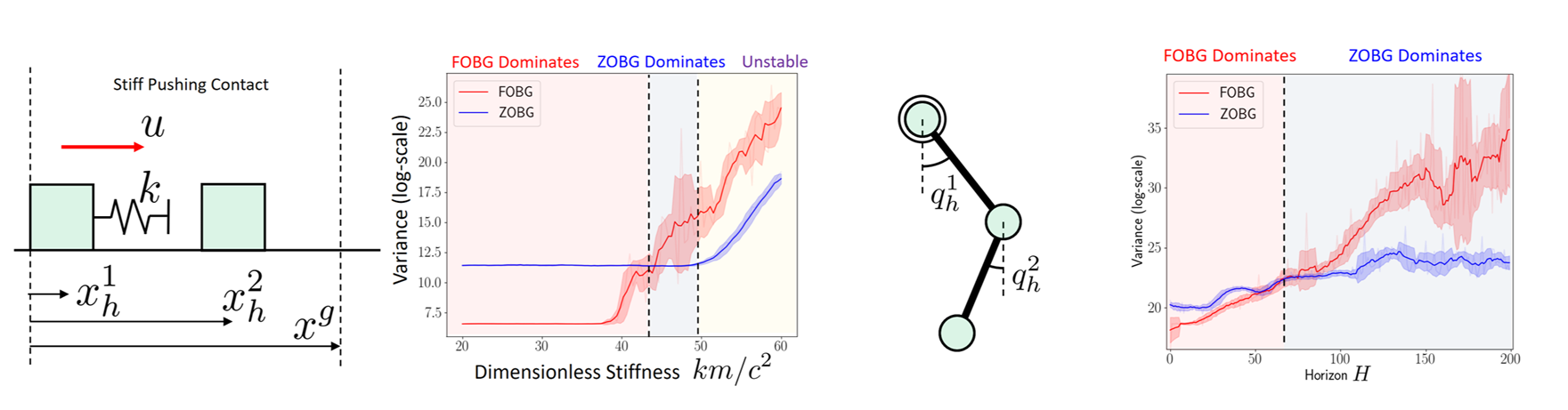

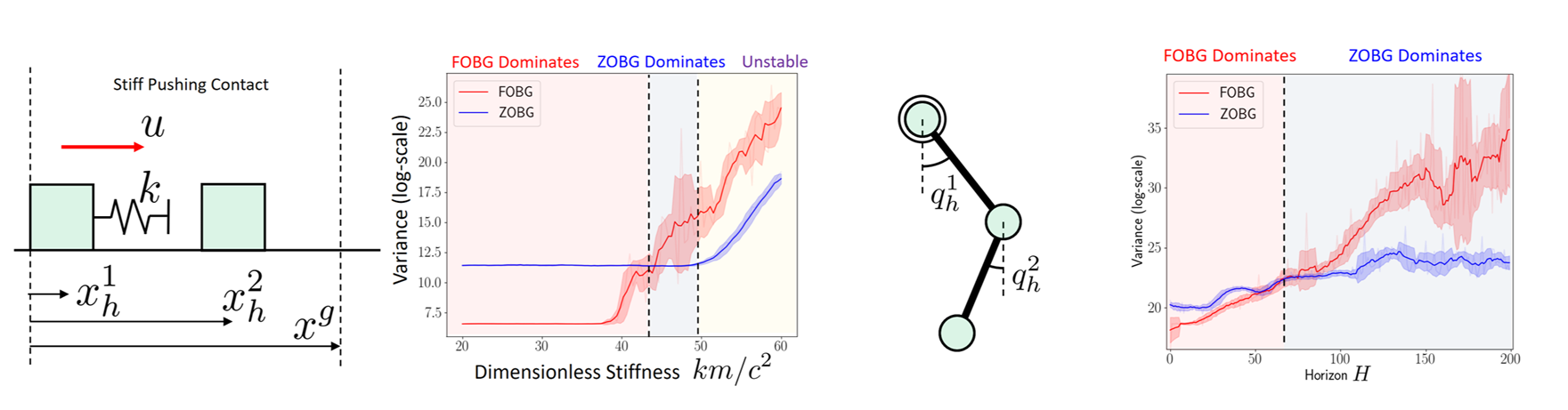

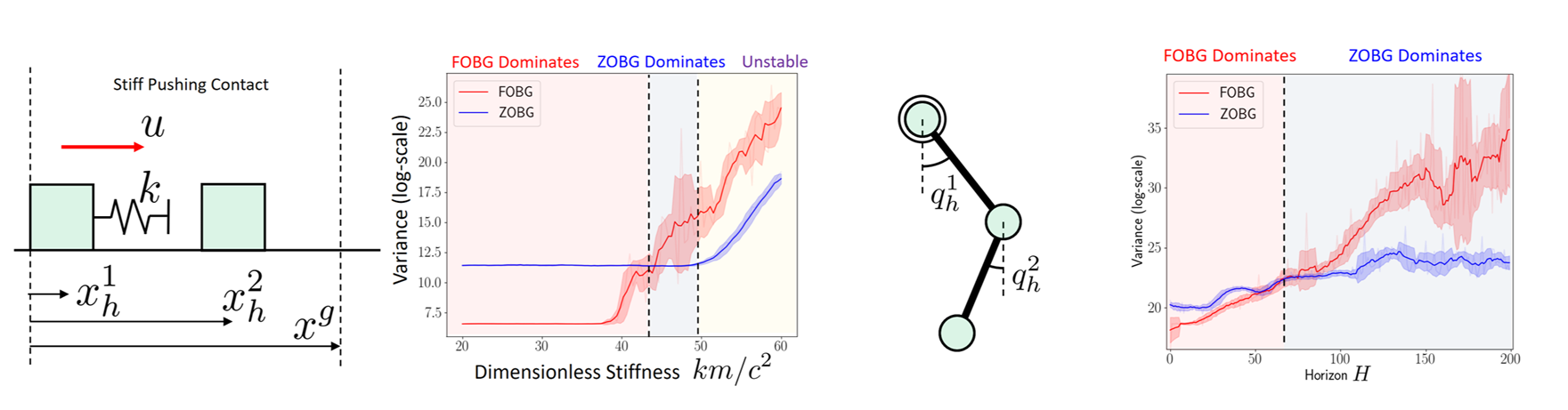

Case 1. Persistent Stiffness

Case 2. Chaos

Few Important Lessons

Why does RL do better on these problems?

Randomized Smoothing: Regularization effects of stochasticity

Zeroth-Order Gradients are surprisingly robust

- Chaining first-order gradients makes them explode / vanish.

- First-order gradients suffer from empirical bias under stiff dynamics.

Chapter 4. Bringing lessons from RL

Few Important Lessons

How do we do better?

Regularizing Effects of Stochasticity

Contact problems require some smoothing regularization

Stiff dynamics cause empirical bias / gradient explosion.

Are we writing down our models in the right way?

Chained first-order gradients explode / vanish.

Let's avoid single shooting.

Newton's Laws for Manipulation

Are we writing down the models in the right way?

How did we predict this?

Results vary, but we always know what's NOT going to happen.

No Contact

Contact

Newton's laws for manipulation seem much more like constrained optimization problems!

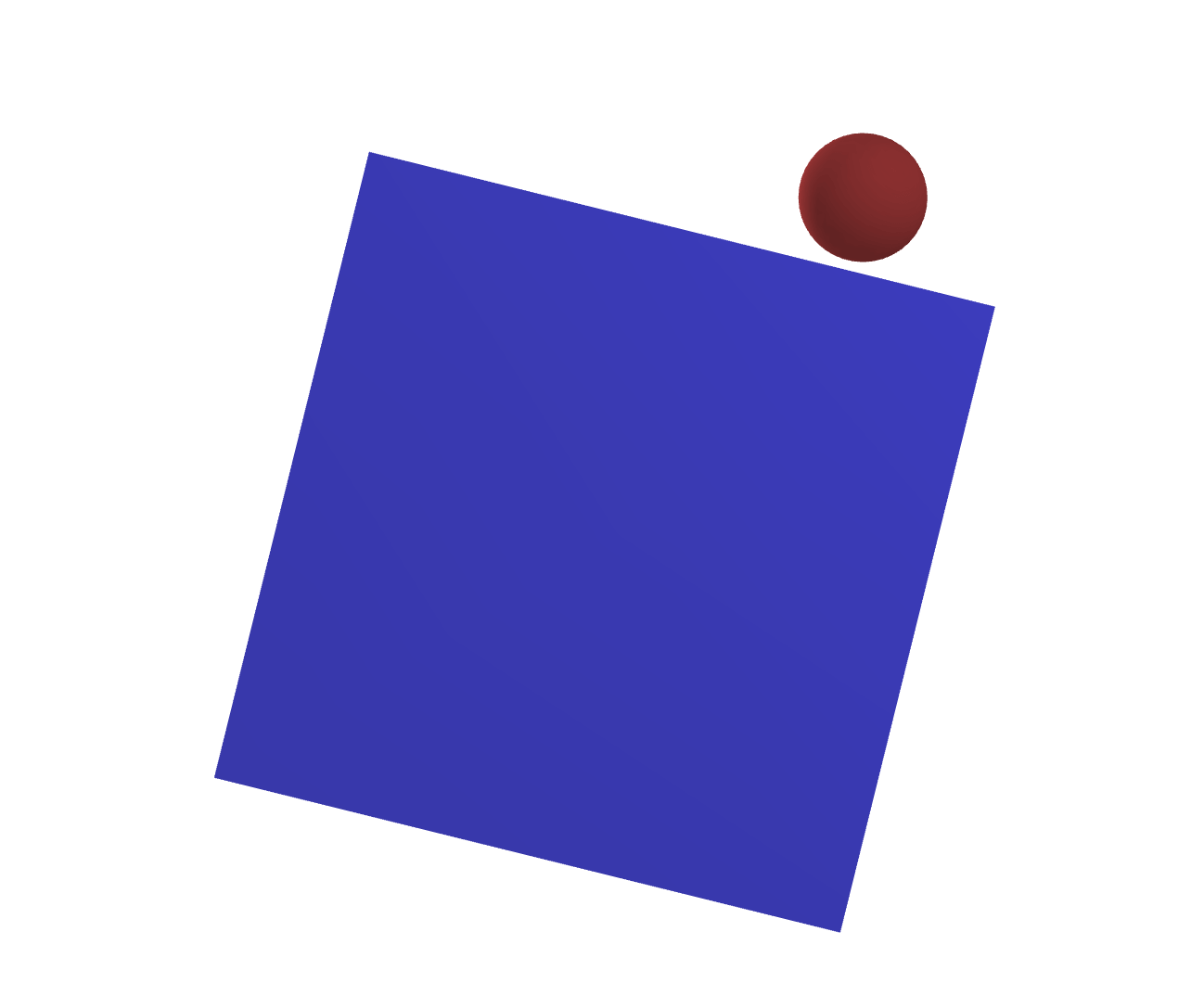

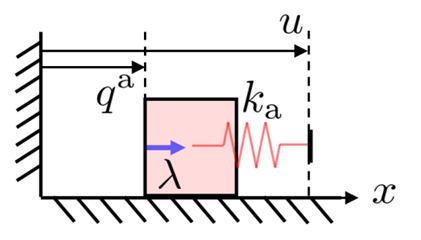

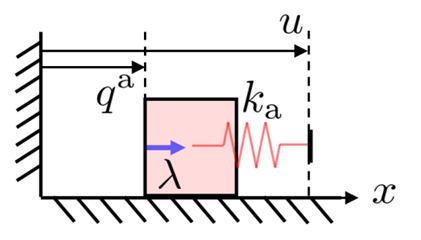

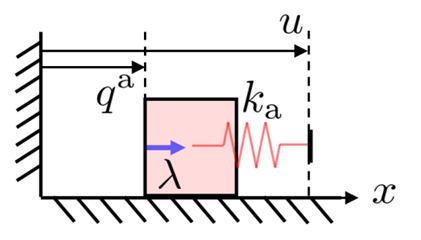

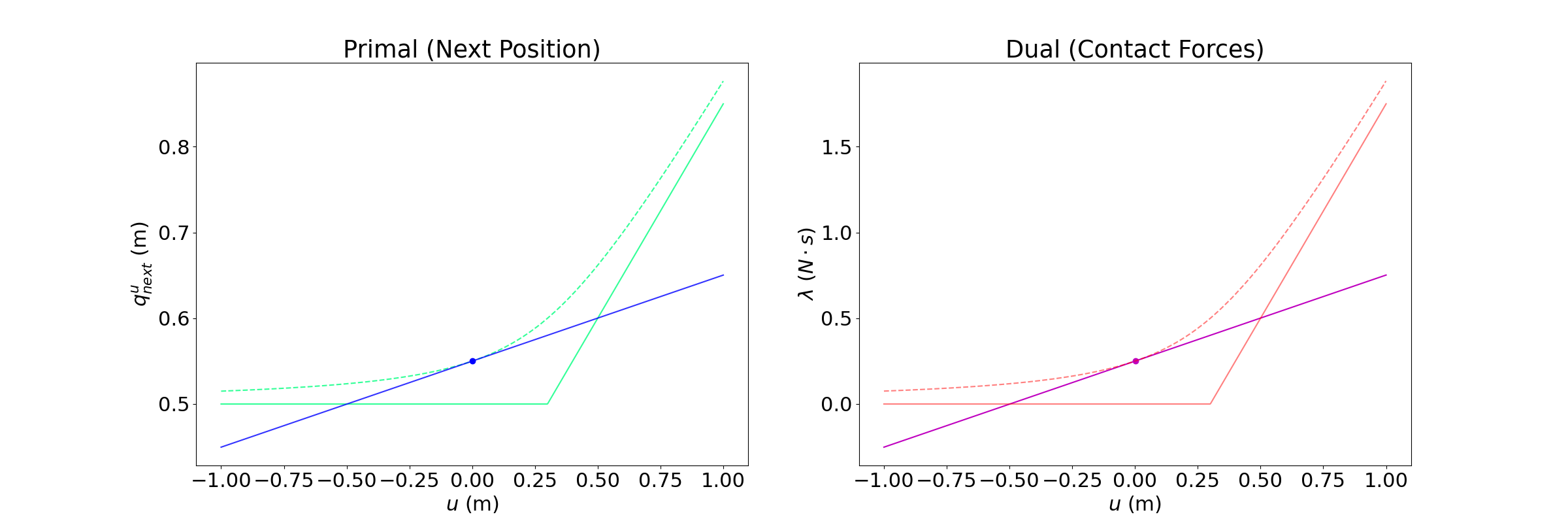

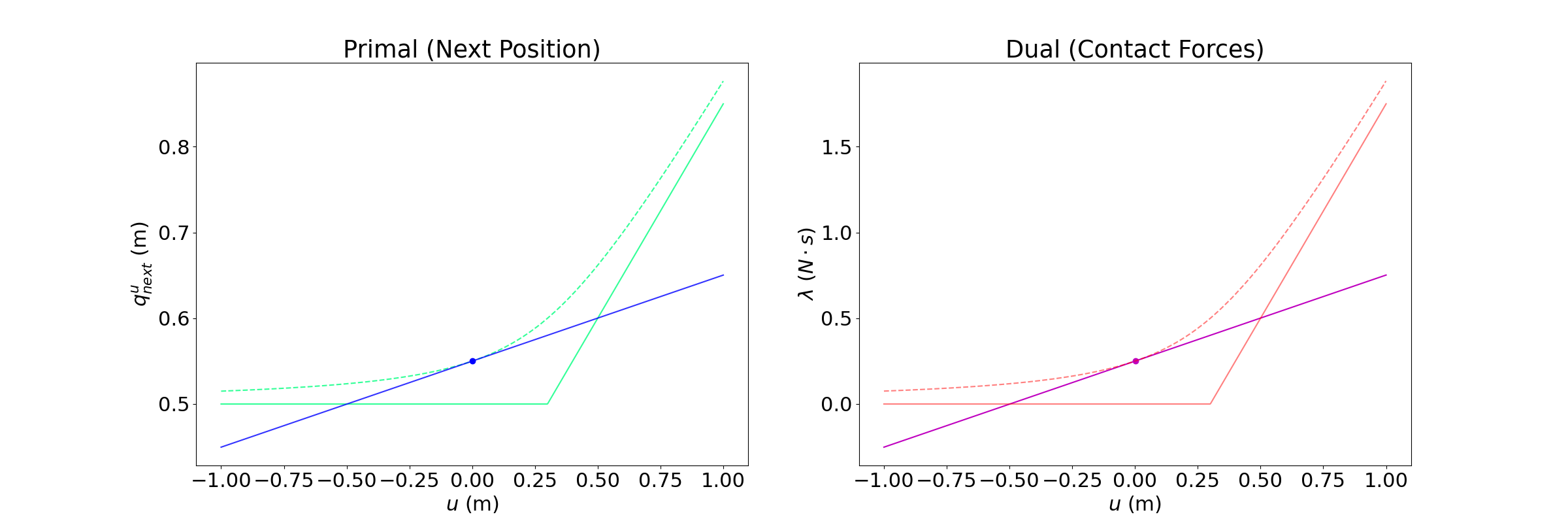

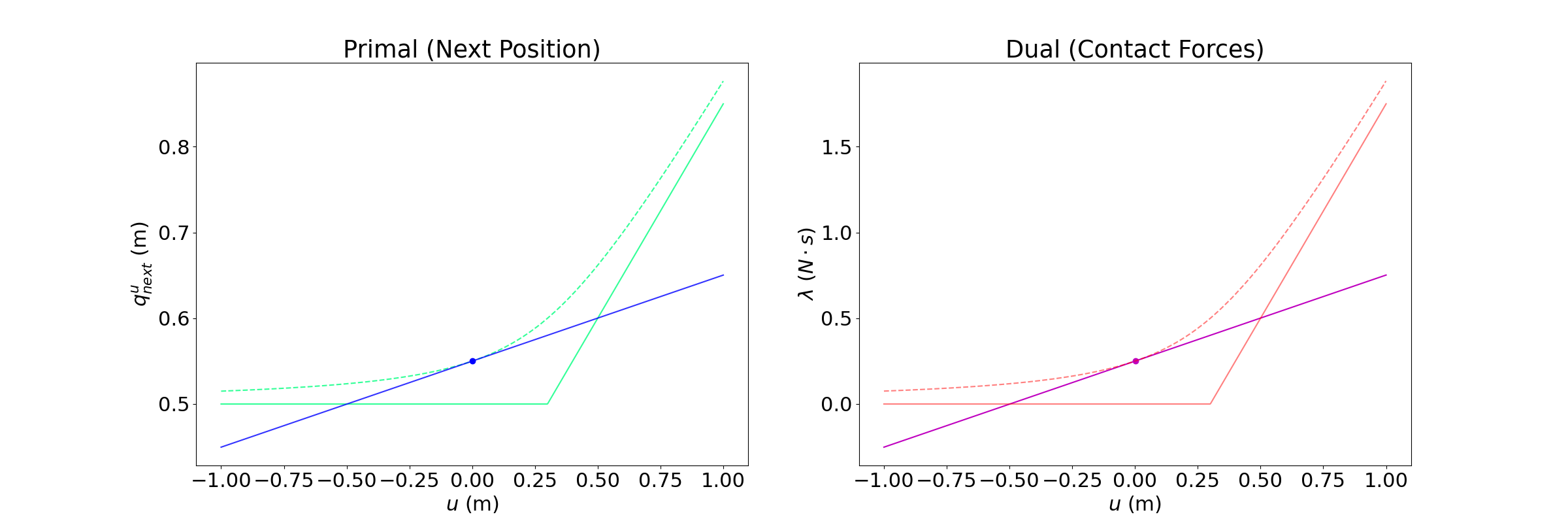

Example: Box vs. wall

Commanded next position

Actual next position

Cannot penetrate into the wall

Constraint-based Simulation

Interpretation with KKT Conditions

KKT Conditions

(Stationarity)

(Primal Feasibility)

(Dual Feasibility)

(Complementary Slackness)

Contact constraints are naturally encoded as optimality conditions.
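One way to make this concrete, as a sketch under simplifying assumptions (1D, unit impedance, wall at `q_wall`, box required to stay at or left of it, signed distance $\phi(q) = q_{\text{wall}} - q$): the actual next position solves $\min_q \tfrac{1}{2}(q - u)^2$ subject to $\phi(q) \ge 0$, whose solution is $q = \min(u, q_{\text{wall}})$ with multiplier $\lambda = \max(0, u - q_{\text{wall}})$. The multiplier plays the role of the contact force, and the code checks the four KKT conditions.

```python
def box_vs_wall(u, q_wall=1.0):
    """Actual next position of the box: the closest point to the command u that does not
    penetrate the wall, i.e. argmin_q 0.5 (q - u)^2 s.t. phi(q) = q_wall - q >= 0.
    Returns (q, lam), where lam is the Lagrange multiplier (the contact force)."""
    q = min(u, q_wall)
    lam = max(0.0, u - q_wall)
    return q, lam

def check_kkt(u, q_wall=1.0, tol=1e-12):
    q, lam = box_vs_wall(u, q_wall)
    phi = q_wall - q
    stationarity = abs((q - u) - lam * (-1.0)) < tol   # grad objective - lam * grad phi = 0
    primal = phi >= -tol                                # no penetration
    dual = lam >= 0.0                                   # contact can only push
    complementarity = abs(lam * phi) < tol              # force only when touching
    return stationarity and primal and dual and complementarity

for u in [0.5, 1.0, 1.7]:
    print(u, box_vs_wall(u), check_kkt(u))
```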

Newton's Laws for Manipulation

Penalty Method

- Prone to stiff dynamics

- Oscillations can cause inefficiencies when chaining gradients

Optimization-based Dynamics

- Less stiff dynamics

- Long-horizon gradients by jumping directly to equilibrium

How you simulate matters for gradients!

Newton's Laws for Manipulation

Log-Barrier Relaxation

Optimization-based Dynamics

Randomized smoothing

Barrier smoothing

Optimization-based dynamics can be regularized easily (without Monte Carlo)!
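A sketch of the log-barrier relaxation on the same assumed 1D box-vs-wall setup: the constraint is replaced by a barrier term $-\kappa \log(q_{\text{wall}} - q)$, whose stationarity condition is a quadratic with a closed-form root. The relaxed force $\kappa / (q_{\text{wall}} - q)$ is nonzero even out of contact, and $\partial q / \partial u$ is smooth in $(0, 1)$; as the assumed barrier weight `kappa` shrinks, the sensitivity approaches the exact 0/1 switch.

```python
import math

def barrier_box_vs_wall(u, q_wall=1.0, kappa=1e-2):
    """Log-barrier relaxation: argmin_q 0.5 (q - u)^2 - kappa * log(q_wall - q).
    Stationarity q - u + kappa / (q_wall - q) = 0 is a quadratic in d = q_wall - q."""
    a = q_wall - u
    d = 0.5 * (a + math.sqrt(a * a + 4.0 * kappa))   # positive root, so q < q_wall strictly
    q = q_wall - d
    force = kappa / d                                  # relaxed contact force, nonzero at a distance
    dq_du = 0.5 * (1.0 + a / math.sqrt(a * a + 4.0 * kappa))   # smooth sensitivity in (0, 1)
    return q, force, dq_du

for kappa in [1e-1, 1e-2, 1e-4]:
    print(kappa, [round(barrier_box_vs_wall(u, kappa=kappa)[2], 3) for u in [0.5, 1.0, 1.5]])
```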

Differentiating with Sensitivity Analysis

How do we obtain the gradients from an optimization problem?

Differentiate through the optimality conditions!

Stationarity Condition

Implicit Function Theorem

Differentiate by u
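For the barrier-relaxed box-vs-wall example (an assumed 1D setup with $g(q, u) = q - u + \kappa / (q_{\text{wall}} - q)$ as the stationarity condition), the implicit function theorem gives $\frac{\partial q}{\partial u} = -\left(\frac{\partial g}{\partial q}\right)^{-1} \frac{\partial g}{\partial u}$. The sketch below solves $g = 0$ numerically (bisection is an assumed choice) and checks the resulting sensitivity against finite differences.

```python
def solve_stationarity(u, q_wall=1.0, kappa=1e-2, iters=200):
    """Find q with g(q, u) = q - u + kappa / (q_wall - q) = 0 by bisection.
    g is increasing in q on (-inf, q_wall), so the root is unique (assumes kappa < 1)."""
    lo, hi = min(u, q_wall) - 1.0, q_wall - 1e-12
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid - u + kappa / (q_wall - mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

def sensitivity(u, q_wall=1.0, kappa=1e-2):
    """Implicit function theorem at the solution: dq/du = -(dg/dq)^{-1} dg/du."""
    q = solve_stationarity(u, q_wall, kappa)
    dg_dq = 1.0 + kappa / (q_wall - q) ** 2
    dg_du = -1.0
    return -dg_du / dg_dq

u, h = 0.95, 1e-6
finite_diff = (solve_stationarity(u + h) - solve_stationarity(u - h)) / (2 * h)
print(sensitivity(u), finite_diff)
```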

[HLKM 2023]

[MBMSHNCRVM 2020]

[PSYT 2023]


What have we learned from RL?

| Lessons from RL | Model-based Remedy |
| --- | --- |
| Contact requires smoothing regularization. | Log-barrier relaxation provides smoothing regularization. |
| First-order gradients suffer from empirical bias under stiff dynamics. | Log-barrier smoothing requires no Monte Carlo estimation, and its gradient is accurate. |
| Chaining first-order gradients makes them explode / vanish. | Optimization-based dynamics provide longer-horizon gradients that lead to less explosion. |

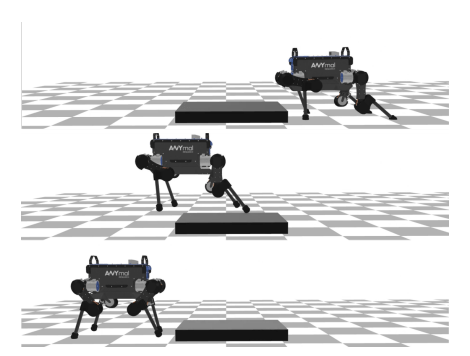

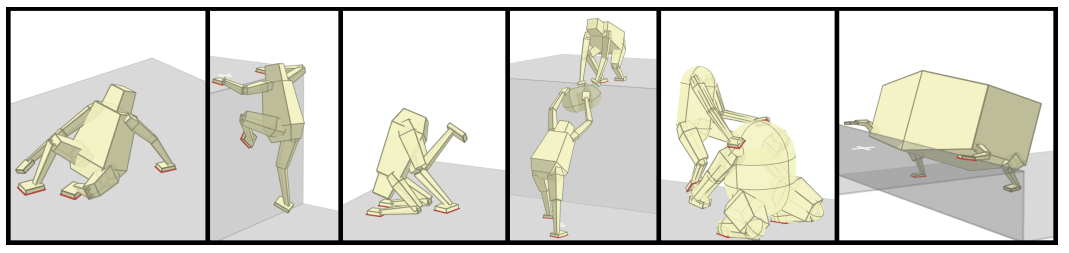

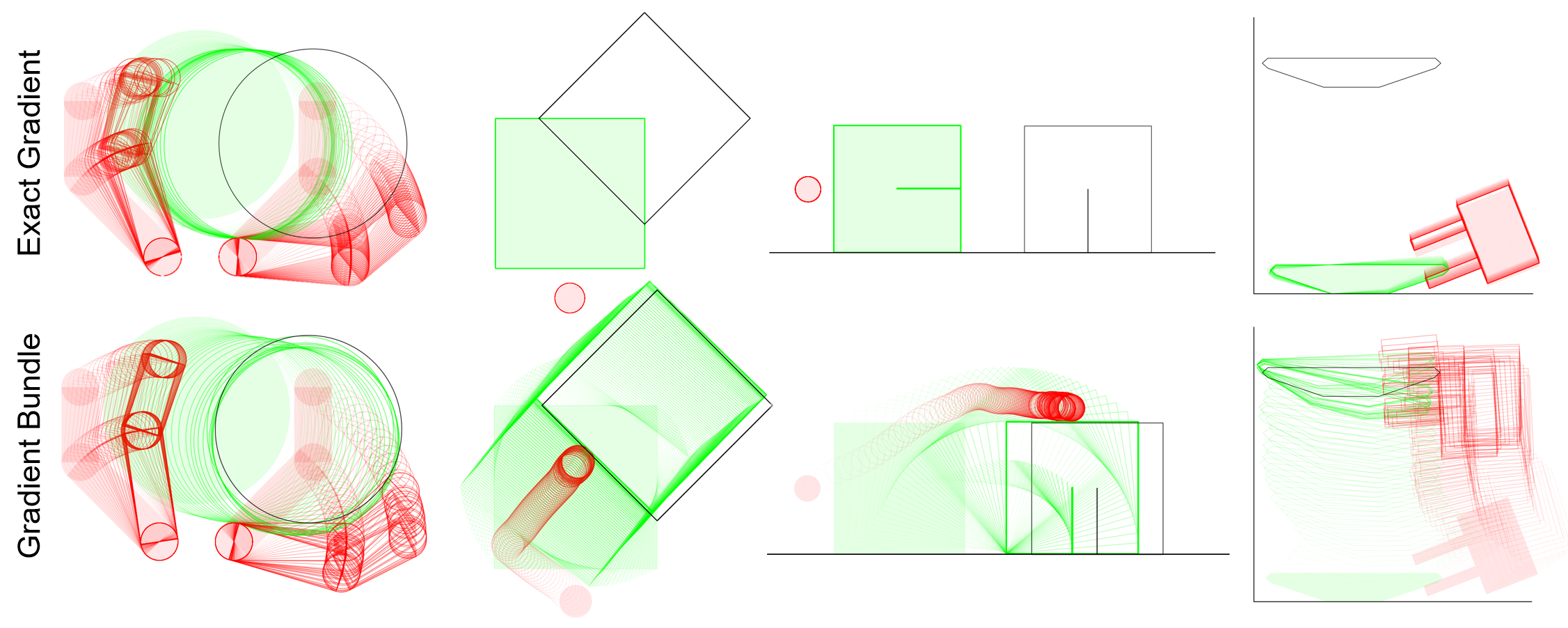

Gradient-based Optimization with Dynamics Smoothing

Single Horizon

Multi-Horizon

Scales extremely well in highly contact-rich settings.

Efficient solutions in ~10s.

Efficient Global Planning for

Highly Contact-Rich Systems

Fast Solution Time

Beyond Local Solutions

Contact Scalability

[PCT 2014]

[MTP 2012]

[SPT 2022]

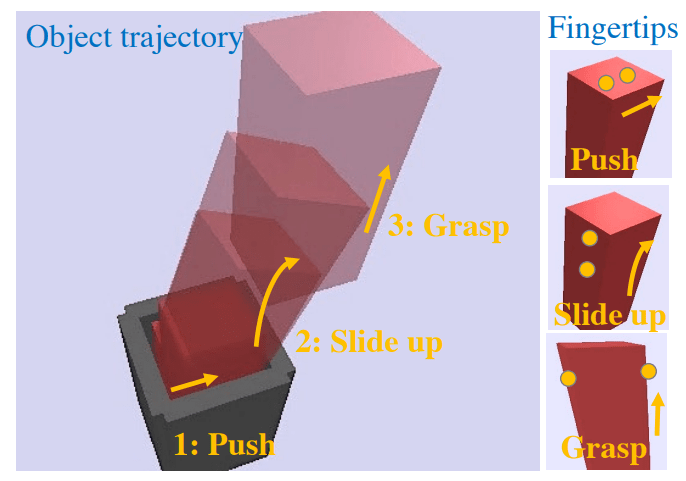

Fundamental Limitations with Local Search

How do we push in this direction?

How do we rotate further in the presence of joint limits?

Highly non-local movements are required to solve these problems.

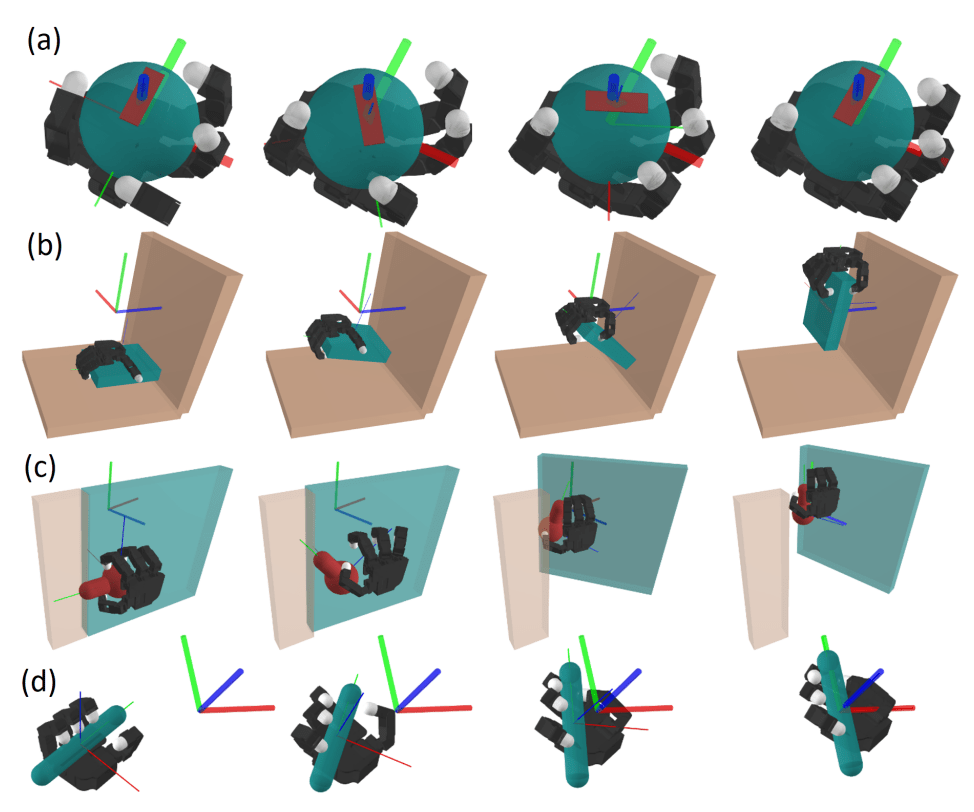

Chapter 5. Efficient Global Search

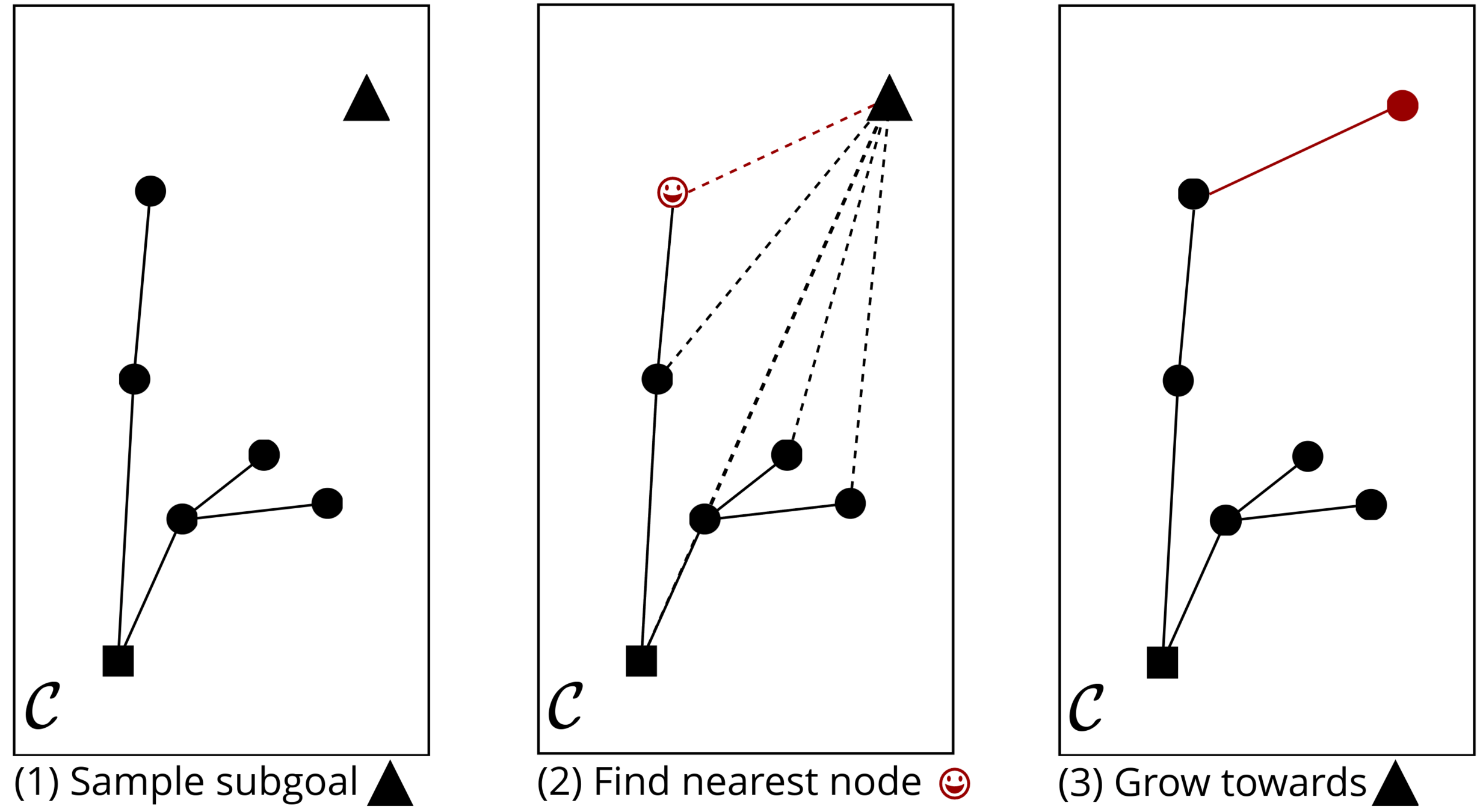

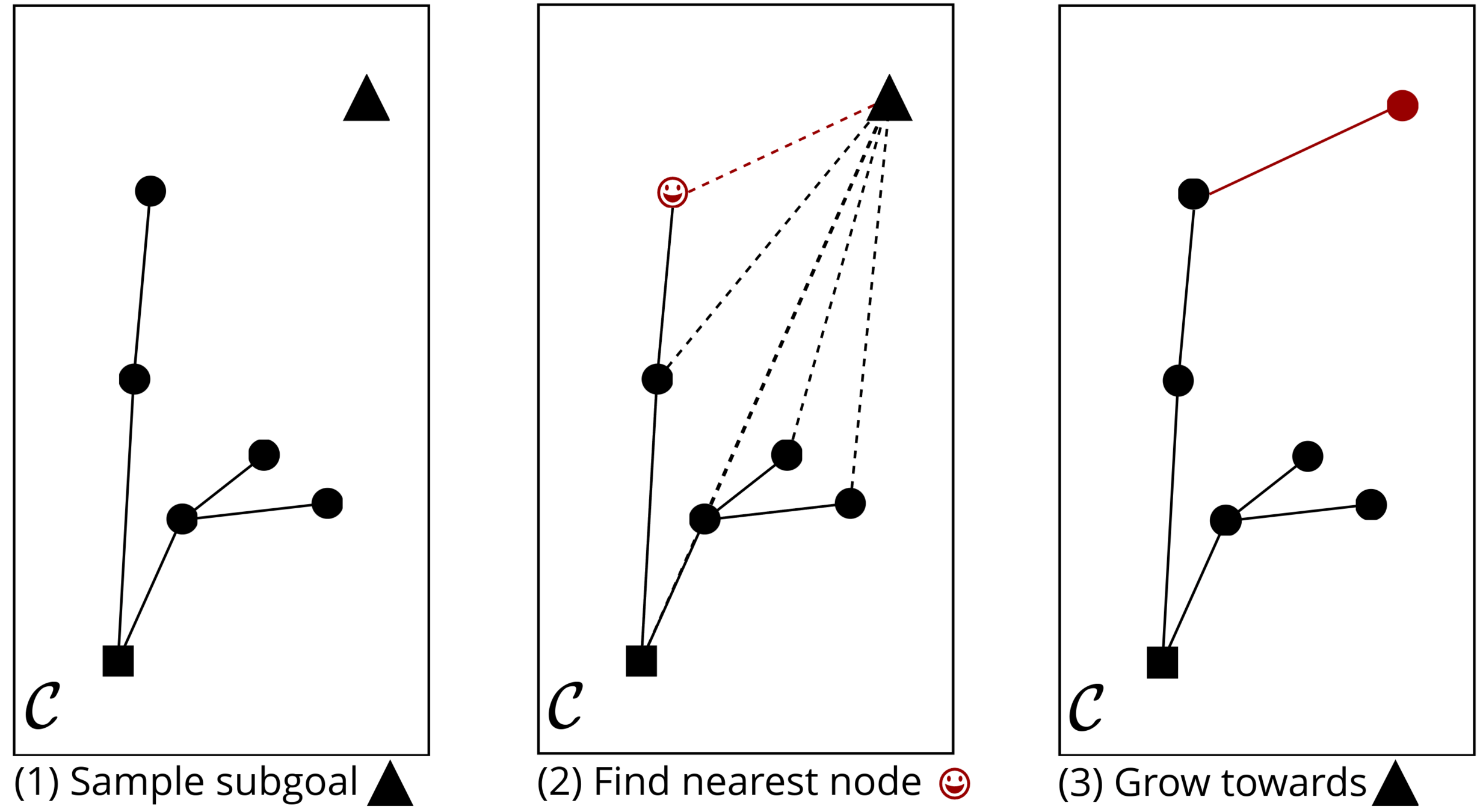

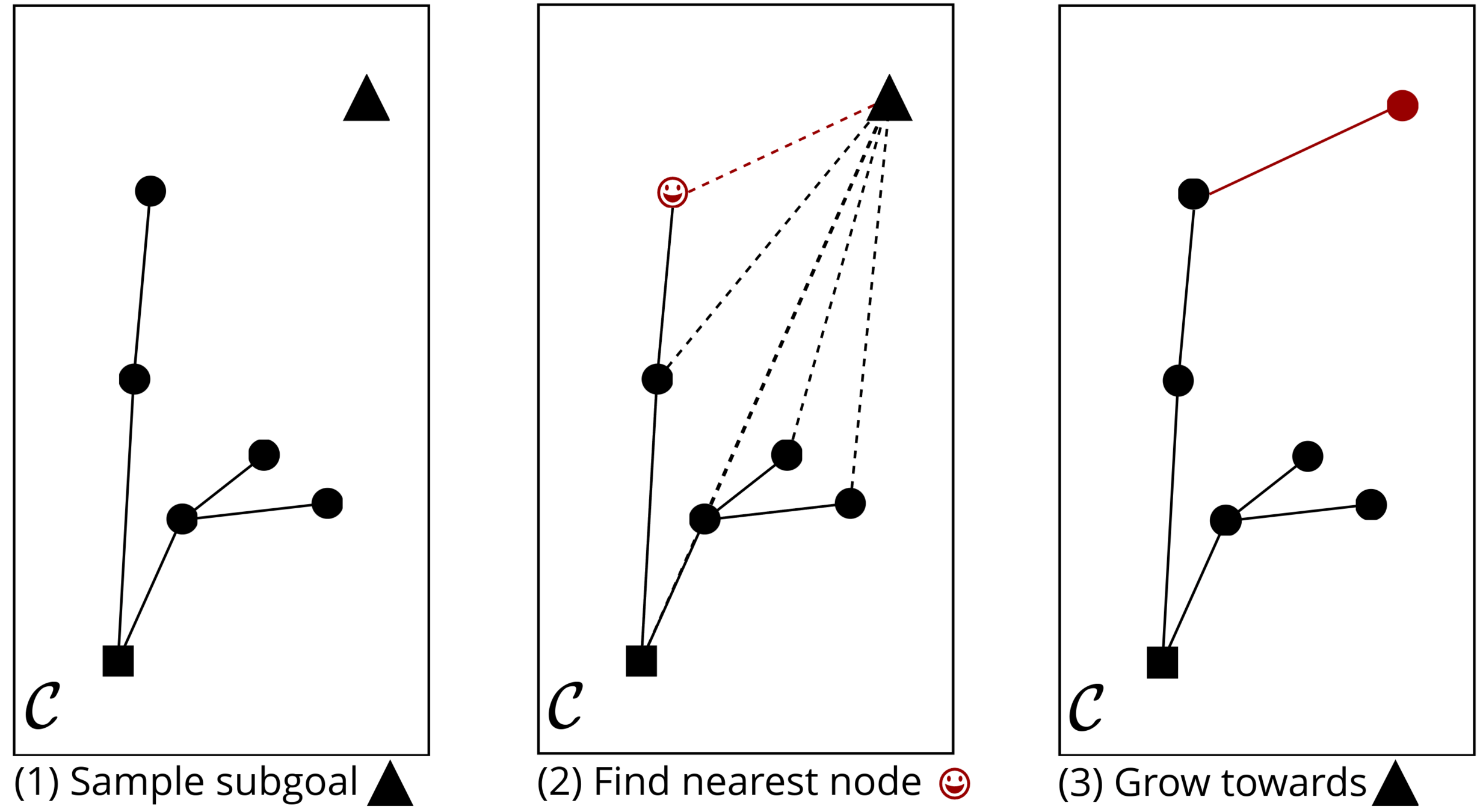

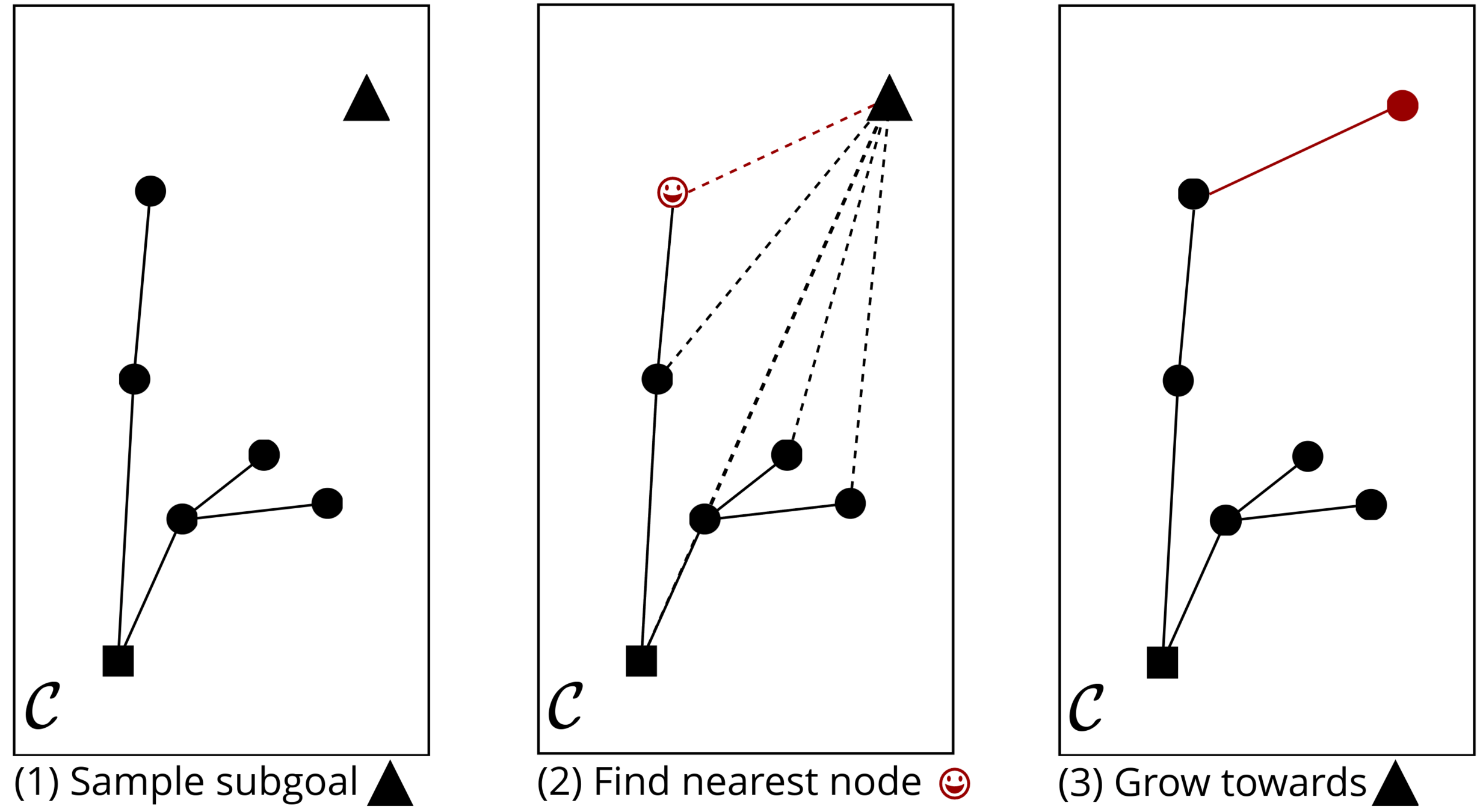

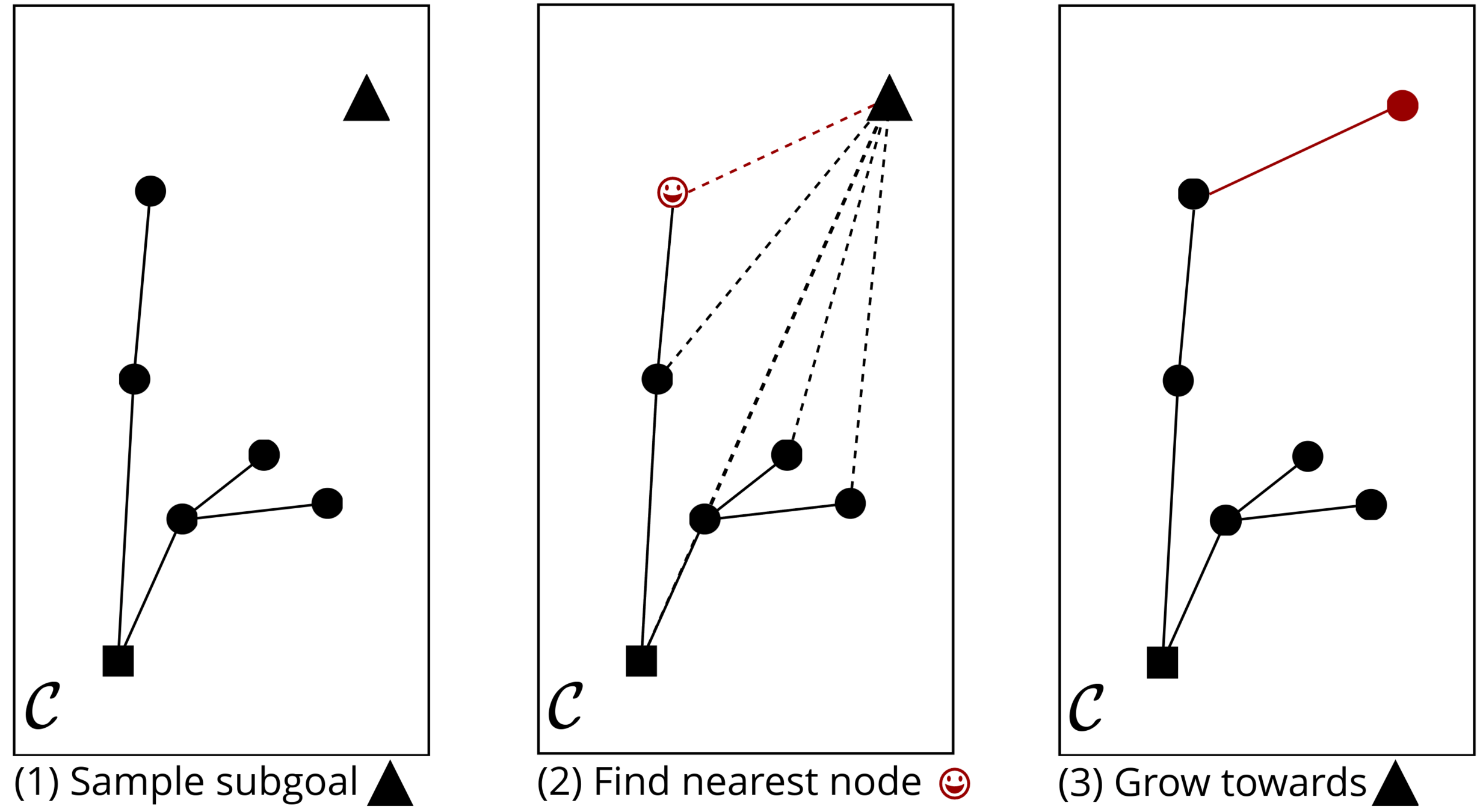

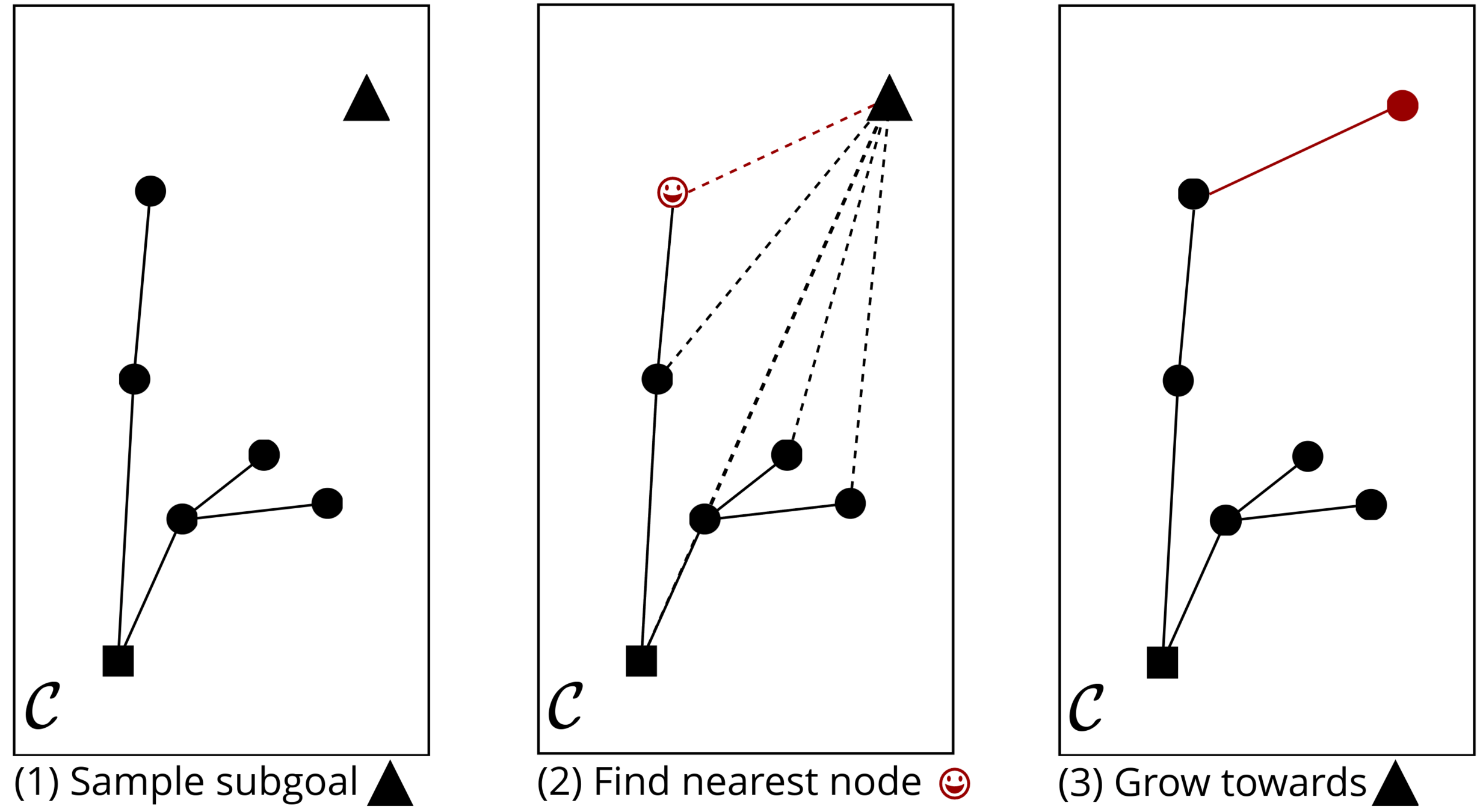

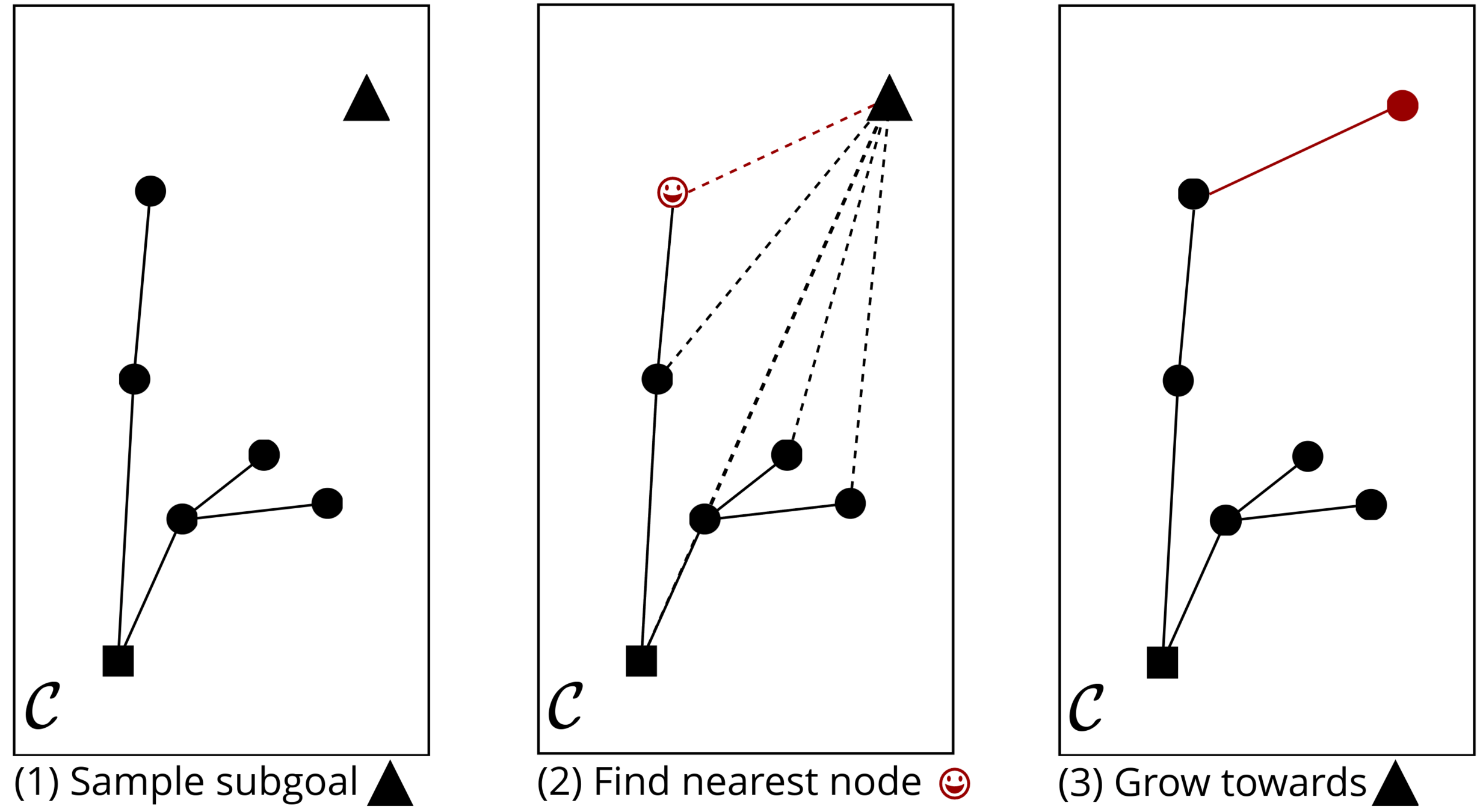

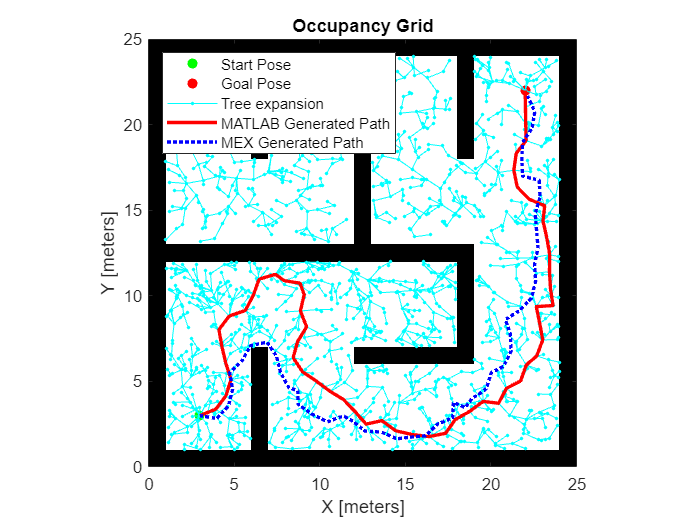

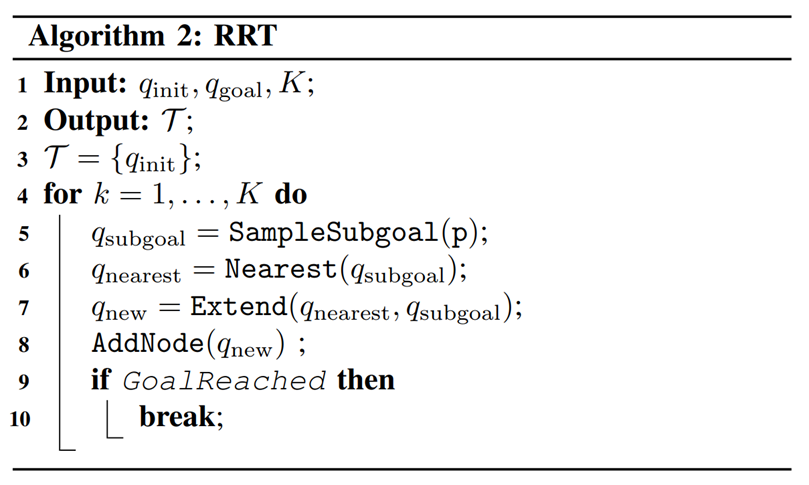

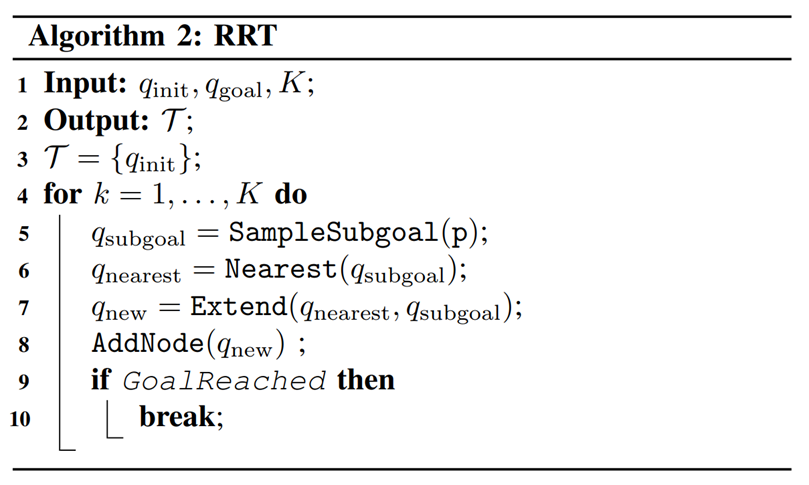

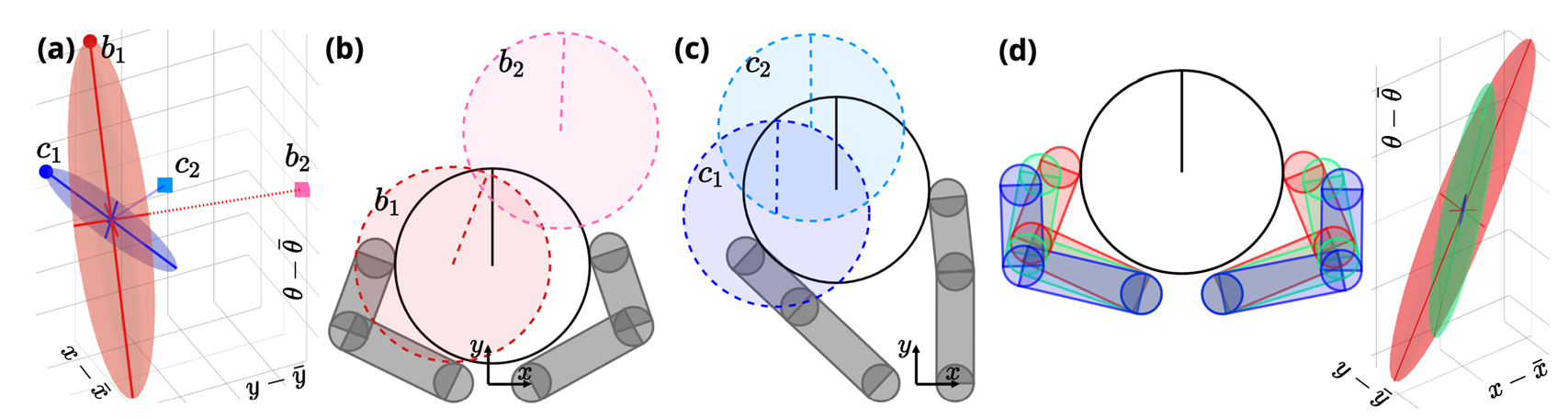

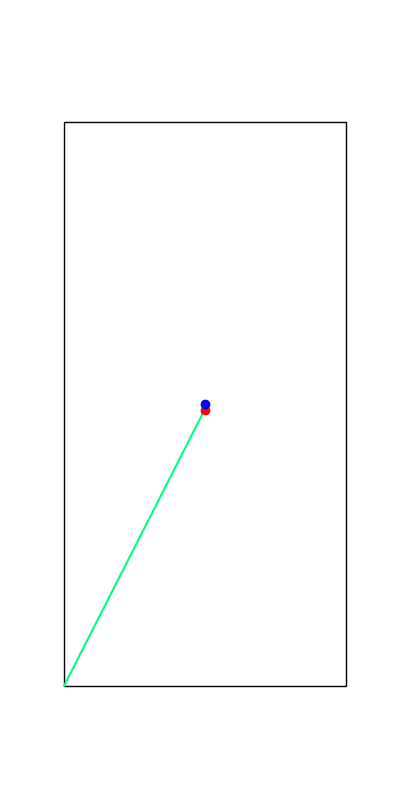

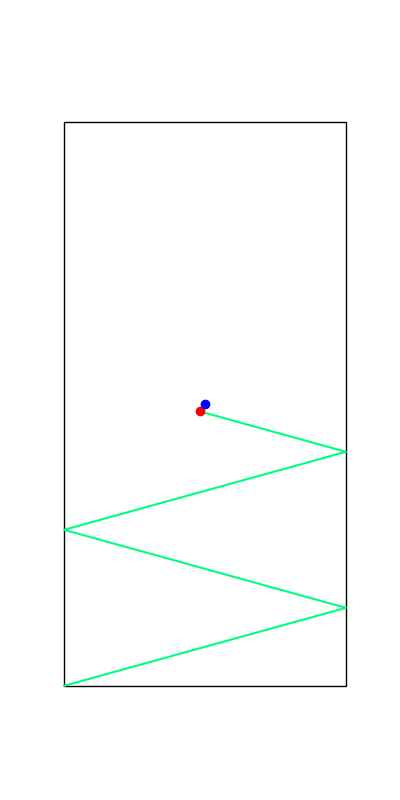

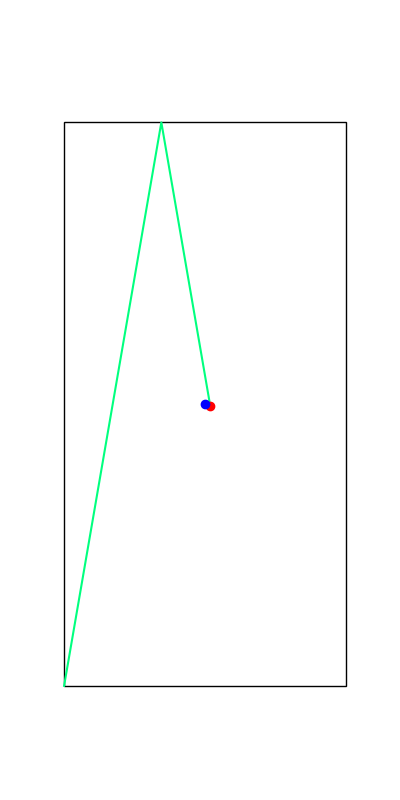

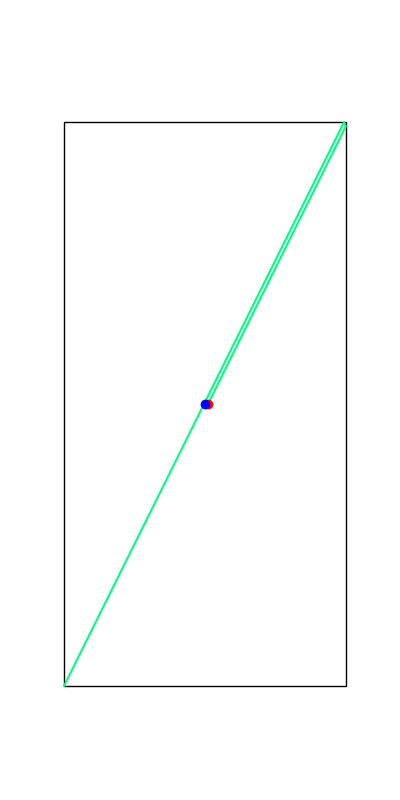

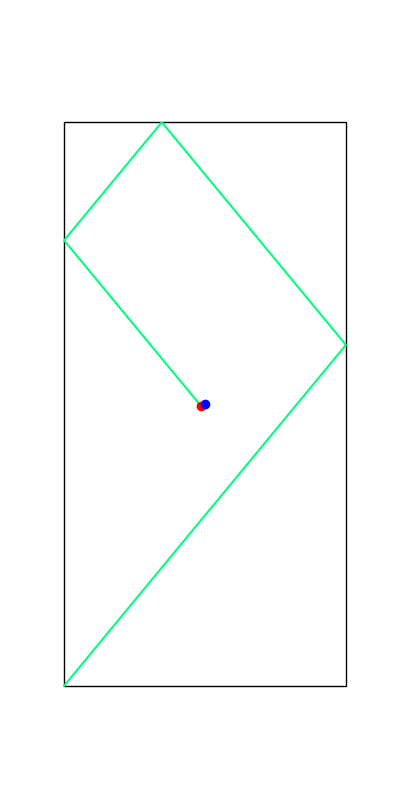

Rapidly Exploring Random Tree (RRT) Algorithm

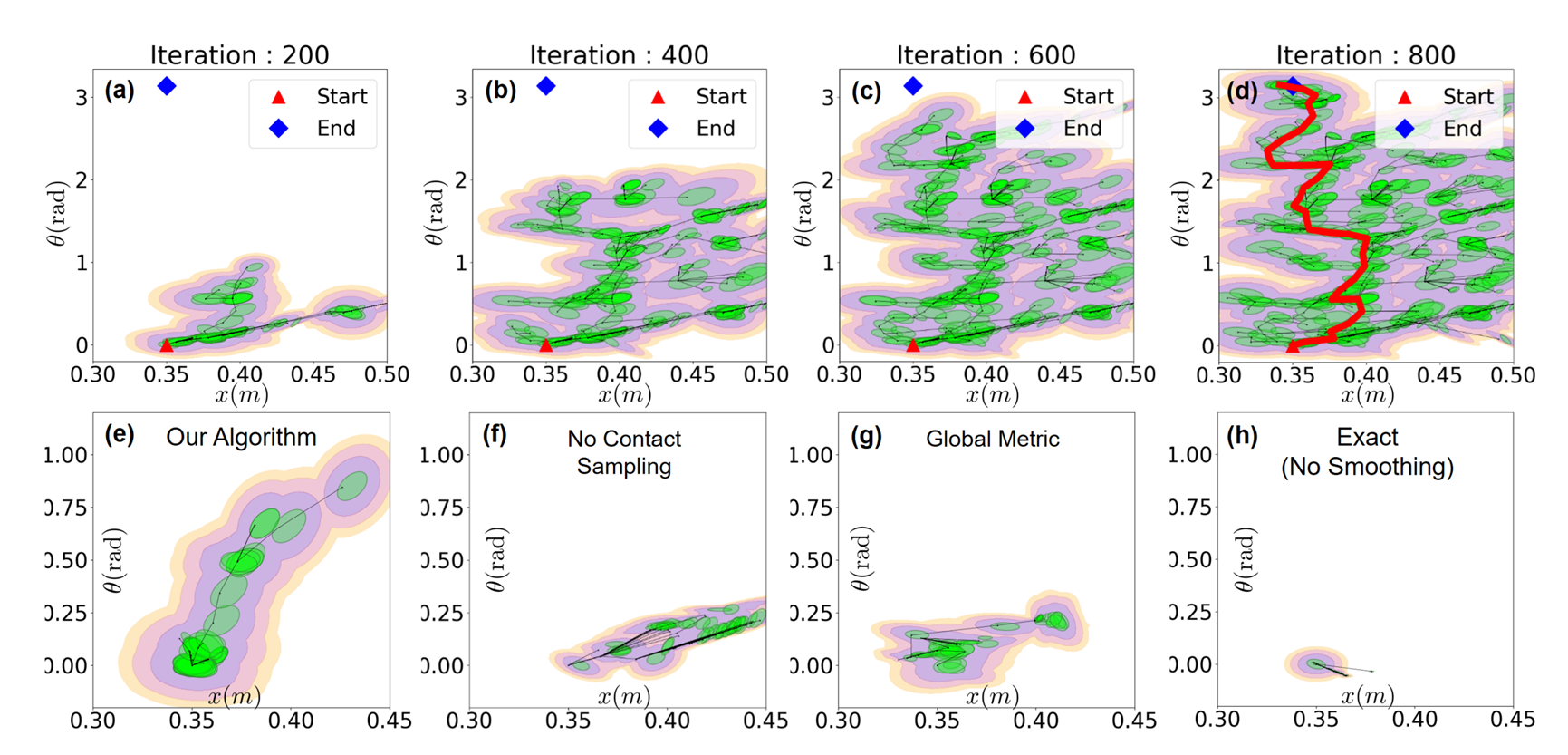

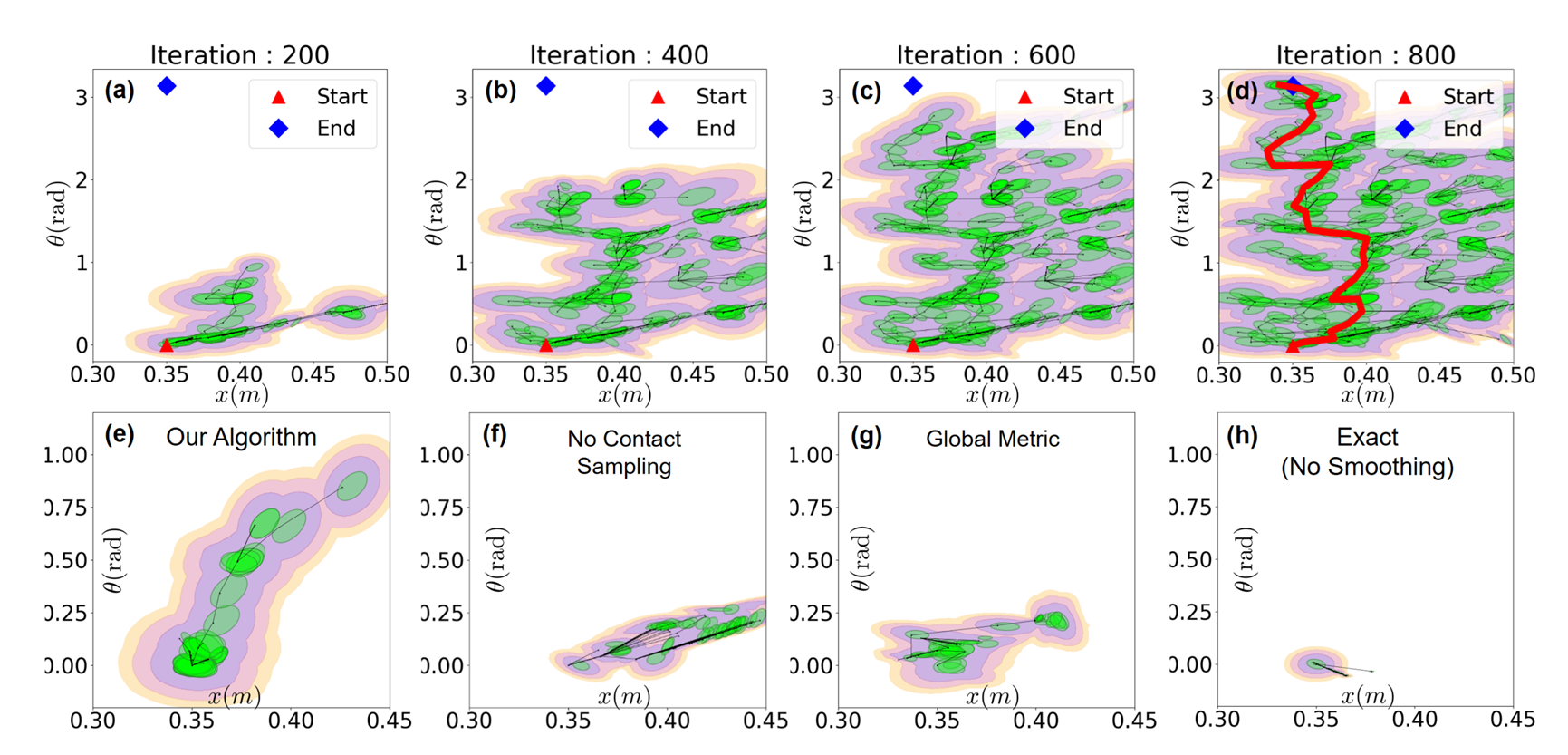

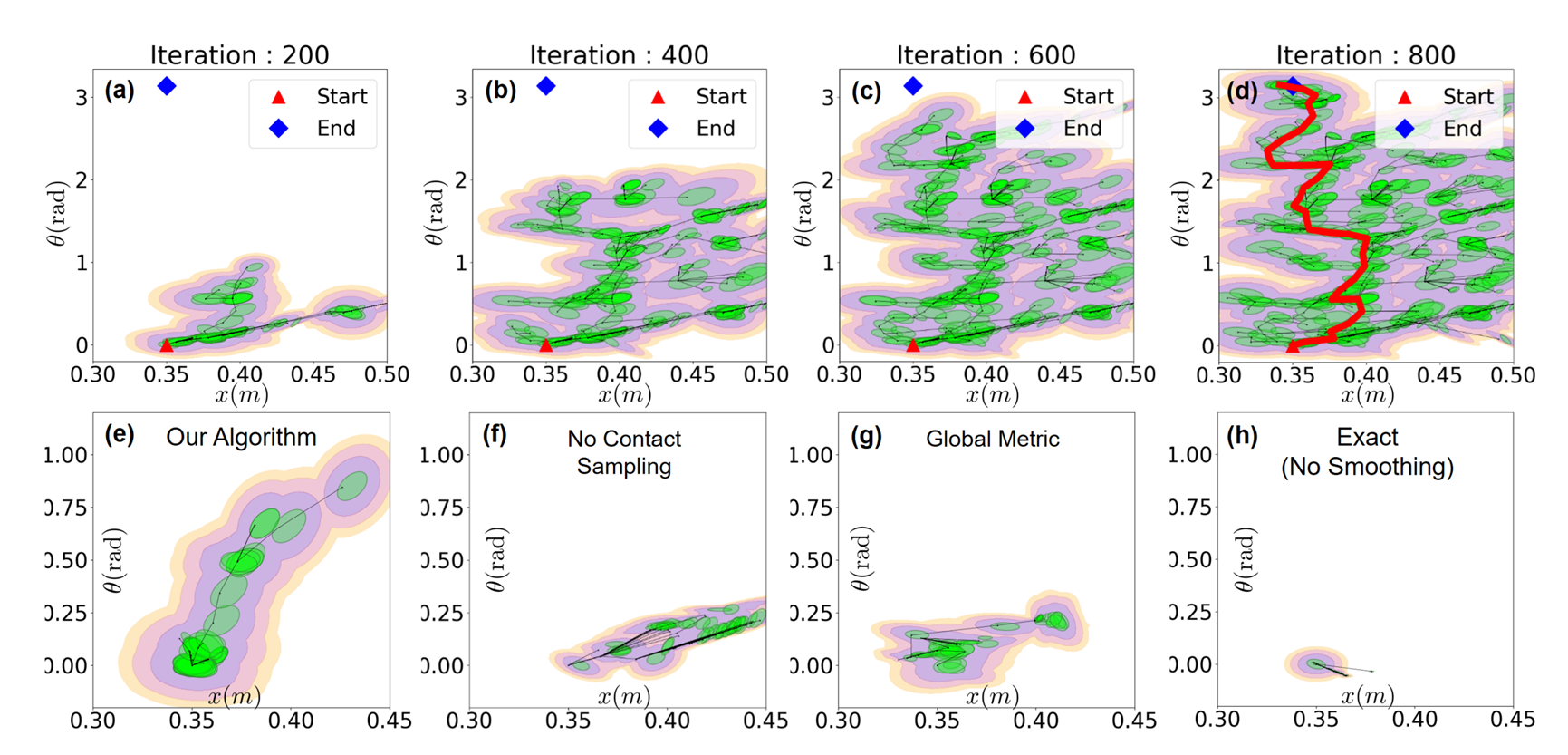

Figure adapted from Tao Pang's Thesis Defense, MIT, 2023

(1) Sample subgoal

(2) Find nearest node

(3) Grow towards the subgoal

Steven M. LaValle, "Planning Algorithms", Cambridge University Press, 2006.
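A minimal sketch of the three RRT steps for a 2D point in the unit square, with a hypothetical disk obstacle. The step size, goal bias (sampling the goal itself with small probability, a common practical addition), and goal tolerance are assumptions; for brevity only new nodes are collision-checked, not edges.

```python
import numpy as np

def rrt(start, goal, is_free, step=0.05, goal_tol=0.05, goal_bias=0.1, max_iters=5000, seed=0):
    """Basic Euclidean RRT in the unit square; returns a start-to-goal list of points or None."""
    rng = np.random.default_rng(seed)
    nodes, parents = [np.asarray(start, dtype=float)], [-1]
    goal = np.asarray(goal, dtype=float)
    for _ in range(max_iters):
        # (1) Sample a subgoal (occasionally the goal itself, to bias growth).
        subgoal = goal if rng.random() < goal_bias else rng.random(2)
        # (2) Find the nearest node under the Euclidean metric.
        i_near = int(np.argmin(np.linalg.norm(np.array(nodes) - subgoal, axis=1)))
        # (3) Grow towards the subgoal by at most `step`.
        direction = subgoal - nodes[i_near]
        dist = np.linalg.norm(direction)
        if dist < 1e-12:
            continue
        new = nodes[i_near] + direction * min(1.0, step / dist)
        if not is_free(new):
            continue
        nodes.append(new)
        parents.append(i_near)
        if np.linalg.norm(new - goal) < goal_tol:
            path, i = [], len(nodes) - 1
            while i != -1:          # walk back up the tree to the root
                path.append(nodes[i])
                i = parents[i]
            return path[::-1]
    return None

# Hypothetical environment: a disk obstacle of radius 0.2 at the center of the square.
def is_free(p):
    return np.linalg.norm(p - np.array([0.5, 0.5])) > 0.2

path = rrt(start=[0.1, 0.1], goal=[0.9, 0.9], is_free=is_free)
print("no path found" if path is None else f"path with {len(path)} nodes")
```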

RRT for Dynamics

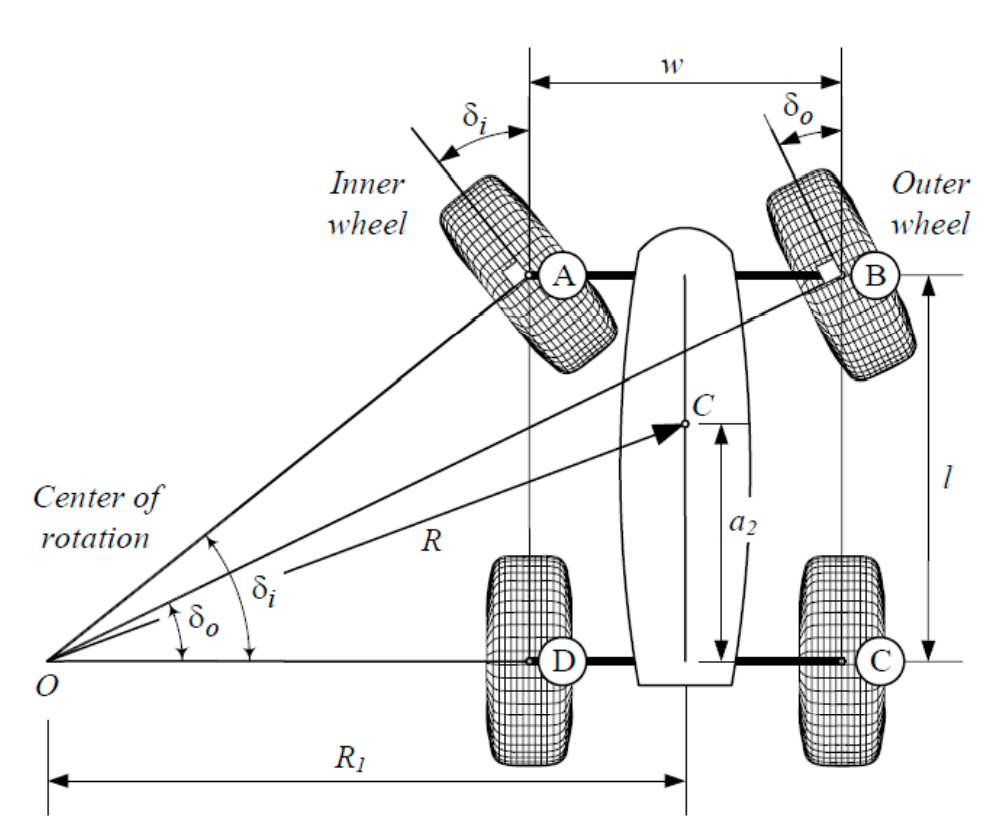

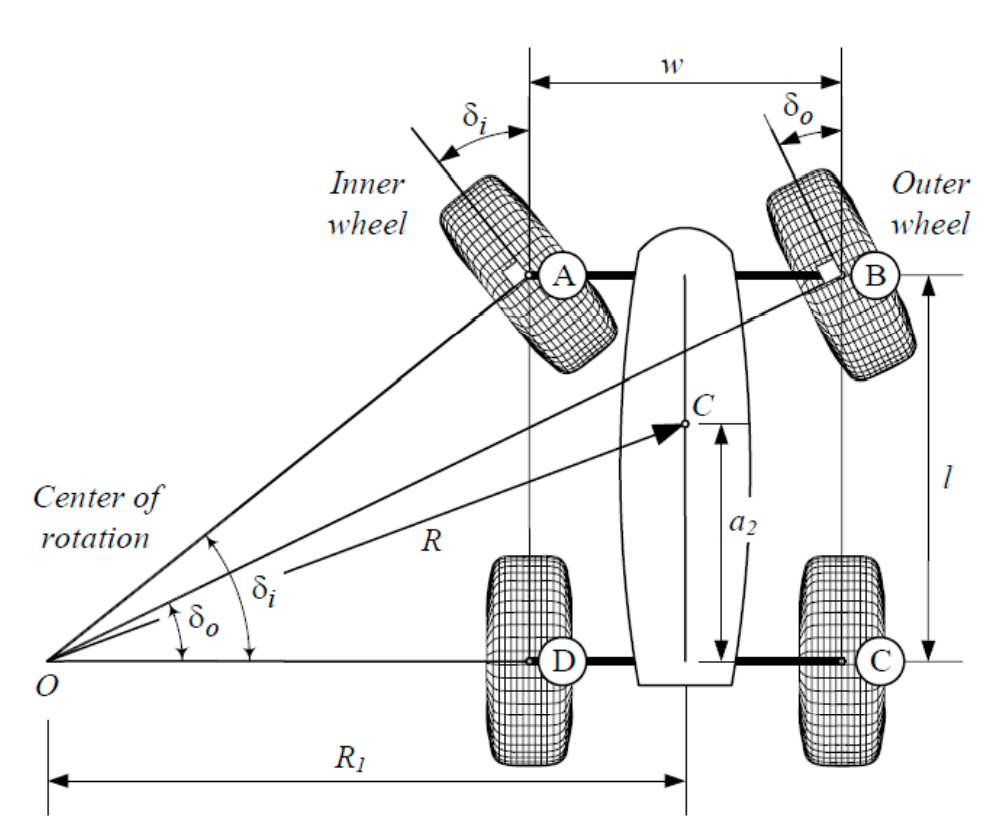

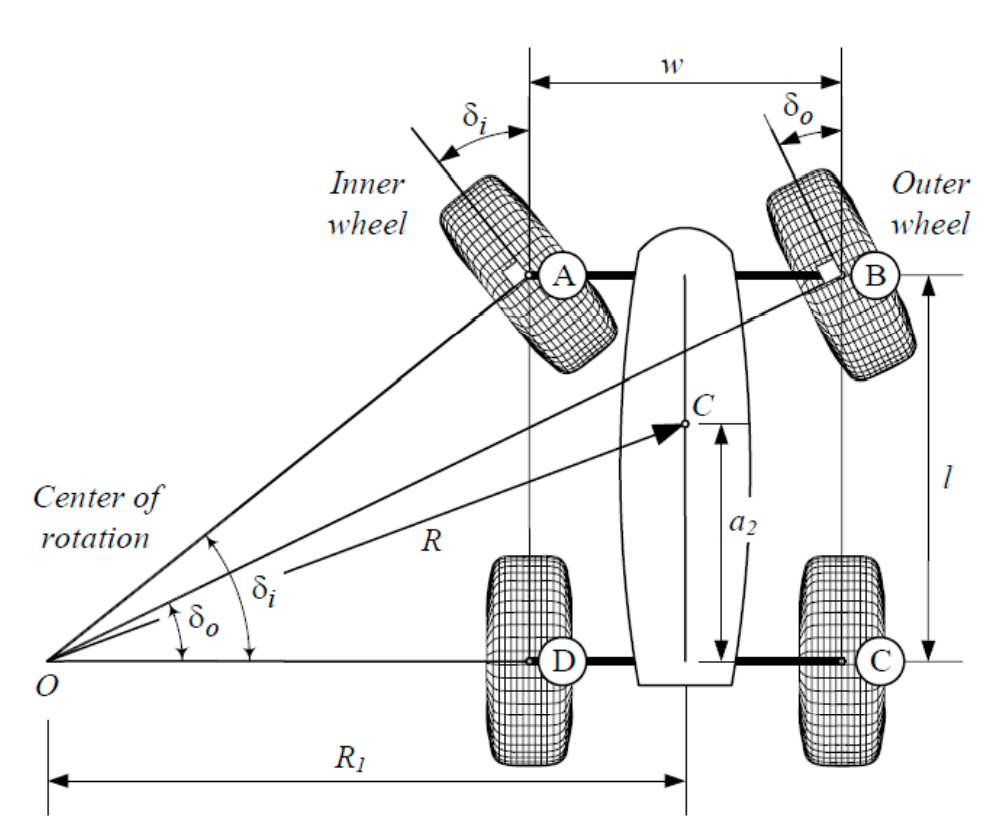

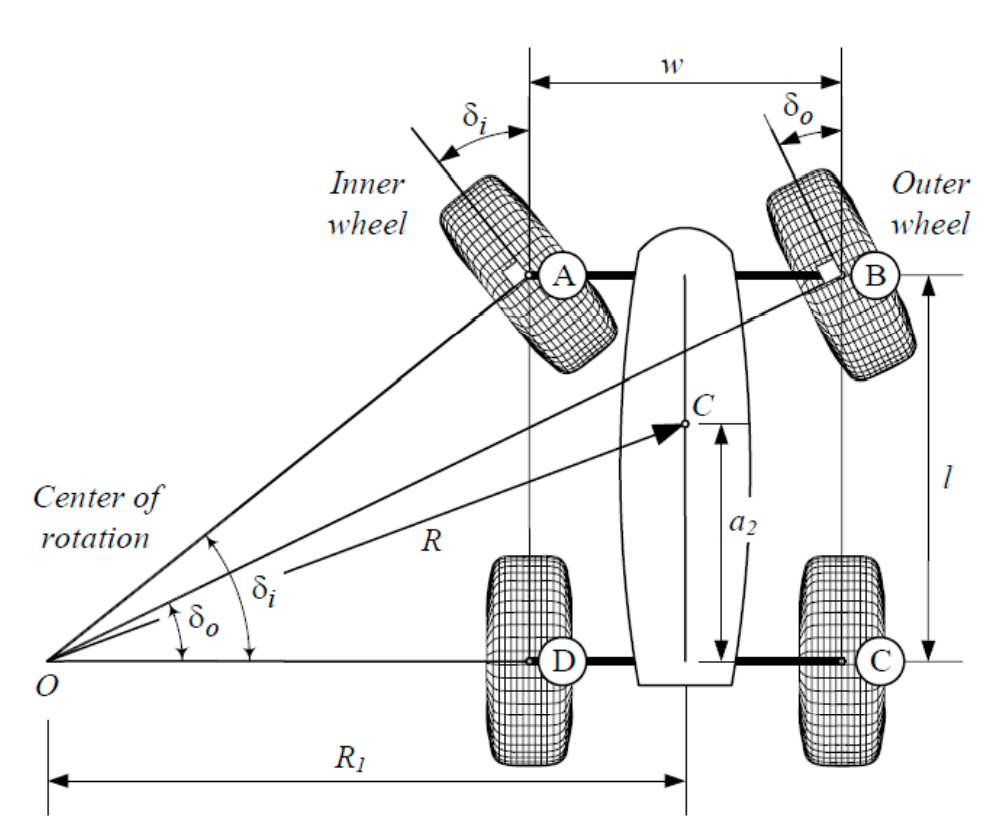

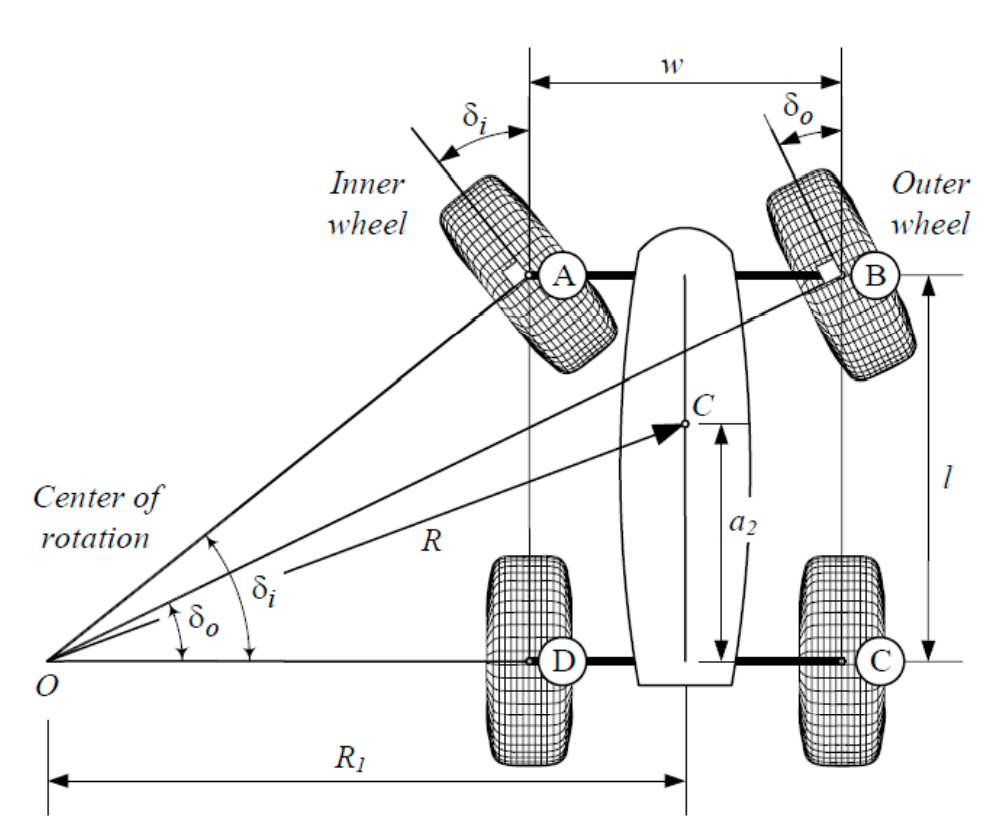

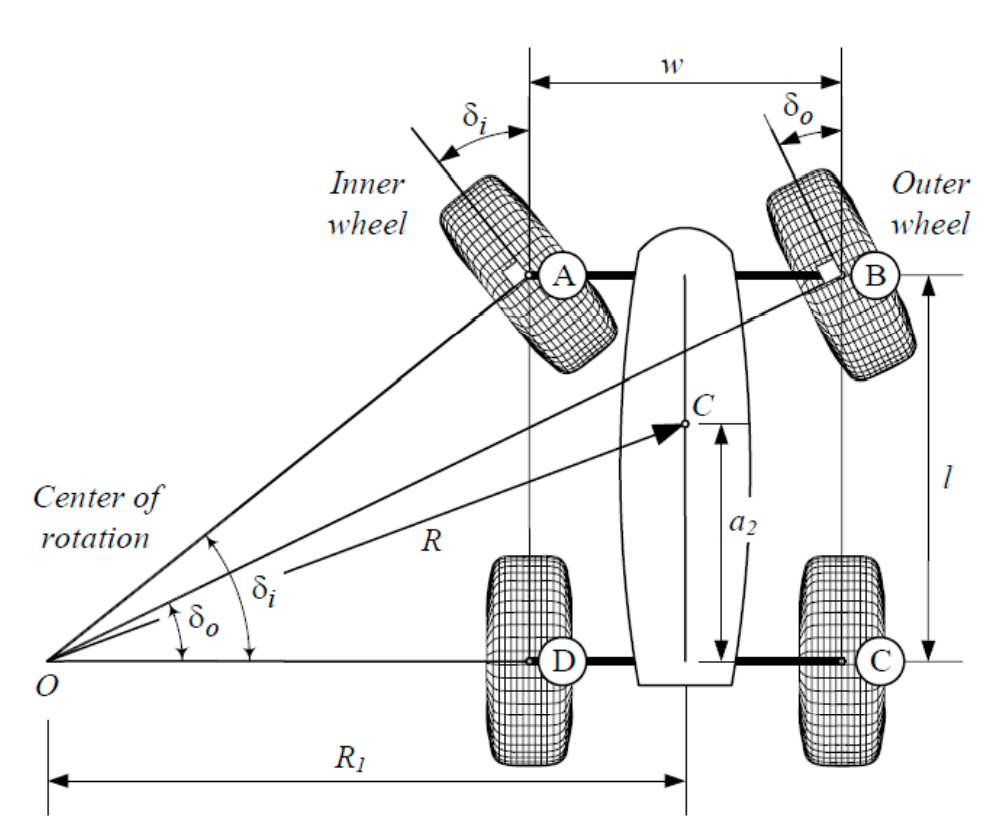

Works well for Euclidean spaces. Why is it hard to use for dynamical systems?


What is "Nearest" in a dynamical system?

Closest in Euclidean space might not be closest for dynamics.

Rajamani et al., "Vehicle Dynamics"

[SDW 2018]


How do we "grow towards" a chosen subgoal?

Need to find actions (inputs) that would drive the system to the chosen subgoal.


Note that these two decisions (which node is nearest, and how to grow towards the subgoal) are coupled.

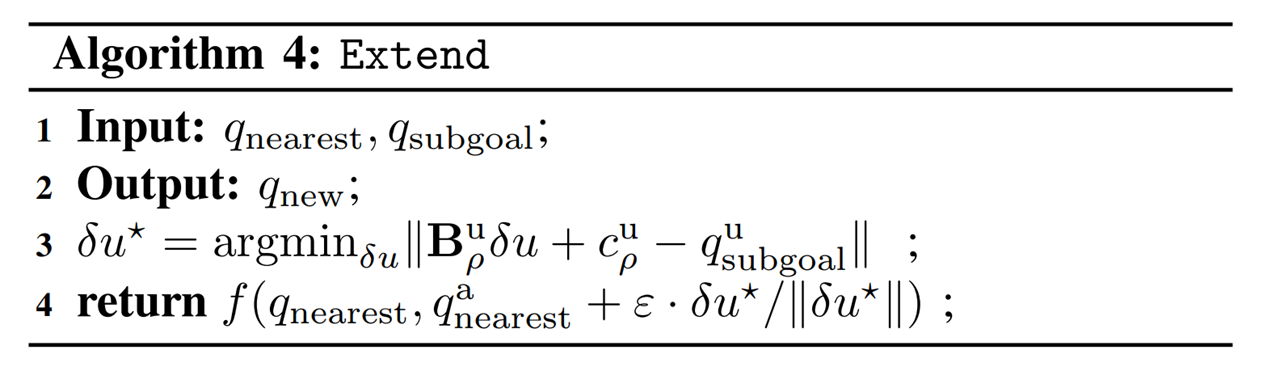

RRT for Dynamics

How do we "grow towards" a chosen subgoal?

We already know how to do this!

Inverse Dynamics

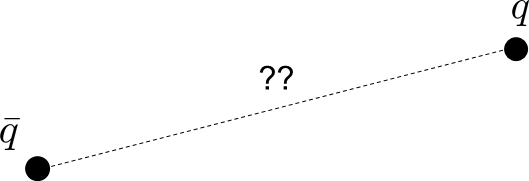

A Dynamically Consistent Distance Metric

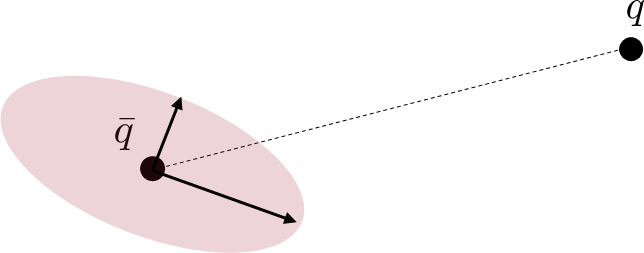

What is the right distance metric

Fix some nominal values for ,

How far is from ?

A Dynamically Consistent Distance Metric

What is the right distance metric

The least amount of "Effort"

to reach the goal

Fix some nominal values for ,

How far is from ?

A Dynamically Consistent Distance Metric

What is the right distance metric

What is the right distance metric

Fix some nominal values for ,

How far is from ?

The least amount of "Effort"

to reach the goal

A Dynamically Consistent Distance Metric

We can derive a closed-form solution under a linearization of the dynamics.

Linearize the dynamics $q_{+} = f(q, u)$ around the nominal $(\bar{q}, \bar{u})$ (no movement), using the Jacobian of the dynamics $B = \partial f / \partial u$ evaluated at $(\bar{q}, \bar{u})$.

Locally, the dynamics are: $q_{+} \approx \bar{q} + B\,(u - \bar{u})$

Mahalanobis Distance induced by the Jacobian: $d(q, \bar{q}) = (q - \bar{q})^\top (B B^\top)^{-1} (q - \bar{q})$

- Large Singular Values: Less Required Input

- Zero Singular Values: Requires Infinite Input (in practice, requires regularization)
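A minimal sketch of the regularized metric, assuming `B` is the input Jacobian at $(\bar{q}, \bar{u})$ and `eps` is a small regularizer for zero singular values. For a full-rank `B`, the unregularized value equals the squared norm of the least-effort input $\delta u = B^{+}(q - \bar{q})$; the example `B` is hypothetical.

```python
import numpy as np

def reachability_distance(q, q_bar, B, eps=1e-6):
    """Mahalanobis distance induced by the input Jacobian B = df/du at (q_bar, u_bar):
    d = (q - q_bar)^T (B B^T + eps I)^{-1} (q - q_bar), the squared norm of the
    least-effort input that reaches q under the linearized dynamics."""
    dq = np.asarray(q, dtype=float) - np.asarray(q_bar, dtype=float)
    sigma = B @ B.T + eps * np.eye(len(dq))    # eps regularizes zero singular values
    return float(dq @ np.linalg.solve(sigma, dq))

# Hypothetical B: the input moves the first coordinate easily (singular value 2)
# but cannot move the second coordinate at all (singular value 0).
B = np.array([[2.0, 0.0],
              [0.0, 0.0]])
q_bar = np.zeros(2)
print(reachability_distance([1.0, 0.0], q_bar, B))  # ~0.25: along a large singular direction
print(reachability_distance([0.0, 1.0], q_bar, B))  # ~1e6 (= 1/eps): unreachable through this B
```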

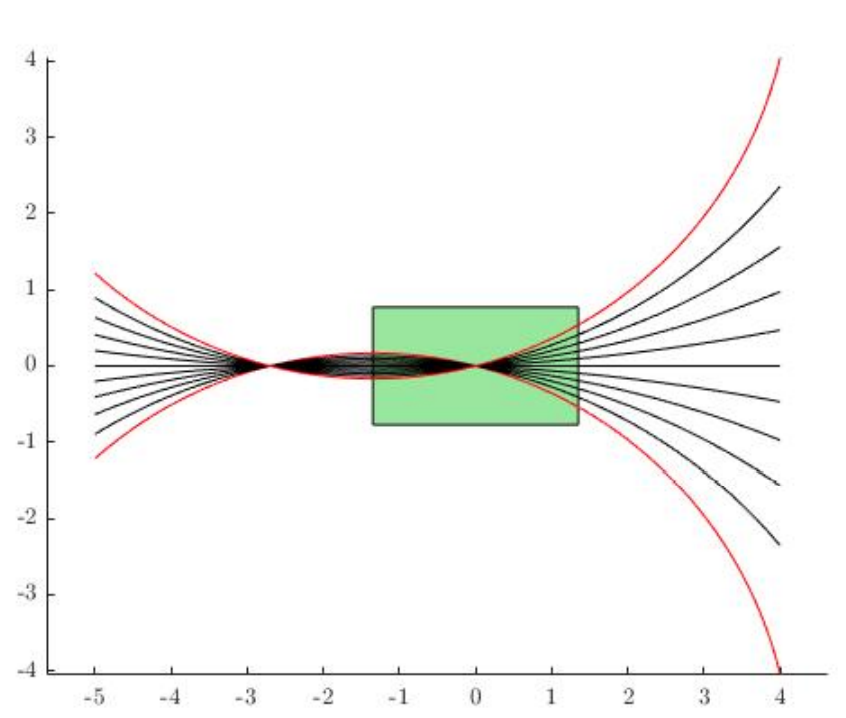

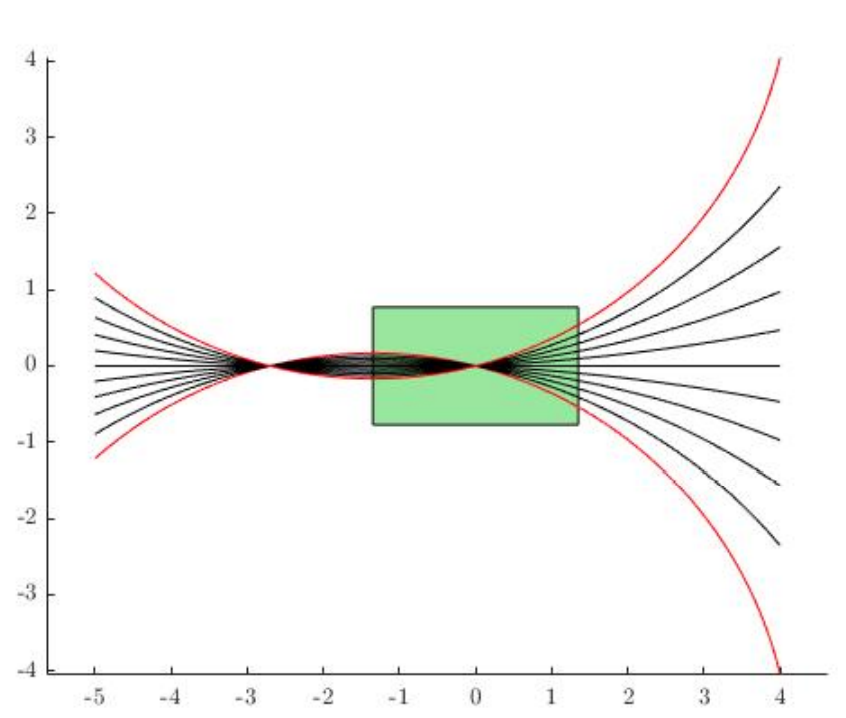

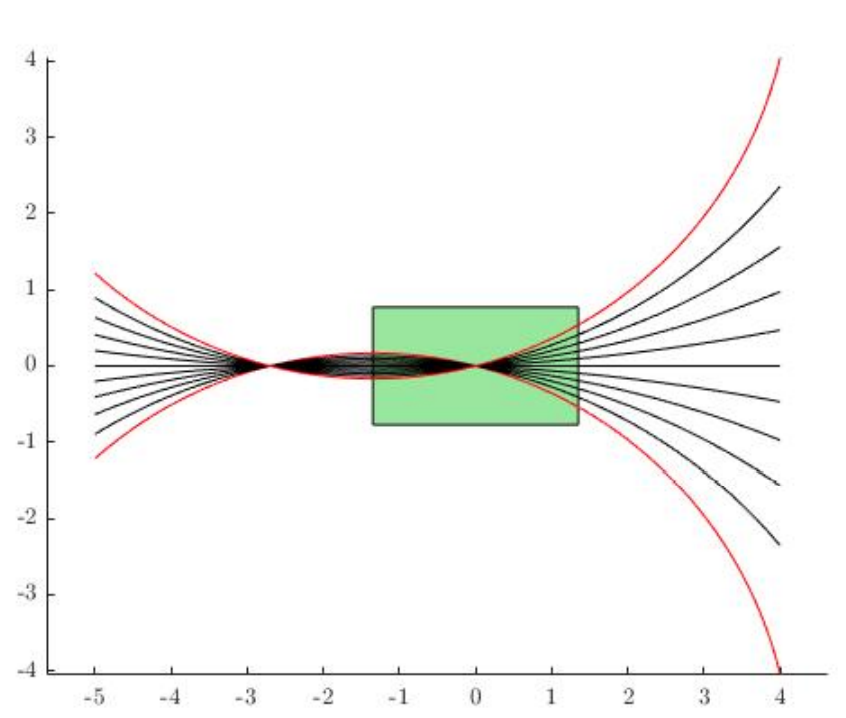

A Dynamically Consistent Distance Metric

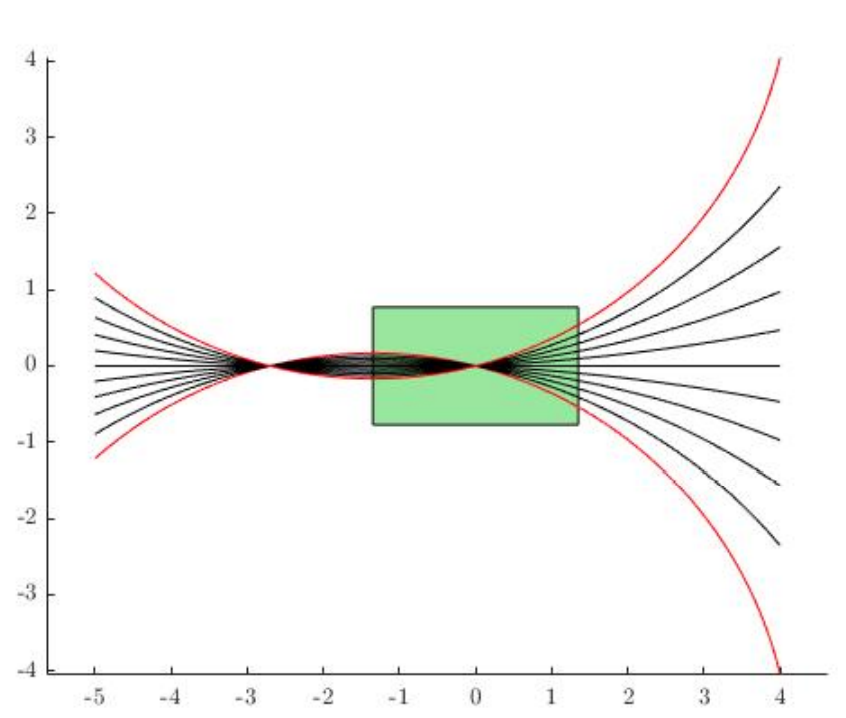

Contact problem strikes again.

According to this metric, infinite distance if no contact is made!

What if there is no contact?

Mahalanobis Distance induced by the Jacobian

A Dynamically Consistent Distance Metric

Again, smoothing comes to the rescue!

Mahalanobis Distance induced by the Jacobian

A Dynamically Consistent Distance Metric

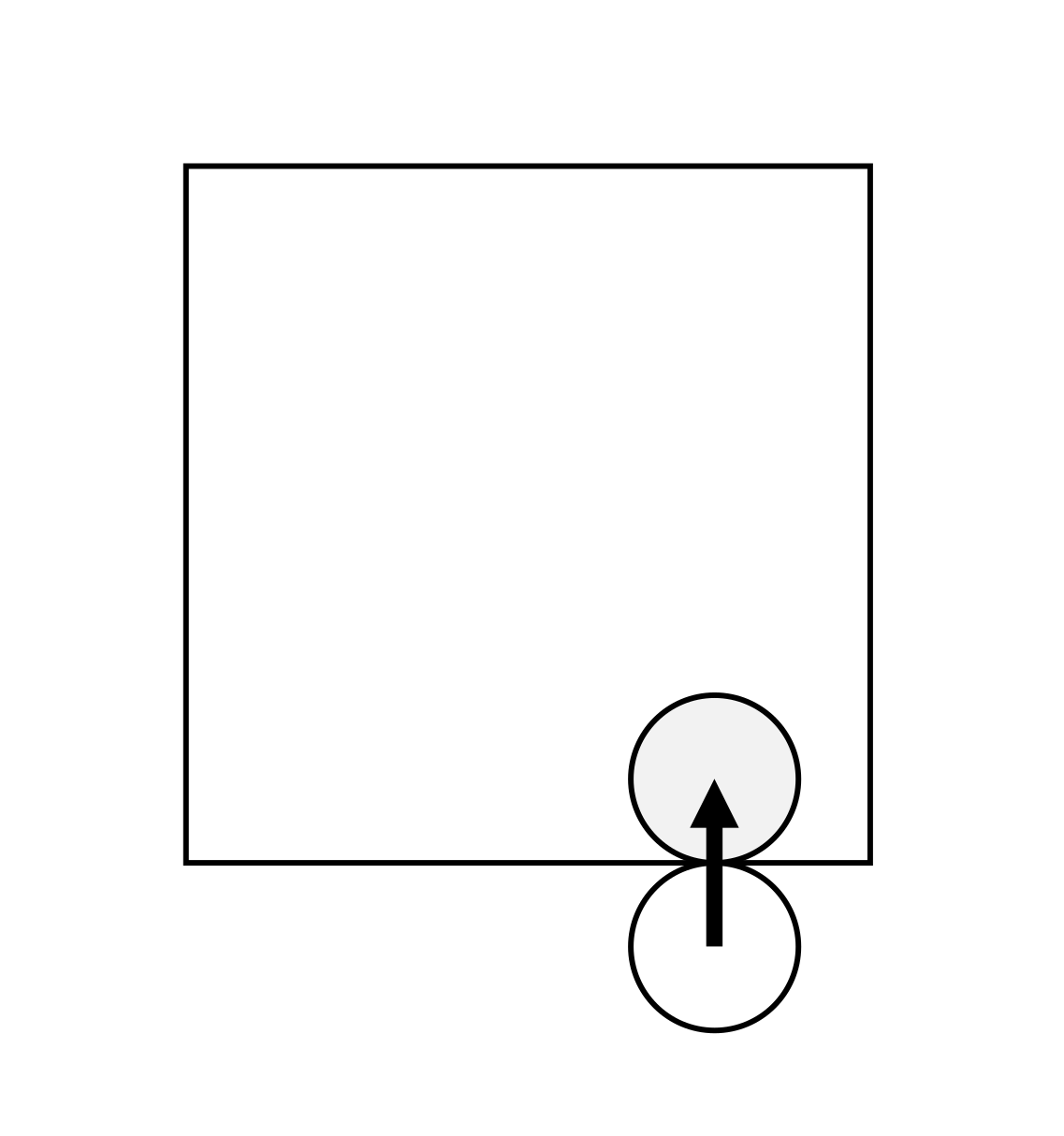

Now we can apply RRT to contact-rich systems!

A Dynamically Consistent Distance Metric

Now we can apply RRT to contact-rich systems!

However, these still require lots of random extensions!

A Dynamically Consistent Distance Metric

Now we can apply RRT to contact-rich systems!

However, these still require lots of random extensions!

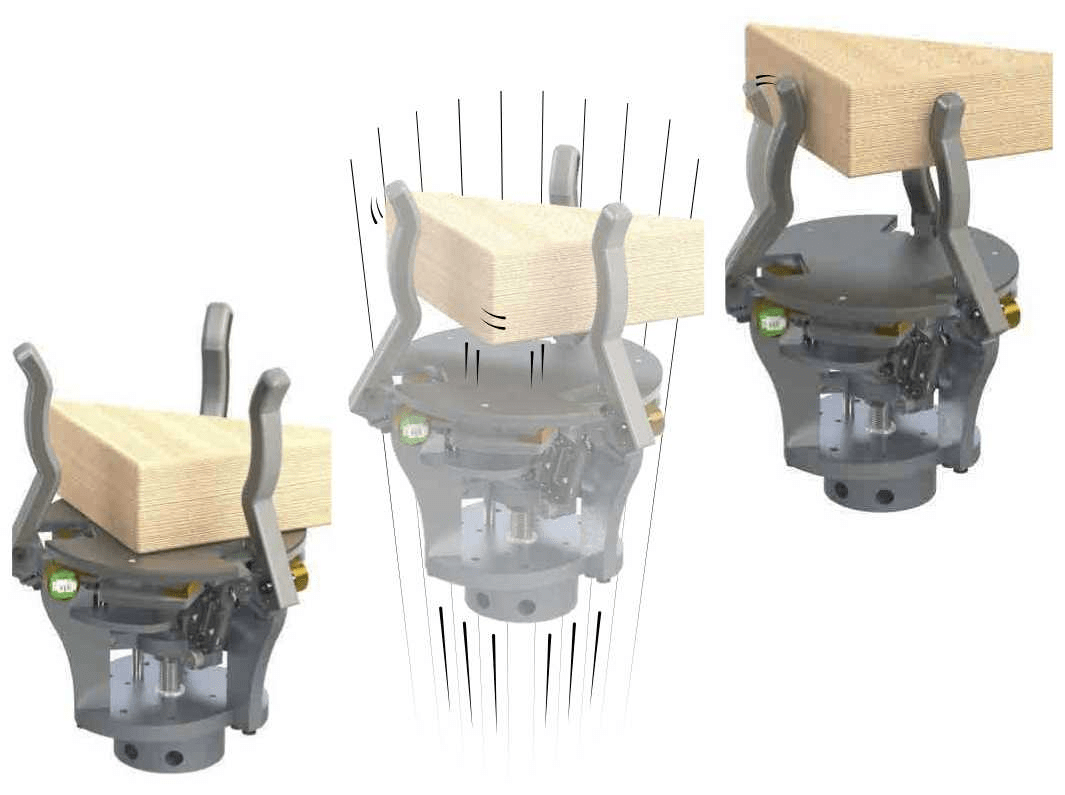

With some probability, place the actuated bodies in a different configuration (regrasping / contact sampling).

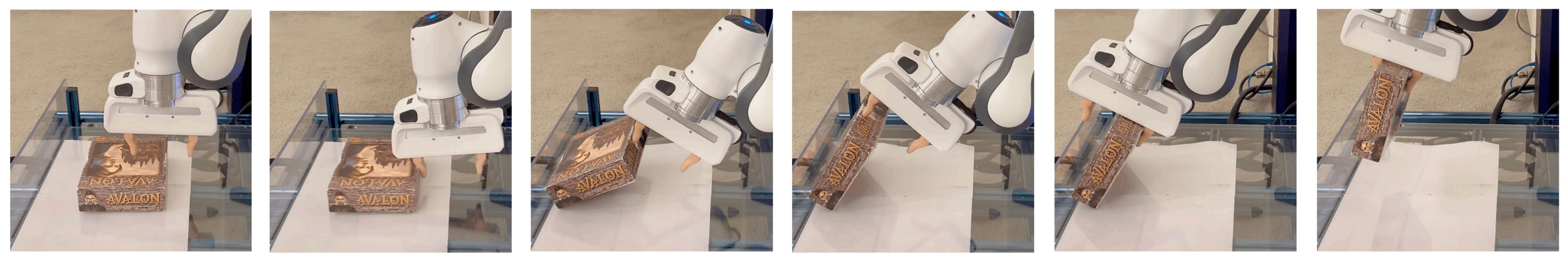

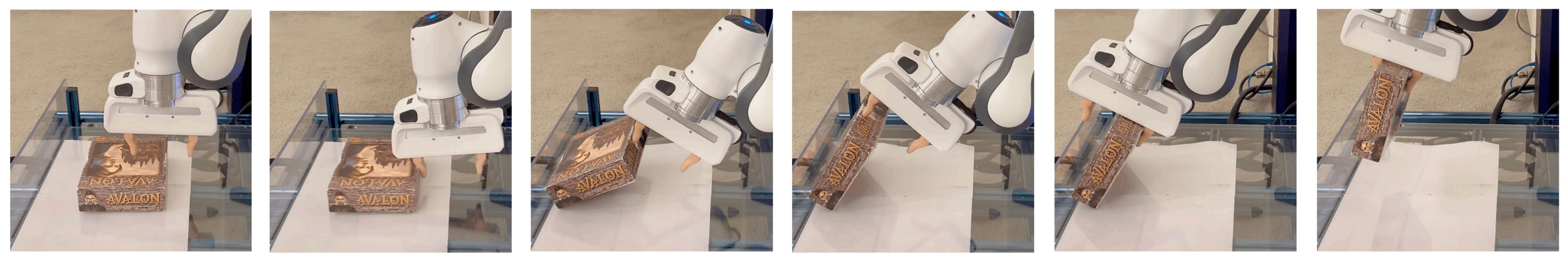

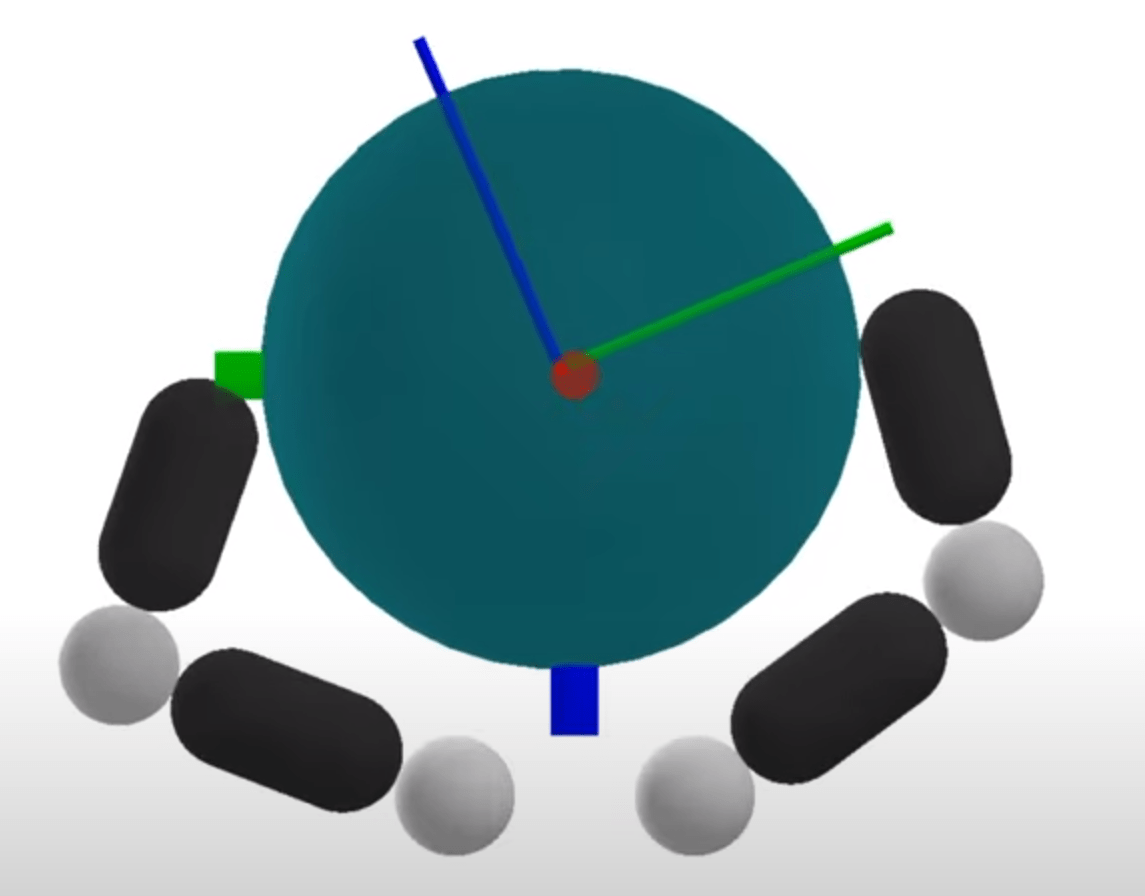

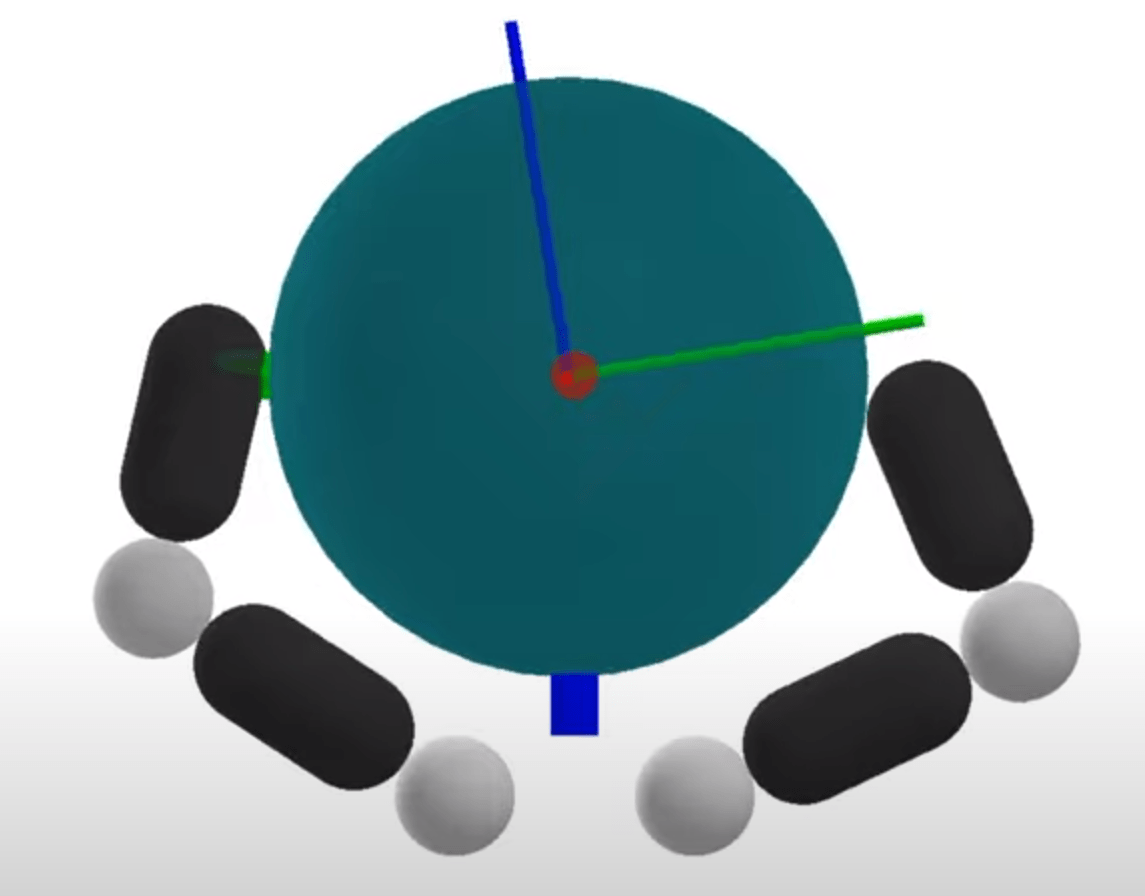

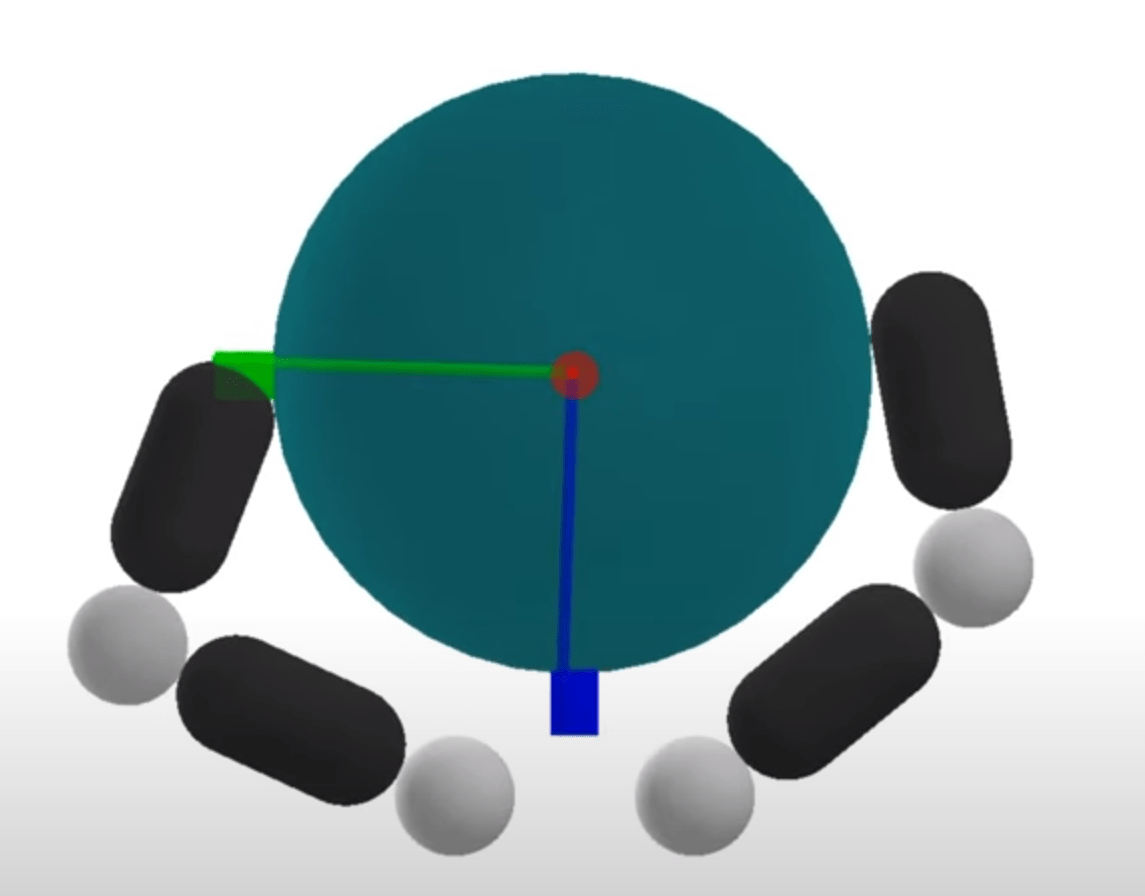

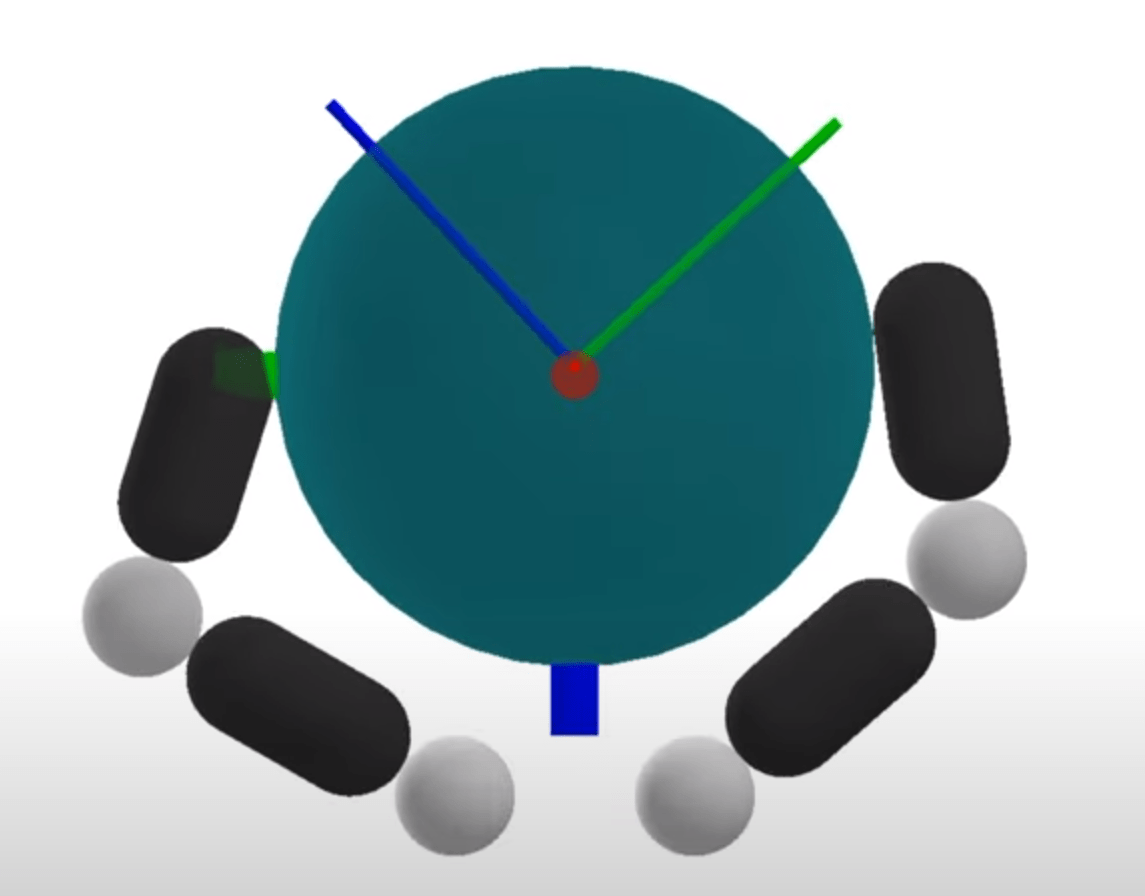

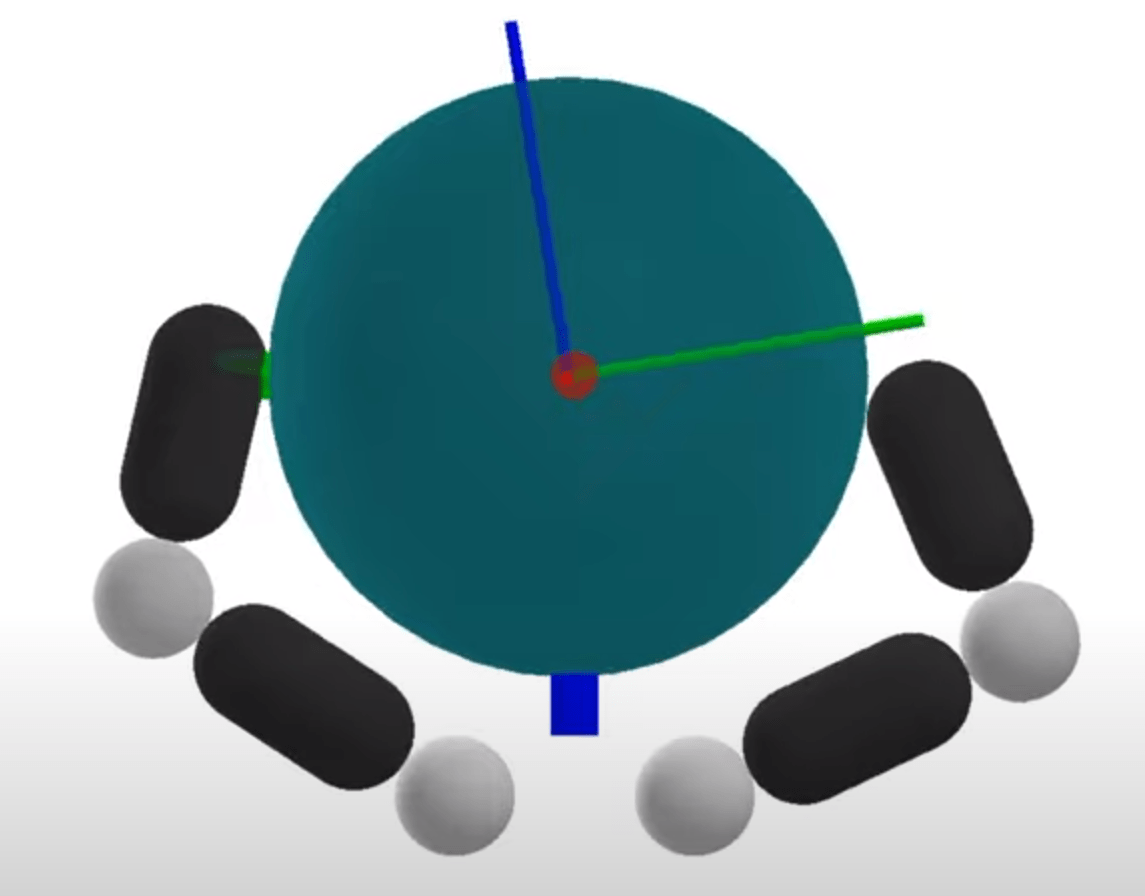

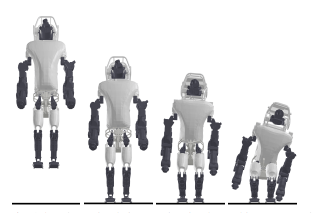

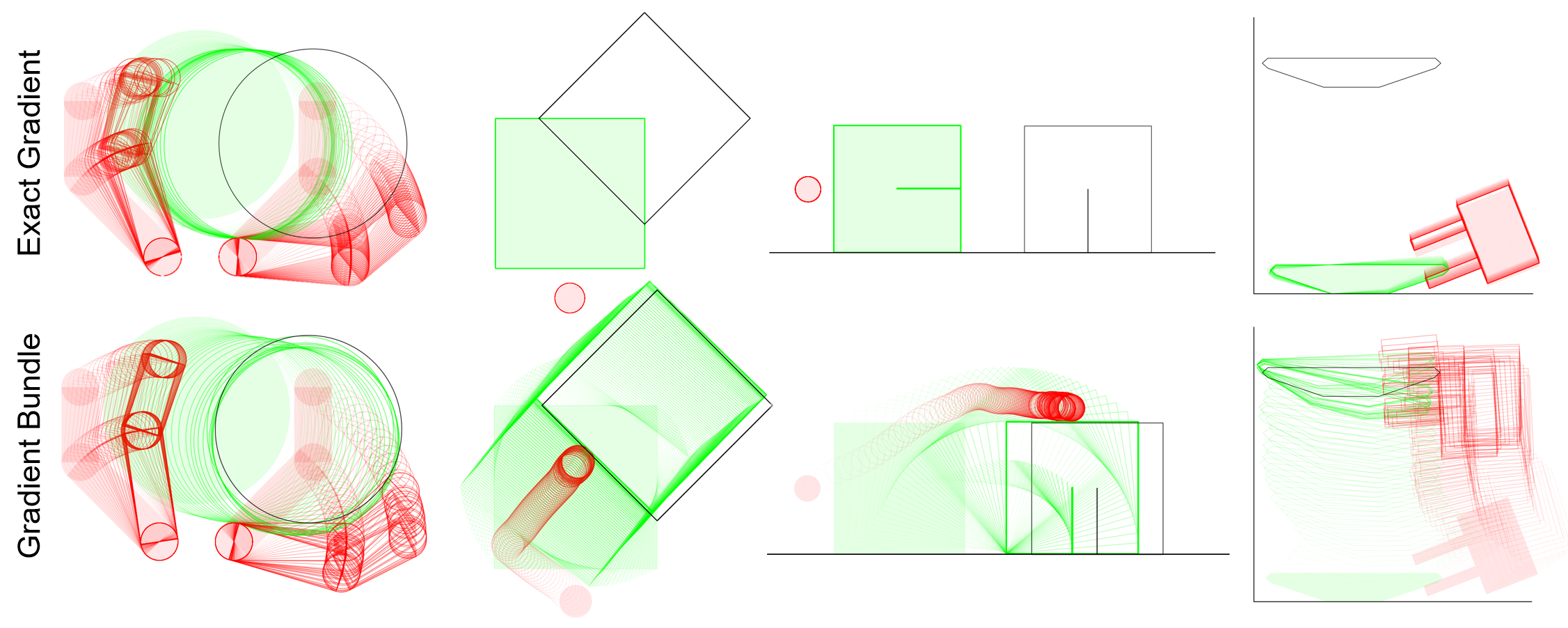

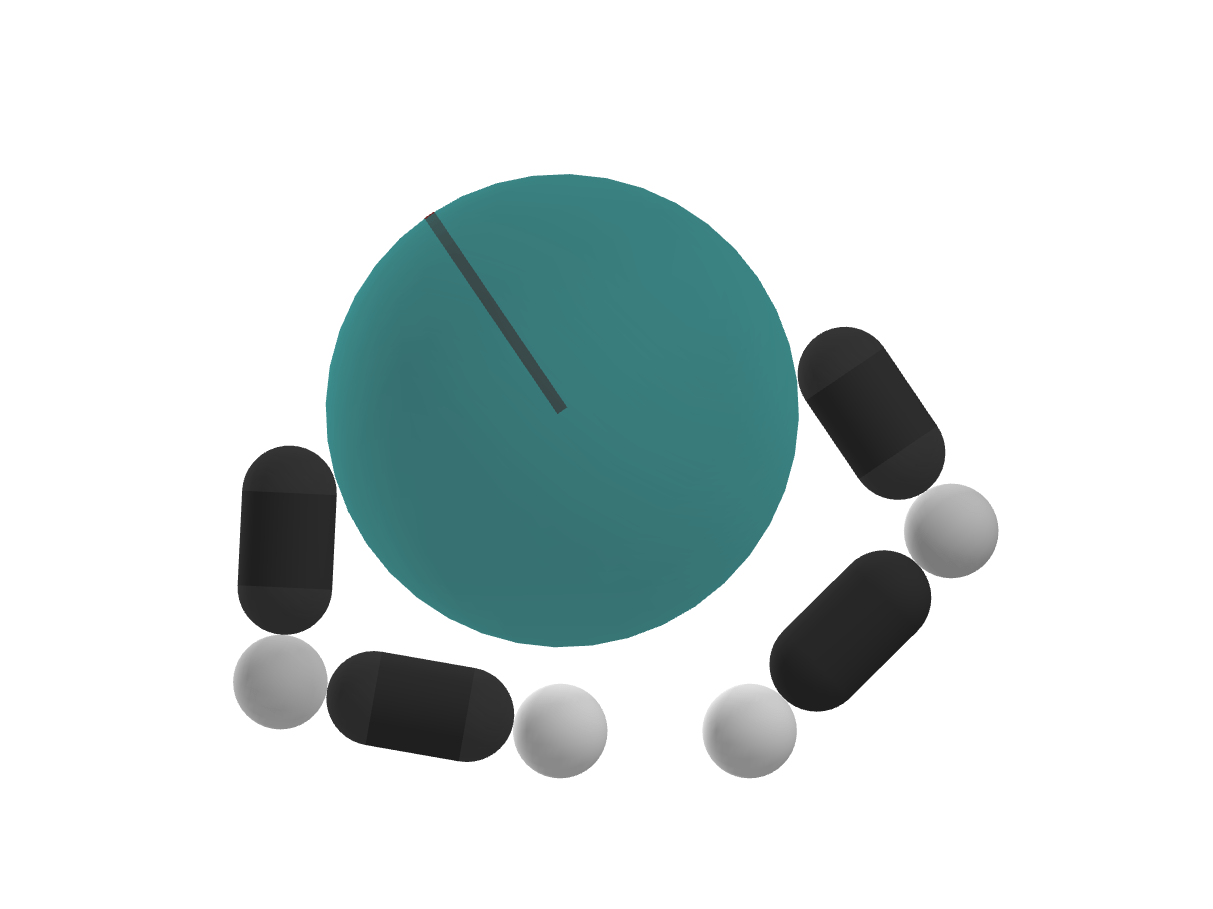

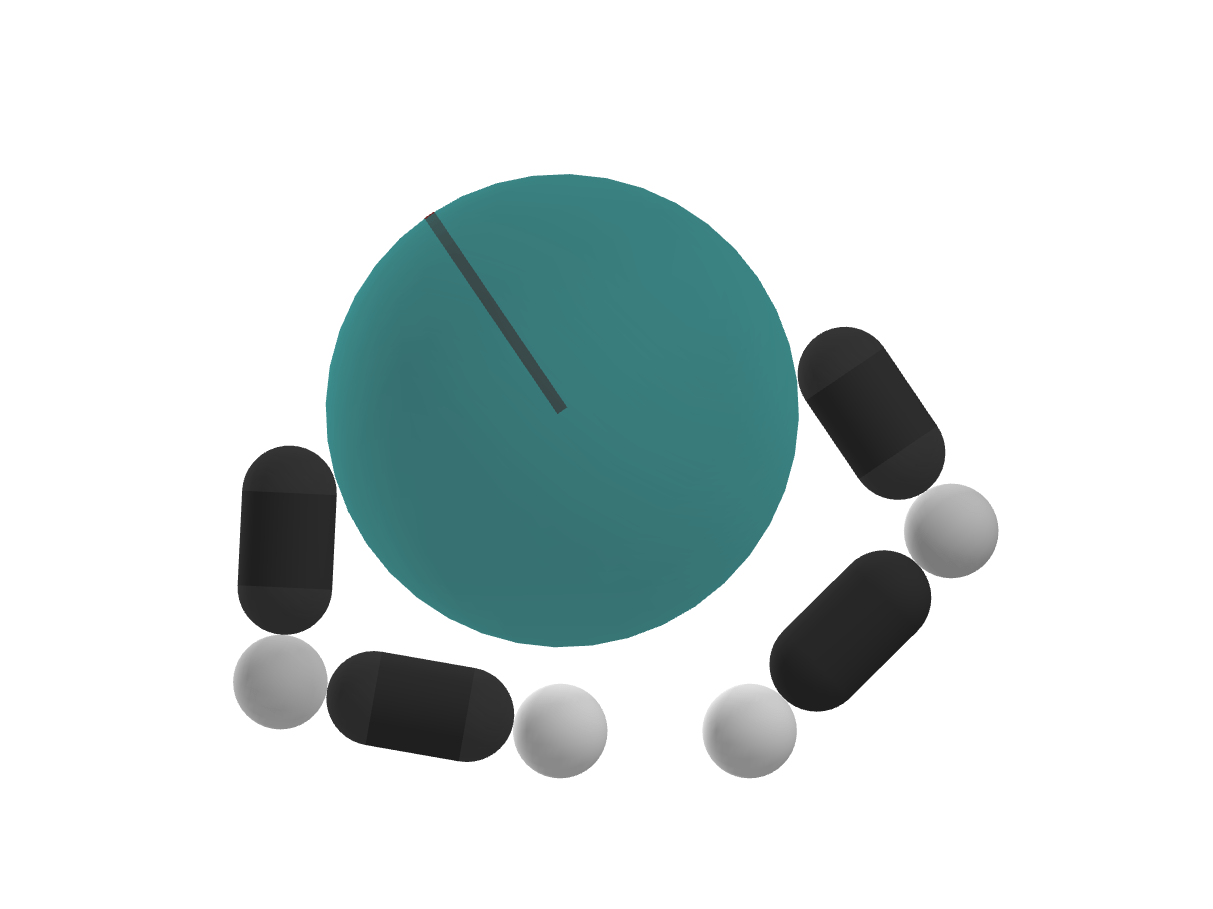

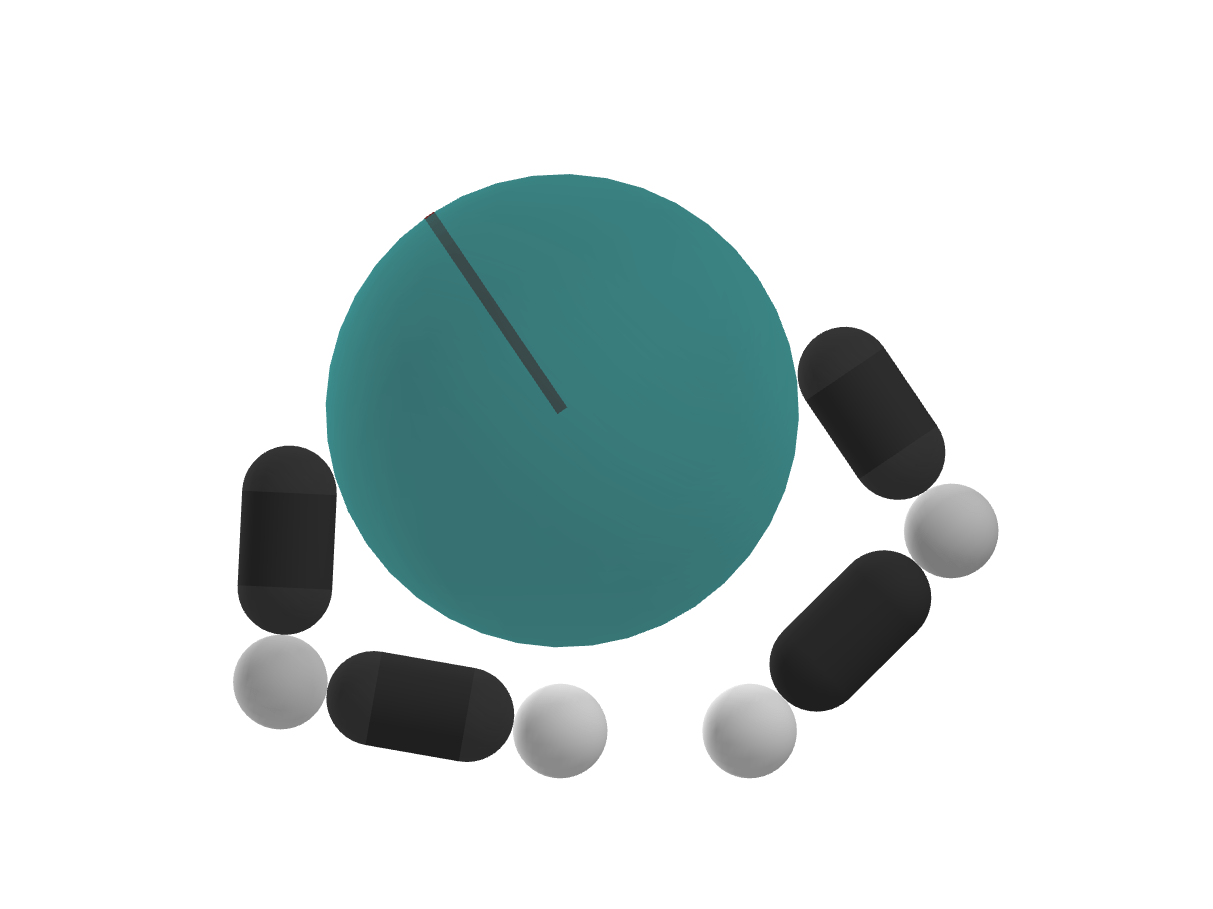

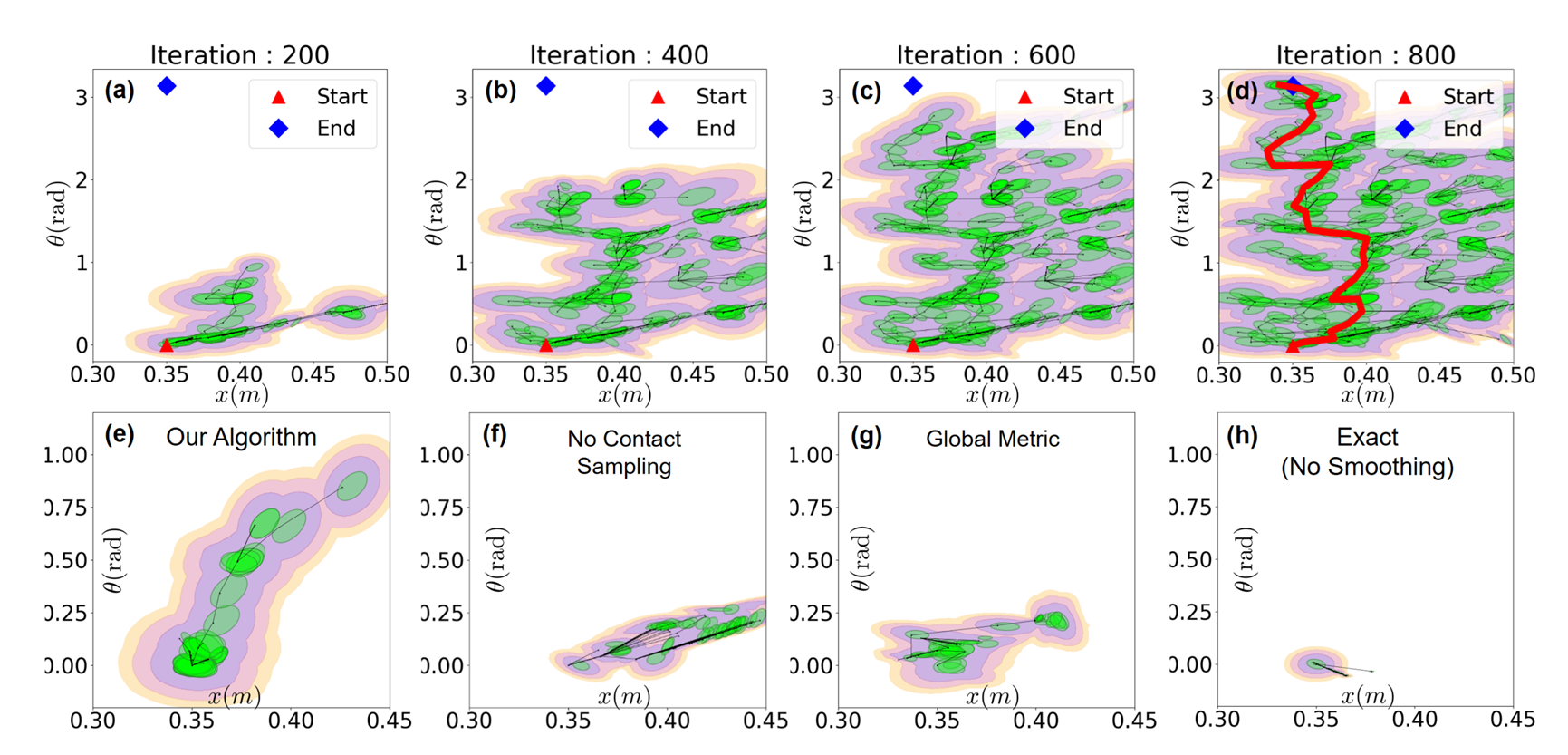

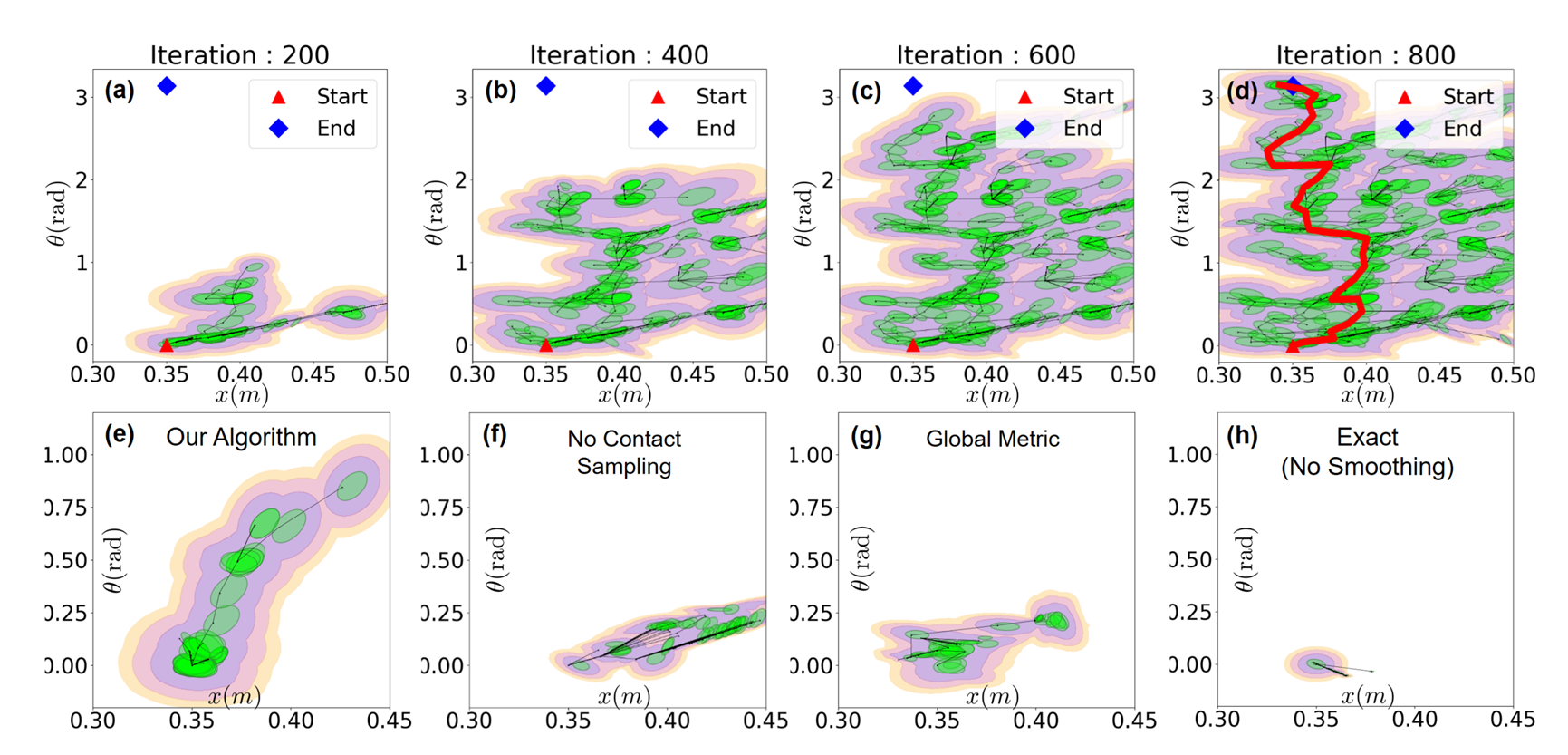

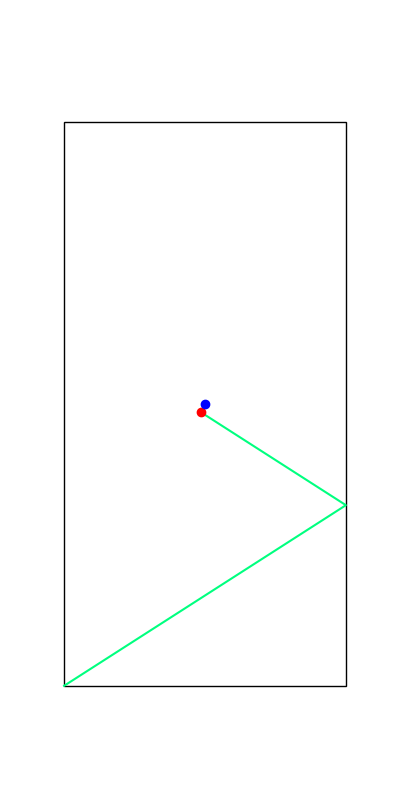

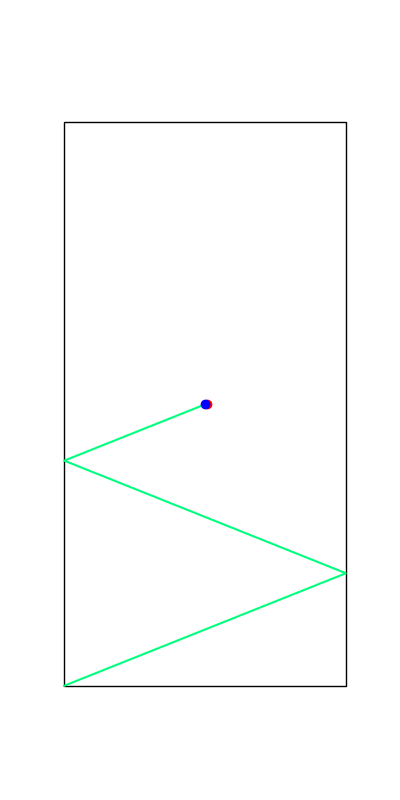

Contact-Rich RRT with Dynamic Smoothing

Our method can find solutions through contact-rich systems in a few iterations (~1 minute)!

Contact-Rich RRT with Dynamic Smoothing

Without regrasping, the tree grows slowly.

Contact-Rich RRT with Dynamic Smoothing

Using a global Euclidean metric hinders the growth of the tree.

Contact-Rich RRT with Dynamic Smoothing

Without dynamics smoothing, the tree gets completely stuck.

Efficient Global Planning for

Highly Contact-Rich Systems

Fast Solution Time

Beyond Local Solutions

Contact Scalability

RRT with Dynamics Smoothing

- H.J. Terry Suh*, Tao Pang*, Russ Tedrake, "Bundled Gradients through Contact via Randomized Smoothing", RA-L 2022, Presented at ICRA 2022

- H.J. Terry Suh, Max Simchowitz, Kaiqing Zhang, Russ Tedrake, "Do Differentiable Simulators Give Better Policy Gradients?", ICML 2022, Outstanding Paper Award

- Tao Pang*, H.J. Terry Suh*, Lujie Yang, Russ Tedrake, "Global Planning for Contact-Rich Manipulation via Local Smoothing of Quasidynamic Contact Models", TRO 2023, To be presented at ICRA 2024

Thank You

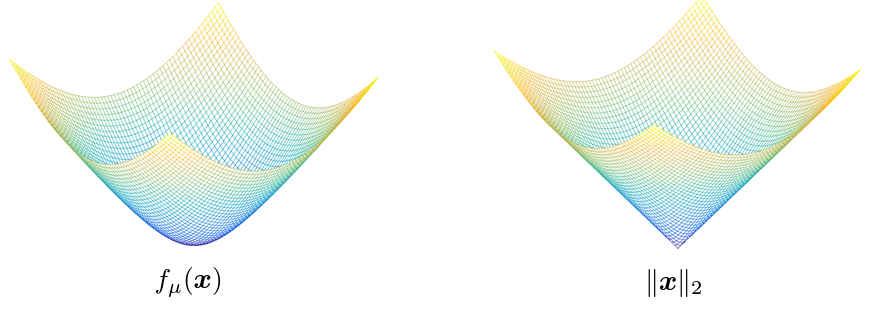

Smoothing Techniques for Non-Smooth Problems

Some non-smooth problems are successfully tackled by smooth approximations without sacrificing much to bias.

Is contact one of these problems?

*Figures taken from Yuxin Chen's slides on "Smoothing for Non-smooth Optimization"

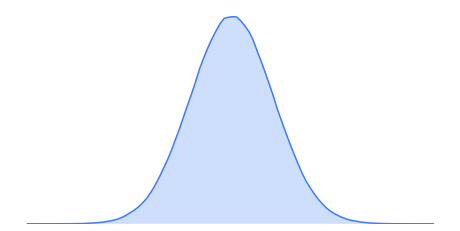

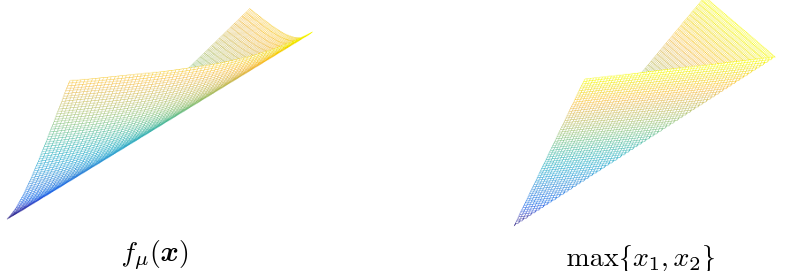

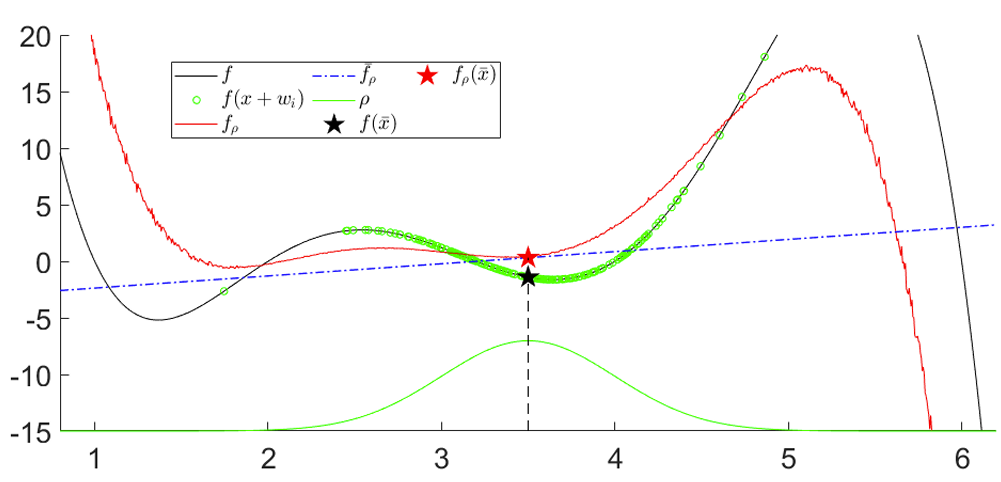

Smoothing in Optimization

We can formally define smoothing as a process of convolution with a smooth kernel $\rho$.

In addition, for purposes of optimization, we are interested in methods that provide easy access to the derivative of the smooth surrogate.

Original Function: $f(x)$

Smooth Surrogate: $\bar{f}(x) = (f * \rho)(x) = \int f(x - w)\,\rho(w)\,dw$

Derivative of the Smooth Surrogate: $\nabla \bar{f}(x)$

These provide linearization Jacobians when $f$ is the dynamics, and policy gradients when $f$ is a value function.

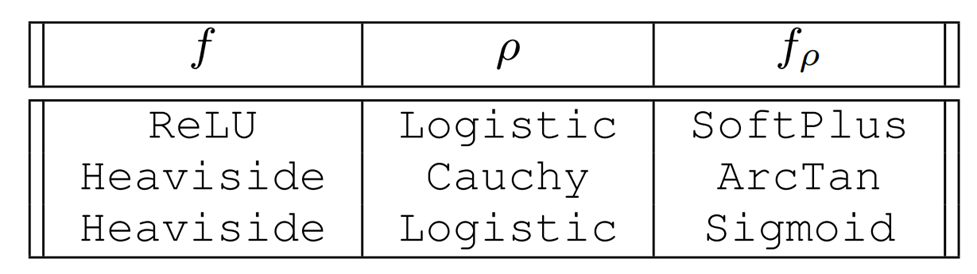

Taxonomy of Smoothing

Case 1. Analytic Smoothing

- If the original function f and the distribution ρ are sufficiently structured, we can evaluate the smooth surrogate in closed form by computing the integral.

- This can be analytically differentiated to give the derivative.

- Commonly used in ML as smooth nonlinearities.
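As an example of analytic smoothing, assuming Gaussian noise $w \sim \mathcal{N}(0, \sigma^2)$ and $f(x) = \max(0, x)$, the integral has the closed form $\bar{f}(x) = \sigma\,\varphi(x/\sigma) + x\,\Phi(x/\sigma)$ with derivative $\Phi(x/\sigma)$, where $\varphi$ and $\Phi$ are the standard normal density and CDF. The sketch compares it with a Monte Carlo estimate; the values of `x` and `sigma` are arbitrary.

```python
import math
import numpy as np

def smoothed_relu(x, sigma):
    """Closed-form E_w[max(0, x + w)] for w ~ N(0, sigma^2)."""
    z = x / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return sigma * pdf + x * cdf

def smoothed_relu_grad(x, sigma):
    """Its analytic derivative: the Gaussian CDF Phi(x / sigma)."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))

rng = np.random.default_rng(0)
x, sigma = 0.2, 0.5
monte_carlo = np.maximum(0.0, x + rng.normal(0.0, sigma, 200_000)).mean()
print(smoothed_relu(x, sigma), monte_carlo, smoothed_relu_grad(x, sigma))
```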

Taxonomy of Smoothing

Case 2. Randomized Smoothing, First-Order

- When we write the convolution as an expectation over the noise, it motivates Monte Carlo sampling methods that can estimate the value of the smooth surrogate.

- In order to obtain the derivative, we can use the Leibniz integral rule to exchange the expectation and the derivative.

- This means we can average sampled derivatives of f to approximate the derivative of the smoothed function.

- Requires access to the derivative of the original function f.

- Also known as the Reparametrization (RP) gradient.

Taxonomy of Smoothing

Case 2. Randomized Smoothing, First Order

*Figures taken from John Duchi's slides on Randomized Smoothing

Taxonomy of Smoothing

Case 2. Randomized Smoothing, Zeroth-Order

- Interestingly, we can obtain the derivative of the randomized smoothing objective WITHOUT having access to the gradients of f.

- This gradient is derived from Stein's lemma.

- Known by many names: Likelihood Ratio gradient, Score Function gradient, REINFORCE gradient.

This seems to come out of nowhere. How can this be true?

Taxonomy of Smoothing

Rethinking Linearization as a Minimizer.

- The linearization of a function provides the best linear model (i.e. up to first order) to approximate the function locally.

- We could use the same principle for a stochastic function.

- Fix a point xbar. If we sample a batch of values f(xbar + w_i) and run a least-squares procedure to find the best linear model, the result converges to the linearization of the smooth surrogate.

This also provides a convenient way to compute the gradient using only zeroth-order information: just sample and run least squares!
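A sketch of the least-squares view, assuming Gaussian perturbations with scale `sigma` and an arbitrary smooth test function: fit an affine model to sampled values around `x_bar` and read off the slope. The fitted slope estimates the gradient of the smoothed surrogate, which for small `sigma` is close to the true gradient.

```python
import numpy as np

def least_squares_gradient(f, x_bar, sigma=0.1, n=2000, seed=0):
    """Zeroth-order linearization: fit f(x_bar + w_i) ~ c + g^T w_i by least squares.
    As n grows, g converges to the gradient of the Gaussian-smoothed surrogate at x_bar."""
    rng = np.random.default_rng(seed)
    w = rng.normal(0.0, sigma, size=(n, len(x_bar)))
    y = np.array([f(x_bar + wi) for wi in w])
    A = np.hstack([np.ones((n, 1)), w])          # columns: intercept, then linear terms
    coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coeffs[0], coeffs[1:]                 # (value estimate, gradient estimate)

f = lambda x: np.sin(x[0]) + x[1] ** 2
x_bar = np.array([0.3, -1.0])
value, grad = least_squares_gradient(f, x_bar)
print(value, grad)  # grad is close to [cos(0.3), -2.0] for small sigma
```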

Tradeoffs between structure and performance.

The generally accepted wisdom: more structure gives more performance.

Analytic smoothing

Randomized Smoothing

First-Order

Randomized Smoothing

Zeroth-Order

- Requires closed-form evaluation of the integral.

- No sampling required.

- Requires access to first-order oracle (derivative of f).

- Generally less variance than zeroth-order.

- Only requires zeroth-order oracle (value of f)

- High variance.

Structure Requirements

Performance / Efficiency

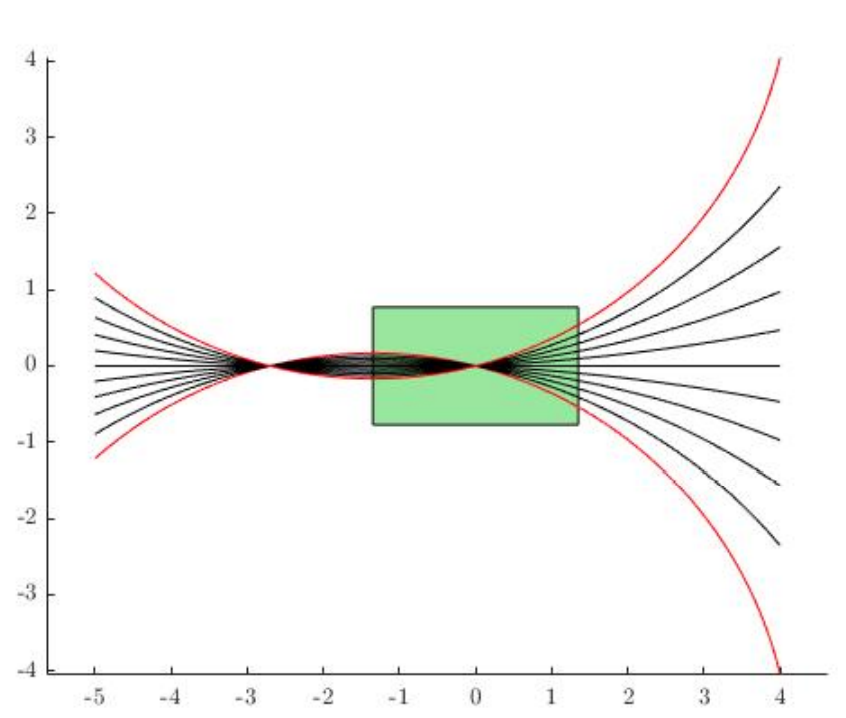

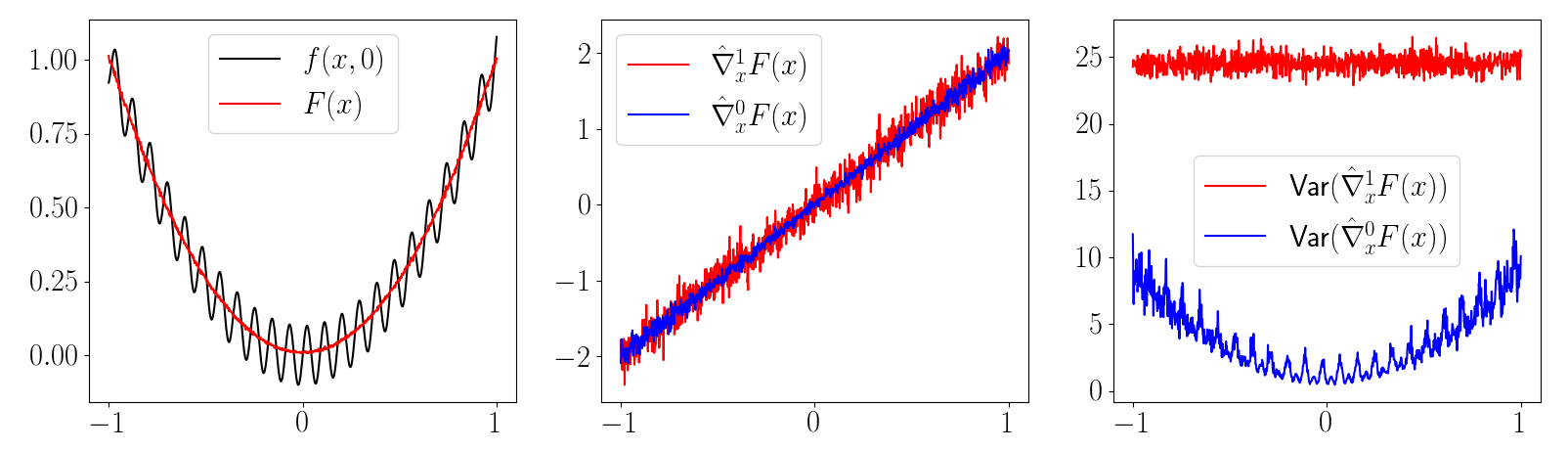

Smoothing of Optimal Control Problems

Optimal Control through Non-smooth Dynamics

Policy Optimization

Cumulative Cost

Dynamics

Policy (can be open-loop)

Dynamics Smoothing

Value Smoothing

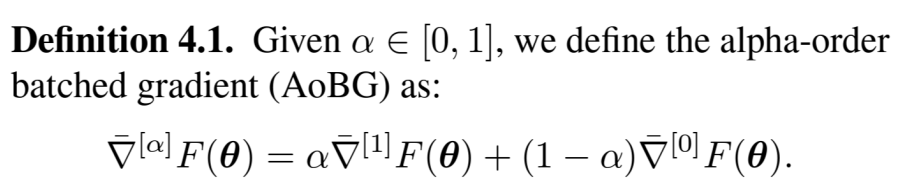

Smoothing of Optimal Control Problems

Dynamics Smoothing

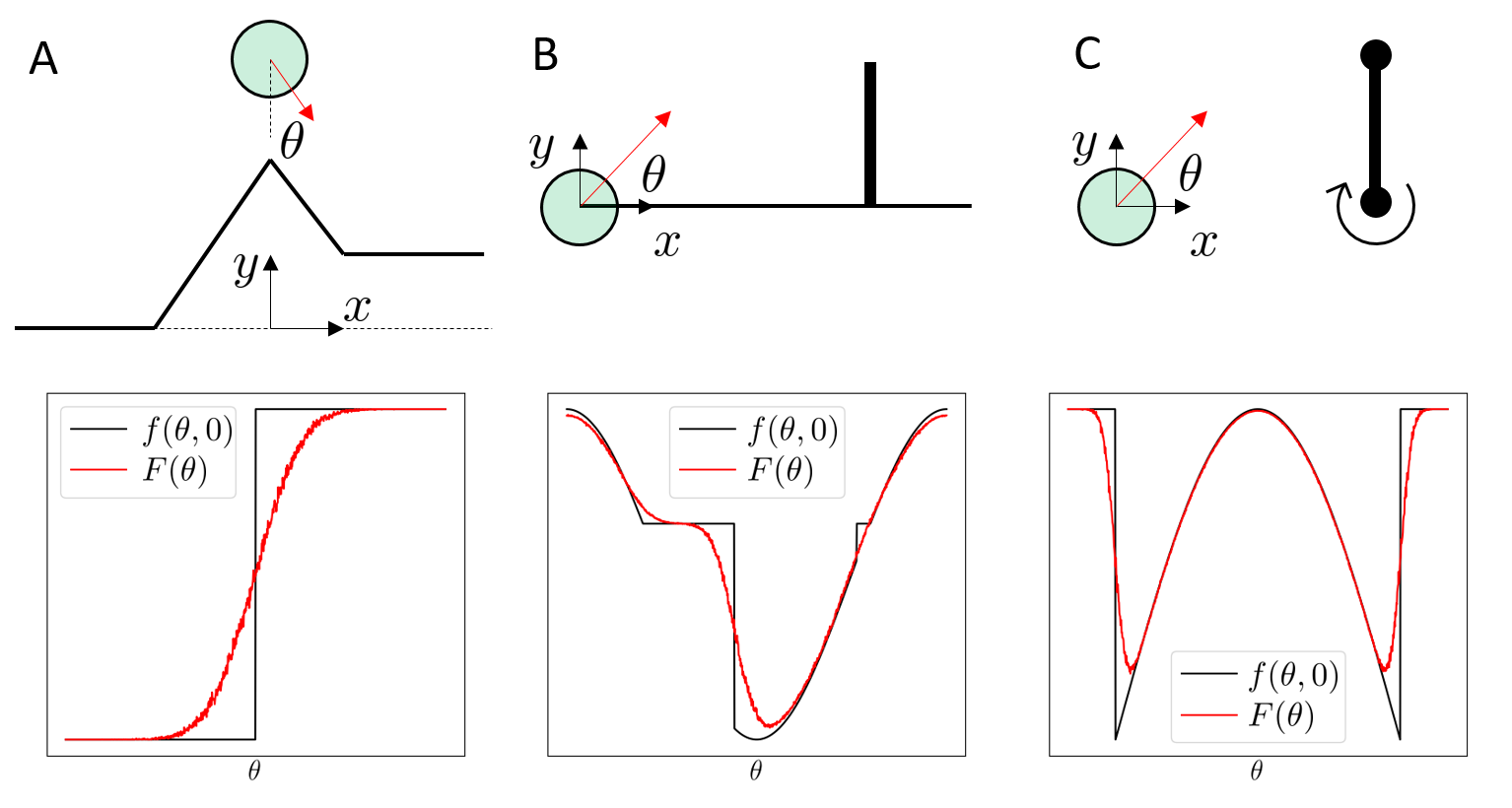

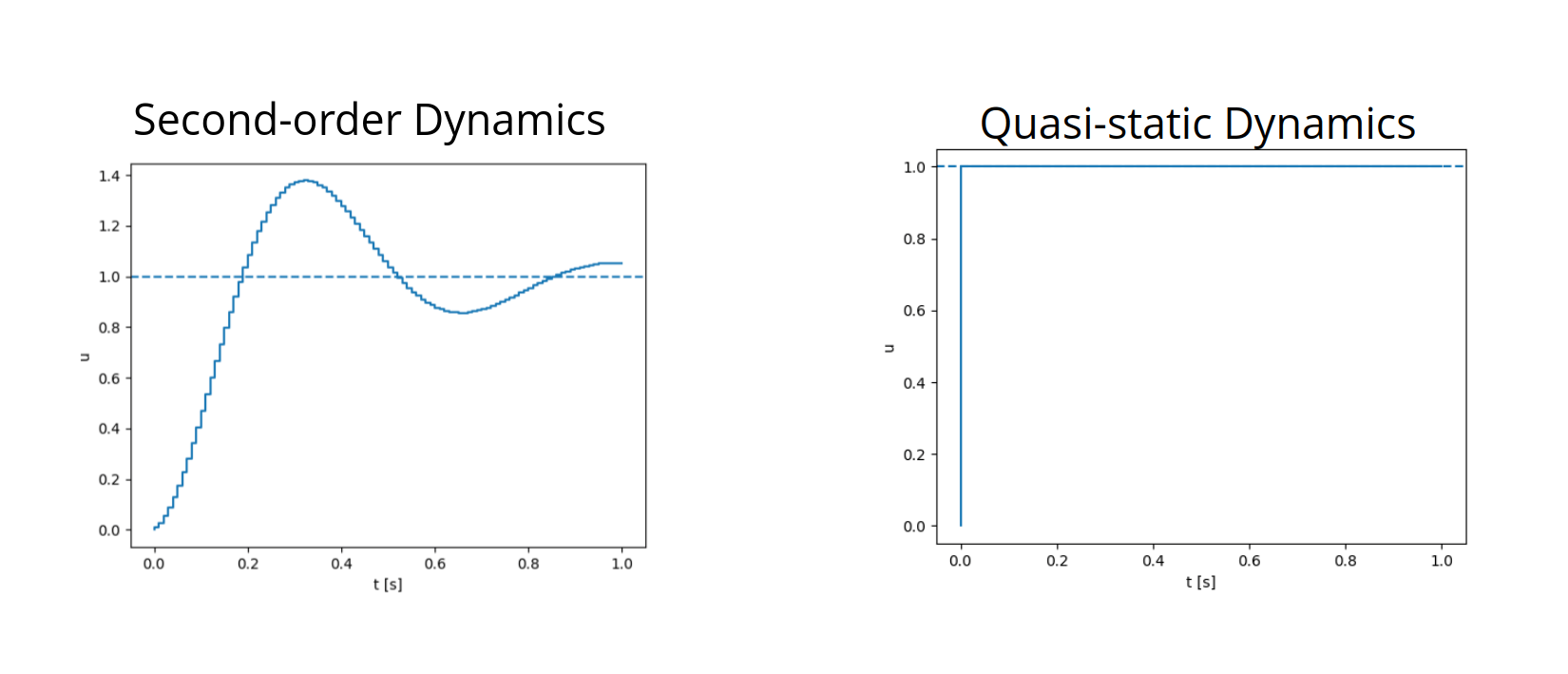

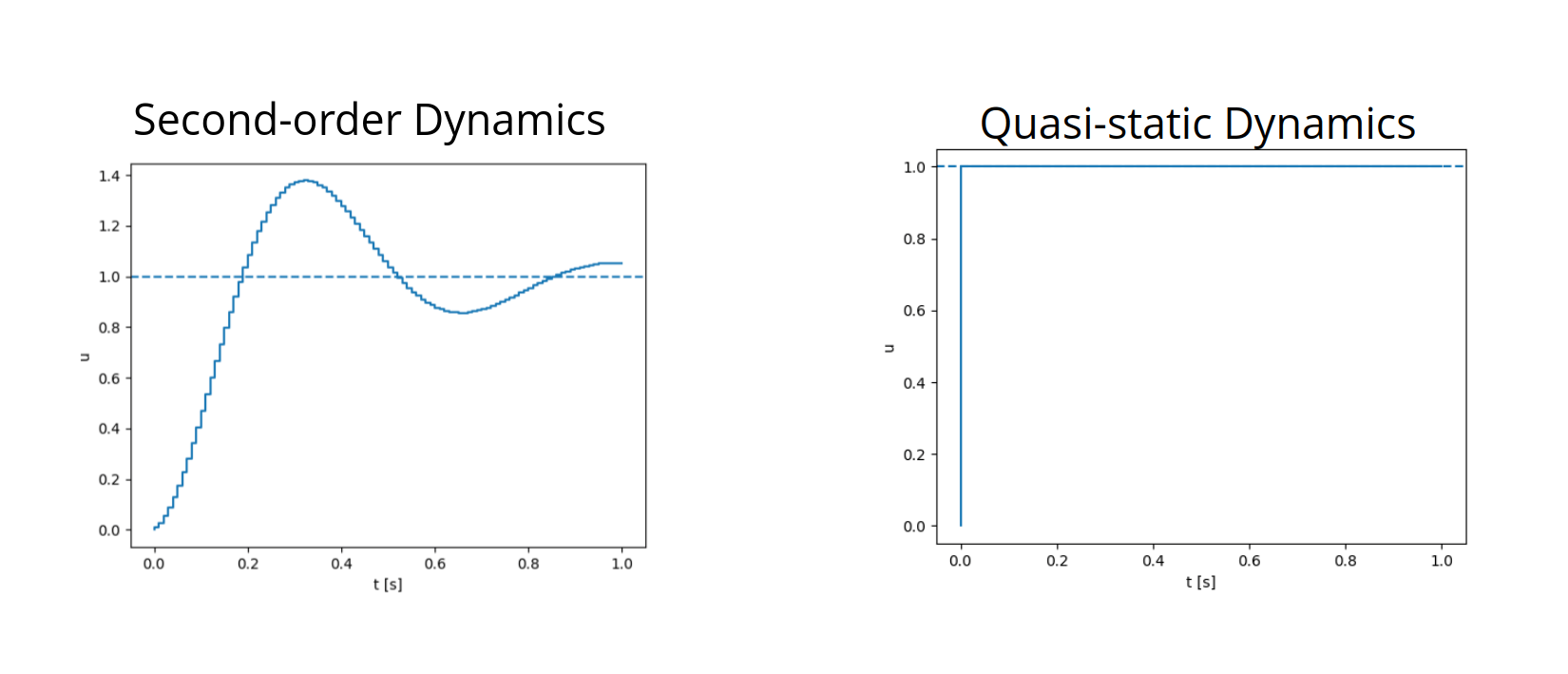

What does it mean to smooth contact dynamics stochastically?

Since some samples make contact and others do not, averaging these "discrete modes" creates force from a distance.

Smoothing of Optimal Control Problems

Dynamics Smoothing

Quadratic Programming

To numerically solve this problem, we rely on the fact that linear dynamics with quadratic cost have a known dynamic programming solution.

Until convergence:

- Roll out the current iterate of the input sequence.

- Linearize the dynamics around the trajectory.

- Solve for the optimal input under the linearized dynamics.

Sequential Quadratic Programming

Importantly, the linearization utilizes stochastic gradient estimation techniques.
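A heavily simplified sketch of this loop, assuming horizon 1 on the hypothetical 1D pushing toy (box pushed to `max(X_BOX, u)`), a trust-region weight `RHO`, and first-order randomized smoothing for the linearization. The rollout uses the exact non-smooth dynamics; only the Jacobian is smoothed, which is what lets the iterate leave the no-contact region.

```python
import numpy as np

X_BOX, GOAL = 0.0, 1.0   # hypothetical 1D pushing toy: box at X_BOX, goal at GOAL
SIGMA, RHO = 0.5, 1e-2   # assumed smoothing noise and trust-region regularization
rng = np.random.default_rng(0)

def dynamics(u):
    return np.maximum(X_BOX, u)

def smoothed_jacobian(u, n=1000):
    """First-order randomized smoothing: average the exact derivative 1[u + w > X_BOX]."""
    w = rng.normal(0.0, SIGMA, size=n)
    return np.mean((u + w > X_BOX).astype(float))

u = -1.0                                   # start out of contact
for _ in range(20):
    x_next = dynamics(u)                   # roll out the exact (non-smooth) dynamics
    b = smoothed_jacobian(u)               # linearize with the smoothed Jacobian
    # Solve min_du (x_next + b du - GOAL)^2 + RHO du^2 in closed form.
    du = -b * (x_next - GOAL) / (b * b + RHO)
    u += du
print(u, dynamics(u))
```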

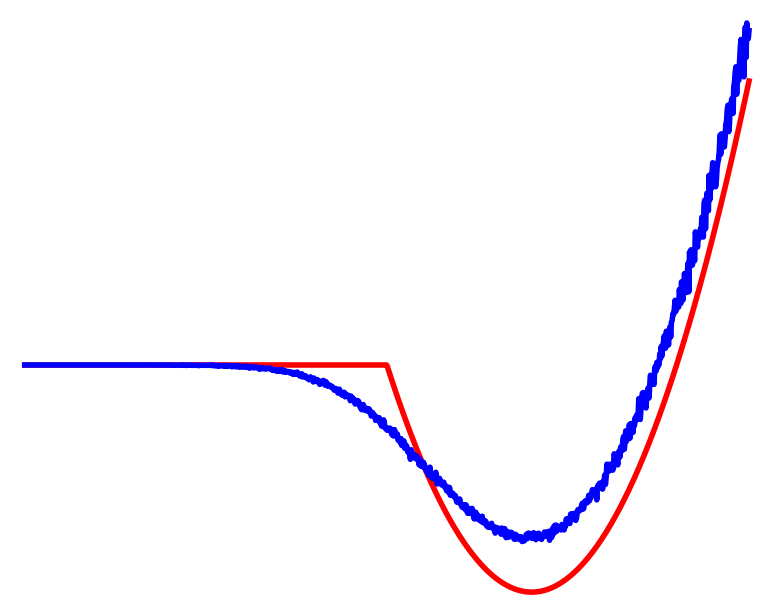

Optimal Control with Dynamics Smoothing

Exact

Smoothed

Smoothing of Value Functions.

Optimal Control through Non-smooth Dynamics

Policy Optimization

Cumulative Cost

Dynamics

Policy (can be open-loop)

Dynamics Smoothing

Value Smoothing

Recall that smoothing turns $f$ into the smooth surrogate $\bar{f} = f * \rho$.

Why not just smooth the value function directly and run policy optimization?

Smoothing of Value Functions.

Original Problem

Long-horizon problems involving contact can have terrible landscapes.

Smooth Surrogate

The benefits of smoothing are much more pronounced in the value smoothing case.

A beautiful story: noise sometimes regularizes the problem and acts as a helpful bias.

How do we take gradients of the smoothed value function?

Analytic smoothing

Randomized Smoothing

First-Order

Randomized Smoothing

Zeroth-Order

- Requires differentiability over dynamics, reward, policy.

- Generally lower variance.

- Only requires zeroth-order oracle (value of f)

- High variance.

Structure Requirements

Performance / Efficiency

Pretty much not possible.

First-Order Policy Search with Differentiable Simulation

Policy Gradient Methods in RL (REINFORCE / TRPO / PPO)

- Requires differentiability over dynamics, reward, policy.

- Generally lower variance.

- Only requires zeroth-order oracle (value of f)

- High variance.

Structure Requirements

Performance / Efficiency

It turns out there is an important question hidden here about the utility of differentiable simulators.

Do Differentiable Simulators Give Better Policy Gradients?

This is a very important question for RL, as differentiable simulators promise lower variance, faster convergence rates, and better sample efficiency.

What do we mean by "better"?

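Consider a simple stochastic optimization problem: $\min_x F(x) := \mathbb{E}_{w \sim \rho}\left[f(x + w)\right]$.

Then, we can define two different gradient estimators from samples $w_i \sim \rho$:

First-Order Gradient Estimator: $\hat{\nabla}^{[1]} F(x) = \frac{1}{N}\sum_{i=1}^{N} \nabla f(x + w_i)$

Zeroth-Order Gradient Estimator: $\hat{\nabla}^{[0]} F(x) = -\frac{1}{N}\sum_{i=1}^{N} f(x + w_i)\, \nabla \log \rho(w_i)$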

What do we mean by "better"?

First-Order Gradient Estimator

Zeroth-Order Gradient Estimator

Bias

Variance

Common lesson from stochastic optimization:

1. Both are unbiased under sufficient regularity conditions

2. First-order generally has less variance than zeroth order.

What happens in Contact-Rich Scenarios?


Bias

Variance

Bias

Variance

We show two cases where the commonly accepted wisdom is not true.

1st Pathology: First-Order Estimators CAN be biased.

2nd Pathology: First-Order Estimators can have MORE variance than zeroth-order.

Bias from Discontinuities

1st Pathology: First-Order Estimators CAN be biased.

What's worse: the empirical variance is also zero!

(The estimator is absolutely sure about an estimate that is wrong.)

This is not just a pathology; it could happen quite often in contact.

Empirical Bias leads to High Variance

Perhaps it's a modeling artifact? Contact can be softened.

- In the low-sample regime, there is no statistical way to distinguish between an actual discontinuity and a very stiff function.

- Generally, stiff relaxations lead to high variance. As the relaxation converges to the true discontinuity, the variance blows up to infinity and suddenly turns into bias!

- The zeroth-order estimator escapes this by always considering the cumulative effect over some finite interval.
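The blow-up can be sketched with an assumed sigmoid relaxation $s(k x)$ of a step, where `k` plays the role of stiffness. As `k` grows, the empirical variance of per-sample first-order gradients grows roughly linearly in `k`, while the zeroth-order samples stay bounded because $|s| \le 1$. The noise scale and evaluation point are assumptions.

```python
import numpy as np

SIGMA = 0.5
rng = np.random.default_rng(0)

def sigmoid(z):
    return 0.5 * (1.0 + np.tanh(0.5 * z))   # numerically stable logistic function

def per_sample_estimates(k, x=0.1, n=100_000):
    """Per-sample first- and zeroth-order gradients of E_w[sigmoid(k (x + w))]."""
    w = rng.normal(0.0, SIGMA, size=n)
    s = sigmoid(k * (x + w))
    first = k * s * (1.0 - s)                          # exact pointwise derivative
    zeroth = (s - sigmoid(k * x)) * w / SIGMA**2       # score-function estimate with baseline
    return first, zeroth

for k in [1, 10, 100, 1000]:
    first, zeroth = per_sample_estimates(k)
    print(f"k={k:5d}  first-order var {first.var():9.3f}  zeroth-order var {zeroth.var():6.3f}")
```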

Variance of First-Order Estimators

2nd Pathology: First-order Estimators CAN have more variance than zeroth-order ones.

Scales with Gradient

Scales with Function Value

Scales with dimension of decision variables.

High-Variance Events

Case 1. Persistent Stiffness

- Contact modeling using the penalty method is a bad idea for differentiable policy search.

- Gradients always scale with the spring stiffness!

Case 2. Chaotic

- High variance depending on the initial condition.

- The zeroth-order estimator is always bounded by the function value, but the gradients can be very large.

Motivating Contact-rich RRT

How do we overcome local minima of local gradient-based methods?

Our ideal solution

The RRT Algorithm

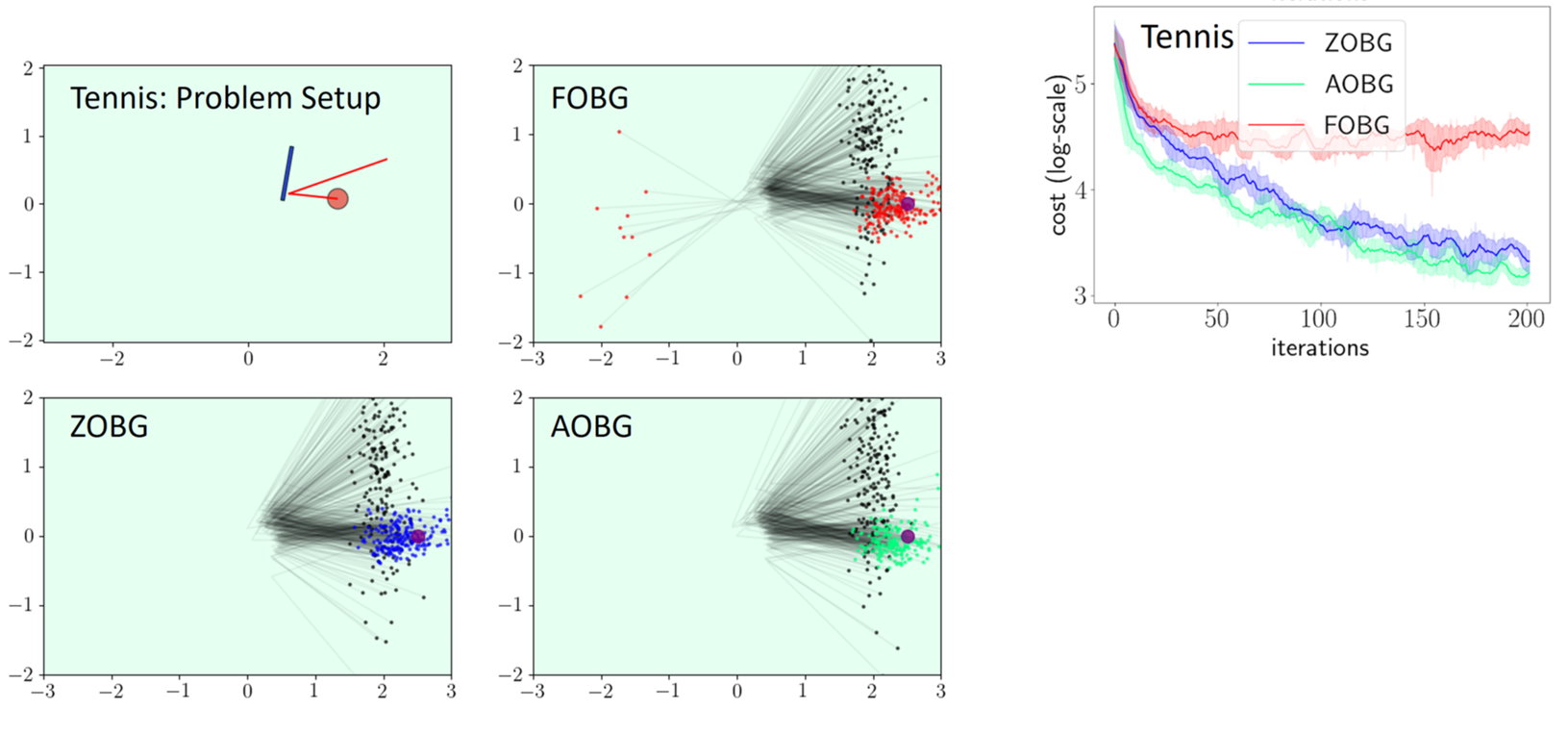

Global Search with Smoothing: Contact-Rich RRT

Sampling-Based Motion Planning is a popular solution in robotics for complex non-convex motion planning.

How do we define notions of nearest?

- Nearest states in Euclidean space are not necessarily reachable according to system dynamics (Dubins car).

How do we extend (steer)?

- Typically, kinodynamic RRT solves trajopt.

- Potentially a bit costly.

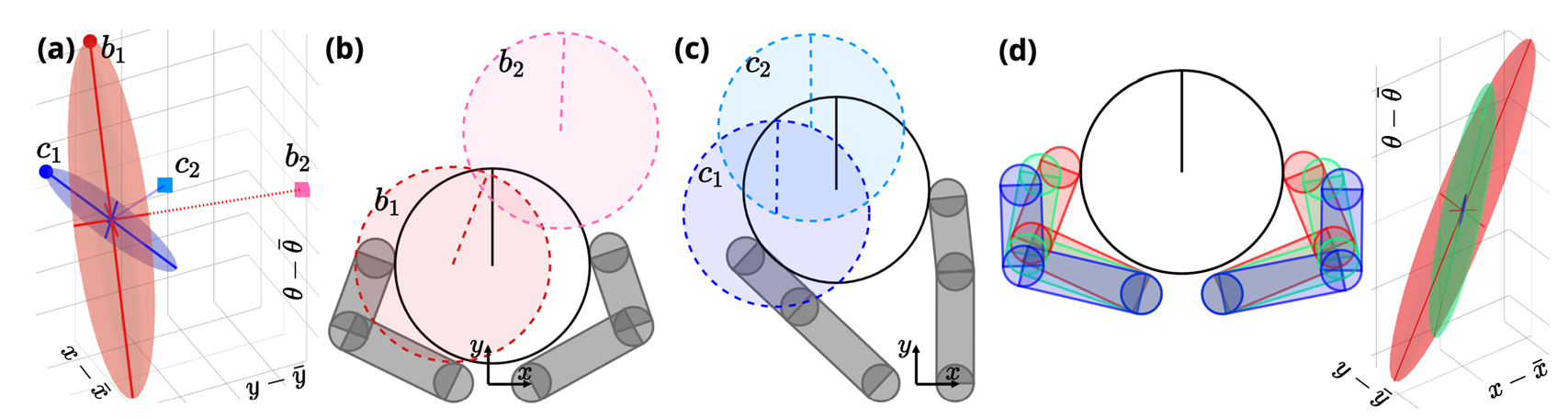

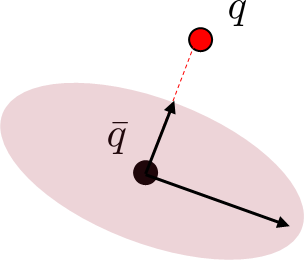

Reachability-Consistent Distance Metric

Reachability-based Mahalanobis Distance

How do we come up with a distance metric between q and qbar in a dynamically consistent manner?

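Consider a one-step input linearization of the system around $(\bar{q}, \bar{u})$: $q_{+} \approx f(\bar{q}, \bar{u}) + B\,(u - \bar{u})$, where $B = \partial f / \partial u$ evaluated at $(\bar{q}, \bar{u})$.

Then we could consider a "reachability ellipsoid" under these linearized dynamics: the set of configurations reachable with a unit-norm input, $\{ f(\bar{q}, \bar{u}) + B\,\delta u : \lVert \delta u \rVert \le 1 \}$.

Note: For quasidynamic formulations, ubar is a position command, which we set as the actuated part of qbar.

Reachability Ellipsoid

Intuitively, if B lengthens the direction towards q from a uniform ball, q is easier to reach.

On the other hand, if B shrinks the direction towards q, q is hard to reach.

Mahalanobis Distance of an Ellipsoid

The B matrix induces a natural quadratic form for an ellipsoid: $(q - \mu)^\top (B B^\top)^{-1} (q - \mu) \le 1$, with $\mu = f(\bar{q}, \bar{u})$.

Mahalanobis Distance using 1-Step Reachability: $d(q, \bar{q}) = (q - \mu)^\top (B B^\top)^{-1} (q - \mu)$

Note: if $BB^\top$ is not invertible, we need to regularize it to properly define a quadratic distance metric numerically.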

Smoothed Distance Metric

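For contact:

Don't use the exact linearization; use the smoothed linearization. When the robot is not in contact, the exact linearization says the object cannot be moved at all, so every object configuration would look unreachable.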
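Here is a toy illustration. It assumes a 1D pusher whose box moves to max(q_u, u), which is a hypothetical frictionless model with zero-width bodies, and uses Gaussian randomized smoothing of the input. With the pusher behind the box, the exact B is zero and the regularized distance to a slightly pushed box blows up, while the smoothed B gives a finite distance.

```python
import math
import numpy as np

Q_U, SIGMA = 0.1, 0.1  # hypothetical box position and smoothing scale; pusher at q_a = 0.0

def box_next(u):
    """Toy quasistatic 1D pusher: the box moves only if the commanded pusher passes it."""
    return np.maximum(Q_U, u)

def exact_B(u):
    # d(box_next)/du: 1 if the pusher is past the box, else 0 (no contact -> zero gradient).
    return np.where(u > Q_U, 1.0, 0.0)

def smoothed_B(u, n=100_000, seed=0):
    # Randomized smoothing: average the exact derivative under Gaussian input noise.
    w = np.random.default_rng(seed).normal(0.0, SIGMA, n)
    return float(np.mean(exact_B(u + w)))

def distance(delta_q, b, reg=1e-6):
    # 1D version of 0.5 * r^T (B B^T + reg I)^{-1} r, with r = delta_q.
    return 0.5 * delta_q ** 2 / (b * b + reg)

u_bar = 0.0  # ubar = actuated part of qbar (pusher position)
b_exact = float(exact_B(u_bar))
b_smooth = smoothed_B(u_bar)
b_closed_form = 0.5 * (1.0 + math.erf((u_bar - Q_U) / (SIGMA * math.sqrt(2.0))))
print(b_exact, b_smooth, b_closed_form)
print(distance(0.1, b_exact), distance(0.1, b_smooth))
```

For this toy model the smoothed B has the closed form Φ((ubar − q_u)/σ), which the Monte Carlo estimate should match.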

Global Search with Smoothing

Dynamically consistent extension

Theoretically, it is possible to use long-horizon trajopt algorithms such as iLQR / DDP.

Here, we simply do a one-step trajopt by solving a least-squares problem.

Importantly, the actuation matrix in the least-squares problem comes from the smoothed dynamics, but we roll out the actual dynamics with the resulting action.
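Here is a minimal sketch of this extension step for a toy 1D pusher. The model is hypothetical: state (q_a, q_u), frictionless, zero-width bodies, and Gaussian smoothing with scale σ. The command comes from least squares on the smoothed linearization, but the new node is produced by the true, non-smooth dynamics.

```python
import math
import numpy as np

SIGMA = 0.1  # hypothetical smoothing scale

def phi_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def phi_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def true_dynamics(q, u):
    """Toy quasistatic pusher: the pusher moves to u and pushes the box only if it passes it."""
    q_a, q_u = q
    return np.array([u, max(q_u, u)])

def smoothed_linearization(q):
    """Gaussian-smoothed one-step model at u_bar = q_a: returns (mu_rho, B_rho)."""
    q_a, q_u = q
    z = (q_a - q_u) / SIGMA
    mu_box = q_u + (q_a - q_u) * phi_cdf(z) + SIGMA * phi_pdf(z)  # E[max(q_u, q_a + w)]
    return np.array([q_a, mu_box]), np.array([[1.0], [phi_cdf(z)]])

def extend(q, q_target):
    mu, B = smoothed_linearization(q)
    du, *_ = np.linalg.lstsq(B, q_target - mu, rcond=None)  # one-step least squares (smoothed B)
    return true_dynamics(q, q[0] + du[0])                    # roll out the actual dynamics

q_bar, target = np.array([0.0, 0.1]), np.array([0.3, 0.3])
print(extend(q_bar, target))
```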


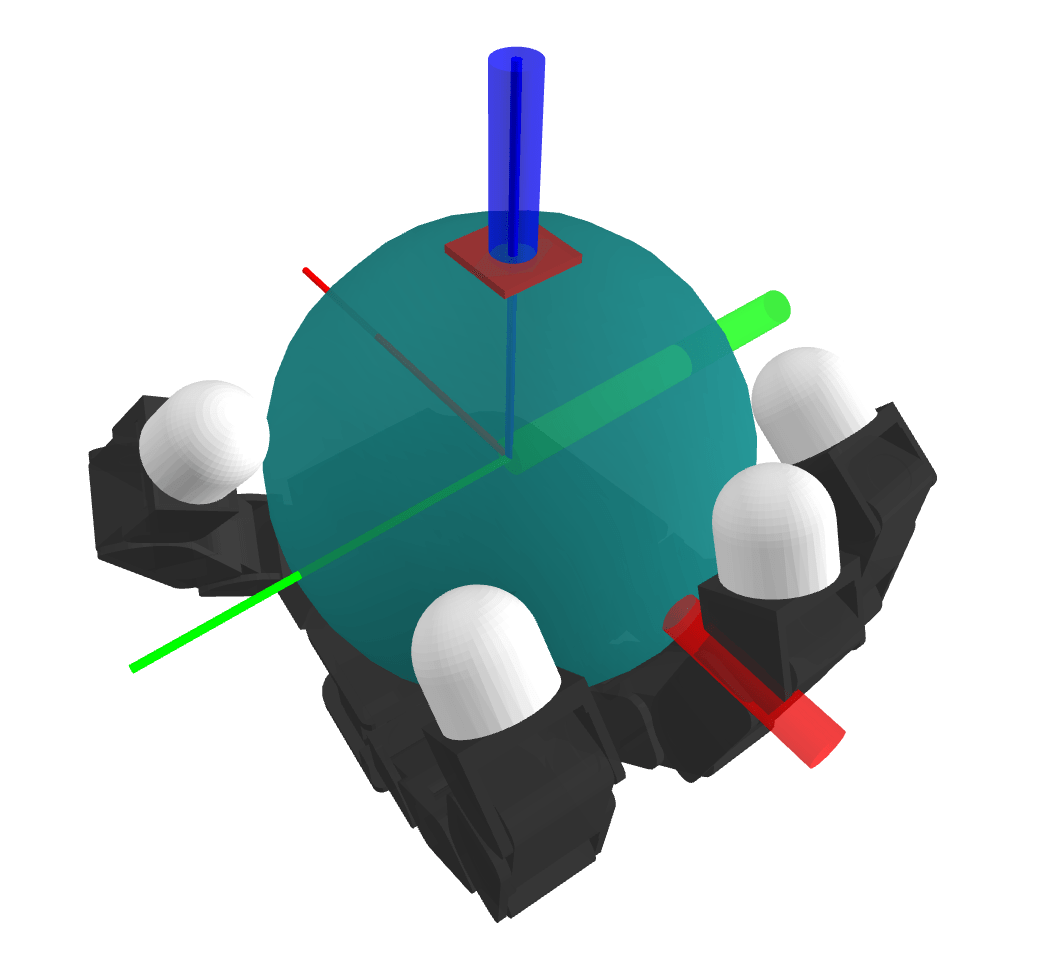

Contact Sampling

With some probability, we execute a regrasp (sample another valid contact configuration) in order to encourage further exploration.
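Putting the pieces together, a toy contact-rich RRT on a 1D pusher might look as follows. Everything here is a hypothetical sketch, not the talk's implementation. The illustrative choices include the smoothed model, the ridge regularizer REG in the least-squares step, the clipping STEP, and treating "regrasp" as "place the pusher up to 0.1 behind the box".

```python
import math
import numpy as np

SIGMA, REG, STEP = 0.05, 1e-6, 0.1  # hypothetical smoothing scale, regularizer, max command change

def smoothed_model(q):
    """Smoothed one-step box model at u_bar = q_a: (mean next box position, d box / d u)."""
    q_a, q_u = q
    z = (q_a - q_u) / SIGMA
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return q_u + (q_a - q_u) * cdf + SIGMA * pdf, cdf

def true_step(q, u):
    return (u, max(q[1], u))  # actual dynamics: pusher goes to u, box pushed only if passed

def contact_rich_rrt(q_start, box_goal, p_regrasp=0.2, iters=1000, tol=0.02, seed=0):
    rng = np.random.default_rng(seed)
    nodes, parents = [tuple(q_start)], [-1]
    for _ in range(iters):
        target = box_goal if rng.random() < 0.1 else rng.uniform(0.0, 1.0)

        def dist(q):  # reachability-based distance on the box coordinate, smoothed model
            mu, b = smoothed_model(q)
            return 0.5 * (target - mu) ** 2 / (b * b + REG)

        i_near = min(range(len(nodes)), key=lambda i: dist(nodes[i]))
        q_near = nodes[i_near]
        if rng.random() < p_regrasp:
            # Regrasp: sample another valid contact configuration (pusher behind the box).
            q_new = (q_near[1] - rng.uniform(0.0, 0.1), q_near[1])
        else:
            # Extend: ridge-regularized one-step least squares on the smoothed model,
            # then roll out the true dynamics with the resulting command.
            mu, b = smoothed_model(q_near)
            du = b * (target - mu) / (b * b + REG)
            q_new = true_step(q_near, q_near[0] + float(np.clip(du, -STEP, STEP)))
        nodes.append(q_new)
        parents.append(i_near)
        if abs(q_new[1] - box_goal) < tol:
            path, i = [], len(nodes) - 1
            while i != -1:
                path.append(nodes[i])
                i = parents[i]
            return path[::-1]
    return None

path = contact_rich_rrt((0.0, 0.1), box_goal=0.8)
print(path)
```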

Global Search with Smoothing

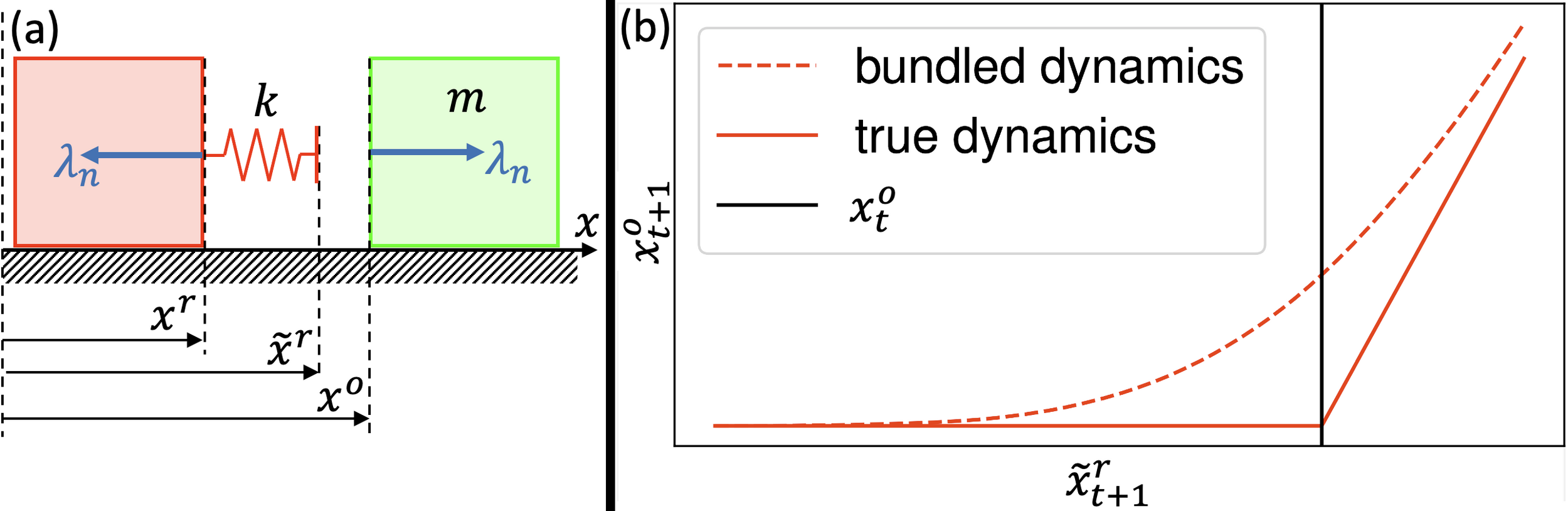

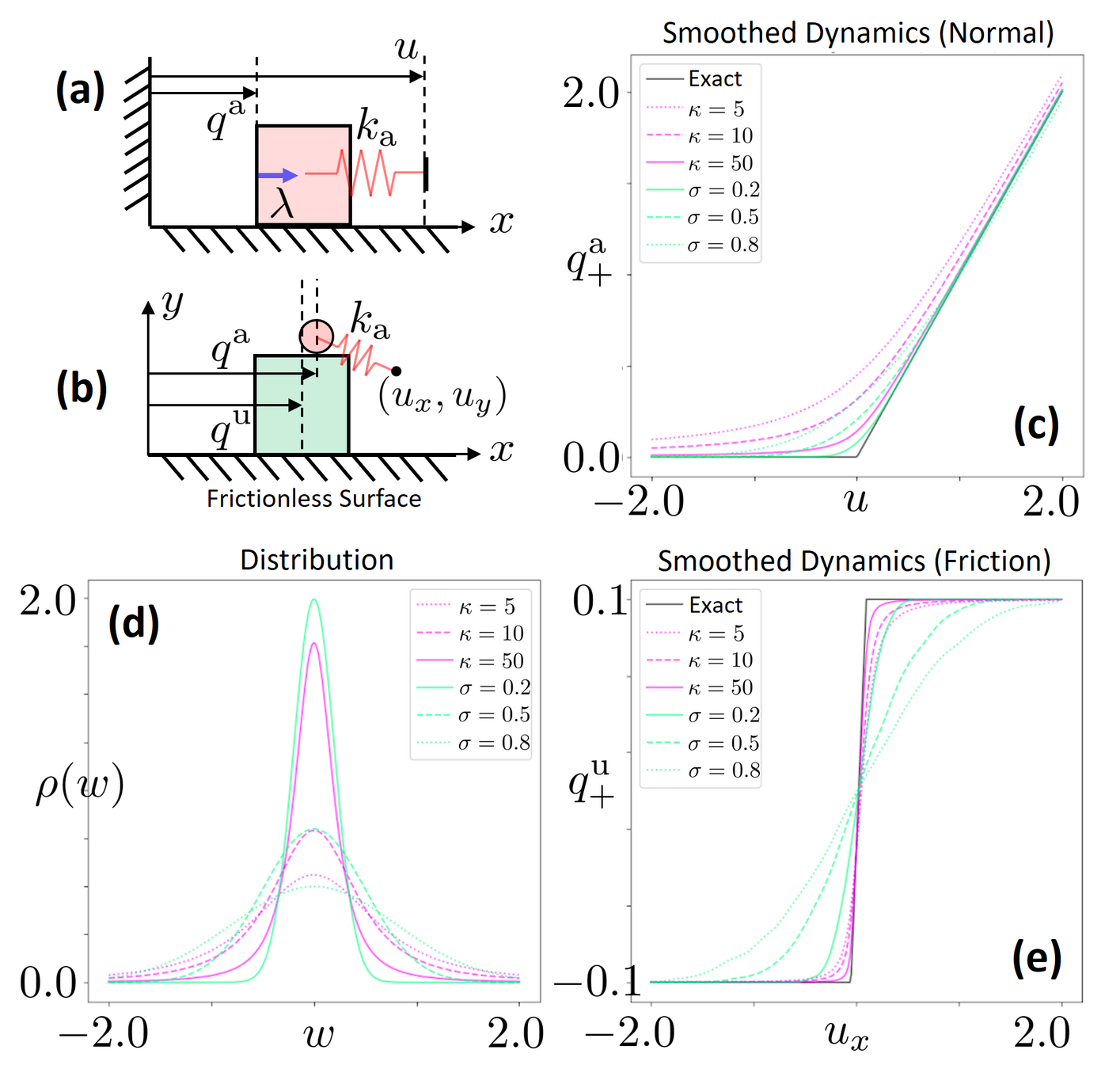

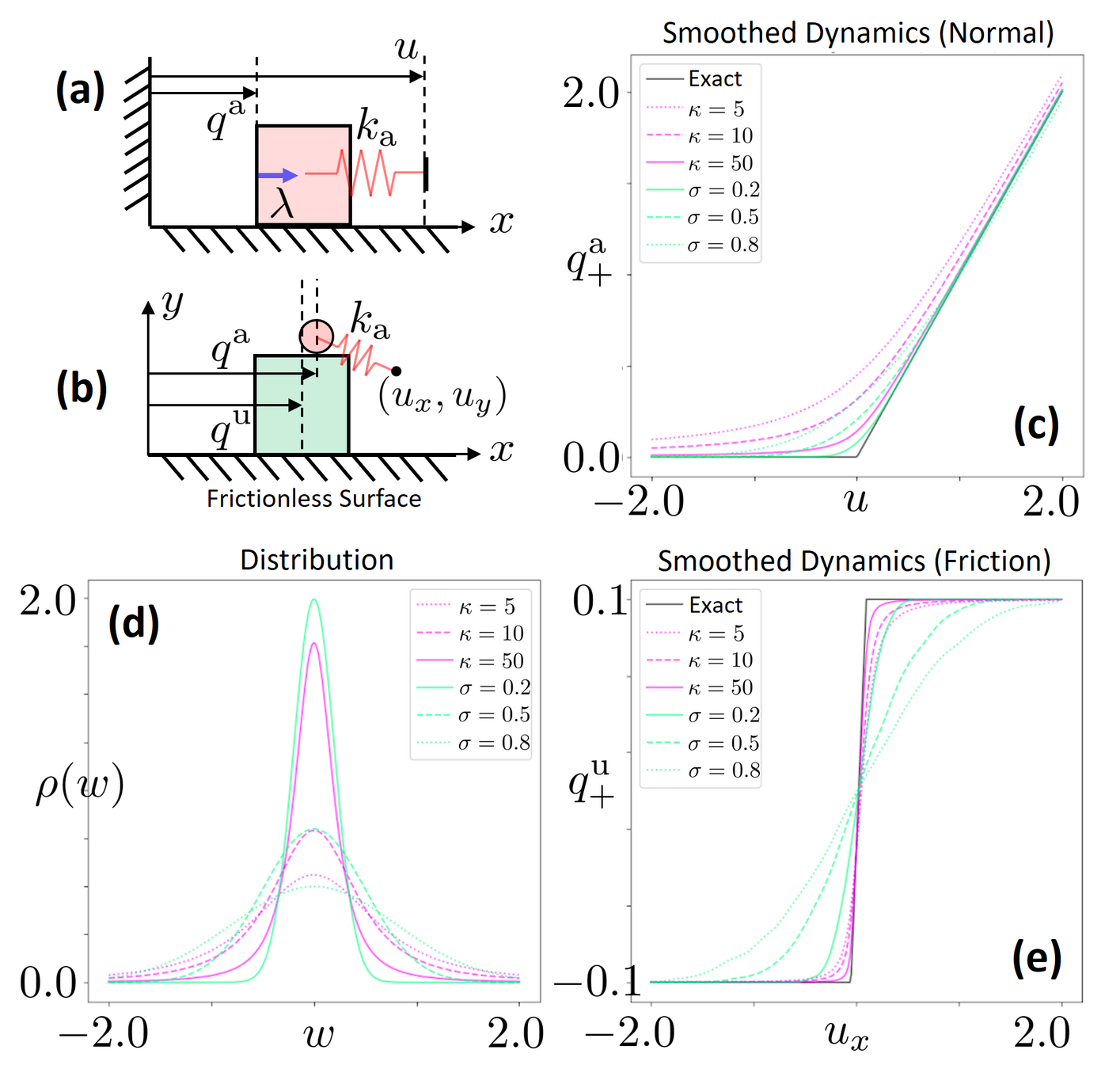

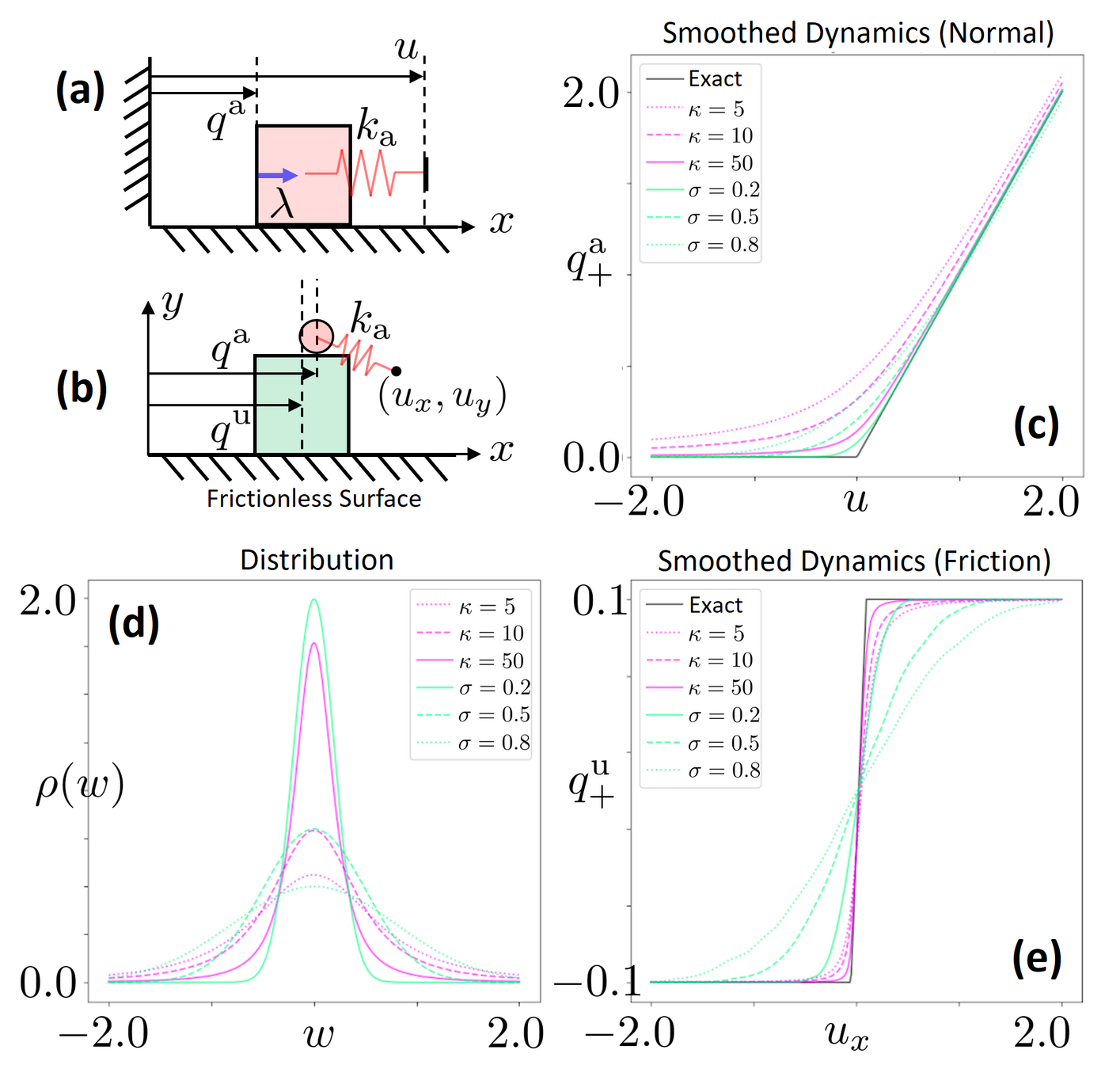

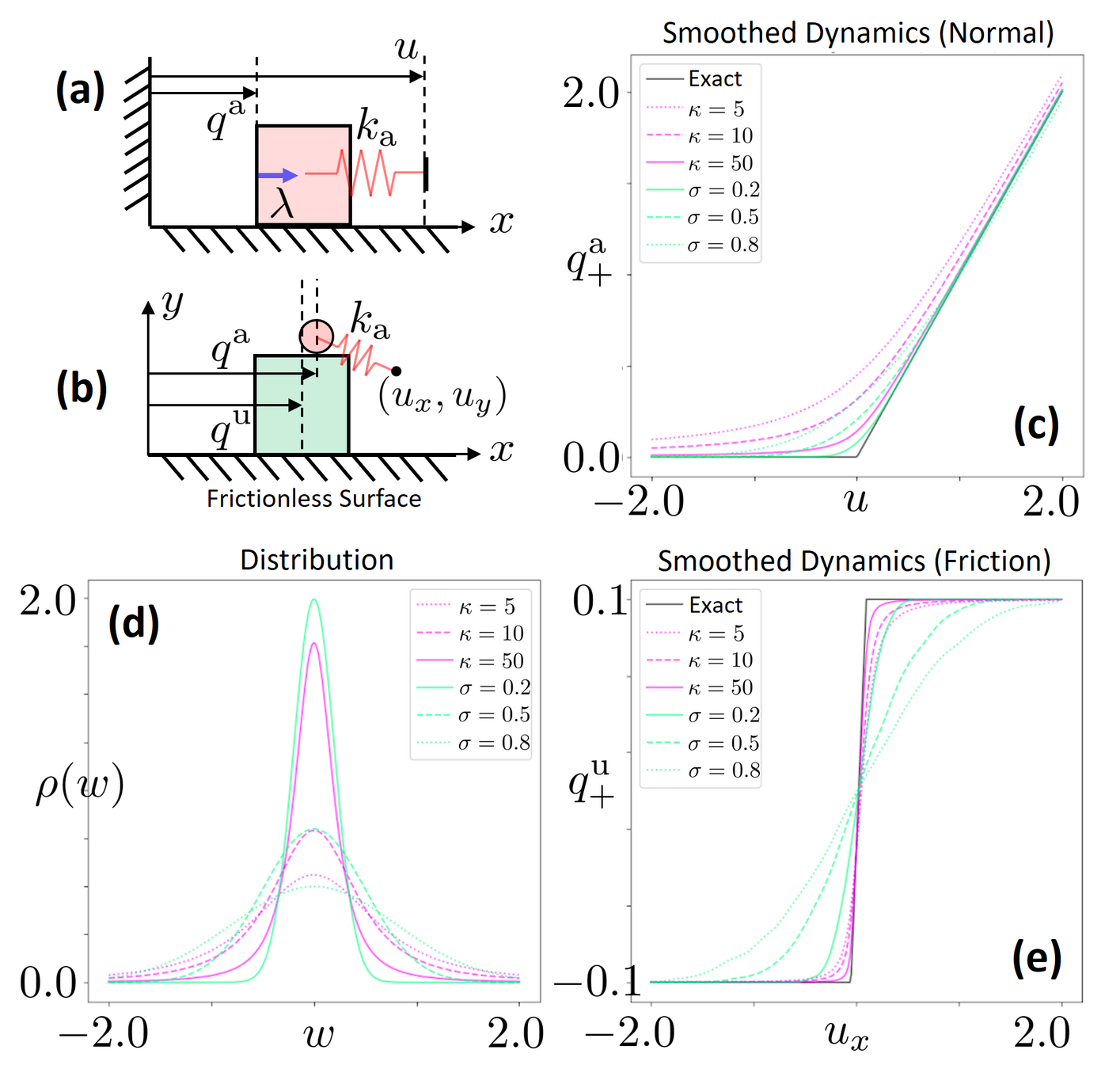

Smoothing of Contact Dynamics

Without going too deep into the details of multibody contact dynamics, we will use a time-stepping, quasidynamic formulation of contact.

- We assume that velocities die out instantly

- Inputs to the system are defined by position commands to actuated bodies.

- The actuated body and the commanded position are connected through an impedance with stiffness k.

Equations of Motion (KKT Conditions)

- Non-penetration (primal feasibility)

- Complementary slackness

- Dual feasibility

- Force balance (stationarity)
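The following is a generic form of these conditions, sketching the standard QP/KKT structure rather than the talk's exact formulation. It assumes frictionless contact and a quadratic objective with matrix Q and vector b; in the quasidynamic model, these would encode the impedance k and the position command. It also assumes signed distances φ, a contact Jacobian J, and contact impulses λ.

```latex
\min_{\delta q}\ \tfrac{1}{2}\,\delta q^{\top} Q\,\delta q + b^{\top}\delta q
\quad \text{s.t.} \quad \phi + J\,\delta q \ge 0

\phi + J\,\delta q \ge 0                                      % non-penetration (primal feasibility)
\lambda_i \left(\phi_i + J_i\,\delta q\right) = 0 \ \ \forall i  % complementary slackness
\lambda \ge 0                                                  % dual feasibility
Q\,\delta q + b = J^{\top}\lambda                              % force balance (stationarity)
```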

Quasistatic QP Dynamics

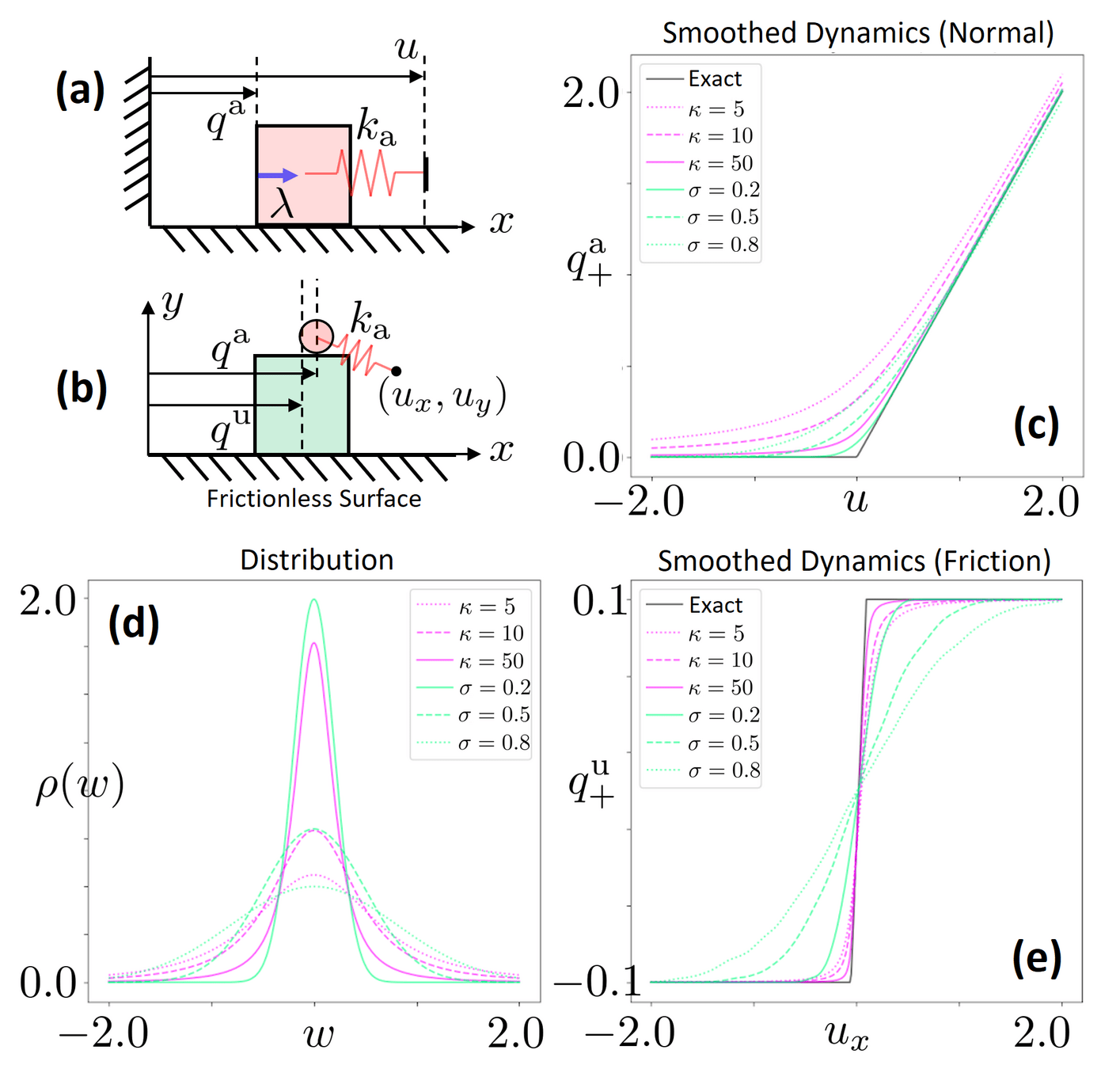

We can apply randomized smoothing to these dynamics using either first-order estimates (via sensitivity analysis) or zeroth-order estimates.
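Here is a small Monte Carlo check of the two options. It assumes a toy pusher response f(u) = max(q_u, u) and Gaussian noise, with hypothetical parameter values. The first-order estimator averages exact derivatives, and the zeroth-order estimator averages (f(u + w) − f(u)) w / σ². Both target Φ((u − q_u)/σ) here, and in this case the first-order estimator has the smaller per-sample variance.

```python
import math
import numpy as np

Q_U, SIGMA, N = 0.1, 0.1, 200_000  # hypothetical box position, noise scale, sample count

def f(u):
    return np.maximum(Q_U, u)  # next box position (toy quasistatic pusher)

def df(u):
    return (u > Q_U).astype(float)  # exact derivative: zero out of contact

rng = np.random.default_rng(0)
u = 0.05
w = rng.normal(0.0, SIGMA, N)

first_order = df(u + w)                            # per-sample first-order estimates
zeroth_order = (f(u + w) - f(u)) * w / SIGMA ** 2  # per-sample zeroth-order estimates
exact = 0.5 * (1.0 + math.erf((u - Q_U) / (SIGMA * math.sqrt(2.0))))  # d/du E[f(u + w)]

print(exact, first_order.mean(), zeroth_order.mean())
print(first_order.var(), zeroth_order.var())
```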

But can we smooth this analytically?

Barrier (Interior-Point) Smoothing

Quasistatic QP Dynamics: Equations of Motion (KKT Conditions)

Interior-Point Relaxation of the QP: Equations of Motion (Stationarity)

Annotations: impulse; relaxation of complementarity; "force from a distance"

What does smoothing do to contact dynamics?

- Both schemes (randomized smoothing and barrier smoothing) provide a "force from a distance" effect, where the exerted force grows as the distance shrinks.

- Provides gradient information from a distance.

- In contrast, without smoothing, zero gradients and flat landscapes cause problems for gradient-based optimization.
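Here is a toy instance of barrier smoothing for a frictionless 1D pusher-box QP: min over (x_a, x_u) of (k/2)(x_a − u)² + (m/2)(x_u − q_u)², subject to x_u − x_a ≥ 0. Here m is a hypothetical regularization on the box, and k, m, κ are illustrative values. Replacing the constraint with the barrier −(1/κ) log(x_u − x_a) turns complementarity λ·(x_u − x_a) = 0 into λ·(x_u − x_a) = 1/κ, which has a closed-form solution. The impulse is positive even before contact and grows as the gap shrinks, and the exact QP solution is recovered as κ grows.

```python
import math

def barrier_pusher(u, q_u, k=10.0, m=1.0, kappa=100.0):
    """Barrier-smoothed toy 1D pusher QP; returns (impulse lam, next box position x_u)."""
    c = 1.0 / k + 1.0 / m
    g = q_u - u  # gap between the box and the commanded pusher position
    # Stationarity: lam = k (u - x_a) = m (x_u - q_u); relaxed complementarity: lam (x_u - x_a) = 1/kappa.
    # Substituting x_u - x_a = g + c lam gives c lam^2 + g lam - 1/kappa = 0 (take the positive root).
    lam = (-g + math.sqrt(g * g + 4.0 * c / kappa)) / (2.0 * c)
    return lam, q_u + lam / m

def exact_pusher(u, q_u, k=10.0, m=1.0):
    # kappa -> infinity: an impulse only when the command passes the box.
    lam = max(0.0, u - q_u) / (1.0 / k + 1.0 / m)
    return lam, q_u + lam / m

for u in (-0.1, 0.0, 0.05, 0.1, 0.2):
    print(u, barrier_pusher(u, 0.1), exact_pusher(u, 0.1))
```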

Is barrier smoothing a form of convolution?

Equivalence of Randomized and Barrier Smoothing.

- For the simple 1D pusher system, one can show that barrier smoothing also implements a convolution of the original function with a kernel.

- This kernel is an elliptical distribution with a "fatter tail" than a Gaussian.

A later result shows that such a kernel always exists for Linear Complementarity Systems (LCS).
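Here is a numerical check on a scalar stand-in, using the barrier relaxation of max(x, 0): y(x) = argmin_y ½(y − x)² − (1/κ) log y. The value of κ is hypothetical. The closed form is y = (x + √(x² + 4/κ))/2. Its second derivative, p(w) = (2/κ)/(w² + 4/κ)^{3/2}, is a symmetric density with |w|⁻³ tails, and convolving max(x, 0) with p reproduces y.

```python
import numpy as np

KAPPA = 100.0  # hypothetical barrier weight

def barrier_relu(x, kappa=KAPPA):
    # argmin_y 0.5 (y - x)^2 - (1/kappa) log(y)  =>  y^2 - x y - 1/kappa = 0 (positive root)
    return 0.5 * (x + np.sqrt(x * x + 4.0 / kappa))

def kernel(w, kappa=KAPPA):
    # Second derivative of barrier_relu: a symmetric density with |w|^-3 (fatter-than-Gaussian) tails.
    return (2.0 / kappa) / (w * w + 4.0 / kappa) ** 1.5

w = np.linspace(-200.0, 200.0, 400_001)
dw = w[1] - w[0]
p_w = kernel(w)

def convolved_relu(x):
    # (kernel * relu)(x) = integral of kernel(w) * max(x - w, 0) dw, truncated to |w| <= 200.
    return float(np.sum(p_w * np.maximum(x - w, 0.0)) * dw)

for x in (-0.3, 0.0, 0.3):
    print(x, barrier_relu(x), convolved_relu(x))
```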

Limitations of Smoothing

Contact is non-smooth. But is it truly "discrete"?

The core thesis of this talk:

The local decisions of where to make contact are better modeled as continuous decisions with some smooth approximations.

My viewpoint so far:

Limitations of Smoothing

These reveal true discrete "modes" of the decision-making process.

Limitations of Smoothing

Apply negative impulse to stand up.

Apply positive impulse to bounce on the wall.

Bias of Smoothing

The subscript ρ denotes smoothing.

We have linearized the smoothed dynamics around u = qa.

Depending on where we set the goal, we see three distinct regions.


Region 1. Beneficial Bias

Goal = 0.61m

Optimal input

The linearized model provides a helpful bias, as the optimal input moves the actuated body toward making contact.


Region 2. Hurtful Bias

Goal = 0.52m

Optimal input

If you command the actuated body to hold position, the unactuated body will be pushed away due to smoothing.

If the goal is not too far in front of the unactuated body, the actuated body wants to move backwards to reduce this effect.


Region 3. Violation of unilateral contact

Goal = 0.45m

Optimal input

If you set the goal behind the unactuated body, the linear model thinks that it can pull, so the optimal input moves the actuated body backwards.
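The three regions can be reproduced qualitatively with a toy model. This sketch uses the closed-form barrier relaxation of a frictionless 1D pusher-box QP, with hypothetical parameters (k, m, κ, and the two positions). The goal values are chosen for this toy setup and are not the slides' 0.61 m, 0.52 m, and 0.45 m.

```python
import math

K, M, KAPPA = 10.0, 1.0, 100.0  # hypothetical impedance, box regularization, barrier weight
Q_A, Q_U = 0.0, 0.1             # hypothetical pusher (actuated) and box (unactuated) positions

def smoothed_box(u):
    """Barrier-smoothed next box position: solves c lam^2 + g lam - 1/kappa = 0, x_u = q_u + lam/m."""
    c = 1.0 / K + 1.0 / M
    g = Q_U - u
    lam = (-g + math.sqrt(g * g + 4.0 * c / KAPPA)) / (2.0 * c)
    return Q_U + lam / M

# Linearize the smoothed dynamics around u = q_a (command the pusher to hold its position).
u_bar = Q_A
x_bar = smoothed_box(u_bar)  # > Q_U: smoothing pushes the box even while holding
h = 1e-6
B = (smoothed_box(u_bar + h) - smoothed_box(u_bar - h)) / (2.0 * h)

def optimal_input(goal):
    # Minimizer of (x_bar + B (u - u_bar) - goal)^2 for the linearized model.
    return u_bar + (goal - x_bar) / B

for name, goal in [("beneficial", 0.25), ("hurtful", 0.13), ("violates unilateral contact", 0.05)]:
    print(f"{name:28s} goal={goal:.2f}  u* - u_bar = {optimal_input(goal) - u_bar:+.3f}")
```

A goal beyond the smoothed prediction moves the pusher forward; a goal between the box and the smoothed prediction moves it backwards; a goal behind the box also moves it backwards, even though the true dynamics can never pull the box.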

Motivating Gradient Interpolation

Bias

Variance

Common lesson from stochastic optimization:

1. Both estimators are unbiased under sufficient regularity conditions

2. The first-order estimator generally has less variance than the zeroth-order one.


1st Pathology: First-Order Estimators CAN be biased.

2nd Pathology: First-Order Estimators can have MORE variance than zeroth-order.
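Here is a minimal example of the first pathology, using a hypothetical step-shaped objective f(x) = 1[x > 0] (e.g. "did the ball clear the wall") smoothed with Gaussian noise. The exact derivative is zero almost everywhere, so the first-order estimator returns zero. The smoothed objective, however, has gradient φ(x/σ)/σ, which the zeroth-order estimator recovers.

```python
import math
import numpy as np

SIGMA, N = 0.1, 200_000  # hypothetical noise scale and sample count

def f(x):
    return np.where(x > 0.0, 1.0, 0.0)  # discontinuous objective

def df(x):
    return np.zeros_like(x)  # derivative exists almost everywhere and is zero

rng = np.random.default_rng(0)
x = 0.0
w = rng.normal(0.0, SIGMA, N)

first_order = df(x + w).mean()                               # biased: always 0
zeroth_order = ((f(x + w) - f(x)) * w / SIGMA ** 2).mean()   # unbiased for the smoothed gradient
exact = math.exp(-0.5 * (x / SIGMA) ** 2) / (SIGMA * math.sqrt(2.0 * math.pi))
print(first_order, zeroth_order, exact)
```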

Can we automatically decide which of these categories we fall into based on statistical data?

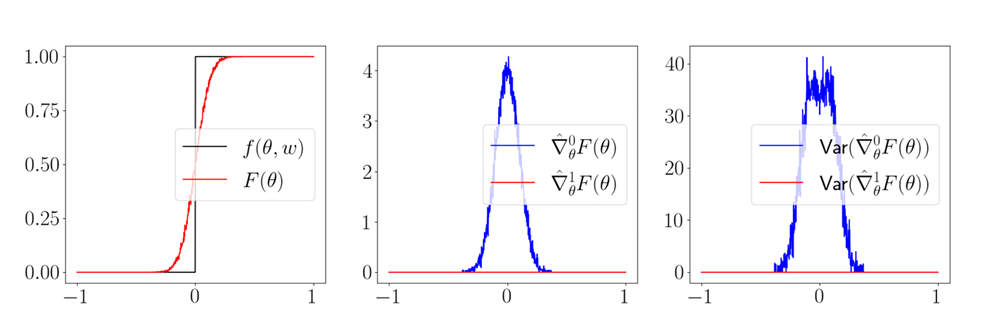

The Alpha-Ordered Gradient Estimator

Perhaps we can interpolate between the two gradients based on some criterion.

Previous works choose the interpolation weight to minimize the variance of the interpolated estimator, using empirical variances.

Robust Interpolation

However, minimizing variance alone ignores the possible bias of the first-order estimator. Thus, we propose a robust interpolation criterion that also bounds the bias of the interpolated estimator.


Implementation

Confidence Interval on the zeroth-order gradient.

Difference between the gradients.

Key idea: Unit-test the first-order estimate against the unbiased zeroth-order estimate to guarantee correctness probabilistically.
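Here is a hedged sketch of the interpolation rule, following our reading of the criterion. It starts from the variance-minimizing weight α = σ₀²/(σ₀² + σ₁²). It then shrinks α whenever the interpolated estimate would leave a tolerance γ around the zeroth-order mean, after accounting for the zeroth-order confidence radius ε. Several pieces are assumptions of this sketch: the confidence radius (a normal approximation with z = 1.96), the trace-of-variance summary, and the fallback α = 0 when ε > γ.

```python
import numpy as np

def alpha_order_gradient(g1_samples, g0_samples, gamma, z=1.96):
    """Hypothetical robust interpolation of first- and zeroth-order gradient estimates.

    g1_samples, g0_samples: (n, d) arrays of per-sample first- and zeroth-order gradients.
    gamma: tolerance on how far the result may stray from the zeroth-order mean.
    """
    g1, g0 = g1_samples.mean(axis=0), g0_samples.mean(axis=0)
    # Empirical variance of each sample mean, summed over coordinates.
    var1 = g1_samples.var(axis=0, ddof=1).sum() / len(g1_samples)
    var0 = g0_samples.var(axis=0, ddof=1).sum() / len(g0_samples)
    eps = z * np.sqrt(var0)  # confidence radius of the unbiased zeroth-order mean
    diff = np.linalg.norm(g1 - g0)
    # Variance-minimizing weight for two independent estimators.
    alpha = var0 / (var0 + var1) if var0 + var1 > 0 else 1.0
    if eps > gamma:
        alpha = 0.0  # zeroth-order mean too uncertain to validate the first-order one
    elif alpha * diff + eps > gamma:
        alpha = (gamma - eps) / diff  # shrink alpha until the bias test passes
    return alpha * g1 + (1.0 - alpha) * g0, alpha
```

When the first-order samples agree with the zeroth-order mean, α stays near its variance-optimal value; when they are biased, α shrinks so the result stays within γ of the zeroth-order mean. Applying the same rule separately to each coordinate gives a coordinate-wise variant.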

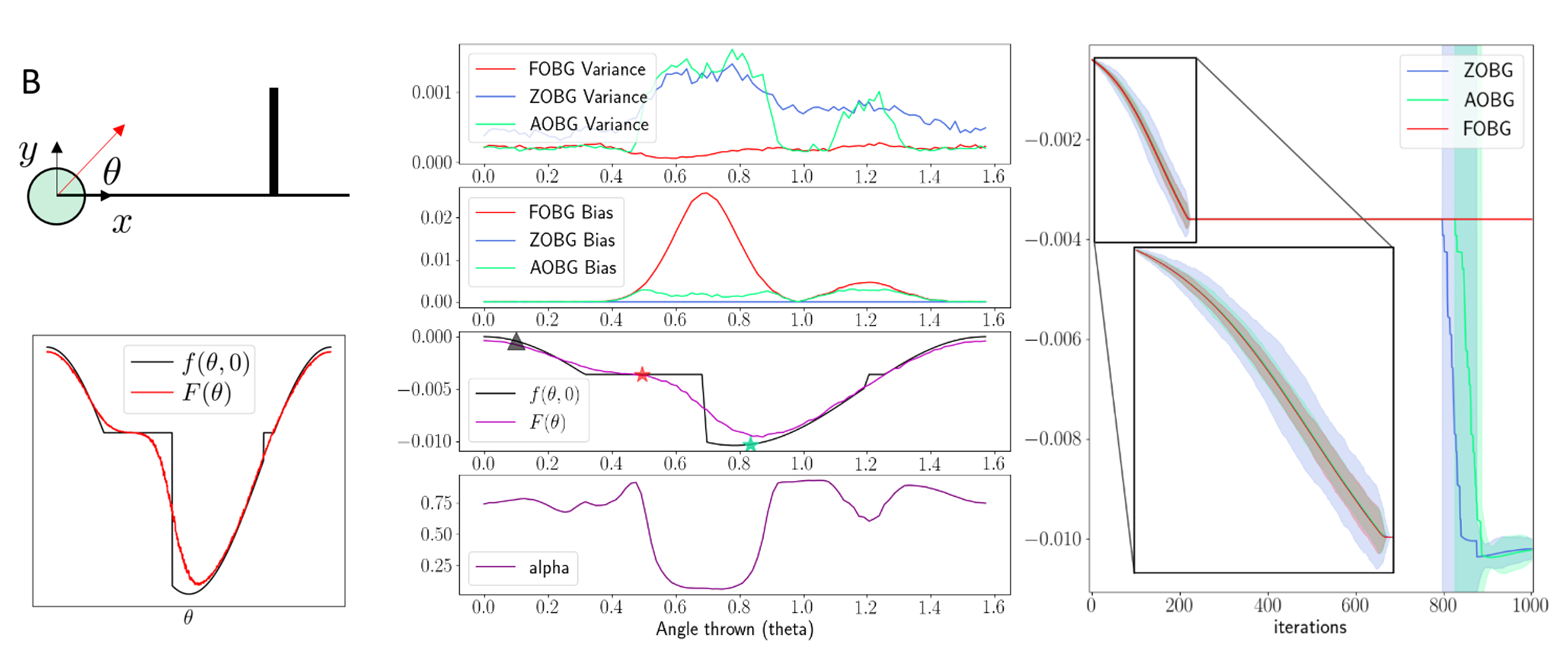

Results: Ball throwing on Wall

Key idea: Do not commit uniformly to either the zeroth- or the first-order estimate; instead, decide coordinate-wise which one to trust more.

Results: Policy Optimization

Able to capitalize on the better convergence of first-order methods while remaining robust to their pitfalls.