Performance Testing with Playwright

John Hill

About Me

Web UI Test Engineer,

Space Mission Control Software, KBR

NASA Ames Research Center

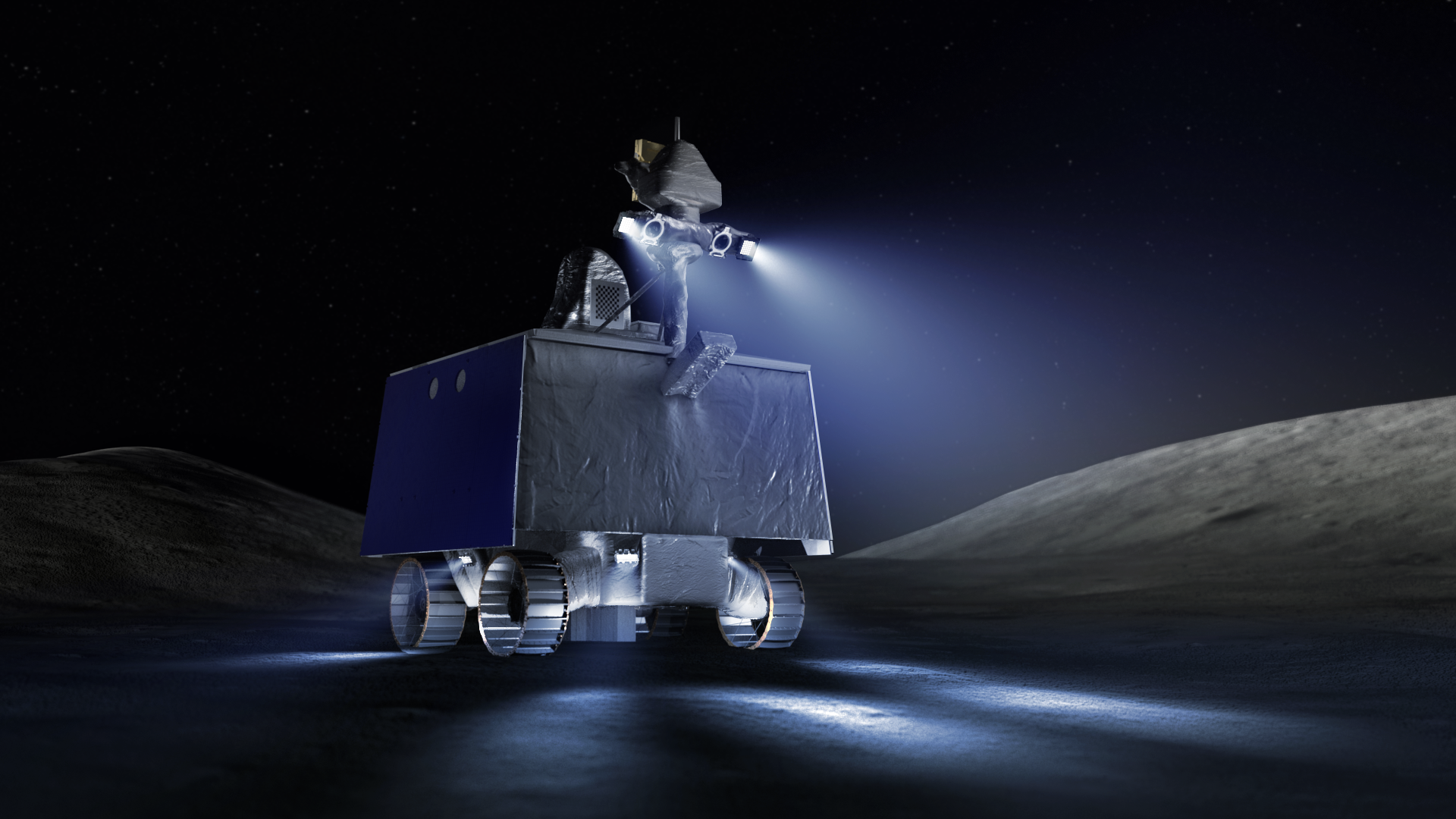

VIPER

In this talk

- Client-side Performance Testing

- What to Measure

- Playwright

- Playwright Capabilities

- Playwright Problems (and Solutions)

- State of the Union and What's Next!

In this talk

- How to fix performance problems.

- Part 1!

What is Client-side Performance?

- "User Experience... using a web application... in a web browser"

- Easiest to contextualize when things go wrong

Happy Accidents

What to measure?

- After the first load

- Network
  - Still matters after the first load!
  - Latency
  - Number of requests

- CPU / GPU
  - "Jank" or "Freezing"
  - Frames Per Second

- Memory
  - Leaks
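To make "Jank" and "Frames Per Second" concrete, here is a minimal sketch of the arithmetic, assuming you have already collected a list of frame timestamps in milliseconds (for example, the values passed to requestAnimationFrame callbacks inside the page). The function name and the 50 ms jank threshold are illustrative assumptions, not a standard.

```javascript
// Summarize frame timing from a list of frame timestamps in ms
// (e.g. values recorded from requestAnimationFrame callbacks).
// jankThresholdMs is an illustrative default, not a standard value.
function frameStats(timestamps, jankThresholdMs = 50) {
  if (timestamps.length < 2) {
    throw new Error('need at least two frame timestamps');
  }
  // Gap between each pair of consecutive frames
  const gaps = [];
  for (let i = 1; i < timestamps.length; i++) {
    gaps.push(timestamps[i] - timestamps[i - 1]);
  }
  const elapsedMs = timestamps[timestamps.length - 1] - timestamps[0];
  return {
    // Frames rendered per second over the whole window
    fps: (gaps.length * 1000) / elapsedMs,
    worstFrameMs: Math.max(...gaps),
    // Frames that took longer than the jank threshold to arrive
    jankyFrames: gaps.filter((gap) => gap > jankThresholdMs).length,
  };
}

// Usage with made-up timestamps: five evenly spaced 20 ms frames
console.log(frameStats([0, 20, 40, 60, 80, 100]));
// { fps: 50, worstFrameMs: 20, jankyFrames: 0 }
```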

Where do measurements come from?

- Chrome, itself

- Browser/Web Standard APIs

- DevTools and DevTools APIs

Browser/Web APIs

- Documented on MDN

- ResourceTiming APIs

- How long does an individual resource request take, and where does it spend its time?

- LongTasks APIs

- Provides a great proxy for CPU/GPU/Animation

- Reports when the main thread is blocked for 50ms or more

- UserTiming API

- performance.mark()

- Twitter time-to-first-tweet example
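Where a ResourceTiming entry "spends its time" can be read straight off its timestamps. A minimal sketch, assuming the entry has already been copied out of the page as a plain object; the function name and phase labels are hypothetical, while the field names are the standard PerformanceResourceTiming ones.

```javascript
// Split one PerformanceResourceTiming entry (copied out as a plain object)
// into phases, all in ms. Cross-origin resources served without a
// Timing-Allow-Origin header report 0 for most of these fields.
function resourcePhases(entry) {
  return {
    name: entry.name,
    dns: entry.domainLookupEnd - entry.domainLookupStart,
    // TCP connect, including the TLS handshake when there is one
    connect: entry.connectEnd - entry.connectStart,
    tls: entry.secureConnectionStart > 0
      ? entry.connectEnd - entry.secureConnectionStart
      : 0,
    // Waiting for the first byte after the request was sent
    waiting: entry.responseStart - entry.requestStart,
    download: entry.responseEnd - entry.responseStart,
    total: entry.duration,
  };
}
```

In a Playwright test, the entries could be pulled out of the page with something like `page.evaluate(() => performance.getEntriesByType('resource').map((e) => e.toJSON()))` and then passed through this helper one by one.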

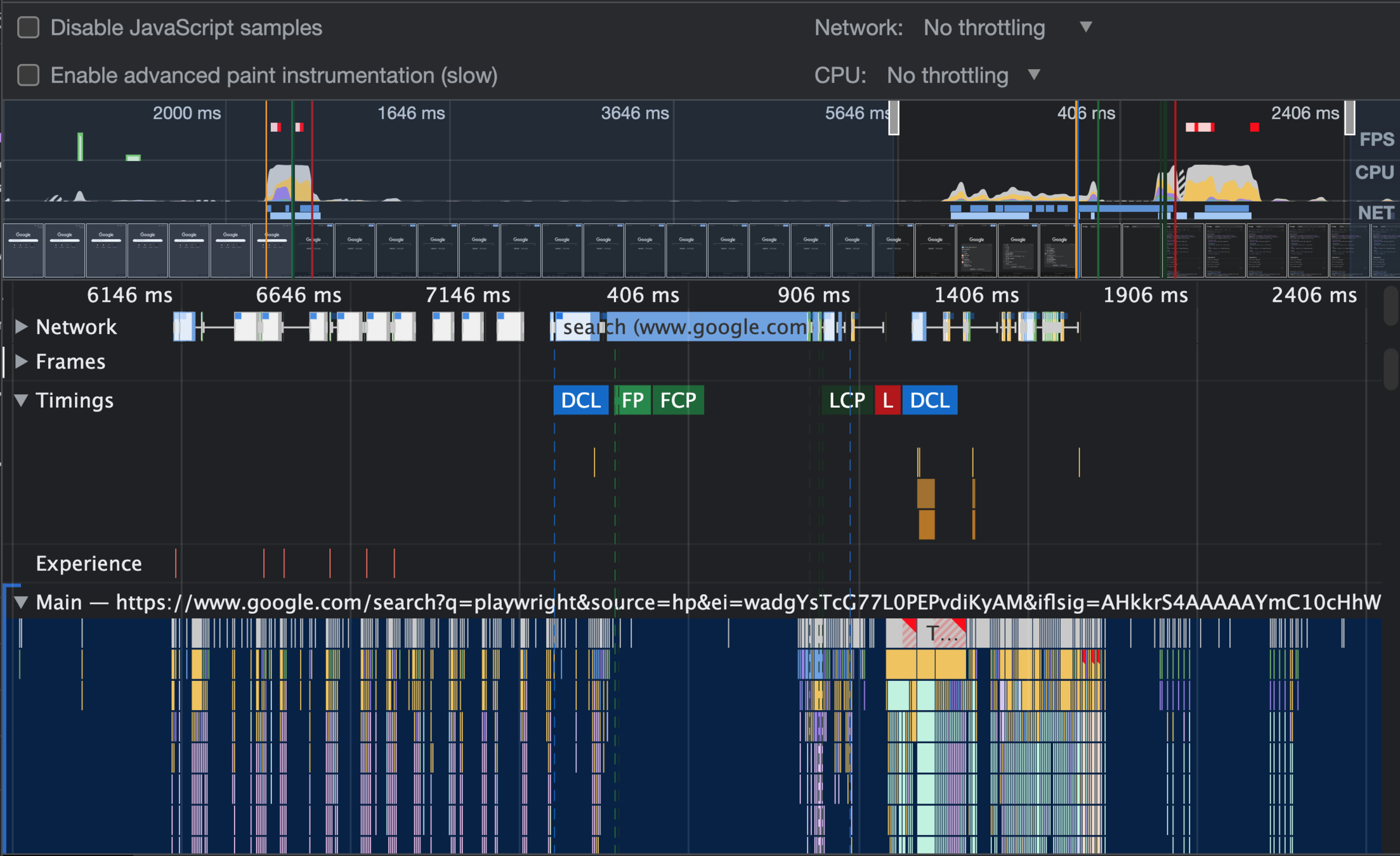

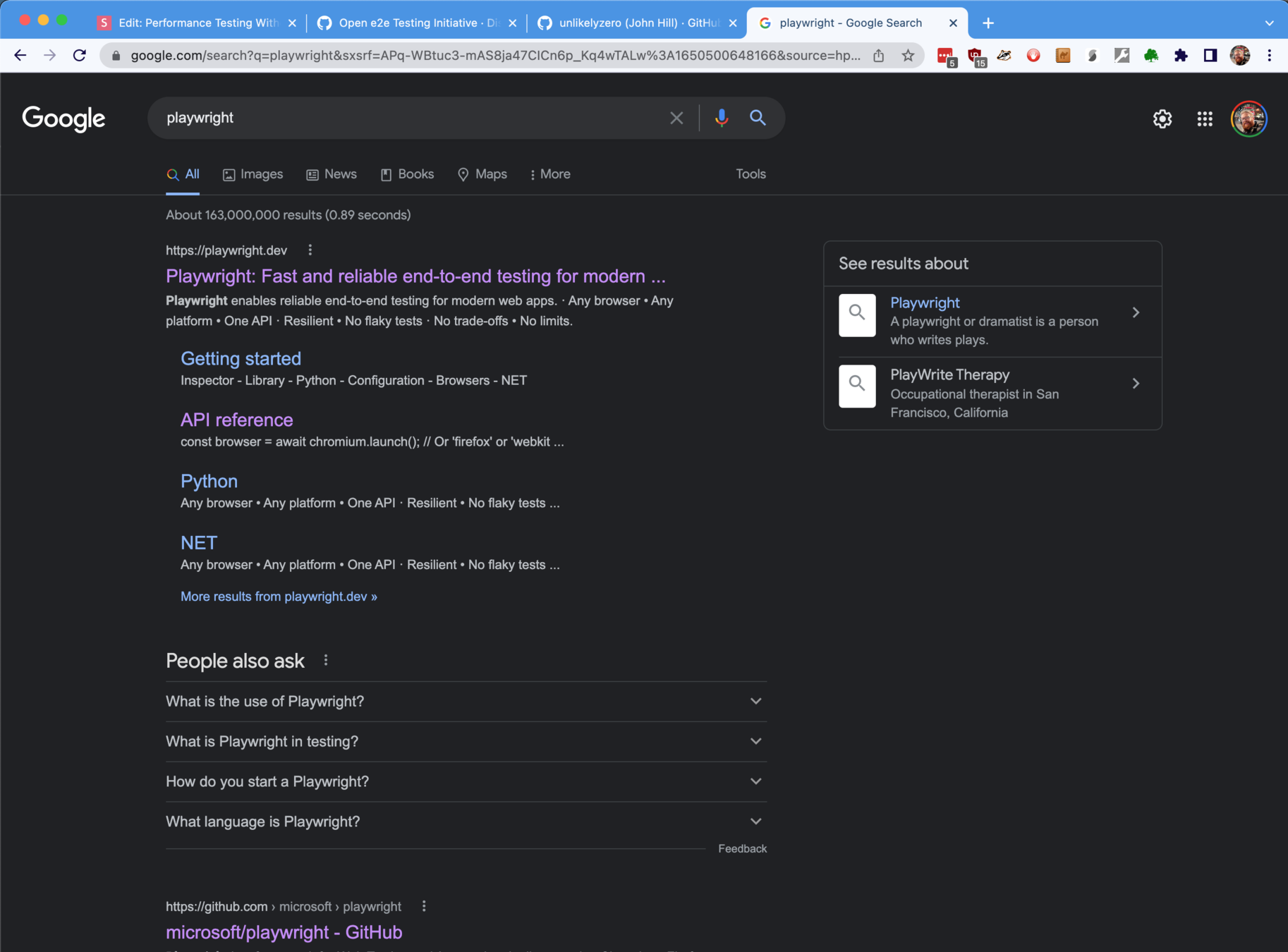

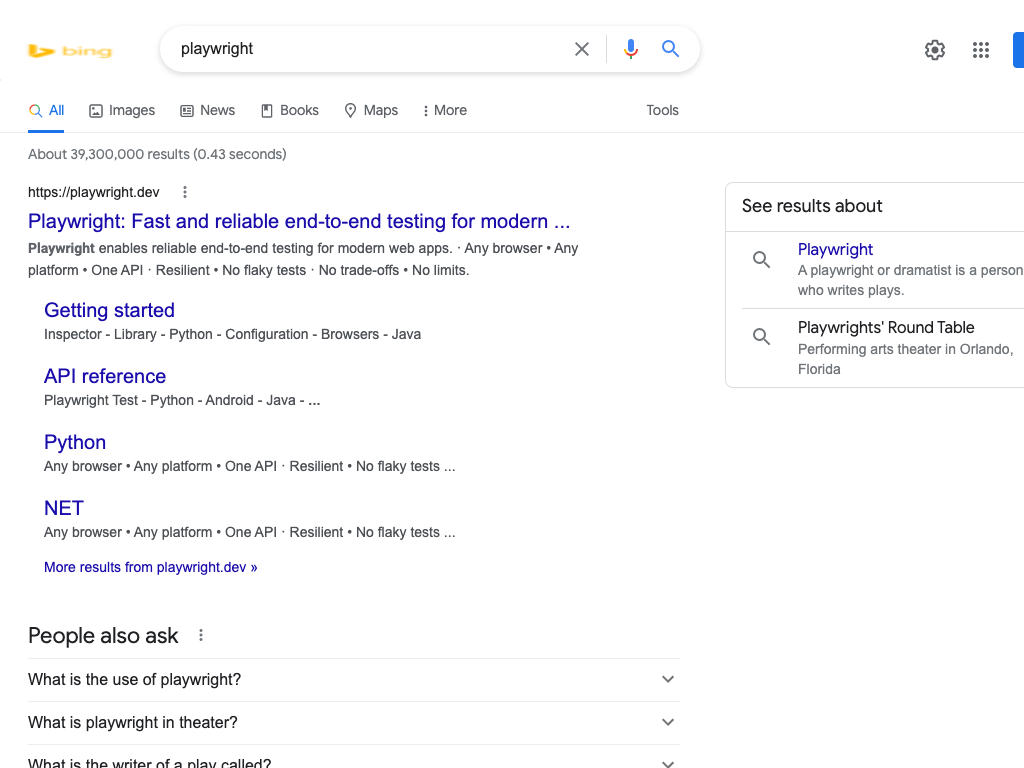

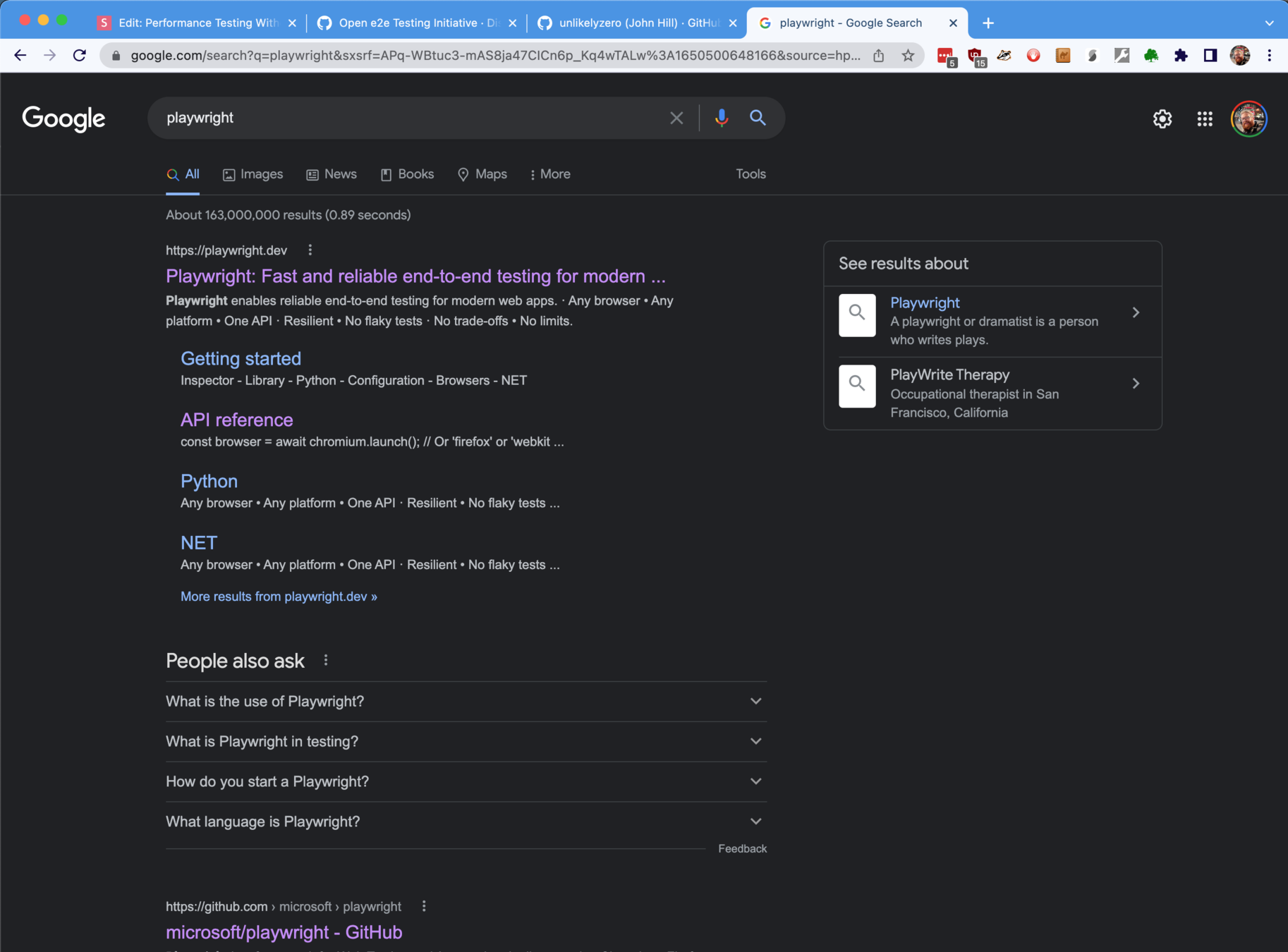

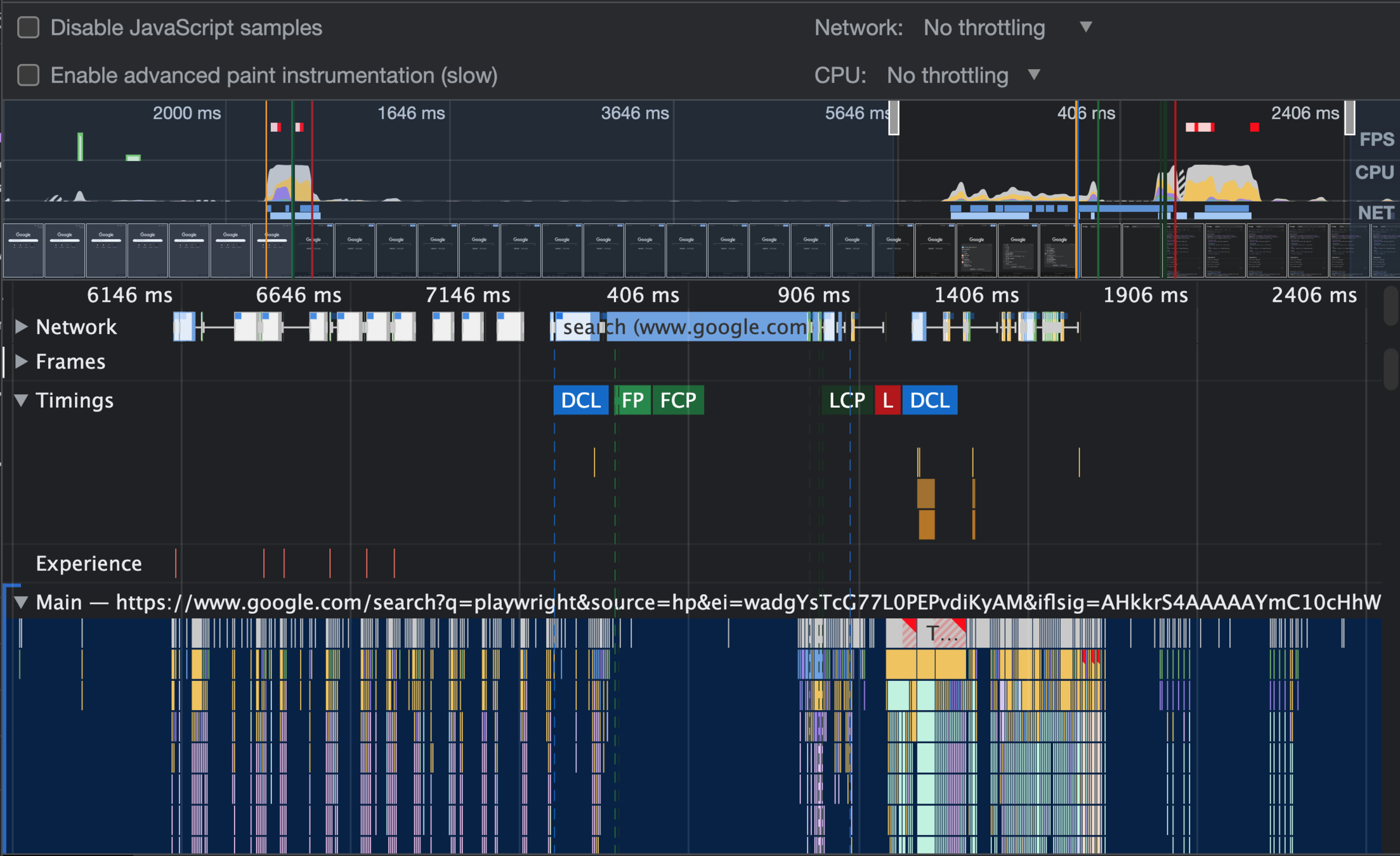

Google Search Devtools Demo

DevTools - Performance Tab

How do we get to these Chrome and Web measurements... programmatically and automatically?

- Based on the Puppeteer project, or "how Chrome tests Chrome"

- Supports Edge, Chrome, Firefox, Safari, iOS(ish)

- Java, C#, Python, Python asyncio, JS, TypeScript

- Tighter coupling to browser internals than Selenium

- aka "Inside-out Performance Measurements"

Getting started with Playwright Demo

npm install -D @playwright/test

npx playwright install

npx playwright codegen

Playwright Capabilities Demo

- Already showed how easy it is to get started

- Let's demonstrate the power

- Capabilities Demo 1: CDP and Performance API

- Capabilities Demo 2: Generated performance.mark() and DevTools Performance Timeline

- Capabilities Demo 3: API Interception and FPS Measurement
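For Demo 1, Chromium exposes a CDP Performance domain whose Performance.getMetrics command returns an array of { name, value } pairs (for example JSHeapUsedSize, Nodes, LayoutCount). A minimal sketch that turns two such snapshots into a per-metric delta; the helper names are hypothetical and the sample numbers in the check are made up.

```javascript
// Turn the `metrics` array returned by CDP Performance.getMetrics
// ([{ name, value }, ...]) into a { name: value } object.
function metricsToObject(metrics) {
  return Object.fromEntries(metrics.map(({ name, value }) => [name, value]));
}

// Per-metric change between two snapshots (only metrics present in both).
function diffMetrics(before, after) {
  const a = metricsToObject(before);
  const b = metricsToObject(after);
  const delta = {};
  for (const name of Object.keys(b)) {
    if (name in a) {
      delta[name] = b[name] - a[name];
    }
  }
  return delta;
}
```

In a Chromium-only Playwright test, each snapshot could come from `const client = await page.context().newCDPSession(page); await client.send('Performance.enable'); const { metrics } = await client.send('Performance.getMetrics');`, taken before and after the interaction you care about.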

WIP Capabilities

- These "work" but are not proven

- PerformanceObserver, LongTasks

- HAR file generated during test.

- Send HAR to k6 or JMeter to simulate many users
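Once LongTasks entries are collected (in Chromium, for example, with `new PerformanceObserver((list) => ...).observe({ entryTypes: ['longtask'] })` inside the page), a summary like the one below is one way to boil them down to a few numbers per run. This is a sketch under assumptions: the entries are plain { startTime, duration } objects in ms, the helper name is hypothetical, and "blocking time" borrows the idea behind Lighthouse's Total Blocking Time (only the part of a task beyond 50 ms counts) without its page-load window.

```javascript
// Long Tasks API threshold: main-thread tasks of 50 ms or more.
const LONG_TASK_MS = 50;

// Summarize long-task entries ({ startTime, duration } in ms) for one run.
function summarizeLongTasks(entries) {
  const durations = entries.map((entry) => entry.duration);
  const totalMs = durations.reduce((sum, d) => sum + d, 0);
  // Only the portion of each task beyond 50 ms counts as "blocking",
  // the same idea Lighthouse uses for Total Blocking Time.
  const blockingMs = durations.reduce(
    (sum, d) => sum + Math.max(0, d - LONG_TASK_MS),
    0
  );
  return {
    count: durations.length,
    longestMs: durations.length > 0 ? Math.max(...durations) : 0,
    totalMs,
    blockingMs,
  };
}
```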

Challenges (and solutions) in Playwright Performance Testing

"Test Drift"

"Synthetic tests aren’t very resilient, and they can easily fail when small UI changes are introduced, generating unnecessary alert noise. This means that whenever a minor application element such as a button is changed, the corresponding test must be, too."

dynatrace.com/news/blog/what-is-synthetic-monitoring/

https://github.com/nasa/openmct/pull/5203/files

Network Fix - Remove it

[Diagram: the Synthetic User Monitor drives the app over CDP on your internal network or localhost, instead of across the "Internet".]

Network Fix - Control it

- Inside the browser with CDP access

- Inject CDP Network Profiles

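// Playwright (Chromium only): open a raw CDP session for this page
const client = await page.context().newCDPSession(page);
await client.send('Network.enable');
// Add 500 ms of latency; -1 turns off download/upload throttling
await client.send('Network.emulateNetworkConditions', {
  offline: false,
  latency: 500,
  downloadThroughput: -1,
  uploadThroughput: -1,
});

Network Control Pt 2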

- Once you're in the browser, you control it all

- Mock Network Responses with API Interception

- Modify Request OR Response on the wire

// Answer every PNG request with a local file instead of going to the network
await page.route('**/*.png', route => route.fulfill({
  path: './bing.png'
}));

Measurement "Transferability"

- Named Performance Machines?

- Your machine is not the same as mine.

- "Chrome as a service" and way more than today's demo

- "Interface for Performance Testing"

- Chrome + essentials in a container. Hard.

- Exposes generic CDP endpoint

- Playwright remotely connects via .connectOverCDP() API

- Transferability!

browserless

browserless:
  image: browserless/chrome:latest
  cpus: 2.0
  mem_limit: 4g

github.com/browserless/chrome

Remote CDP

[Diagram: Playwright uses connectOverCDP() across your internal network to reach a remote Chrome, then applies emulateNetworkConditions profiles: 0 latency, 500ms, "3g".]
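The emulateNetworkConditions profiles on this slide can be kept as data. A minimal sketch, assuming throughput is specified in kilobits per second: CDP expects latency in ms and throughput in bytes per second, with -1 disabling throughput throttling. The helper name is hypothetical, and the "3g" numbers below are illustrative placeholders, not Chrome DevTools' built-in preset.

```javascript
// Build the parameter object for CDP Network.emulateNetworkConditions.
// Throughput arguments are in kilobits per second (an assumption of this
// sketch); CDP wants bytes per second, and -1 means "no throttling".
function networkConditions({ latencyMs = 0, downKbps = null, upKbps = null } = {}) {
  const toBytesPerSec = (kbps) => (kbps == null ? -1 : (kbps * 1000) / 8);
  return {
    offline: false,
    latency: latencyMs,
    downloadThroughput: toBytesPerSec(downKbps),
    uploadThroughput: toBytesPerSec(upKbps),
  };
}

// The three profiles from the slide; "3g" values are placeholders.
const profiles = {
  none: networkConditions(),
  latency500: networkConditions({ latencyMs: 500 }),
  '3g': networkConditions({ latencyMs: 300, downKbps: 750, upKbps: 250 }),
};
```

Each profile object can be passed as the second argument to `client.send('Network.emulateNetworkConditions', ...)` on a CDP session opened against the remote browser.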

Machine Transferability

- Create a Machine Baseline to store alongside results

test('Zeroed baseline', async ({page, browser}) => {

await console.time('htmlfloor');

await page.setContent(`

<div class="visible">Hello world</div>

<input type="checkbox" enabled class="enabled">

`);

await page.locator('.visible').isVisible();

await console.timeLog('htmlfloor');

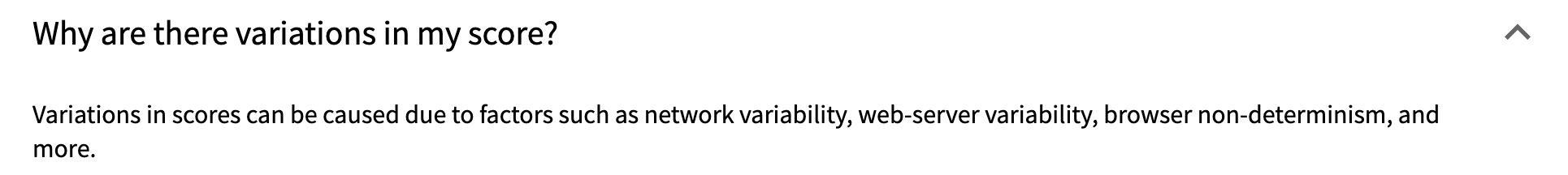

});Precision, Accuracy, and Variability

- Leverage "Browser Time" from the Performance APIs: timestamps are fractional milliseconds, down to microsecond resolution (browsers may deliberately coarsen this)

- Playwright has performance changes

- Chrome has performance changes

- Run over run variation

- How do we know if a 10% decrease in a metric is an application change or... run-over-run variation?

- Control as many variables as possible!

- Run a lot. A lot, a lot.

- Becomes a data problem.
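One way to start on the data problem is to summarize each metric over many runs and only call a change real when it clearly exceeds run-over-run noise. A minimal sketch; the helper names, the "more than k baseline standard deviations" rule, and k = 3 are simple assumptions rather than a rigorous statistical test, and each list needs at least two runs.

```javascript
// Mean, sample standard deviation, and coefficient of variation for one
// metric measured over repeated runs (needs at least two runs).
function summarize(samples) {
  if (samples.length < 2) throw new Error('need at least two runs');
  const n = samples.length;
  const mean = samples.reduce((sum, x) => sum + x, 0) / n;
  const variance = samples.reduce((sum, x) => sum + (x - mean) ** 2, 0) / (n - 1);
  const stdev = Math.sqrt(variance);
  return { n, mean, stdev, cv: stdev / mean };
}

// Assumed rule of thumb: treat a change as real only if the candidate mean
// moves more than k baseline standard deviations away from the baseline mean.
function isLikelyRealChange(baselineRuns, candidateRuns, k = 3) {
  const base = summarize(baselineRuns);
  const cand = summarize(candidateRuns);
  return Math.abs(cand.mean - base.mean) > k * base.stdev;
}
```

With hypothetical baseline runs of 100, 102, 98, 101 and 99 ms (standard deviation about 1.6 ms), a candidate averaging 110 ms is flagged, while one averaging 100 ms is not.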

InfluxDB JS Client

const { test } = require('@playwright/test');
// influxUtils: a local helper module (not shown) wrapping an InfluxDB write client
const { influx } = require('./influxUtils');

test.describe('feature foo', () => {
  test('my test', async ({ page }) => {
    await page.goto('/');
    await influx.write(
      'test_measurement',
      { tag1: 'tag 1 value' },
      { field1: 12, field2: 2.3 },
      Date.now()
    );
  });
});

- Solve the "Data" problem and push results into a TSDB

- InfluxDB has a native JavaScript client!

- InfluxDB lets you set your own timestamps, whereas Prometheus writes are limited to the time the TSDB receives the data
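The influxUtils helper above isn't shown, so as an illustration of what ends up on the wire, here is a minimal sketch that formats the same write as InfluxDB line protocol (`measurement,tags fields timestamp`). Assumptions: the helper names are hypothetical, only the basic escaping rules are handled, every number is written as a float field (integer fields would need an `i` suffix), and because the timestamp is Date.now() in milliseconds, the write must use millisecond precision. The official JS client (@influxdata/influxdb-client) builds these lines for you with its Point class.

```javascript
// Escaping for line protocol: measurement names escape commas and spaces;
// tag keys, tag values, and field keys also escape equals signs.
const escapeMeasurement = (s) => String(s).replace(/[ ,]/g, '\\$&');
const escapeKey = (s) => String(s).replace(/[ ,=]/g, '\\$&');

function formatFieldValue(v) {
  if (typeof v === 'number') return String(v); // stored as a float field
  if (typeof v === 'boolean') return v ? 'true' : 'false';
  // String fields are double-quoted, with quotes and backslashes escaped
  return `"${String(v).replace(/["\\]/g, '\\$&')}"`;
}

// One line: measurement[,tag=value...] field=value[,field=value...] timestamp
function toLineProtocol(measurement, tags, fields, timestampMs) {
  const tagPart = Object.entries(tags)
    .map(([k, v]) => `,${escapeKey(k)}=${escapeKey(v)}`)
    .join('');
  const fieldPart = Object.entries(fields)
    .map(([k, v]) => `${escapeKey(k)}=${formatFieldValue(v)}`)
    .join(',');
  return `${escapeMeasurement(measurement)}${tagPart} ${fieldPart} ${timestampMs}`;
}
```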

Summary

- Client-side Performance

- What to Measure

- Playwright

- Automated(?) a Google Search Performance Test

- Playwright Performance Testing problems and Solutions

What's next?

xk6-browser

- Open Source

- k6 driving Playwright APIs

- beta

- supports .connectOverCDP()

What it solves:

- Generate many, many measurements!

- Tap into the huge k6 ecosystem to integrate and correlate with System Monitoring, Load Testing, etc.

My next steps

- Push everything to PerformanceObserver

- Automated Memory Leak detection

- Performance Timeline Parsing Library?
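For automated memory leak detection, one possible heuristic (an assumption, not an established method from this talk) is to repeat the same user journey several times, record the used JS heap after each iteration, and flag a leak when the heap keeps trending upward. In Chromium the samples could come from the JSHeapUsedSize metric of CDP's Performance.getMetrics, ideally after forcing a collection with HeapProfiler.collectGarbage. The helper names and the 100 KB-per-iteration threshold are hypothetical.

```javascript
// Least-squares slope of heap samples (bytes) against iteration index.
function slope(values) {
  const n = values.length;
  const meanX = (n - 1) / 2;
  const meanY = values.reduce((sum, y) => sum + y, 0) / n;
  let num = 0;
  let den = 0;
  values.forEach((y, x) => {
    num += (x - meanX) * (y - meanY);
    den += (x - meanX) ** 2;
  });
  return num / den;
}

// Hypothetical heuristic: flag a possible leak when the used JS heap grows
// by more than `bytesPerIteration` per repetition of the same journey.
function looksLikeLeak(heapSamples, bytesPerIteration = 100 * 1024) {
  if (heapSamples.length < 3) throw new Error('need at least three samples');
  return slope(heapSamples) > bytesPerIteration;
}
```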

Help NASA Open MCT!

- Open e2e Testing Initiative

- Your opportunity to contribute to NASA

- Traditional CLA applies to testers

- It's as easy as it looked!

Contact and Links

openmct

js-perf-toolkit

browserless/chrome

xk6-browser

Cutting Room Floor

How do we test for the intended and unintended frontend performance problems?

Synthetic User Monitoring

- Recent Practice of Automated Performance Testing

- "SRE Team running Selenium tests"

- Why? The frontend is the bearer of bad news!

- Critical User Journeys covered

- Comes with a lot of asterisks

| First Load | Loaded Page | Session |
|---|---|---|

Why is *automated* frontend performance *testing* important?

- Google found that when they increased the search results from 10 to 30, the load time increased by half a second and resulted in a 20% decrease in ad revenues.

- Intentional Product Change

- One Change. Everyone's problem.

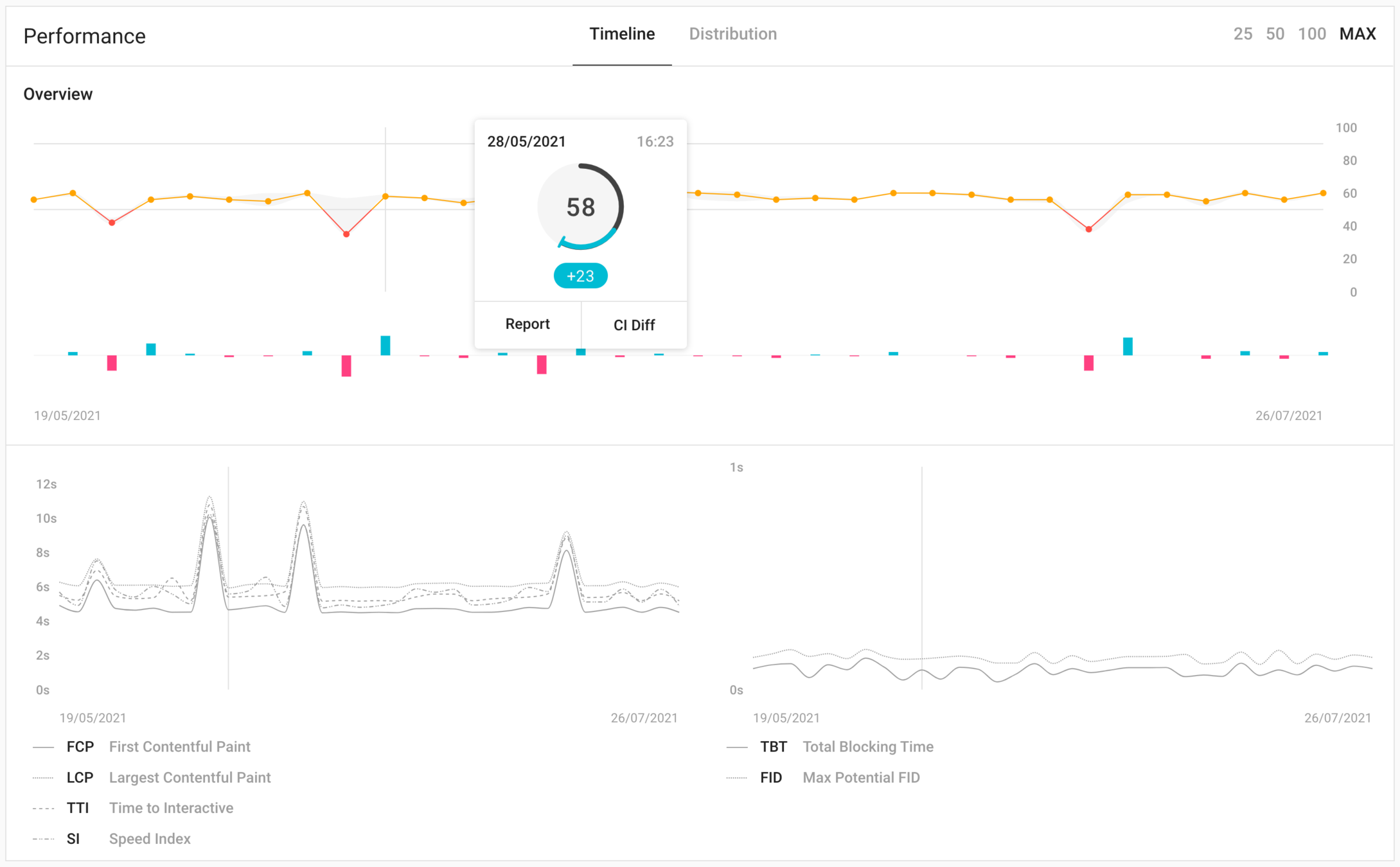

Performance Test Results

from browserstack.com/speedlab

** asterisk

Synthetic User Monitoring Challenges (Part Deux)

"And lastly, many synthetic monitoring tools lack the context needed to explain why a specific failure happened or what the business implications might be, lengthening time to resolution and making it unnecessarily difficult to prioritize application performance issues."

dynatrace.com/news/blog/what-is-synthetic-monitoring/

***** Asterisk

User Experience on Single Page

- Twitter "Time to First Tweet"

- User Timing API

- performance.mark() API

performance.mark('first-google-ad');
// ... some number of clicks or steps ...
performance.mark('click-on-first-ad');
performance.measure('first-ad-click-duration', 'first-google-ad', 'click-on-first-ad');
// Returned object:
// PerformanceMeasure {
//   name: 'first-ad-click-duration',
//   entryType: 'measure',
//   startTime: 4727964.299999952,
//   duration: 12436.700000047684
// }

- Only applies to a single page!

- *No DevTools Tooling like Lighthouse :(

| First Load | Loaded Page | Session |
|---|---|---|

Lighthouse

- "First load for Frontend teams"

- Scoring and Tips

- Lighthouse-CI

- Only first load :(

| First Load | Loaded Page | Session |
|---|---|---|

Why is frontend performance important?

- Amazon found that every 100 milliseconds in load time correlated to 1% decrease in sales.

- Google found that when they increased the search results from 10 to 30, the load time increased by half a second and resulted in a 20% decrease in ad revenues.

- Frontend is almost always the bearer of bad news.

Creating a baseline of measurement for transferability

- Let’s get rid of all asterisks associated with our measurement.

- Create an HTML web page.

- Test and time the page.

- Demo

- Run this before every run. Run it between version changes. Run it locally vs. in CI.

Summary

- Browser Performance and DevTools

- Load Testing and Perf Problems

- What to Measure

- Browserless

- Driving with Puppeteer

- js-perf-toolkit

Demo

What do we need?

- Stable Interface

- Controlled Environment

Demo

What do we need?

- Stable Interface

- Playwright "bless your own damn build" testing

- sitespeed.io is a better specialized tool

Hard?

- Variability

- We "only" want App-under-test variation

- Network

- CPU

- Chrome

- Test Framework

- Transferability

- CPU/GPU

- QA Team giving us bad builds!

"Hard" Demo

Capabilities Demo

JS-Perf-Toolkit

- github.com/unlikelyzero/js-perf-toolkit

- Moves everything* into containers

- browserless

- influxdb+prometheus+grafana

- Puppeteer/Playwright Examples

- NodeJS->InfluxDB (coming soon)

- xk6-browser*

- Network control with toxiproxy

- Integrates into monitoring systems with InfluxDB and Prometheus*

Why?

- Load Testing is what you do to the backend to approximate Rough Response Time

- An HTTP REST API can provide a rough estimate of the user experience in the app

Selenium?

- Yes? No? Maybe?

- Variability in results due to waitFor

- Driver? Jmeter+Selenium

- 2 Cores per User!

- CDP in 4.0

How?

(Chrome! Devtools!)

Our first asterisk * !

Load Testing Can't Properly Approximate Frontend Performance Regressions

Chrome

- Loads and renders your web app via static assets, javascript, and APIs

- Web app / javascript

- How well it runs on your machine

- Static Assets

- You can't

- But!

- Browsers only allow 6 concurrent HTTP/1.1 connections per host

- Websockets?

- Graphql queries based on user data?
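To see why the per-host connection limit matters, here is a deliberately simplified model: every request takes the same time and the browser keeps at most six HTTP/1.1 requests in flight to one host, so requests go out in "waves". It ignores connection setup, bandwidth, and HTTP/2 (which multiplexes many requests over one connection). The function name is hypothetical.

```javascript
// Simplified model of HTTP/1.1 connection limits: every request takes the
// same time, and at most `maxConnections` requests to one host run at once
// (6 in Chrome). Requests beyond that wait for a free connection, so they
// go out in "waves".
function estimateLoadMs(requestCount, perRequestMs, maxConnections = 6) {
  const waves = Math.ceil(requestCount / maxConnections);
  return waves * perRequestMs;
}

console.log(estimateLoadMs(30, 100)); // 500
```

For example, 30 API calls at 100 ms each need five waves, so roughly 500 ms instead of 100 ms.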

Network Variability*

- Network cost is defined by latency and the number of requests needed to give the user the data to present on screen

- Variability in the internet and local machine

Why is *frontend* performance important?

- Amazon found that every 100 milliseconds in load time correlated to 1% decrease in sales.

- Google found that when they increased the search results from 10 to 30, the load time increased by half a second and resulted in a 20% decrease in ad revenues.

- Load time and User Experience

Quick Check-in

- Why performance test

- Load times and Lighthouse

- What to look for

- After load and Long Tasks

- RUM, User Timing, and marks

How did Google know the users left?

"Google found that when they increased the search results from 10 to 30, the load time increased by half a second and resulted in a 20% decrease in ad revenues."

Real User Monitoring (RUM)

- "Where real users go and what they do"

- Inject JS code into the application to report back to your marketing team

- Session Replay

- Key Takeaway for Performance Testing

- User Timing

- performance.mark()

What happens after the first load?
