warpforge

Build Anything

{fast, repeatedly, hermetically, reliably, with friends}

--

# I want...

I have software I want to build

use!

distribute! install!

compile!

okay, patch!

then compile and use!

and share...!

and spend days grokking

how to do this...

and aligning versions...

and twiddling paths for my distro...

# I want...

> "so you want containers"

Well... no.

I don't want monoliths.

I don't want blobs.

I want to freely compose things.

And I want assembly instructions that are reproducible.

I want something a little more granular than containers.

Building a clean room, then getting a bucket of mud from outside the door, and upending the whole thing on the floor...

...defeats the purpose.

Immediate productivity -- at the cost of long-term sanity.

So let's look a little more at some goals:

# I want...

Goals

Decentralization

Comprehensibility

Hackability

Reliability

...and mechanisms

cleanroom environments

declarative scene setup

100% content-addressed input

API-driven (not language)

no central naming authority

no single ruling convention

no blessed package suites

Assemble the fs as you want

# I want...

All of these goals are also just fancy ways to say:

"more shareable

with more people

over longer times"

# I want...

Goals

Or another much simpler way of saying this...

I want:

... and software for making them...

easily; and without distro-specifics.

# I want...

Goals

Success requires threading a careful balance:

The thing has to use primitives that support reliability and decentralization -- and de facto, that means lots of cryptographic hashes...

... and it also has to remain usable to humans. Productive. Friendly.

Tricky!

Nothing really meets all these goals right now.

NPM? PyPI? Go mod? ...etc?


All of these Language Package Managers (LPMs) are fine for their languages...

... and fairly useless the instant the scope goes beyond that.

Also most don't care about reproducibility at all,

and have centralized naming systems...

Make? It's a great invocation tool.


... but it's not an environment manager.

Getting dependencies? Up to you.

Securing those supply chains? Up to you.

Versioning? Up to you.

Sandboxing? Up to you.

...? Up to you.

Bazel / Nix / Guix: Getting closer...

They're whole build environment managers!

That's neat!

Build descriptions depend on other build descriptions, not on actual content addressing,

and that has a gnarly side effect:

It forms a cathedral.

(I believe this is a design error you cannot recover from.

More on this later.)

(I'll just ask you: when's the last time you've seen a Bazel build last long outside of a corporate environment capable of enforcing a monorepo?)

Nix and Guix have a couple other interesting choices too...

(Guix is largely Nix! But in Lisp! Whee!)

The Nix/Guix library linking strategy is de facto {controlled by} / {entangled with} the path layout convention of its store.

And both have whole languages as the interface, rather than an API.

(Good luck detaching those design choices.)

Reprotest? Ostensibly not just Debian, but practically... just Debian.

I'm not sure why, but something in these seems to be at the wrong level of abstraction, given how little adoption and reuse they have relative to how mature they are...

archlinux-repro? From the repo title alone, nuff said.

BitBake, Yocto, others in this field? Used by distro bootstrappers but nobody else? Why?

And then it gets more esoteric...


Tor browser? Has reproducible builds!

And it's all custom...

github.com/freedomofpress/securedrop-builder ? Wonderful!

But... it's a one-off for that project.

If every project is doing this again solo,

we have failed.

So what now?

Warpforge

it helps you build things

a build tool

... that you put other build tools in, to universalize things.

collaborative, decentralized --

information designed to be shared.

Work together, but not in lockstep.

and supply chains

... secured by hash trees.

for everyone.

# The Difference

The major keys:

1. Hashes in the low-level API.

2. A Merkle tree for name->hash resolution.

3. Make it usable.

(This is the same playbook as if you were making git!)

# The Difference

The major keys, pt. 2:

Any time we refer to other content...

we recurse based on content-addressed IDs.

Not on build instructions.
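To make "recurse on content IDs, not on build instructions" concrete, here is a minimal Go sketch. The `Formula` struct and `FormulaID` function are hypothetical simplifications, not Warpforge's real types or hashing scheme, and `ware:tar:hypotheticalOtherBashHash` is a made-up ID. The point is only that a formula's identity is computed from the content IDs it consumes; nothing about how those inputs were built enters the hash.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"encoding/json"
	"fmt"
)

// Formula is a hypothetical, trimmed-down stand-in for formula.v1:
// inputs map a sandbox path to a content-addressed ware ID.
type Formula struct {
	Inputs map[string]string `json:"inputs"`
	Script []string          `json:"script"`
}

// FormulaID hashes a deterministic JSON encoding of the formula.
// encoding/json writes map keys in sorted order, so equal formulas
// always produce identical bytes and therefore identical IDs.
func FormulaID(f Formula) (string, error) {
	b, err := json.Marshal(f)
	if err != nil {
		return "", err
	}
	sum := sha256.Sum256(b)
	return hex.EncodeToString(sum[:]), nil
}

func main() {
	base := Formula{
		Inputs: map[string]string{
			"/zapp/bash": "ware:tar:57j2Ee9HEtDxRLE6uHA1xvmNB2LgkFyKqQuHFyGPN",
			"/task/src":  "ware:git:3616962f4dd2bda67b317583475f04486e763",
		},
		Script: []string{"echo done!"},
	}
	// Swap in different bash content (hypothetical ID): the formula ID
	// changes, without consulting anything about how either bash was built.
	swapped := Formula{
		Inputs: map[string]string{
			"/zapp/bash": "ware:tar:hypotheticalOtherBashHash",
			"/task/src":  "ware:git:3616962f4dd2bda67b317583475f04486e763",
		},
		Script: []string{"echo done!"},
	}
	idBase, _ := FormulaID(base)
	idAgain, _ := FormulaID(base)
	idSwapped, _ := FormulaID(swapped)
	fmt.Println(idBase == idAgain)   // true: same content, same ID
	fmt.Println(idBase != idSwapped) // true: different input content, different ID
}
```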

Some of these problems we solve because we have to

(...not because we really want to)

Supply chain management.

Content addressing large filesystem snapshots.

Name resolution.

Decentralized, offline.

And only then can we get onto containment, and execution...

(...and back onto library linking, oof...)

Quick Peek

There are Layers

(We'll start at the bottom)

Most Important Thing

Granular inputs.

At your choice of paths.

Compose freely.


{"formula.v1": {

"inputs": {

"/zapp/bash": "ware:tar:57j2Ee9HEtDxRLE6uHA1xvmNB2LgkFyKqQuHFyGPN",

"/zapp/coreutils": "ware:tar:mNB2LgqL3HeT5pCXr57j2Ee9HEtDxRLE6FyGPN",

"/task/src": "ware:git:3616962f4dd2bda67b317583475f04486e763"

},

"action": {

"script": {

"interpreter": "/zapp/bash/bin/bash",

"contents": [

"mkdir /out && echo 'heyy' > /out/log",

"echo done!"

]

}

},

"outputs": {

"yourlabel": {

"packtype": "tar",

"from": "/out"

}

}

}}

2nd Important Thing

Hashes.

Everything is identified by hash.
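Since every input in the formula is written as `ware:<packtype>:<hash>`, a tool only needs to split that string to know how to fetch an input and what to verify it against. A minimal Go sketch; `WareID` and `ParseWareID` are illustrative names, not Warpforge's actual API, and the hash is treated as an opaque string.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
	"strings"
)

// WareID is a hypothetical parsed form of strings like "ware:tar:<hash>".
type WareID struct {
	Packtype string // how the content is packed, e.g. "tar" or "git"
	Hash     string // the content hash, kept opaque here
}

// ParseWareID splits "ware:<packtype>:<hash>" into its parts.
func ParseWareID(s string) (WareID, error) {
	parts := strings.SplitN(s, ":", 3)
	if len(parts) != 3 || parts[0] != "ware" {
		return WareID{}, fmt.Errorf("not a ware ID: %q", s)
	}
	if parts[1] == "" || parts[2] == "" {
		return WareID{}, errors.New("empty packtype or hash")
	}
	return WareID{Packtype: parts[1], Hash: parts[2]}, nil
}

func main() {
	inputs := map[string]string{
		"/zapp/bash":      "ware:tar:57j2Ee9HEtDxRLE6uHA1xvmNB2LgkFyKqQuHFyGPN",
		"/zapp/coreutils": "ware:tar:mNB2LgqL3HeT5pCXr57j2Ee9HEtDxRLE6FyGPN",
		"/task/src":       "ware:git:3616962f4dd2bda67b317583475f04486e763",
	}
	paths := make([]string, 0, len(inputs))
	for p := range inputs {
		paths = append(paths, p)
	}
	sort.Strings(paths) // map iteration order is random; sort for stable output
	for _, p := range paths {
		w, err := ParseWareID(inputs[p])
		if err != nil {
			fmt.Println(p, "error:", err)
			continue
		}
		fmt.Printf("%s <- %s content %s\n", p, w.Packtype, w.Hash)
	}
}
```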

3rd Important Thing

This is an API.

It's just JSON.
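Because the formula is plain JSON, any language with a JSON library can produce or consume it. A minimal Go sketch using only `encoding/json`; the struct types are hypothetical and cover only the fields that appear in the example formula, not Warpforge's full schema.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Hypothetical structs covering only the fields used in the example formula;
// they are not Warpforge's real schema types.
type Script struct {
	Interpreter string   `json:"interpreter"`
	Contents    []string `json:"contents"`
}

type Action struct {
	Script *Script `json:"script"`
}

type Output struct {
	Packtype string `json:"packtype"`
	From     string `json:"from"`
}

type Formula struct {
	Inputs  map[string]string `json:"inputs"`
	Action  Action            `json:"action"`
	Outputs map[string]Output `json:"outputs"`
}

// ParseFormula decodes a document of the form {"formula.v1": {...}}.
func ParseFormula(data []byte) (Formula, error) {
	var doc struct {
		Formula Formula `json:"formula.v1"`
	}
	if err := json.Unmarshal(data, &doc); err != nil {
		return Formula{}, err
	}
	return doc.Formula, nil
}

const example = `{"formula.v1": {
  "inputs": {
    "/zapp/bash": "ware:tar:57j2Ee9HEtDxRLE6uHA1xvmNB2LgkFyKqQuHFyGPN",
    "/task/src": "ware:git:3616962f4dd2bda67b317583475f04486e763"
  },
  "action": {"script": {
    "interpreter": "/zapp/bash/bin/bash",
    "contents": ["mkdir /out && echo 'heyy' > /out/log", "echo done!"]
  }},
  "outputs": {"yourlabel": {"packtype": "tar", "from": "/out"}}
}}`

func main() {
	f, err := ParseFormula([]byte(example))
	if err != nil {
		panic(err)
	}
	if f.Action.Script != nil {
		fmt.Println("interpreter:", f.Action.Script.Interpreter)
	}
	for label, out := range f.Outputs {
		fmt.Printf("output %q: %s from %s\n", label, out.Packtype, out.From)
	}
}
```

A strict parser also catches small slips such as a missing comma between entries, which is one reason to treat the JSON as the API rather than as free-form text.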

0th Important Thing

It has to actually run.

So it does.

More JSON comes out.

But that was the low level.

That's brutal.

(Hashes aren't human-usable.)

Quick Peek

at the high level

(i.e., now we'll hide the hashes again)

# Hiding the Hash

(Reproducible!) Name Resolution

The mission is simple:

We want to use human-readable names;

and we want to do lookup of content IDs

(e.g. cryptographic hashes)

from those human-readable names;

and then we want to use the human-readable names in our build instructions.

The solution is pretty simple, too.

We're just going to use a Merkle tree.

You've seen Merkle trees before:

It's what git internals use.

It's a good way to describe data so that it can be assembled and verified incrementally.
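The property that matters is that each node names its children by hash, so any piece fetched from anywhere can be checked against its parent before it is trusted. A minimal Go sketch of that idea; `Store`, `Put`, and `Fetch` are hypothetical, and SHA-256 over raw JSON bytes stands in for whatever hashing and encoding Warpforge actually uses.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"encoding/json"
	"fmt"
)

// Store is a hypothetical stand-in for any untrusted blob source
// (local disk, a mirror, a peer), keyed by content ID.
type Store map[string][]byte

// ID is a blob's content ID: here, hex-encoded SHA-256 of its bytes.
func ID(blob []byte) string {
	sum := sha256.Sum256(blob)
	return hex.EncodeToString(sum[:])
}

// Put stores a blob under its own content ID and returns that ID.
func (s Store) Put(blob []byte) string {
	id := ID(blob)
	s[id] = blob
	return id
}

// Fetch returns the blob for id only after re-hashing it, so a corrupted
// or malicious store cannot substitute different bytes.
func (s Store) Fetch(id string) ([]byte, error) {
	blob, ok := s[id]
	if !ok {
		return nil, fmt.Errorf("missing blob %s", id)
	}
	if ID(blob) != id {
		return nil, fmt.Errorf("hash mismatch for %s", id)
	}
	return blob, nil
}

func main() {
	s := Store{}
	// Leaf node first, then a parent that names the leaf by its hash.
	leaf := s.Put([]byte(`{"v4.14": "ware:tar:57j2Ee9HEtDxRLE6uHA1xvmNB2LgkFyKqQuHFyGPN"}`))
	root := s.Put([]byte(`{"warpsys.org/bash": "` + leaf + `"}`))

	// Walk down from the one hash we trust (the root), verifying each hop.
	rootBlob, err := s.Fetch(root)
	if err != nil {
		panic(err)
	}
	var children map[string]string
	if err := json.Unmarshal(rootBlob, &children); err != nil {
		panic(err)
	}
	leafBlob, err := s.Fetch(children["warpsys.org/bash"])
	if err != nil {
		panic(err)
	}
	fmt.Println(string(leafBlob))

	// Tampering with the leaf is caught as soon as it is fetched.
	s[leaf] = []byte(`{"v4.14": "ware:tar:evil"}`)
	_, err = s.Fetch(leaf)
	fmt.Println(err != nil) // true
}
```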


{"workflow.v1": {

"inputs": {

"/zapp/bash": "catalog:warpsys.org/bash:v4.14:linux-amd64-zapp",

"/zapp/coreutils": "catalog:warpsys.org/coreutils:v5000:linux-amd64-zapp",

"/task/src": "ingest:git:.:HEAD"

},

"action": {

"script": {

"interpreter": "/zapp/bash/bin/bash",

"contents": [

"mkdir /out && echo 'heyy' > /out/log",

"echo done!"

]

}

},

"outputs": {

"yourlabel": {

"packtype": "tar",

"from": "/out"

}

}

}}

{"catalogroot.v1": {

"modules": {

"warpsys.org/gnu/bash": "zMwj3irr8o2j3ksljFKkemK3jrl5sm3e9mf",

"warpsys.org/gnu/coreutils": "zMwirsm3e9r8o2jemK3jrl53ksljFKkj3el5mf",

"warpsys.org/etc/etc": "zMwj2j3ksljFK3imK3jrtRrsm3e9r8okmf",

...

}

}}

📦️ Warpforge "Catalogs"...

are a Merkle tree

containing "modules"...

which contain "releases"...

...which point to the hashes of filesystems!

Human-readable Names

And now we can use these.

And if you have the catalog root hash, the names are resolvable in a reproducible way.
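The lookup itself is just a walk down those layers. Judging from references like `catalog:warpsys.org/bash:v4.14:linux-amd64-zapp`, a reference names a module, a release, and an item within the release. A minimal Go sketch under that reading; the `Catalog`, `Module`, and `Release` types and `Resolve` are hypothetical, and hashing is left out (in the real tree each layer is a hash-linked node, which is what makes the answer reproducible once the root hash is pinned).

```go
package main

import (
	"fmt"
	"strings"
)

// Hypothetical, hash-free model of the catalog layers:
// catalog -> modules -> releases -> items -> ware IDs.
type Release struct {
	Items map[string]string // item name (e.g. "linux-amd64-zapp") -> ware ID
}

type Module struct {
	Releases map[string]Release // release name (e.g. "v4.14") -> release
}

type Catalog struct {
	Modules map[string]Module // module name (e.g. "warpsys.org/bash") -> module
}

// Resolve turns "catalog:<module>:<release>:<item>" into a ware ID.
func (c Catalog) Resolve(ref string) (string, error) {
	parts := strings.Split(ref, ":")
	if len(parts) != 4 || parts[0] != "catalog" {
		return "", fmt.Errorf("not a catalog reference: %q", ref)
	}
	mod, ok := c.Modules[parts[1]]
	if !ok {
		return "", fmt.Errorf("unknown module %q", parts[1])
	}
	rel, ok := mod.Releases[parts[2]]
	if !ok {
		return "", fmt.Errorf("module %q has no release %q", parts[1], parts[2])
	}
	ware, ok := rel.Items[parts[3]]
	if !ok {
		return "", fmt.Errorf("release %q has no item %q", parts[2], parts[3])
	}
	return ware, nil
}

func main() {
	c := Catalog{Modules: map[string]Module{
		"warpsys.org/bash": {Releases: map[string]Release{
			"v4.14": {Items: map[string]string{
				"linux-amd64-zapp": "ware:tar:57j2Ee9HEtDxRLE6uHA1xvmNB2LgkFyKqQuHFyGPN",
			}},
		}},
	}}
	ware, err := c.Resolve("catalog:warpsys.org/bash:v4.14:linux-amd64-zapp")
	if err != nil {
		panic(err)
	}
	fmt.Println(ware) // ware:tar:57j2Ee9HEtDxRLE6uHA1xvmNB2LgkFyKqQuHFyGPN
}
```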

# Fancy Overdrive

Getting Even Fancier

{

"plot.v1": {

"inputs": {

"glibc": "catalog:warpsys.org/bootstrap/glibc:v2.35:amd64",

"ld": "catalog:warpsys.org/bootstrap/glibc:v2.35:ld-amd64",

"ldshim": "catalog:warpsys.org/bootstrap/ldshim:v1.0:amd64",

"make": "catalog:warpsys.org/bootstrap/make:v4.3:amd64",

"gcc": "catalog:warpsys.org/bootstrap/gcc:v11.2.0:amd64",

"grep": "catalog:warpsys.org/bootstrap/grep:v3.7:amd64",

"coreutils": "catalog:warpsys.org/bootstrap/coreutils:v9.1:amd64",

"binutils": "catalog:warpsys.org/bootstrap/binutils:v2.38:amd64",

"sed": "catalog:warpsys.org/bootstrap/sed:v4.8:amd64",

"gawk": "catalog:warpsys.org/bootstrap/gawk:v5.1.1:amd64",

"busybox": "catalog:warpsys.org/bootstrap/busybox:v1.35.0:amd64",

"src": "catalog:warpsys.org/bash:v5.1.16:src"

},

"steps": {

"build": {

"protoformula": {

"inputs": {

"/src": "pipe::src",

"/lib64": "pipe::ld",

"/pkg/glibc": "pipe::glibc",

"/pkg/make": "pipe::make",

"/pkg/coreutils": "pipe::coreutils",

"/pkg/binutils": "pipe::binutils",

"/pkg/gcc": "pipe::gcc",

"/pkg/sed": "pipe::sed",

"/pkg/grep": "pipe::grep",

"/pkg/gawk": "pipe::gawk",

"/pkg/busybox": "pipe::busybox",

"$PATH": "literal:/pkg/make/bin:/pkg/gcc/bin:/pkg/coreutils/bin:/pkg/binutils/bin:/pkg/sed/bin:/pkg/grep/bin:/pkg/gawk/bin:/pkg/busybox/bin",

"$CPATH": "literal:/pkg/glibc/include:/pkg/glibc/include/x86_64-linux-gnu"

},

"action": {

"script": {

"interpreter": "/pkg/busybox/bin/sh",

"contents": [

"mkdir -p /bin /tmp /prefix /usr/include/",

"ln -s /pkg/glibc/lib /prefix/lib",

"ln -s /pkg/glibc/lib /lib",

"ln -s /pkg/busybox/bin/sh /bin/sh",

"ln -s /pkg/gcc/bin/cpp /lib/cpp",

"cd /src/*",

"mkdir -v build",

"cd build",

"export SOURCE_DATE_EPOCH=1262304000",

"../configure --prefix=/warpsys-placeholder-prefix LDFLAGS=-Wl,-rpath=XORIGIN/../lib ARFLAGS=rvD",

"make",

"make DESTDIR=/out install",

"sed -i '0,/XORIGIN/{s/XORIGIN/$ORIGIN/}' /out/warpsys-placeholder-prefix/bin/*"

]

}

},

"outputs": {

"out": {

"from": "/out/warpsys-placeholder-prefix",

"packtype": "tar"

}

}

}

},

"pack": {

"protoformula": {

"inputs": {

"/pack": "pipe:build:out",

"/pkg/glibc": "pipe::glibc",

"/pkg/ldshim": "pipe::ldshim",

"/pkg/busybox": "pipe::busybox",

"$PATH": "literal:/pkg/busybox/bin"

},

"action": {

"script": {

"interpreter": "/pkg/busybox/bin/sh",

"contents": [

"mkdir -vp /pack/lib",

"mkdir -vp /pack/dynbin",

"cp /pkg/glibc/lib/libc.so.6 /pack/lib",

"cp /pkg/glibc/lib/libdl.so.2 /pack/lib",

"cp /pkg/glibc/lib/libm.so.6 /pack/lib",

"mv /pack/bin/bash /pack/dynbin",

"cp /pkg/ldshim/ldshim /pack/bin/bash",

"cp /pkg/glibc/lib/ld-linux-x86-64.so.2 /pack/lib",

"rm -rf /pack/lib/bash /pack/lib/pkgconfig /pack/include /pack/share"

]

}

},

"outputs": {

"out": {

"from": "/pack",

"packtype": "tar"

}

}

}

},

"test-run": {

"protoformula": {

"inputs": {

"/pkg/bash": "pipe:pack:out"

},

"action": {

"exec": {

"command": [

"/pkg/bash/bin/bash",

"--version"

]

}

},

"outputs": {}

}

}

},

"outputs": {

"amd64": "pipe:pack:out"

}

}

}
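One detail in the `build` step is worth unpacking: bash is linked with an rpath of `XORIGIN/../lib`, and `sed` then rewrites the first `XORIGIN` to `$ORIGIN` inside the installed binaries. `$ORIGIN` is the dynamic loader's token for "the directory containing this binary", so the result finds its libraries relative to wherever it is unpacked. Presumably the placeholder keeps `$` away from shell and make quoting at configure time (our inference, not stated in the talk). The in-place patch is only safe because both strings are exactly 7 bytes. A Go sketch of the same byte-level edit; `PatchRpath` is hypothetical and the input bytes are fake.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
)

var (
	placeholder = []byte("XORIGIN") // what the build step linked in
	origin      = []byte("$ORIGIN") // what the dynamic loader understands
)

// PatchRpath rewrites the first placeholder to $ORIGIN, like the sed
// command in the build step. Both strings are 7 bytes, so no offsets
// inside the binary move.
func PatchRpath(bin []byte) ([]byte, error) {
	if len(placeholder) != len(origin) {
		return nil, errors.New("replacement must be the same length")
	}
	if !bytes.Contains(bin, placeholder) {
		return nil, errors.New("no XORIGIN placeholder found")
	}
	return bytes.Replace(bin, placeholder, origin, 1), nil
}

func main() {
	// Fake binary bytes; a real input would be an ELF file read from disk.
	fake := []byte("\x7fELF\x00\x00XORIGIN/../lib\x00")
	patched, err := PatchRpath(fake)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%q\n", patched)
	fmt.Println(len(patched) == len(fake)) // true
}
```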

JSON can get to be a lot.

There's a reason other projects end up using a programming language.

(Above: the complete bash build, shown on the slide in 5pt font.)


We can generate this, though.

Warpforge supports Starlark.

You can also BYOL (bring your own language).
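Generating the JSON mostly means removing repetition. In the bash plot, most tools appear three times: as a plot input, as a `/pkg/...` mount in the `build` step, and again in `$PATH`. A minimal Go sketch of generating the mounts and the `$PATH` literal from a single list; `BuildInputs` is a hypothetical helper, not part of Warpforge, and Starlark or any other language could do the same job.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// BuildInputs generates the repetitive part of a protoformula's inputs:
// one "/pkg/<name>": "pipe::<name>" mount per tool, plus a $PATH literal
// listing each tool's bin directory in the given order.
func BuildInputs(tools []string) map[string]string {
	inputs := make(map[string]string, len(tools)+1)
	bins := make([]string, 0, len(tools))
	for _, t := range tools {
		inputs["/pkg/"+t] = "pipe::" + t
		bins = append(bins, "/pkg/"+t+"/bin")
	}
	inputs["$PATH"] = "literal:" + strings.Join(bins, ":")
	return inputs
}

func main() {
	// Same tools, in the same order, as the $PATH of the bash build step.
	tools := []string{"make", "gcc", "coreutils", "binutils", "sed", "grep", "gawk", "busybox"}
	inputs := BuildInputs(tools)
	inputs["/src"] = "pipe::src" // non-tool inputs are still added by hand
	out, err := json.MarshalIndent(inputs, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

With that list, the generated `$PATH` value matches the one written out by hand in the plot.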


We see something important:

now focusing in the middle...

there are serializable waypoints.

And they have "no code".
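Because those waypoints are plain data, a tool can reason about them without running anything. For example, judging from the bash plot above, `pipe::glibc` names one of the plot's own `inputs`, while `pipe:build:out` names the `out` output of the `build` step; that alone is enough to derive an execution order. A minimal Go sketch under that reading of the syntax; `StepInputs`, `upstream`, and `Order` are hypothetical, and this is plain Kahn's-algorithm topological sorting, not Warpforge's own scheduler. A cycle of pipes inside one plot could not run, so the sketch reports it as an error.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// StepInputs maps a step name to the reference strings of its inputs.
type StepInputs map[string][]string

// upstream reports which step a reference reads from, if any.
// "pipe::src" reads a plot-level input (no step); "pipe:build:out"
// reads output "out" of step "build"; "literal:..." reads nothing.
func upstream(ref string) (string, bool) {
	parts := strings.SplitN(ref, ":", 3)
	if len(parts) != 3 || parts[0] != "pipe" || parts[1] == "" {
		return "", false
	}
	return parts[1], true
}

// Order sorts steps so each runs after the steps it pipes from
// (Kahn's algorithm; ready steps are taken alphabetically for determinism).
func Order(steps StepInputs) ([]string, error) {
	indegree := make(map[string]int, len(steps))
	dependents := make(map[string][]string)
	for name := range steps {
		indegree[name] = 0
	}
	for name, refs := range steps {
		for _, ref := range refs {
			dep, ok := upstream(ref)
			if !ok {
				continue
			}
			if _, known := steps[dep]; !known {
				return nil, fmt.Errorf("step %q pipes from unknown step %q", name, dep)
			}
			indegree[name]++
			dependents[dep] = append(dependents[dep], name)
		}
	}
	var ready, order []string
	for name, n := range indegree {
		if n == 0 {
			ready = append(ready, name)
		}
	}
	for len(ready) > 0 {
		sort.Strings(ready)
		next := ready[0]
		ready = ready[1:]
		order = append(order, next)
		for _, d := range dependents[next] {
			indegree[d]--
			if indegree[d] == 0 {
				ready = append(ready, d)
			}
		}
	}
	if len(order) != len(steps) {
		return nil, fmt.Errorf("steps pipe into each other in a cycle")
	}
	return order, nil
}

func main() {
	// Input references taken from the bash plot (abbreviated).
	steps := StepInputs{
		"build":    {"pipe::src", "pipe::glibc", "pipe::gcc", "literal:/pkg/make/bin"},
		"pack":     {"pipe:build:out", "pipe::glibc", "pipe::ldshim"},
		"test-run": {"pipe:pack:out"},
	}
	order, err := Order(steps)
	if err != nil {
		panic(err)
	}
	fmt.Println(order) // [build pack test-run]
}
```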

Warpforge "Catalogs"...

have something else, too

"Replays"

Replays

... are just the build instruction JSON again.

But frozen.

Easy to do this.


# Things Solved

Filesystem Snapshots

We wrote a canonical hasher for tar files.

I don't love it, but it solves a problem.

It gives us convergent hashes if the filesystem is the same.

Handles: attributes, permissions, etc.

Ignores: compression, etc.

# Things Solved

(Reproducible!) Name Resolution

... covered this earlier.

Recall all the slides about Catalogs.

# Things Solved

Containment & Execution

There's a lot of ways to do this.

Fortunately, these *are* reusable.

runc works fine; Warpforge uses it.

Also fine: gVisor, Firejail, LXC/LXD, and many other things, from lightweight sandboxes all the way to VMs, can provide the essential features.

# Things Solved

Supply Chain Management

Okay so:

It's Already Done.

This just naturally falls out after you have:

✅ content-addressed snapshot IDs,

✅ a Merkle tree handling the name->ID lookups,

✅ allow-list inputs to the environment,

✅ explicitly selected outputs.

What else is there?

# Litmus Tests

Litmus Test:

Hosting Various Systems

Yes: You can put a full container image in here.

Or assemble smaller filesets.

Trivially distro-agnostic.

# Litmus Tests

Litmus Test:

Building Real Things

Yes: We built Emacs in this.

(And Vim.)

And a bunch of other things.

# Litmus Tests

Litmus Test:

Can You Use It?

I dunno!

But please come try!

// build from source --

// git clone && go install

$ warpforge catalog update

$ warpforge quickstart

$ warpforge status

$ warpforge run

warpforge.io

zapps.app

Stuff you can compose.

(Questions?)

catalog.warpsys.org

(extra slides)

Where are we going with this?

- Build environment manager

- For everything

- Packaging and sharing

- Data-structure driven

- (bring your own frontend?)

- CI and productivity

- ...Obviously.

- Not quite a distro.

- But it should be very easy to build one.

So why did I care about that?

I want to build a whole decentralized,

low-coordination,

high-sharing,

ecosystem.

Hashes help with this.

Zapps and path-agnosticism help with this.

The catalog Merkle tree is designed to sync and share.

And all that stuff I just showed is reproducible and ready to be shared.

Warpforge vs ...

Bazel/Blaze/Nix/Guix/{Etc}:

Build descriptions depend on other build descriptions.

– have to keep building farther and farther back

– presumes compiler determinism (🚩)

– eventually have bootstrapping problem

– seems to defacto require monorepo

Warpforge:

Build descriptions depend on content.

– pause wherever you like

– checkpoints for determinism

– no trust needed for "substitutes"

– update freely (or don’t)

– can describe cycles (!)

^ (Speaker note: describe DDC, i.e. diverse double-compiling, and resistance to the Thompson "trusting trust" attack!)

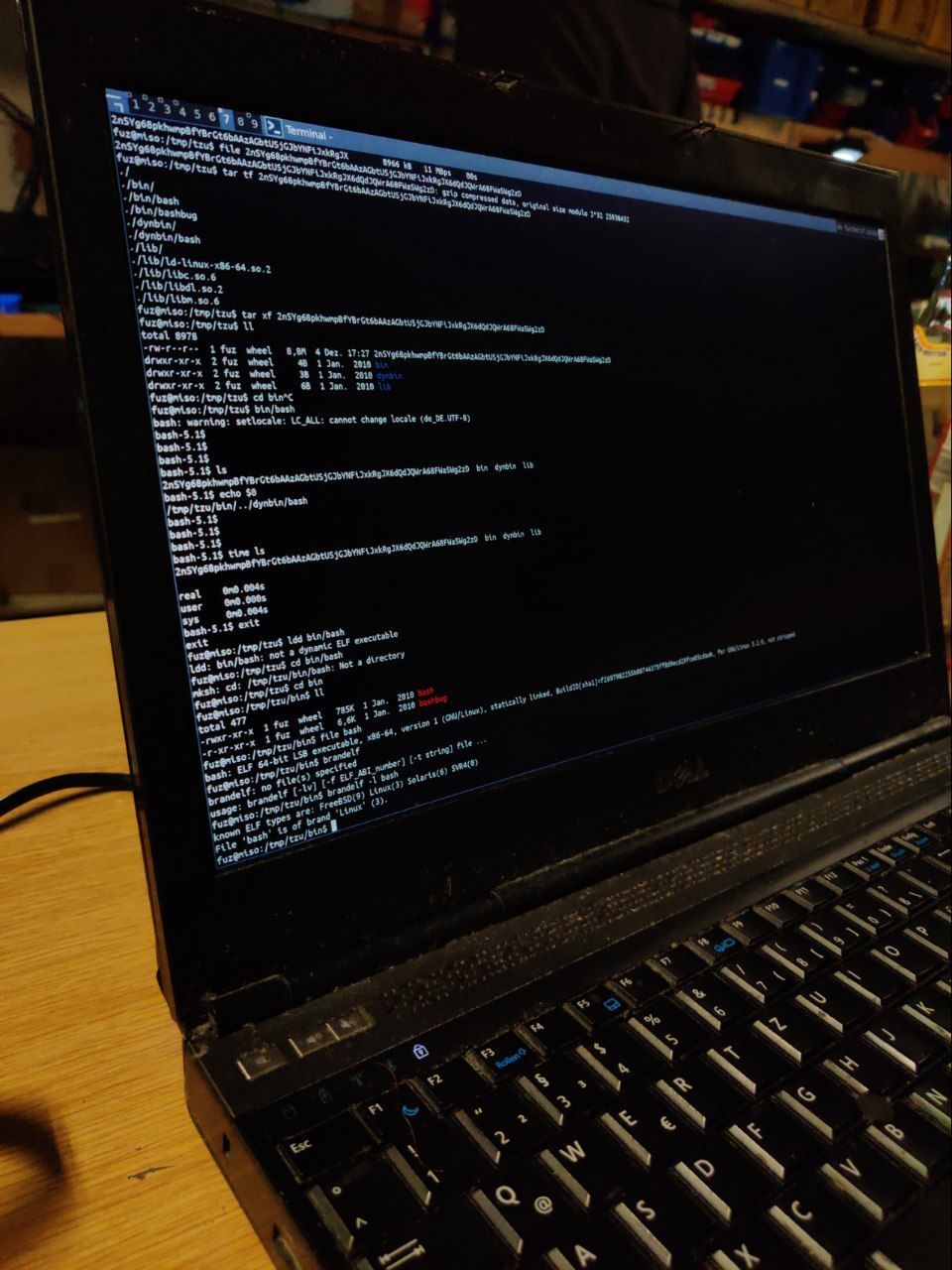

Tarball, regular executable, ELF, dynamic, and yet totally portable

There are no post-install hooks. Nothing.

Read-only mount? Jump drive? Fine.

untar

run

done

DEMO

Recent discovery:

Zapps even work on FreeBSD.

(thanks largely to FreeBSD's ability to emulate Linux...

but it's very cool that it "just worked" out-of-box!)

With many thanks to @FUZxxl

GOALS of Zapps

High level:

- Want one answer. No "it depends".

- Must work on any host -- no host lib deps; no conflicts.

- Work on any install path. Even if unpredictable.

- No "post-install" steps. Work even on RO mounts.

... And in practice:

- Dynamic linking. Need it for Reasons.

- Want: able to dedup libraries on disk (if something else has the exact same version).

DONE.

The simplest thing that works

and nothing more
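One way to read the `pack` step of the bash plot: the real binary is moved to `dynbin/`, `ldshim` takes its place in `bin/`, and the glibc loader is copied into `lib/`. A plausible reason (our interpretation, not stated in the talk) is that an ELF executable names its loader by absolute path, such as `/lib64/ld-linux-x86-64.so.2`, which would break "work on any install path"; invoking the bundled loader explicitly sidesteps that. A minimal Go sketch of how such a shim could compute its command from its own location. `LoaderCommand` is hypothetical and the real `ldshim` may work differently; `--library-path` is a real glibc `ld.so` option, used here alongside the `$ORIGIN/../lib` rpath as an extra safeguard.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// LoaderCommand computes the command a shim installed at <root>/bin/<name>
// could run: the bundled glibc loader, told to search <root>/lib, running the
// real binary that the pack step moved to <root>/dynbin/<name>.
func LoaderCommand(shimPath string, args []string) []string {
	root := filepath.Dir(filepath.Dir(shimPath))
	name := filepath.Base(shimPath)
	libDir := filepath.Join(root, "lib")
	cmd := []string{
		filepath.Join(libDir, "ld-linux-x86-64.so.2"),
		"--library-path", libDir,
		filepath.Join(root, "dynbin", name),
	}
	return append(cmd, args...)
}

func main() {
	self, err := os.Executable()
	if err != nil {
		panic(err)
	}
	// A real shim would resolve symlinks and then exec this command
	// (for example with syscall.Exec); this sketch only prints it.
	fmt.Println(LoaderCommand(self, os.Args[1:]))
}
```

Nothing in the computed command depends on where the tarball was unpacked, which is the property the goals above ask for.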

Please check out the Zapps website for more info:

https://zapps.app/

Demos again...

Let's see how we built the Zapps you saw in the previous demo.

Warpforge Replay instructions say exactly how.

That was the "Catalog Site".

It's generated from a "Catalog", which is a Merkle tree in JSON (or CBOR).

That's how we communicate about packages in Warpforge.

In other words, that was the really high-level stuff.

...But low-level stuff is Cool.

Warpforge API Layers

... because there's a lot of things going on here.

- L0, Transport: WareID, Warehouses

- L1, Compute: Formula, Results

- L2, Graphs: Plot, Catalog, Release, Replay

- L3+, Generation: Starlark (?), Other Scripts, Whatever

<--- Simple ------------------ Fun --->

We started here...

...now let's zoom in here.


Stuff on the left...

... creates the stuff on the right.

... Creates software.

There are more tools

- `rio` -- Reproducible I/O

- `linkwarp` -- $PATH management (à la GNU Stow)

- `gittreehash` -- it's the primary key you want for shared lib dedup, funny enough

- `ldshim` -- part of the Zapp production system

# Langpkgs?

About language package managers

LPMs do approx 4 things:

- package suggestion

- version selection

- lockfiles (hopefully!)

- transport & file unpack

First two are legit & interesting.

The last two are boring.

Can we do them once, please?

BHAG (Big Hairy Audacious Goals)

- Warpforge becomes the obvious choice for packaging, shipping, and CI.

- Every repo on git{platform} has a `.warpforge/` dir, and it's always a familiar landmark.

- Language Pkgmgrs still exist... and focus on version selection and code integration. Can freely reuse Warpforge infrastructure (Catalogs, ware ID’ing, warehouses and transports) rather than custom solutions.