Empirical Analysis of Sim-and-Real Cotraining of Diffusion Policies for Planar Pushing from Pixels

IROS 2025

Adam Wei, Abhinav Agarwal, Boyuan Chen, Rohan Bosworth, Nicholas Pfaff, Russ Tedrake

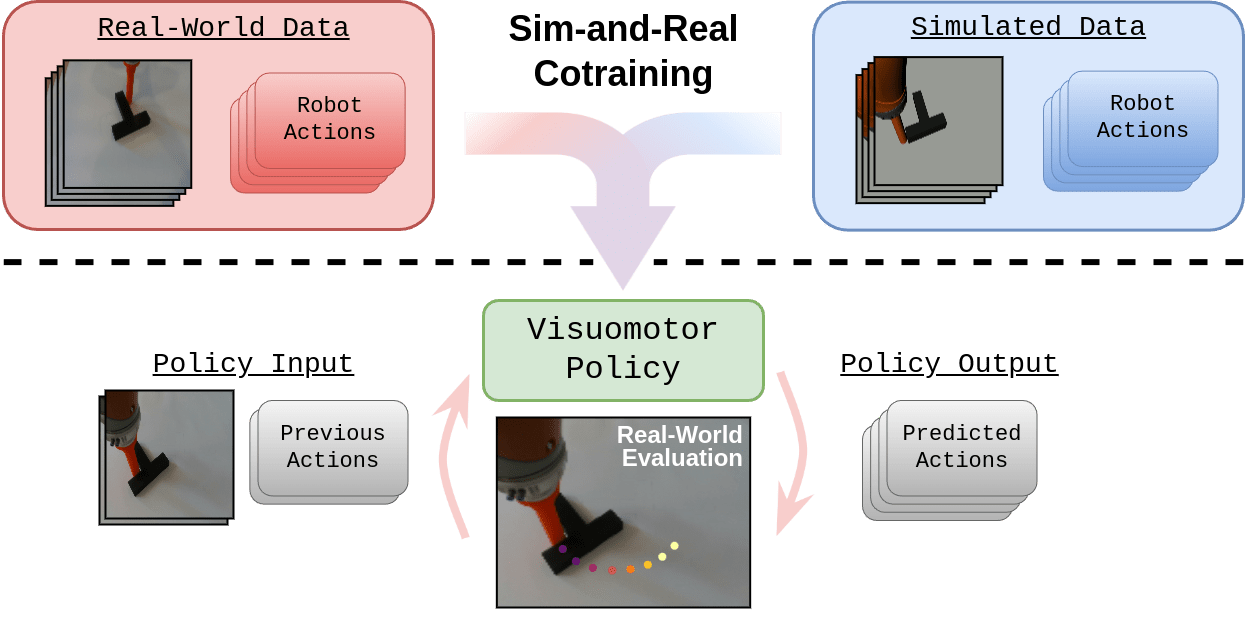

Sim-and-Real Cotraining

Cotrain from both simulated and real-world robot data to maximize a real-world performance objective

Planar Pushing From Pixels

50 real demos: success rate 10/20

50 real demos + 2000 sim demos: success rate 19/20

Video playback: 2x

Experimental Setup

Policy: Diffusion Policy [2]

Performance Objective: Success rate on a planar pushing task

[1] Graesdal et al., "Towards Tight Convex Relaxations for Contact-Rich Manipulation"

[2] Chi et al., "Diffusion Policy: Visuomotor Policy Learning via Action Diffusion"

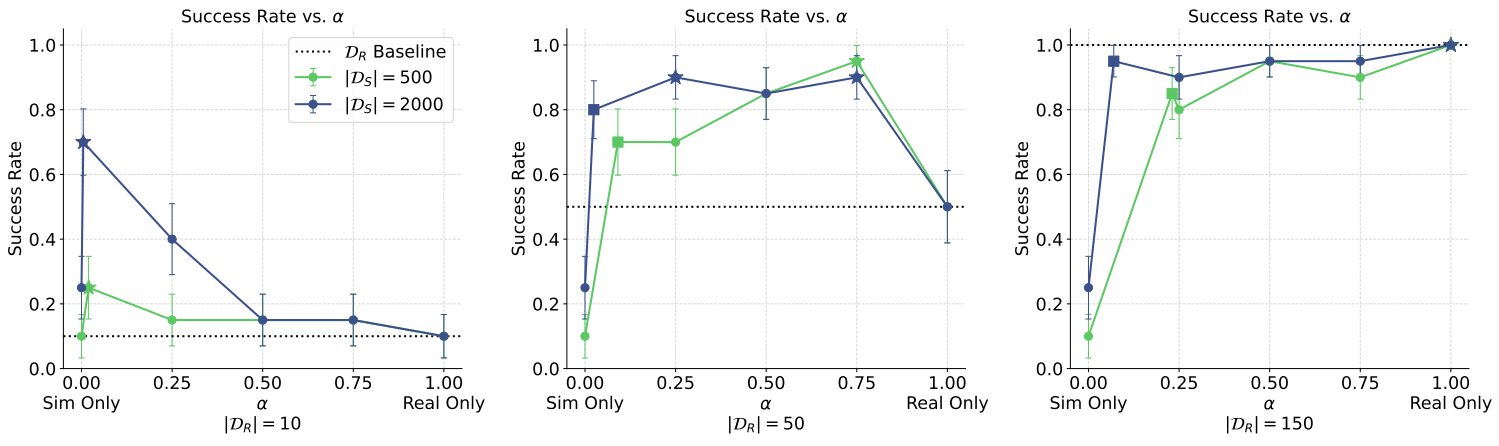

Data Scale and Data Mixtures

- Cotraining improves policy performance by up to 2-7x

- Scaling sim data improves performance and reduces sensitivity to the data mixing ratio \(\alpha\)
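One way to picture the role of \(\alpha\) is as a sampling weight between the two datasets. The sketch below is a minimal illustration, assuming \(\alpha\) is the probability that a training sample is drawn from real data (and \(1 - \alpha\) from sim data); the function names `sample_cotraining_batch` and `natural_alpha` are hypothetical and are not taken from the paper's code.

```python
import random


def natural_alpha(num_real, num_sim):
    """Mixing ratio that matches the raw dataset sizes (hypothetical helper)."""
    return num_real / (num_real + num_sim)


def sample_cotraining_batch(real_data, sim_data, alpha, batch_size, rng=None):
    """Draw a batch in which each item comes from real data with probability alpha.

    Assumption: alpha weights real data and (1 - alpha) weights sim data.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    rng = rng or random.Random()
    batch = []
    for _ in range(batch_size):
        # Pick the source dataset first, then a uniform sample from it.
        source = real_data if rng.random() < alpha else sim_data
        batch.append(rng.choice(source))
    return batch


# Placeholder datasets sized like the poster's example (50 real, 2000 sim).
real_demos = [("real", i) for i in range(50)]
sim_demos = [("sim", i) for i in range(2000)]

alpha = natural_alpha(len(real_demos), len(sim_demos))
batch = sample_cotraining_batch(real_demos, sim_demos, alpha, batch_size=64,
                                rng=random.Random(0))
```

Raising `alpha` above the value returned by `natural_alpha` upweights the scarcer real data; sweeping it is one way to probe the sensitivity mentioned above.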

Panels: Low, Medium, and High Real Data Regimes

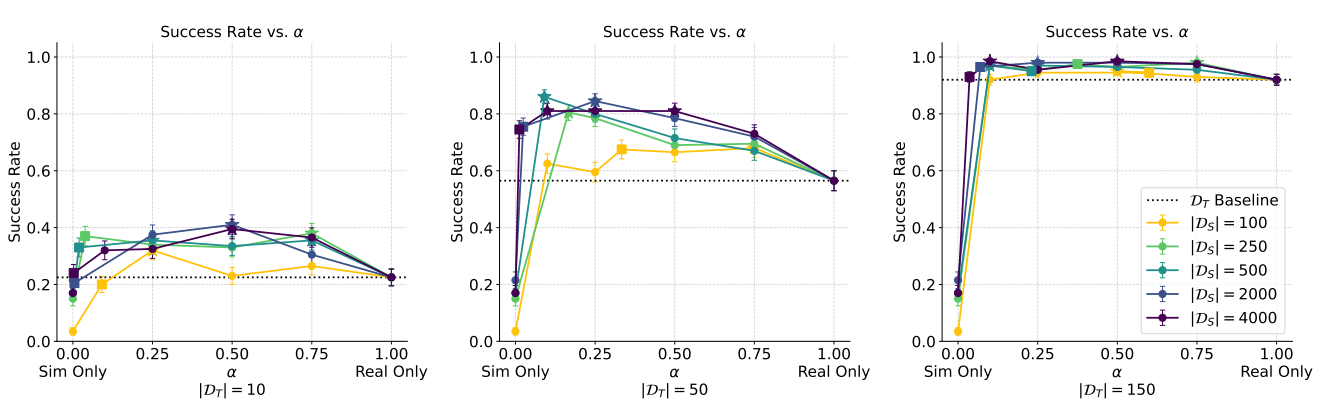

Data Scale and Data Mixtures

- Performance gains from scaling sim data plateau; additional real data raises the performance ceiling

Panels: Low, Medium, and High Real Data Regimes

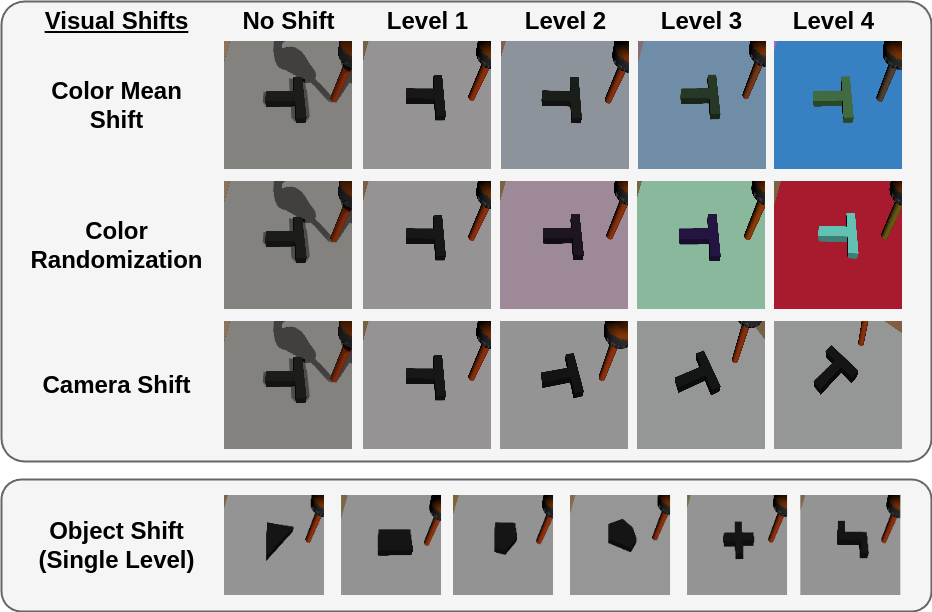

Distribution Shifts

How do visual, physics, and task shifts affect performance?

Reducing all shifts improves performance

Paradoxically, some visual shift is required for good performance!

Cotraining performance is more sensitive to physics and task shifts.

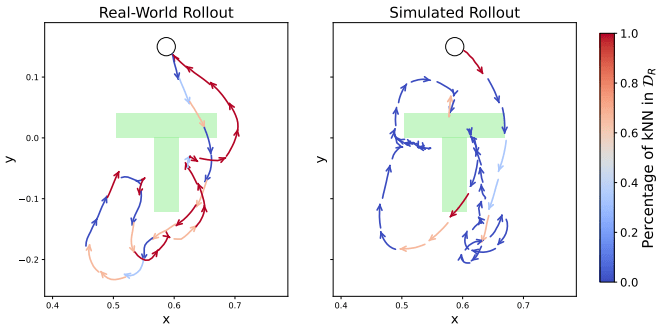

Sim-and-Real Distinguishability

Real-World Demo

- Fix orientation, then translation

- Sticking & sliding contacts

Policy Rollout (Cotrained)

- Similar to real-world demos

Simulated Demo

- Fix orientation and translation simultaneously

- Sticking contacts only

High-performing policies must learn to identify sim vs real, since the physics of each environment requires different actions

Video playback: 2x

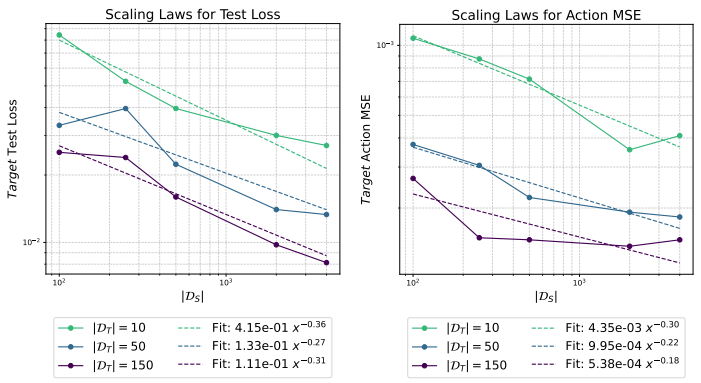

Positive Transfer

Sim data improves dataset coverage

Training on sim data reduces test loss on real data, following a power law
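A power-law trend of this kind can be checked with a straight-line fit in log-log space. The sketch below is a minimal illustration, assuming a pure power law of the form loss = a * N^(-b) with no irreducible offset, where N is the number of sim demos; the function name `fit_power_law` is hypothetical, and the numbers are synthetic placeholders, not results from the paper.

```python
import math


def fit_power_law(n_values, losses):
    """Least-squares fit of loss = a * n**(-b), done as a line fit in log-log space."""
    xs = [math.log(n) for n in n_values]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # log(loss) = log(a) - b * log(n), so a = exp(intercept) and b = -slope.
    return math.exp(intercept), -slope


# Synthetic placeholder data generated from a known power law (NOT measured values).
sim_demo_counts = [250, 500, 1000, 2000]
synthetic_losses = [0.5 * n ** -0.3 for n in sim_demo_counts]

a, b = fit_power_law(sim_demo_counts, synthetic_losses)
```

With real measurements, the fitted exponent `b` summarizes how quickly real-world test loss falls as sim data grows; a loss that flattens toward a nonzero floor would call for a model with an added constant term instead.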

Thank you!

Please refer to the paper for more experiments and details.