Python clean code

Florian Dambrine - Principal Engineer - GumGum

-

Getting Started

- Python Virtualenv / Pyenv & Pyenv-Virtualenv

- Project template with Cookiecutter

-

Project structure

- Code Style with Isort & Black

- Code Linter with Flake8 (PEP8)

- Code Testing with Pytest

- Code Automation tooling with Tox

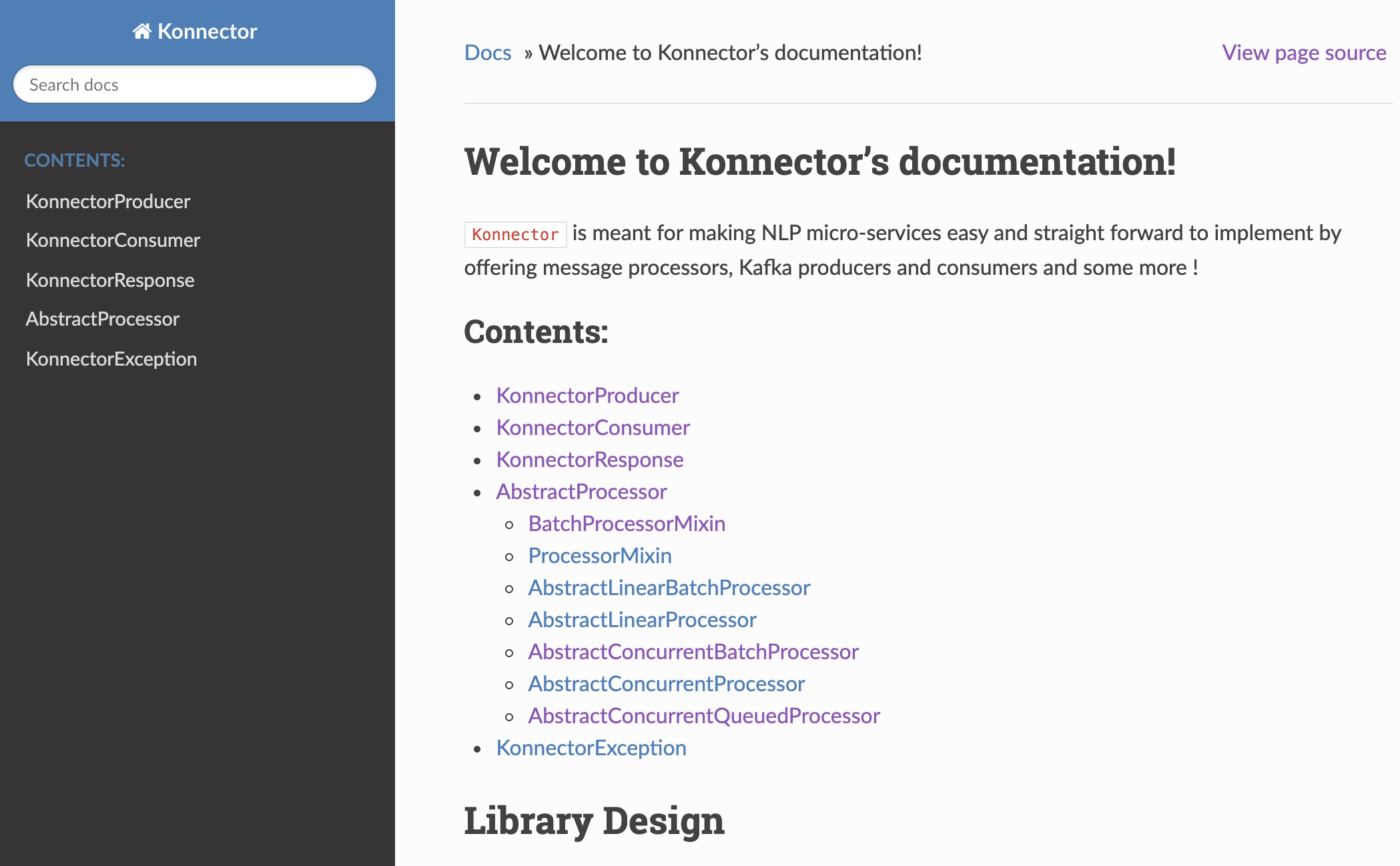

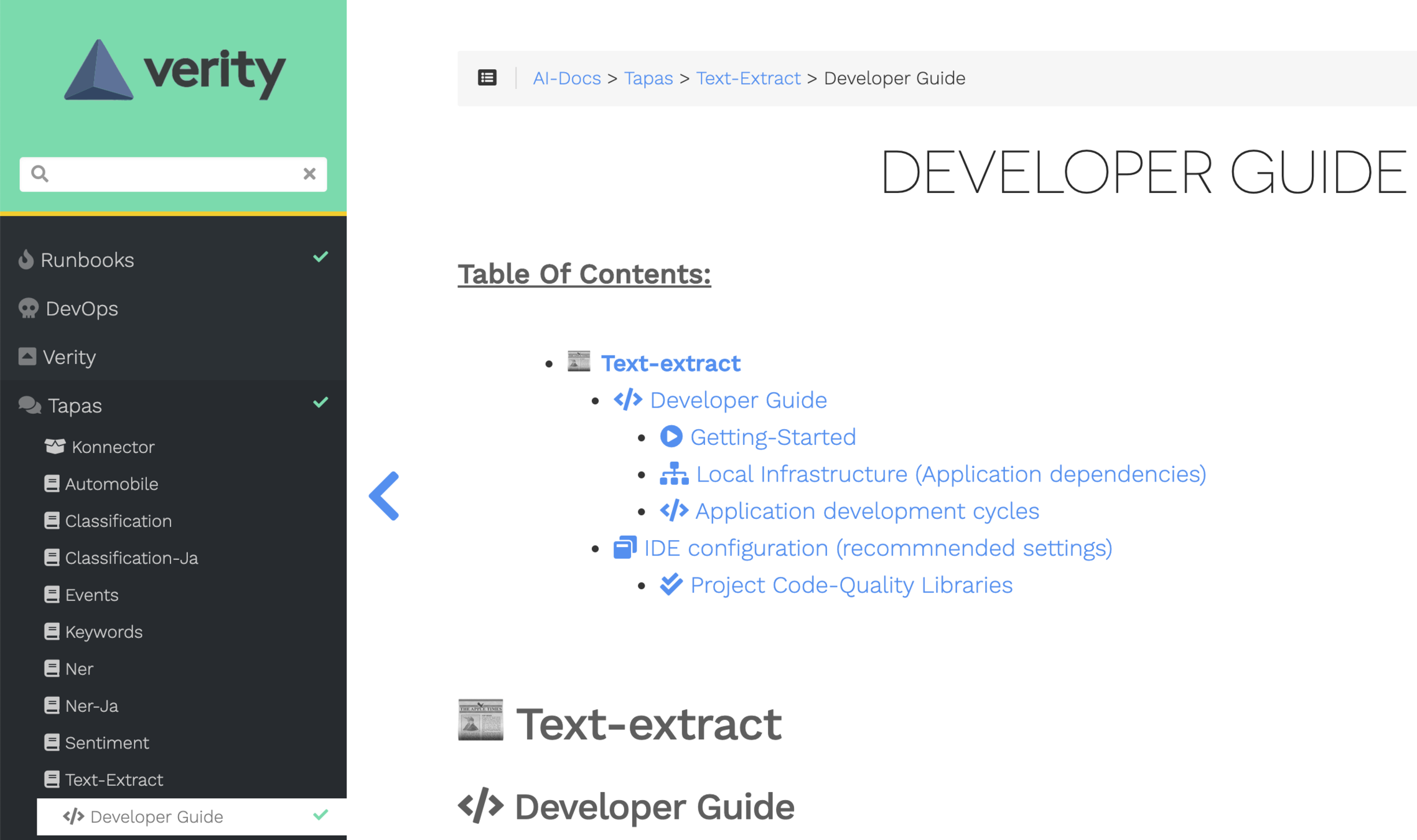

- Documentation with Sphinx

-

Microservice configuration handling

- The 12Factor Application & Dynaconf

> Isort

Python utility and library that sorts imports alphabetically and automatically separates them into sections. It provides a command-line utility, a Python library, and plugins for various editors to quickly sort all your imports. It currently cleanly supports Python 2.7 and 3.4+ without any dependencies.

Code style libraries

> Black

Black is the uncompromising Python code formatter. By using it, you agree to cede control over minutiae of hand-formatting. In return, Black gives you speed, determinism, and freedom from pycodestyle nagging about formatting. You will save time and mental energy for more important matters.

Code style libraries

> Flake8

Python library that wraps PyFlakes, pycodestyle, and Ned Batchelder's McCabe script. It is a great toolkit for checking your code base against coding style (PEP8), catching programming errors (like “library imported but unused” and “undefined name”), and measuring cyclomatic complexity.
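To make the “imported but unused” category concrete, here is a toy check built only on the standard library's `ast` module. It is a sketch of the idea, not how Flake8 or PyFlakes is implemented; the function name `unused_imports` and the sample source are illustrative assumptions.

```python
import ast


def unused_imports(source):
    """Return the names that `source` imports but never references."""
    tree = ast.parse(source)
    imported = set()
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                # `import a.b` binds the top-level name `a`
                imported.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.Name):
            # Attribute access such as `sys.argv` still contains Name("sys")
            used.add(node.id)
    return sorted(imported - used)


# Hypothetical module source: `os` is imported but never used.
SAMPLE = "import os\nimport sys\n\nprint(sys.argv)\n"
print(unused_imports(SAMPLE))  # ['os']
```

A real linter also tracks scopes, `__all__`, and star imports, which this sketch deliberately ignores.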

Code linter libraries

```
$ flake8 parse.py
scrapy/commands/parse.py:3:1: E302 expected 2 blank lines, found 0
scrapy/commands/parse.py:6:5: E303 too many blank lines (2)
scrapy/commands/parse.py:7:24: W291 trailing whitespace
scrapy/commands/parse.py:8:15: E221 multiple spaces before operator
scrapy/commands/parse.py:9:18: E701 multiple statements on one line (colon)
scrapy/commands/parse.py:10:13: E701 multiple statements on one line (colon)
scrapy/commands/parse.py:15:1: W391 blank line at end of file
```

> Pytest

The pytest framework makes it easy to write small tests, yet scales to support complex functional testing for applications and libraries.
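As a minimal sketch of what a "small test" looks like: pytest collects functions whose names start with `test_` and relies on plain `assert` statements, so no test class or special assertion API is needed. The helper `build_topic` and the way it joins prefix, name, and postfix are hypothetical, chosen only for illustration.

```python
def build_topic(prefix, name, postfix):
    """Hypothetical helper: compose a topic name from its three parts."""
    return f"{prefix}{name}{postfix}"


def test_build_topic_with_postfix():
    # A plain assert is all pytest needs; on failure it reports both operands.
    assert build_topic("ds_", "text_extract", "_dev") == "ds_text_extract_dev"


def test_build_topic_with_empty_postfix():
    assert build_topic("ds_", "text_extract", "") == "ds_text_extract"
```

Saved in a file named like `test_topics.py`, these would be discovered and run by invoking `pytest` in the project directory.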

Code integration testing

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
import logging

from dynaconf import settings

from app.processors import TextExtractProcessor

logger = logging.getLogger(__name__)


class TestTextExtractProcessor:
    def test_process(self, processor, valid_message):
        results = processor._process(valid_message)
        # We should expect at least 3 responses per input message (dist, info, next)
        assert len(results) >= 3

    def test_on_failure(self, valid_message):
        # Use a really low timeout to trigger _on_failure handler
        results = TextExtractProcessor(max_workers=1, timeout=0.1).process(
            [valid_message]
        )
        # Expects only 1 response as the timeout ensures we won't process
        assert len(results) == 1
        assert results[0].cancelled is True
        assert results[0].topic is None
```

conftest.py

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
import json

import boto3
import pytest
from moto import mock_s3


@pytest.fixture
def valid_message():
    """Serialized input message shared by the processor tests."""
    m = {
        "destination": "https://localhost/page/tapas/save?pageUrl=blank",  # noqa: E501
        "request_sent_ts": 1578224176353,
        "requester": "verity",
        "task": "process",
        "tid": "isverity",
        "url": "https://www.neowin.net/news/dell-xps-13-review-the-first-hexa-core-u-series-processor-delivers",
    }
    return json.dumps(m)


# pytest.yield_fixture is deprecated; a plain fixture supports yield.
@pytest.fixture
def s3_client():
    """Mock S3 client"""
    with mock_s3():
        s3 = boto3.client("s3")
        yield s3
```

> Tox

Aims to automate and standardize testing in Python. It is part of a larger vision of easing the packaging, testing and release process of Python software.

Code testing automation libraries

- lint
- checkstyle
- docs
- coverage
- py37
- py38

> Makefile

Aims to provide a simplified interface for running a project's commonly used commands (build / run / dependencies / docker run). Think of it as a list of shortcuts that make your life easier.

Code testing automation tools

```makefile
.PHONY: dev tests lint checkstyle coverage docs cluster nltk

run:
ifeq ($(FORCE),)
	docker-compose -f gumref/docker-compose.yml up $(DAEMON)
else
	docker-compose -f gumref/docker-compose.yml up --build
endif

nltk:
	@echo 🐍 Downloading Python NLTK data...
	python -m nltk.downloader -d tests/data/nltk-data/ punkt wordnet omw -q

tests:
	$(MAKE) lint
	$(MAKE) checkstyle
	$(MAKE) coverage

lint:
	@echo 💠 Linting code...
	tox -e lint

checkstyle:
	@echo ✅ Validating checkstyle...
	tox -e checkstyle

docs:
	@echo 📚 Generate documentation using sphinx...
	tox -e docs
```

```
$ make cluster
$ make run
```

> Sphinx

Sphinx is a tool that makes it easy to create intelligent and beautiful documentation, written by Georg Brandl and licensed under the BSD license.

Code documentation

- coverage.mle.va.sx.ggops.com/<PROJECT>/<VERSION>/docs/index.html
- coverage.mle.va.sx.ggops.com/<PROJECT>/<VERSION>/coverage/index.html

> Pre-commit

Git hook scripts are useful for identifying simple issues before submission to code review. We run our hooks on every commit to automatically point out issues in code such as missing semicolons, trailing whitespace, and debug statements.

> Dynaconf

A layered configuration system for Python applications - with strong support for 12-factor applications and extensions for Flask and Django.
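The "layered" idea can be sketched with plain dictionaries: values in the active environment's section override those in `[default]`, and anything the environment does not set falls through. This is a simplified illustration using only the standard library, not Dynaconf's actual implementation; the `resolve` function is hypothetical, and the values mirror a subset of the `settings.toml` shown on this slide.

```python
# Each top-level key stands in for a section of settings.toml.
LAYERS = {
    "default": {
        "service_name": "text_extract",
        "service_kafka_topics_prefix": "ds_",
        "service_kafka_topics_postfix": "_dev",
    },
    "staging": {"service_kafka_topics_postfix": "_stage"},
    "production": {"service_kafka_topics_postfix": ""},
}


def resolve(layers, env):
    """Start from the default layer, then apply the chosen environment on top."""
    merged = dict(layers["default"])
    merged.update(layers.get(env, {}))
    return merged


staging = resolve(LAYERS, "staging")
print(staging["service_kafka_topics_postfix"])  # _stage
print(staging["service_name"])  # text_extract (inherited from default)
```

In the real library, the active layer is selected through the `ENV_FOR_DYNACONF` environment variable rather than a function argument.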

Configuration handling

settings.toml

```toml
[default]
service_name = "text_extract"
dragnet_enabled = true
s3_upload_enabled = true

# Service In/Out Topics
service_kafka_topics_prefix = "ds_"
service_kafka_topics_postfix = "_dev"

[staging]
service_kafka_topics_postfix = "_stage"
service_kafka_input_topics = ["text_extract"]
service_kafka_output_topics__next = [
    "keywords",
    "automobile",
    "classification",
    "events",
    "ner",
    "threat",
    "sentiment_short"
]

[production]
service_kafka_topics_postfix = ""
service_kafka_input_topics = ["text_extract"]
service_kafka_output_topics__next = [
    "keywords",
    "automobile",
    "classification",
    "events",
    "ner",
    "threat",
    "sentiment_short"
]
```

docker-compose.yml

```yaml
---
version: "3"
services:
  tapas-text-extract:
    image: tapas-text-extract
    build:
      context: ../
    environment:
      # Dynaconf settings
      - ENV_FOR_DYNACONF=production
      # Dynaconf overrides
      - TEXT_EXTRACT_PROCESSOR__workers=100
      - TEXT_EXTRACT_CONSUMER__batch_size=800
      # -------------------------------------------------
      # Please note the use of __ to override dict member
      #
      # Overrides [production.processor]
      # {
      #     "workers": 100,
      #     "timeout": 20
      # }
      # -------------------------------------------------
```
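The `__` convention noted in the compose file comments can be sketched as follows: an environment variable such as `TEXT_EXTRACT_PROCESSOR__workers` targets one member of a nested setting and leaves its siblings untouched. This is a standard-library approximation, not Dynaconf's own parsing. The `apply_env_overrides` function, the `TEXT_EXTRACT_` prefix handling, the integer conversion, and the starting value of `workers` are all assumptions made for the example.

```python
import copy


def apply_env_overrides(settings, environ, prefix="TEXT_EXTRACT_"):
    """Return a copy of `settings` with PREFIX_KEY__member=value overrides applied."""
    merged = copy.deepcopy(settings)
    for key, raw in environ.items():
        if not key.startswith(prefix):
            continue  # e.g. ENV_FOR_DYNACONF is not a setting override
        # "PROCESSOR__workers" -> ["processor", "workers"]
        path = [part.lower() for part in key[len(prefix):].split("__")]
        node = merged
        for part in path[:-1]:
            node = node.setdefault(part, {})
        # Environment values are strings; convert plain integers, keep the rest.
        node[path[-1]] = int(raw) if raw.isdigit() else raw
    return merged


# Assumed file-based settings before overrides (workers=10 is illustrative).
file_settings = {"processor": {"workers": 10, "timeout": 20}}
environ = {
    "ENV_FOR_DYNACONF": "production",
    "TEXT_EXTRACT_PROCESSOR__workers": "100",
    "TEXT_EXTRACT_CONSUMER__batch_size": "800",
}

print(apply_env_overrides(file_settings, environ))
# {'processor': {'workers': 100, 'timeout': 20}, 'consumer': {'batch_size': 800}}
```

Only `workers` changes inside `processor`; `timeout` keeps its file-based value, which matches the behavior the compose file comment describes.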