Large Behavior Models

(Foundation models for dexterous manipulation)

Russ Tedrake

CSAIL Alliances Meeting

April 4, 2024

Goal: Foundation Models for Manipulation

LLMs \(\Rightarrow\) VLMs \(\Rightarrow\) LBMs

- LLMs: large language models

- VLMs: visually-conditioned language models

- LBMs: large behavior models (\(\sim\) VLA, vision-language-action; \(\sim\) EFM, embodied foundation model)

Q: Is predicting actions fundamentally different?

Why actions (for dexterous manipulation) could be different:

- Actions are continuous (language tokens are discrete)

- Have to obey physics, deal with stochasticity

- Feedback / stability

- ...
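To make the first bullet concrete, here is a minimal sketch of one common way to bridge the gap: uniformly binning a continuous action into discrete tokens. The function names, the action range `[-1, 1]`, and the 256-bin vocabulary are illustrative assumptions, not something specified in the talk.

```python
# Illustrative sketch only: names, range, and bin count are assumptions.

def action_to_token(a, low=-1.0, high=1.0, num_bins=256):
    """Map a continuous scalar action to an integer token by uniform binning."""
    a = min(max(a, low), high)           # clip to the assumed action range
    frac = (a - low) / (high - low)      # normalize to [0, 1]
    # The upper endpoint would land in bin `num_bins`, so clamp it.
    return min(int(frac * num_bins), num_bins - 1)


def token_to_action(token, low=-1.0, high=1.0, num_bins=256):
    """Map a token back to the center of its bin."""
    width = (high - low) / num_bins
    return low + (token + 0.5) * width


action = 0.3
token = action_to_token(action)
reconstructed = token_to_action(token)
print(token, reconstructed, abs(reconstructed - action))
```

The round trip is lossy: the reconstruction error is bounded by half a bin width, which is one reason continuous actions may not behave exactly like language tokens.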

Should we expect similar generalization and scaling laws?

Recent success in (single-task) behavior cloning suggests that these are not blockers, but we don't have internet-scale action data yet.
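As a reminder of what behavior cloning means structurally, here is a toy sketch: supervised regression from observations to expert actions. The `expert_policy`, the scalar observation, and the linear policy class are hypothetical stand-ins chosen for brevity; real manipulation policies use far more expressive models and image observations.

```python
# Toy behavior cloning: fit a policy to (observation, action) pairs from an expert.
# Everything here (expert, data, linear policy) is a hypothetical illustration.

def expert_policy(obs):
    """Hypothetical expert: a proportional controller driving obs toward zero."""
    return -2.0 * obs


def collect_demonstrations(observations):
    """Record the expert's action at each observation."""
    return [(o, expert_policy(o)) for o in observations]


def fit_linear_policy(demos):
    """Ordinary least-squares fit of action = gain * obs + bias."""
    n = len(demos)
    mean_o = sum(o for o, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((o - mean_o) * (a - mean_a) for o, a in demos)
    var = sum((o - mean_o) ** 2 for o, _ in demos)
    gain = cov / var
    bias = mean_a - gain * mean_o
    return gain, bias


demos = collect_demonstrations([-1.0, -0.5, 0.0, 0.5, 1.0])
gain, bias = fit_linear_policy(demos)
print(gain, bias)
```

The learned policy is only as good as the coverage of the demonstrations, which is why the amount and diversity of action data matters.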

The Robot Data Diet

Big data, big transfer

Small data, no transfer

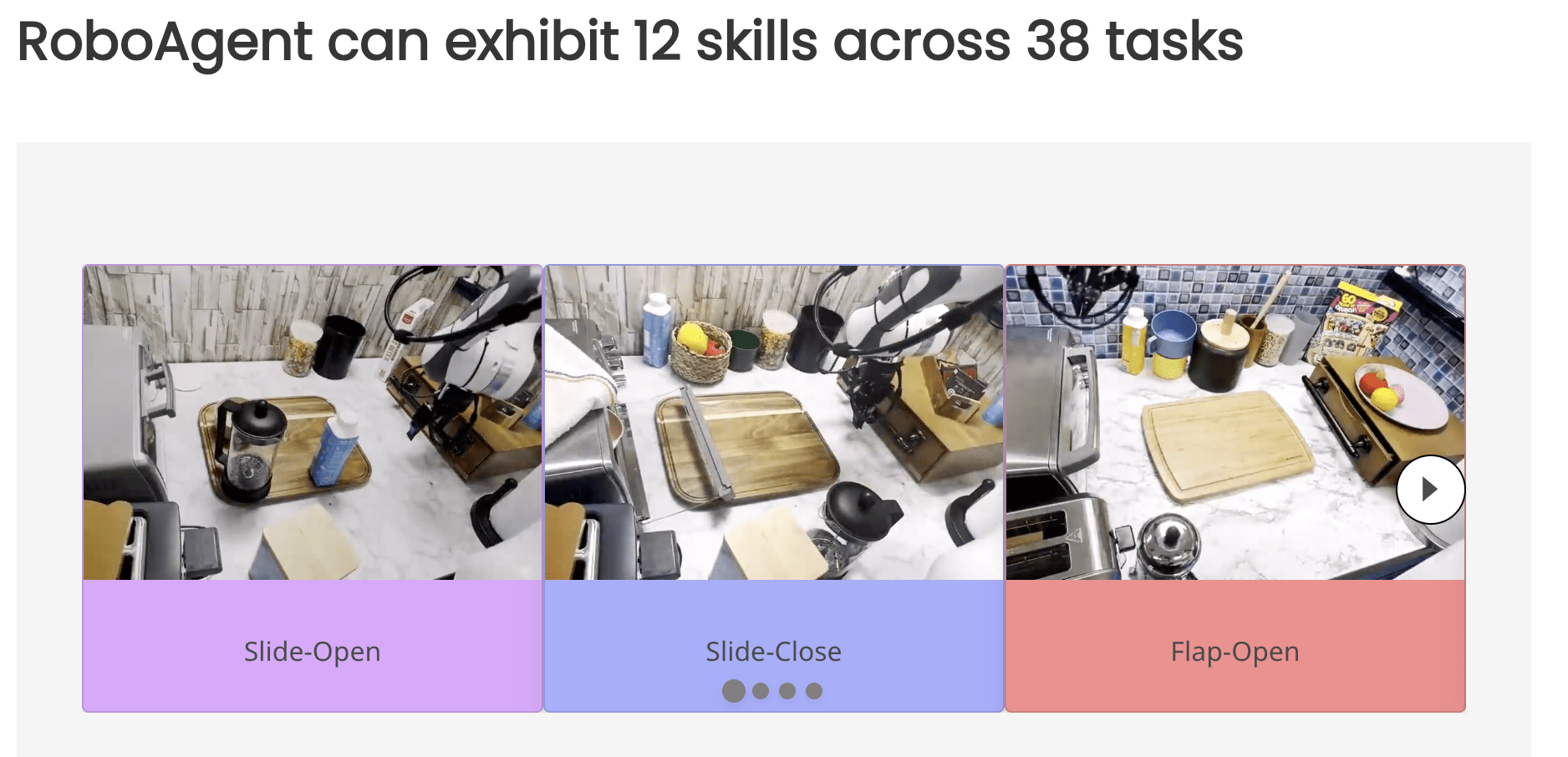

Ego-Exo

robot teleop

(the "transfer learning bet")

Open-X

simulation rollouts

World-class simulator for robotics

NVIDIA selected Drake and MuJoCo (for potential inclusion in Omniverse)

"Hydroelastic contact" as implemented in Drake

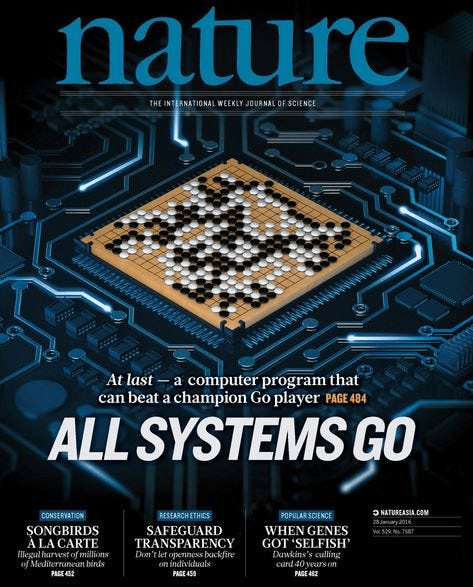

The AlphaGo Playbook

- Step 1: Behavior Cloning

  - from human expert games

- Step 2: Self-play

  - Policy network

  - Value network

  - Monte Carlo tree search (MCTS)
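To illustrate how the three Step 2 ingredients fit together, here is a minimal sketch of a PUCT-style action selection rule of the kind used in AlphaGo-family tree search: the policy network supplies a prior for each action, value estimates accumulate in the tree, and the search picks the action maximizing the mean value plus an exploration bonus. The data layout, the function name `puct_select`, and the constant `c_puct=1.0` are assumptions for illustration.

```python
import math

# Sketch of PUCT-style selection at a single tree node.
# Data layout and constants are illustrative assumptions.

def puct_select(children, c_puct=1.0):
    """Pick the action maximizing Q + U.

    children maps action -> {"prior": P, "visits": N, "value_sum": W}, where
    P would come from a policy network and W accumulates value estimates.
    """
    total_visits = sum(stats["visits"] for stats in children.values())

    def score(stats):
        # Q: mean value of this action so far (0 if never visited).
        q = stats["value_sum"] / stats["visits"] if stats["visits"] > 0 else 0.0
        # U: exploration bonus, large for high-prior, rarely visited actions.
        u = c_puct * stats["prior"] * math.sqrt(total_visits) / (1 + stats["visits"])
        return q + u

    return max(children, key=lambda action: score(children[action]))


children = {
    "a": {"prior": 0.6, "visits": 10, "value_sum": 4.0},
    "b": {"prior": 0.3, "visits": 2, "value_sum": 1.2},
    "c": {"prior": 0.1, "visits": 0, "value_sum": 0.0},
}
print(puct_select(children))
```

In this example the less-visited action with a good mean value wins over the heavily visited high-prior action, which is the exploration behavior the bonus term is meant to produce.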

Scaling Monte Carlo Tree Search

"Graphs of Convex Sets" (GCS)

Online classes (videos + lecture notes + code)

http://manipulation.mit.edu