Neural networks

Agenda:

- What is a neural network?

- Structure of a simple neural network

- Training a neural network

- Convolutional neural network


What is a neural network?

Maybe this?

...or this?

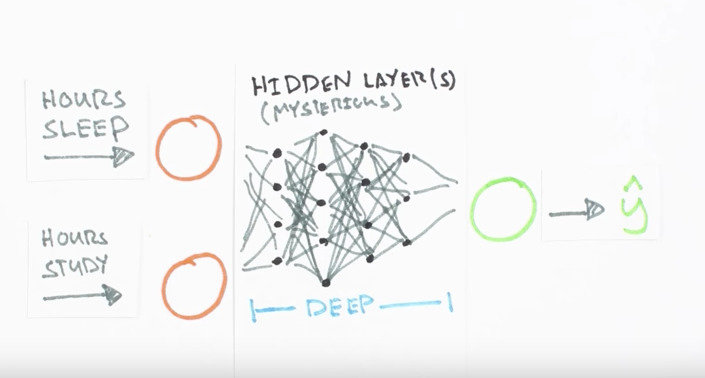

But it is all much simpler

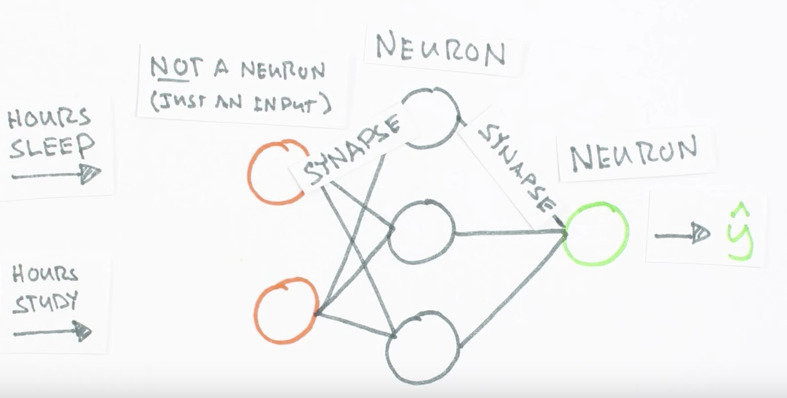

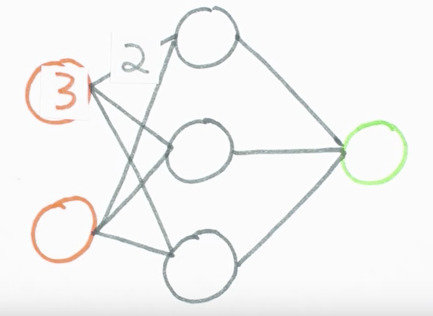

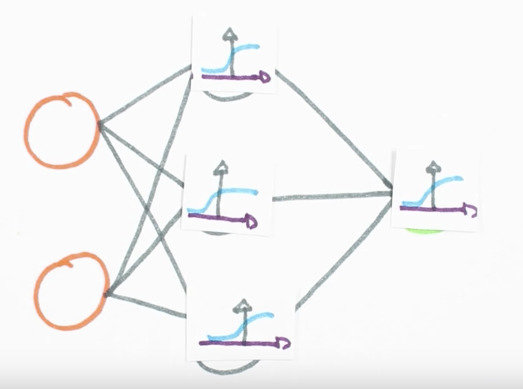

(a simple neural network with 2 inputs and 5 hidden layers)

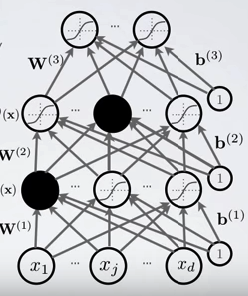

Structure of a neural network

Structure of NN

Synapse

A synapse takes the value from its input, multiplies it by a specific weight, and outputs the result.
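A minimal Python sketch of a single synapse, assuming a scalar input and a scalar weight. The `Synapse` class and its `forward` method are hypothetical names chosen for illustration, not taken from the slides.

```python
class Synapse:
    """A single connection that scales its input by a weight (hypothetical class)."""

    def __init__(self, weight):
        self.weight = weight

    def forward(self, x):
        # Take the value from the input and multiply it by this synapse's weight.
        return x * self.weight


synapse = Synapse(weight=3.0)
print(synapse.forward(2.0))  # 6.0
```

During training it is `weight` that gets adjusted; the multiplication itself never changes.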

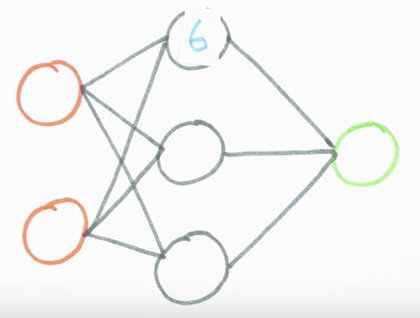

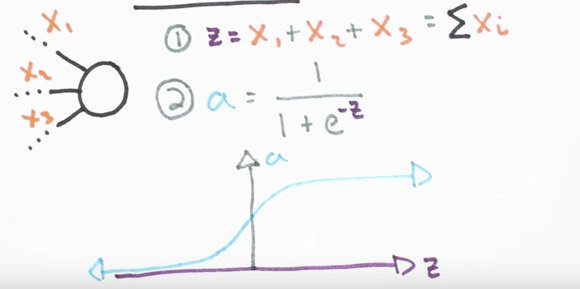

Neurons

Their job is to add together the outputs of the incoming synapses and apply the activation function.

(sigmoid activation function)
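A sketch of one neuron in plain Python, assuming the sigmoid activation named above and no bias term (none is mentioned on the slide). The function names `sigmoid` and `neuron` are illustrative.

```python
import math


def sigmoid(z):
    # Logistic sigmoid: squashes any real number into the interval (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights):
    # Each synapse multiplies its input by its weight; the neuron adds
    # the results together and passes the sum through the activation.
    total = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(total)


print(neuron([1.0, -1.0], [0.5, 0.5]))  # weighted sum is 0, so 0.5
```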

Neurons

Then apply the activation function to every neuron.
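The same idea extended to whole layers gives a forward pass through the network. This is a minimal pure-Python sketch; the layer sizes (2 inputs, 3 hidden neurons, 1 output) and the weight values are arbitrary assumptions for illustration, and biases are omitted as in the description above.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights):
    """One fully connected layer; weights[j][i] connects input i to neuron j."""
    outputs = []
    for neuron_weights in weights:
        # Weighted sum over all synapses entering this neuron.
        z = sum(x * w for x, w in zip(inputs, neuron_weights))
        # Apply the activation function to every neuron.
        outputs.append(sigmoid(z))
    return outputs


def network_forward(inputs, layers):
    # The outputs of each layer become the inputs of the next one.
    activations = inputs
    for weights in layers:
        activations = layer_forward(activations, weights)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden = [[0.1, 0.4], [-0.3, 0.2], [0.5, -0.6]]
output = [[0.7, -0.2, 0.3]]
print(network_forward([1.0, 0.5], [hidden, output]))
```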

Training a neural network

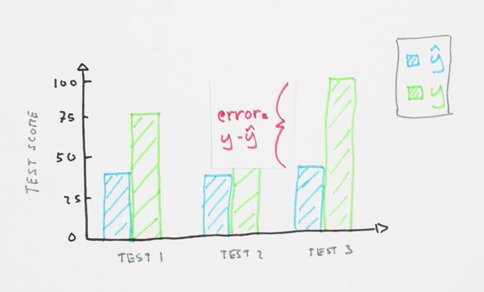

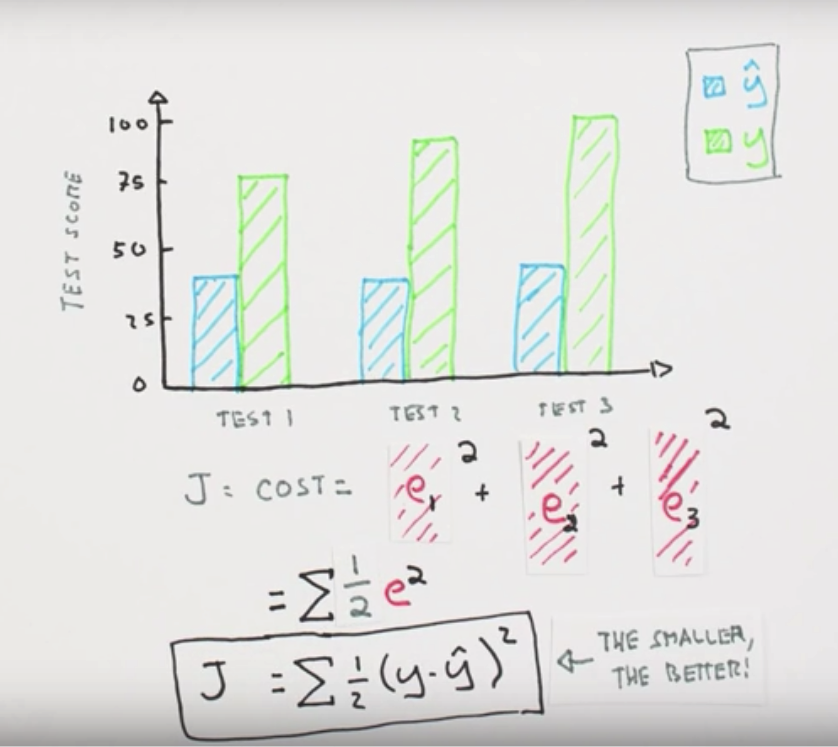

Error of NN

Cost function
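The exact cost function on the slide is only in an image, so this sketch assumes a common choice: half the sum of squared errors between targets and predictions. The 1/2 factor is a convention that cancels when the cost is differentiated. The function name `cost` is illustrative.

```python
def cost(targets, predictions):
    # Half the sum of squared differences between what the network
    # should output (y) and what it actually outputs (y_hat).
    return 0.5 * sum((y - y_hat) ** 2 for y, y_hat in zip(targets, predictions))


print(cost([1.0, 0.0], [0.5, 0.5]))  # 0.5 * (0.25 + 0.25) = 0.25
```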

Training rule

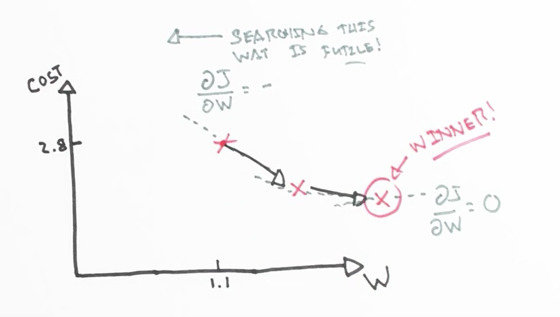
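For a single sigmoid neuron the training rule can be written out with the chain rule. This derivation assumes the squared-error cost $J = \tfrac{1}{2}(y - \hat{y})^2$, inputs $x_i$, weights $w_i$, and a learning rate $\eta$; these symbols are chosen here and may differ from the slide's image. It uses $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$.

$$
\begin{aligned}
z &= \sum_i w_i x_i, \qquad \hat{y} = \sigma(z), \qquad J = \tfrac{1}{2}(y - \hat{y})^2 \\
\frac{\partial J}{\partial w_i} &= \frac{\partial J}{\partial \hat{y}}\,\frac{\partial \hat{y}}{\partial z}\,\frac{\partial z}{\partial w_i} = -(y - \hat{y})\,\hat{y}\,(1 - \hat{y})\,x_i \\
w_i &\leftarrow w_i - \eta\,\frac{\partial J}{\partial w_i}
\end{aligned}
$$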

Gradient descent

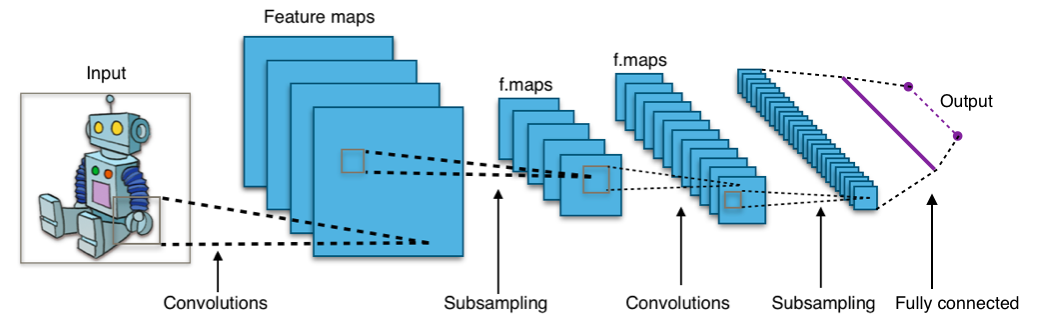
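A sketch of gradient descent training a single sigmoid neuron on logical OR, assuming the squared-error cost and the chain-rule gradient of a sigmoid neuron. The data set, learning rate, epoch count, and the constant third input used as a bias are all illustrative assumptions, not values from the slides.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, x):
    # Single sigmoid neuron: weighted sum of inputs, then activation.
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))


def cost(weights, data):
    # Half the sum of squared errors over the whole data set.
    return 0.5 * sum((y - predict(weights, x)) ** 2 for x, y in data)


def train(data, learning_rate=1.0, epochs=10000):
    weights = [0.0] * len(data[0][0])
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for x, y in data:
            y_hat = predict(weights, x)
            # dJ/dw_i = -(y - y_hat) * y_hat * (1 - y_hat) * x_i  (chain rule)
            delta = -(y - y_hat) * y_hat * (1.0 - y_hat)
            for i, xi in enumerate(x):
                grad[i] += delta * xi
        # Step every weight against its gradient, scaled by the learning rate.
        weights = [w - learning_rate * g for w, g in zip(weights, grad)]
    return weights


# Logical OR; the constant third input 1.0 plays the role of a bias.
OR_DATA = [([0.0, 0.0, 1.0], 0.0), ([0.0, 1.0, 1.0], 1.0),
           ([1.0, 0.0, 1.0], 1.0), ([1.0, 1.0, 1.0], 1.0)]

w = train(OR_DATA)
print([round(predict(w, x)) for x, _ in OR_DATA])
```

This is full-batch gradient descent: the gradients of all four examples are summed before each weight update.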

Convolutional neural network

Typical CNN architecture

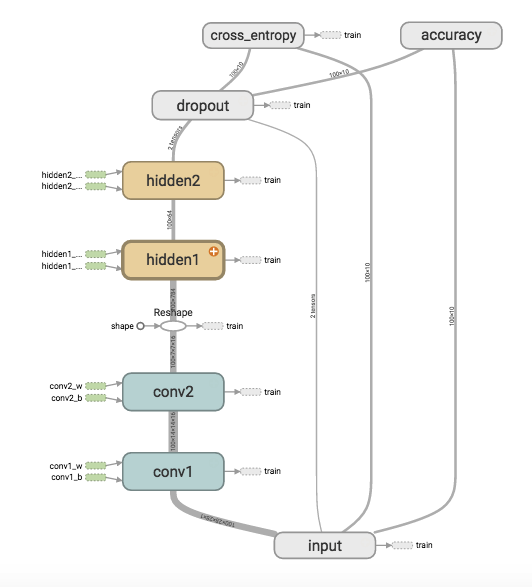

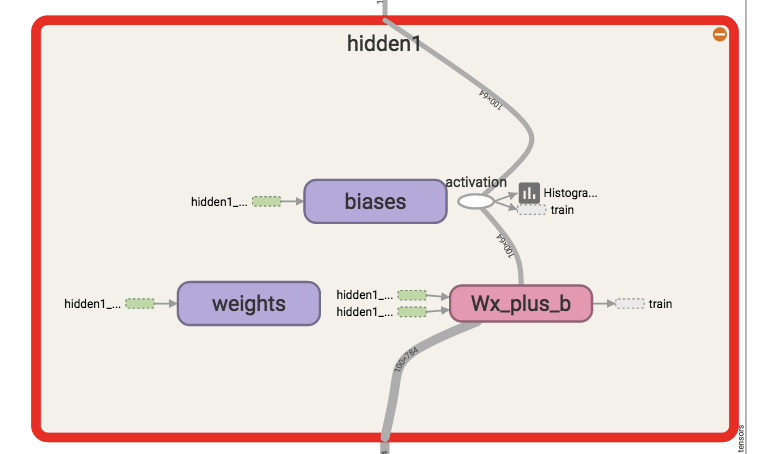

Graph of CNN

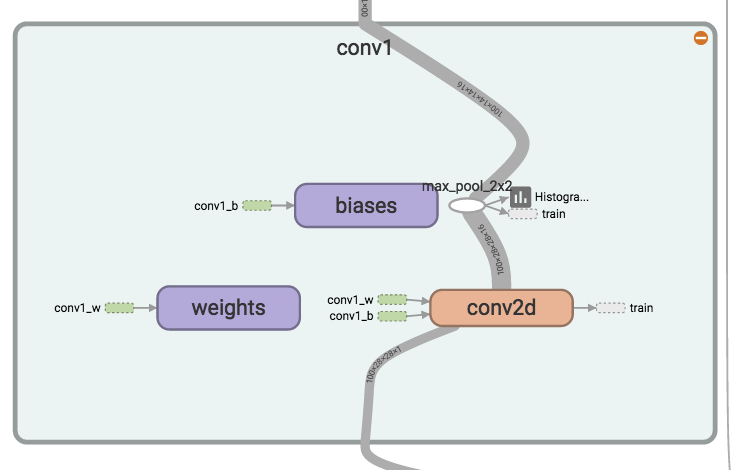

Convolution layer
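The core operation of a convolution layer is sliding a small kernel of weights over the input and taking a weighted sum at each position. This pure-Python sketch assumes a single-channel input, stride 1, and no padding ("valid" mode); like most CNN libraries it does not flip the kernel, so strictly speaking it computes a cross-correlation. The function name `conv2d` and the example values are illustrative.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (unflipped kernel, stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            # The same kernel weights are reused at every position.
            out[i][j] = sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kh)
                for b in range(kw)
            )
    return out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
print(conv2d(image, kernel))  # [[-4, -4], [-4, -4]]
```

In a real layer the kernel values are learned by the same gradient-based training as the other weights.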

Hidden layer

Dropout

Idea:

"Cripple" the neural network by randomly removing hidden units during training
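A minimal sketch of this idea, assuming the common "inverted dropout" variant parameterised by a keep probability: each hidden unit survives with probability `keep_prob`, and survivors are scaled by `1 / keep_prob` so the expected activation stays the same and no rescaling is needed at test time. The function name and example values are illustrative.

```python
import random


def dropout(activations, keep_prob, rng=random):
    """Inverted dropout over a list of hidden-unit activations (training only)."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    # Keep each unit with probability keep_prob, otherwise zero it out.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


rng = random.Random(0)
hidden = [0.2, 0.9, 0.4, 0.7, 0.1]
print(dropout(hidden, keep_prob=0.5, rng=rng))
```

With `keep_prob=1` nothing is dropped, which corresponds to training without dropout.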

Neural network research

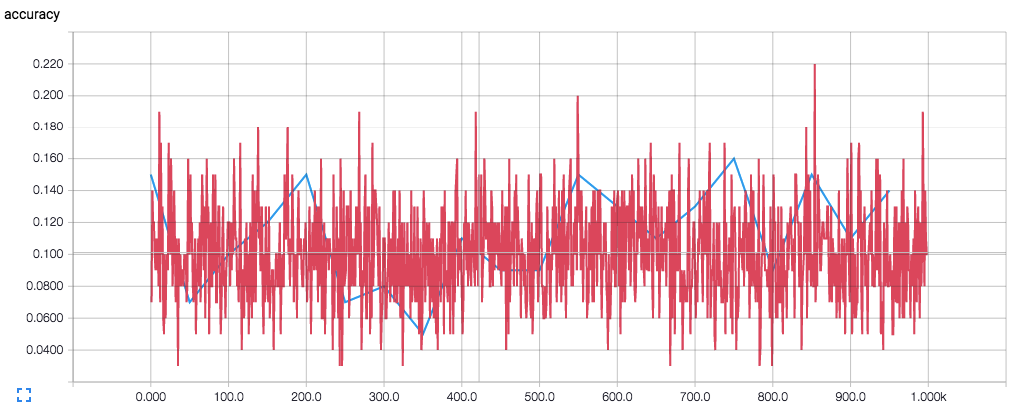

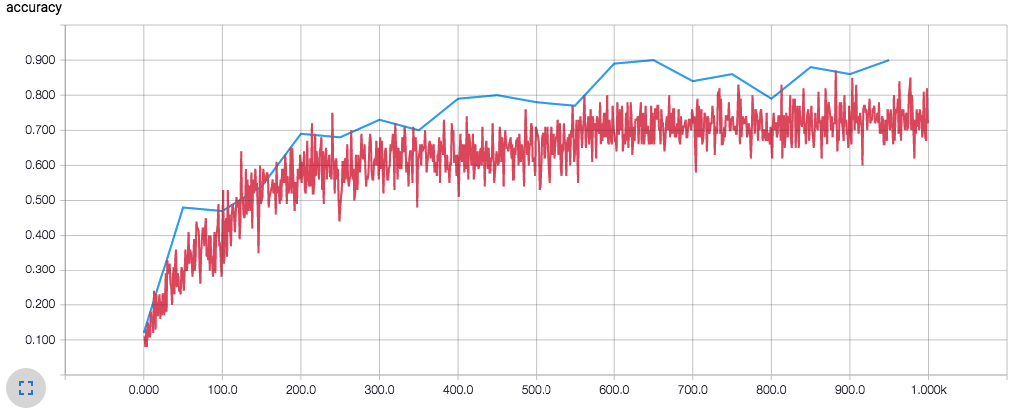

Dropout

Keep dropout: 0.5

Final result: 0.2

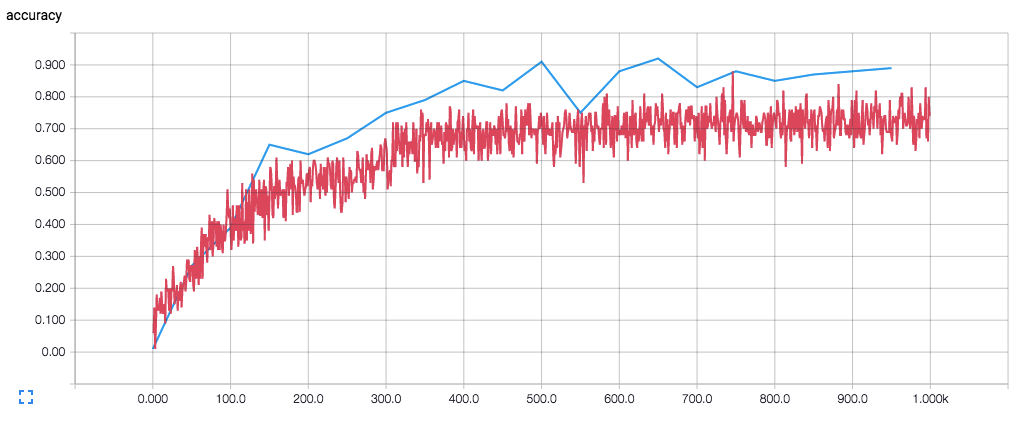

Keep dropout: 0.9

Final result: 0.9

Keep dropout: 1

Final result: 0.8

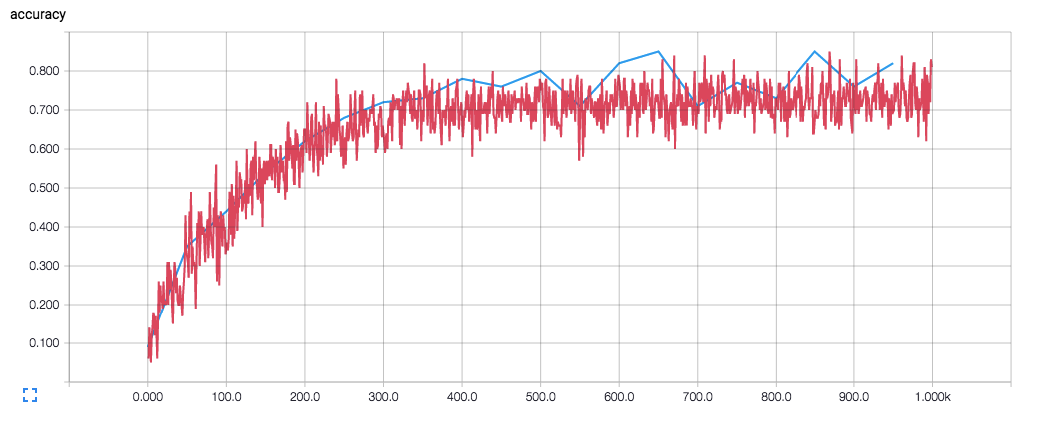

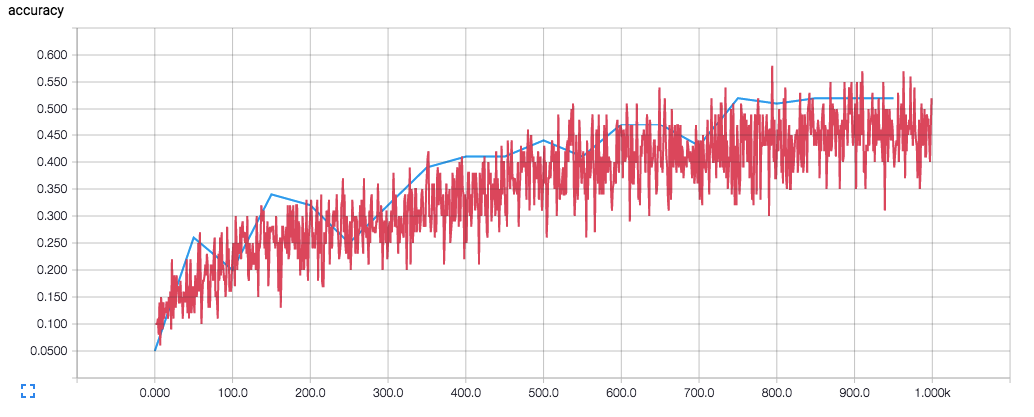

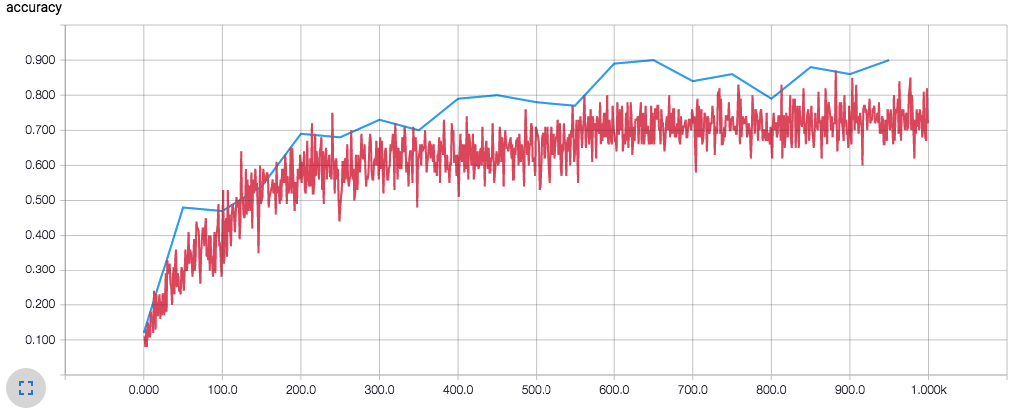

Learning rate

Learning rate: 0.001

Final result: 0.55

Learning rate: 0.005

Final result: 0.9

Learning rate: 0.05

Final result: 0.2

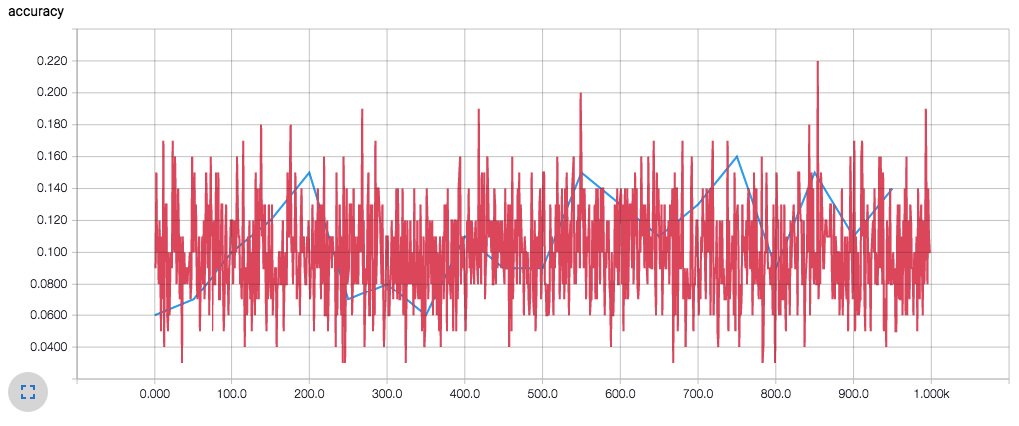

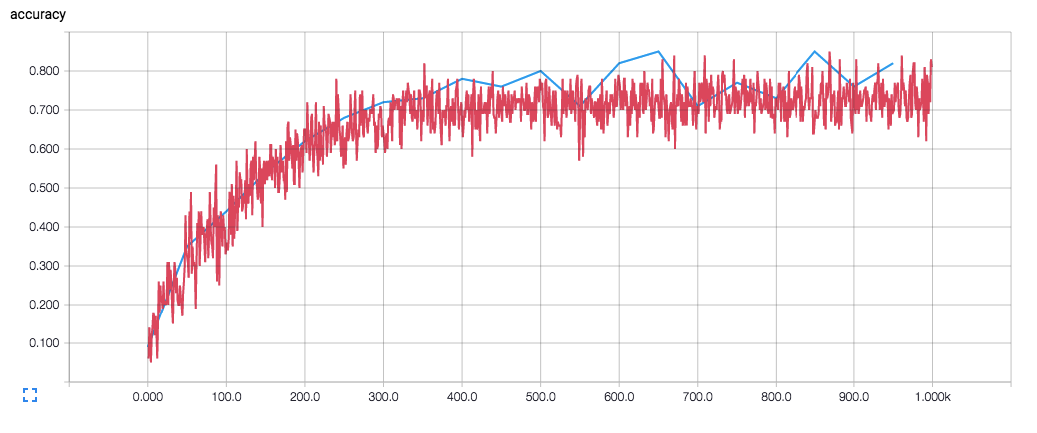
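To see why the learning rate matters, here is a toy sketch of plain gradient descent on the one-dimensional function $f(w) = w^2$ (gradient $2w$). The function and the learning rates are illustrative assumptions; the thresholds at which learning becomes too slow or diverges depend on the problem and do not carry over directly to the network in the experiments.

```python
def gradient_descent(learning_rate, steps=50, w=1.0):
    # Minimise f(w) = w**2; each step moves against the gradient 2*w.
    for _ in range(steps):
        w -= learning_rate * 2 * w
    return w


for lr in (0.01, 0.4, 1.1):
    print(lr, gradient_descent(lr))
```

A small rate (0.01) is still far from the minimum after 50 steps, a moderate rate (0.4) converges quickly, and a rate that is too large (1.1) overshoots further on every step and diverges.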

Optimizer

Optimizer: Gradient Descent

Average: 0.8

Optimizer: Adam

Average: 0.75
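The two optimizers compared above differ only in how they turn a gradient into a weight update. This sketch shows both on a toy one-parameter problem, $f(w) = (w - 3)^2$; the Adam update follows the standard formulation (moving averages of the gradient and squared gradient with bias correction) using its usual default betas and epsilon, while the learning rate, step count, and toy function are assumptions for illustration only.

```python
import math


def gradient_descent(grad_fn, w, learning_rate=0.1, steps=1000):
    for _ in range(steps):
        # Plain step against the gradient.
        w -= learning_rate * grad_fn(w)
    return w


def adam(grad_fn, w, learning_rate=0.1, beta1=0.9, beta2=0.999,
         eps=1e-8, steps=1000):
    m = 0.0  # moving average of gradients (first moment)
    v = 0.0  # moving average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: m and v start at zero, so early values are too small.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Per-parameter step size scaled by the gradient's recent magnitude.
        w -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return w


def grad(w):
    return 2 * (w - 3)  # gradient of (w - 3)**2


print(gradient_descent(grad, 0.0), adam(grad, 0.0))
```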

Conclusion