aws && docker && chef

adam meghji

Co-Founder and CTO at @universe

Entrepreneur & hacker.

Rails APIs, EmberJS, DevOps.

http://djmarmalade.com.

Always inspired!

The Social Marketplace For Events

Ticketing platform enabling event organizers to sell directly on their page(s), and through Universe.

Smart social integrations & cross-sell effect boost ticket sales.

Sell more tickets through better tools :)

today's talk

How Universe uses AWS AutoScaling + Docker + Chef

for happy DevOps

GOAL: Share one possible infrastructure

architecture & the necessary tooling

ACTUAL GOAL: Inspiration!

Go home and play around.

the universe

tech stack

CHALLENGES for devs

Different languages: Ruby, Node, Python

Different frameworks: Rails, Sinatra, Express, Flask

Each Microservice API runs slightly differently!

Jumping in requires learning each API's unique configuration

We achieved consistency through Containers

CHALLENGES for ops

Ticketing business suffers from flash traffic surges

Need to scale microservice APIs independently

Microservice Architectures need to tolerate failure

It's the cloud -- sh*t happens!

We solve this through AWS AutoScaling Groups

CONTAINERIZING

YOUR APP

Why containerize?

Fast & reliable server provisioning

Consistency in development & production

Dependencies are organized

(private keys, certificates, libs)

Changes to dependencies can be tested & staged

5 tips when containerizing

1. All app servers should be ephemeral

2. Separate your app servers & databases

3. Encrypt your secrets!

4. Team should connect via a Jump Server

5. Get a wildcard SSL certificate

TL;DR

The Twelve-Factor App

http://12factor.net

(covers ~90%? of what you need)

the original idea:

fat containers

FAT CONTAINERS

"Container runs a fully-baked app server VM"

1. CI server builds a Docker image if build passes

2. CI server tags the image by git commit

3. To deploy, pull specific image by commit tag, and restart container.
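
The three steps above can be sketched as a small shell helper. This is only an illustration: `fat_image_commands` is a hypothetical name, and it prints the commands rather than running them so the flow is easy to read.

```shell
# Hypothetical helper: prints what a CI job would run after a green build.
# Usage: fat_image_commands <repository> <commit-sha>
fat_image_commands() {
  local repo="$1" sha="$2"
  # 1 + 2: build the image and tag it with the git commit
  echo "docker build -t ${repo}:${sha} ."
  echo "docker push ${repo}:${sha}"
  # 3: on the app server, pull that exact tag before restarting the container
  echo "docker pull ${repo}:${sha}"
}

# Example: fat_image_commands uniiverse/web-staging "$(git rev-parse --short HEAD)"
```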

.. didn't work well!

Way too heavy!

Containers aren't VMs.

CI container is ephemeral, so could not incrementally build the images.

Added 20m to each CI job!!

docker ADD, push, and pull are SLOWWW

a better idea:

thin containers

THIN CONTAINERS

"Container runs a fully-baked app server PROCESS"

DOES NOT include application code.

DOES NOT include application gems, node_modules, etc.

Instead, app code & vendorized libs reside on host instance's FS,

and exposed to container's process via shared volumes.

Containers are much lighter, and infrequently built.

docker tip: baseimage-docker

http://phusion.github.io/baseimage-docker

Provides Ubuntu 14.04 LTS as base system

Provides a correct init process

(init @ PID 1, not docker CMD)

Includes patches for open issues (apt incompatibilities, /etc/hosts, etc)

Helpful daemons & tools: syslog, cron, runit, setuser, etc.

docker tip: passenger-docker

https://github.com/phusion/passenger-docker

baseimage-docker

Ruby 1.9.3, 2.0.0, 2.1.5, and 2.2.0; JRuby 1.7.18 (optional)

JRuby + OpenJDK 8 from the openjdk-r PPA (optional)

Python 2.7, 3.0 (optional)

NodeJS 0.10 (optional)

nginx (optional)

Passenger 4 (optional)

example docker run

docker run --name web_staging -v /home/ubuntu/apps/web/staging:/home/app/web -w /home/app/web -p 80:80 uniiverse/web-staging

--name web_staging, makes it easy to work with running container

docker exec web_staging tail -f /some/log/file

-v <host path>:<container path>, mounts codebase on host

-w <container path>, sets the cwd to the app root

-p 80:80, publishes nginx in the container on port 80 of the host VM
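
Since every service follows the same <app>_<environment> naming, the command above can be derived from two arguments. A minimal sketch, assuming the path and image naming seen in the example hold for every app; `docker_run_cmd` is a hypothetical helper, not part of Docker.

```shell
# Hypothetical helper: prints the `docker run` command for one app/environment.
# Usage: docker_run_cmd <app> <environment>
docker_run_cmd() {
  local app="$1" env="$2"
  local name="${app}_${env}"                         # container name, e.g. web_staging
  local image="uniiverse/${app}-${env}"              # image naming as in the example
  local host_path="/home/ubuntu/apps/${app}/${env}"  # codebase on the host VM
  local container_path="/home/app/${app}"            # mount point and working dir
  echo "docker run --name ${name} -v ${host_path}:${container_path} -w ${container_path} -p 80:80 ${image}"
}

# Example: docker_run_cmd web staging
```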

APP SERVER

RELOADS

The challenge

Application code & packages are stored on host VM's FS

During deployment, changes happen to app code & packages

The app server process running within the Docker container needs to reload the app responsibly:

- For HTTP, need zero downtime

- For job workers, need safe restarts

ZERO-DOWNTIME RELOADS:

PASSENGER

Super easy to enable via passenger-docker image

Passenger handles app reloads automatically!

Supports Ruby, Node, Python

ZERO-DOWNTIME RELOADS:

OTHER WEB SERVERS

When not handled automatically,

manually issue a reload via webserver CLI

e.g. for puma

docker exec web_staging bundle exec pumactl phased-restart

SAFE RESTARTS: SIDEKIQ, KUE, etc.

Send a SIGTERM, then SIGINT, then SIGKILL
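
The escalation can be sketched in plain shell. This is an illustration of the idea only, not how any particular tool implements it; `stop_gracefully` and its grace period are assumptions.

```shell
# Hypothetical helper: ask a process to stop, escalating only if it ignores us.
# Usage: stop_gracefully <pid> [grace-seconds-per-signal]
stop_gracefully() {
  local pid="$1" grace="${2:-10}" sig waited
  for sig in TERM INT KILL; do
    # If the signal cannot be delivered, the process is usually already gone.
    kill -s "$sig" "$pid" 2>/dev/null || return 0
    waited=0
    while [ "$waited" -lt "$grace" ]; do
      kill -0 "$pid" 2>/dev/null || return 0   # exited: nothing more to do
      sleep 1
      waited=$((waited + 1))
    done
  done
  # Report failure if the process is somehow still there after SIGKILL.
  ! kill -0 "$pid" 2>/dev/null
}

# Example: stop_gracefully "$(cat tmp/pids/sidekiq.pid)" 30
```

The grace period matters for job workers: it is the time a worker gets to finish or re-queue its current job before the next, harsher signal.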

rerun gem

launches your program, then watches the filesystem. If a relevant file changes, then it restarts your program.

e.g. sidekiq

docker run web_staging rerun --background "bundle exec sidekiq"

e.g. kue

docker run kaiju_staging rerun --background "node kue.js"

AWS

autoscaling

aws autoscaling in 1 slide

AUTO SCALING GROUP:

CloudWatch events

+

EC2 Launch Configuration

+

Elastic Load Balancer (optional)

== healthy EC2 instances

UNIVERSE AUTOSCALING

AUTO SCALING GROUP

1 per <app>_<environment>

(same as Docker images)

Defines which ELB is used for new instances

Defines min/max cluster size, and CloudWatch events which trigger scaling up/down

UNIVERSE AUTOSCALING

LAUNCH CONFIGURATION

Specifies machine type, disk config, etc.

Associates instance with IAM role

user_data.sh, executed on first boot

UNIVERSE AUTOSCALING

EC2 INSTANCES

Multi-AZ

Tagged with Name=<app>_<environment>

Runs the Docker container against app codebase

UNIVERSE AUTOSCALING

ELASTIC LOAD BALANCER

1 per <app>_<environment>

Terminates SSL via wildcard cert

New EC2 instances are automatically linked

(optional, only for HTTP services)
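
To see how the four pieces fit together, here is a rough sketch of the AWS CLI calls for one <app>_<environment>. The instance type, availability zones, IAM instance profile name, and ELB name are assumptions made for illustration, and `print_asg_commands` is a hypothetical helper that only prints the commands.

```shell
# Hypothetical helper: prints the AWS CLI calls for one app/environment.
# Usage: print_asg_commands <app> <environment> <ami-id> <min> <max>
print_asg_commands() {
  local app="$1" env="$2" ami="$3" min="$4" max="$5"
  local name="${app}_${env}"   # ASG, launch configuration and Name tag
  local elb="${app}-${env}"    # classic ELB names cannot contain underscores

  # Launch configuration: machine type, IAM role, user_data.sh for first boot
  echo "aws autoscaling create-launch-configuration" \
    "--launch-configuration-name ${name}" \
    "--image-id ${ami} --instance-type m3.medium" \
    "--iam-instance-profile ${name}" \
    "--user-data file://user_data.sh"

  # Auto Scaling Group: cluster size, multi-AZ placement, ELB, Name tag
  echo "aws autoscaling create-auto-scaling-group" \
    "--auto-scaling-group-name ${name}" \
    "--launch-configuration-name ${name}" \
    "--min-size ${min} --max-size ${max}" \
    "--availability-zones us-east-1a us-east-1b" \
    "--load-balancer-names ${elb}" \
    "--tags Key=Name,Value=${name},PropagateAtLaunch=true"
}

# Example: print_asg_commands web staging ami-12345678 2 10
```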

USER_DATA.SH

#!/bin/bash -v

# install pre-requisites

curl -L https://www.opscode.com/chef/install.sh | bash

DEBIAN_FRONTEND=noninteractive apt-get update -y

DEBIAN_FRONTEND=noninteractive apt-get install -y awscli

aws s3 --region=us-east-1 cp s3://uniiverse-bucket/uniiverse-validator.pem /etc/chef/

# write first-boot.json

(

cat << 'EOF'

{"run_list": ["role[boxoffice]"]}

EOF

) > /etc/chef/first-boot.json

# write client.rb

(

cat << 'EOF'

environment 'production'

log_level :info

log_location STDOUT

client_key '/etc/chef/uniiverse.pem'

chef_server_url 'https://api.opscode.com/organizations/uniiverse'

validation_client_name 'uniiverse-validator'

validation_key '/etc/chef/uniiverse-validator.pem'

EOF

) > /etc/chef/client.rb

# bootstrap via chef

chef-client -j /etc/chef/first-boot.json

USER_DATA.SH

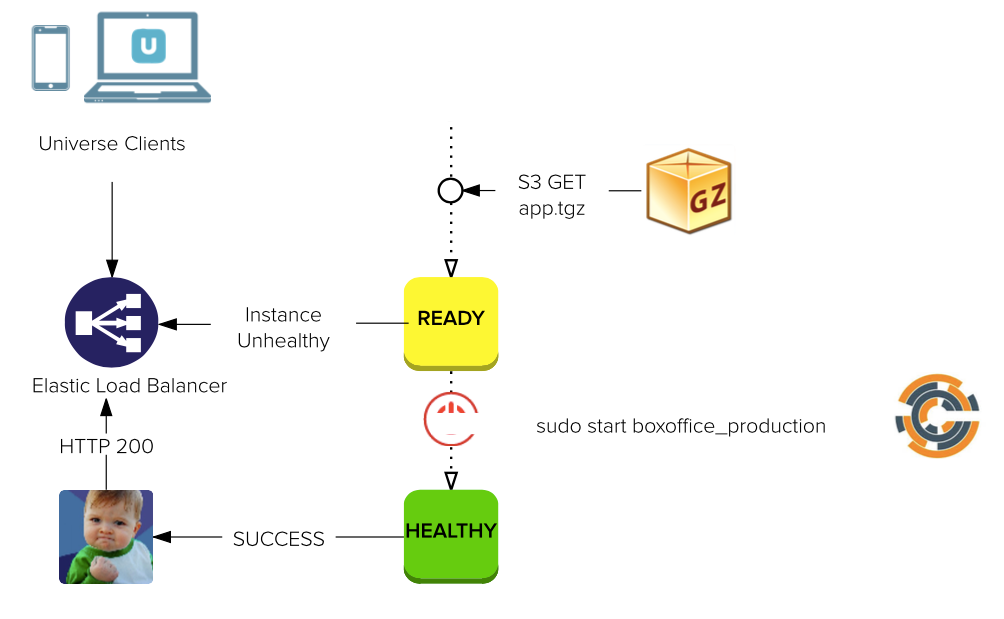

1. installs Chef Client

2. writes Chef Client configuration to /etc/chef

(incl. Chef Role and Environment)

3. installs awscli (for S3 access)

4. S3 GET Chef private key via IAM role

(set in Launch Configuration)

5. runs Chef Client!
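
Only the Chef role and environment differ between launch configurations, so step 2 could be factored into a function. A sketch under that assumption; `write_chef_config` and its optional target directory are hypothetical, and the remaining values are copied from the script shown earlier.

```shell
# Hypothetical helper: writes first-boot.json and client.rb for one role.
# Usage: write_chef_config <role> <environment> [target-dir]
write_chef_config() {
  local role="$1" env="$2" dir="${3:-/etc/chef}"
  mkdir -p "$dir"

  # The run list that chef-client applies on first boot
  printf '{"run_list": ["role[%s]"]}\n' "$role" > "$dir/first-boot.json"

  # Chef Client settings; only the environment varies
  cat > "$dir/client.rb" <<EOF
environment '${env}'
log_level :info
log_location STDOUT
client_key '/etc/chef/uniiverse.pem'
chef_server_url 'https://api.opscode.com/organizations/uniiverse'
validation_client_name 'uniiverse-validator'
validation_key '/etc/chef/uniiverse-validator.pem'
EOF
}

# Example: write_chef_config boxoffice production
```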

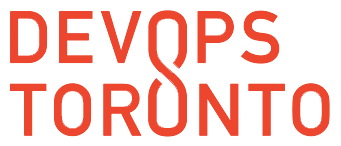

BOOTSTRAPPING AN INSTANCE

LET'S ZOOM IN ..

CHEF COOKBOOK: unii-base

1. Hardens a new instance (SSH configuration, firewalling, etc.)

2. Applies any security updates (HeartBleed, etc.)

3. Configures helpful daemons (fail2ban, log aggregation, etc.)

Consistently applied to all servers across any roles and environments

CHEF COOKBOOK: unii-docker

1. Installs Docker daemon

2. Pulls private keys to auth with Private Repository

CHEF COOKBOOK: unii-app-server

1. Determines the `docker run` command

2. Pulls latest Docker image for <app>_<environment>

3. Downloads <app>_<environment>.tgz from S3 and extracts

4. Generates upstart configuration

5. Starts service, which launches Docker container
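
Step 4 might produce something like the upstart job below. This is a guess at its shape, not the cookbook's actual template: `upstart_conf` is a hypothetical generator, and the `docker` job name, the respawn policy, and the pre-start cleanup are all assumptions.

```shell
# Hypothetical generator: prints an upstart job for one app/environment.
# Usage: upstart_conf <app> <environment>
upstart_conf() {
  local app="$1" env="$2"
  local name="${app}_${env}"
  cat <<EOF
description "${name} app container"
start on started docker
stop on runlevel [!2345]
respawn

pre-start script
  # remove a stale container left over from a previous run, if any
  docker rm -f ${name} || true
end script

exec docker run --name ${name} -v /home/ubuntu/apps/${app}/${env}:/home/app/${app} -w /home/app/${app} -p 80:80 uniiverse/${app}-${env}
EOF
}

# Example: upstart_conf web staging > /etc/init/web_staging.conf
```

Running the container in the foreground under `exec` lets upstart supervise it, so `respawn` restarts the container if it dies.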

Zooming out again ..

DEPLOYMENTS

RE-APPLY UNII-APP-SERVER!

HIPCHAT + HUBOT CHATOPS

Deployments are executed by "Uniiverse ☃"

DEPLOYMENT:

BEHIND THE SCENES

DEPLOY SCRIPT: 4 PHASES

1. BUILD

an app.tgz

2. UPLOAD

to S3 bucket

3. SYNC

with all app servers in cluster

4. MIGRATE

any data

STEP 1: BUILD

if Gemfile?

bundle install --path=vendor/bundle

(for vendorized gems)

if "app/assets/"?

RAILS_ENV=staging RAILS_GROUPS=assets bundle exec rake assets:precompile (i.e. Rails assets)

if package.json?

NODE_ENV=staging make dist (builds node_modules/, compiles coffeescript, etc)
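
The same checks, written as a shell sketch. `build_steps` is a hypothetical helper that only prints which build commands apply to a given checkout; a real deploy script would run them instead.

```shell
# Hypothetical helper: prints the build commands that apply to a codebase.
# Usage: build_steps <app-dir> <environment>
build_steps() {
  local dir="$1" env="$2"
  # Ruby app: vendorize gems next to the code
  if [ -f "$dir/Gemfile" ]; then
    echo "bundle install --path=vendor/bundle"
  fi
  # Rails asset pipeline present: precompile assets
  if [ -d "$dir/app/assets" ]; then
    echo "RAILS_ENV=${env} RAILS_GROUPS=assets bundle exec rake assets:precompile"
  fi
  # Node app: build node_modules/, compile coffeescript, etc.
  if [ -f "$dir/package.json" ]; then
    echo "NODE_ENV=${env} make dist"
  fi
}

# Example: build_steps . staging
```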

STEP 2: UPLOAD

1. Creates app.tgz archive, including app codebase, vendorized gems, etc. from STEP 1.

2. Excludes certain stuff (logs, caches, temp directories, etc)

3. Uploads app.tgz to special S3 bucket accessible only by app servers via IAM role
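
A minimal sketch of the archive and upload steps. The exclude list and the bucket name `example-deploy-bucket` are placeholders, and both function names are hypothetical.

```shell
# Hypothetical helper: archives a built codebase, skipping logs and temp files.
# Usage: build_archive <app-dir> <output.tgz>   (write the archive outside app-dir)
build_archive() {
  local dir="$1" out="$2"
  # Excludes must come before the path they apply to.
  tar -czf "$out" -C "$dir" \
    --exclude='./log' --exclude='./tmp' --exclude='./.git' \
    .
}

# Hypothetical helper: uploads the archive as <app>_<environment>.tgz.
# Usage: upload_archive <archive> <app> <environment>
upload_archive() {
  aws s3 cp "$1" "s3://example-deploy-bucket/${2}_${3}.tgz"
}

# Example:
#   build_archive . /tmp/app.tgz && upload_archive /tmp/app.tgz web staging
```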

STEP 3: SYNC

1. Discovers all EC2 instances tagged with Name=<app>_<environment>

2. Issues 1 Ansible ad-hoc command to reapply chef cookbooks

ansible all -i server1,server2, -a "chef-client -j /etc/chef/app-server.json"

STEP 4: MIGRATE

if "db/migrate/"?

ansible all -i server3, -a "sudo docker exec web_staging bundle exec rake db:migrate"

(i.e. runs any Rails migrations)

Only runs on 1 randomly-chosen server in the cluster
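
Steps 3 and 4 both start from the list of tagged instances. A sketch of how that list could be gathered and fed to Ansible: the three function names are hypothetical, addressing hosts by private IP is an assumption, and `shuf` requires GNU coreutils.

```shell
# Lists private IPs of running instances tagged Name=<app>_<environment>.
# Usage: discover_instances <app> <environment>
discover_instances() {
  aws ec2 describe-instances \
    --filters "Name=tag:Name,Values=${1}_${2}" "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].PrivateIpAddress' --output text
}

# Turns whitespace-separated hosts on stdin into an inline Ansible inventory.
# The trailing comma tells Ansible this is a host list, not an inventory file.
to_inventory() {
  tr -s '[:space:]' ','
}

# Picks one host at random from whitespace-separated hosts on stdin.
pick_one() {
  tr -s '[:space:]' '\n' | shuf -n 1
}

# Example:
#   hosts=$(discover_instances web staging)
#   ansible all -i "$(echo "$hosts" | to_inventory)" -a "chef-client -j /etc/chef/app-server.json"
#   ansible all -i "$(echo "$hosts" | pick_one)," -a "sudo docker exec web_staging bundle exec rake db:migrate"
```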

helpful

devops tools

./ssh.sh

quick CLI to SSH into any instance:

./ssh.sh <app> <environment> [command]

./ssh.sh web staging

./ssh.sh web staging free -m

Resolves EC2 instances by Name tag

Solves the problem of server discovery for remote access

Uses the Jump Server to access the instance
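
The jump-server hop could look roughly like this. It is a sketch, not the actual ./ssh.sh: `jump_ssh_cmd` is a hypothetical helper that prints the command, the `ubuntu` login is an assumption, and `-J` needs OpenSSH 7.3 or newer (older clients would use a ProxyCommand instead).

```shell
# Hypothetical helper: prints the ssh command that reaches an instance
# through the jump server, optionally running a command there.
# Usage: jump_ssh_cmd <jump-host> <instance-ip> [command...]
jump_ssh_cmd() {
  local jump="$1" ip="$2"
  shift 2
  if [ "$#" -gt 0 ]; then
    echo "ssh -J ${jump} ubuntu@${ip} $*"   # run one command and return
  else
    echo "ssh -J ${jump} ubuntu@${ip}"      # interactive login
  fi
}

# Example: jump_ssh_cmd jump.example.com 10.0.0.5 free -m
```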

./attach.sh

quick CLI to SSH into any app container:

./attach.sh <app> <environment> [command]

./attach.sh web staging

./attach.sh web staging bundle exec rails console staging

./attach.sh web staging bundle exec rake cache:clear

Uses ./ssh.sh and docker exec

Simplifies connecting to the running container.

Perfect for opening an interactive console, etc.
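
Because containers are named <app>_<environment>, the remote command is easy to derive. A sketch of that part only: `attach_cmd` is a hypothetical helper, and defaulting to `bash` when no command is given is an assumption.

```shell
# Hypothetical helper: prints the command to run on the instance in order
# to get into the app's running container.
# Usage: attach_cmd <app> <environment> [command...]
attach_cmd() {
  local name="${1}_${2}"   # container name, e.g. web_staging
  shift 2
  if [ "$#" -eq 0 ]; then
    set -- bash            # no command given: open a shell
  fi
  echo "sudo docker exec -it ${name} $*"
}

# Example: attach_cmd web staging bundle exec rails console staging
```

The printed command would be handed to ./ssh.sh as its [command] argument; ssh then needs a TTY (`-t`) for the interactive cases.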

THE

OUTCOME?

BENEFITS

A consistent way to ship microservices in Containers despite underlying language, framework, or dependencies

Services are multi-AZ, can auto-scale with traffic, and auto-heal during failure

Developers have 1 way of shipping code, with gory details neatly abstracted. No longer daunting to add a new microservice.

Helpful tools and scripts can be written once and reused everywhere.

AREA OF OPTIMIZATION #1

OPTIMIZE COST:

Requires lots of ELB instances

(2 environments * N microservices)

ONE SOLUTION?

1 ELB & 1 ASG of HAProxy machines

incl. subdomain routing,

health detection, etc.

AREA OF OPTIMIZATION #2

OPTIMIZE UTILIZATION:

EC2 instances are single-purpose

(only run 1 docker container)

ONE SOLUTION?

Marathon: execute long-running tasks via Mesos & REST API

All instance CPU & RAM is pooled, and tasks (i.e. containers) are evenly distributed by resource utilization

AREA OF OPTIMIZATION #3

A FEW NEW POINTS OF FAILURE:

- Hosted Chef

- Docker Hub Private Repo

- GitHub

SOLUTION:

Build redundancy into the 3rd party services you rely on

IS THIS totally over-engineered?

We spiked working implementations of these awesome alternatives, all of which support Docker.

- AWS Elastic Beanstalk

- AWS OpsWorks

- AWS EC2 Container Service

- Google Container Engine (via Kubernetes)

- Marathon

We cherry-picked the parts we liked and rolled our own!

For us, it makes sense. YMMV

happy

hacking!

THANK YOU :)

https://universe.com

@AdamMeghji

adam@universe.com