Explainable Deep Learning

When, Why and How

Ahmad Haj Mosa

ahmad.haj.mosa@pwc.com

LinkedIn: ahmad-haj-mosa

Fabian Schneider

schneider.fabian@pwc.com

LinkedIn: fabian-schneider-24122379

Marvin Minsky

The definition of intelligence

Our minds contain processes that enable us to solve problems we consider difficult.

"Intelligence" is our name for those processes we don't yet understand.

| Interpretation | Explanation |
|---|---|
| Between AI and its designer | Between AI and its consumer |
| External analytics | Learned by the model |
| Model specific or agnostic | Model specific |
| Local or global | Local |
| Examples: LIME and t-SNE | Examples: Attention Mechanism |

Interpretation vs explanation

The need for XAI depends on the task, domain and process an AI is automating

Self Driving Cars

Autonomous Drones

Recommendation system

Driver Assistant System

source: USPAS

Hazard – a state or set of conditions of a system (or an object) that, together with other conditions in the environment of the system (or object), will lead inevitably to an accident (loss event).

The need for XAI depends on the legal, life and cost risk of the automated process

Why XAI?

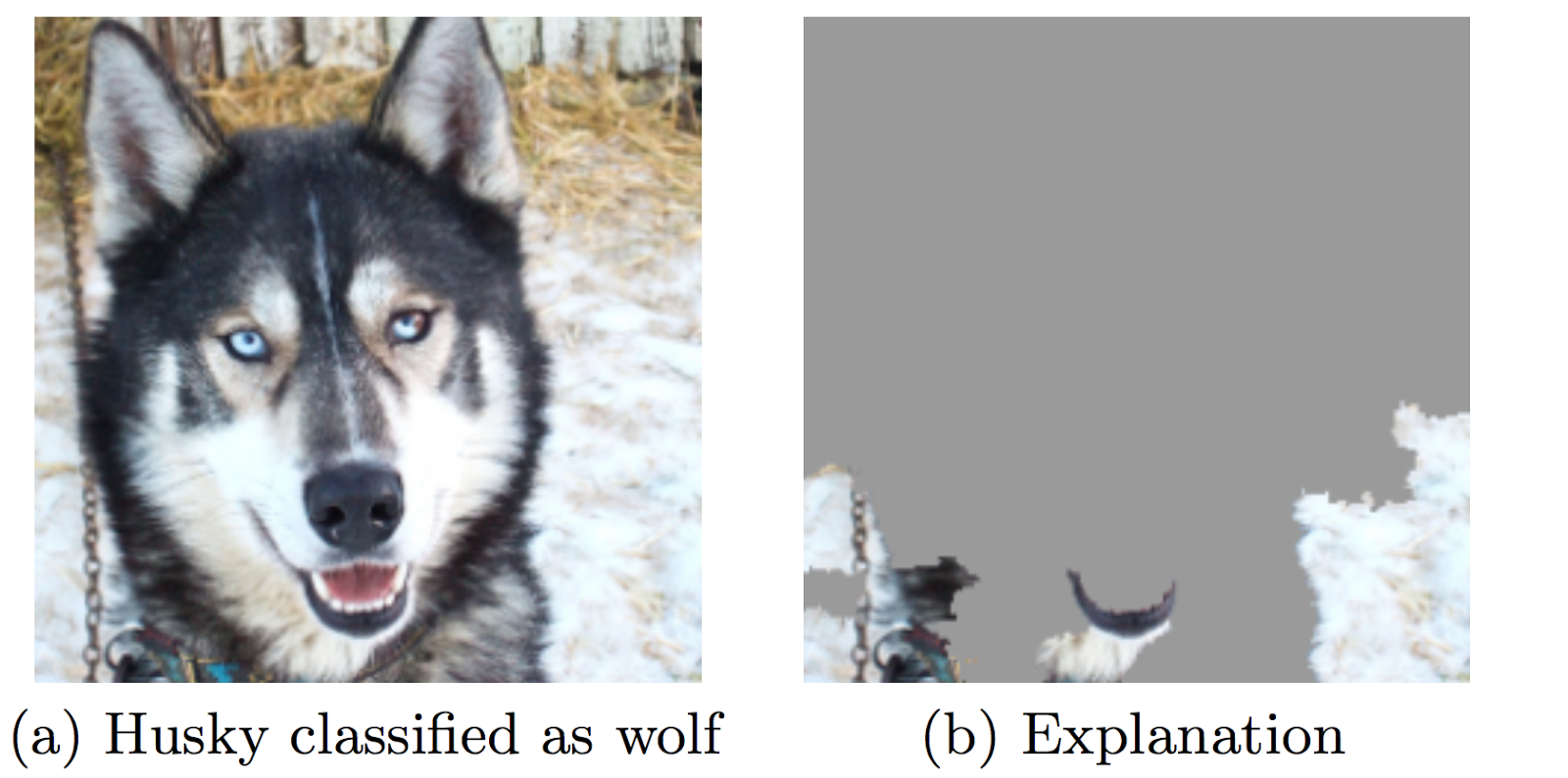

Classification: wolf or husky?

source: “Why Should I Trust You?” Explaining the Predictions of Any Classifier


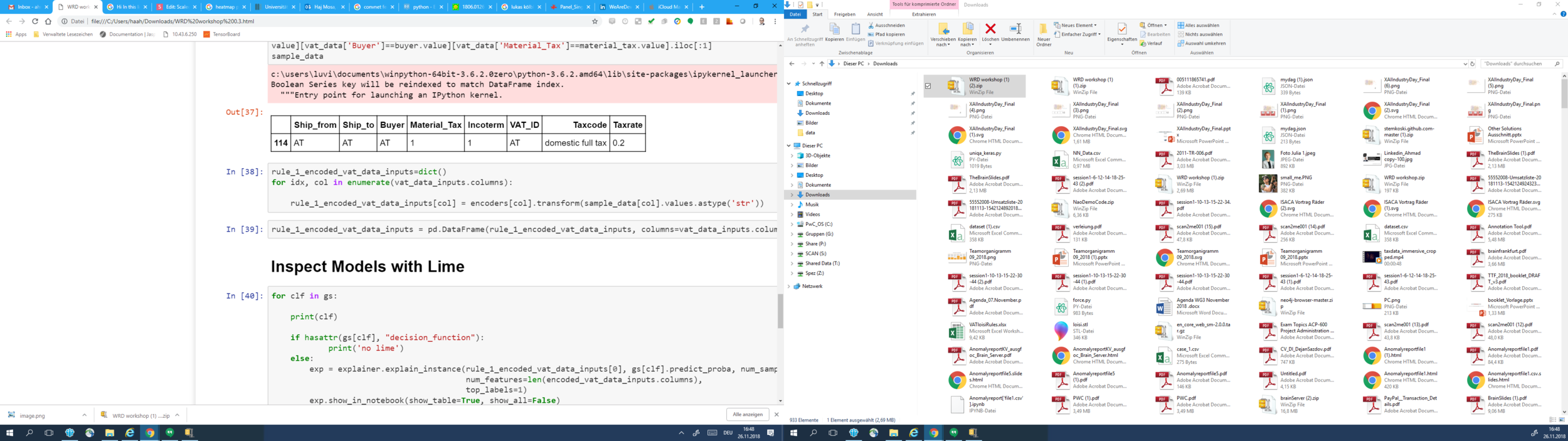

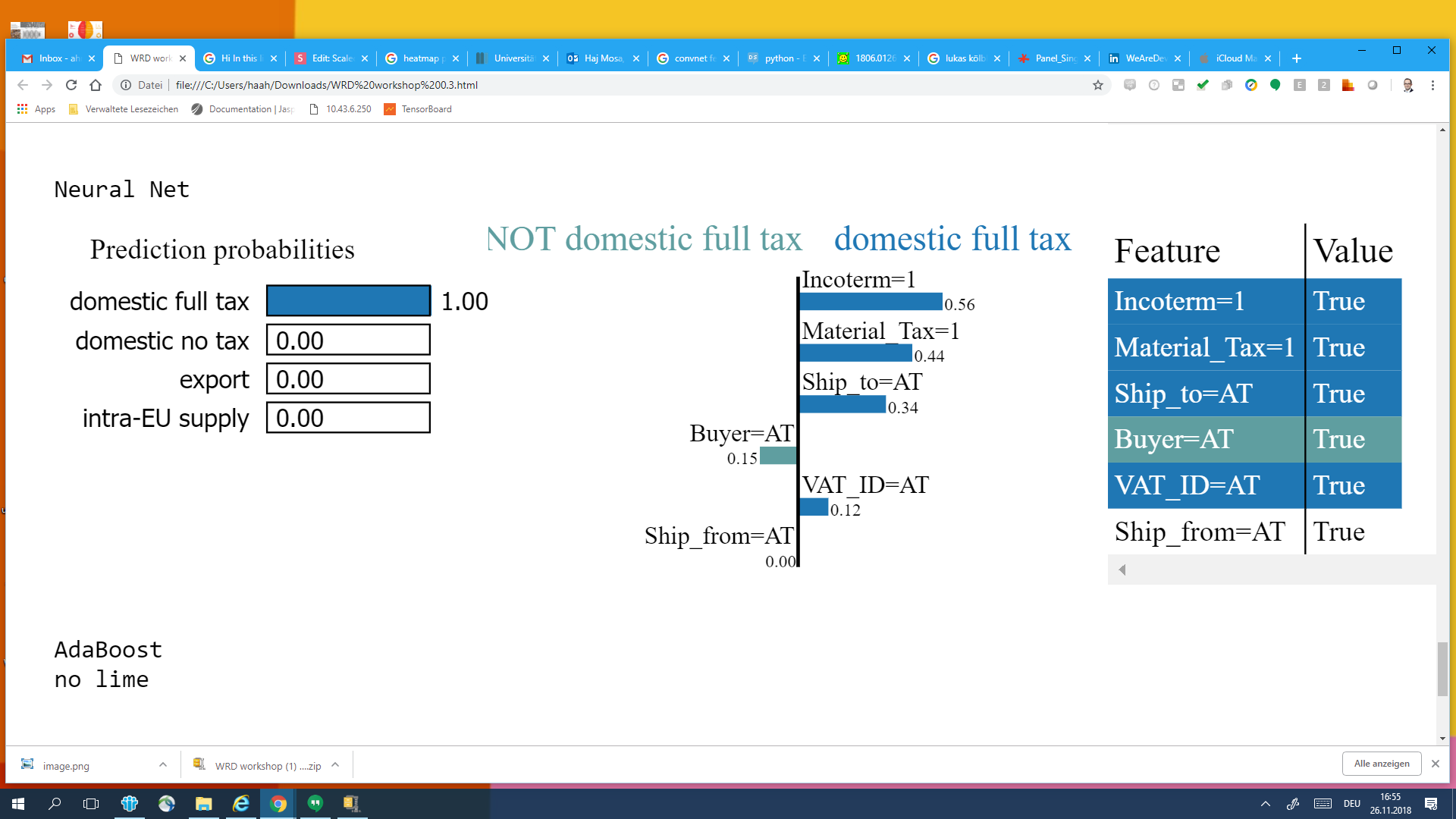

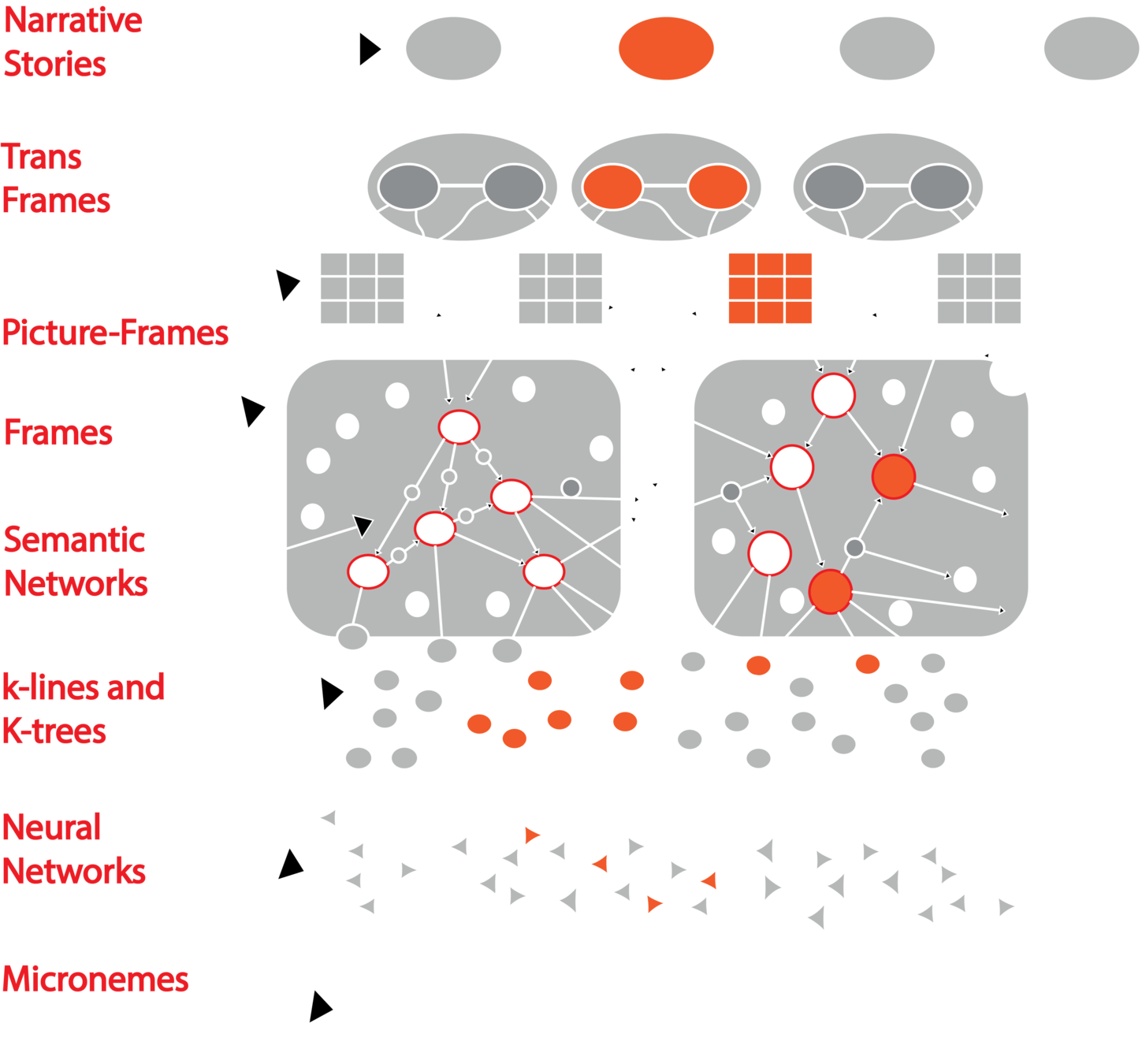

The first rule is: if you are shipping a product from Austria to Austria and the shipped material is taxable according to law, then the tax code is: domestic full tax -> 20%
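Read as code, the rule above is a small pure function. The following is a minimal sketch under stated assumptions: the types `Country`, `Shipment` and `TaxCode` and the functions `classify` and `rate` are hypothetical names chosen for illustration, not part of the authors' system. Only the single rule quoted on the slide is modelled, and every other shipment is left unclassified.

```haskell
-- Hypothetical types for illustration; none of these names come from the talk.
data Country = Austria | Other String
  deriving (Eq, Show)

data Shipment = Shipment
  { origin      :: Country
  , destination :: Country
  , taxable     :: Bool   -- is the shipped material taxable according to law?
  } deriving (Show)

data TaxCode = DomesticFullTax
  deriving (Eq, Show)

-- Rate attached to a tax code, as a fraction (20% per the rule on the slide).
rate :: TaxCode -> Rational
rate DomesticFullTax = 20 / 100

-- The first rule only: Austria to Austria and taxable means domestic full tax.
-- Any other shipment is outside this rule, so the result is Nothing.
classify :: Shipment -> Maybe TaxCode
classify s
  | origin s == Austria && destination s == Austria && taxable s = Just DomesticFullTax
  | otherwise = Nothing

main :: IO ()
main = do
  print (classify (Shipment Austria Austria True))
  print (classify (Shipment Austria (Other "Germany") True))
```

A rule written this way is its own explanation: the guard that fired is the reason for the tax code.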

Classification of Value Added Tax

source: DARPA

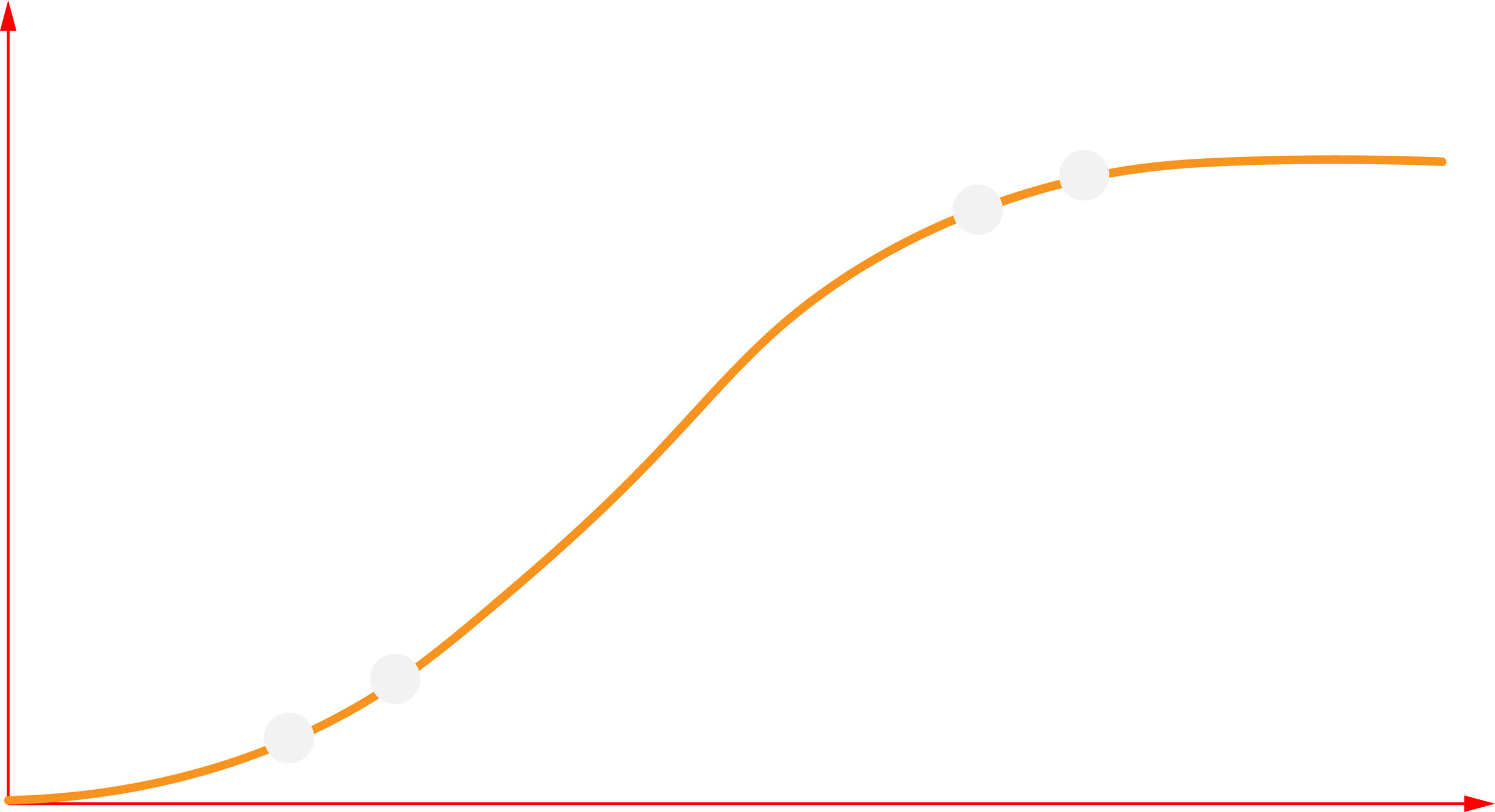

Is it explainability vs accuracy?

Figure: explainability (notional) plotted against prediction accuracy for learning techniques (today): neural nets, deep learning, statistical models, AOGs, SVMs, graphical models, Bayesian belief nets, SRL, MLNs, Markov models, decision trees, ensemble methods, random forests.

Figure: performance and explainability of deep learning techniques, from current to future.

Techniques shown: Feed Forward, ConvNets & LSTM, Attention Mechanism, Graph Neural Networks, Disentangled Representation.

Inductive biases shown: non-relational; spatial and temporal relational; attention-based spatial and temporal relational; multi-relational; attention-based multi-relational.

Is it explainability vs accuracy?

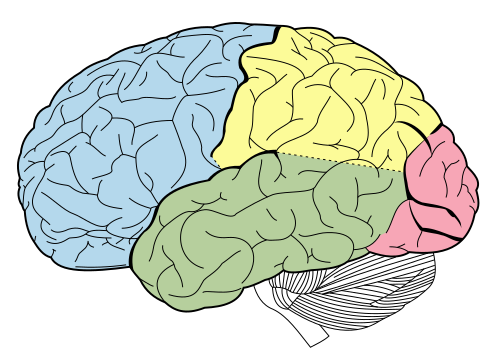

Relation between abstraction and attention

Figure labels: prefrontal cortex, parietal lobe; top-down attention, bottom-up attention.

Attention allows us to focus on a few elements out of a large set

Attention focuses on a few appropriate abstract or concrete elements of a mental representation

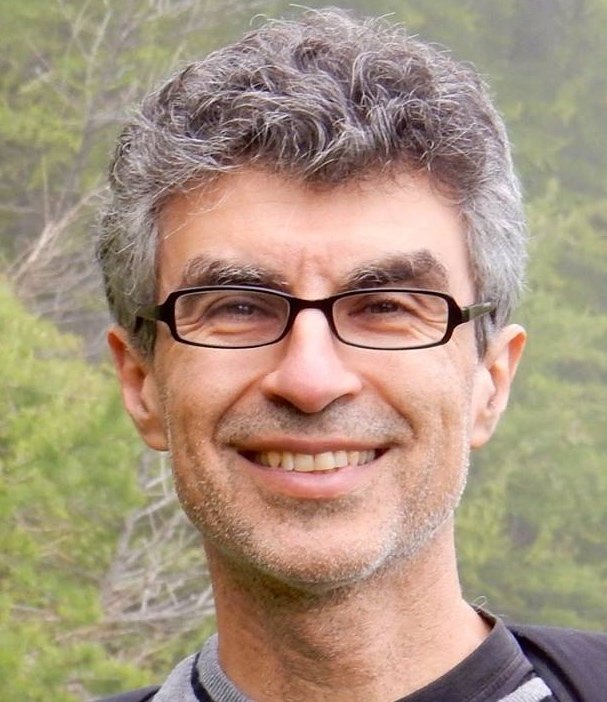

source: Yoshua Bengio

What is next in Deep Learning?

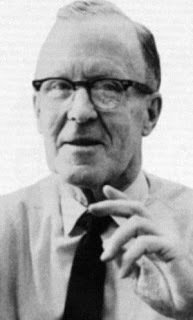

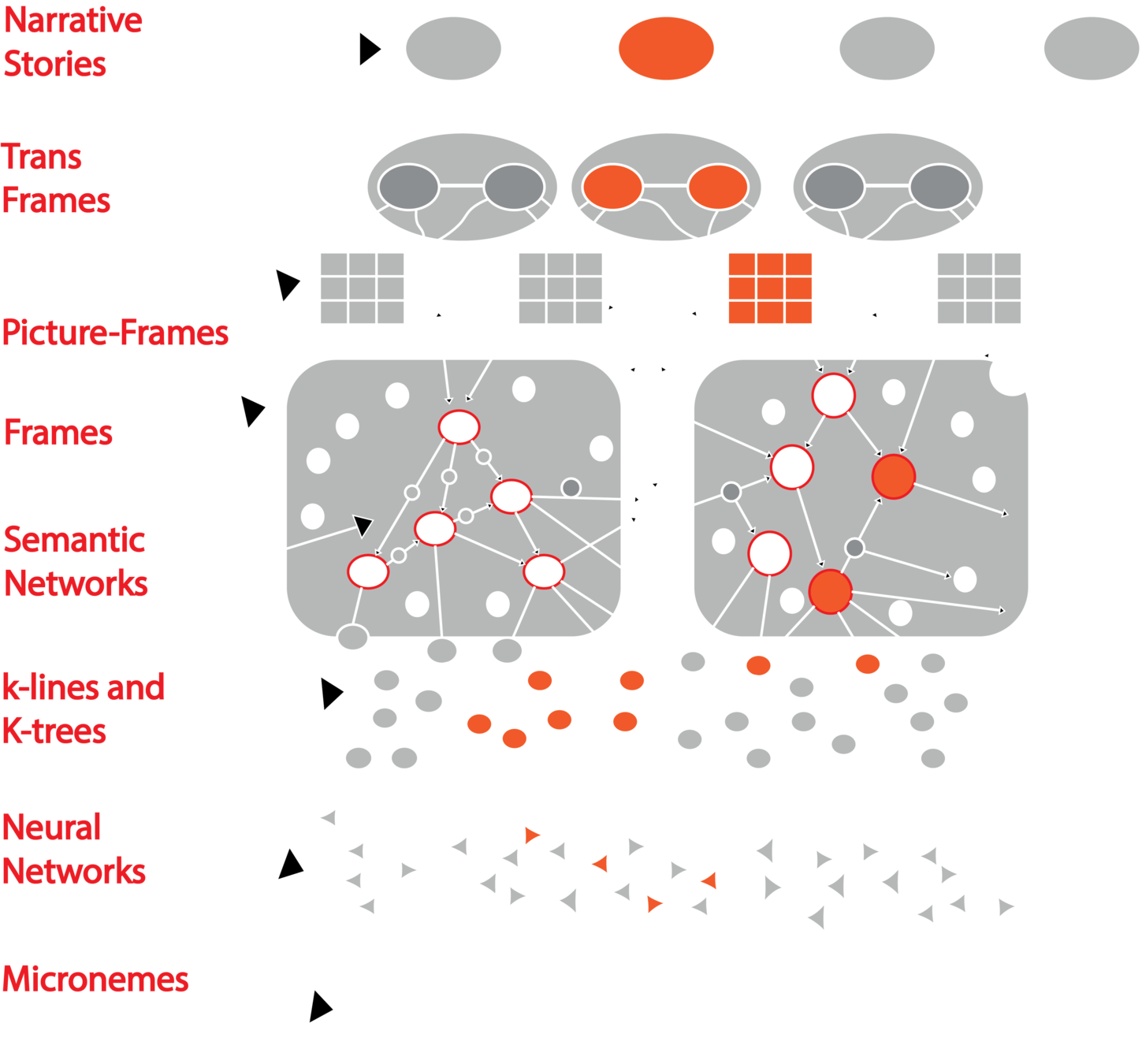

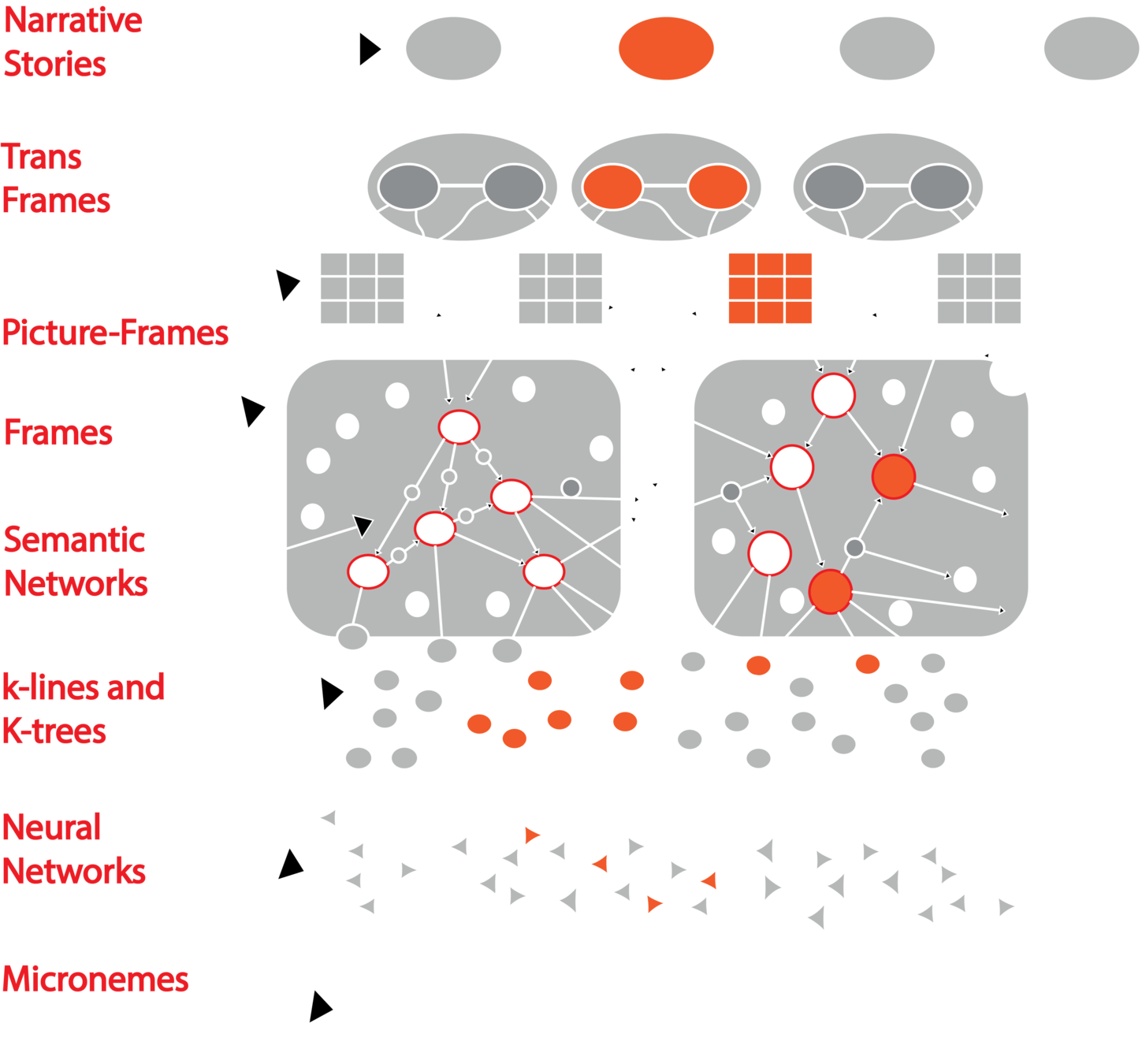

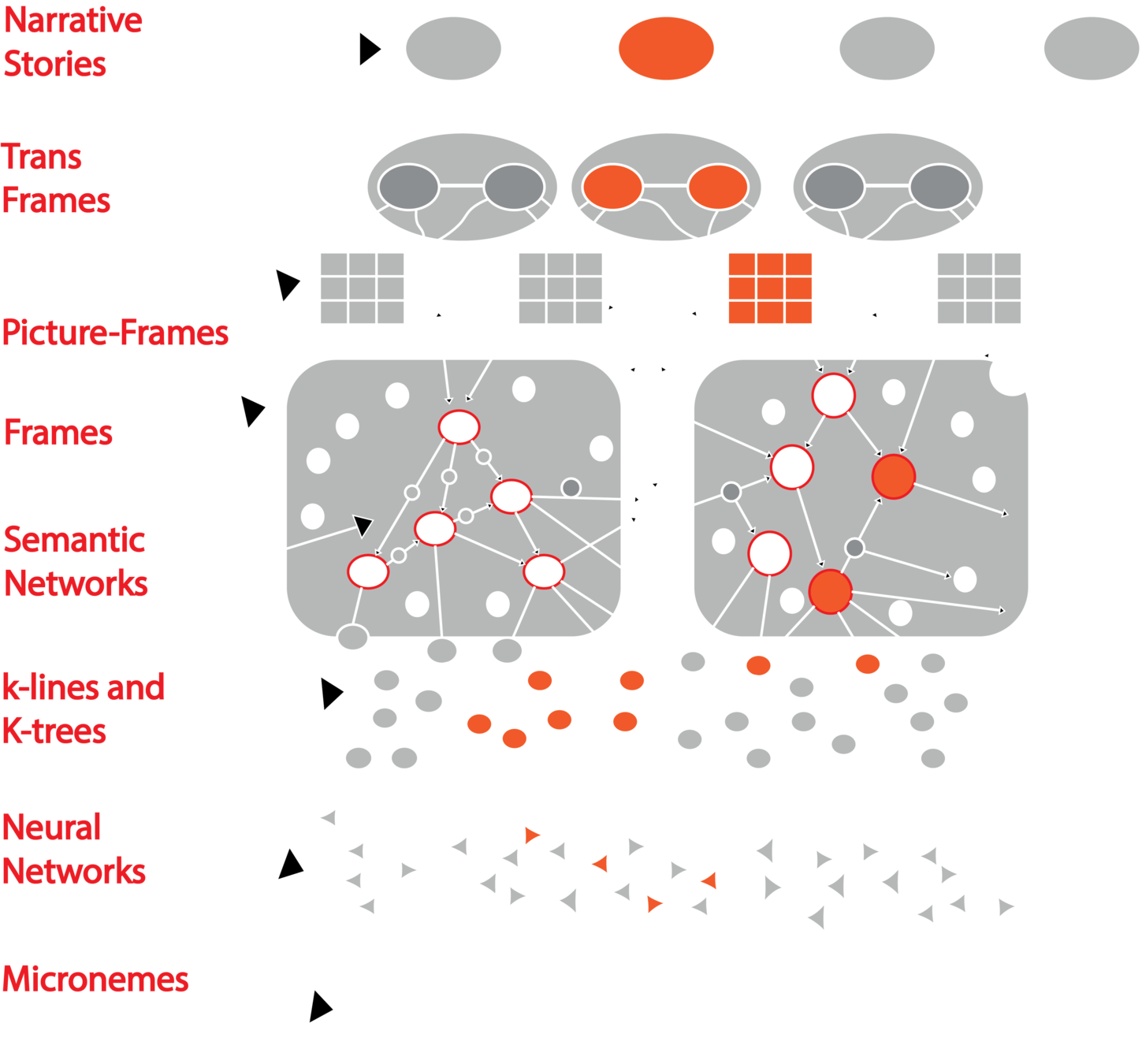

Marvin Minsky

Knowledge Representation

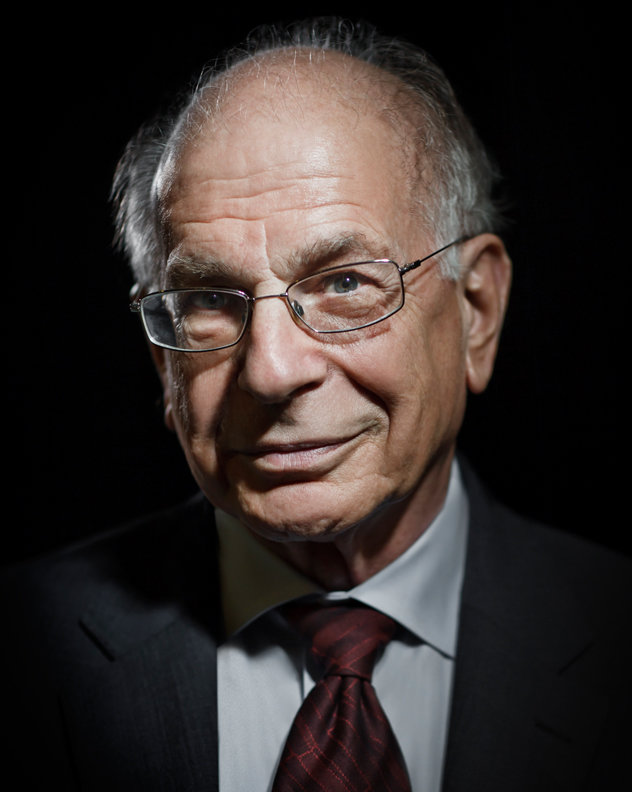

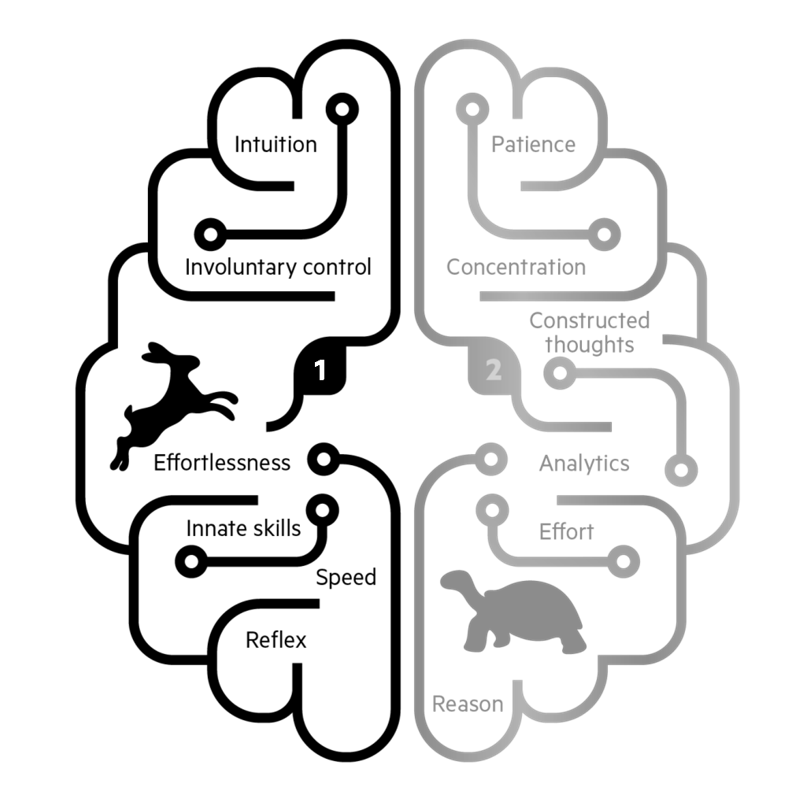

Daniel Kahneman

Thinking fast and slow

Yoshua Bengio

Disentangled Representation

Donald O. Hebb

Hebbian Learning

Research inspiration and references

System 1: fast, automatic, emotional, unconscious, stereotypic

System 2: slow, effortful, logical, conscious, reasoning

| System 1 | System 2 |
|---|---|
| drive a car on highways | drive a car in cities |
| come up with a good chess move (if you're a chess master) | point your attention towards the clowns at the circus |
| understands simple sentences | understands law clauses |
| correlation | causation |
| hard to explain | easy to explain |

Thinking fast and slow

source: Thinking fast and slow by Daniel Kahneman

"When you 'get an idea,' or 'solve a problem' ... you create what we shall call a K-line. ... When that K-line is later 'activated', it reactivates ... mental agencies, creating a partial mental state resembling the original."

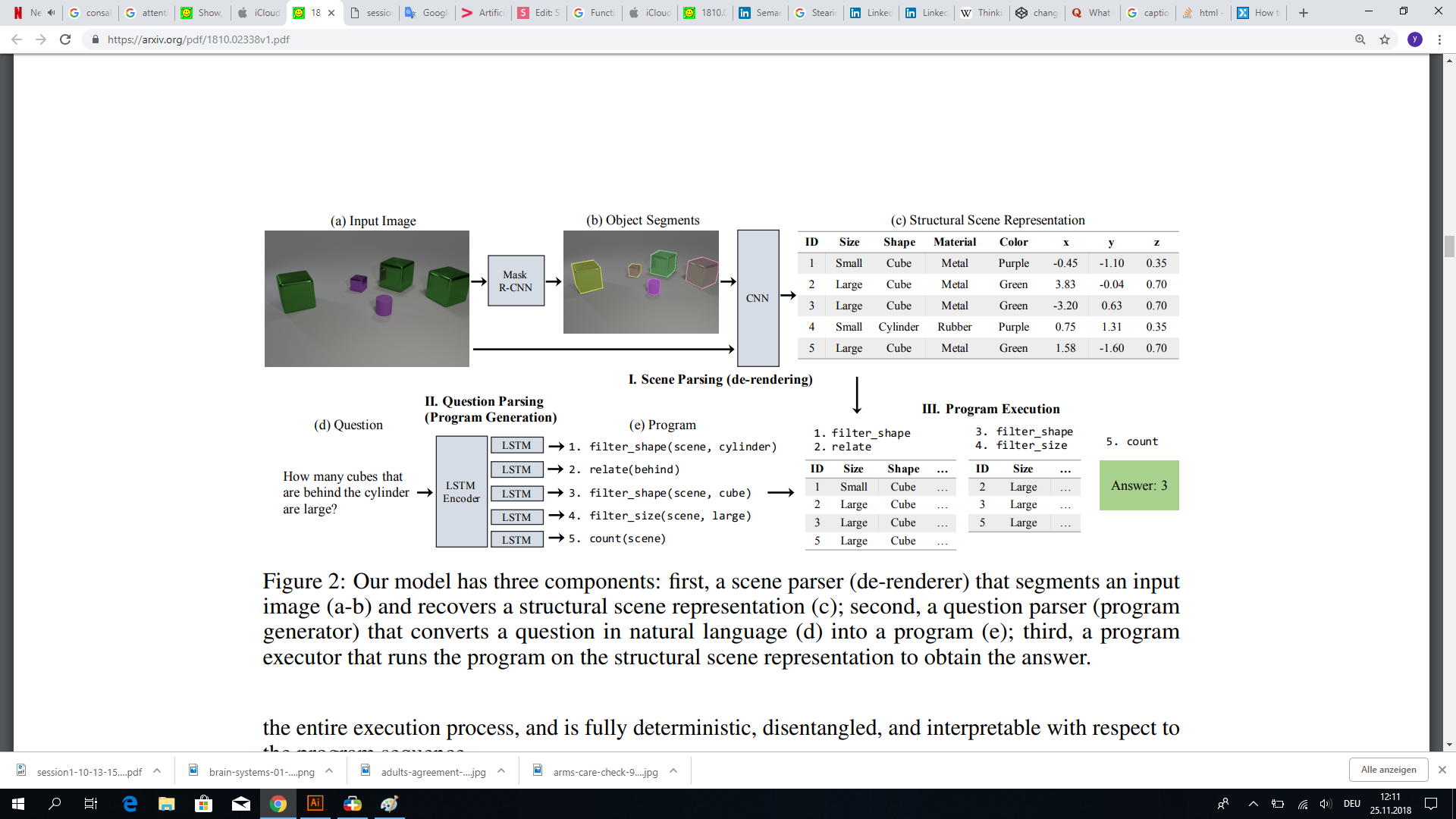

"A frame is a data structure with typical knowledge about a particular object or concept."

A framework for representing knowledge

source: The Emotion Machine, Marvin Minsky

thinking fast

thinking slow

consciousness prior

learning slow

learning fast

hard explanation

easy explanation

A framework for representing knowledge


Trend 1 for XDL

Train a readable/visible representation before training a decision.
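One way to picture this trend in code is a pipeline that always returns the intermediate representation together with the decision, so the representation can be read as the explanation. This is only a sketch under assumptions: `explainableDecision`, `Scene`, `toyRepresent` and `toyDecide` are hypothetical names, and the two toy functions are hand-written stand-ins for trained models.

```haskell
-- Run the representation model first, then the decision model, and return the
-- intermediate representation alongside the decision so that it can be inspected.
explainableDecision :: (raw -> rep) -> (rep -> decision) -> raw -> (rep, decision)
explainableDecision represent decide input =
  let rep = represent input
  in (rep, decide rep)

-- Hypothetical readable representation of a road scene.
data Scene = Scene
  { laneOffset    :: Double  -- distance from the lane centre
  , obstacleAhead :: Bool
  } deriving (Show)

-- Toy stand-in for a trained representation model: the "raw input" is already a pair here.
toyRepresent :: (Double, Bool) -> Scene
toyRepresent (offset, obstacle) = Scene offset obstacle

-- Toy stand-in for a decision model: steer against the lane offset (assumed gain of 0.5).
toyDecide :: Scene -> Double
toyDecide s = -0.5 * laneOffset s

main :: IO ()
main = print (explainableDecision toyRepresent toyDecide (0.4, False))
```

The design point is the return type: the steering angle never appears without the scene description that produced it.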

Steering Angle

Representation model

Decision model

Representation learning

Steering Angle

How to explain that?

Disentangled Representation + Neural Symbolic Learning

decision Model

Representation model

Encoded Representation

Representation then decision

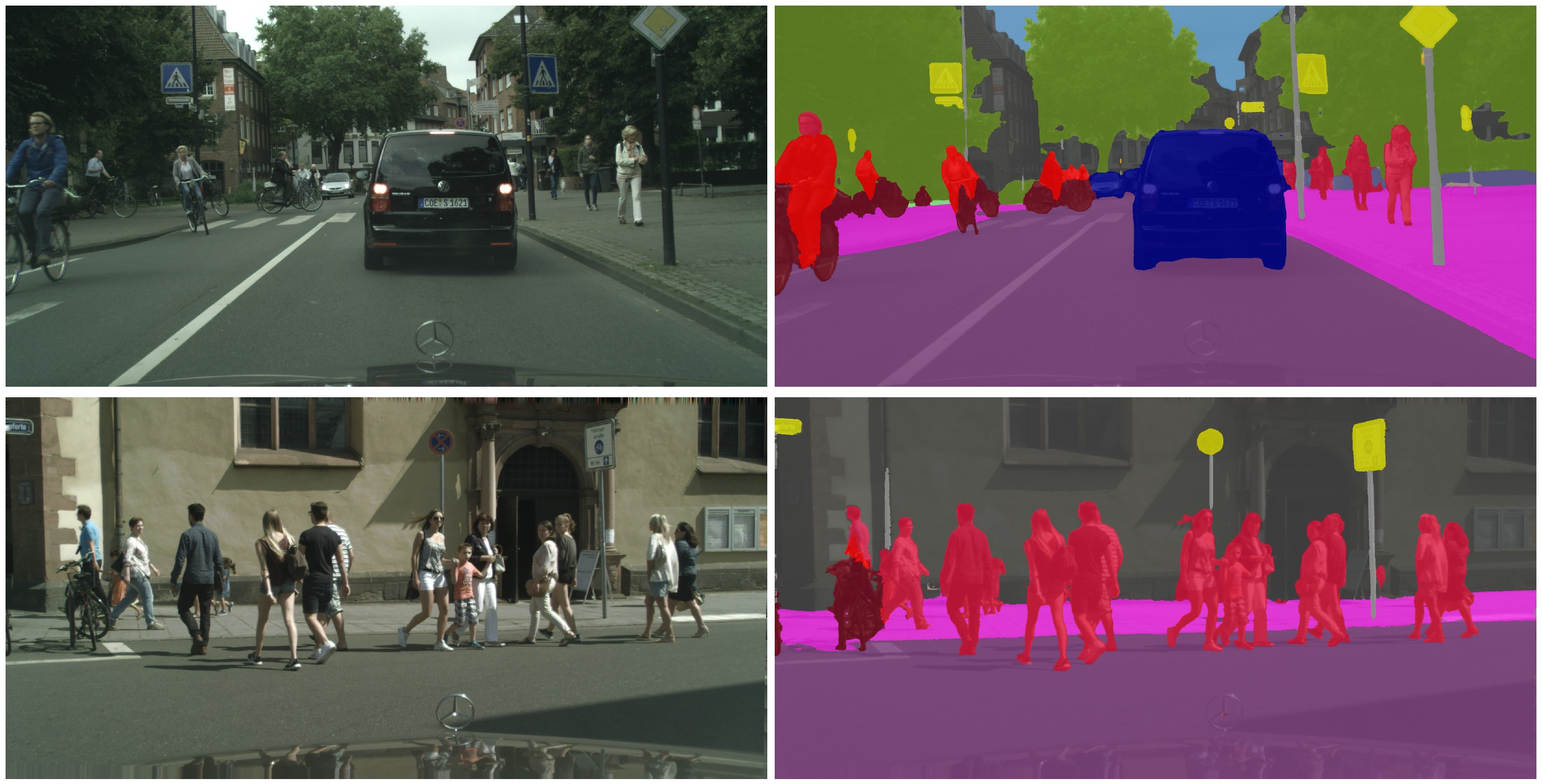

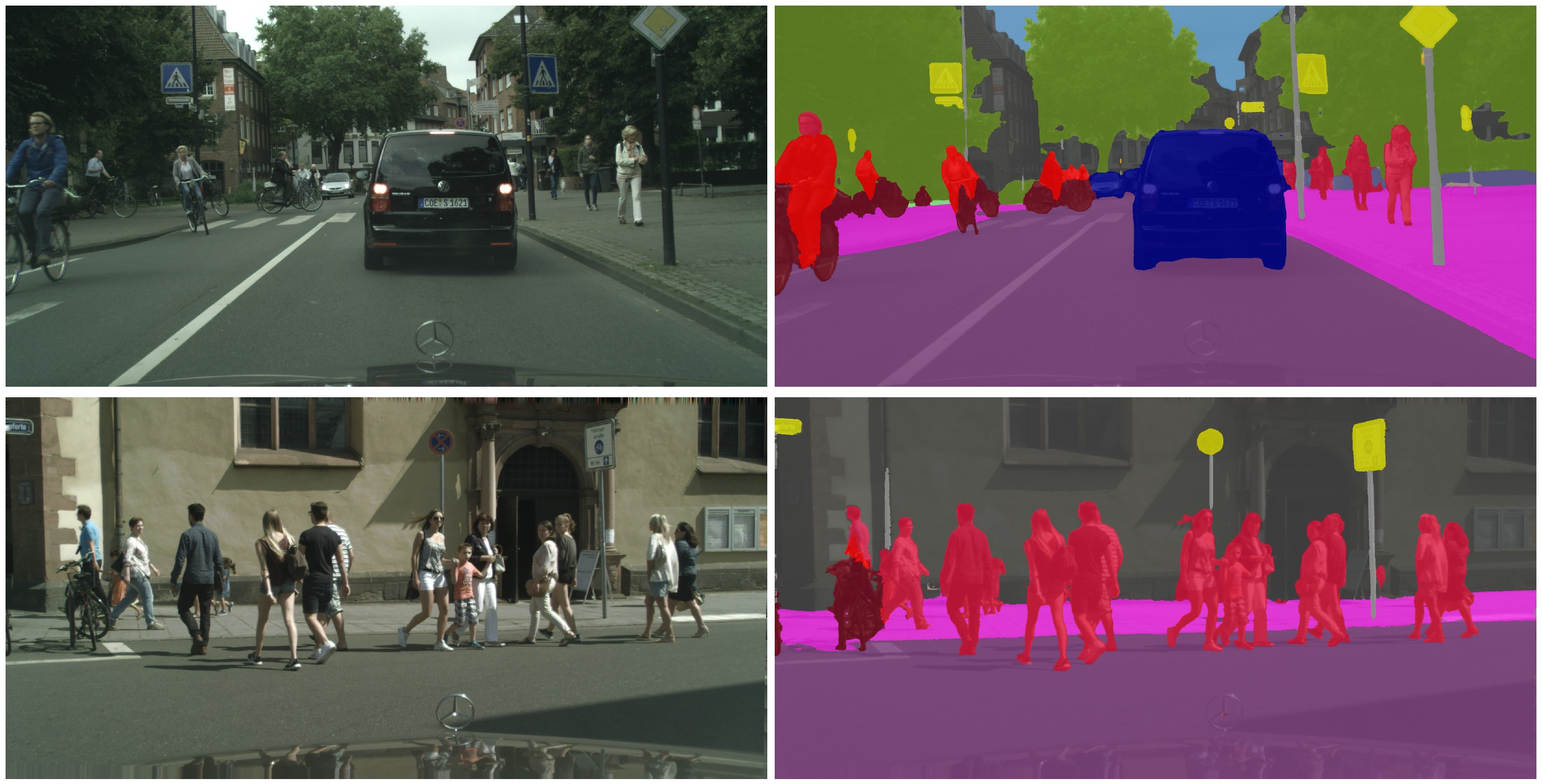

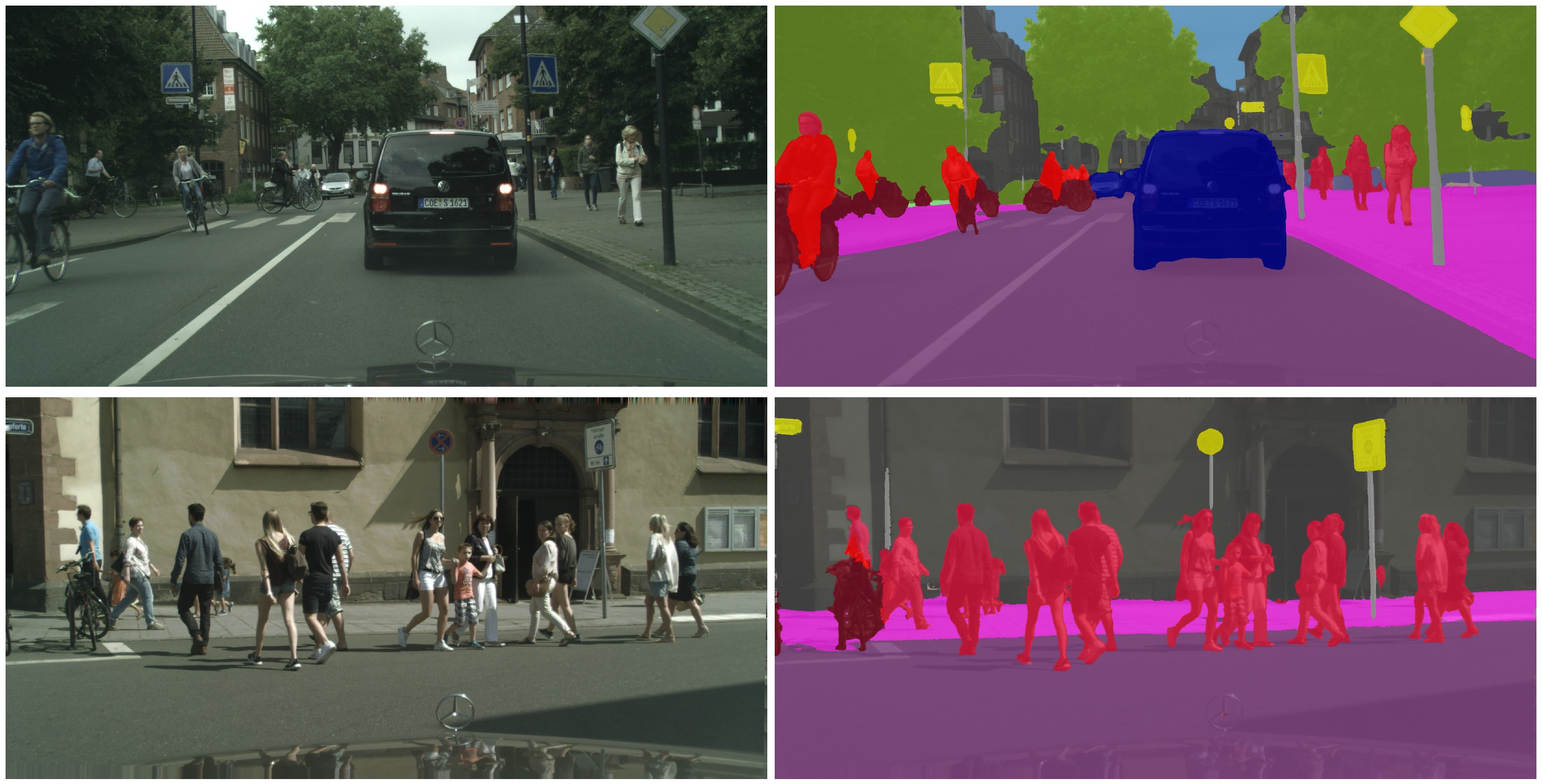

Trend 2 for XDL

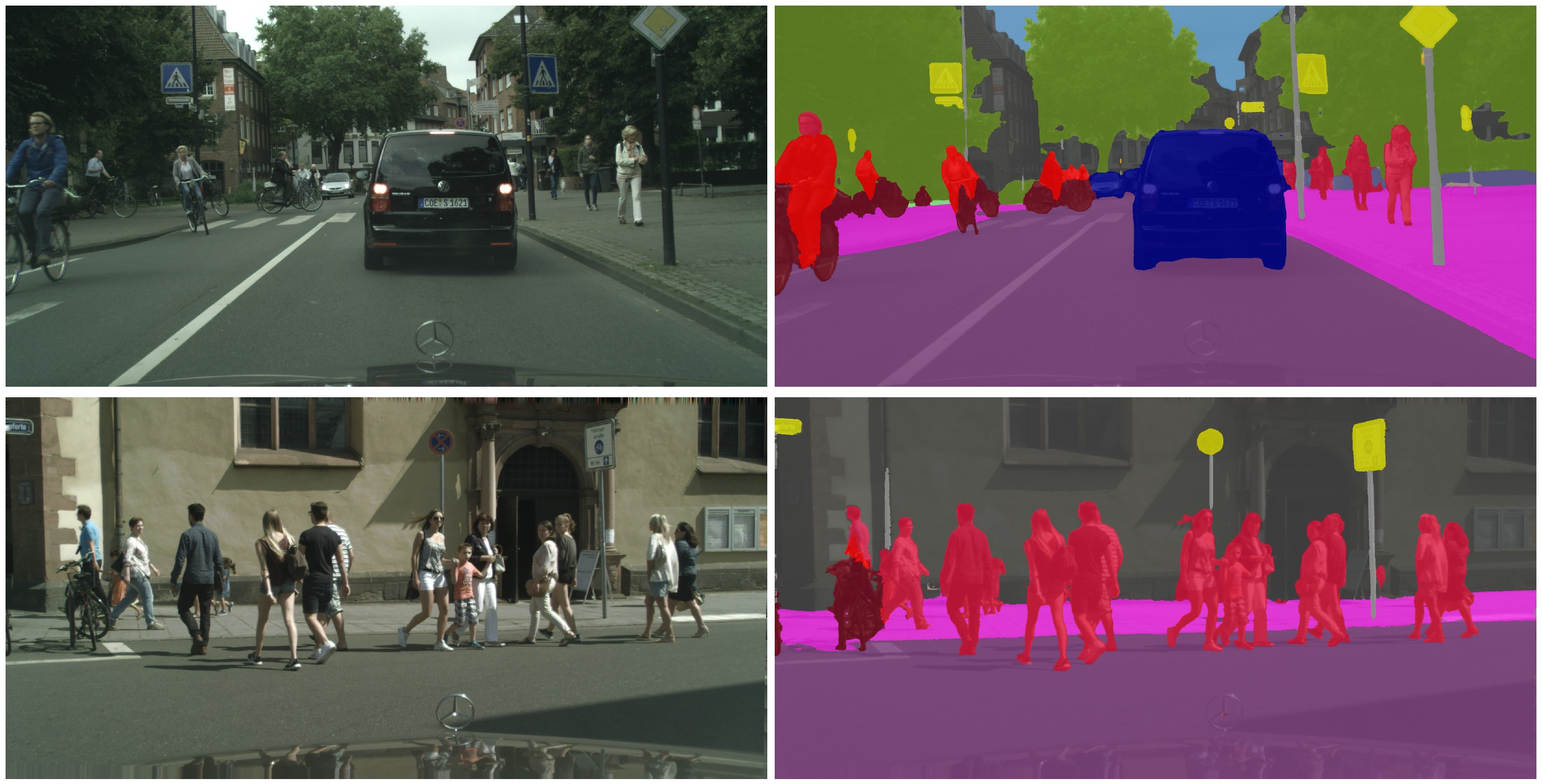

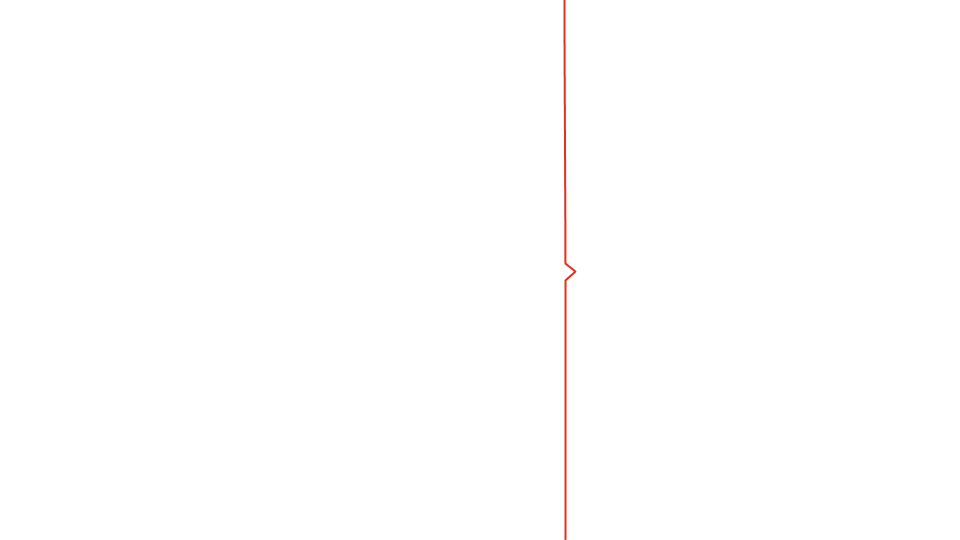

Object-oriented learning instead of end-to-end learning.

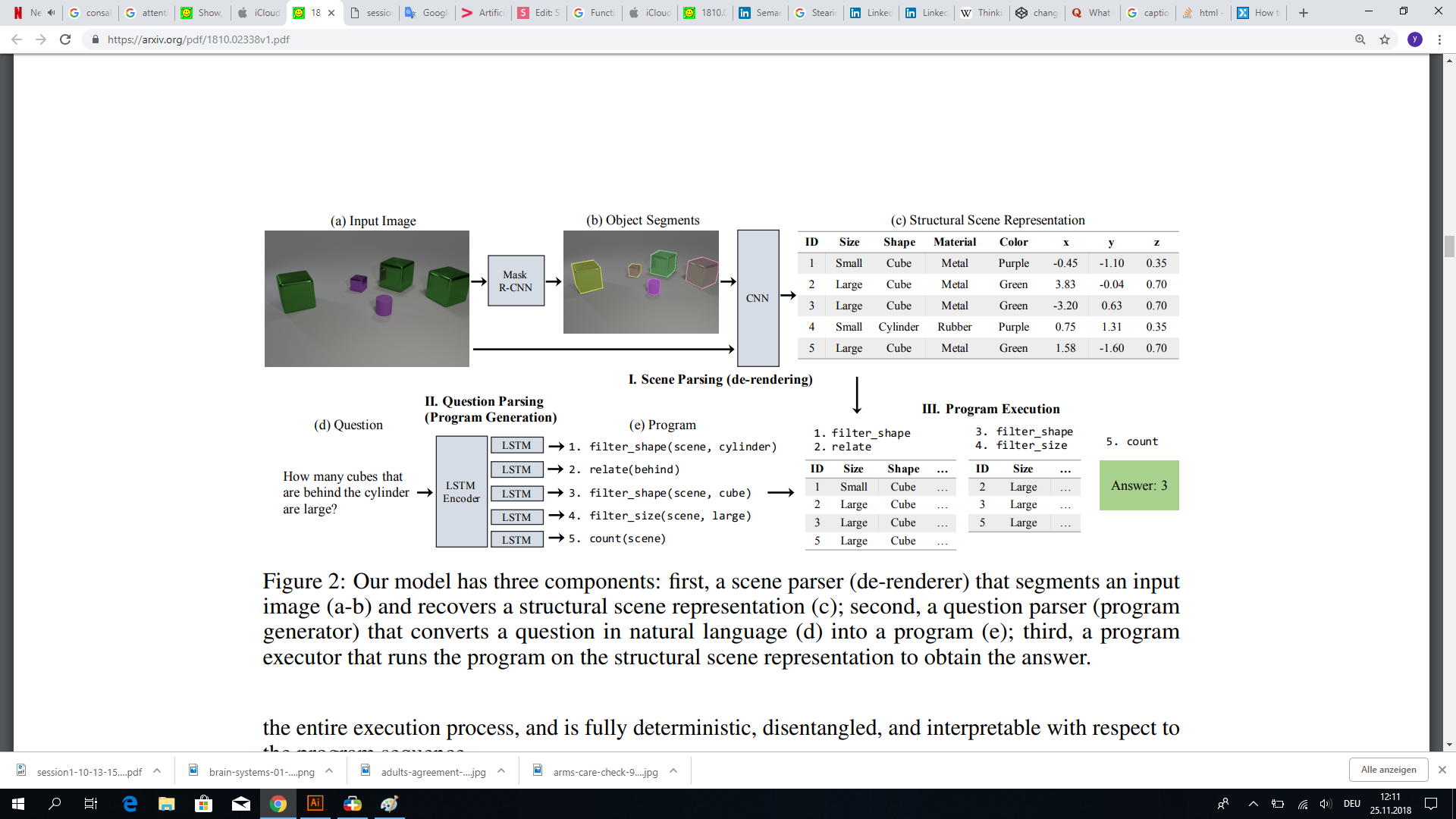

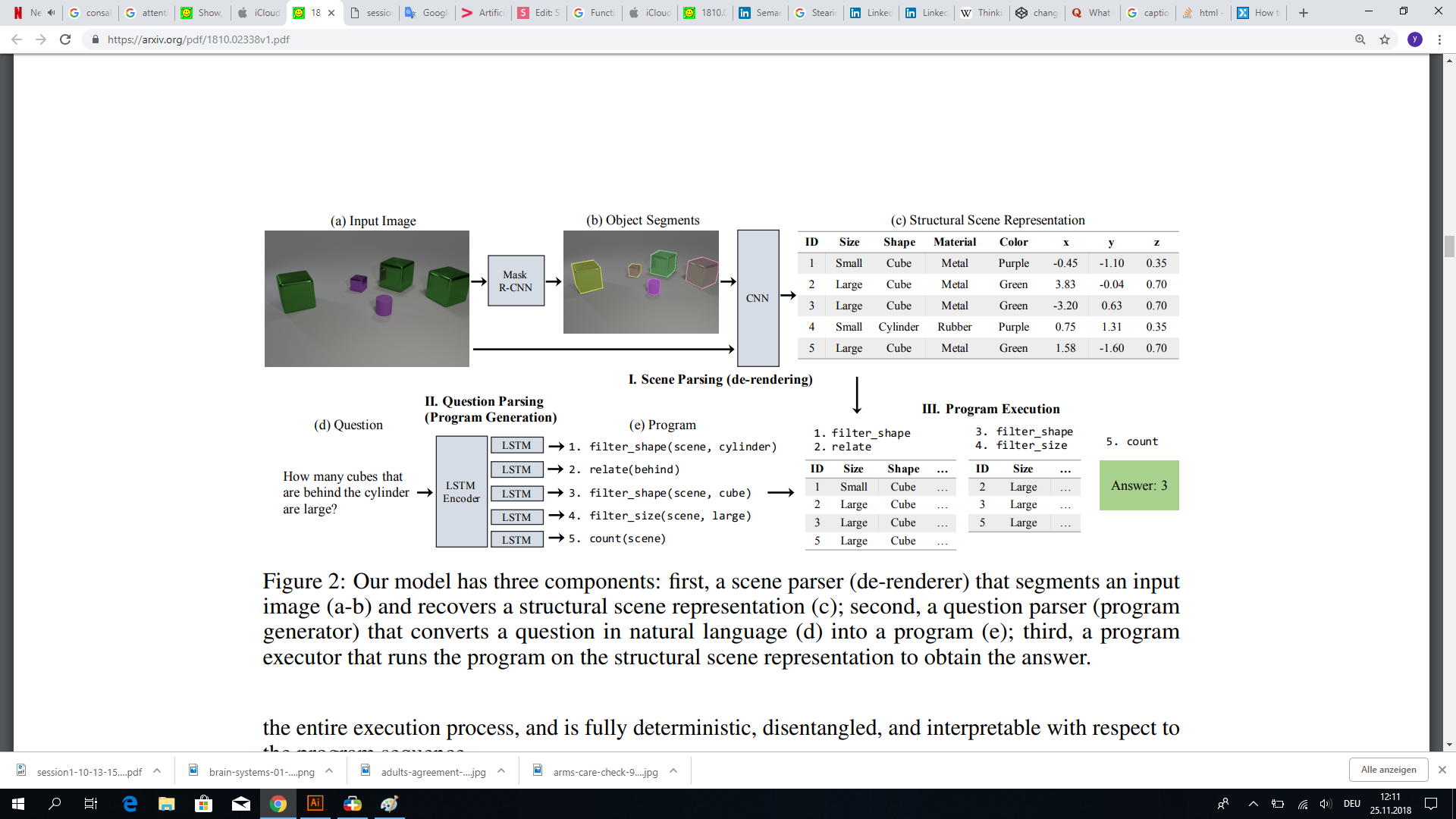

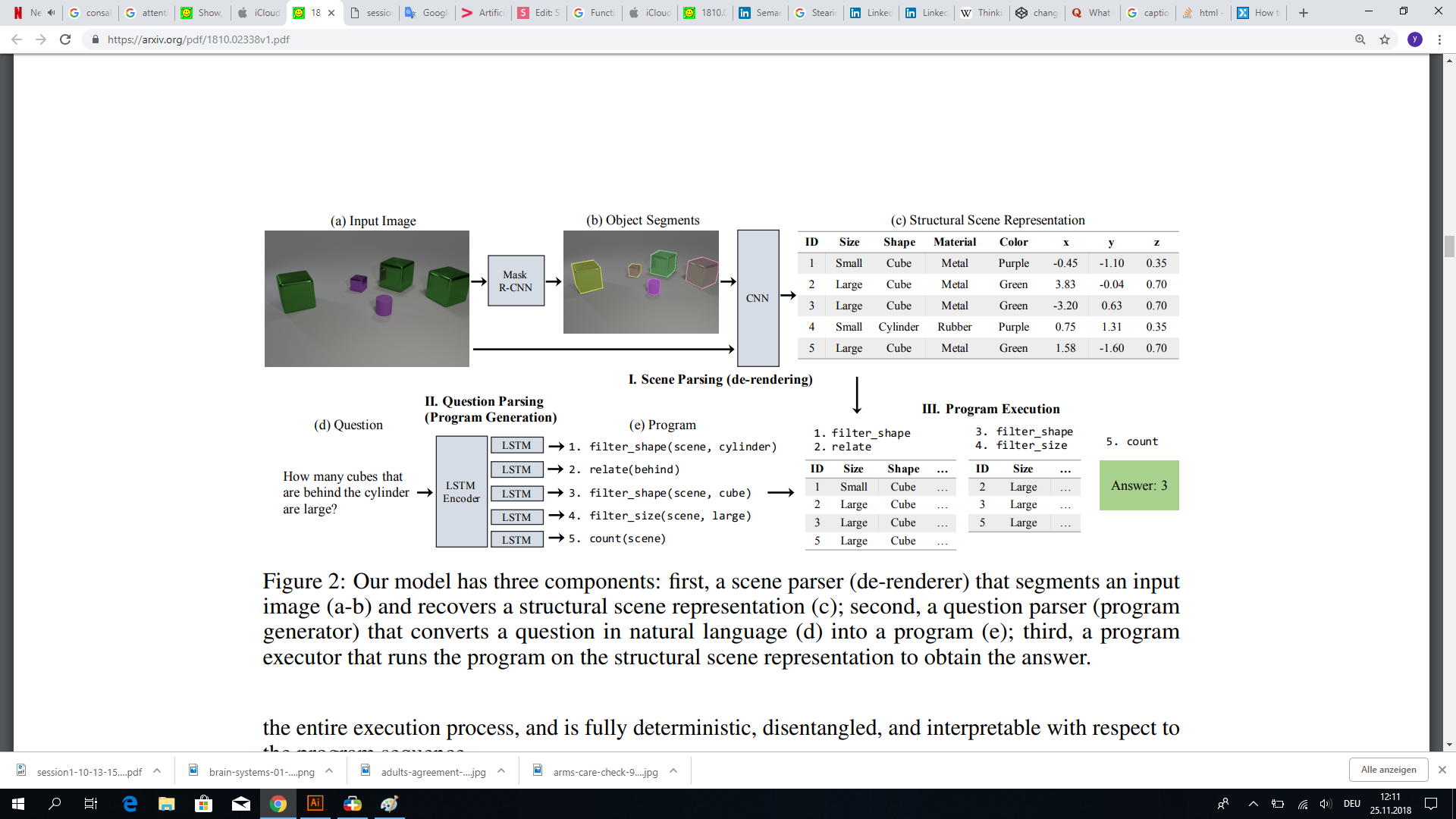

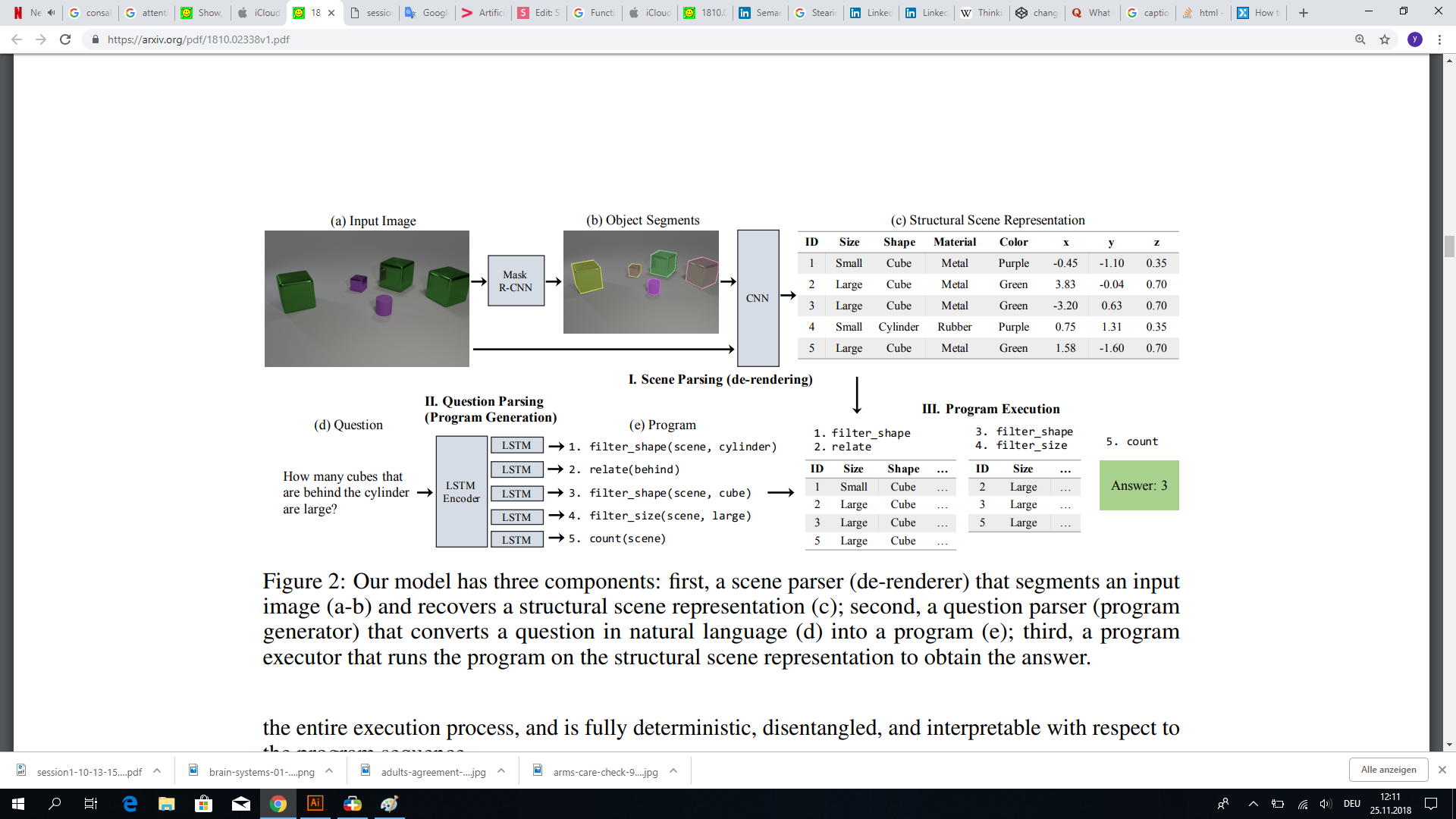

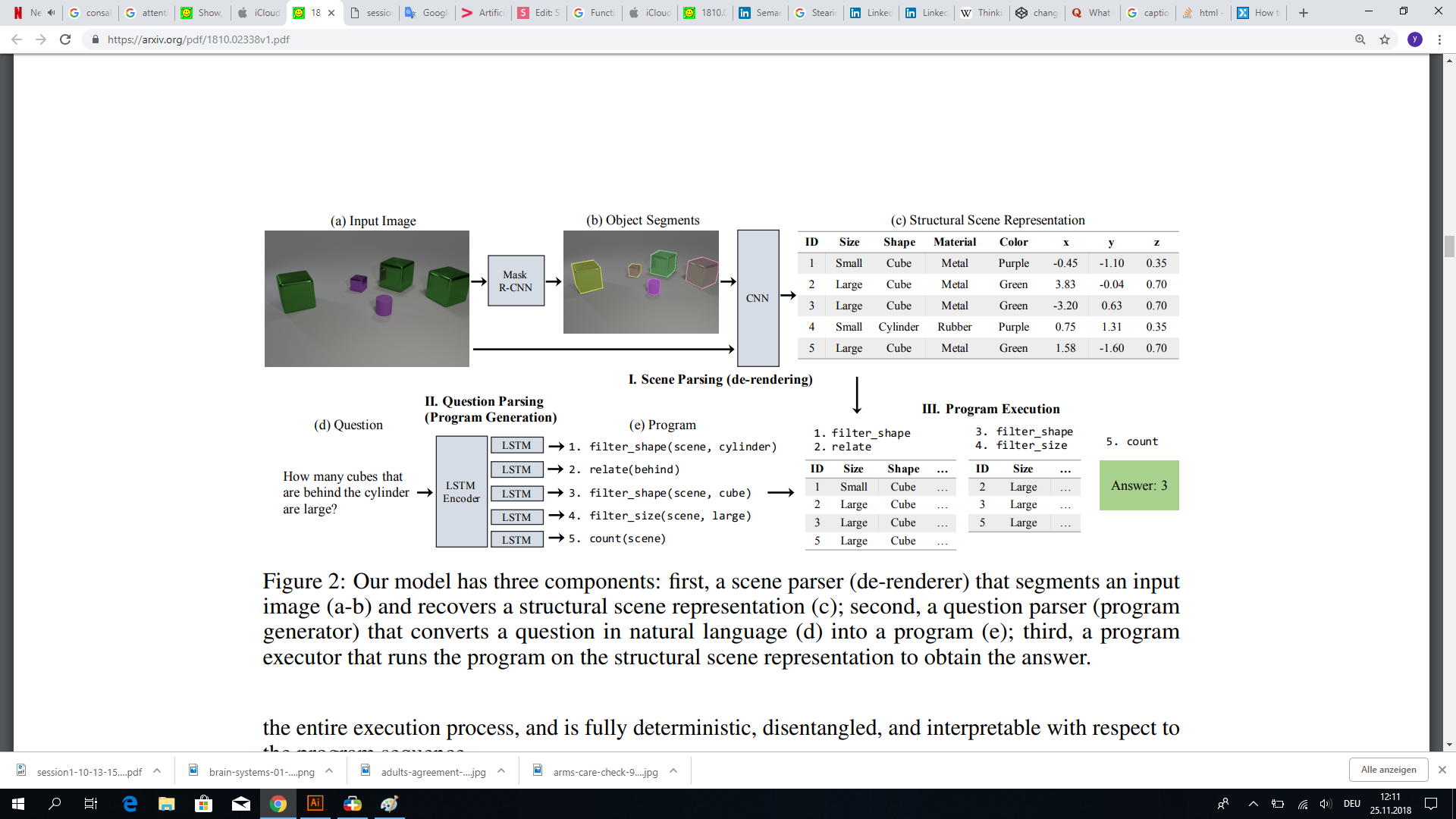

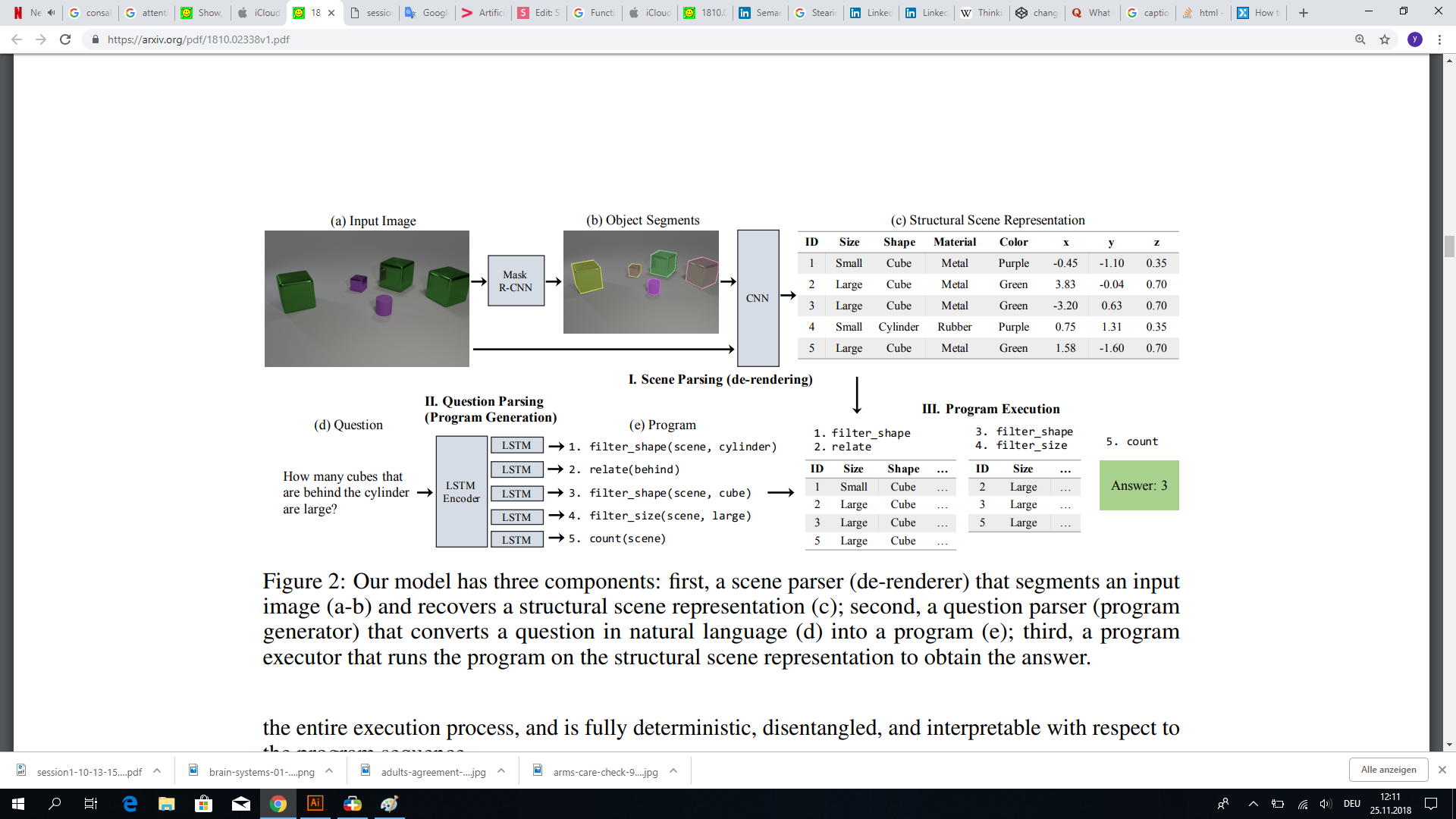

source: arXiv:1810.02338: Neural-Symbolic VQA: Disentangling Reasoning from Vision and Language Understanding

Neural symbolic and disentangled reasoning

Visible and readable

Mask segmentation

De-rendering

Program execution

Question parsing

program generation
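The program execution step can be illustrated with a tiny symbolic executor over a structured scene. This is a sketch, not the DSL of the cited paper: the `Object` and `Step` types and the operation names are assumptions made for illustration, and the scene and program are written by hand where the real pipeline would produce them by de-rendering and question parsing.

```haskell
-- Hypothetical structured scene, as a de-rendering step might produce it.
data Object = Object { shape :: String, colour :: String }
  deriving (Eq, Show)

-- Hypothetical program steps, as a question parser might produce them.
data Step = FilterColour String | FilterShape String
  deriving (Show)

-- Execute the filter steps in order over the scene.
runFilters :: [Step] -> [Object] -> [Object]
runFilters steps scene = foldl apply scene steps
  where
    apply objs (FilterColour col) = filter ((== col) . colour) objs
    apply objs (FilterShape sh)   = filter ((== sh) . shape) objs

-- "How many red cubes are there?" becomes: filter red, filter cube, count.
countMatching :: [Step] -> [Object] -> Int
countMatching steps = length . runFilters steps

main :: IO ()
main = do
  let scene = [Object "cube" "red", Object "sphere" "red", Object "cube" "blue"]
  print (runFilters [FilterColour "red"] scene)
  print (countMatching [FilterColour "red", FilterShape "cube"] scene)
```

Because each step is an ordinary function on a readable scene, every intermediate result can be printed and checked, which is the sense in which the reasoning is visible and readable.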

Neural symbolic and disentangled reasoning

Disentangled, distributed or semantic representation

Program generation

Consciousness priors

"When you 'get an idea,' or 'solve a problem' ... you create what we shall call a K-line. ... When that K-line is later 'activated', it reactivates ... mental agencies, creating a partial mental state resembling the original."

A framework for representing knowledge

Representation

Program generation

Consciousness priors / attention

Thinking fast

Thinking slow

Learning slow

Learning fast

Explaining hard

Explaining easy

Tax-VAT data


Program generation using Hebbian Theory

"Any two cells or systems of cells that are repeatedly active at the same time will tend to become 'associated', so that activity in one facilitates activity in the other."

Figure: activity of Neuron A and Neuron B over time.

Hebbian Theory


Program generation using Hebbian Theory

"When one cell repeatedly assists in firing another, the axon of the first cell develops synaptic knobs (or enlarges them if they already exist) in contact with the soma of the second cell."

- If Neuron A is active and connected to Neuron B, then Neuron A will put Neuron B in a predicted state

- If the prediction is correct (Neuron B is observed in an active state), then reward/increase the connection between them

- If the prediction is wrong (Neuron B is not observed in an active state), then weaken/decrease the connection between them
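The three rules above can be sketched as two small functions. The sketch makes assumptions the slide does not state: a connection is a single number in [0, 1], "connected" means a strength above zero, and the step size of 0.1 is arbitrary. The names `CellState`, `predict` and `update` are hypothetical.

```haskell
-- Cell states named on the slides: inactive, predictive, active.
data CellState = Inactive | Predictive | Active
  deriving (Eq, Show)

-- Connection strength from Neuron A to Neuron B (assumed to lie in [0, 1]).
type Weight = Double

-- Assumed step size for strengthening or weakening a connection.
stepSize :: Weight
stepSize = 0.1

-- Rule 1: an active Neuron A with a connection to B puts B in a predicted state.
predict :: CellState -> Weight -> CellState
predict Active w | w > 0 = Predictive
predict _      _         = Inactive

-- Rules 2 and 3: compare the prediction with whether B is then observed active.
-- Correct prediction strengthens, wrong prediction weakens, no prediction changes nothing.
update :: CellState -> Bool -> Weight -> Weight
update Predictive observedActive w
  | observedActive = min 1 (w + stepSize)
  | otherwise      = max 0 (w - stepSize)
update _ _ w = w

main :: IO ()
main = do
  let w0        = 0.5
      predicted = predict Active w0
  print predicted
  print (update predicted True w0 > w0)
  print (update predicted False w0 < w0)
```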

Figure: activity of Neuron A and Neuron B over time.

Program generation using Hebbian Theory

- Find the optimal program path using the REINFORCE algorithm

- The state represents the graph and the cell states (active, predictive and inactive)

- Actions are binary (connect or disconnect two programs)

- Reward the winning path and weaken the losing paths
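The last bullet can be illustrated on a toy graph. Note what this sketch is not: it is a plain additive reward/weaken step, not the REINFORCE policy-gradient update, and it ignores cell states and connect/disconnect actions. The representation of the graph as a list of weighted edges, the step of 0.1, and all function names are assumptions for illustration.

```haskell
type Program = String
type Edge    = (Program, Program)
type Graph   = [(Edge, Double)]   -- connection strength per edge

-- Consecutive pairs of a path are the edges it uses.
edgesOf :: [Program] -> [Edge]
edgesOf path = zip path (drop 1 path)

-- Add delta to the strength of every listed edge that exists in the graph.
adjust :: Double -> [Edge] -> Graph -> Graph
adjust delta es = map bump
  where
    bump (e, w)
      | e `elem` es = (e, w + delta)
      | otherwise   = (e, w)

-- Reward the winning path and weaken the losing paths (assumed step of 0.1).
-- An edge shared by the winner and a loser receives both adjustments.
rewardWinner :: [Program] -> [[Program]] -> Graph -> Graph
rewardWinner winner losers g =
  foldr (adjust (-0.1) . edgesOf) (adjust 0.1 (edgesOf winner) g) losers

main :: IO ()
main = do
  let g = [ (("Input 1", "Program 1"), 0.5)
          , (("Program 1", "Output 1"), 0.5)
          , (("Input 1", "Program 2"), 0.5)
          , (("Program 2", "Output 1"), 0.5) ]
      g' = rewardWinner ["Input 1", "Program 1", "Output 1"]
                        [["Input 1", "Program 2", "Output 1"]] g
  mapM_ print g'
```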

Hebbian Reinforce Theory

Figure (repeated over several slides): a graph connecting Input 1 through Program 1 to Program 9 to Output 1 and Output 2.

Function Composition


modular

interpretable

parallelizable

Computational Graph Network

fully deterministic (after graph generation)

[ not ( A || C ) || ( C && not ( B && E ) ) , f7 ( f1 ( A ) ) , not ( f8 ( f7 ( f1 ( A ) ) ) ) , not ( A || B ) || implication( C, D ) , ... ]
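Expressions like those in the list above are ordinary pure functions once the graph is fixed. The sketch below encodes the first and fourth expression; the `Sample` record and the `evaluate` helper are hypothetical, and `implication` is assumed to be material implication. The node functions `f1`, `f7` and `f8` are not defined on the slide, so the expressions that use them are left out.

```haskell
-- Inputs A..E of one sample; the record itself is a hypothetical container.
data Sample = Sample { a, b, c, d, e :: Bool }

-- Assumed meaning of "implication": material implication.
implication :: Bool -> Bool -> Bool
implication p q = not p || q

-- First and fourth expression from the list.
expr1, expr4 :: Sample -> Bool
expr1 s = not (a s || c s) || (c s && not (b s && e s))
expr4 s = not (a s || b s) || implication (c s) (d s)

-- A generated graph is then just a list of pure functions applied to a sample.
evaluate :: [Sample -> Bool] -> Sample -> [Bool]
evaluate exprs s = map ($ s) exprs

main :: IO ()
main = print (evaluate [expr1, expr4] (Sample False True True False True))
```

Since every entry is a pure function of the sample, the same input always yields the same outputs, and the entries can be evaluated independently of one another.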

--List of Parameters for Node Function f (x, y):

[ 0, 1, 2 ]

--Parameter Permutation:

[ (0,1), (1,2), (2,3), (3,2), (2,1), (1,0) ]

Combinatorial Explosion


--List of Parameters for Node Function f (x, y):

[ 0, 1, 2 ]

--Parameter Permutation:

[ (0,1), (1,2), (2,3), (3,2), (2,1), (1,0) ]

--Parameter Permutation with commutative function f (x, y):

[ (0,1), (1,2), (2,3) ]

(using ingenuity instead of computational power)
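The saving from commutativity can be counted directly. The sketch below assumes that the candidate arguments of a binary node function are all pairs of distinct parameters, which is an interpretation of the slide rather than something it states; the pairs it enumerates are generated from the parameter list `[0, 1, 2]` and are not meant to reproduce the exact tuples printed above. The function names are hypothetical.

```haskell
-- Ordered pairs of distinct parameters: what a non-commutative f (x, y) must try.
orderedPairs :: [Int] -> [(Int, Int)]
orderedPairs ps = [ (x, y) | x <- ps, y <- ps, x /= y ]

-- Unordered pairs: enough when f (x, y) == f (y, x).
unorderedPairs :: [Int] -> [(Int, Int)]
unorderedPairs ps = [ (x, y) | x <- ps, y <- ps, x < y ]

main :: IO ()
main = do
  print (length (orderedPairs [0, 1, 2]))    -- 6
  print (length (unorderedPairs [0, 1, 2]))  -- 3
```

For n parameters this is n(n-1) candidates against n(n-1)/2, so a commutative node function halves the search at every node.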

Commutativity as Complexity Barrier

Haskell

    -- funcList = previous node function list
    -- newFunc  = node function
    -- inp      = sample input
    (.-) :: [[a] -> [b]] -> ([[b]] -> [a] -> [c]) -> [a] -> [c]
    (.-) funcList newFunc inp = newFunc (map ($ inp) funcList) inp
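A short usage sketch for the operator above: it feeds the sample input to every previous node function, then hands all their outputs, plus the raw input, to the new node. The operator is repeated so the block compiles on its own; the node functions `negateAll`, `keepAll` and `orNode` are made up for illustration.

```haskell
-- The operator from the slide, repeated so that this block compiles on its own.
(.-) :: [[a] -> [b]] -> ([[b]] -> [a] -> [c]) -> [a] -> [c]
(.-) funcList newFunc inp = newFunc (map ($ inp) funcList) inp

-- Two hypothetical previous node functions over a Boolean sample.
negateAll, keepAll :: [Bool] -> [Bool]
negateAll = map not
keepAll   = id

-- Hypothetical new node: element-wise OR over all previous outputs.
-- It receives the raw input as well but ignores it here.
orNode :: [[Bool]] -> [Bool] -> [Bool]
orNode outs _ = foldr1 (zipWith (||)) outs

main :: IO ()
main = print (([negateAll, keepAll] .- orNode) [True, False])
```

The result of `funcList .- newFunc` is again a function from a sample to a list, so composed nodes can be placed back into a function list for the next layer when the element types line up.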

~Curry-Howard-Lambek Isomorphism

a proof is a program, and the formula it proves is the type for the program
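A few standard instances make the correspondence concrete: reading `->` as implication and pairs as conjunction, each total function below is a proof of the proposition its type spells out. This is a textbook illustration rather than anything specific to the talk, and it ignores non-terminating programs, which inhabit every Haskell type and so prove nothing.

```haskell
-- Modus ponens: from a proof of (a implies b) and a proof of a, obtain a proof of b.
modusPonens :: (a -> b) -> a -> b
modusPonens f x = f x

-- Conjunction is commutative: (a and b) implies (b and a).
andComm :: (a, b) -> (b, a)
andComm (x, y) = (y, x)

-- Implication is transitive: the proof is function composition.
implTrans :: (a -> b) -> (b -> c) -> a -> c
implTrans f g = g . f

main :: IO ()
main = do
  print (andComm (1 :: Int, "proof"))
  print (modusPonens (+ 1) (41 :: Int))
  print (implTrans show length (12345 :: Int))
```

If a definition type-checks against the signature, the compiler has verified the proof, which is the link to formal software verification.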

Formal Software Verification

- Accuracy is not enough to trust an AI

- Accuracy vs Explainability is not a trade-off

Look for solutions in weird places:

- Try Functional Programming & Category Theory

Trends in XAI:

- closing the gap between Symbolic AI and DL

- training DL representation models not decision models

- using Object-Oriented-Learning

- using Computational Graph Networks

Conclusion

+ Don't let your robot read legal texts ;)

Thank you for your attention

Interested in more AI projects?

- Visit us at the PwC booth (ground floor)

- Join our workshop: "Open the Blackbox using local interpretable model agnostic explanation"

(5.12. 14:30-16:30, Room LAB 1.0)

Fabian Schneider

PoC-Engineer & Researcher

schneider.fabian@pwc.com

Ahmad Haj Mosa

AI Researcher

ahmad.haj.mosa@pwc.com