Stevens Institute of Technology

Amaury Gutiérrez

Natural disasters often damage infrastructure. To measure the economic damage, on-site visits are performed. These studies are difficult and expensive because of the adverse conditions often found after such an event.

In this study we investigate the use of drone imagery and computer vision techniques to automate this task. The purpose is to offer a low-cost alternative to on-site visits, since drones can fly into the scene with ease.

Damage Assessment

Problem

We want to develop a method that lets us automatically classify the level of damage in pictures taken in areas where natural disasters have occurred. These pictures might come from different sources, such as photographs taken on site, drone imagery, or satellite imagery.

State of the Art

Currently, it is possible to classify the elements of an image using models trained on a large labeled dataset known as ImageNet. It was collected as part of an effort to train deep learning algorithms.


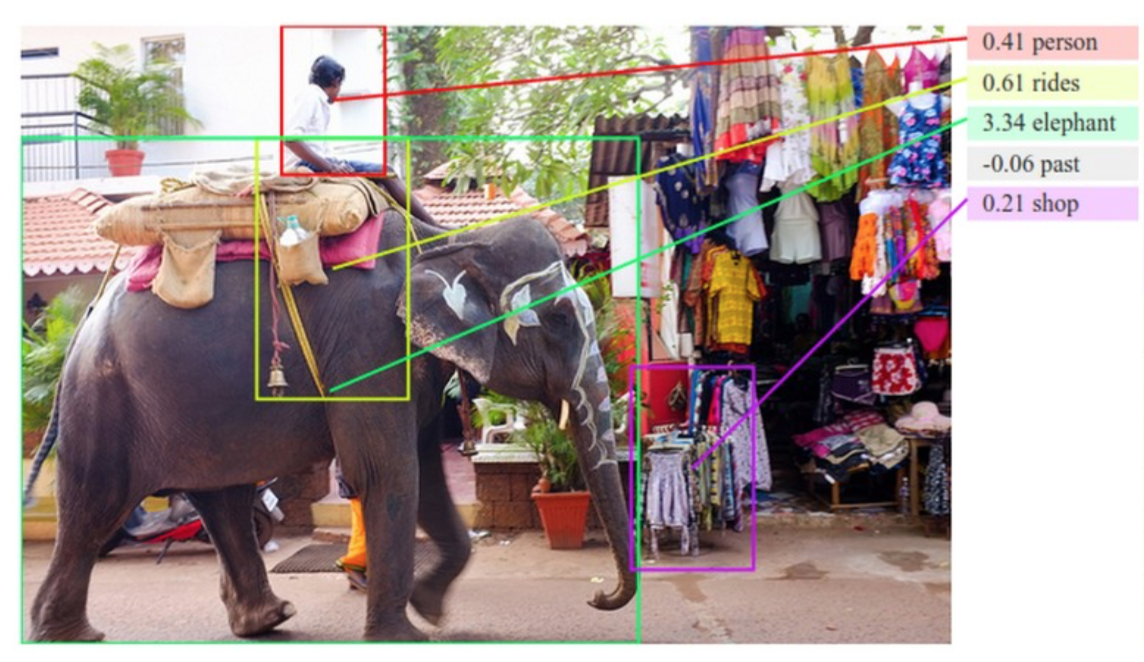

Another goal that has been attained is generating semantic descriptions of a picture solely by inspecting its pixels.

Deep Learning

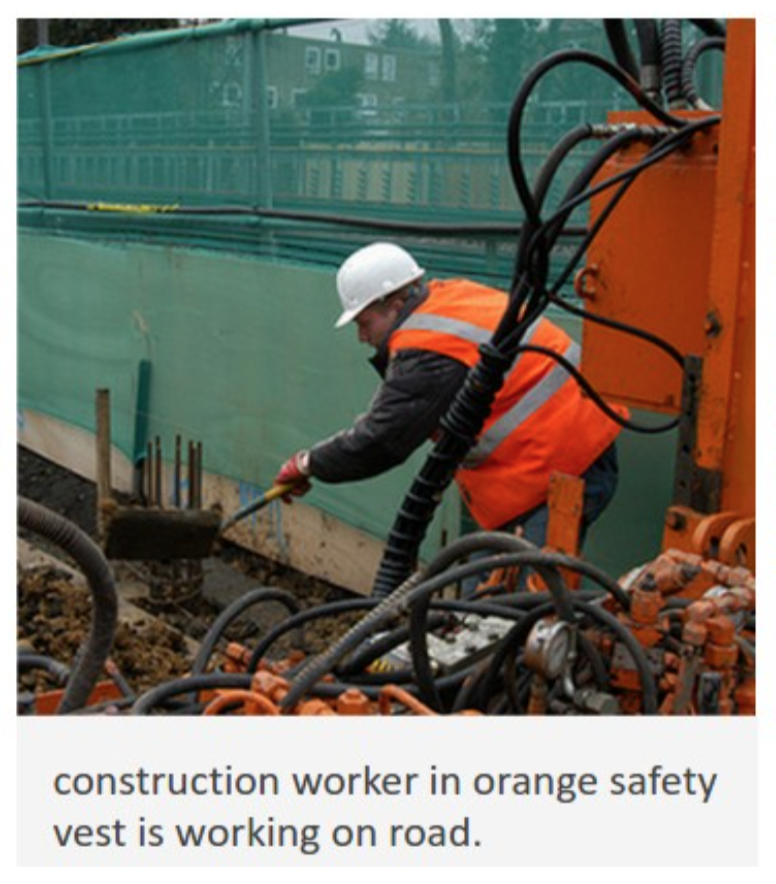

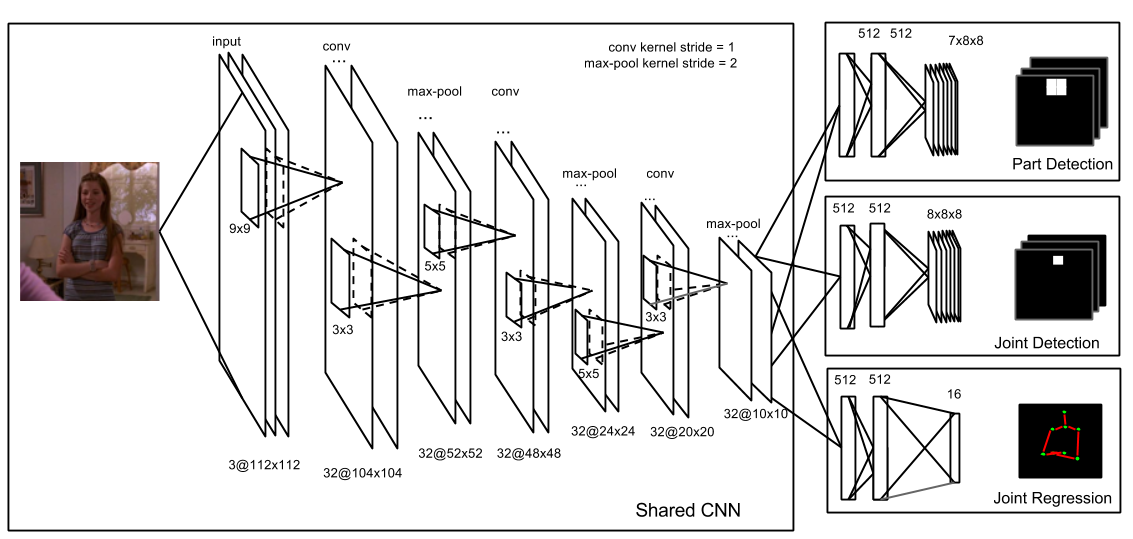

These results are obtained with a widely used technique, deep convolutional neural networks (CNNs), which learn their own features from the pixels to detect and classify elements in images. Using these methods, we hope to detect levels of damage in places where a natural disaster has occurred. The downside is that these techniques need a lot of fuel to work: we need several thousand images that are already tagged with the classification of interest.
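To make "learning features" concrete, here is a minimal plain-Python sketch of the core operation inside a convolutional layer. It assumes a tiny grayscale patch stored as nested lists and a hand-picked 3x3 vertical-edge kernel; in a real CNN the kernel weights are learned from the tagged images rather than chosen by hand, and a framework runs the same arithmetic on the GPU.

```python
from typing import List

Matrix = List[List[float]]

def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D cross-correlation, the operation CNN libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kernel-sized window whose top-left corner is (i, j).
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu(feature_map: Matrix) -> Matrix:
    """Non-linearity applied after the convolution: negative responses become 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]

# Toy 3x6 patch: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(3)]

# Hand-picked vertical-edge detector; a trained network would learn such weights.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(conv2d(image, kernel))
print(feature_map)
```

The resulting feature map is non-zero only in the windows that straddle the edge; stacking many such learned filters, layer after layer, is what lets a CNN build up detectors for more complex patterns such as collapsed roofs or debris.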


Our goal is to produce a pipeline capable of performing the damage assessment automatically.
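One possible shape for such a pipeline is sketched below, assuming a large scene image is cut into fixed-size square tiles, each tile is classified independently, and the per-tile labels are counted into a summary. The tile size of 224 pixels and the `classify_tile` callback are hypothetical placeholders; in practice the callback would wrap the trained network.

```python
from collections import Counter
from typing import Callable, Iterator, Tuple

def tile_grid(width_px: int, height_px: int, tile_px: int) -> Iterator[Tuple[int, int]]:
    """Yield the top-left (x, y) corner of each non-overlapping tile.

    Partial tiles at the right and bottom edges are skipped for simplicity.
    """
    for y in range(0, height_px - tile_px + 1, tile_px):
        for x in range(0, width_px - tile_px + 1, tile_px):
            yield x, y

def assess_scene(width_px: int, height_px: int, tile_px: int,
                 classify_tile: Callable[[int, int, int], str]) -> Counter:
    """Classify every tile and count how many tiles fall in each damage class."""
    counts: Counter = Counter()
    for x, y in tile_grid(width_px, height_px, tile_px):
        counts[classify_tile(x, y, tile_px)] += 1
    return counts

if __name__ == "__main__":
    # Hypothetical stand-in for the trained network: marks the right side as damaged.
    def fake_classifier(x: int, y: int, size: int) -> str:
        return "damaged" if x >= 448 else "intact"

    print(assess_scene(1000, 600, 224, fake_classifier))
```

Keeping the classifier behind a callback means the tiling and reporting steps can be tested before any model exists.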


| Framework | Advantages | Disadvantages |
|---|---|---|
| Keras | Fast prototyping | Few pretrained networks |
| Caffe | Well tuned for images | Adding new layers must be done in CUDA C |
| TensorFlow | Prior experience with it | Slow compared to other options |
| cuDNN | No intermediate layers | Very hard to write |

TensorFlow

- Easy GPU use via nvidia-docker

- Many examples that tackle similar problems

- Available pre-trained models

- Extensive documentation

Transfer Learning

- Transfer learning is the process of taking a pretrained neural network and tuning it to a specific problem.

- Training a deep neural network on a huge dataset often takes weeks and many dedicated GPUs.

- This technique allows us to fine-tune a trained network in a fraction of the time with a much smaller dataset.

- GPUs are not strictly necessary if hardware is a constraint.
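The idea can be illustrated with a deliberately tiny plain-Python sketch. The "pretrained network" here is a hypothetical hand-written feature extractor whose parameters are frozen, and only a new logistic-regression output layer is trained on four toy patches. With a real model (for example one of the networks in `tf.keras.applications`) the frozen part would be the convolutional layers and the new head a small dense layer; this is the "off-the-shelf features" flavour of transfer learning, whereas full fine-tuning would also update some pretrained layers.

```python
import math

def frozen_features(image):
    """Hypothetical stand-in for a pretrained CNN used as a frozen feature extractor.

    Nothing here is trained. It returns two hand-written features:
    mean intensity and mean absolute horizontal gradient (a crude texture measure).
    """
    pixels = [v for row in image for v in row]
    grads = [abs(row[j + 1] - row[j]) for row in image for j in range(len(row) - 1)]
    return [sum(pixels) / len(pixels), sum(grads) / len(grads)]

def train_head(features, labels, lr=0.5, epochs=500):
    """Fit only a new logistic-regression output layer with stochastic gradient descent."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log-loss with respect to z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    """Return 1 (damaged) when the head's score is positive, else 0 (intact)."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

# Toy dataset: smooth patches are intact (0), noisy patches are damaged (1).
images = [
    [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]],
    [[0.6, 0.6, 0.6], [0.6, 0.6, 0.6]],
    [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0]],
    [[0.2, 0.9, 0.1], [0.8, 0.1, 0.9]],
]
labels = [0, 0, 1, 1]

# Features are computed once; the extractor itself is never updated.
features = [frozen_features(img) for img in images]
w, b = train_head(features, labels)
print([predict(w, b, f) for f in features])
```

Because only the small head is trained, this step runs comfortably on a CPU, which is why GPUs become optional in this setting.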

Information Sources


As discussed before, we need a large source of images, preferably already tagged and classified, to use as training data. We hope to get access to a large image database provided by the Red Cross. We have also established contact with CENAPRED (National Center for Disaster Prevention) and FONDEN (Natural Disaster Fund Trust).


A technique to collect news from online newspapers was explored. I used the online version of the Mexican newspaper El Excelsior. The articles were then filtered by keywords related to our subject. The scraped news can be found here:

https://amaurs.com/stevens/
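The keyword filtering step can be sketched as follows, assuming the articles have already been downloaded as plain-text strings. The keyword list is a hypothetical Spanish example, since the keywords actually used are not listed; accents are stripped and matching is done on whole words, so "Daños" matches "danos" while mere substrings do not.

```python
import re
import unicodedata
from typing import Iterable, List

# Hypothetical keyword list (accent-free, lower case); the real list is not given.
KEYWORDS = {"huracan", "sismo", "inundacion", "danos", "desastre"}

def normalize(text: str) -> str:
    """Lower-case and strip accents so that 'Daños' becomes 'danos'."""
    decomposed = unicodedata.normalize("NFKD", text.lower())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

def is_relevant(article: str, keywords=KEYWORDS) -> bool:
    """True if any keyword appears as a whole word in the article."""
    words = set(re.findall(r"\w+", normalize(article)))
    return not words.isdisjoint(keywords)

def filter_news(articles: Iterable[str], keywords=KEYWORDS) -> List[str]:
    """Keep only the articles that mention at least one keyword."""
    return [a for a in articles if is_relevant(a, keywords)]
```

For example, given the headlines "El huracán causó daños en la costa" and "Resultados del fútbol", only the first one is kept.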

Research Methodology


- Gather the data.

- Exploratory data analysis and visual inspection of the images.

- Formulate a hypothesis from the insights drawn from the data.

- Query the data to support or refute our claims, and summarize our findings.

Gather the Data


Marco from the Red Cross wrote back to us. We agreed to meet via Skype to talk about our project.

Exploratory Analysis


Once we get the images and the data, we should explore them to see whether we can make sense of them. Visually inspecting the images will give us an idea of the results we can expect from applying the proposed algorithms.

Hypothesis


After the exploratory analysis we will be in a good position to formulate a hypothesis, which our research will then support or refute. After formulating the hypothesis, we should return to the data and follow a systematic, scientifically valid process.

Report Findings


We need to describe the process used to generate the results. This description must be detailed enough for the results to be reproducible.

Best Case

The information available from public sources is sufficient.

Students can help manually tag the images.

Worst Case

Images can be extracted from the web using a crawler

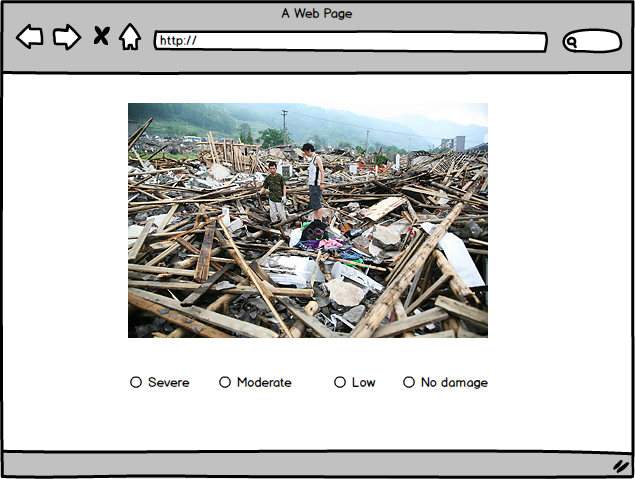

Images can be tagged using crowdsourcing or services like Amazon Mechanical Turk.
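A minimal sketch of the image-extraction part of such a crawler, using only Python's standard library: it parses a page that has already been fetched and collects the absolute URLs of its `<img>` tags. The example page and URLs are hypothetical; fetching pages (e.g. with `urllib.request.urlopen`), following links, and downloading the images themselves are left out.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin

class ImageLinkParser(HTMLParser):
    """Collects the absolute URL of every <img src=...> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.image_urls: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:  # skip <img> tags without a source
                # Resolve relative paths such as "/photos/a.jpg" against the page URL.
                self.image_urls.append(urljoin(self.base_url, src))

def extract_image_urls(html: str, base_url: str) -> List[str]:
    """Return the image URLs found in an HTML document, in page order."""
    parser = ImageLinkParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.image_urls
```

The collected URLs could then be downloaded and queued for tagging.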

Scenarios

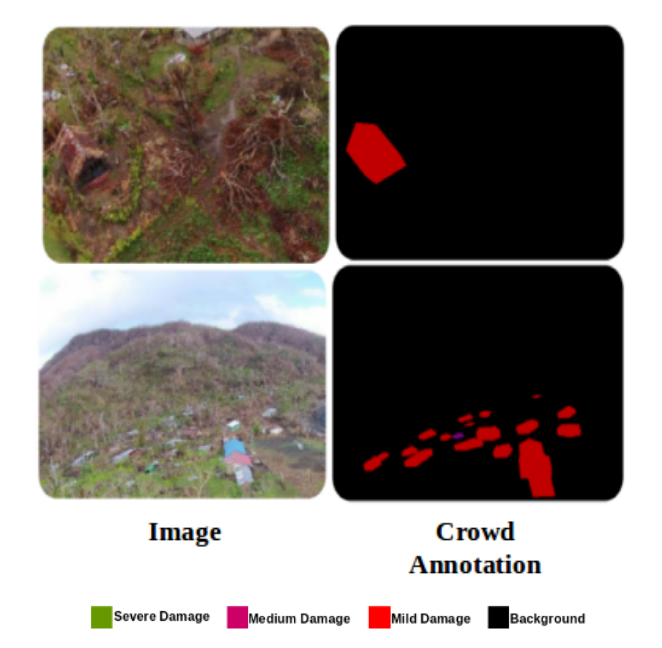

If the image database is not already tagged, we propose to tag the images manually through crowdsourcing. To do so, we propose a web application that shows the images and lets the user choose a classification.
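The backend of such a tagging application could record each volunteer's vote and accept a label for an image only when enough people agree. The sketch below assumes a hypothetical three-level damage scale and majority-vote aggregation with illustrative thresholds (at least 3 votes and at least 60% agreement); neither the label set nor the aggregation rule is specified in the proposal.

```python
from collections import Counter
from typing import Dict, Optional

# Hypothetical damage scale; the real label set would come from the project.
LABELS = ("no damage", "partial damage", "destroyed")

class TagStore:
    """Collects crowdsourced labels and derives a consensus label per image."""

    def __init__(self) -> None:
        self.votes: Dict[str, Counter] = {}

    def add_vote(self, image_id: str, label: str) -> None:
        """Record one user's choice for an image, rejecting unknown labels."""
        if label not in LABELS:
            raise ValueError(f"unknown label: {label!r}")
        self.votes.setdefault(image_id, Counter())[label] += 1

    def consensus(self, image_id: str, min_votes: int = 3,
                  min_agreement: float = 0.6) -> Optional[str]:
        """Return the majority label, or None if votes are too few or too split."""
        counts = self.votes.get(image_id, Counter())
        total = sum(counts.values())
        if total < min_votes:
            return None
        label, top = counts.most_common(1)[0]
        return label if top / total >= min_agreement else None
```

Images whose consensus is still `None` can simply be shown to more volunteers until they reach agreement.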


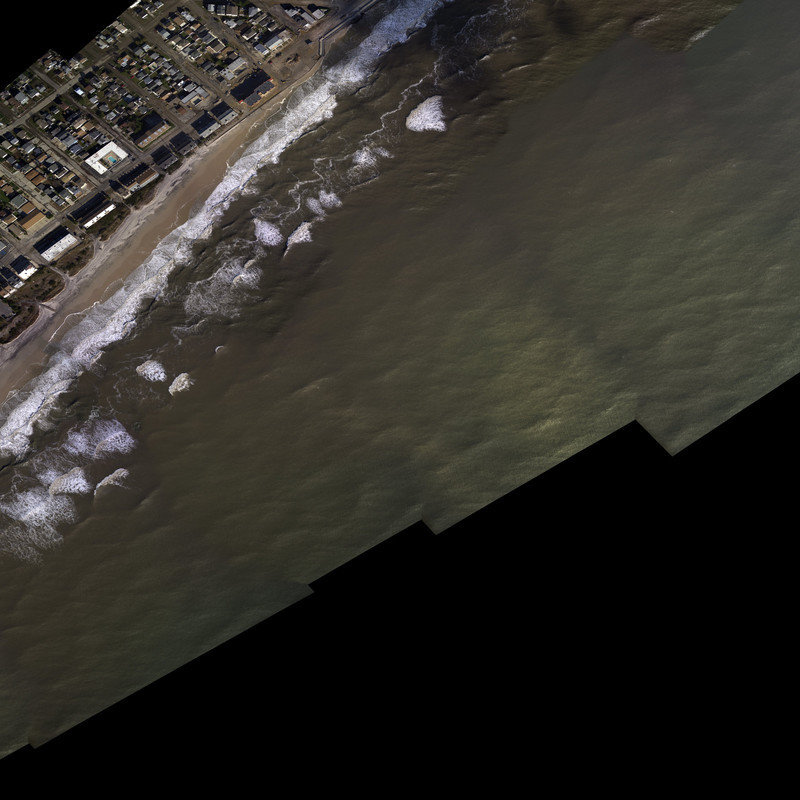

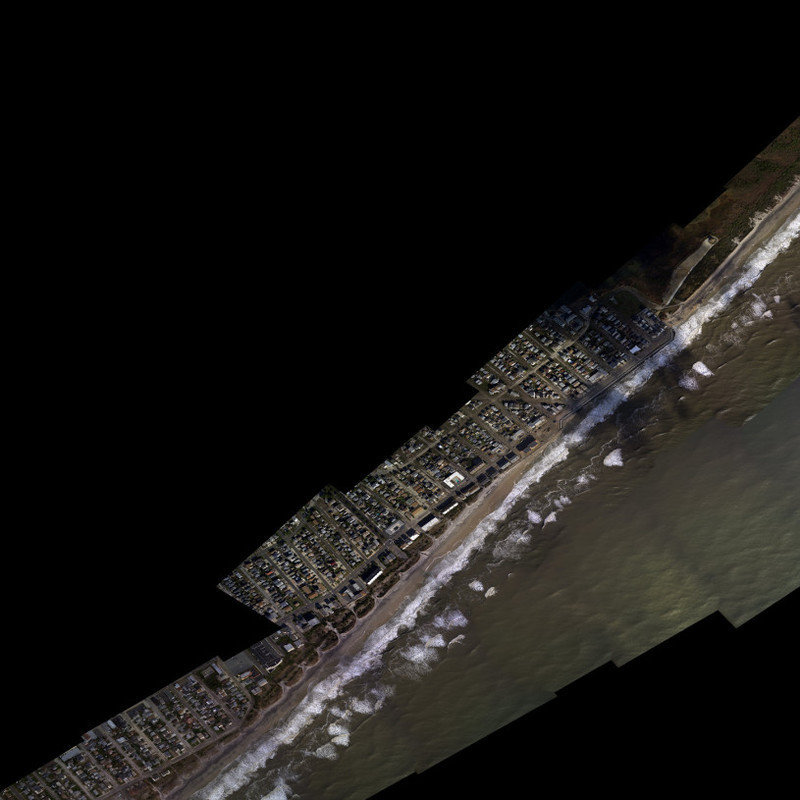

Hurricane Sandy

http://storms.ngs.noaa.gov/storms/sandy/

Pixel resolution: 35 cm
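To get a feel for what a 35 cm pixel resolution means, the ground area covered by an image is its pixel dimensions multiplied by the ground size of one pixel. The sketch below uses a hypothetical 1000 x 1000 pixel tile and a hypothetical 10 m wide house as examples: such a tile covers 350 m x 350 m (0.1225 km²), and the house spans roughly 29 pixels.

```python
def ground_footprint(width_px: int, height_px: int, gsd_m: float = 0.35):
    """Return (width_m, height_m, area_m2) covered by an image with ground sample distance gsd_m."""
    width_m = width_px * gsd_m
    height_m = height_px * gsd_m
    return width_m, height_m, width_m * height_m

def pixels_across(object_size_m: float, gsd_m: float = 0.35) -> float:
    """How many pixels an object of the given size spans at this resolution."""
    return object_size_m / gsd_m

w, h, area = ground_footprint(1000, 1000)
print(f"{w:.0f} m x {h:.0f} m = {area / 1e6:.4f} km^2")
print(f"a 10 m house spans about {pixels_across(10):.0f} pixels")
```

At this resolution a single building occupies only a few hundred pixels, which bounds how much structural detail a classifier can be expected to see.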

Manual Tagging

References

N. Attari, F. Ofli, M. Awad, J. Lucas, S. Chawla. Nazr-CNN: Object Detection and Fine-Grained Classification in Crowdsourced UAV Images. Qatar Computing Research Institute, Hamad bin Khalifa University.

https://www.mapbox.com/blog/jakarta-flooding-map/


S. W. Myint, M. Yuan, R. S. Cerveny, C. Giri. Categorizing natural disaster damage assessment using satellite-based geospatial techniques. Natural Hazards and Earth System Sciences.

A. Karpathy, L. Fei-Fei. Deep Visual-Semantic Alignments for Generating Image Descriptions.

K. Simonyan, A. Zisserman. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv:1409.1556.


A. S. Razavian, H. Azizpour, J. Sullivan, S. Carlsson. CNN Features off-the-shelf: an Astounding Baseline for Recognition. CVAP, KTH. https://arxiv.org/pdf/1403.6382.pdf