Welcome...

I AM...

Amir Gal-or

10+ years in software development.

But first - a Dad

AT PANAYA

For 4.5 years

Some titles...

Senior SW dev

FrontEnd Tech Lead

Scale Platform Team & Tech Lead

DESTROYER OF BUILDS

About this presentation

This presentation is

a general overview

It's not a technical drill-down

If something is not clear - ask

BUT - technical questions - later

First of all...

It's a word in Thai

According to Legend...

Nobody knows what it means

Seriously...

Panaya is all about disruptive SaaS (cloud-based) solutions for the ERP lifecycle process...

?!

Example

Who here has worked with AngularJS?

You have an Angular 1.x app

You want to upgrade it to an Angular 2.0 app

What do YOU need to do for that to happen?

- Read Documentation?

- Do tutorials?

- Take deep breaths?

- Fix Code

- Compile/Lint

- Probably more

Example

Is it a simple process?

Relatively yes...

- Read Documentation?

- Do tutorials?

- Take deep breaths?

- Fix Code

- Compile

Good luck finding...

250 million lines of vanilla code!!!

Did you read my previous bullet?

Relative to what?

How do we do it?

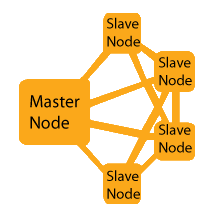

Divide and Conquer - Large Scale!

Divide into small "computational" units

Each "unit" is sent to "Computational Engine"

Coordinate

Thread

Process

Machine
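The divide-and-conquer flow above can be sketched on a single machine. This is a minimal illustration under stated assumptions, not Panaya's in-house framework. `split_into_units`, `analyze_unit` and `run` are hypothetical names. The per-unit "analysis" only counts lines that contain a keyword, and a thread pool stands in for the "Computational Engine".

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_into_units(lines: List[str], unit_size: int) -> List[List[str]]:
    """Divide: cut the input into small, independent "computational" units."""
    return [lines[i:i + unit_size] for i in range(0, len(lines), unit_size)]


def analyze_unit(unit: List[str]) -> int:
    """Hypothetical per-unit work: count lines that contain the keyword SELECT."""
    return sum(1 for line in unit if "SELECT" in line)


def run(lines: List[str], unit_size: int = 1000, workers: int = 4) -> int:
    """Send every unit to the engine (a thread pool here) and combine the partial results."""
    units = split_into_units(lines, unit_size)
    with ThreadPoolExecutor(max_workers=workers) as engine:
        return sum(engine.map(analyze_unit, units))


if __name__ == "__main__":
    code = ["SELECT * FROM orders.", "WRITE 'done'."] * 5000
    print(run(code))  # 5000
```

CPython's GIL means threads mostly help with I/O-bound units. For CPU-bound work, `concurrent.futures.ProcessPoolExecutor` offers the same `map` interface. Beyond one machine, the same split/dispatch/combine shape is what a cluster coordinator provides.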

How do we do it?

Divide and Conquer - Large Scale!

Coordinate

In Cloud - Over EC2

We have our own Framework for Elastic Cloud Computing

Written ~ 8 years ago

Today - you have

Over

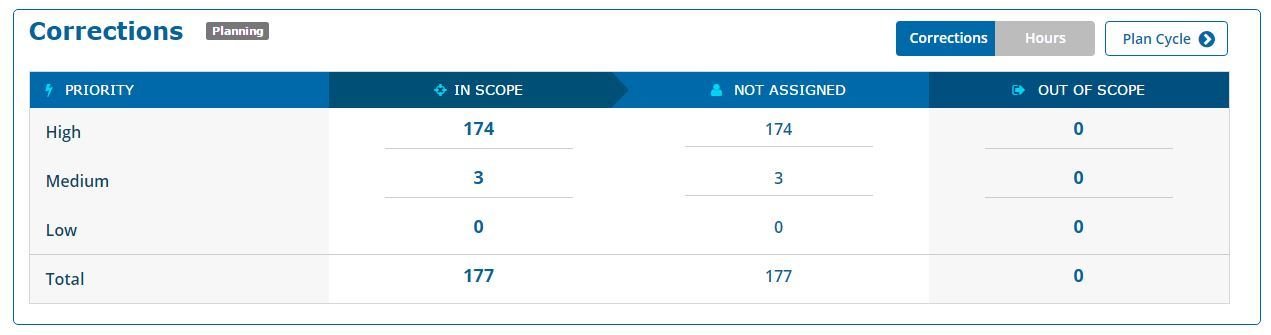

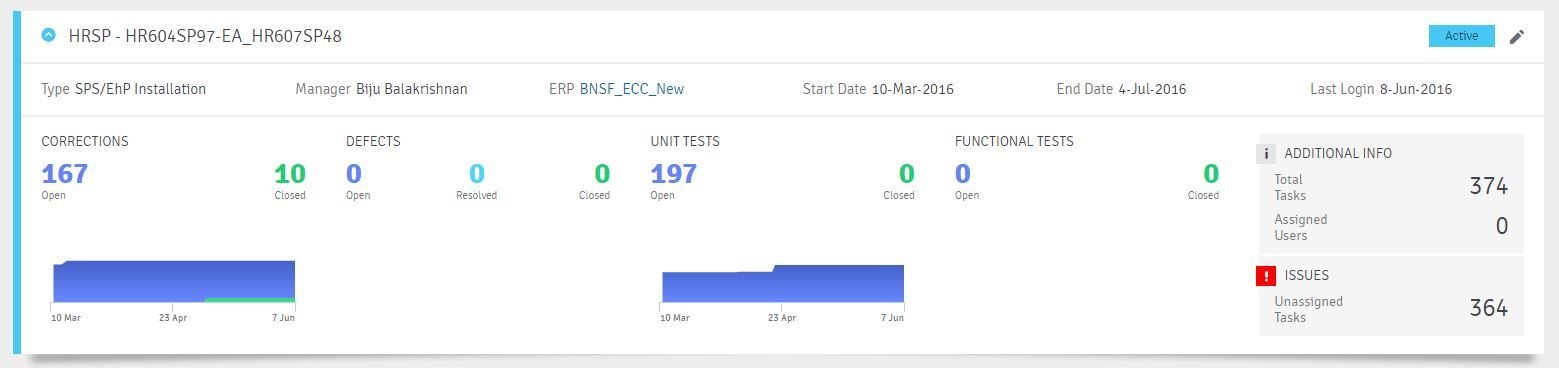

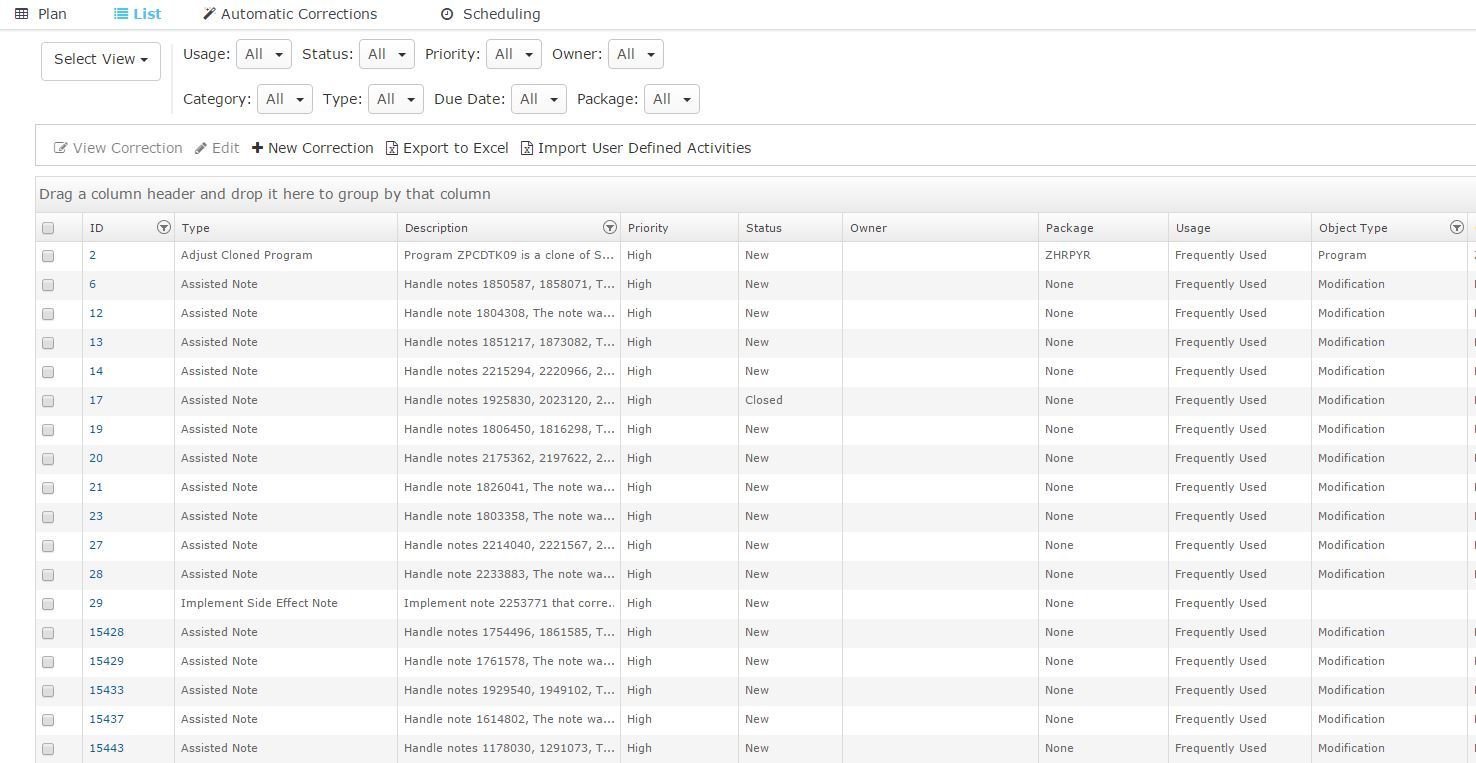

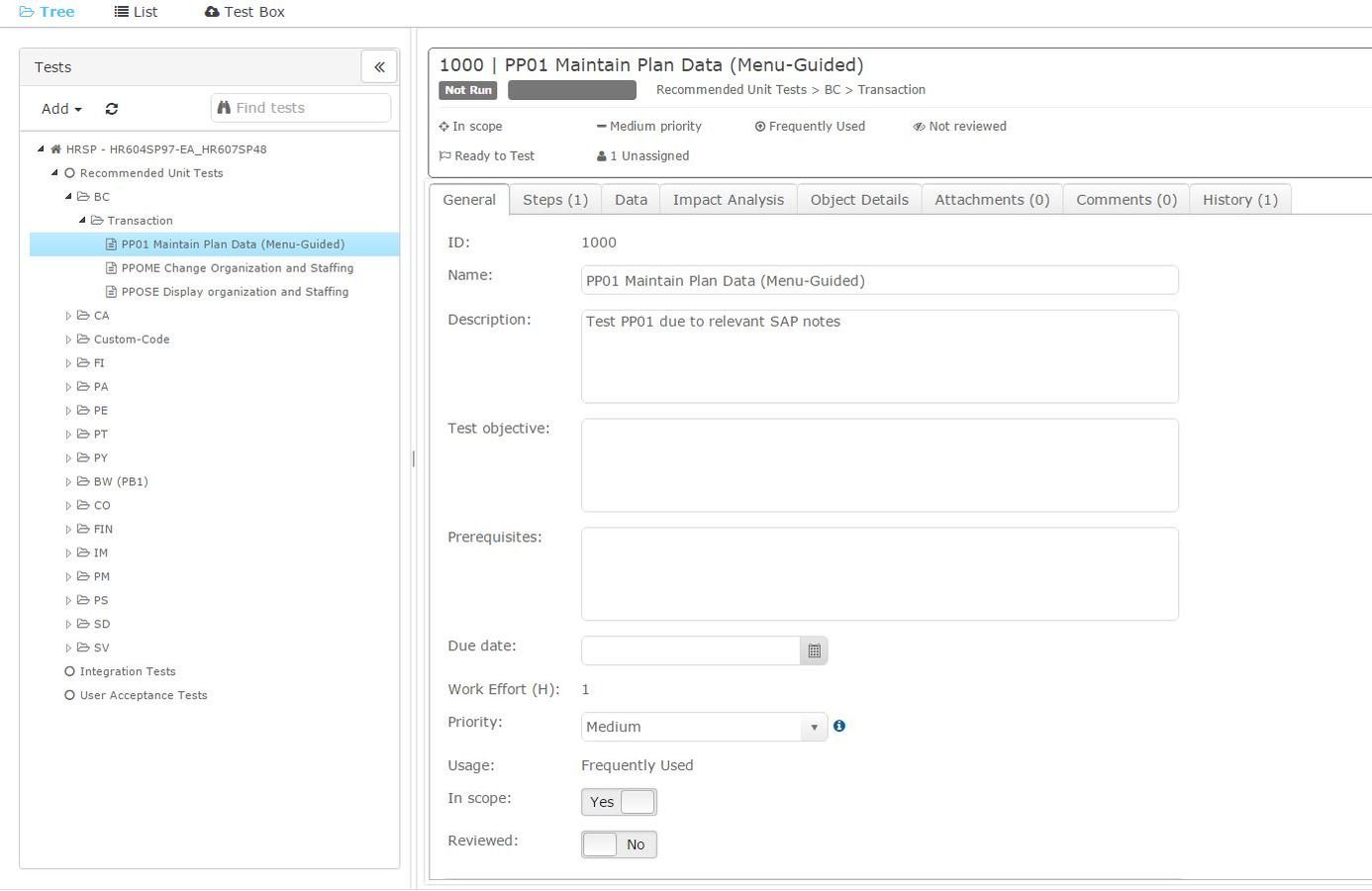

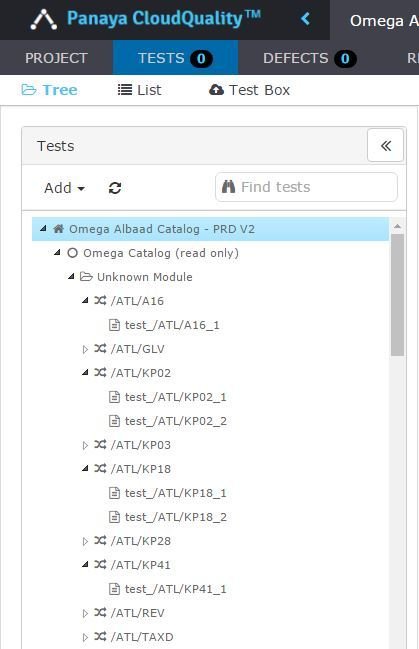

What will the customer see?

What will the customer GET?

Ability to manage such a difficult project

Scope/Assign/Dev/QA...

So PANAYA is

first and foremost - a technology company

OR

R&D Rolezzz

Technologies

And sooo many more

Development

We're Agile

I'm aware - today every company says it's AGILE

But they are all doing it differently!

Logically?! Can they all be right?

PERHAPS...

Development

We're Agile

When we started - it was more like ADHD

Development

We're Agile

Now... It's better

(with room for improvement)

We have our ceremonies

PLANNING

DEMO

RETROSPECTIVE

DAILY

BACKLOG GROOMING

But - It's actually not about the ceremonies

Development

We're Agile

It's a mindset!

more than a methodology

It's a culture

more than a way to manage

Continuous Improvement

Value to Customer

Trust

Transparency

Priority

TEAM WORK

Development

We're Agile

Visit

By Uri Nativ of Klarna

ADD

Technological Challenges

Which track?

Front-end? Big-Data?

Development

Tools

What is "Big-Data"?

Questions...

When is data considered "BIG"?

Answer:

"Big Data" is a term for data sets or flows that are so large or complex that traditional "handling" methods are inadequate

"handling" ?!

Data

Capture

Curate

Analysis

Search

Transfer

Querying

and so much more

Data is "Big Data" if you need to hire a team of smart engineers just to handle it (distribute...)

Technologies

And these are just the ones I know of...

And platforms

How do you choose?!

Intuition?

Premonition?

Experience?

We have none of those

How do you choose?!

Sit with experienced people, developers, architects

Listen

Do homework

Research

Understand your expectations & limitations

How do you choose?!

And eventually?

Listen to Amir

How do you choose?!

Seriously?

NO!!!

Understand your "Expectations"

Understand your "Limitations"

What do you need to support over the next year (or two)?

Capture "rate"

SLA

Curation "period"

Processing time

OR

Do we have the required knowledge?

Dev Support

DevOps support

Ops support

+

Troubleshooting
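Understanding the "Expectations" usually starts with back-of-the-envelope arithmetic. Here is a minimal sketch that assumes a steady capture rate and an average event size. The numbers in the usage line are placeholders, not Panaya figures. SLA, processing time and compression are not modeled.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 24 * 60 * 60


@dataclass
class Expectations:
    events_per_second: float  # expected capture "rate" (hypothetical input)
    avg_event_bytes: int      # average uncompressed event size in bytes (hypothetical input)
    retention_days: int       # curation "period"


def daily_volume_gb(exp: Expectations) -> float:
    """Raw data captured per day, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return exp.events_per_second * exp.avg_event_bytes * SECONDS_PER_DAY / 1e9


def stored_volume_tb(exp: Expectations) -> float:
    """Data kept over the whole curation period, in decimal terabytes."""
    return daily_volume_gb(exp) * exp.retention_days / 1000


if __name__ == "__main__":
    exp = Expectations(events_per_second=1000, avg_event_bytes=1000, retention_days=365)
    print(round(daily_volume_gb(exp), 1), round(stored_volume_tb(exp), 1))  # 86.4 31.5
```

With these placeholder inputs, 1,000 events per second of 1 KB each comes to 86.4 GB per day and roughly 31.5 TB over a one-year curation period. That is the kind of figure to check against each candidate's limits.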

How do you choose?!

Understand the principles of scalability & Big-Data

There are a lot of good options

Choices we make now might (and should) be invalidated in the future.

SO - you can't plan for everything - but you can try

Why?

- Product?

- Pricing?

- Better options?

Let's think about where our "BIG" is

"Event Handling"

"Processing"

"Persistence"

What did we choose?

Also...

Over

"Event Handling"

"Processing"

"Persistence"

+

Why did we choose these?

It's the devil we know ;)

S3 has become the de facto standard for scalable data curation: it is cheap, highly available, easy to use, and supported by many processing frameworks

Spark over EMR is currently one of the best contenders as a "Big Data Processing FW", and it stays that way thanks to a large community of users and feature developers relentlessly making it better

High security requirements - in all aspects.

AWS maintains security standards and has a built-in encryption and key management solution, which we're currently researching.

Why did we choose these?

But perhaps its biggest advantage over other tools is

Reduced need for DevOps, as "scaling" is handled internally

Architecture @ High-Level

Server

DB

Utils

Panaya Server

Panaya DB

Architecture @ High-Level

Server

DB

Lambda

Kinesis

Firehose

S3

encrypted

"Raw Event Handling"

Other Events

RAW

Supervised by

IAM + KMS

API Gateway

Architecture @ High-Level

Lambda

Kinesis

Firehose

S3

encrypted

API Gateway

"Front door" for applications to access data, business logic (BL), and functionality in the back end

Event-driven functions: code that runs in response to events from API Gateway

Auto-magically buffers, then "dumps" to S3 (once a buffer size in MB or a time interval in seconds is reached, whichever comes first)

It's not file storage; it's key-value storage

This is a requirement - "Key Per Customer"

IAM + KMS

Identity / Auth Management including Encrypt/Decrypt Key Management
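A small in-memory model shows why the buffering stage exists. This is a hypothetical sketch of the size-or-time idea, not Firehose's implementation. `SizeOrTimeBuffer` and its parameters are made up for illustration, and `sink` stands in for a single S3 put. The clock is injectable so the time limit can be tested without sleeping.

```python
import time
from typing import Callable, List, Optional


class SizeOrTimeBuffer:
    """Collect records; flush them as one object when a size OR age limit is hit."""

    def __init__(
        self,
        sink: Callable[[bytes], None],
        max_bytes: int,
        max_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._sink = sink                # hypothetical stand-in for an S3 put
        self._max_bytes = max_bytes      # size limit (sum of record lengths)
        self._max_seconds = max_seconds  # age limit of the oldest buffered record
        self._clock = clock
        self._records: List[bytes] = []
        self._size = 0
        self._opened_at: Optional[float] = None

    def _expired(self) -> bool:
        return (
            self._opened_at is not None
            and self._clock() - self._opened_at >= self._max_seconds
        )

    def add(self, record: bytes) -> None:
        if self._opened_at is None:
            self._opened_at = self._clock()
        self._records.append(record)
        self._size += len(record)
        if self._size >= self._max_bytes or self._expired():
            self.flush()

    def tick(self) -> None:
        """Call periodically so a quiet stream still flushes on time."""
        if self._expired():
            self.flush()

    def flush(self) -> None:
        if not self._records:
            return
        # One larger object instead of many tiny ones: S3 objects can't be appended to.
        self._sink(b"\n".join(self._records))
        self._records = []
        self._size = 0
        self._opened_at = None
```

With a managed service this logic is configured rather than written. The sketch only shows why a key-value store without "Append" pushes you toward buffering.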

Again - why these?

Lambda

Kinesis

Firehose

S3

encrypted

API Gateway

"Single Point Of Entrance" - lets us avoid binding the "monitor" code to AWS (via the SDK). Also good practice for controlling traffic and "Versioning"

Handling of incoming data for use cases such as "License Validation" && / || "BlackList", as well as JSON validity and more.

As S3 is key-value storage (and not a file system), there's no support for operations like "Append"; to generate a large file, a buffer is required

It's a Key-Value storage - sensitive data should be encrypted

This is a requirement - "Key Per Customer"

IAM + KMS

Identity / Auth Management including Encrypt/Decrypt Key Management
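The Lambda checks listed above (JSON validity, "BlackList", "License Validation") could look roughly like this. This is a hypothetical sketch that assumes API Gateway's Lambda proxy integration: the request body arrives as a string in `event["body"]`, and the function returns `statusCode` and `body`. `LICENSED_CUSTOMERS`, `BLACKLISTED_SOURCES` and the payload field names are invented placeholders. Forwarding accepted events to Kinesis Firehose is left out.

```python
import json
from typing import Any, Dict

# Hypothetical in-memory lookups; a real deployment would read these from a store.
LICENSED_CUSTOMERS = {"cust-001", "cust-002"}
BLACKLISTED_SOURCES = {"10.0.0.66"}
REQUIRED_FIELDS = ("customer_id", "source", "event_type")


def _response(status: int, message: str) -> Dict[str, Any]:
    return {"statusCode": status, "body": json.dumps({"message": message})}


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    # 1. JSON validity: the body must parse and carry the expected fields.
    try:
        payload = json.loads(event.get("body") or "")
    except json.JSONDecodeError:
        return _response(400, "body is not valid JSON")
    if not isinstance(payload, dict) or any(f not in payload for f in REQUIRED_FIELDS):
        return _response(400, "missing required fields")
    # 2. BlackList: reject events from blocked sources.
    if payload["source"] in BLACKLISTED_SOURCES:
        return _response(403, "source is blacklisted")
    # 3. License validation: only licensed customers may send events.
    if payload["customer_id"] not in LICENSED_CUSTOMERS:
        return _response(403, "no valid license")
    # 4. Accepted: this is where the event would be handed to the buffering stage.
    return _response(202, "accepted")
```

Keeping these checks behind the gateway means the "monitor" clients only speak HTTP and never need the AWS SDK.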

Architecture @ High-Level

S3

encrypted

"Processing"

Over

"Timed Batch"

We will have 2 types

"On Demand Batch"

Prepare data for "On Demand" Batch-Processing

This is what we were waiting for - run the Main Algorithm
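Below is one reading of the two batch types, sketched under assumptions. Raw object keys are taken to look like `<customer_id>/<YYYY>/<MM>/<DD>/<HH>/<object>`, which is a hypothetical layout. The timed batch prepares the previous full hour's objects per customer. The on-demand batch runs a placeholder for the Main Algorithm, which here only counts objects. None of these names come from the actual system.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, List


def hour_prefix(ts: datetime) -> str:
    """Time part of the (hypothetical) key layout, e.g. '2017/05/14/12/'."""
    return ts.strftime("%Y/%m/%d/%H/")


def timed_batch(keys: Iterable[str], now: datetime) -> Dict[str, List[str]]:
    """Timed batch: group the keys of the last *completed* hour per customer."""
    last_hour = now.replace(minute=0, second=0, microsecond=0) - timedelta(hours=1)
    target = hour_prefix(last_hour)
    prepared: Dict[str, List[str]] = defaultdict(list)
    for key in keys:
        customer, _, rest = key.partition("/")
        if rest.startswith(target):
            prepared[customer].append(key)
    return dict(prepared)


def on_demand_batch(prepared: Dict[str, List[str]], customer: str) -> int:
    """On-demand batch: placeholder for the Main Algorithm (only counts objects)."""
    return len(prepared.get(customer, []))
```

Separating the two lets the expensive step run only when asked for, on data that a scheduled job has already organized per customer. That also keeps each customer's data (and key) isolated.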

Architecture @ High-Level

S3

encrypted

"Rollout to Panaya"

?

Consider

Scenarios still contain "sensitive" data