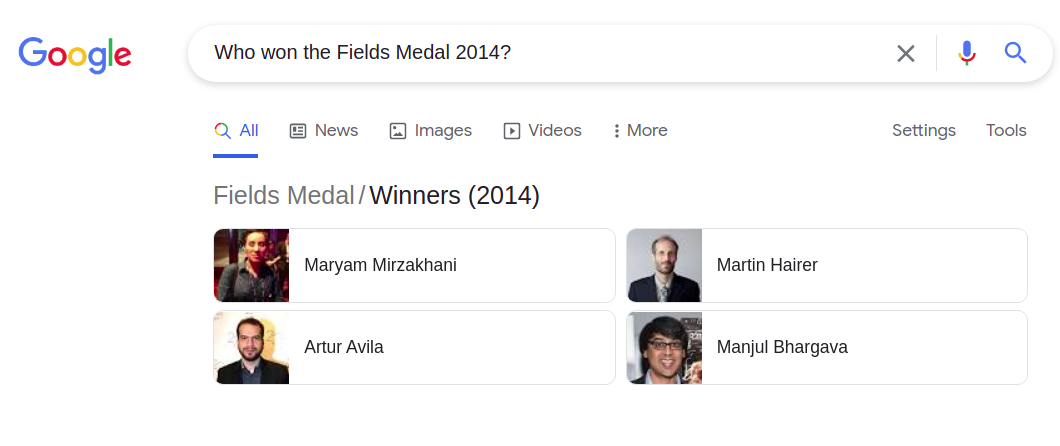

Question Answering

What is QA?

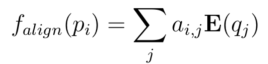

Question answering (QA) is a computer science discipline within the fields of information retrieval and natural language processing (NLP), which is concerned with building systems that automatically answer questions posed by humans in a natural language.[1]

Question Answering is the task of answering questions (typically reading comprehension questions), but abstaining when presented with a question that cannot be answered based on the provided context.[2]

Multidisciplinary field

Information Retrieval

- System returns a list of documents

Query driven

Question Answering

- System returns a list of short answers

Answer driven

knowledge base question answering.

example paper

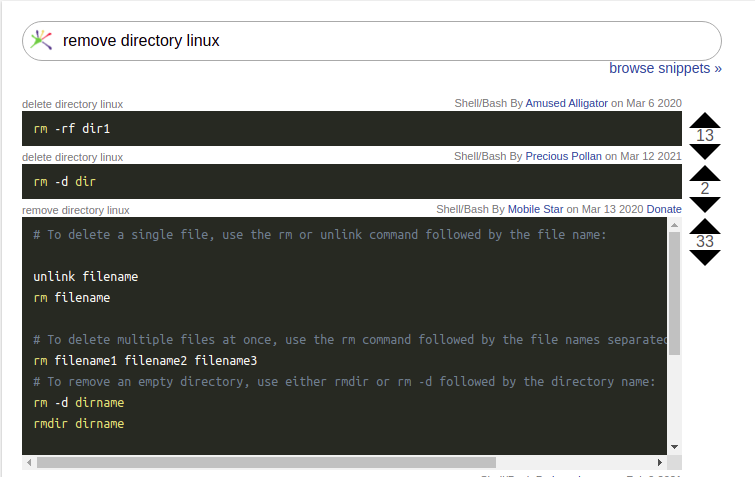

The Query & Answer System for the Ambitious Developer codegrepper

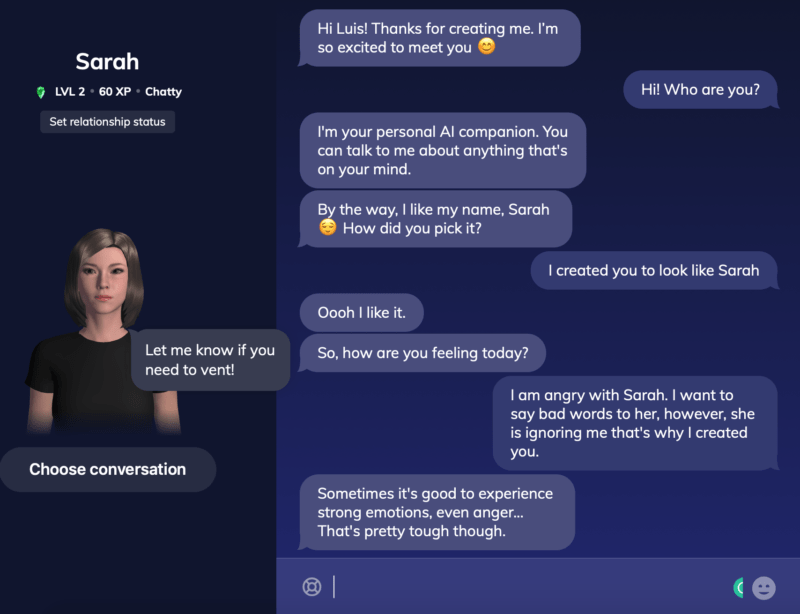

Replika.ai got 500K Android downloads to clone people

GitHub research

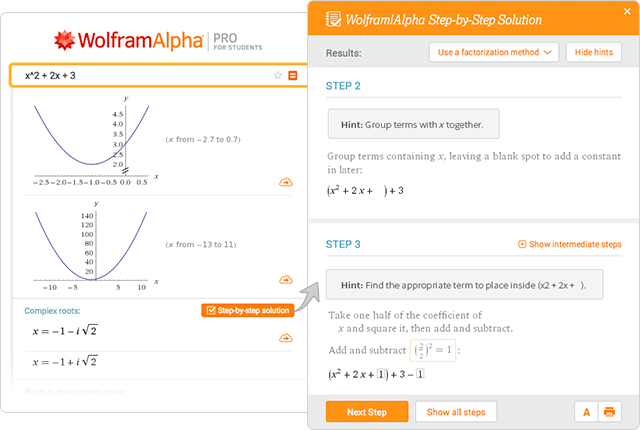

Examples for Step-by-Step Solutions

over 60 topics within math, chemistry and physics.


Motivation: Question answering

With massive collections of full-text documents, i.e., the web,

simply returning relevant documents is of limited use

we often want answers to our questions

#Mobile

#Alexa

Motivation: Question answering

Finding documents that (might) contain an answer

traditional information retrieval/web search

Finding an answer in a paragraph or a document

Reading Comprehension

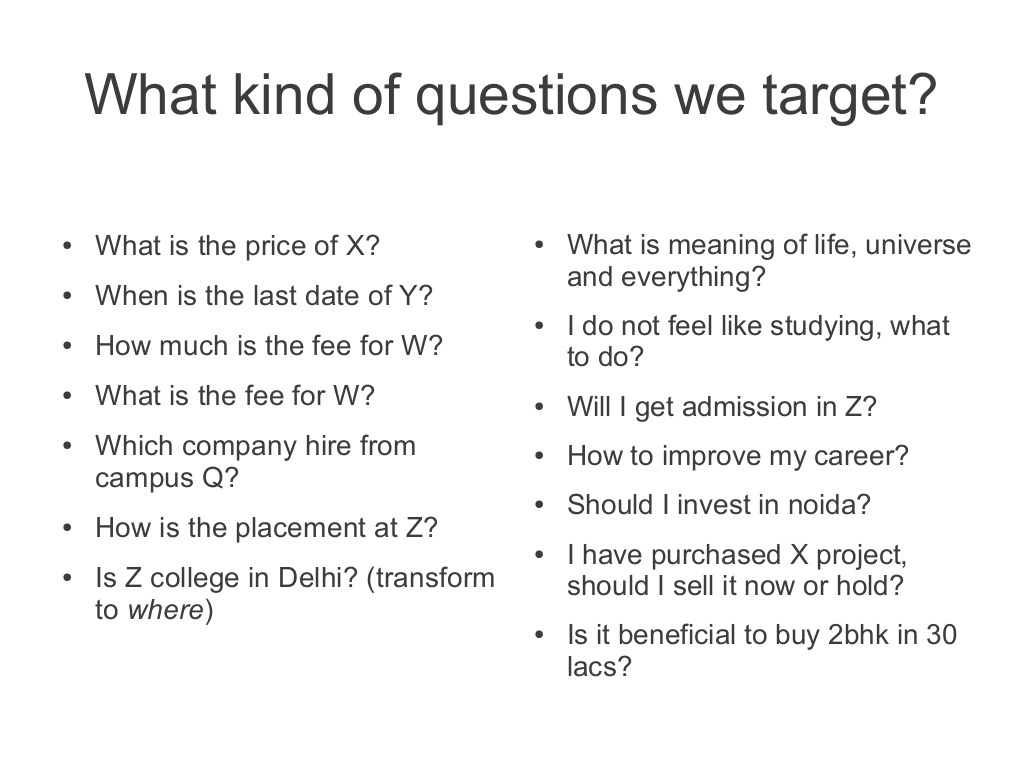

Kind of Questions

Factoids

Open-ended

Different categories of natural language queries

- Factoid: WH questions like when, who, where

- Yes/No queries

- Definition queries: What is platypus?

- Cause/consequence queries: how, why, what. What are the consequences of the vaccination?

- Procedural queries: What are the steps for getting a Master's degree?

- Comparative queries: What are the differences between mode A and mode B?

- Queries with examples: list of hard disks similar to hard disk X.

- etc

Type of QA Systems

Closed-domain

Limited to specific domains

+ High accuracy

- Limited coverage over the possible queries

- Needs domain expert

History Of QA

- Yale A.I. Project in 1977

- Lynette Hirschman in 1999

- MCTest in 2013

- Floodgates 2015-16

- Hermann et al. (NIPS 2015) DeepMind CNN/DM dataset

- Rajpurkar et al. (EMNLP 2016) SQuAD

- MS MARCO, TriviaQA, RACE, NewsQA, NarrativeQA, ...

The first question answering system, BASEBALL (Green et al., 1961), was able to answer domain-specific natural language questions about the baseball games played in the American League over one season. It was simply a database-centered system that translated a natural language question into a canonical query on the database.[6]

early 1960s

QA research

TREC (Text REtrieval Conference)

Factoid 1992

CLEF (Cross Language Evaluation Forum)

IR, language resources 2001

NTCIR (NII Test Collection for IR Systems)

IR, question answering, summarization, extraction

1997

Machine Comprehension

Machine Reading Comprehension is one of the key problems in Natural Language Understanding, where the task is to read and comprehend a given text passage, and then answer questions based on it.[4]

A machine comprehends a passage of text if, for any question regarding that text that can be answered correctly by a majority of native speakers, that machine can provide a string which those speakers would agree both answers that question, and does not contain information irrelevant to that question.

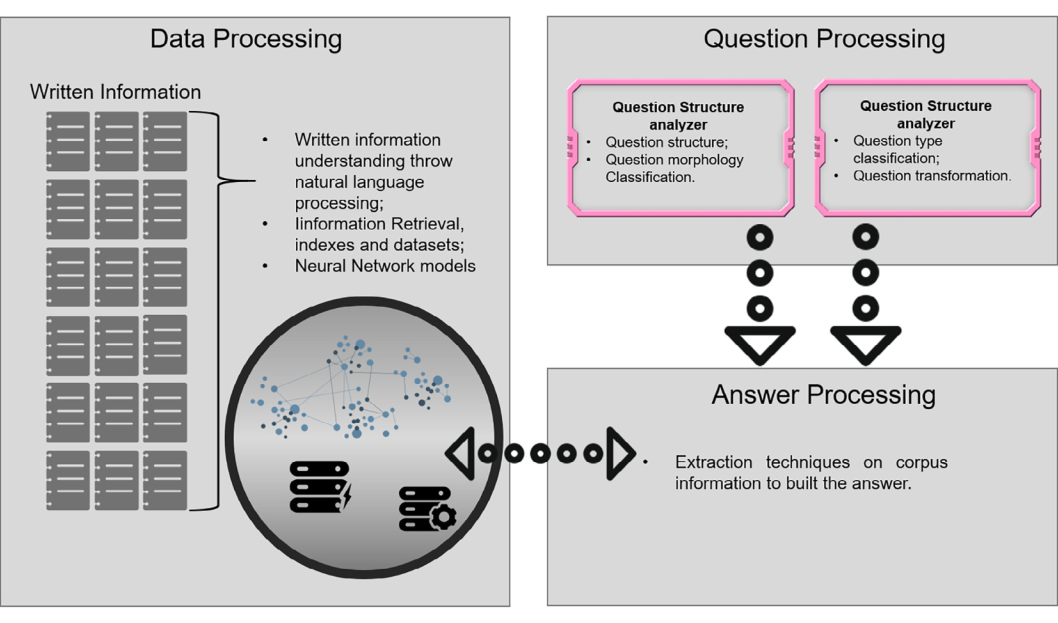

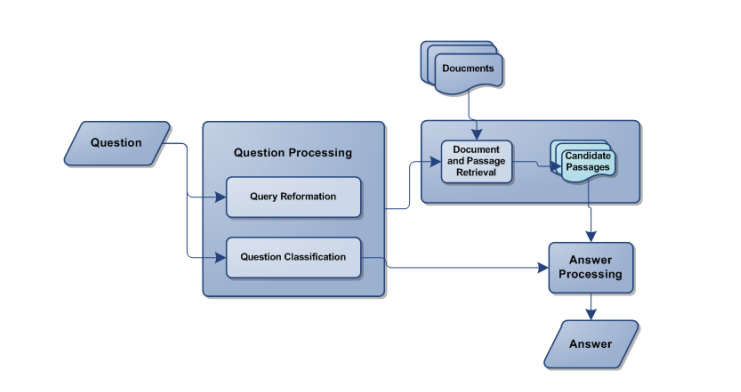

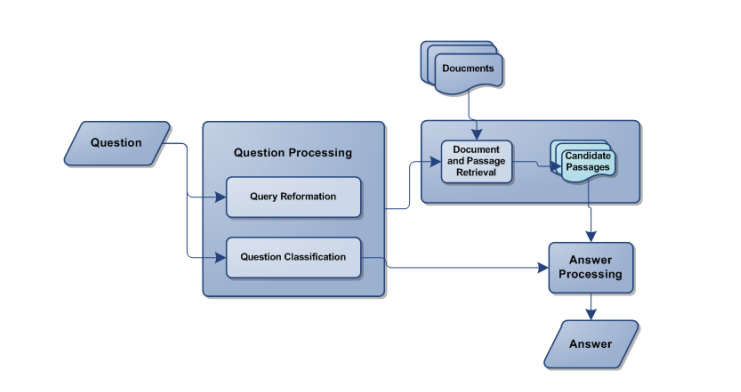

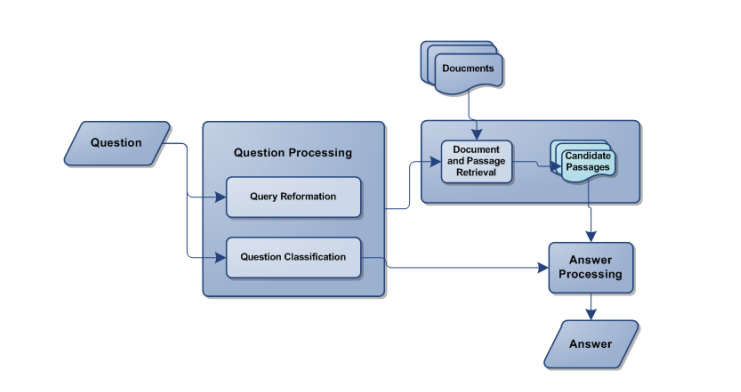

Automated QA system

three common stages

(Jurafsky and Martin, 2008)

- Question processing

- Information (document) retrieval

- Answer processing.

General architecture

Question: What is platypus?

- Question Classification & Analysis: /Q = "platypus", query "platypus is", answer template /Q is /A

- Information Retrieval (knowledge base, text): retrieves "The platypus, sometimes referred to as the duck-billed platypus, is a semi-aquatic, egg-laying mammal which is endemic to eastern Australia, including Tasmania ...." (wiki)

- Answer Extraction: /A = a semi-aquatic, egg-laying mammal

Answer: platypus is a semi-aquatic, egg-laying mammal.
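The "/Q is /A" template from the platypus example can be sketched as a small pattern matcher. This is only an illustrative sketch: the function name `extract_definition` and the regular expression are assumptions, not part of the slides, and a real answer extractor would need many more patterns.

```python
import re
from typing import Optional


def extract_definition(subject: str, passage: str) -> Optional[str]:
    """Apply a '/Q is /A' template: find '<subject> is <answer>' in the passage."""
    pattern = re.compile(
        r"\b" + re.escape(subject) + r"\b"
        # Optional comma-delimited aside, e.g. ", sometimes referred to as ...,"
        r"(?:,[^.]*?,)?"
        r"\s+is\s+"
        # The answer runs up to a relative clause, a sentence end, or end of text.
        r"(?P<answer>[^.]+?)(?=\s+(?:which|that|who)\b|[.;]|$)",
        re.IGNORECASE,
    )
    match = pattern.search(passage)
    return match.group("answer").strip() if match else None


passage = (
    "The platypus, sometimes referred to as the duck-billed platypus, "
    "is a semi-aquatic, egg-laying mammal which is endemic to eastern "
    "Australia, including Tasmania."
)
answer = extract_definition("platypus", passage)
print(f"platypus is {answer}.")
```

The template only fires when the retrieved text happens to use the "is" construction, which is one reason language variability is listed among the challenges.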

Some challenges

Language variability (paraphrase)

necessary pre-computed resources

finding relevant pieces of information

deduction & extra linguistic knowledge

Quality of the text

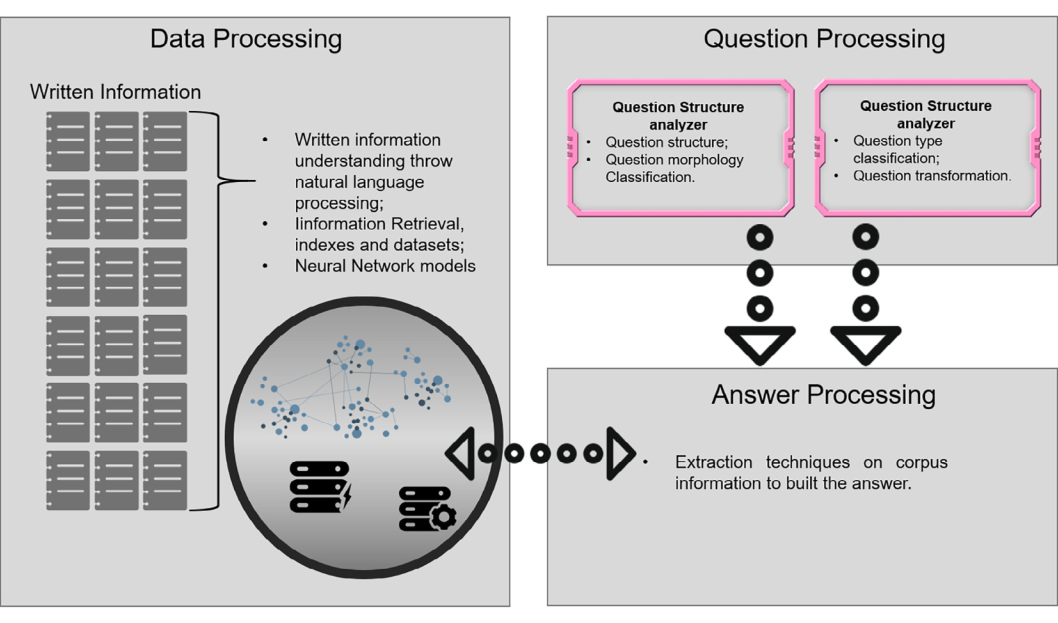

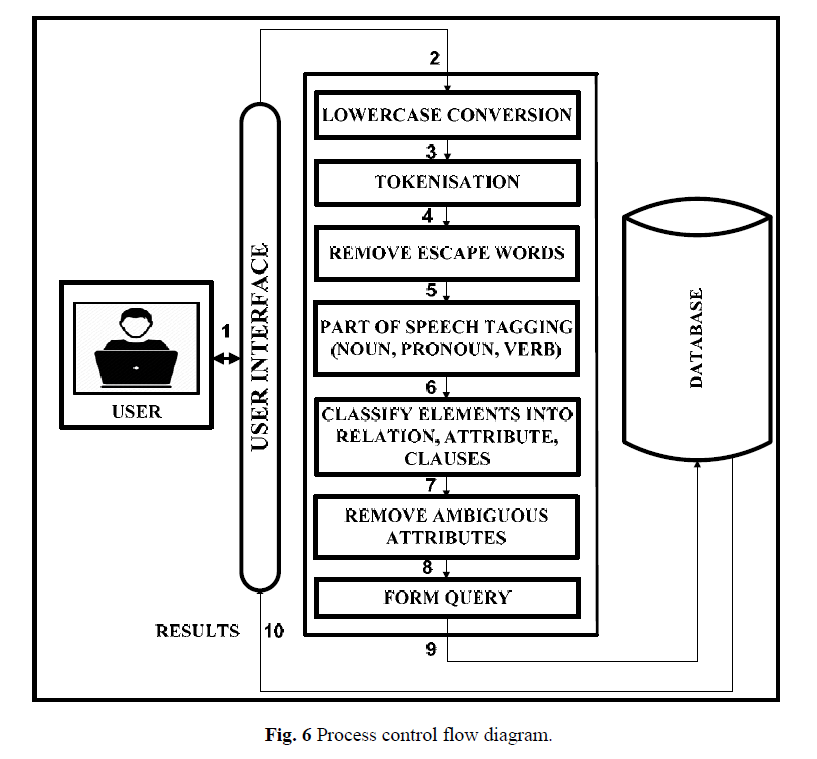

Question processing receives a question in natural language, then analyzes and classifies it.

The analysis determines the type of the question and the meaning of the question focus, which helps avoid ambiguities in the answer.

Classification is one of the main steps of a QA system. It can be manual or automatic (Ray et al., 2010).

--------------------------------------------------------------------

Transform the question into a meaningful question formula compatible with QA’s domain

Question Processing

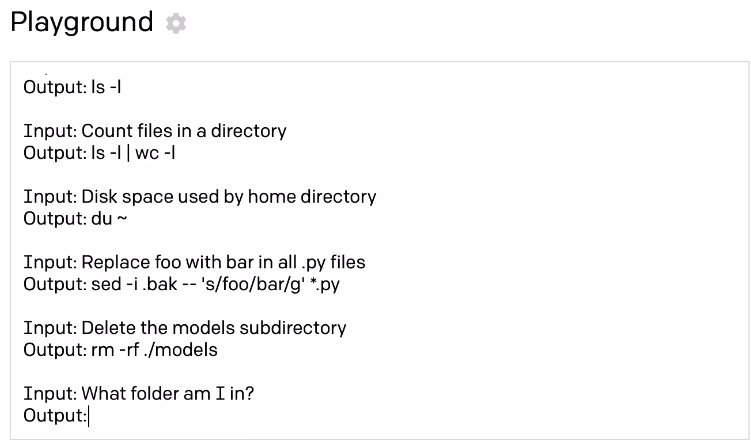

Query reformulation: analyze the question and create a proper IR query

Question classification: detect the entity type of the answer

Question Analysis

- extract the features needed for answer extraction

- identify keywords to be matched

- identify the answer type to match against answer candidates

definition

Quantity

date

&...

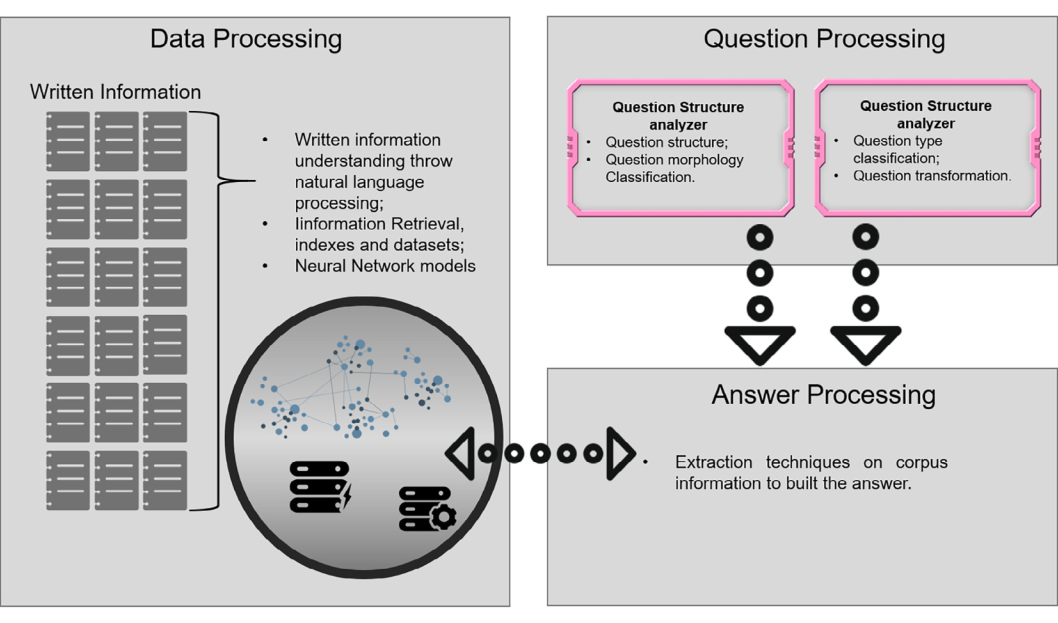

Document processing (data processing)

The main feature of document processing is the selection of a set of relevant documents and the extraction of a set of paragraphs, depending on the focus of the question or on text understanding through natural language processing. This task can generate a dataset or a neural model which will provide the source for the answer extraction. The retrieved data can be ranked according to its relevance to the question.

Query the IR engine, process the returned documents, and return candidate passages that are likely to contain the answer

Passage Retrieval
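As a rough illustration of ranking candidate passages by relevance to the question, the sketch below scores each passage by the fraction of question keywords it contains. The stopword list, the scoring rule, and the names `tokenize` and `rank_passages` are assumptions made for this example. A real system would typically rely on an IR engine with a weighting scheme such as TF-IDF or BM25.

```python
import re
from typing import List, Tuple

# Tiny illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"a", "an", "the", "is", "of", "in", "to", "was",
             "what", "who", "where", "when", "which"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_passages(question: str, passages: List[str],
                  top_k: int = 2) -> List[Tuple[float, str]]:
    """Score passages by the share of question keywords they contain."""
    keywords = {t for t in tokenize(question) if t not in STOPWORDS}
    scored = []
    for passage in passages:
        tokens = set(tokenize(passage))
        score = len(keywords & tokens) / len(keywords) if keywords else 0.0
        scored.append((score, passage))
    # Stable sort: passages with equal scores keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


passages = [
    "Reims is a city in France.",
    "The platypus is a semi-aquatic, egg-laying mammal.",
    "Baseball is played in the American League.",
]
best_score, best_passage = rank_passages("What is platypus?", passages, top_k=1)[0]
print(best_score, best_passage)
```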

Process the candidate passages and extract a segment of word(s) that is likely to be the answer to the question.

Answer Processing

question answering modules all together

Question Classification

The task of a question classifier is to assign one or more class labels, depending on the classification strategy, to a given question written in natural language.

“Who was the president of the U.S. in 1934?”

“What London street is the home of British journalism?”

“What is a pyrotechnic display?”

location

human

date

&...

Question Classification Approaches

Rule based approaches:

try to match the question with some manually handcrafted rules

What tourist attractions are there in Reims?

What are the names of the tourist attractions in Reims?

What do most tourists visit in Reims?

What attracts tourists to Reims?

What is worth seeing in Reims?

needs too many defined rules

difficult to scale

poor performance on a new dataset
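A minimal sketch of a rule-based classifier helps show both how handcrafted rules work and why they scale poorly. The rule table below is hypothetical and deliberately small. The labels reuse the answer types mentioned in these slides (human, location, date, quantity, definition).

```python
import re
from typing import List, Tuple

# Hypothetical handcrafted rules: (regular expression, class label).
RULES: List[Tuple[str, str]] = [
    (r"^who\b", "human"),
    (r"^where\b|\bwhat\b.*\b(street|city|country)\b", "location"),
    (r"^when\b|\bwhat year\b", "date"),
    (r"^how (many|much)\b", "quantity"),
    (r"^what is (a|an)\b", "definition"),
]


def classify_question(question: str) -> str:
    """Return the label of the first rule that matches, else 'unknown'."""
    text = question.strip().lower()
    for pattern, label in RULES:
        if re.search(pattern, text):
            return label
    return "unknown"


for q in [
    "Who was the president of U.S. in 1934?",
    "What London street is the home of British journalism?",
    "What is a pyrotechnic display?",
    "What attracts tourists to Reims?",
]:
    print(q, "->", classify_question(q))
```

The last question falls through to "unknown": every new paraphrase of the Reims question would need its own rule, which is the scaling problem noted above.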

Question Classification Approaches

learning based

hybrid approaches

the most successful approaches on question classification

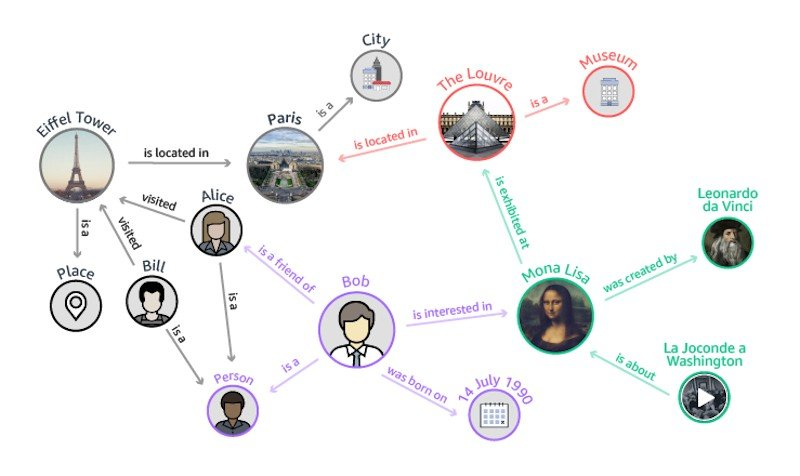

Knowledge Base

Which place did Alice visit in paris?

MATCH (:User {name: 'Alice'})-[:VISIT]->(p:Place)-[:IN]->(:City {name: 'paris'}) RETURN p

Text

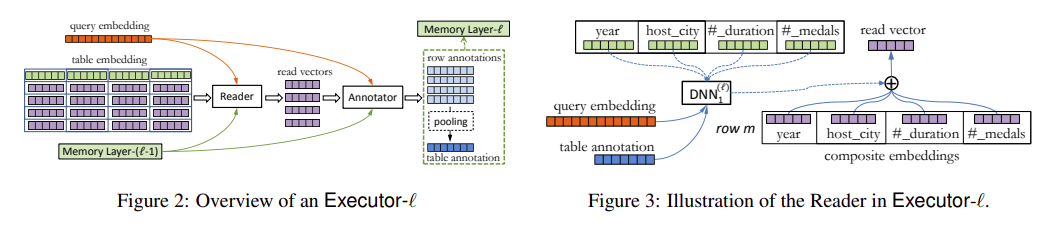

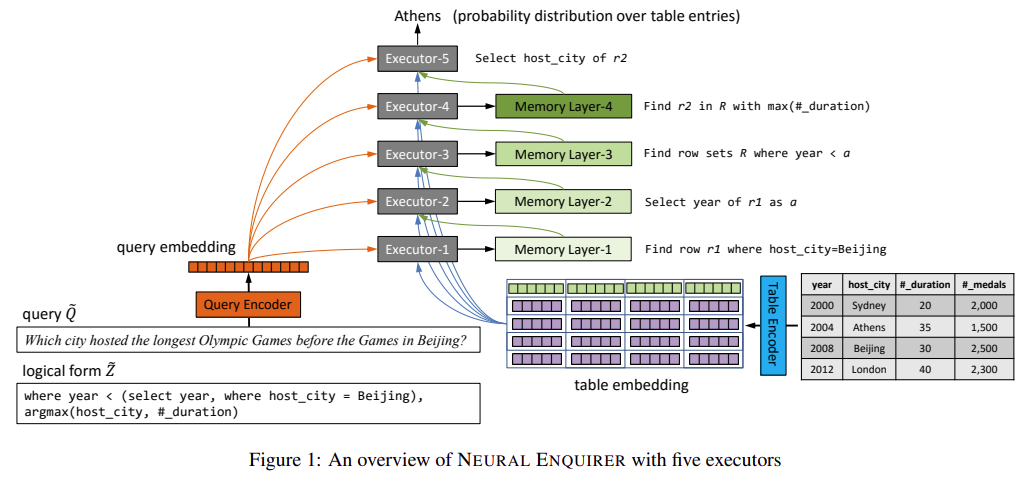

Neural Enquirer: Learning to Query Tables in Natural Language [8]

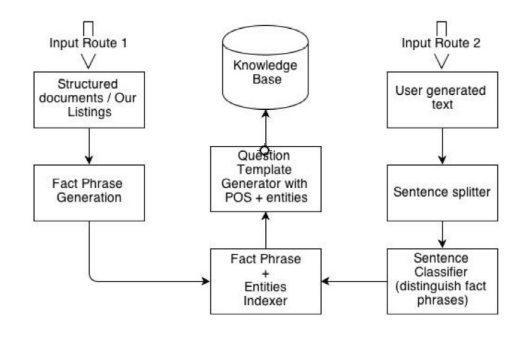

Knowledge base generation

Answer Retrieval

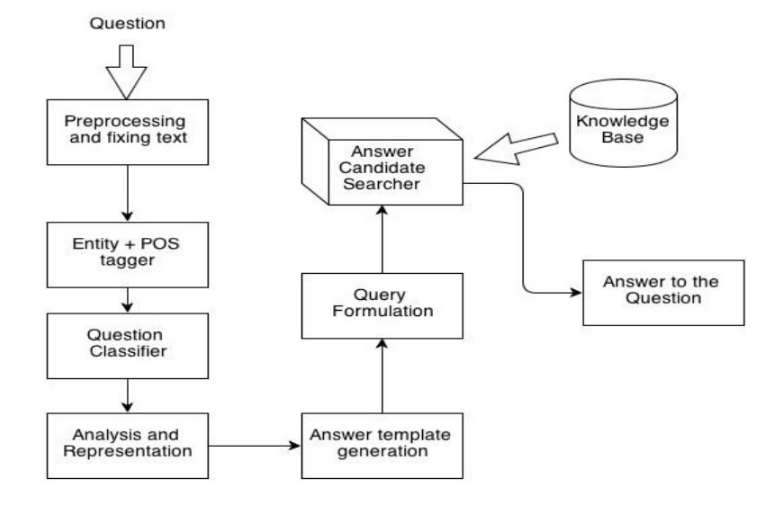

Text pre-processing

i'm, im, i m → i am

short forms

spell correction

repeated punctuation

where is olympic !???
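Two of the pre-processing steps above, short-form expansion and repeated-punctuation cleanup, can be sketched with regular expressions. This is a simplified illustration: the patterns and the name `normalize_text` are assumptions, only the "i am" short form is handled, and spell correction is left out because it needs a dictionary or a language model.

```python
import re

# Matches the short forms "i'm", "im" and "i m" as standalone tokens.
SHORT_FORM_I_AM = re.compile(r"\bi(?:'| )?m\b")
# Matches a run of '!' and '?' together with any whitespace before it.
PUNCT_RUN = re.compile(r"\s*[!?]+")


def _collapse(match):
    """Keep a single '?' if the run contains one, otherwise a single '!'."""
    return "?" if "?" in match.group(0) else "!"


def normalize_text(text: str) -> str:
    text = text.lower().strip()
    text = SHORT_FORM_I_AM.sub("i am", text)
    text = PUNCT_RUN.sub(_collapse, text)
    return text


print(normalize_text("where is olympic !???"))
print(normalize_text("I'm here!!"))
```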

Answer candidate searcher

<Question, qTypes, entities, answer template>

index in training corpus

<Question, qTypes, entities, answer template>

Retrieve set of n questions

Decide based on score and similarities
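The candidate search could look roughly like the sketch below: indexed training questions are stored as tuples of question, question type, entities, and answer template, the n most similar questions are retrieved, and a decision is made from the score. Jaccard word overlap, the 0.5 threshold, and all names (`IndexedQuestion`, `retrieve`, `decide`) are assumptions for illustration. The slides do not say which similarity measure is used.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class IndexedQuestion:
    question: str
    q_type: str
    entities: Tuple[str, ...]
    answer_template: str


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity between two strings."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if (sa | sb) else 0.0


def retrieve(query: str, index: List[IndexedQuestion],
             n: int = 3) -> List[Tuple[float, IndexedQuestion]]:
    """Return the n indexed questions most similar to the query."""
    scored = [(jaccard(query, item.question), item) for item in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:n]


def decide(query: str, q_type: str, index: List[IndexedQuestion],
           threshold: float = 0.5) -> Optional[str]:
    """Pick the best-scoring candidate whose question type also matches."""
    for score, item in retrieve(query, index):
        if score >= threshold and item.q_type == q_type:
            return item.answer_template
    return None


index = [
    IndexedQuestion("where is the olympic stadium", "location",
                    ("olympic stadium",), "{entity} is in {place}"),
    IndexedQuestion("who won the olympic games", "human",
                    ("olympic games",), "{person} won {entity}"),
]
print(decide("where is olympic stadium", "location", index))
```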

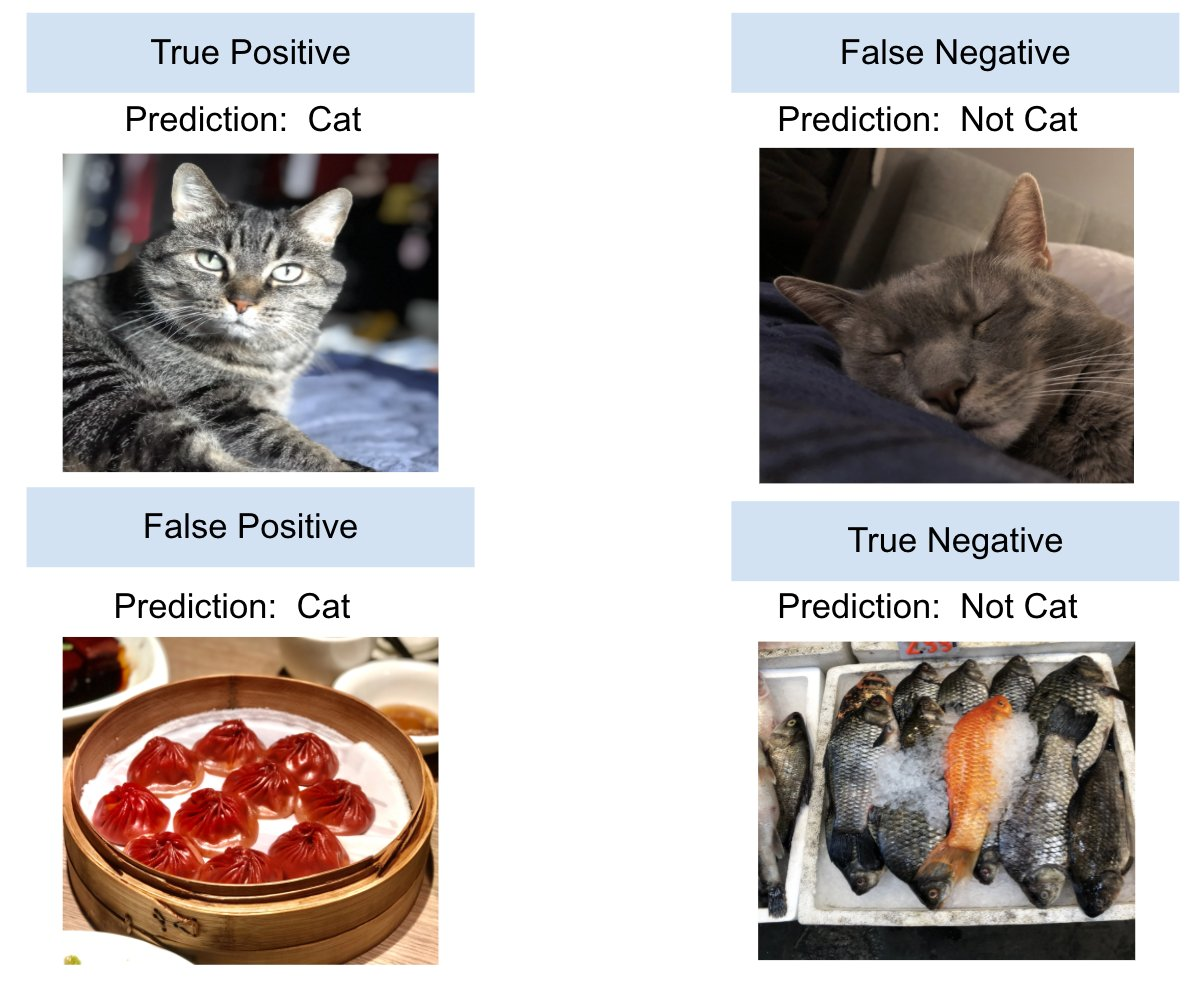

Evaluation

- Authors collected 3 gold answers

- Exact match: (0/1) accuracy

- F1: Treat the system answer and each gold answer as a bag of words

- Both metrics ignore punctuation and articles (a, an, the only)

precision = percentage of predicted cats that are actually cats

recall = percentage of actual cats that are predicted as cats
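The two metrics can be sketched as follows. This is a simplified version written for illustration, not the official evaluation script: the helper names are assumptions, the score against several gold answers is taken as the maximum, and empty answers (as in unanswerable questions) are not treated specially.

```python
import re
import string
from collections import Counter
from typing import List


def normalize(text: str) -> List[str]:
    """Lowercase, drop punctuation and the articles a/an/the, then tokenize."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, gold_answers: List[str]) -> int:
    """1 if the normalized prediction equals any normalized gold answer."""
    return int(any(normalize(prediction) == normalize(g) for g in gold_answers))


def f1(prediction: str, gold: str) -> float:
    """Bag-of-words F1 between the prediction and one gold answer."""
    pred, ref = normalize(prediction), normalize(gold)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)  # share of predicted words that are correct
    recall = overlap / len(ref)      # share of gold words that were predicted
    return 2 * precision * recall / (precision + recall)


def best_f1(prediction: str, gold_answers: List[str]) -> float:
    """Score against each gold answer and keep the best value."""
    return max(f1(prediction, g) for g in gold_answers)


gold = ["Denver Broncos", "the Denver Broncos", "Broncos"]
print(exact_match("The Denver Broncos.", gold))
print(best_f1("Denver Broncos team", gold))
```

In the second call, precision is 2/3 and recall is 1 against "Denver Broncos", so the F1 is 0.8 even though the exact match would be 0.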

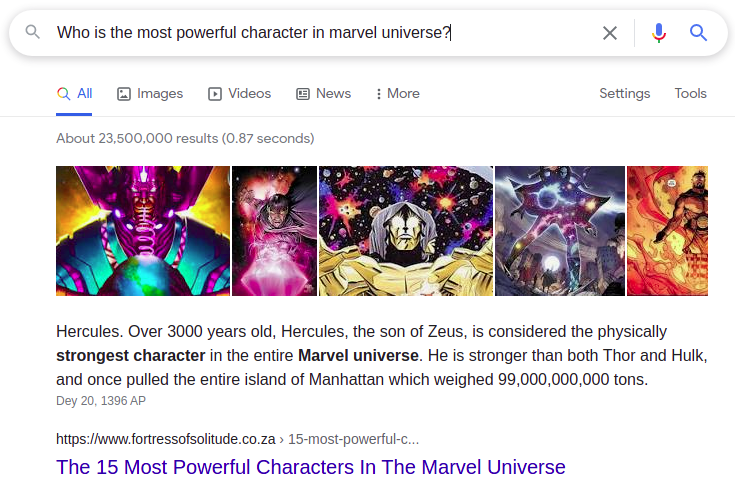

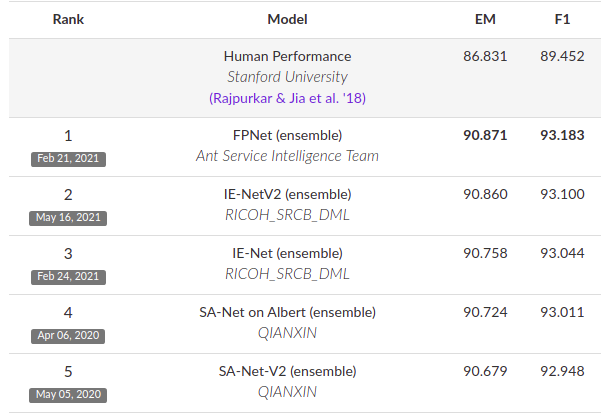

SQuAD v2: a reading comprehension dataset consisting of questions posed by crowdworkers on a set of Wikipedia articles, where the answer to every question is a segment of text, or span, from the corresponding reading passage, or the question might be unanswerable.

Simple QA System

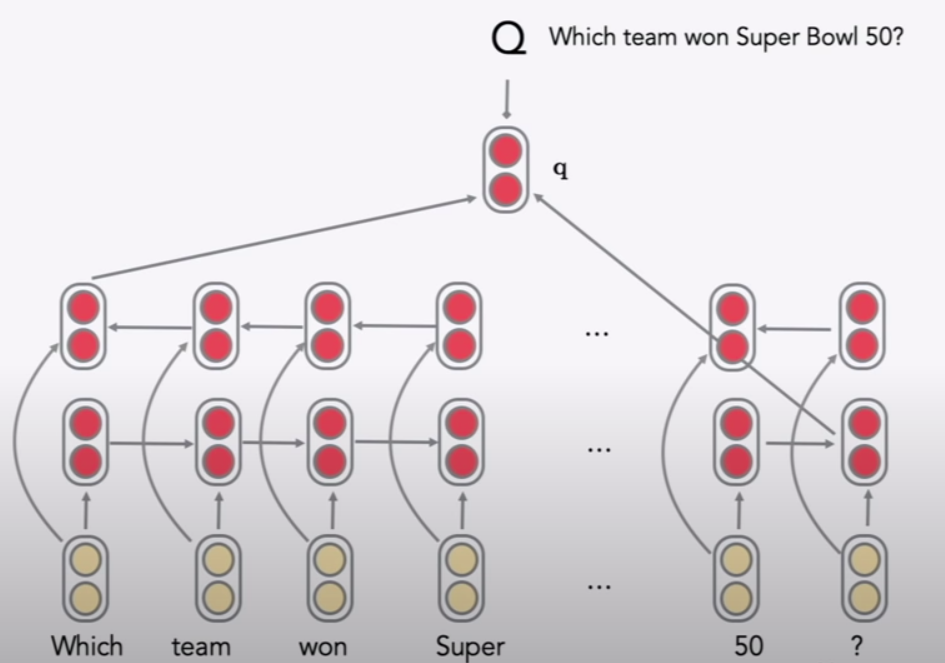

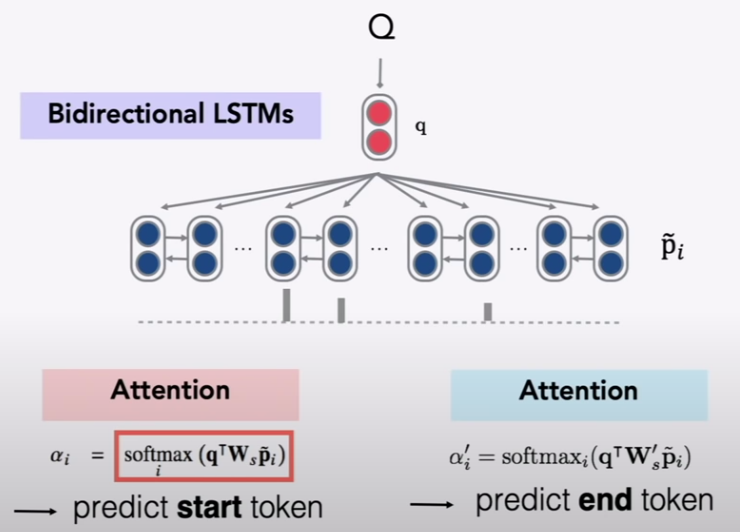

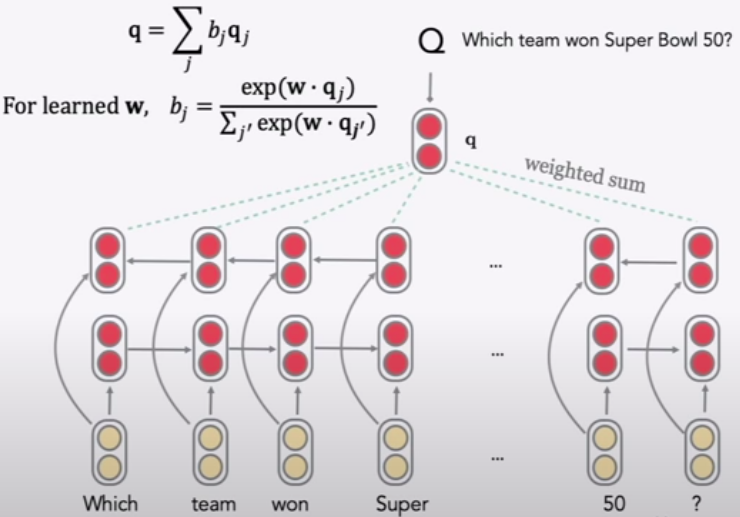

Stanford Attentive Reader (DrQA)

Super Bowl 50 was an American football game to determine the champion of the National Football League (NFL) for the 2015 season. The American Football Conference (AFC) champion Denver Broncos defeated the National Football Conference (NFC) champion Carolina Panthers 24–10 to earn their third Super Bowl title. The game was played on February 7, 2016, at Levi's Stadium in the San Francisco Bay Area at Santa Clara, California. As this was the 50th Super Bowl, the league emphasized the "golden anniversary" with various gold-themed initiatives, as well as temporarily suspending the tradition of naming each Super Bowl game with Roman numerals (under which the game would have been known as "Super Bowl L"), so that the logo could prominently feature the Arabic numerals 50. SQuAD v1.1

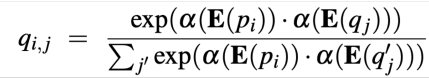

Stanford Attentive Reader ++

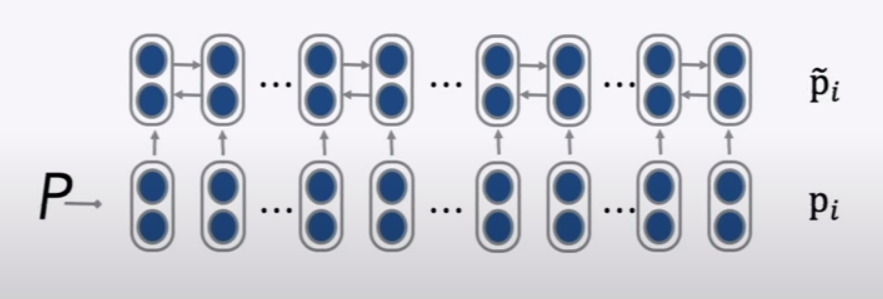

p_i: vector representation of each token in the passage

Made from the concatenation of:

• Word embedding (GloVe 300d)

• Linguistic features: POS & NER tags, one-hot encoded

• Term frequency (unigram probability)

• Exact match: whether the word appears in the question

• 3 binary features: exact, uncased, lemma

• Aligned question embedding (“car” vs “vehicle”)
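The concatenation listed above can be sketched in plain Python with toy inputs. Everything concrete here is an assumption for illustration: the 3-dimensional embedding table stands in for GloVe 300d, the tag sets are tiny, the lemmatizer just strips a trailing "s", and the aligned question embedding uses a softmax over raw dot products rather than a learned projection.

```python
import math
from typing import List

# Toy 3-d embeddings standing in for GloVe 300d (hypothetical values).
EMB = {"car": [1.0, 0.0, 0.2], "vehicle": [0.9, 0.1, 0.3], "red": [0.0, 1.0, 0.0]}
UNK = [0.0, 0.0, 0.0]
POS_TAGS = ["NOUN", "VERB", "ADJ"]
NER_TAGS = ["O", "PERSON", "LOC"]


def embed(word: str) -> List[float]:
    return EMB.get(word.lower(), UNK)


def one_hot(tag: str, tags: List[str]) -> List[float]:
    return [1.0 if tag == t else 0.0 for t in tags]


def lemma(word: str) -> str:
    """Toy lemmatizer: lowercase and strip a plural 's'."""
    w = word.lower()
    return w[:-1] if w.endswith("s") and len(w) > 3 else w


def aligned_embedding(token: str, question: List[str]) -> List[float]:
    """Attention-weighted average of question embeddings (soft alignment)."""
    if not question:
        return list(UNK)
    p = embed(token)
    q_vecs = [embed(q) for q in question]
    scores = [sum(a * b for a, b in zip(p, q)) for q in q_vecs]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w / total * q[d] for w, q in zip(weights, q_vecs))
            for d in range(len(p))]


def token_features(token: str, pos: str, ner: str,
                   passage: List[str], question: List[str]) -> List[float]:
    tf = passage.count(token) / len(passage)  # unigram probability in passage
    exact = float(token in question)
    uncased = float(token.lower() in {q.lower() for q in question})
    lemma_match = float(lemma(token) in {lemma(q) for q in question})
    return (embed(token) + one_hot(pos, POS_TAGS) + one_hot(ner, NER_TAGS)
            + [tf] + [exact, uncased, lemma_match]
            + aligned_embedding(token, question))


features = token_features("car", "NOUN", "O",
                          ["the", "red", "car"], ["which", "vehicle"])
print(len(features), features)
```

In this toy run "car" has no exact match in the question, but its aligned embedding is pulled toward "vehicle", which is the point of the “car” vs “vehicle” example.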