DATAFRAMES

WHY Data Scientists don't use SQL

Online transaction processing (OLTP):

- transaction safe

- CRUD (Create, Read, Update, Delete) rows of data

- data stored row-wise

- SQL

- LINQ (C#)

- Examples: PostgreSQL, MySQL, SQLite, ...

OLTP

see: Wikipedia:LINQ

Online analytical processing (OLAP):

- complex queries

- reading, aggregation, filtering of historical data

- data stored column-wise

- MDX (MultiDimensional eXpressions)

- ELT pipeline to ingest data

- Examples: Clickhouse, Apache Druid, IBM Cognos, ...

OLAP

- in-memory OLAP tables

- complex queries

- reading, aggregation, filtering of historical data

- data stored column-wise

- custom query API

- Examples: pandas (Python), data.tables (R), pola-rs (Rust)

DATAFRAMES

see: https://www.pola.rs

Python dataframes

- In-memory, single-threaded: pandas

- In-memory, parallel on clusters: dask, ray

- Out-of-memory: vaex, modin

- Out-of-memory, parallel on clusters: pyspark

- In-memory, GPU-accelerated: RAPIDS cuDF

- In-memory, multi-threaded: pola-rs

R dataframes

- In-memory, multithreaded: data.table

- In-memory, out-of-memory, on clusters: dplyr

Other dataframes

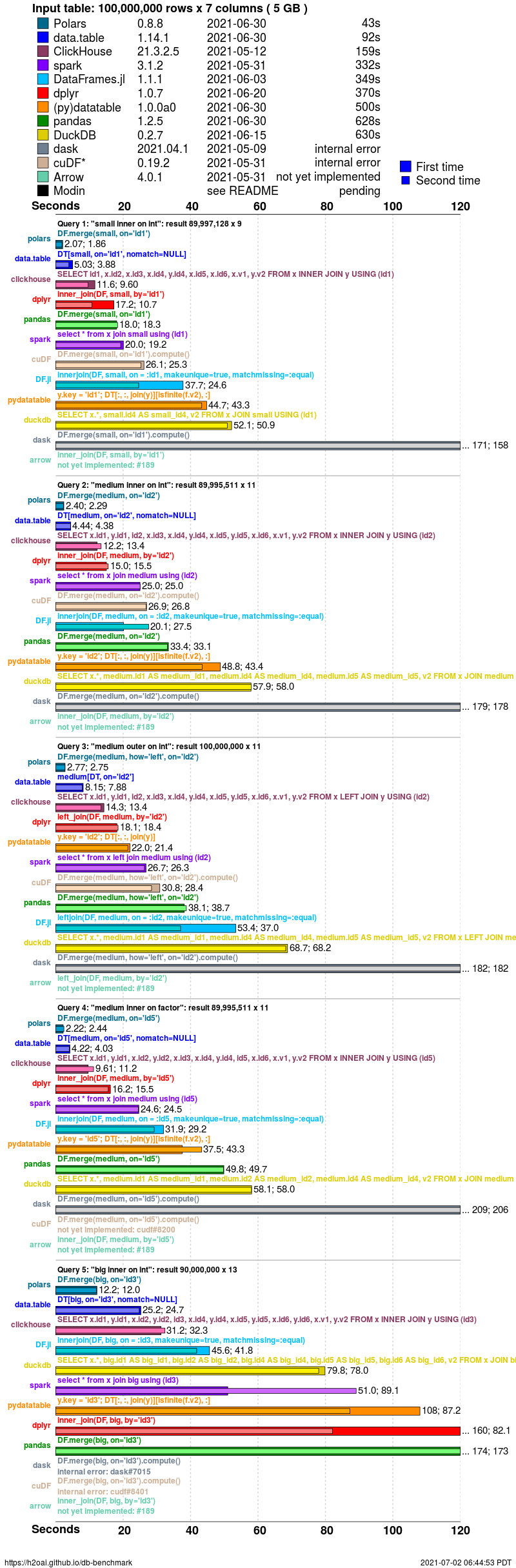

Benchmark of dataframes

SQL PROS

- in-memory SQL DBs are as fast as dataframes

- no need to learn a new query language/API

- SQL statements are shorter

- SQL query optimiser (multithreaded)

- easy to scale data processing steps to persistent DBs

- SQL is a battle-proof industry standard

SQL CONS

- distributed SQL on clusters very difficult

- SQL standard has many dialects

- A single huge and confusing SQL statement is needed for best performance

- SQL is illogical (fixed with LINQ)

-

SELECT column FROM table

-

FROM table SELECT column

-

- SQL strings are not typesafe (fixed with LINQ)

- No GPU support

DATAFrames PROS

- in-memory dataframes need no database connection

- object oriented and type-safe query APIs

- API better suited for data exploration

- Easy to scale out to clusters

- GPU support

DATAFrames CONS

- every dataframe has a different query API

- difficult to scale data processing steps to persistent DBs (but see DuckDB)

- No query optimizer (except pyspark)

- Out-of-memory dataframe performance often slow

- No transaction safety

- No concurrent multi-user access

SQL

query = """

select

a.name,

p.year,

p.title,

p.abstract,

p.paper_text

from authors a

inner join paper_authors pa on pa.author_id = a.id

left join papers p on p.id = pa.paper_id

and p.event_type not in ('Oral', 'Spotlight', 'Poster')

order by name, year asc

"""

pd.read_sql(query, con=con)

Pandas

df_authors \

.merge(

df_papers_authors.drop(columns=["id"]),

left_on="id",

right_on="author_id",

how="inner") \

.drop(columns=["id"]) \

.merge(

df_papers \

.query("event_type in ('Oral', 'Spotlight', 'Poster')") \

[["id", "year", "title", "abstract", "paper_text"]],

left_on="paper_id",

right_on="id",

how="left") \

.drop(columns=["id", "paper_id", "author_id"]) \

.sort_values(by=["name", "year"], ascending=True)

- OLAP + OLTR combined:

SAP HANA is an OLTAP system

it is a column-oriented, in-memory database, that combines OLAP and OLTP operations into a single system

- But it better be open-source, easy-to-install and support more client languages

- Other interesting developments:

- pola-rs

- trino

- DuckDB

- Clickhouse

- see https://db-engines.com/en/ranking