Adding features to the code

Recap:

- We built a library to train a model
- The library can be used both in notebooks and in standalone applications
- Metrics are exported to standard output

Depending on an interface

Previous signature:

```python
def train_model(model: ImageClassifier, learning_rate: float, train_dataset: tf.data.Dataset,
                evaluation_dataset: tf.data.Dataset, train_epochs: int,
                report_step: int) -> ImageClassifier:
    ...
```

New signature:

```python
def train_model(model: ImageClassifier, learning_rate: float, train_dataset: tf.data.Dataset,
                evaluation_dataset: tf.data.Dataset, train_epochs: int, report_step: int,
                metric_exporter: Optional[BaseMetricExporter] = None) -> ImageClassifier:
    ...
```

- Depends on the `BaseMetricExporter` interface
- Keeps backward compatibility (the new parameter is optional)
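The backward-compatibility point can be shown without TensorFlow. The sketch below is not the library's code. It uses a hypothetical `train_sketch` function with fake loss values and a hypothetical `RecordingExporter`. Because the new parameter defaults to `None`, call sites written for the old signature still work, and new call sites can pass an exporter.

```python
from typing import List, Optional, Tuple


class RecordingExporter:
    """Hypothetical exporter that only records the calls it receives."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, float, int, int]] = []

    def write_scalar(self, metric_name: str, metric_value: float,
                     step: int, epoch: int) -> None:
        self.calls.append((metric_name, metric_value, step, epoch))


def train_sketch(train_epochs: int, report_step: int,
                 metric_exporter: Optional[RecordingExporter] = None) -> int:
    """Hypothetical stand-in for train_model; returns how many reports were made."""
    reports = 0
    for epoch in range(train_epochs):
        for step in range(4):  # pretend every epoch has 4 batches
            if step % report_step == 0:
                fake_loss = 1.0 / (1 + epoch + step)  # fake, decreasing loss
                reports += 1
                # Same guard as in train_model: export only if an exporter was given
                if metric_exporter is not None:
                    metric_exporter.write_scalar("training_loss", fake_loss, step, epoch)
    return reports


# Old call site (no exporter) keeps working
old_style_reports = train_sketch(train_epochs=2, report_step=2)

# New call site with an exporter
exporter = RecordingExporter()
new_style_reports = train_sketch(train_epochs=2, report_step=2, metric_exporter=exporter)
```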

Original implementation, without metric export:

```python
import logging

import tensorflow as tf

# ImageClassifier is the library's own model class (its import is not shown)


def train_model(model: ImageClassifier, learning_rate: float, train_dataset: tf.data.Dataset,
                evaluation_dataset: tf.data.Dataset, train_epochs: int,
                report_step: int) -> ImageClassifier:
    optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)
    for epoch in range(train_epochs):
        logging.info(f"Starting epoch {epoch}")
        # Training: one optimizer update per batch
        for step, (batch_inputs, batch_labels) in enumerate(train_dataset):
            with tf.GradientTape() as tape:
                logits = model(batch_inputs, training=True)
                # from_logits defaults to False: assumes the model already outputs probabilities
                loss_value = tf.keras.losses.categorical_crossentropy(batch_labels, logits)
            gradients = tape.gradient(loss_value, model.trainable_weights)
            optimizer.apply_gradients(zip(gradients, model.trainable_weights))
            if step % report_step == 0:
                mean_loss = tf.reduce_mean(loss_value)
                logging.info(f"epoch = {epoch:4d} step = {step:5d} "
                             f"categorical cross entropy = {mean_loss:8.8f}")
        # Evaluation: accumulate the loss over the whole evaluation set
        evaluation_loss = tf.keras.metrics.CategoricalCrossentropy()
        for step, (batch_inputs, batch_labels) in enumerate(evaluation_dataset):
            logits = model(batch_inputs)
            evaluation_loss.update_state(batch_labels, logits)
        evaluation_loss_value = evaluation_loss.result()
        mean_evaluation_loss_value = tf.reduce_mean(evaluation_loss_value)
        logging.info(f"test epoch = {epoch:4d} step = {step:5d} "
                     f"categorical cross entropy = {mean_evaluation_loss_value:8.8f}")
        evaluation_loss.reset_state()
    return model
```


New implementation, with optional metric export:

```python
import logging
from typing import Optional

import tensorflow as tf

from fine_tunner.persistence.metadata import BaseMetricExporter

# ImageClassifier is the library's own model class (its import is not shown)


def train_model(model: ImageClassifier, learning_rate: float, train_dataset: tf.data.Dataset,
                evaluation_dataset: tf.data.Dataset, train_epochs: int, report_step: int,
                metric_exporter: Optional[BaseMetricExporter] = None) -> ImageClassifier:
    optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)
    for epoch in range(train_epochs):
        logging.info(f"Starting epoch {epoch}")
        for step, (batch_inputs, batch_labels) in enumerate(train_dataset):
            with tf.GradientTape() as tape:
                logits = model(batch_inputs, training=True)
                loss_value = tf.keras.losses.categorical_crossentropy(batch_labels, logits)
            gradients = tape.gradient(loss_value, model.trainable_weights)
            optimizer.apply_gradients(zip(gradients, model.trainable_weights))
            if step % report_step == 0:
                mean_loss = tf.reduce_mean(loss_value)
                logging.info(f"epoch = {epoch:4d} step = {step:5d} "
                             f"categorical cross entropy = {mean_loss:8.8f}")
                # New: export the training loss only when an exporter was provided
                if metric_exporter is not None:
                    metric_exporter.write_scalar("training_loss", mean_loss, step, epoch)
        evaluation_loss = tf.keras.metrics.CategoricalCrossentropy()
        for step, (batch_inputs, batch_labels) in enumerate(evaluation_dataset):
            logits = model(batch_inputs)
            evaluation_loss.update_state(batch_labels, logits)
        evaluation_loss_value = evaluation_loss.result()
        mean_evaluation_loss_value = tf.reduce_mean(evaluation_loss_value)
        logging.info(f"test epoch = {epoch:4d} step = {step:5d} "
                     f"categorical cross entropy = {mean_evaluation_loss_value:8.8f}")
        # New: export the evaluation loss once per epoch (step is always 0)
        if metric_exporter is not None:
            metric_exporter.write_scalar("evaluation_loss",
                                         mean_evaluation_loss_value, 0, epoch)
        evaluation_loss.reset_state()
    return model
```

Depending on an interface

- `train_model` depends on the abstract class, not on a concrete implementation
- We can add several implementations without changing `train_model`
- Responsibilities are separated: `train_model` trains, and the exporter decides where metrics go

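```python
import abc
from typing import Union

import tensorflow as tf

# A metric can be a TensorFlow tensor or a plain Python number
MetricValue = Union[tf.Tensor, float, int]


class BaseMetricExporter(abc.ABC):
    """Interface used by train_model; each subclass decides where metrics go."""

    @abc.abstractmethod
    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        pass
```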
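Because `train_model` only calls `write_scalar`, a test double is just another subclass. The sketch below redeclares a simplified copy of `BaseMetricExporter` and adds a hypothetical `InMemoryExporter`. In the copy, `MetricValue` is reduced to `Union[float, int]` so the sketch runs without TensorFlow. The sketch also shows that `abc` refuses to instantiate a subclass that forgets to implement `write_scalar`.

```python
import abc
from typing import Dict, List, Tuple, Union

# Simplified copy of the interface: tf.Tensor left out so this runs without TensorFlow
MetricValue = Union[float, int]


class BaseMetricExporter(abc.ABC):
    @abc.abstractmethod
    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        """Export one scalar metric."""


class InMemoryExporter(BaseMetricExporter):
    """Hypothetical exporter that keeps metrics in a dict, handy for unit tests."""

    def __init__(self) -> None:
        self.metrics: Dict[str, List[Tuple[int, int, float]]] = {}

    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        # Group values by metric name as (epoch, step, value) tuples
        self.metrics.setdefault(metric_name, []).append((epoch, step, float(metric_value)))


class IncompleteExporter(BaseMetricExporter):
    """Forgets to implement write_scalar, so it stays abstract."""


exporter = InMemoryExporter()
exporter.write_scalar("training_loss", 0.5, step=10, epoch=0)

try:
    IncompleteExporter()
    incomplete_rejected = False
except TypeError:
    # abc raises TypeError when an abstract method is not implemented
    incomplete_rejected = True
```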

Implementation

```python
import json
import os
from datetime import datetime
from uuid import uuid4

from fine_tunner.persistence.metadata import MetricValue, BaseMetricExporter


class FileExporter(BaseMetricExporter):
    """Writes each metric value to its own JSON file: <output_folder>/<metric_name>/<uuid>.json"""

    def __init__(self, output_folder: str):
        # Expand "~" so paths such as "~/treinamento-001/metrics" work
        self._output_folder = os.path.expanduser(output_folder)
        os.makedirs(self._output_folder, exist_ok=True)

    def write_scalar(self, metric_name: str, metric_value: MetricValue, step: int,
                     epoch: int) -> None:
        metric_folder = os.path.join(self._output_folder, metric_name)
        # The per-metric subfolder must exist before the file is opened
        os.makedirs(metric_folder, exist_ok=True)
        filename = f"{uuid4()}.json"
        now = datetime.now().timestamp()
        # float() converts tf.Tensor scalars, which json cannot serialize directly
        data = dict(value=float(metric_value), step=step, epoch=epoch, timestamp=now)
        with open(os.path.join(metric_folder, filename), "w") as output_file:
            json.dump(data, output_file)
```
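Each call to `FileExporter.write_scalar` creates one JSON file under `<output_folder>/<metric_name>/`. The sketch below shows the reverse step. It assumes that folder layout and the `value`/`step`/`epoch`/`timestamp` keys used above. `read_metric` is a hypothetical helper, not part of the library. The small `write_metric` function imitates what the exporter writes, so the example runs on its own.

```python
import json
import os
import tempfile
from datetime import datetime
from typing import Dict, List
from uuid import uuid4


def write_metric(output_folder: str, metric_name: str, value: float,
                 step: int, epoch: int) -> None:
    """Imitates FileExporter.write_scalar: one JSON file per value."""
    metric_folder = os.path.join(output_folder, metric_name)
    os.makedirs(metric_folder, exist_ok=True)
    data = dict(value=value, step=step, epoch=epoch,
                timestamp=datetime.now().timestamp())
    with open(os.path.join(metric_folder, f"{uuid4()}.json"), "w") as output_file:
        json.dump(data, output_file)


def read_metric(output_folder: str, metric_name: str) -> List[Dict]:
    """Hypothetical helper: loads every record of one metric, ordered by (epoch, step)."""
    metric_folder = os.path.join(output_folder, metric_name)
    records = []
    for filename in os.listdir(metric_folder):
        if filename.endswith(".json"):
            with open(os.path.join(metric_folder, filename)) as input_file:
                records.append(json.load(input_file))
    # File names are random UUIDs, so the order has to come from the content
    return sorted(records, key=lambda record: (record["epoch"], record["step"]))


with tempfile.TemporaryDirectory() as folder:
    write_metric(folder, "training_loss", 0.9, step=0, epoch=1)
    write_metric(folder, "training_loss", 1.2, step=0, epoch=0)
    write_metric(folder, "training_loss", 1.0, step=50, epoch=0)
    history = [record["value"] for record in read_metric(folder, "training_loss")]
```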

Implementation

```python
from datetime import datetime
from typing import Dict, Optional

import requests

from fine_tunner.persistence.metadata import MetricValue, BaseMetricExporter


class ApiExporter(BaseMetricExporter):
    """Sends each metric value as JSON in an HTTP POST to <endpoint>/<prefix>."""

    def __init__(self, endpoint: str, prefix: str,
                 headers: Optional[Dict[str, str]] = None):
        self._endpoint = endpoint
        self._headers = headers
        self._prefix = prefix

    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        now = datetime.now().timestamp()
        # float() makes tf.Tensor values JSON-serializable; metric_name is included
        # so the server can tell metrics apart (the field name is an assumption)
        data = dict(metric_name=metric_name, value=float(metric_value),
                    step=step, epoch=epoch, timestamp=now)
        endpoint = f"{self._endpoint}/{self._prefix}"
        # A timeout keeps a slow server from blocking training (10 s is an arbitrary choice)
        requests.post(endpoint, json=data, headers=self._headers, timeout=10)
```
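Both exporters share the same interface, so they can also be combined without touching `train_model`. The sketch below defines a hypothetical `CompositeExporter` that forwards each value to several exporters, for example a `FileExporter` and an `ApiExporter`. The interface is again a simplified copy without TensorFlow, and `ListExporter` stands in for the real implementations.

```python
import abc
from typing import List, Sequence, Tuple, Union

MetricValue = Union[float, int]  # simplified: tf.Tensor left out


class BaseMetricExporter(abc.ABC):
    @abc.abstractmethod
    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        """Export one scalar metric."""


class CompositeExporter(BaseMetricExporter):
    """Hypothetical exporter that fans each value out to several exporters."""

    def __init__(self, exporters: Sequence[BaseMetricExporter]):
        self._exporters = list(exporters)

    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        for exporter in self._exporters:
            exporter.write_scalar(metric_name, metric_value, step, epoch)


class ListExporter(BaseMetricExporter):
    """Stand-in for FileExporter/ApiExporter that records calls in a list."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, float, int, int]] = []

    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        self.calls.append((metric_name, float(metric_value), step, epoch))


first, second = ListExporter(), ListExporter()
composite = CompositeExporter([first, second])
composite.write_scalar("evaluation_loss", 0.25, 0, 3)
```

With the real classes, this could be `CompositeExporter([FileExporter(output_folder), ApiExporter(endpoint, "treinamento 001")])`. In this sketch, an exception raised by one exporter (for example a network error) stops the loop, so any exporters after it do not receive that value.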

endpoint = "http://metric"

metric_exporter = ApiExporter(endpoint, "treinamento 001")

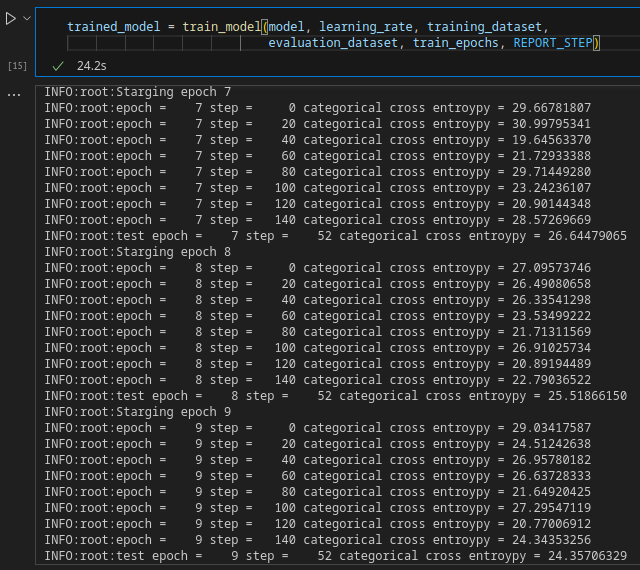

trained_model = train_model(model, learning_rate, training_dataset,

evaluation_dataset, train_epochs, REPORT_STEP, metric_exporter)

#################

output_folder = "~/treinamento-001/metrics"

metric_exporter = FileExporter(output_folder, "treinamento 001")

trained_model = train_model(model, learning_rate, training_dataset,

evaluation_dataset, train_epochs, REPORT_STEP, metric_exporter)

################

trained_model = train_model(model, learning_rate, training_dataset,

evaluation_dataset, train_epochs, REPORT_STEP)
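The last call works because `metric_exporter` defaults to `None` and `train_model` checks it before every write. A common alternative is the Null Object pattern. It is sketched here as an assumption, not as the library's design: a hypothetical `NullExporter` accepts every call and does nothing, so the training loop can call `write_scalar` without a guard. The interface is a simplified copy without TensorFlow.

```python
import abc
from typing import Optional, Union

MetricValue = Union[float, int]  # simplified: tf.Tensor left out


class BaseMetricExporter(abc.ABC):
    @abc.abstractmethod
    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        """Export one scalar metric."""


class NullExporter(BaseMetricExporter):
    """Hypothetical no-op exporter used when no exporter is provided."""

    def write_scalar(self, metric_name: str, metric_value: MetricValue,
                     step: int, epoch: int) -> None:
        pass  # intentionally discards the metric


def resolve_exporter(metric_exporter: Optional[BaseMetricExporter]) -> BaseMetricExporter:
    """Replaces None with a NullExporter once, at the top of the training function."""
    return metric_exporter if metric_exporter is not None else NullExporter()


exporter = resolve_exporter(None)
exporter.write_scalar("training_loss", 0.7, step=0, epoch=0)  # no `if` needed
```

The public signature can keep `Optional[BaseMetricExporter] = None`, so backward compatibility is unchanged. Only the internal `if` checks go away.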