Docker and Kubernetes 101

Omar Wit

Mission Critical Engineer @ Schuberg Philis

owit@schubergphilis.com

@owit

Andy Repton

Mission Critical Engineer @ Schuberg Philis

arepton@schubergphilis.com

@SethKarlo

Agenda

- Intro

- What is a container?

- Lab: Working with Images

- What is Kubernetes?

- Lab: Getting started with kubectl

- Lab: Developing a WordPress website in Kubernetes

- Lab: Bringing your App to production

Important links for today

- https://sbp.link/bkwi-slack

- https://sbp.link/bkwi-github

- https://sbp.link/bkwi-slides

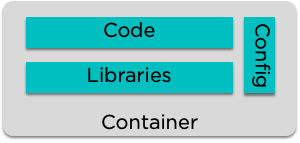

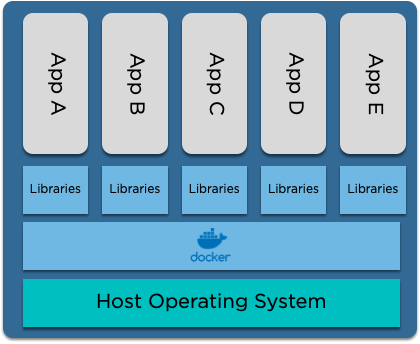

What is a container?

A container is a packaged unit of software

- Put all of your code and dependencies together

- Only include what you need

- Isolate your code from the rest of the operating system

- Share the host kernel

Docker - a current standard

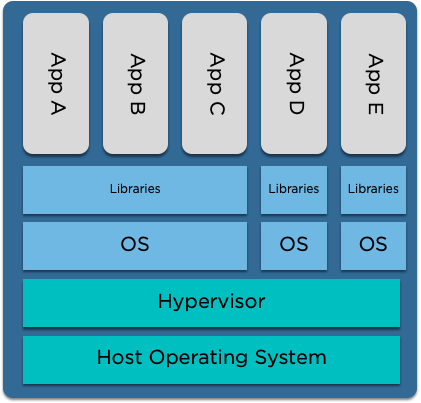

What's the difference from a VM?

Immutable vs. Converging infrastructure

Why should you use them?

Example use cases of Docker containers in day-to-day life

What questions do you have about them?

Lab: Working with images

Building a Docker Image

Building our image

- Docker images are built using a Dockerfile

- Make sure you're inside the workshop repository and create a new file named 'Dockerfile' using your favourite text editor

# If on Linux/Mac:

$ cd docker-and-kubernetes-101/lab01/src

$ vim Dockerfile

# If on Windows:

$ Set-Location docker-and-kubernetes-101\lab01\src

# Use Notepad to create the file named 'Dockerfile'

Building our Dockerfile

# Base image from the default nginx image

FROM nginx:1.15-alpine

Inside the Dockerfile, we start with the FROM keyword. This pulls in a preexisting container image so we can build on top of it.

Here, we're going to start with the official nginx image (when no registry URL is given, Docker defaults to Docker Hub) and use its 1.15-alpine tag.
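When parts of an image reference are left out, Docker fills in defaults: the Docker Hub registry, the `library` namespace for official images, and the `latest` tag. Below is a minimal Python sketch of that expansion. It is a simplified model rather than Docker's actual parser; the helper `expand_image_ref` is hypothetical, and digests (`@sha256:...`) are not handled.

```python
DEFAULT_REGISTRY = "docker.io"  # Docker Hub
DEFAULT_TAG = "latest"


def expand_image_ref(ref: str) -> str:
    """Expand a short image reference into registry/namespace/image:tag.

    Simplified model of Docker's defaulting rules; digests are not handled.
    """
    # The tag is whatever follows the last ':' that comes after the last '/'.
    name, tag = ref, DEFAULT_TAG
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        name, tag = ref[:last_colon], ref[last_colon + 1:]

    # The first path component is a registry only if it looks like a host name.
    parts = name.split("/")
    first = parts[0]
    if len(parts) > 1 and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, parts[1:]
    else:
        registry, path = DEFAULT_REGISTRY, parts

    # Official Docker Hub images live under the 'library' namespace.
    if registry == DEFAULT_REGISTRY and len(path) == 1:
        path = ["library"] + path

    return f"{registry}/{'/'.join(path)}:{tag}"


if __name__ == "__main__":
    for ref in ["nginx:1.15-alpine", "sbpdemo/bkwi-omar",
                "eu.gcr.io/pur-owit-playground/bkwi:lab01"]:
        print(ref, "->", expand_image_ref(ref))
```

So `FROM nginx:1.15-alpine` really means `docker.io/library/nginx:1.15-alpine`, while an image in another registry (like the eu.gcr.io images used later) must spell out its host.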

# Docker format:

<repository>/<image>:<tag>

Building our Dockerfile

# Base image from the default nginx image

FROM nginx:1.15-alpine

# Set /usr/share/nginx/html as the directory where our site resides

WORKDIR /usr/share/nginx/html

Next, we'll set the WORKDIR for the container. This is the directory that the following instructions (such as COPY) and the container's process run from.

Every instruction in the Dockerfile creates a new build step, and those that change the filesystem (such as COPY) add a new layer. Layers that already exist on the host are reused rather than recreated. Docker keeps track of these using a hash.
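A toy Python model of that cache, assuming each step's key is a hash of its parent's key, the instruction text and (for COPY) the copied bytes. Real Docker cache keys differ in detail, and `layer_key` and `build` are hypothetical names; the point is that changing one step invalidates that step and every step after it.

```python
import hashlib


def layer_key(parent_key: str, instruction: str, content: bytes = b"") -> str:
    """Cache key for one build step: hash of the parent key, the instruction
    text and (for COPY) the bytes being copied."""
    h = hashlib.sha256()
    h.update(parent_key.encode() + b"\n")
    h.update(instruction.encode() + b"\n")
    h.update(content)
    return h.hexdigest()


def build(steps, cache):
    """Run the steps in order, reusing any step whose key is already cached.

    steps: list of (instruction, content) pairs; cache: set of known keys.
    Returns one "CACHED" or "BUILT" marker per step.
    """
    parent, results = "", []
    for instruction, content in steps:
        key = layer_key(parent, instruction, content)
        if key in cache:
            results.append("CACHED")
        else:
            cache.add(key)
            results.append("BUILT")
        parent = key  # the next step builds on top of this one
    return results


if __name__ == "__main__":
    cache = set()
    v1 = [
        ("FROM nginx:1.15-alpine", b""),
        ("WORKDIR /usr/share/nginx/html", b""),
        ("COPY site .", b"<h1>Hello</h1>"),
    ]
    print(build(v1, cache))  # first build: every step is built
    v2 = v1[:2] + [("COPY site .", b"<h1>Hello again</h1>")]
    print(build(v2, cache))  # only the COPY step is rebuilt
```

This is also why slow-changing steps belong near the top of a Dockerfile and the frequently edited site content near the bottom.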

Building our Dockerfile

# Base image from the default nginx image

FROM nginx:1.15-alpine

# Set /usr/share/nginx/html as the directory where our app resides

WORKDIR /usr/share/nginx/html

# Copy the source of the website to the container

COPY site .

And now we can COPY our website into the container.

COPY sources are resolved relative to the build context (the directory passed to docker build), so you cannot COPY files from outside it, not even with an absolute path. For example, this won't work:

# Base image from the default nginx image

FROM nginx:1.15-alpine

# Set /usr/share/nginx/html as the directory where our app resides

WORKDIR /usr/share/nginx/html

# Copy /etc/passwd (outside the build context)

COPY /etc/passwd /usr/share/nginx/html

$ docker build .

Sending build context to Docker daemon 230.4kB

Step 1/2 : FROM nginx:1.15-alpine

---> df48b68da02a

Step 2/2 : COPY /etc/passwd /usr/share/nginx/html

COPY failed: stat /var/lib/docker/tmp/docker-builder918813293/etc/passwd: no such file or directory
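The error hints at why: the source path was looked up inside the builder's temporary copy of the build context, so /etc/passwd became <context>/etc/passwd. A minimal Python sketch of that resolution rule, modeled on the error above rather than on Docker's code. The helper `resolve_copy_source` is hypothetical, and its error text only roughly mirrors what the builder reports for `..` escapes.

```python
from pathlib import PurePosixPath


def resolve_copy_source(context: str, src: str) -> str:
    """Map a COPY source onto the build context directory.

    A leading '/' is treated as the root of the context, not of the host,
    and '..' may not climb above the context root.
    """
    parts = []
    for part in PurePosixPath(src).parts:
        if part == "/":
            continue  # absolute paths are rooted at the build context
        if part == "..":
            if not parts:
                raise ValueError(f"forbidden path outside the build context: {src}")
            parts.pop()  # step up, but only within the context
            continue
        parts.append(part)
    return str(PurePosixPath(context, *parts))


if __name__ == "__main__":
    ctx = "/var/lib/docker/tmp/docker-builder918813293"
    print(resolve_copy_source(ctx, "site"))
    print(resolve_copy_source(ctx, "/etc/passwd"))  # not the host's /etc/passwd
```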

Building our Dockerfile

# Base image from the default nginx image

FROM nginx:1.15-alpine

# Set /usr/share/nginx/html as the directory where our app resides

WORKDIR /usr/share/nginx/html

# Copy the source of the website to the container

COPY site .

# Expose port 80

EXPOSE 80

Next, we EXPOSE port 80. This records that the application listens on port 80; to actually reach it from outside the container, we still have to publish the port when we run it.

Tagging our image

Tagging can happen at two points:

1. During build

$ docker build . -t organisation/<image-name>:<tag>

organisation: sbpdemo

image name: bkwi-yourname

2. Afterwards (re-tagging)

$ docker tag <image-id> mysuperwebserver:v1

Running our image locally

$ docker run --name my-website -d sbpdemo/bkwi-omar:latest

...how do we view it?

Re-running with a published port

$ docker run --name my-website -p 8080:80 -d sbpdemo/bkwi-omar:latest

And now we can view it at http://localhost:8080

Why didn't that work? The first container is still using the name my-website, so we need to remove it first

Stopping, restarting and viewing our container

We can view our running containers with:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a1a144e99570 bkwi-omar:latest "nginx -g 'daemon of…" About a minute ago Up About a minute 80/tcp my-website

And force-remove it (-f kills the running container before deleting it), before starting it again:

$ docker rm -f a1a144e99570

$ docker run --name my-website -p 8080:80 -d sbpdemo/bkwi-omar:latest

And now we can actually view it at http://localhost:8080!

Pushing our image to our registry

$ docker push sbpdemo/<my-image>:latest

Why didn't that work? We need to log in to the registry first

Logging in to a registry

$ docker login

Login with your Docker ID to push and pull images from Docker Hub.

Username: sbpdemo

Password: *Pass*

What is Kubernetes?

Kubernetes Building Blocks

- Pods

- Replica Sets

- Deployments

- Services

- Ingresses

- Secrets

- Config Maps

- Daemon Sets

- Stateful Sets

- Namespaces

Pods

When we think of 'Bottom Up', we start with the node:

Pods

Then we add the container runtime

Pods

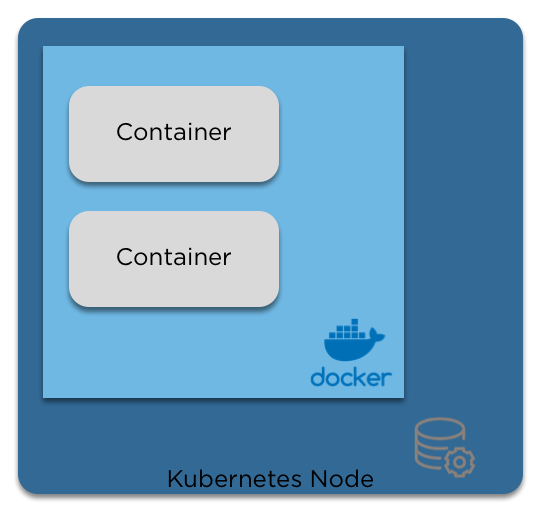

And then the containers

Pods

When we move from the 'physical' layer to Kubernetes, the logical wrapper around containers is a pod

Pods

- Pods are the basic building blocks of Kubernetes.

- A pod is a collection of containers, storage and options dictating how the pod should run.

- Pods are mortal, and are expected to die in order to rebalance the cluster or to upgrade them.

- Each pod has a unique IP in the cluster, and can listen on any ports required for your application.

Pods support readiness and liveness probes (health checks). We’ll go through those later

Pod Spec

apiVersion: v1 # The APiVersion of Kubernetes to use

kind: Pod # What it is!

metadata:

name: pod # The name

namespace: default # The namespace (covered later)

spec:

containers:

- image: centos:7 # The image name, if the url/repo is not specified docker defaults to docker hub

imagePullPolicy: IfNotPresent # When to pull a fresh copy of the image

name: pod # The name again

command: ["ping"] # What to run

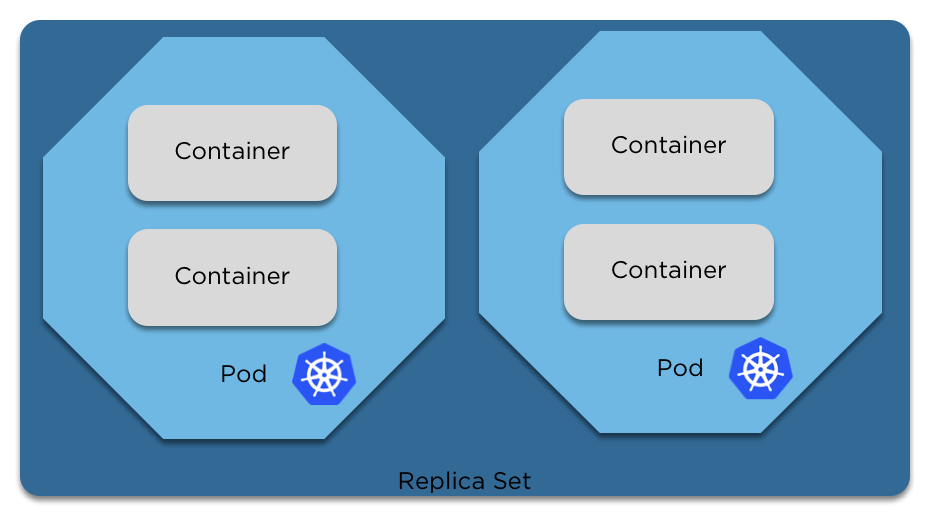

args: ["-c", "4", "8.8.8.8"] # Args to pass to the containerReplica Sets

Replica Sets

- Replica sets define the number of pods that should be running at any given time.

- With a replica set, if a pod dies or is killed and the replica set no longer matches the required number, the replica set controller creates a new pod and the scheduler places it on a node in the cluster.

- If too many pods are running, the replica set will delete a pod to bring it back to the correct number.

- Replica sets can be adjusted on the fly, to scale up or down the number of pods running.

Editing a replica set's pod template has no impact on running pods, only on new ones
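The behaviour described above is a control loop: compare the desired count with the pods that match the selector, then act on the difference. A minimal Python sketch of one pass, with hypothetical pod names; it ignores how the real controller chooses which pods to delete (it prefers, for example, pods that are not yet running or ready).

```python
def reconcile(desired: int, running: list) -> dict:
    """Actions one pass of a simplified ReplicaSet control loop would take.

    running: names of the pods that currently match the selector.
    """
    diff = desired - len(running)
    if diff > 0:
        return {"create": diff, "delete": []}           # too few: start more
    if diff < 0:
        return {"create": 0, "delete": running[diff:]}  # too many: remove the surplus
    return {"create": 0, "delete": []}                  # already converged


if __name__ == "__main__":
    print(reconcile(2, ["replica-set-abcde"]))  # a pod died
    print(reconcile(2, ["replica-set-abcde", "replica-set-fghij", "replica-set-klmno"]))
```

The controller runs this comparison continuously, so scaling is just changing `desired` and letting the next pass converge.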

Replica Set Spec

apiVersion: apps/v1 # New API here!
kind: ReplicaSet # What it is
metadata:
  name: replica-set # Name of the replica set, *not* the pod
  labels: # Used for a bunch of things, such as the service finding the pods (still to come)
    app: testing
    awesome: "true" # Because we are (label values must be strings, hence the quotes)
spec:
  replicas: 2 # How many copies of the pod spec below we want
  selector:
    matchLabels: # Needs to be the same as below, this is how the replica set finds its pods
      awesome: "true"
  template: # This below is just pod spec! Everything valid there is valid here
    metadata:
      labels:
        awesome: "true"
    spec:
      containers:
      - name: pod
        image: centos:7

Deployments

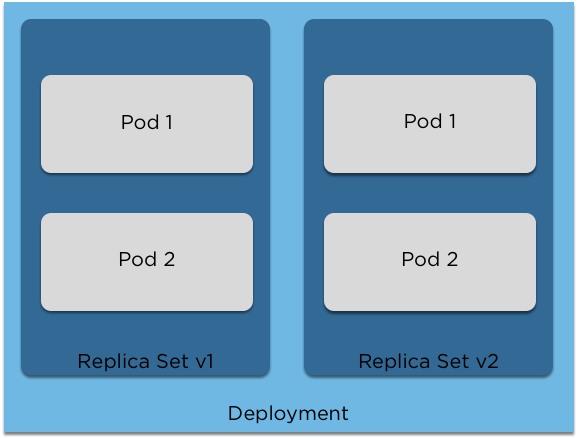

Deployments

- A Deployment manages replica sets, and through them, pods.

- If a deployment's pod template changes, Kubernetes automatically creates a new replica set with the new version and performs a rolling upgrade of your pods.

- A deployment can also be rolled back to a previous version if there is an error.
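A Deployment's default RollingUpdate strategy shifts pods from the old replica set to the new one, bounded by `maxSurge` and `maxUnavailable`. A minimal Python sketch, assuming `maxUnavailable` is 0 and that new pods become ready instantly (the real controller waits for readiness). The old replica set is kept at 0 replicas afterwards, which is what makes a rollback possible.

```python
def rolling_update(replicas: int, max_surge: int = 1) -> list:
    """(old, new) replica counts after each step of a simplified rollout.

    Assumes maxUnavailable=0: new pods are started before old ones are
    removed, so there are never fewer than `replicas` pods and never more
    than `replicas + max_surge`.
    """
    if max_surge < 1:
        raise ValueError("max_surge must be at least 1 when maxUnavailable is 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0:
        new = min(replicas, new + max_surge)  # scale the new replica set up
        steps.append((old, new))
        old = replicas - new                  # then scale the old one down
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in rolling_update(2):
        print(f"old replica set: {old}  new replica set: {new}")
```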

Deployment Spec

apiVersion: apps/v1 # There's that API again
kind: Deployment # Pretty self-explanatory at this point
metadata:
  name: pod-deployment
  labels:
    app: pod
    awesome: "true" # Label values must be strings
spec: # Anyone recognise this? Yep, it's Replica Set Spec
  replicas: 2
  selector:
    matchLabels:
      app: pod
  template:
    metadata:
      labels:
        app: pod
    spec: # Aaaaand yep, this is pod spec
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

Services

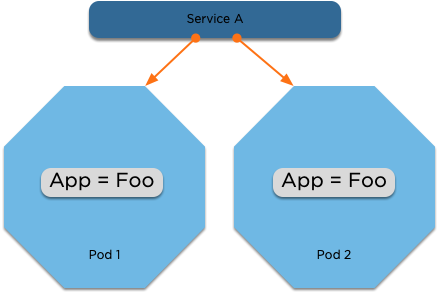

Services

- Services expose your pods to other pods inside the cluster or to the outside world.

- A service uses labels and selectors to find the pods it's responsible for, and then load-balances traffic across them.

- Using a service IP, you can dynamically load balance inside a cluster using a service of type ‘ClusterIP’ or expose it to the outside world using a ‘NodePort’ or a ‘LoadBalancer’ if your cloud provider supports it.

- Creating a service automatically creates a DNS entry, which allows for service discovery inside the cluster.
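Selecting pods by label is a subset check: a pod matches when it carries every key/value pair in the selector, whatever other labels it has. A minimal Python sketch with hypothetical pod IPs; it ignores readiness (real endpoints only include pods that pass their readiness checks) and how traffic is then spread across the matches.

```python
def matches(selector: dict, labels: dict) -> bool:
    """True if every key/value pair in the selector is also on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list) -> list:
    """IPs of the pods a Service with this selector would balance over."""
    if not selector:
        return []  # this sketch treats "no selector" as "selects nothing"
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


if __name__ == "__main__":
    pods = [
        {"ip": "10.0.0.11", "labels": {"app": "MyApp", "tier": "web"}},
        {"ip": "10.0.0.12", "labels": {"app": "MyApp"}},
        {"ip": "10.0.0.13", "labels": {"app": "Other"}},
    ]
    print(endpoints({"app": "MyApp"}, pods))
```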

Service Spec

kind: Service
apiVersion: v1
metadata:
  name: my-service
spec:
  selector: # This needs to match the labels of your deployment and pods!
    app: MyApp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376

Ingresses

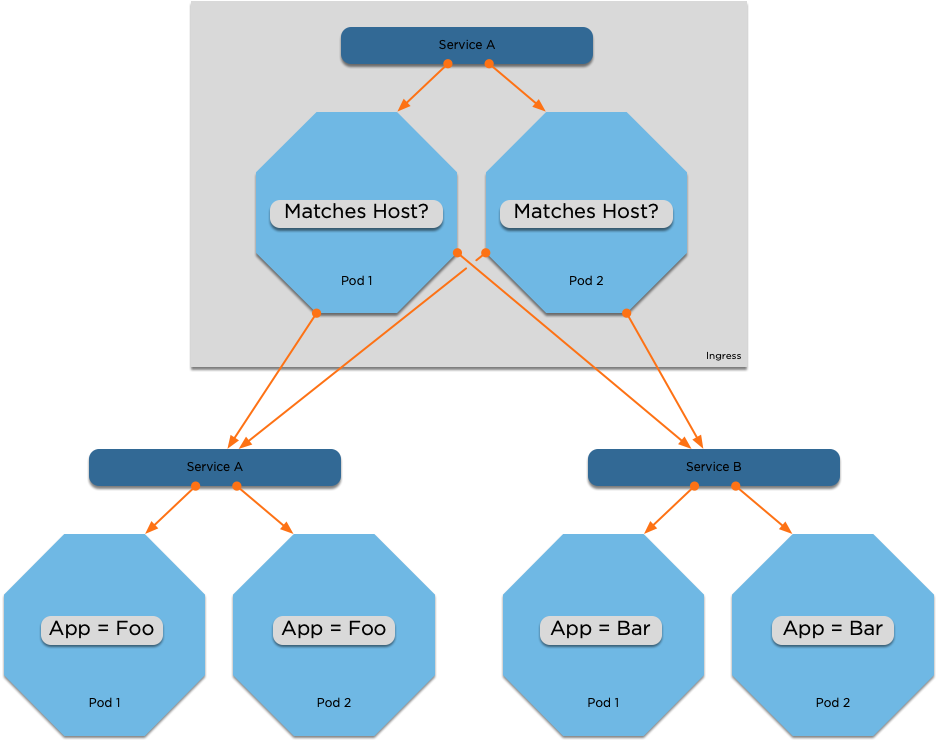

Ingresses

- An Ingress is a resource that allows you to proxy connections to backend services

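- Similar to a normal reverse proxy, but configured through Kubernetes: the routing rules live in the Ingress spec, controller-specific options are set via annotations, and an ingress controller running in the cluster acts on them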
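The fan-out in the spec that follows maps a host plus a path onto a backend service. A minimal Python sketch of that lookup, assuming longest-prefix path matching (as the nginx ingress controller applies to plain paths); the rewrite annotation is not modeled and `route` is a hypothetical helper.

```python
# Routing table taken from the Ingress spec: host -> [(path prefix, (service, port))]
RULES = {
    "foo.bar.com": [
        ("/foo", ("service1", 4200)),
        ("/bar", ("service2", 8080)),
    ],
}


def route(host: str, path: str):
    """Pick the backend for a request, assuming longest-prefix path matching."""
    candidates = [
        (prefix, backend)
        for prefix, backend in RULES.get(host, [])
        if path == prefix or path.startswith(prefix.rstrip("/") + "/")
    ]
    if not candidates:
        return None  # the controller would send this to its default backend
    return max(candidates, key=lambda c: len(c[0]))[1]


if __name__ == "__main__":
    print(route("foo.bar.com", "/foo/index.html"))
    print(route("foo.bar.com", "/bar"))
    print(route("example.com", "/foo"))
```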

Ingress Spec

apiVersion: extensions/v1beta1 # Removed in Kubernetes 1.22; newer clusters use networking.k8s.io/v1
kind: Ingress
metadata:
  name: simple-fanout-example
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: foo.bar.com
    http:
      paths:
      - path: /foo
        backend:
          serviceName: service1
          servicePort: 4200
      - path: /bar
        backend:
          serviceName: service2
          servicePort: 8080

Secrets

- A Kubernetes Secret holds a piece of sensitive information; its values are base64 encoded, which is an encoding, not encryption

- It can be mounted as a file inside of a container in the cluster

- It can also be made available as an environment variable
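Because base64 is only an encoding, the values in the Secret spec can be produced and reversed by anyone. A minimal Python sketch using the standard library (the helper names are hypothetical); `kubectl create secret` does this encoding for you.

```python
import base64


def encode_secret_value(value: str) -> str:
    """What goes in a Secret's data field: the UTF-8 bytes, base64 encoded."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Anyone who can read the Secret can reverse the encoding."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    print(encode_secret_value("admin"))             # YWRtaW4=
    print(decode_secret_value("MWYyZDFlMmU2N2Rm"))  # 1f2d1e2e67df
```

So access to Secrets should be restricted just as carefully as access to the passwords themselves.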

Secret Spec

apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: YWRtaW4=
  password: MWYyZDFlMmU2N2Rm

ConfigMaps

- A config map is a set of key-value pairs, often whole configuration files, stored inside the Kubernetes API.

- You can mount a config map inside a pod to override a configuration file, and if you update the config map in the API, Kubernetes will automatically push out the updated file to the pods that mount it (after a short delay; values consumed as environment variables are not updated).

- Config maps allow you to specify your configuration separately from your code, and together with secrets, allow you to move the differences between your DTAP environments into the Kubernetes API and out of your Docker image.

ConfigMap Spec

apiVersion: v1
data:
  game.properties: |
    enemies=aliens
    lives=3
    enemies.cheat=true
    enemies.cheat.level=noGoodRotten
    secret.code.passphrase=UUDDLRLRBABAS
    secret.code.allowed=true
    secret.code.lives=30
  ui.properties: |
    color.good=purple
    color.bad=yellow
    allow.textmode=true
    how.nice.to.look=fairlyNice
kind: ConfigMap
metadata:
  name: game-config
  namespace: default

DaemonSets

- A daemonset is a workload resource, similar to a deployment, that automatically runs one pod on each node in the cluster. If nodes are added to or removed from the cluster, the daemonset adds or removes pods to match.

- Daemonsets are useful for things like monitoring agents that need to run on each node to gather data.
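Where a replica set reconciles towards a count, a daemonset reconciles towards "one pod per node". A minimal Python sketch of that comparison, with hypothetical node and pod names; node selectors, taints and tolerations (which can exclude nodes) are not modeled.

```python
def daemonset_actions(nodes: set, pods_by_node: dict) -> dict:
    """What a simplified DaemonSet controller would do to reach one pod per node.

    nodes: names of the nodes in the cluster
    pods_by_node: node name -> name of the DaemonSet pod running there
    """
    covered = set(pods_by_node)
    return {
        "create_on": sorted(nodes - covered),                        # nodes without a pod
        "delete": sorted(pods_by_node[n] for n in covered - nodes),  # pods on removed nodes
    }


if __name__ == "__main__":
    nodes = {"node-1", "node-2", "node-3"}
    pods = {"node-1": "awesome-app-a", "node-4": "awesome-app-d"}
    print(daemonset_actions(nodes, pods))
```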

DaemonSet Spec

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: awesome-app
  labels:
    awesome: "true"
spec:
  selector:
    matchLabels:
      name: awesome-app # Must match the template labels below
  template:
    metadata:
      labels:
        name: awesome-app
        awesome: "true"
    spec: # Yep, Pod Spec again
      containers:
      - name: awesome-app
        image: gcr.io/awesome-app:latest
        volumeMounts: # Mount the host's /var/log into the container
        - name: varlog
          mountPath: /var/log
      volumes:
      - name: varlog
        hostPath:
          path: /var/log

StatefulSets

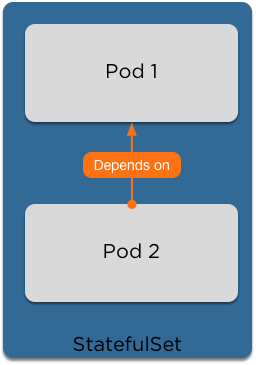

StatefulSets

- StatefulSets (formerly PetSets) are a way to bring up stateful applications that require specific startup rules in order to function.

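- With a StatefulSet, each pod gets a stable name (web-0, web-1, ...) and, through the headless service named in serviceName, a stable entry in the cluster DNS so the pods can find each other.

- By default the pods are started one at a time in order, each waiting for the previous one to be ready. Combined with startup scripts, this allows a master to start first and for replicas to join the master.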
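A minimal Python sketch of the naming and ordering rules, assuming the default cluster domain `cluster.local` and the default OrderedReady pod management policy. Waiting for readiness between steps is not modeled, and the helper names are hypothetical.

```python
def pod_dns_name(statefulset: str, ordinal: int, service: str,
                 namespace: str = "default", domain: str = "cluster.local") -> str:
    """Stable DNS name of one StatefulSet pod behind its headless service."""
    return f"{statefulset}-{ordinal}.{service}.{namespace}.svc.{domain}"


def scale_plan(statefulset: str, current: int, desired: int) -> list:
    """Order in which pods are created (lowest ordinal first) or deleted (highest first)."""
    if desired >= current:
        return [("create", f"{statefulset}-{i}") for i in range(current, desired)]
    return [("delete", f"{statefulset}-{i}") for i in range(current - 1, desired - 1, -1)]


if __name__ == "__main__":
    for action, pod in scale_plan("web", 0, 3):
        print(action, pod, pod_dns_name("web", int(pod.rsplit("-", 1)[1]), "nginx"))
```

Because web-0 is always created first and removed last, it is the natural place for the master.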

StatefulSet Spec

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # has to match .spec.template.metadata.labels
  serviceName: "nginx"
  replicas: 3 # by default is 1
  template:
    metadata:
      labels:
        app: nginx # has to match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: k8s.gcr.io/nginx-slim:0.8
        ports:
        - containerPort: 80
          name: web

Namespaces

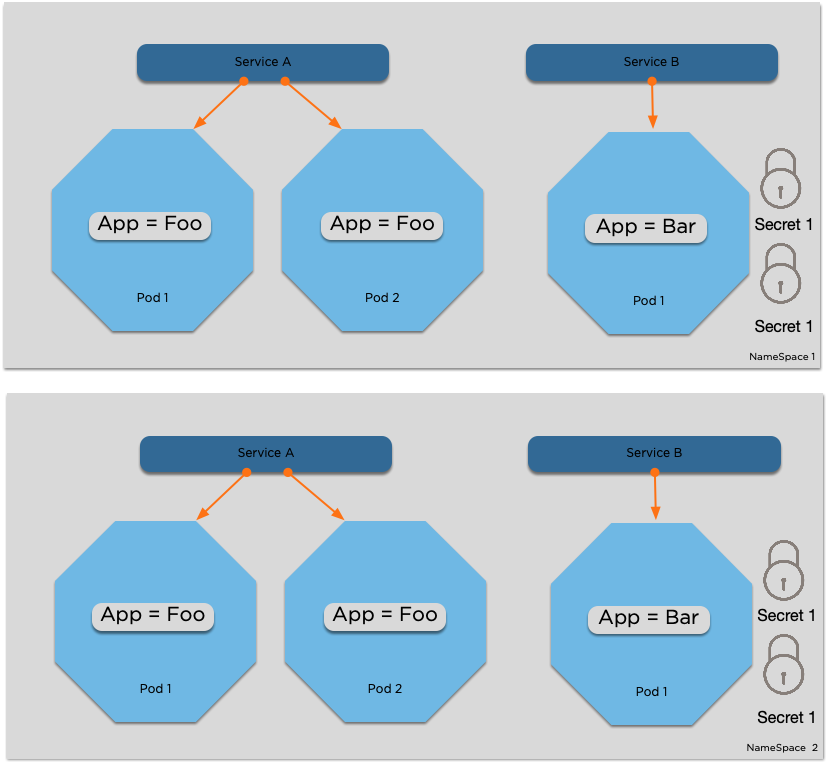

Namespaces

- A namespace is a logical division of other Kubernetes resources

- Most resources (but not all) are namespace bound

- This allows you to have a namespace for acc and prod for example, or one for Bob and one for Omar

- Namespace names are appended to Service names. So, to reach service 'nginx' in namespace 'bob' from namespace 'omar', you can connect to nginx.bob (or the fully qualified nginx.bob.svc.cluster.local, assuming the default cluster domain)
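Short names like nginx.bob work because of the DNS search path a pod gets with the default ClusterFirst DNS policy: `<namespace>.svc.cluster.local`, `svc.cluster.local`, `cluster.local`, with `ndots:5`. A minimal Python sketch of that lookup order, assuming the default cluster domain; the record set and helper names are hypothetical.

```python
def candidate_names(query: str, pod_namespace: str,
                    domain: str = "cluster.local", ndots: int = 5) -> list:
    """Names a pod's resolver tries, in order, for a lookup of `query`."""
    search = [f"{pod_namespace}.svc.{domain}", f"svc.{domain}", domain]
    expanded = [f"{query}.{suffix}" for suffix in search]
    # Names with at least `ndots` dots are tried as-is first.
    return [query] + expanded if query.count(".") >= ndots else expanded + [query]


def resolve(query: str, pod_namespace: str, records: set):
    """Return the first candidate that exists in the (hypothetical) DNS records."""
    for name in candidate_names(query, pod_namespace):
        if name in records:
            return name
    return None


if __name__ == "__main__":
    records = {"nginx.bob.svc.cluster.local"}
    print(resolve("nginx.bob", "omar", records))  # found via the svc.cluster.local suffix
    print(resolve("nginx", "bob", records))       # short name works inside 'bob'
    print(resolve("nginx", "omar", records))      # but not from 'omar'
```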

Namespace Spec

apiVersion: v1
kind: Namespace
metadata:
  name: default

Lab: Hands on with kubectl

Connecting to your clusters

$ kubectl config set-cluster workshop --insecure-skip-tls-verify=true --server https://<replace>

$ kubectl config set-credentials bkwi-admin --username <username> --password <password>

$ kubectl config set-context workshop --cluster workshop --user bkwi-admin

$ kubectl config use-context workshop

$ kubectl get node

Deploying a container on your cluster

Creating our deployment

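$ kubectl run my-website --image eu.gcr.io/pur-owit-playground/bkwi:lab01

(On kubectl 1.18 and newer, kubectl run only creates a bare pod; use kubectl create deployment my-website --image eu.gcr.io/pur-owit-playground/bkwi:lab01 to get a deployment instead.)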

Using a Port-Forward to view our site

$ kubectl port-forward my-website-<randomid> 9000:80

What is it, why is it helpful and how does it work?

Now we can view it at http://localhost:9000

Patching our image

$ kubectl set image deployment my-website *=eu.gcr.io/pur-owit-playground/bkwi:lab02

$ # If your shell expands the asterisk, escape it:

$ kubectl set image deployment my-website \*=eu.gcr.io/pur-owit-playground/bkwi:lab02

Debugging why your container didn't work

$ kubectl get pods

$ kubectl describe pod

$ kubectl logs <name-of-the-pod>

Fixing your container

- With the image you have from Lab 1, how can you fix this to get it working?

Fixing your deployment to use your new image

- Using your image you built in lab01

- Update your deployment to use the new image

- Port forward again to view your image

Lab: Building a WordPress site

Deploying the Kubernetes dashboard is super simple

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Viewing the dashboard

$ kubectl proxy

Then click here:

http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

Getting the token to log in. What is a Service Account?

Creating your namespace for your WordPress deployment

$ kubectl create namespace 101-k8s

Deploying mysql

Let's look at 03.2/mysql-deployment.yml together.

Edit the MYSQL_ROOT_PASSWORD variable to be a password of your choice

We can deploy it using:

$ kubectl create -f 03.2/mysql-deployment.yml

How do we reach our MySQL?

$ kubectl describe service wordpress-mysql

Services of type ClusterIP can be found inside the cluster by their name as a DNS record!

Deploying Wordpress

Let's look at 03.2/wordpress.yml together.

Edit the WORDPRESS_DB_PASSWORD to be what you set above.

We can deploy it using:

$ kubectl create -f 03.2/wordpress.yml

Adding a Persistent Volume

As containers are usually immutable (anything written inside them is lost when they are replaced), a persistent volume allows us to keep our data across a restart

Adding a Persistent Volume

Let's look at 03.3/persistent-volume.yml together.

We can deploy it using:

$ kubectl create -f 03.3/persistent-volume.yml

Updating our site to use our volume

Let's look at 03.3/wordpress.yml together (note, NOT 03.2!).

Edit the WORDPRESS_DB_PASSWORD to be what you set above.

We can deploy it using:

$ kubectl apply -f 03.3/wordpress.yml

$ kubectl apply -f 03.3/mysql-deployment.yml

Install your WordPress site

We can use the kubectl port-forward command we learned before to reach our websites

Lab: Bring our app to production

Adding requests and limits

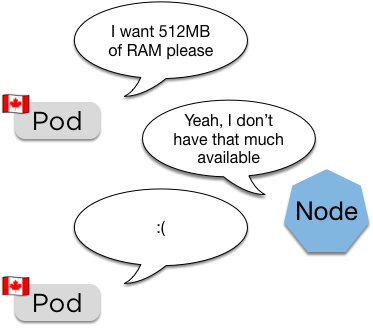

Requests

(Canadian pods are very polite)

spec:
  containers:
  - resources:
      requests:
        memory: "64Mi"
        cpu: "250m"

- Requests are the pod asking for a certain amount of RAM and CPU to be available

- The scheduler uses this to place the pod on a node that still has that much unreserved capacity

If no node has room, the pod remains Pending until space becomes available (pending pods are also what triggers the cluster autoscaler to add nodes)
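Requests are written as Kubernetes quantities: "250m" is 250 millicores (a quarter of a CPU) and "64Mi" is 64 × 2^20 bytes. A minimal Python sketch that parses these and places a pod on the first node with enough unreserved capacity. The first-fit choice is a simplification (the real scheduler filters and then scores nodes), and the node capacities and helper names are hypothetical.

```python
# Binary (Ki, Mi, ...) and decimal (k, M, ...) suffixes used in Kubernetes quantities.
MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def cpu_millicores(quantity: str) -> int:
    """'250m' -> 250, '2' -> 2000, '0.5' -> 500."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """'64Mi' -> 67108864, '1G' -> 1000000000, '512' -> 512."""
    for suffix in sorted(MEMORY_SUFFIXES, key=len, reverse=True):  # 'Mi' before 'M'
        if quantity.endswith(suffix):
            return int(quantity[:-len(suffix)]) * MEMORY_SUFFIXES[suffix]
    return int(quantity)


def schedule(request: dict, free_by_node: dict):
    """First node whose unreserved capacity covers the request, else None (Pending)."""
    cpu = cpu_millicores(request["cpu"])
    memory = memory_bytes(request["memory"])
    for node, free in free_by_node.items():
        if free["cpu"] >= cpu and free["memory"] >= memory:
            return node
    return None


if __name__ == "__main__":
    nodes = {"node-1": {"cpu": 200, "memory": 2**30},
             "node-2": {"cpu": 1000, "memory": 2**30}}
    print(schedule({"cpu": "250m", "memory": "64Mi"}, nodes))  # node-1 is too busy
    print(schedule({"cpu": "4", "memory": "64Mi"}, nodes))     # None: stays Pending
```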

Let's look at 04.2/wordpress.yml together.

Edit the MYSQL_ROOT_PASSWORD variable to be a password of your choice

We can deploy it using:

$ kubectl apply -f 04.2/wordpress.yml

Adding Requests

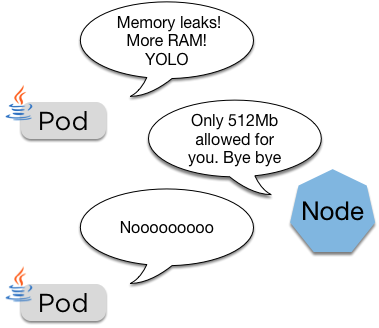

Limits

spec:
  containers:
  - resources:
      limits:
        memory: "128Mi"
        cpu: "500m"

- Limits are a hard limit on the resources the pod is allowed

- These are passed to the container runtime (usually Docker), which enforces them

If a container goes over its memory limit it is OOM-killed and restarted; if it tries to use more CPU than its limit it is throttled, not killed
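On Linux nodes, a CPU limit becomes a CFS quota: the container may use at most that share of CPU time in each scheduling period (100 ms by default), and is throttled for the rest of the period once it has used it. A minimal Python sketch of the arithmetic, assuming the default period; the helper name is hypothetical.

```python
CFS_PERIOD_US = 100_000  # default CFS scheduling period: 100 ms


def cpu_quota_us(limit_millicores: int, period_us: int = CFS_PERIOD_US) -> int:
    """CPU time (in microseconds) per period a container may use before throttling."""
    return limit_millicores * period_us // 1000


if __name__ == "__main__":
    # A 500m limit allows 50 ms of CPU time in every 100 ms period.
    print(cpu_quota_us(500))
```

Memory has no equivalent "wait until the next period", which is why exceeding a memory limit ends in an OOM kill instead.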

Let's look at 04.3/wordpress.yml together.

Edit the MYSQL_ROOT_PASSWORD variable to be a password of your choice

We can deploy it using:

$ kubectl apply -f 04.3/wordpress.yml

Adding Limits

Adding a quota to our namespace

Quotas

apiVersion: v1
kind: ResourceQuota
metadata:
  name: k8s-quota
spec:
  hard:
    requests.cpu: "2"
    requests.memory: 2Gi
    limits.cpu: "2"
    limits.memory: 2Gi

- Quotas limit the total resources allowed

- Quotas are assigned per namespace
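A quota is checked when a pod is created: the namespace's running totals plus the new pod must stay within every hard limit, and a pod that leaves out a constrained value (for example, no CPU limit while limits.cpu is in the quota) is rejected. A minimal Python sketch of that admission check, with quantities already converted to millicores and bytes; the helper name and messages are illustrative, not the API server's.

```python
HARD = {  # the k8s-quota above, in millicores and bytes
    "requests.cpu": 2000,
    "requests.memory": 2 * 2**30,
    "limits.cpu": 2000,
    "limits.memory": 2 * 2**30,
}


def admit(pod: dict, used: dict, hard: dict = HARD):
    """Admit a pod only if the namespace's totals stay within every hard limit."""
    for key, limit in hard.items():
        if key not in pod:
            return False, f"must specify {key}"
        if used.get(key, 0) + pod[key] > limit:
            return False, f"exceeded quota: {key}"
    for key in hard:  # admitted: charge the pod against the quota
        used[key] = used.get(key, 0) + pod[key]
    return True, "admitted"


if __name__ == "__main__":
    # Requests and limits from the earlier examples: 250m/64Mi and 500m/128Mi.
    pod = {"requests.cpu": 250, "requests.memory": 64 * 2**20,
           "limits.cpu": 500, "limits.memory": 128 * 2**20}
    used = {}
    count = 0
    while admit(pod, used)[0]:
        count += 1
    print(count, "pods fit;", admit(pod, used)[1])
```

With those numbers the CPU limit is the binding constraint: four pods at 500m use up the 2 CPUs of limits.cpu.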


Let's look at 04.4/quota.yml together.

We can deploy it using:

$ kubectl create -f 04.4/quota.yml

Adding a Namespace Quota

Moving our passwords into a secret

Creating a secret to hold our MySQL password:

$ kubectl -n 101-k8s create secret generic mysql-pass --from-literal=password=YOUR_PASSWORD

Editing our deployment to use the new secret

Let's look at the files in 04.5 together.

We can deploy it using:

$ kubectl apply -f 04.5/

Making our app available to the internet

Exposing our WordPress to the world

$ kubectl get svc wordpress

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

wordpress 100.70.168.153 <none> 80/TCP 3m

At the moment our service is type 'ClusterIP'. Let's edit that and make it type 'LoadBalancer':

$ kubectl edit svc wordpress

service "wordpress" edited

$ kubectl get svc wordpress -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

wordpress 100.70.168.153 a252b84715a5a11e788170a75658e305-2028720367.eu-west-1.elb.amazonaws.com 80:30867/TCP 4m app=wordpress,tier=frontend

And now we can view our website on the web!

Questions and wrap up

Credits, License and Thanks

Thanks to:

Omar Wit, for helping to build this deck

License:

You may reuse this, but must credit the original!