Processor Architecture

Objectives

- show understanding of the differences between RISC and CISC processors

- show understanding of the importance/use of pipelining and registers in RISC processors

- show understanding of interrupt handling on CISC and RISC processors

- show awareness of the four basic computer architectures: SISD, SIMD, MISD, MIMD

- show awareness of the characteristics of massively parallel computers

Research

What kind of processor (CPU) is used in different devices?

| | Your smartphone | A tablet (different OS from your phone) | Apple MacBook Pro | Gaming laptop | Dell PowerEdge R940 rack server |
|---|---|---|---|---|---|
| Model of the device | | | | | PowerEdge R940 |
| CPU brand | | | | | Intel |
| CPU model | | | | | Xeon® Gold 5215 |
| Architecture | | | | | x86-64 |
| Clock frequency | | | | | 2.7 GHz |
| Cores | | | | | 10 |

https://www.anandtech.com/show/16226/apple-silicon-m1-a14-deep-dive

CISC

- Complex Instruction Set Computers

- Each CPU instruction is more complex

- More instruction types

- Fewer lines of code per task, but each instruction takes longer to complete

- More complex hardware design; often requires a microprogrammed control unit

RISC

- Reduced Instruction Set Computers

- Shorter, simpler instructions

- Fewer types of instruction

- More lines of code to complete some tasks, but each instruction runs faster

- Simpler hardware design; the control unit is hard-wired

Microprogrammed control unit

- Machine instructions are not executed directly by the control unit. Each one is first translated into micro-instructions, which the control unit then performs. This is usually found in CISC CPUs

- The micro-instructions are stored in a ROM inside the CPU

- The micro-instructions are referred to as "firmware"

- In RISC, the control unit is hard-wired, meaning that instructions are handled directly in hardware
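The idea of translating one machine instruction into several micro-instructions can be sketched as a lookup table. This is only an illustration: the micro-instruction names and the `MICROCODE_ROM` table are invented here and do not describe any real CPU.

```python
# Hypothetical microprogram ROM: each machine instruction maps to a fixed
# sequence of micro-instructions. All names are invented for illustration.
MICROCODE_ROM = {
    "MULT": ["read_operand_a", "read_operand_b", "alu_multiply", "write_result"],
    "ADD": ["read_operand_a", "read_operand_b", "alu_add", "write_result"],
}


def decode_to_micro_instructions(opcode):
    """Look up the micro-instruction sequence for one machine instruction."""
    return MICROCODE_ROM[opcode]


# The control unit would step through these one at a time.
for step in decode_to_micro_instructions("MULT"):
    print(step)
```

A hard-wired control unit has no such table: the decoding logic is built directly into the circuitry.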

CISC

- Intel x86 CPUs are examples of CISC

- Example: multiplying the two numbers stored at 0x001 and 0x002 and storing the result in 0x001 takes a single instruction:

MULT 0x001, 0x002

RISC

- Examples include ARM-based CPUs (found in mobile devices)

- The same operation on a RISC processor becomes three simpler instructions:

LDD 0x001

MUL 0x002

STO 0x001
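To check that the two versions do the same work, here is a minimal Python sketch. It assumes a very simplified machine: memory is a dictionary and the RISC version uses a single accumulator register. The function names `run_cisc` and `run_risc` are made up for this example.

```python
def run_cisc(memory):
    """MULT 0x001, 0x002: one instruction reads both operands, multiplies, stores."""
    memory[0x001] = memory[0x001] * memory[0x002]
    return 1  # number of instructions executed


def run_risc(memory):
    """LDD / MUL / STO: three simple steps through an accumulator register."""
    program = [("LDD", 0x001), ("MUL", 0x002), ("STO", 0x001)]
    acc = 0
    for opcode, address in program:
        if opcode == "LDD":      # load the value at the address into the accumulator
            acc = memory[address]
        elif opcode == "MUL":    # multiply the accumulator by the value at the address
            acc = acc * memory[address]
        elif opcode == "STO":    # store the accumulator back to memory
            memory[address] = acc
    return len(program)


cisc_memory = {0x001: 6, 0x002: 7}
risc_memory = {0x001: 6, 0x002: 7}
cisc_count = run_cisc(cisc_memory)
risc_count = run_risc(risc_memory)
print(cisc_memory[0x001], cisc_count)  # 42 1
print(risc_memory[0x001], risc_count)  # 42 3
```

Both leave the same result in 0x001. The difference is how the work is divided: one complex instruction versus three simple ones.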

Fetch-decode-execute Cycle

- The fetch-decode-execute cycle in a CPU can be modelled as 5 stages (also refer to the flowchart on p. 87):

- Instruction Fetch (IF)

- Instruction Decode (ID)

- Operand Fetch (OF)

- Instruction Execute (IE)

- Result write back (WB)

- Assuming each stage takes one CPU cycle, an instruction takes 5 cycles to complete if the stages are carried out sequentially

Pipelining

- RISC processors allow more efficient pipelining, which is one of the reasons RISC CPUs are adopted

- Pipelining allows several CPU instructions to be processed in parallel, each at a different stage

- Refer to page 343, fig 18.01

- Assume the CPU has 5 independent hardware units (pipeline stages), each one handling one of the 5 stages

- From the 5th cycle onwards the CPU is fully utilized: every unit is busy and one instruction completes per cycle
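The saving from pipelining can be worked out with a short Python sketch. It assumes the ideal case described above: every stage takes exactly one cycle and nothing ever stalls. The function names are chosen for this example only.

```python
STAGES = ["IF", "ID", "OF", "IE", "WB"]


def sequential_cycles(num_instructions, num_stages=len(STAGES)):
    """Each instruction finishes all stages before the next one starts."""
    return num_instructions * num_stages


def pipelined_cycles(num_instructions, num_stages=len(STAGES)):
    """The first instruction needs num_stages cycles; each later one adds a single cycle."""
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1)


def pipeline_table(num_instructions):
    """Timing diagram: one row per instruction, one column per clock cycle."""
    total = pipelined_cycles(num_instructions)
    rows = []
    for i in range(num_instructions):
        row = [".."] * total
        for s, stage in enumerate(STAGES):
            row[i + s] = stage  # instruction i enters the pipeline at cycle i
        rows.append(row)
    return rows


for row in pipeline_table(4):
    print(" ".join(row))
print(sequential_cycles(4), pipelined_cycles(4))  # 20 8
```

Reading the printed table column by column shows which unit is busy in each cycle. The fifth column is the first one in which an instruction reaches WB while later instructions occupy the earlier stages.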

Think Pair Share

- What problem does an interrupt cause for a pipeline?

- How can an interrupt be handled in a pipelined system?

More on pipeline

- When an interrupt happens, there are two possible implementations for the CPU:

- After WB, remove all contents of the pipeline and set the PC back by 4 (PC - 4), so the discarded instructions are fetched again once the interrupt has been handled (simple, but wastes a few cycles restarting the pipeline)

- Store the state of the pipeline and handle the interrupt (may be more efficient, but requires an extra set of storage)

- There are other cases where the pipeline cannot run at full efficiency, e.g.

- Instructions that depend on the result of a previous instruction

- Branches

- The CPU might have a branch predictor circuit that guesses the outcome of a branch (http://users.cs.fiu.edu/~downeyt/cop3402/prediction.html)
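One common textbook way for a predictor to "guess" is a 2-bit saturating counter. The sketch below is an illustration of that general scheme, not a description of any particular CPU. The class name, the starting counter value, and the example branch history are assumptions made for this example.

```python
class TwoBitPredictor:
    """Counter values 0-1 predict 'not taken'; values 2-3 predict 'taken'."""

    def __init__(self):
        self.counter = 2  # assumed starting state: weakly 'taken'

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        # Move towards 3 when the branch is taken, towards 0 when it is not.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def count_mispredictions(outcomes):
    """Run the predictor over a list of actual branch outcomes (True = taken)."""
    predictor = TwoBitPredictor()
    misses = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            misses += 1  # a wrong guess means the pipeline must be flushed
        predictor.update(taken)
    return misses


# A loop branch: taken 9 times, then not taken once when the loop exits.
loop_branch = [True] * 9 + [False]
print(count_mispredictions(loop_branch))  # 1
```

Each misprediction costs a few cycles because the wrongly fetched instructions have to be removed from the pipeline, so fewer wrong guesses means the pipeline stays full more of the time.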

Parallel computing

- A computer can have multiple processors running in parallel

- Note: pipelining is a form of instruction-level parallelism

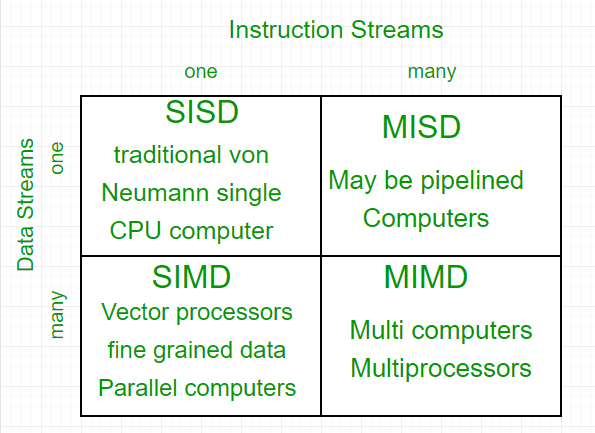

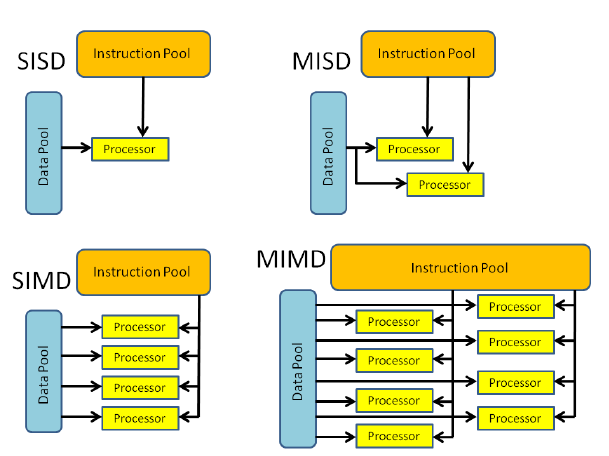

- There are four categories of parallelism

Different modes of parallelism

Flynn’s taxonomy
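Flynn's taxonomy sorts architectures by two things: how many instruction streams and how many data streams are processed at the same time. A small Python sketch of that rule follows; the function name `classify` is made up for this example.

```python
def classify(instruction_streams, data_streams):
    """Return the Flynn category for the given number of streams."""
    first = "S" if instruction_streams == 1 else "M"   # Single or Multiple Instruction
    second = "S" if data_streams == 1 else "M"         # Single or Multiple Data
    return f"{first}I{second}D"


print(classify(1, 1))  # SISD: one instruction stream working on one data stream
print(classify(1, 8))  # SIMD: one instruction applied to many data items at once
print(classify(4, 1))  # MISD: several instruction streams on the same data
print(classify(4, 4))  # MIMD: several processors, each with its own instructions and data
```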

GPU vs CPU illustrated

CPU GPU and TPU

Parallel computer systems

- One example of a multi-computer parallel system is a massively parallel computer

- Traditionally called supercomputers

- Massively parallel computers are MIMD

- The computers communicate over a computer network

- Another example of a multi-computer parallel system is cluster computing, where a large number of networked PCs work together (e.g. Folding@home)
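The basic pattern behind these systems is to split one large job into parts, work on the parts at the same time, then combine the results. Here is a small Python sketch of that pattern. It is only a stand-in: threads on one machine play the role of the separate computers, and the function names and the choice of 4 workers are assumptions for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """The work done by one worker on its own part of the data."""
    return sum(n * n for n in chunk)


def parallel_sum_of_squares(numbers, workers=4):
    # Split the data into roughly equal chunks, one per worker.
    chunk_size = max(1, (len(numbers) + workers - 1) // workers)
    chunks = [numbers[i:i + chunk_size] for i in range(0, len(numbers), chunk_size)]
    # Each worker processes its chunk; the partial results are then combined.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


numbers = list(range(1, 101))
print(parallel_sum_of_squares(numbers))  # 338350
```

In a real massively parallel or cluster system the chunks and partial results travel over the network, so the cost of communication matters as well as the cost of computation.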

https://youtube.com/shorts/X4HoGr0QSBQ?feature=share

Supercomputer built from more than 1,000 PS3 consoles

Folding@home

An example of a cluster computer