Machine Learning Threat Modeling

Threat Modeling ✨Magic✨

Agenda

"Threat modeling is a process of identifying potential security threats and vulnerabilities in a system, application, or network infrastructure. It involves a systematic approach to identify, prioritize, and mitigate security risks. The goal of threat modeling is to understand the security posture of a system and to develop a plan to prevent or minimize the impact of potential attacks."

Threat Modeling RECAP

1. Defining the scope. (What are we working on?)

2. Building a model of the system. (Dataflow diagram)

3. Thinking about what can go wrong. (Threat scenarios)

4. Designing what we can do about it. (Mitigations)

5. Reviewing and iterating on what we could do better. (Bypasses and defense in depth)

| Name | Description |
|---|---|
| STRIDE | Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, and Elevation of Privilege |
| DREAD | Damage, Reproducibility, Exploitability, Affected Users, and Discoverability |
| PASTA | Process for Attack Simulation and Threat Analysis |
| CAPEC | Common Attack Pattern Enumeration and Classification |

Frameworks

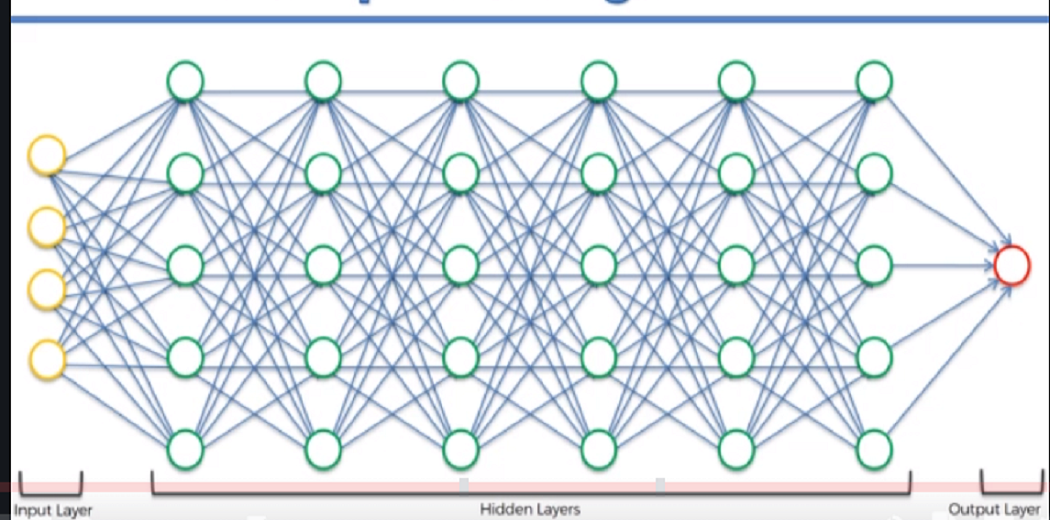

"Machine learning is a subfield of artificial intelligence that involves developing algorithms and statistical models that enable computer systems to learn and improve from experience without being explicitly programmed."

Machine Learning RECAP

Data


Supervised Learning

Most common

Trained on labeled data

Make predictions

Unsupervised Learning

Trained on unlabeled data

Identify clusters of similarity

Reduce data dimensionality

Semi-Supervised Learning

Mix of previous two

Improved performance

Types of Learning

Note: here, "performance" means the quality of the model's predictions, not speed or memory consumption.

Reinforcement Learning

Based on feedback

Rewards system

Commonly seen in game bots

Deep Learning

Deep neural networks

Multiple layers of nodes

Seen in image and speech recognition, natural language processing, recommendations

Transfer Learning

Based on pre-trained models

Fine-tuning for performance

Types of Models

| Name | Description |
|---|---|
| Decision Trees | Split the data into smaller subsets and recursively repeat the process, creating a tree of decisions |
| Random Forests | Combination of multiple decision trees |
| SVMs (Support Vector Machines) | Classification task by separating data into different classes (finding hyperplanes) |
| Naive Bayes | Probabilistic algorithm for classification. Computes probability of data belonging to a given class |

Techniques

ML Threat Modeling

How is it trained?

We need to understand what kind of learning the model will use and what kind of data it will be trained on.

How is it called?

We need to understand how this model will be used: who is calling it and from where.

How is it deployed?

We need to understand where this model is running and with what supporting code.

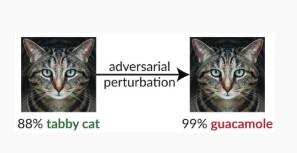

Adversarial Perturbation

The attacker stealthily modifies the query to get a desired response. This breach of model input integrity leads to fuzzing-style attacks that compromise the model's classification performance.


Classification Attacks

Poison Attacks

Model Inversion Attacks

Model Stealing Attacks

Reprogramming Attacks

Supply Chain Attacks

Third-Party Attacks

Membership Inference Attacks

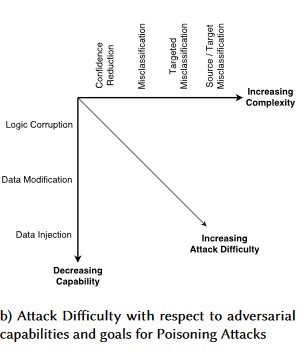

In classification attacks, an attacker attempts to deceive the model by manipulating the input data. The attacker aims to have the input misclassified in a way that benefits them.

Classification Attacks

We have multiple sub-types of this attack:

- Target Misclassification

- Source/Target Misclassification

- Random Misclassification

- Confidence Reduction

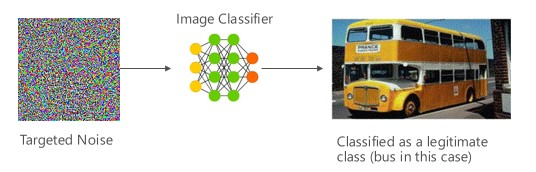

The attacker generates a sample that does not belong to the target class but is classified as such by the model.

The sample can look like random noise to the human eye, but the attacker uses some knowledge of the target machine learning system to generate white noise that is not random and instead exploits specific aspects of the target model.

Target Misclassification

Causing a malicious image to bypass a content filter by injecting targeted noise

- Use Highly Confident Near Neighbor - combines confidence information and nearest neighbor search to reinforce the base model.

Mitigations

- Adversarial inputs are not robust in attribution space, whereas natural inputs are. Check attribution robustness and other such properties to detect adversarial inputs.

An attempt by an attacker to get a model to return their desired label for a given input.

This usually forces a model to return a false positive or false negative. The end result is a subtle takeover of the model’s classification accuracy, whereby an attacker can induce specific bypasses at will.

Source/Target Misclassification

- Implement a minimum time threshold between calls to the API providing classification results.

- Feature Denoising

- Train with known adversarial samples to build resilience

- Invest in developing monotonic classification with selection of monotonic features

- Use semi-definite relaxation, which outputs a certificate that, for a given network and test input, no attack can force the error to exceed a certain value

- Issue alerts on classification results with high variance between classifiers

Mitigation
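The last mitigation in the list, alerting on high variance between classifiers, can be sketched in a few lines. This is a minimal illustration that assumes several independent classifiers each return a probability for the same class on the same input. The function name `disagreement_alert` and the default `threshold` are hypothetical and would need tuning on benign traffic.

```python
from statistics import pvariance


def disagreement_alert(scores, threshold=0.04):
    """Return True when classifier scores for one input disagree too much.

    scores: probabilities for the same class from independent classifiers.
    threshold: hypothetical variance cut-off, to be tuned on benign traffic.
    """
    if len(scores) < 2:
        raise ValueError("need at least two classifier scores")
    # Population variance of the scores; large values mean the models disagree.
    return pvariance(scores) > threshold


# Three models agree: no alert. One model is fooled: alert.
print(disagreement_alert([0.90, 0.92, 0.88]))
print(disagreement_alert([0.95, 0.10, 0.90]))
```

An input that fools only one model in the ensemble tends to produce this kind of disagreement, which is why the variance is a useful signal to alert on.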

The attacker’s target classification can be anything other than the legitimate source classification

Random Misclassification

Causing a car to identify a stop sign as something else

Mitigation

- Use Highly Confident Near Neighbor - combines confidence information and nearest neighbor search to reinforce the base model.

- Adversarial inputs are not robust in attribution space, whereas natural inputs are. Check attribution robustness and other such properties to detect adversarial inputs.

(Same as Target Misclassification)

An attacker can craft inputs to reduce the confidence level of correct classification, especially in high-consequence scenarios.

This can also take the form of a large number of false positives meant to overwhelm administrators or monitoring systems with fraudulent alerts indistinguishable from legitimate alerts.

Confidence Reduction

Mitigations

- Use Highly Confident Near Neighbor - combines confidence information and nearest neighbor search to reinforce the base model.

- Adversarial inputs are not robust in attribution space, whereas natural inputs are. Check attribution robustness and other such properties to detect adversarial inputs.

(Same as Target Misclassification)

An attacker manipulates the training data used to train the model.

The goal of a poisoning attack is to introduce malicious data points into the training set that will cause the model to make incorrect predictions on new, unseen data

Poison Attacks

The goal of the attacker is to contaminate the machine learning model generated in the training phase, so that predictions on new data will be modified in the testing phase.

Targeted Poison Attacks

Submitting AV software as malware to force its misclassification as malicious and eliminate the use of targeted AV software on client systems

- Define anomaly sensors to look at the data distribution on a day-to-day basis and alert on variations

- Input validation, both sanitization and integrity checking

- Poisoning injects outlying training samples

- Reject-on-Negative-Impact (RONI) defense

- Pick learning algorithms that are robust in the presence of poisoning samples (Robust Learning)

Mitigations
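The Reject-on-Negative-Impact (RONI) idea from the list above can be sketched as follows: train once with and once without a candidate batch, and reject the batch if accuracy on a trusted validation set drops. This is only a sketch under stated assumptions. The nearest-centroid learner, the function names, and the `tolerance` parameter are illustrative choices, not part of any specific RONI implementation.

```python
from statistics import fmean


def train_centroids(samples):
    """Fit a nearest-centroid classifier: one mean feature vector per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: [fmean(column) for column in zip(*rows)]
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


def accuracy(centroids, validation):
    hits = sum(predict(centroids, f) == y for f, y in validation)
    return hits / len(validation)


def roni_accepts(trusted, candidate, validation, tolerance=0.0):
    """Accept the candidate batch only if it does not hurt validation accuracy."""
    baseline = accuracy(train_centroids(trusted), validation)
    with_candidate = accuracy(train_centroids(trusted + candidate), validation)
    return with_candidate >= baseline - tolerance


trusted = [([0.0], "benign"), ([1.0], "benign"),
           ([9.0], "malware"), ([10.0], "malware")]
validation = [([0.5], "benign"), ([2.0], "benign"),
              ([8.0], "malware"), ([9.5], "malware")]
clean_batch = [([0.8], "benign")]
# Benign-looking samples submitted with a "malware" label, as in the AV example.
poisoned_batch = [([0.0], "malware"), ([0.5], "malware"),
                  ([1.0], "malware"), ([0.2], "malware")]

print(roni_accepts(trusted, clean_batch, validation))
print(roni_accepts(trusted, poisoned_batch, validation))
```

The defense depends on the validation set being trusted: if an attacker can also influence it, the comparison no longer means much.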

Goal is to ruin the quality/integrity of the data set being attacked.

Many datasets are public/untrusted/uncurated, so this creates additional concerns around the ability to spot such data integrity violations in the first place.

Indiscriminate Data Poisoning

A company scrapes a well-known and trusted website for oil futures data to train their models. The data provider’s website is subsequently compromised via SQL Injection attack. The attacker can poison the dataset at will and the model being trained has no notion that the data is tainted.

Mitigations

Same as in Targeted Data Poisoning

- Define anomaly sensors to look at the data distribution on a day-to-day basis and alert on variations

- Input validation, both sanitization and integrity checking

- Poisoning injects outlying training samples

- Reject-on-Negative-Impact (RONI) defense

- Pick learning algorithms that are robust in the presence of poisoning samples (Robust Learning)
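An anomaly sensor on the daily data distribution, as suggested in the first mitigation, could look like the sketch below. It assumes a single numeric feature (for instance a scraped price) and compares the mean of today's batch against a trusted baseline using a z-score. The function name `drift_alert`, the `max_z` cut-off, and the sample values are hypothetical.

```python
from statistics import fmean, stdev


def drift_alert(baseline, todays_batch, max_z=3.0):
    """Flag today's batch if its mean is far from the baseline mean.

    The z-score uses the standard error of the batch mean, that is
    the baseline standard deviation divided by sqrt(batch size).
    """
    mu = fmean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Degenerate baseline: any change in the mean counts as drift.
        return fmean(todays_batch) != mu
    standard_error = sigma / len(todays_batch) ** 0.5
    z = abs(fmean(todays_batch) - mu) / standard_error
    return z > max_z


baseline = [70.1, 69.8, 70.4, 70.0, 69.7, 70.3, 70.2, 69.9]
print(drift_alert(baseline, [70.0, 70.2, 69.9, 70.1]))  # ordinary day
print(drift_alert(baseline, [75.0, 74.8, 75.3, 75.1]))  # shifted values
```

A mean-based check only catches shifts in the average. Real sensors would likely track several statistics, and a slow, patient poisoning campaign could stay below any single threshold.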

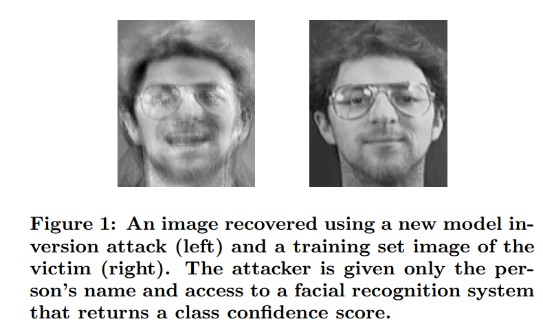

The private features used in machine learning models can be recovered.

This includes reconstructing private training data that the attacker does not have access to. (Hill Climbing)

Model Inversion Attacks

Recovering an image using only the person's name and access to the facial recognition system

- Strong access control

- Rate-limit queries allowed by model

- Implement gates between users/callers and the actual model

- Perform input validation on all proposed queries

- Return only the minimum amount of information needed

Mitigations
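Rate limiting and placing a gate between callers and the model can be combined in one small component. The sketch below assumes a sliding-window limit per caller. The class name `QueryGate`, its parameters, and the injectable `clock` (useful for testing) are illustrative, not taken from any particular framework.

```python
import time
from collections import defaultdict, deque


class QueryGate:
    """Allow at most `max_queries` per caller within `window_seconds`."""

    def __init__(self, max_queries, window_seconds, clock=time.monotonic):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self.clock = clock
        self._history = defaultdict(deque)  # caller id -> recent timestamps

    def allow(self, caller_id):
        now = self.clock()
        recent = self._history[caller_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_queries:
            return False
        recent.append(now)
        return True


gate = QueryGate(max_queries=2, window_seconds=60.0)
print([gate.allow("caller-1") for _ in range(3)])
```

Hill-climbing style inversion needs many queries, so a per-caller budget raises the cost of the attack. It does not stop an attacker who spreads queries across many accounts, which is why it sits alongside strong access control in the list.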

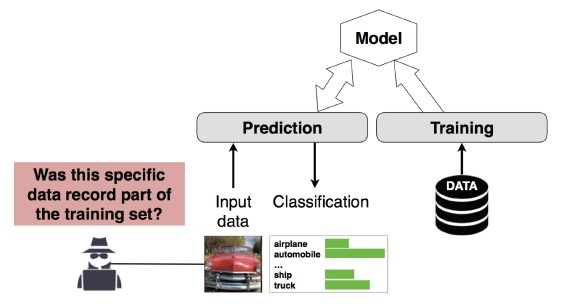

The attacker can determine whether a given data record was part of the model’s training dataset or not.

Membership Inference Attacks

Predict a patient's main procedure (e.g. the surgery the patient went through) based on their attributes (e.g. age, gender, hospital)

- Differential privacy can be an effective mitigation (in theory)

- Usage of neuron dropout and model stacking

Mitigations

Differential privacy is a concept in data privacy that aims to provide strong privacy guarantees for individuals whose data is used in statistical analysis. The idea is to design algorithms that can answer queries about a dataset while protecting the privacy of individuals whose data is included in the dataset.
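As a concrete illustration, the classic Laplace mechanism answers a counting query by adding noise scaled to `1 / epsilon`, since adding or removing one person changes a count by at most 1. The sketch below uses only the standard library. The function names and the sample records are hypothetical, and a production system would rely on a vetted differential privacy library rather than this simplified version.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=random):
    """Answer "how many records match?" with epsilon-differential privacy."""
    true_count = sum(1 for record in records if predicate(record))
    # A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


records = [{"age": age} for age in (30, 45, 52, 61, 70)]
noisy = private_count(records, lambda r: r["age"] > 50, epsilon=1.0)
print(round(noisy, 2))  # close to 3, but different on each run
```

Smaller values of `epsilon` add more noise and give stronger privacy at the cost of accuracy.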

Model stacking, also known as stacked generalization, is a technique in machine learning where multiple predictive models are combined to improve the accuracy of predictions. In model stacking, the output of several individual models is used as input for a final model, which makes the final prediction.

The attackers recreate the underlying model by legitimately querying the model.

Model Stealing Attacks

Calling an API to get scores and, based on some properties of a malware sample, crafting evasions

- Minimize or obfuscate the details returned

- Define a well-formed query for your model inputs

- Return results only in response to a completed query

- Return rounded confidence values

Mitigations
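Two of these mitigations, accepting only well-formed queries and returning rounded confidence values, are simple to sketch. The field names in `EXPECTED_FIELDS` and both function names are hypothetical, chosen only for illustration.

```python
# Hypothetical input schema: field name -> required type.
EXPECTED_FIELDS = {"file_size": int, "entropy": float}


def is_well_formed(query):
    """Accept only queries with exactly the expected fields and types."""
    return set(query) == set(EXPECTED_FIELDS) and all(
        isinstance(query[name], kind) for name, kind in EXPECTED_FIELDS.items()
    )


def minimal_response(probabilities, decimals=1):
    """Return only the top label and a coarsely rounded confidence."""
    label = max(probabilities, key=probabilities.get)
    return {"label": label, "confidence": round(probabilities[label], decimals)}


print(is_well_formed({"file_size": 2048, "entropy": 7.1}))
print(is_well_formed({"file_size": 2048}))
print(minimal_response({"malware": 0.8731, "benign": 0.1269}))
```

Rounding removes the fine-grained score differences that model extraction relies on, while most legitimate callers only need the label and a rough confidence.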

By means of a specially crafted query from an adversary, machine learning systems can be reprogrammed to a task that deviates from the creator's original intent.

Reprogramming Attacks

A sticker is added to an image to trigger a specific response from the network. The attacker trains a separate network to generate the patch, which can then be added to any image to fool the target network and make the car speed up instead of slow down when detecting a crosswalk.

- Strong Authentication and Authorization

- Takedown of the offending accounts

- Enforce SLAs for our APIs

Mitigations

Owing to the large resources (data and computation) required to train algorithms, the current practice is to reuse models trained by large corporations and modify them slightly for the task at hand. These models can be biased or trained with compromised data.

Supply Chain Attacks

- Minimize 3rd-party dependencies for models and data

- Incorporate these dependencies into your threat modeling

- Leverage strong authentication, access control and encryption

Mitigations
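One practical way to bring a third-party model into your threat model is to pin the digest of the exact artifact you reviewed and refuse to load anything else. This goes slightly beyond the list above and is offered as an assumption about how integrity checking might be applied here. The function names and the file used in the example are hypothetical.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, pinned_digest):
    """Return True only if the file matches the digest pinned at review time."""
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(sha256_of_file(path), pinned_digest.lower())
```

A typical use would be to call `verify_artifact("model.bin", pinned)` before deserializing the model, where `pinned` was recorded when the model was first vetted. A matching digest only shows the file is unchanged since then; it says nothing about whether the original model was biased or trained on compromised data.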

The training process is outsourced to a malicious third party who tampers with the training data and delivers a trojaned model that forces targeted misclassifications, such as classifying a certain virus as non-malicious.

Third-Party Attacks

- Train all sensitive models in-house

- Catalog training data or ensure it comes from a trusted third party

- Threat model the interaction between the MLaaS provider and your own systems

Mitigations

?

- https://learn.microsoft.com/en-us/security/engineering/threat-modeling-aiml

- https://www.cs.cmu.edu/~mfredrik/papers/fjr2015ccs.pdf

- https://learn.microsoft.com/en-us/security/engineering/failure-modes-in-machine-learning

- https://www.usenix.org/system/files/conference/usenixsecurity16/sec16_paper_tramer.pdf