Hands-On Session:

How to Use Edge Computing in Real-Time Applications

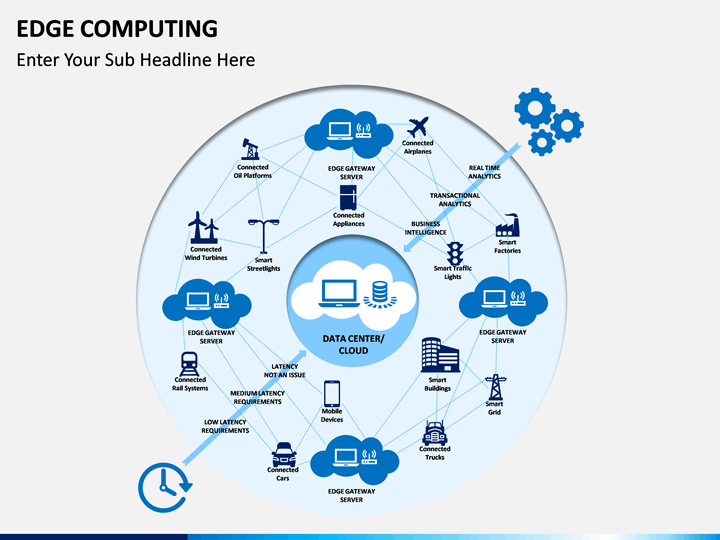

What is Edge Computing?

- In the new era of the Internet of Things (IoT), the number of devices connected to the web is increasing by the millions.

- These devices rely on cloud-based services for data collection, but there is a growing need to bring the data centers closer to the devices themselves.

Machine Learning Models for the Edge

Some Considerations for Running Models on the Pi

- Memory requirements: larger and more complicated models require more memory.

- Model loading time: models that require more memory take longer to load.

- Inference time: if a model takes too long to run inference on the device, you might as well stick to the cloud.
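The three considerations above can be measured before committing to a model. The following is a minimal sketch, not a definitive benchmark: `profile_model`, `load_fn`, and `infer_fn` are hypothetical names introduced here, and the "model" is a plain Python list standing in for a real framework model. Note that `tracemalloc` only sees allocations made through Python's allocator, so memory held by a native inference runtime would likely be undercounted.

```python
import time
import tracemalloc


def profile_model(load_fn, infer_fn, sample, runs=10):
    """Measure load time, mean inference time, and peak traced memory.

    load_fn and infer_fn are hypothetical callables supplied by the caller:
    load_fn() returns a model, infer_fn(model, sample) runs one inference.
    """
    tracemalloc.start()

    # Model loading time
    start = time.perf_counter()
    model = load_fn()
    load_s = time.perf_counter() - start

    # One warm-up call so one-time setup cost is not counted as inference
    infer_fn(model, sample)

    # Mean inference time over several runs
    start = time.perf_counter()
    for _ in range(runs):
        infer_fn(model, sample)
    infer_s = (time.perf_counter() - start) / runs

    # Peak memory traced by Python since tracemalloc.start()
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    return {"load_s": load_s, "infer_s": infer_s, "peak_bytes": peak_bytes}


# Stand-in "model": a list of weights; "inference": a weighted sum.
def dummy_load():
    return [0.5] * 1000


def dummy_infer(model, x):
    return sum(w * x for w in model)


if __name__ == "__main__":
    print(profile_model(dummy_load, dummy_infer, sample=2.0))
```

On the Pi, the dummy functions would be replaced by the real loader and inference call; if the measured inference time exceeds what the application can tolerate, that is the signal to keep the model in the cloud.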

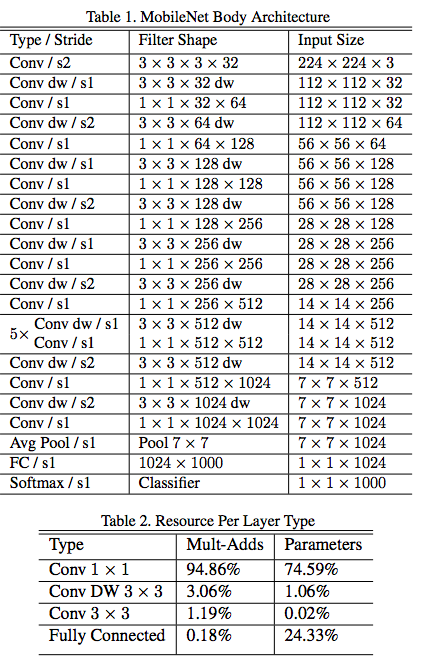

MobileNets

- Uses two global hyperparameters (a width multiplier and a resolution multiplier) that trade off latency against accuracy

- Helps carry out tasks in a timely fashion on a computationally limited platform

- Is a low-latency model

- Is built primarily from depthwise separable convolutions, which were introduced in earlier work and subsequently used in Inception models
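To see why depthwise separable convolutions suit a computationally limited platform, it helps to count multiply-add operations for a single layer. The sketch below uses the cost formulas from the MobileNets paper; the function names are hypothetical, and `alpha` and `rho` are assumed here to be the width and resolution multipliers (the two global hyperparameters). `dk` is the kernel size, `m` and `n` the input and output channel counts, and `df` the feature-map width and height.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds for a standard convolution: dk*dk*m*n*df*df."""
    return dk * dk * m * n * df * df


def separable_conv_cost(dk, m, n, df, alpha=1.0, rho=1.0):
    """Multiply-adds for a depthwise separable convolution.

    alpha (width multiplier) thins the channel counts;
    rho (resolution multiplier) shrinks the feature map.
    """
    m_a, n_a, df_r = alpha * m, alpha * n, rho * df
    depthwise = dk * dk * m_a * df_r * df_r   # one dk x dk filter per input channel
    pointwise = m_a * n_a * df_r * df_r       # 1x1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    dk, m, n, df = 3, 512, 512, 14
    full = standard_conv_cost(dk, m, n, df)
    separable = separable_conv_cost(dk, m, n, df)
    # The ratio equals 1/n + 1/dk**2 when alpha = rho = 1
    print(f"standard:  {full}")
    print(f"separable: {separable}")
    print(f"ratio:     {separable / full:.4f}")
```

With a 3x3 kernel the separable form needs roughly one eighth to one ninth of the computation of a standard convolution, and lowering `alpha` or `rho` reduces the cost further at some expense in accuracy.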