Streams

"We should have some ways of coupling programs like garden hose--screw in another segment when it becomes necessary to massage data in another way. This is the way of IO also." (Doug McIlroy, 1964)

What are Streams?

- Streams are abstractions over a source or destination of data.

- Streams are collections of data, just like arrays or strings.

- The difference is that a stream’s data might not be available all at once, and it doesn’t have to fit in memory.

Why Streams?

- Streams are really powerful when working with large amounts of data, or data that’s coming from an external source one chunk at a time.

- They also give us the power of composability in our code.

- In Unix, streams have proven themselves over the decades as a dependable way to compose large systems out of small components that do one thing well.

- Streams can help to separate your concerns because they restrict the implementation surface area into a consistent interface that can be reused.

- Memory efficiency: you don’t need to load large amounts of data into memory before you can process it.

- Time efficiency: you can start processing data as soon as the first chunk arrives, instead of waiting for the entire payload.

Example 1

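```javascript
const fs = require('fs');
const http = require('http');

let counter = 0;

// Server 1: reads the whole file into memory before responding
const server1 = http.createServer((req, res) => {
  const response = counter++;
  console.log('S1: Received request ' + response);
  fs.readFile(__dirname + '/bigFile', (err, data) => {
    if (err) {
      console.log(err);
      res.statusCode = 500;
      res.end(); // don't leave the request hanging
    } else {
      console.log(`Sending response of ${response}`);
      res.end(data);
    }
  });
});

// Server 2: streams the file to the client chunk by chunk
const server2 = http.createServer((req, res) => {
  const response = counter++;
  console.log('S2: Received request ' + response);
  const reader = fs.createReadStream(__dirname + '/bigFile');
  reader.pipe(res);
});

server1.listen(8000); // example ports, chosen for illustration
server2.listen(8001);
```

Types of Streams (based on data value)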

- All streams created by Node.js APIs operate exclusively on strings and Buffer (or Uint8Array) objects.

- It is possible, however, for stream implementations to work with other types of JavaScript values (with the exception of null, which serves a special purpose within streams).

- Such streams are considered to operate in "object mode".

- For example, Readable.from() creates object-mode streams by default.
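A minimal sketch of an object-mode stream built with the simplified constructor and objectMode: true. The objects pushed here are arbitrary illustrative values; without objectMode, pushing a plain object would produce an ERR_INVALID_ARG_TYPE error.

```javascript
const { Readable } = require('stream');

// objectMode: true lets the stream carry any JS value except null
const objects = new Readable({
  objectMode: true,
  read() {}, // data is pushed manually below
});

objects.push({ id: 1, name: 'first' });
objects.push({ id: 2, name: 'second' });
objects.push(null); // null still signals end-of-stream

const received = [];
objects.on('data', (obj) => received.push(obj.id));
objects.on('end', () => console.log(received)); // [ 1, 2 ]
```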

Types of Stream

- Readable: streams from which data can be read (for example, fs.createReadStream()).

- Writable: streams to which data can be written (for example, fs.createWriteStream()).

- Duplex: streams that are both Readable and Writable (for example, net.Socket).

- Transform: Duplex streams that can modify or transform the data as it is written and read (for example, zlib.createDeflate()).

Readable Stream

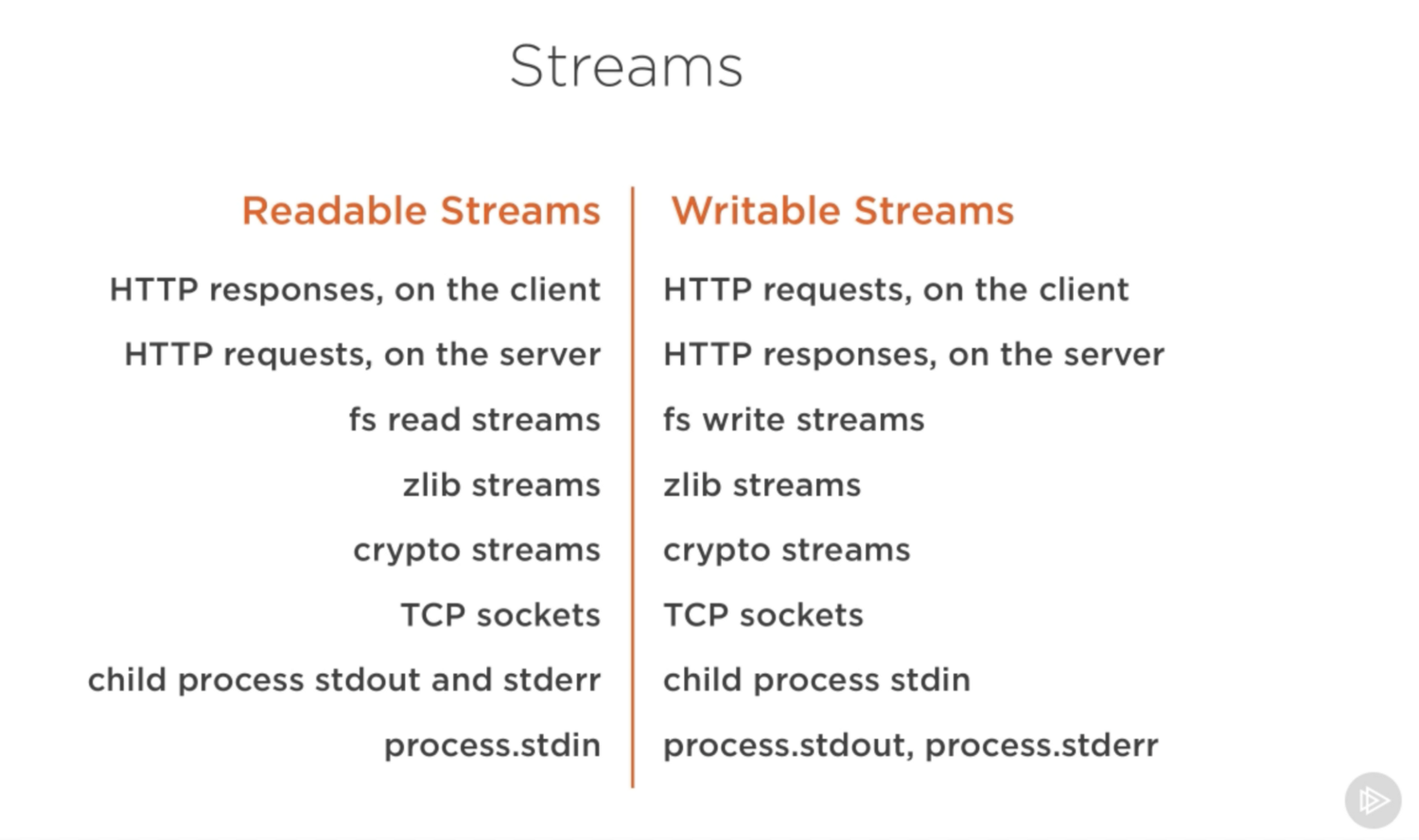

Examples of Readable streams include:

- HTTP responses, on the client

- HTTP requests, on the server

- fs read streams

- zlib streams

- crypto streams

- TCP sockets

- child process stdout and stderr

- process.stdin

Reading Modes

- Flowing mode: data is read from the underlying system automatically and provided to an application as quickly as possible using events via the EventEmitter interface.

- Paused mode: the stream.read() method must be called explicitly to read chunks of data from the stream.

All Readable streams begin in paused mode but can be switched to flowing mode in one of the following ways:

- Adding a 'data' event handler.

- Calling the stream.resume() method.

- Calling the stream.pipe() method to send the data to a Writable.
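The readable.readableFlowing property reflects these states: null before any consumer is attached, true once the stream is flowing, and false after an explicit pause(). A small sketch that records the property as the stream is switched between modes:

```javascript
const { Readable } = require('stream');

const states = [];
const stream = new Readable({ read() {} });

states.push(stream.readableFlowing); // null: no consumer yet

stream.on('data', () => {}); // attaching 'data' switches to flowing mode
states.push(stream.readableFlowing); // true

stream.pause(); // explicitly back to paused mode
states.push(stream.readableFlowing); // false

stream.resume(); // resume() also switches to flowing mode
states.push(stream.readableFlowing); // true

console.log(states); // [ null, true, false, true ]
```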

Readable Stream

- A readable stream is an abstraction for a source from which data can be consumed.

- An example of that is the fs.createReadStream method.

```javascript
const { createReadStream } = require('fs');

const stream = createReadStream('filetoread.txt');
```

All streams are instances of EventEmitter. They emit events that can be used to read and write data.

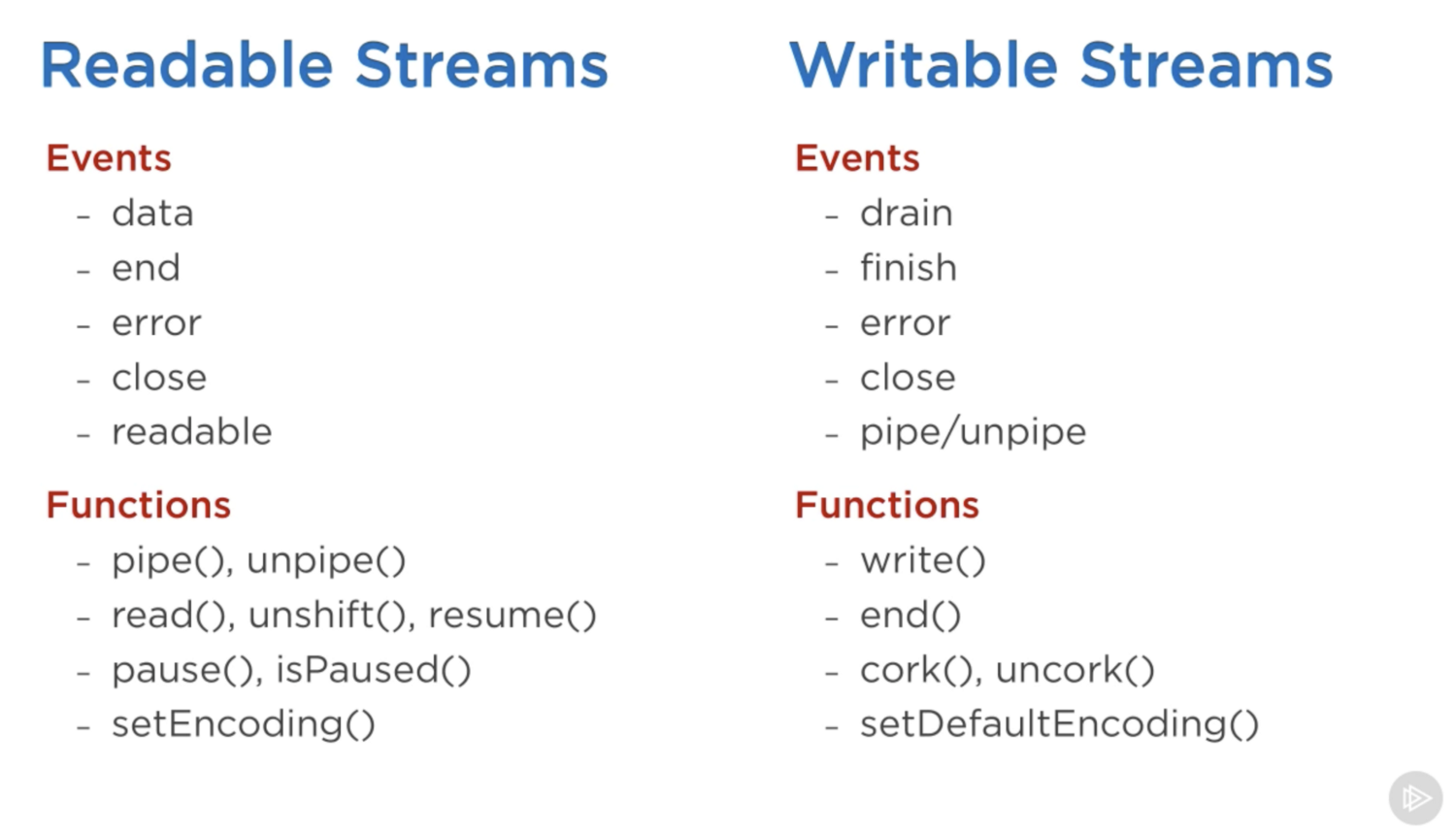

Readable Stream Events

- Event: 'close'

- Event: 'data'

- Event: 'end'

- Event: 'error'

- Event: 'pause'

- Event: 'readable'

- Event: 'resume'

Consuming a Readable Stream

- Using 'data' event

- Using 'readable' event

- Using 'pipe' method

- Using async iterators
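A short sketch of two of these approaches, the 'readable' event (pulling chunks with read() in paused mode) and async iterators. It uses Readable.from() as an in-memory source so it runs without files; the array contents are illustrative.

```javascript
const { Readable } = require('stream');

// 'readable' event: pull chunks explicitly with read() (paused mode)
function readWithReadableEvent(source) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    source.on('readable', () => {
      let chunk;
      // read() returns null once the internal buffer is empty
      while ((chunk = source.read()) !== null) {
        chunks.push(chunk);
      }
    });
    source.on('end', () => resolve(chunks));
    source.on('error', reject);
  });
}

// Async iterator: for await...of consumes the stream chunk by chunk
async function readWithAsyncIterator(source) {
  const chunks = [];
  for await (const chunk of source) {
    chunks.push(chunk);
  }
  return chunks;
}

async function main() {
  console.log(await readWithReadableEvent(Readable.from(['a', 'b', 'c']))); // [ 'a', 'b', 'c' ]
  console.log(await readWithAsyncIterator(Readable.from(['x', 'y']))); // [ 'x', 'y' ]
}

main();
```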

```javascript
readableSrc.pipe(writableDest);
```

- The pipe method returns the destination stream, which lets us chain pipe calls.

- For streams a (readable), b and c (duplex), and d (writable), we can write:

```javascript
a.pipe(b).pipe(c).pipe(d);

// Which is equivalent to:
a.pipe(b);
b.pipe(c);
c.pipe(d);
```

Which, in Linux, is equivalent to:

```sh
$ a | b | c | d
```

Advantages of Node pipe method

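- Besides reading from a readable source and writing to a writable destination, the pipe method automatically manages a few things along the way.

- For example, it handles end-of-file (ending the destination when the source ends) and backpressure, the cases when one stream is slower or faster than the other. It does not forward errors, though: if the source emits an error, the destination is not closed automatically.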

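A simplified approximation of readable.pipe(writable), ignoring backpressure:

```javascript
// Roughly what readable.pipe(writable) does (no backpressure handling)
readable.on('data', (chunk) => {
  writable.write(chunk);
});

readable.on('end', () => {
  writable.end();
});
```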
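The 'data'/'end' code above ignores backpressure: writable.write() returns false when the destination's buffer is full, and pipe() responds by pausing the source until the destination emits 'drain'. A sketch of that logic follows; simplePipe is a hypothetical helper (a simplification, not Node's actual pipe() source), and the slow in-memory sink with a tiny highWaterMark is an assumption chosen so backpressure actually kicks in.

```javascript
const { Readable, Writable } = require('stream');

// Hypothetical simplified pipe with backpressure handling (not Node's internals)
function simplePipe(readable, writable) {
  readable.on('data', (chunk) => {
    const ok = writable.write(chunk);
    if (!ok) {
      // Destination buffer is full: stop the flow until it drains
      readable.pause();
      writable.once('drain', () => readable.resume());
    }
  });
  readable.on('end', () => writable.end());
  return writable;
}

const received = [];
const slowSink = new Writable({
  highWaterMark: 2, // tiny buffer (in bytes) so write() returns false quickly
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    setImmediate(callback); // simulate a slow asynchronous write
  },
});

slowSink.on('finish', () => console.log(received.join(''))); // abcdef
simplePipe(Readable.from(['ab', 'cd', 'ef']), slowSink);
```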

The most important events on a readable stream are:

- The data event, which is emitted whenever the stream passes a chunk of data to the consumer

- The end event, which is emitted when there is no more data to be consumed from the stream.

- The close event is emitted when the stream and any of its underlying resources (a file descriptor, for example) have been closed.
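A small sketch that records the order in which these events fire for an in-memory Readable.from() source. It assumes autoDestroy is enabled (the default since Node.js 14), which is what makes 'close' follow 'end'.

```javascript
const { Readable } = require('stream');

const events = [];
const source = Readable.from(['a', 'b']);

source.on('data', (chunk) => events.push(`data:${chunk}`));
source.on('end', () => events.push('end'));
// With autoDestroy (the default since Node.js 14), 'close' follows 'end'
source.on('close', () => {
  events.push('close');
  console.log(events); // [ 'data:a', 'data:b', 'end', 'close' ]
});
```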

| Use-case | Class | Method(s) to implement |
|---|---|---|
| Reading only | Readable | _read() |
| Writing only | Writable | _write(), _writev(), _final() |
| Reading and writing | Duplex | _read(), _write(), _writev(), _final() |
| Operate on written data, then read the result | Transform | _transform(), _flush(), _final() |

Implementation

Implementing a Readable Stream

- The stream.Readable class is extended to implement a Readable stream.

- Custom Readable streams must call the new stream.Readable([options]) constructor and implement the readable._read() method.

There are three ways to do this:

- Using classes

- Using util.inherits

- Using the simplified constructor

With the simplified constructor, we require the Readable class, construct an object from it, and implement a read() method in the options object passed to the constructor:

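```javascript
const { Readable } = require('stream');

const inStream = new Readable({
  read() {}, // no-op: data is pushed manually below
});

inStream.push('ABCDEFGHIJKLM');
inStream.push('NOPQRSTUVWXYZ');
inStream.push(null); // No more data

inStream.pipe(process.stdout);
```

Pushing all the data up front buffers it in memory. A better approach is to push data on demand, each time read() is invoked because a consumer wants more:

```javascript
const { Readable } = require('stream');

const inStream = new Readable({
  read(size) {
    // Push one character each time the consumer asks for data
    this.push(String.fromCharCode(this.currentCharCode++));
    if (this.currentCharCode > 90) {
      this.push(null); // stop after 'Z'
    }
  },
});

inStream.currentCharCode = 65; // 'A'

inStream.pipe(process.stdout);
```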
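The same alphabet stream can also be written with the class-based approach, extending Readable and implementing _read(). A minimal sketch; the class name AlphabetStream is hypothetical.

```javascript
const { Readable } = require('stream');

// Class-based Readable: extend Readable and implement _read()
class AlphabetStream extends Readable {
  constructor(options) {
    super(options); // always call the Readable constructor
    this.currentCharCode = 65; // 'A'
  }

  _read(size) {
    // Push one letter per _read() call; size is only a hint and is ignored here
    this.push(String.fromCharCode(this.currentCharCode++));
    if (this.currentCharCode > 90) {
      this.push(null); // after 'Z', signal end of stream
    }
  }
}

let letters = '';
const alphabet = new AlphabetStream();
alphabet.on('data', (chunk) => { letters += chunk; });
alphabet.on('end', () => console.log(letters)); // ABCDEFGHIJKLMNOPQRSTUVWXYZ
```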

Writable Streams

Writable streams are an abstraction for a destination to which data is written.

```javascript
const fs = require('fs');

const myStream = fs.createWriteStream('file');

myStream.write('some data');
myStream.write('some more data');
myStream.end('done writing data');
```

Writable Stream Events

- Event: 'close'

- Event: 'drain'

- Event: 'error'

- Event: 'finish'

- Event: 'pipe'

- Event: 'unpipe'

Examples of Writable streams include:

- HTTP requests, on the client

- HTTP responses, on the server

- fs write streams

- zlib streams

- crypto streams

- TCP sockets

- child process stdin

- process.stdout, process.stderr

Implementing Writable Streams

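When extending the class (or using util.inherits), implement some of the following methods:

- _construct(callback)

- _write(chunk, encoding, callback)

- _writev(chunks, callback)

- _destroy(err, callback)

- _final(callback)

Note: when using the simplified Writable constructor, the methods are passed as options without the leading underscore (write, writev, final, and so on).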
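A class-based sketch that implements _write() and _final(). The class name CollectorStream and its behaviour (collecting chunks and joining them once the stream is ended) are hypothetical.

```javascript
const { Writable } = require('stream');

// Class-based Writable: extend Writable and implement the underscore methods
class CollectorStream extends Writable {
  constructor(options) {
    super(options);
    this.chunks = [];
    this.result = null;
  }

  _write(chunk, encoding, callback) {
    // chunk is a Buffer because decodeStrings defaults to true
    this.chunks.push(chunk);
    callback(); // signal that this write is complete
  }

  _final(callback) {
    // Runs after end() is called, before 'finish' is emitted
    this.result = Buffer.concat(this.chunks).toString();
    callback();
  }
}

const collector = new CollectorStream();
collector.on('finish', () => console.log(collector.result)); // hello world
collector.write('hello ');
collector.end('world');
```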

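```javascript
const { Writable } = require('stream');

const dest = [];

const writer = new Writable({
  write(chunk, encoding, cb) {
    dest.push(chunk);
    cb();
  },
  // chunks is an array of { chunk, encoding } objects buffered while a write was pending
  writev(chunks, cb) {
    for (const { chunk } of chunks) {
      dest.push(chunk);
    }
    cb();
  },
});
```

Duplex Streams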

A Duplex stream is one that implements both Readable and Writable, such as a TCP socket connection.

Methods to implement

- _read

- _write
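A minimal Duplex sketch using the simplified constructor, where the readable and writable sides are independent of each other, as they are in a socket. The behaviour (emitting two fixed strings and recording whatever is written) is purely illustrative.

```javascript
const { Duplex } = require('stream');

const written = [];

// Readable side and writable side are independent of each other
const duplex = new Duplex({
  read() {
    // Readable side: emit two values, then end
    this.push('ping');
    this.push('pong');
    this.push(null);
  },
  write(chunk, encoding, callback) {
    // Writable side: just record what was written
    written.push(chunk.toString());
    callback();
  },
});

let readBack = '';
duplex.on('data', (chunk) => { readBack += chunk; });
duplex.on('end', () => console.log(readBack)); // pingpong

duplex.write('hello');
duplex.end(() => console.log(written)); // [ 'hello' ]
```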

Transform Streams

A Transform stream is a Duplex stream where the output is computed in some way from the input.

Examples include zlib streams or crypto streams that compress, encrypt, or decrypt data.

- There is no requirement that the output be the same size as the input, the same number of chunks, or arrive at the same time.

- For example, a Hash stream will only ever have a single chunk of output which is provided when the input is ended.

Implementing a Transform Stream

- _flush(callback)

- _transform(chunk, encoding, callback)

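```javascript
const { Transform } = require('stream');

const capitalise = new Transform({
  transform(chunk, enc, cb) {
    this.push(chunk.toString().toUpperCase());
    cb();
  },
  decodeStrings: true, // the default: written strings arrive as Buffers
});
```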
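The _flush() method runs after all written data has been transformed and before the readable side ends, which suits transforms that emit a summary. A sketch of a hypothetical LineCounter that passes data through unchanged and appends a line count; it assumes lines are separated by '\n'.

```javascript
const { Transform } = require('stream');

// Hypothetical line counter: passes data through, then appends a summary
class LineCounter extends Transform {
  constructor(options) {
    super(options);
    this.lines = 0;
  }

  _transform(chunk, encoding, callback) {
    // chunk is a Buffer; count newline bytes in it
    for (const byte of chunk) {
      if (byte === 0x0a) this.lines++;
    }
    callback(null, chunk); // passing data to callback is equivalent to push()
  }

  _flush(callback) {
    // Called once all input has been consumed, before 'end'
    this.push(`\n(${this.lines} lines)\n`);
    callback();
  }
}

let output = '';
const counter = new LineCounter();
counter.on('data', (chunk) => { output += chunk; });
counter.on('end', () => process.stdout.write(output));

counter.write('first\nsecond\n');
counter.end('third\n');
```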

Readable.from

Readable.from(iterable[, options]) is a utility method for creating a Readable stream out of an iterable or async iterable; the stream emits the values the iterable yields.

By default, objectMode is set to true.

```javascript
const { Readable } = require('stream');

async function* generate() {
  yield 'hello';
  yield 'streams';
}

const readable = Readable.from(generate());

readable.on('data', (chunk) => {
  console.log(chunk);
});
```

stream.finished

A function to get notified when a stream is no longer readable, writable or has experienced an error or a premature close event.

Especially useful in error handling scenarios where a stream is destroyed prematurely (like an aborted HTTP request), and will not emit 'end' or 'finish'.

```javascript
const fs = require('fs');
const { finished } = require('stream');

const rs = fs.createReadStream('archive.tar');

finished(rs, (err) => {
  if (err) {
    console.error('Stream failed.', err);
  } else {
    console.log('Stream is done reading.');
  }
});

rs.resume(); // Drain the stream.
```
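In Node.js 15 and later, a promise-based finished() is also exported from 'stream/promises', which fits async/await code. A sketch using an in-memory Readable.from() source in place of the file; the drain helper is hypothetical.

```javascript
const { Readable } = require('stream');
const { finished } = require('stream/promises');

// Hypothetical helper: drain a stream and wait until it is done
async function drain(source) {
  source.resume(); // drain the stream without consuming data
  await finished(source); // resolves on end, rejects on error or premature close
  return 'Stream is done reading.';
}

drain(Readable.from(['a', 'b', 'c']))
  .then((msg) => console.log(msg))
  .catch((err) => console.error('Stream failed.', err));
```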

stream.pipeline

stream.pipeline(source[, ...transforms], destination, callback)

- source <Stream> | <Iterable> | <AsyncIterable> | <Function>

- ...transforms <Stream> | <Function>

- destination <Stream> | <Function>

- callback <Function> Called when the pipeline is fully done.

```javascript
const fs = require('fs');
const zlib = require('zlib');
const { pipeline } = require('stream');

pipeline(
  fs.createReadStream('file'),
  zlib.createGzip(),
  fs.createWriteStream('outfile.gz'),
  (err) => {
    if (err) {
      console.error('Pipeline failed', err);
    } else {
      console.log('Pipeline succeeded');
    }
  }
);
```

Using async iterators as streams

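```javascript
const { createReadStream, createWriteStream } = require('fs');
const { pipeline } = require('stream');

// Async generator used as a transform step between source and destination
async function* cap(stream) {
  for await (const chunk of stream) {
    yield chunk.toString().toUpperCase();
  }
}

pipeline(
  createReadStream('filetoread.txt'),
  cap,
  createWriteStream('pipeline.txt'),
  (err) => {
    if (err) {
      console.error('Pipeline failed', err);
    } else {
      console.log('pipeline done');
    }
  }
);
```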
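The same idea with the promise-based pipeline() from 'stream/promises' (Node.js 15+). To keep the sketch self-contained it uses an in-memory source and a collecting Writable instead of files; the sink and the run helper are illustrative assumptions.

```javascript
const { Readable, Writable } = require('stream');
const { pipeline } = require('stream/promises');

// Async generator used as a transform step
async function* cap(source) {
  for await (const chunk of source) {
    yield chunk.toString().toUpperCase();
  }
}

async function run() {
  const collected = [];
  const sink = new Writable({
    write(chunk, encoding, callback) {
      collected.push(chunk.toString());
      callback();
    },
  });

  // Resolves when the sink finishes; rejects if any stage fails
  await pipeline(Readable.from(['hello ', 'streams']), cap, sink);
  return collected.join('');
}

run()
  .then((result) => console.log(result)) // HELLO STREAMS
  .catch((err) => console.error('Pipeline failed', err));
```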