Machine Learning

How to get started with machine learning in PHP

ARKADIUSZ KONDAS

Senior Software Developer

@ Versum

Zend Certified Engineer

Code Craftsman

Blogger

Ultra Runner

@ ArkadiuszKondas

itcraftsman.pl

Zend Certified Architect

Agenda:

- Terminology

- Ways of learning

- Types of problems

- Example applications

Why Machine Learning?

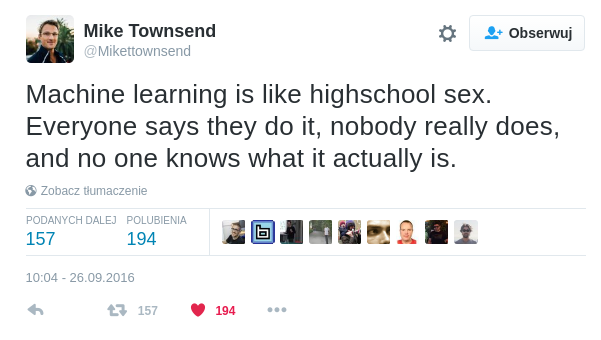

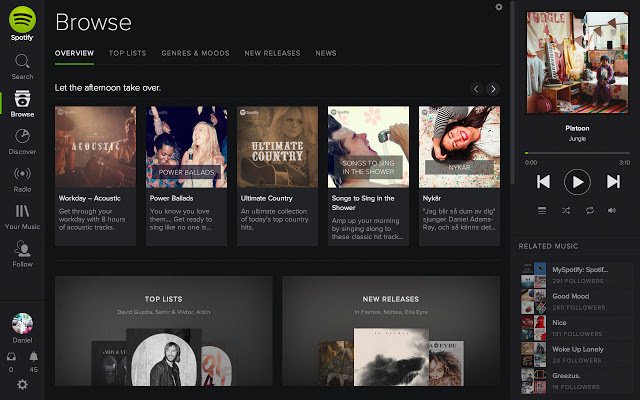

- Develop systems that can automatically adapt and customize themselves to individual users.

- personalized news or mail filter

Why Machine Learning?

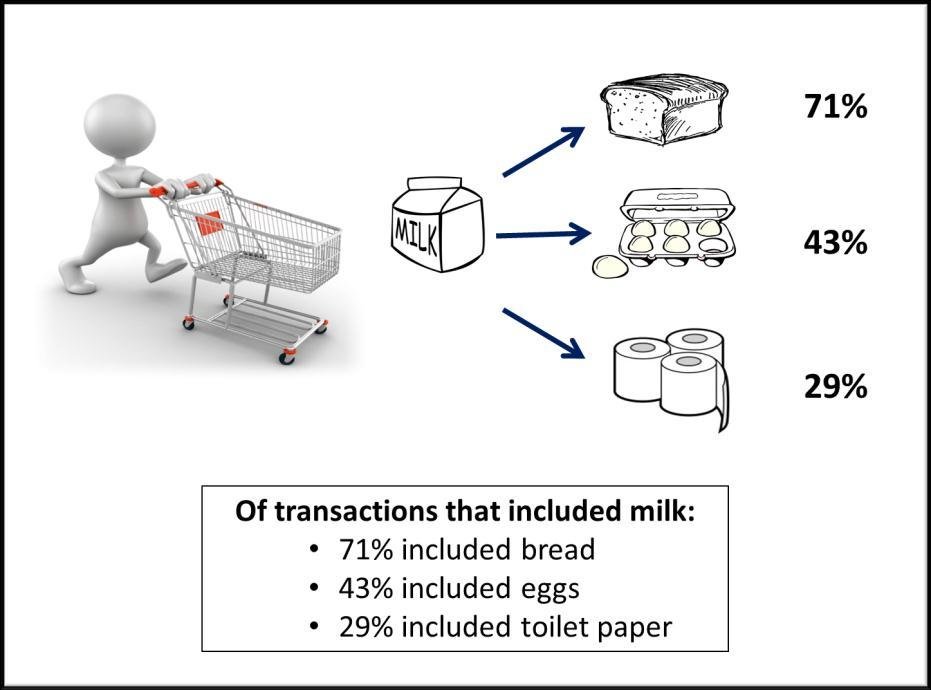

- Discover new knowledge from large databases (data mining).

- market basket analysis

Source: https://blogs.adobe.com/digitalmarketing/analytics/shopping-for-kpis-market-basket-analysis-for-web-analytics-data/

Why Machine Learning?

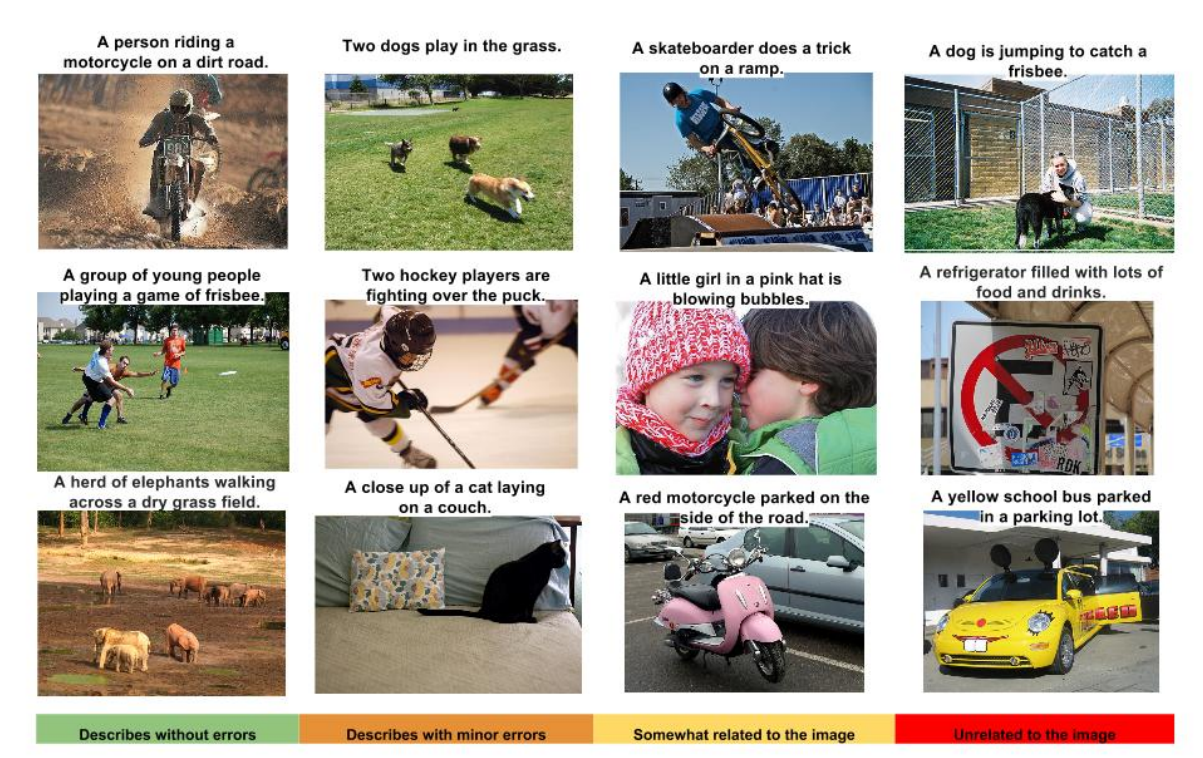

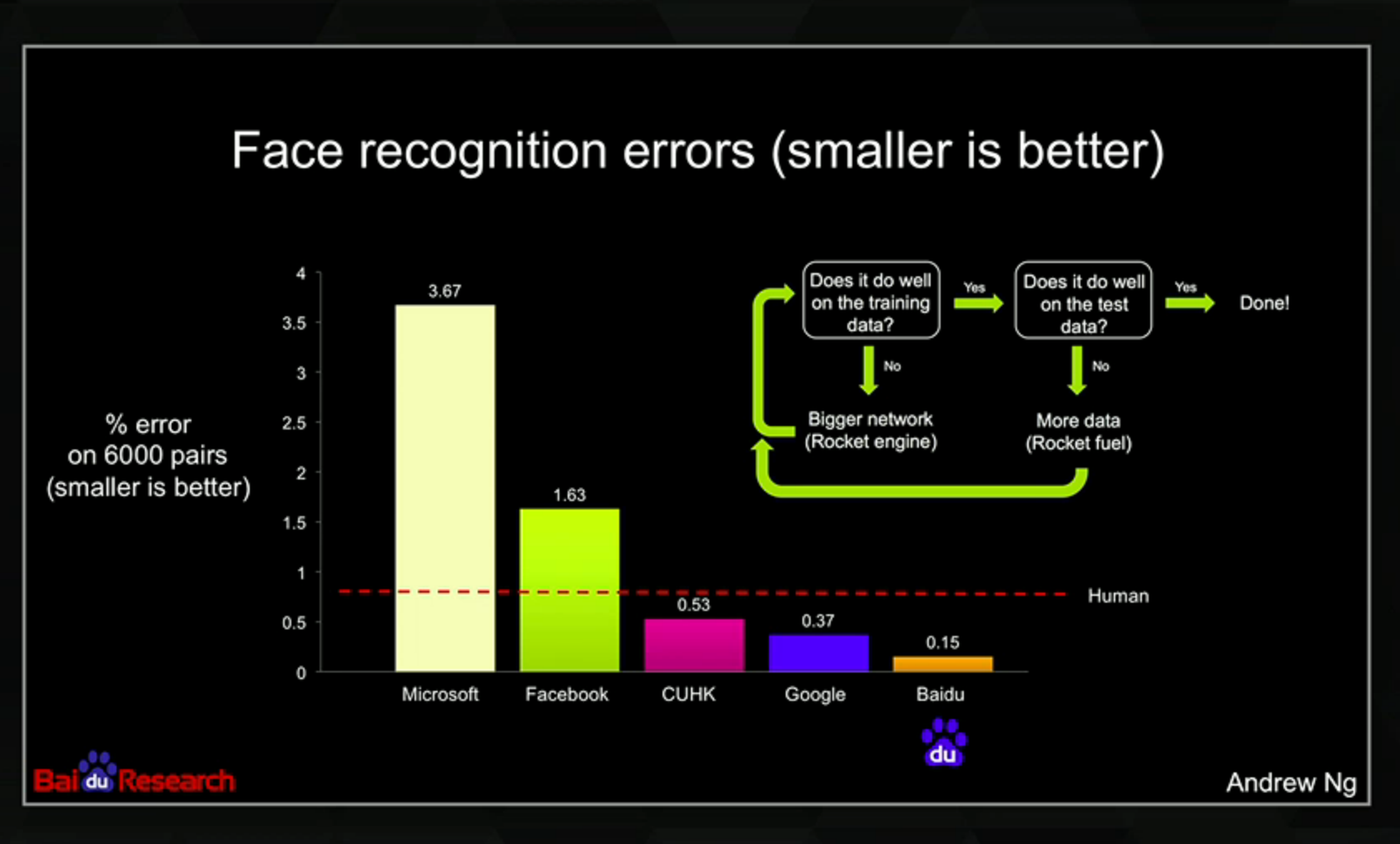

- Mimic humans and take over certain monotonous tasks that still require some intelligence.

- like recognizing handwritten characters

https://github.com/tensorflow/models/tree/master/im2txt

Why Machine Learning?

http://articles.concreteinteractive.com/nicole-kidmans-fake-nose/

Why Machine Learning?

- Develop systems that are too difficult/expensive to construct manually because they require specific detailed skills or knowledge tuned to a specific task (knowledge engineering bottleneck)

Why now?

Data availability

internet, smart devices, IoT

Why now?

Computation power

CPU, GPU (TensorFlow)

Terminology

Machine Learning

Learning is any process by which a system improves performance from experience

Terminology

Samples

A sample is an item to process (e.g. classify). It can be a document, a picture, a sound, a video, a row in a database or CSV file, or anything else you can describe with a fixed set of features.

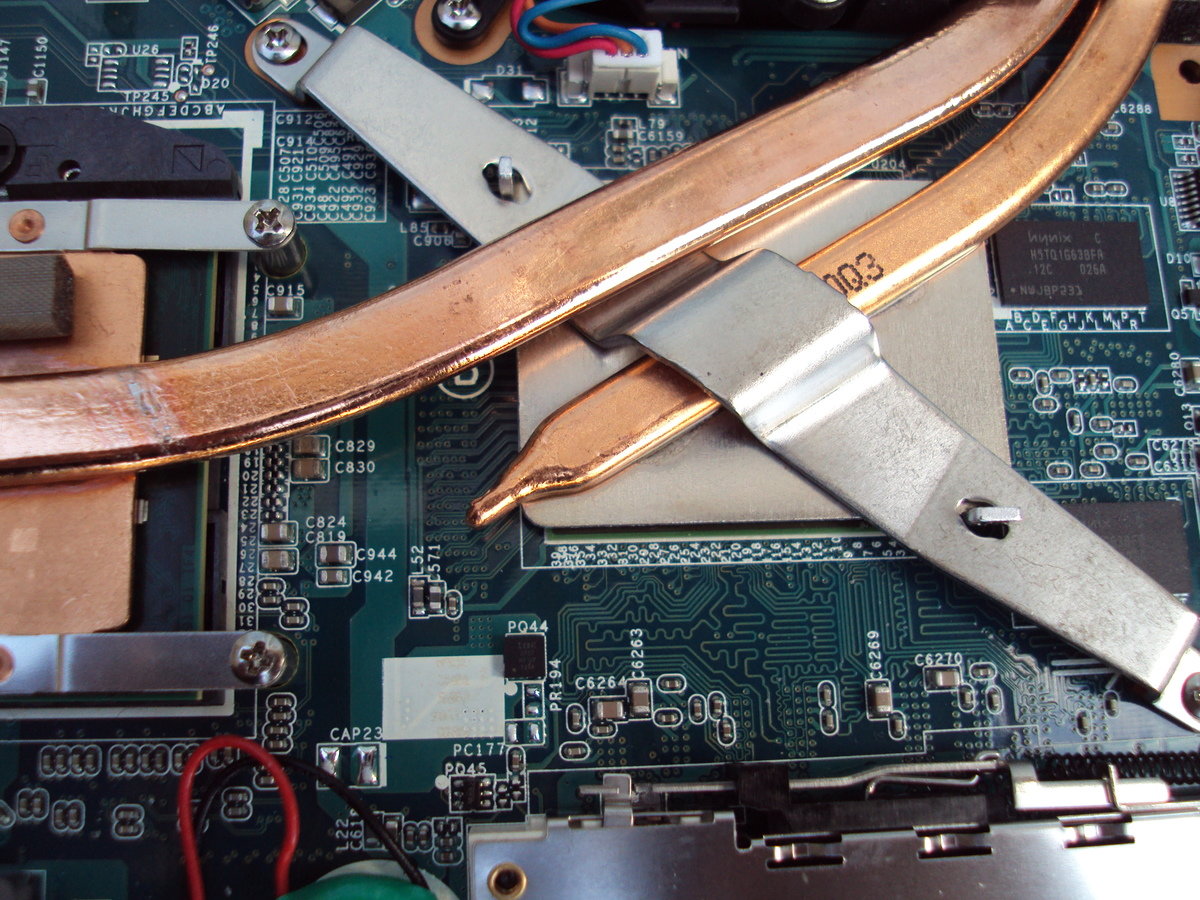

Terminology

Features

Features are distinct traits that can be used to describe each item in a quantitative manner.

IBU: 45 (0 - 120) International Bittering Units

ALK: 4.7% (0% - 12%)

EXT: 12.0 (0 - 30) BLG, PLATO

EBC: 9 (0 - 80) European Brewery Convention
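Each trait above lives on its own scale, so raw values are hard to compare directly. One common remedy is min-max scaling, which maps every feature into the range 0 to 1. The sketch below is plain PHP and assumes the ranges listed above; the helper `scaleToUnit` is a made-up name for illustration, not a PHP-ML function.

```php
<?php

// Hypothetical helper (not part of PHP-ML): min-max scale one value into [0, 1].
function scaleToUnit(float $value, float $min, float $max): float
{
    return ($value - $min) / ($max - $min);
}

// Ranges as listed on the slide: [min, max] per feature.
$ranges = [
    'ibu' => [0, 120],
    'alk' => [0, 12],
    'ext' => [0, 30],
    'ebc' => [0, 80],
];

// The example beer from the slide.
$beer = ['ibu' => 45, 'alk' => 4.7, 'ext' => 12.0, 'ebc' => 9];

$scaled = [];
foreach ($beer as $feature => $value) {
    [$min, $max] = $ranges[$feature];
    $scaled[$feature] = scaleToUnit($value, $min, $max);
}

print_r($scaled);
```

After scaling, a bitterness of 45 IBU and an extract of 12.0 end up close to each other numerically, which is usually what distance-based algorithms need.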

Terminology

Feature vector

A feature vector is an n-dimensional vector of numerical features that represents some object.

$beer = [45, 4.7, 12.0, 9];

Terminology

Feature extraction

Preparation of the feature vector: transforms data from a high-dimensional space into a space of fewer dimensions.

$beer = [?, 7.0, 17.5, 9];

Terminology

Training / Evaluation set

The training set is the data used to discover potentially predictive relationships; the evaluation set is held back to check them.

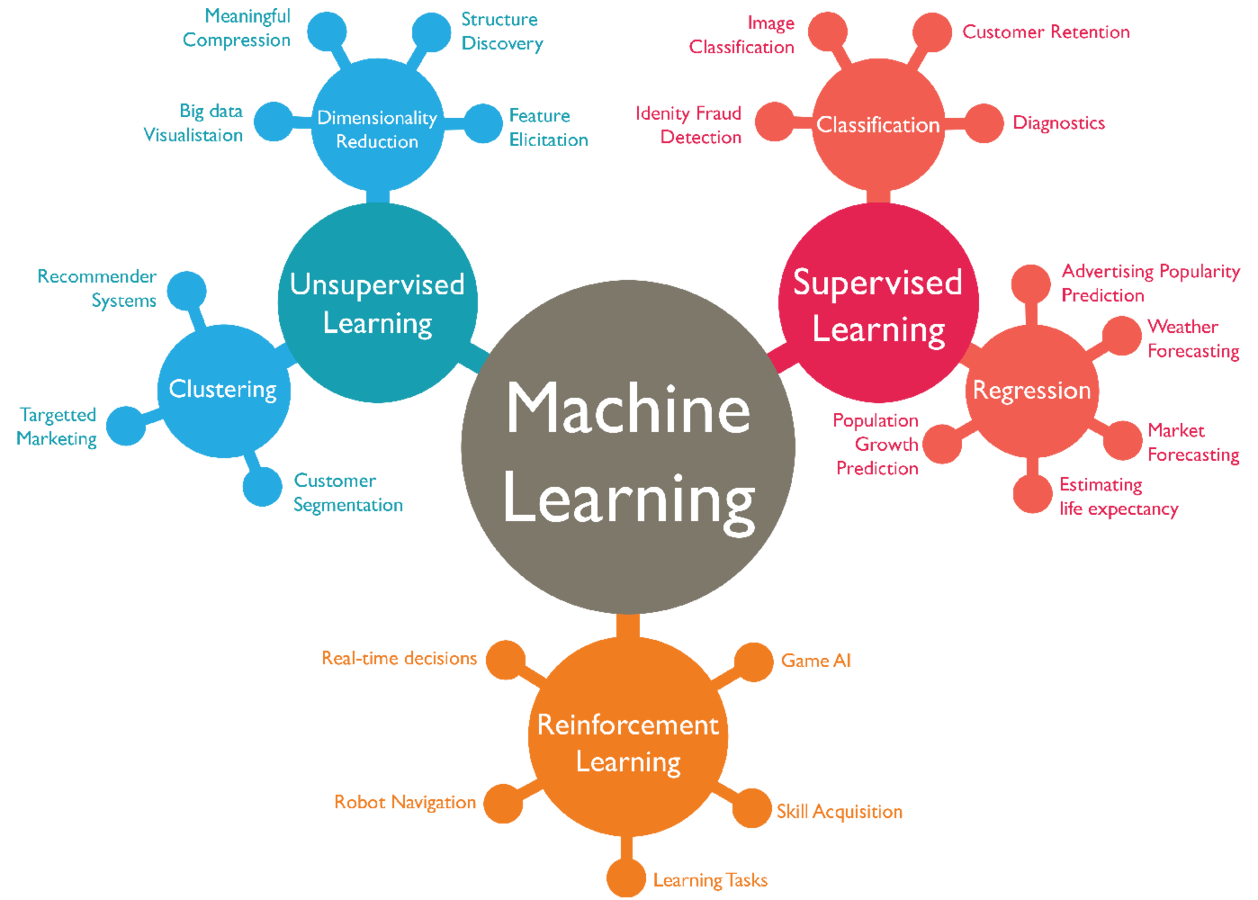
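One way to obtain separate training and evaluation data is to split a labelled dataset while keeping the share of each label roughly equal in both parts (a stratified split). The sketch below is plain PHP under stated assumptions: `stratifiedSplit` is a hypothetical helper, not PHP-ML's implementation, and the labels are made-up illustration data.

```php
<?php

// Hypothetical helper: return train/test sample indices so that every label
// contributes about $testSize of its samples to the test part.
function stratifiedSplit(array $labels, float $testSize, int $seed): array
{
    mt_srand($seed); // seed the generator used by shuffle()

    // Group sample indices by label.
    $byLabel = [];
    foreach ($labels as $i => $label) {
        $byLabel[$label][] = $i;
    }

    $train = [];
    $test = [];
    foreach ($byLabel as $indices) {
        shuffle($indices);
        // Rounding up is a choice made for this sketch: each label
        // contributes at least one test sample.
        $testCount = (int) ceil(count($indices) * $testSize);
        $test = array_merge($test, array_slice($indices, 0, $testCount));
        $train = array_merge($train, array_slice($indices, $testCount));
    }

    return ['train' => $train, 'test' => $test];
}

$labels = ['a', 'a', 'a', 'a', 'b', 'b', 'b', 'b'];
$split = stratifiedSplit($labels, 0.5, 42);

print_r($split);
```

With four samples per label and a test size of 0.5, each label sends two samples to the test part, whichever samples the shuffle happens to pick.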

Ways of learning

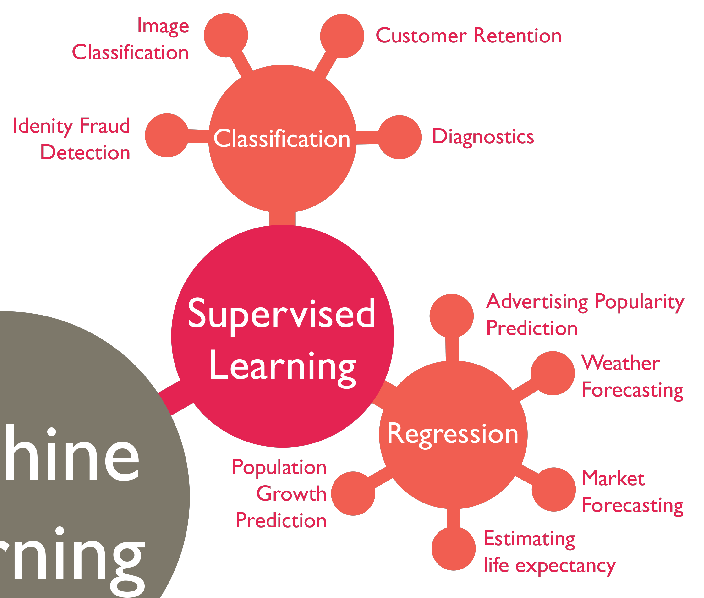

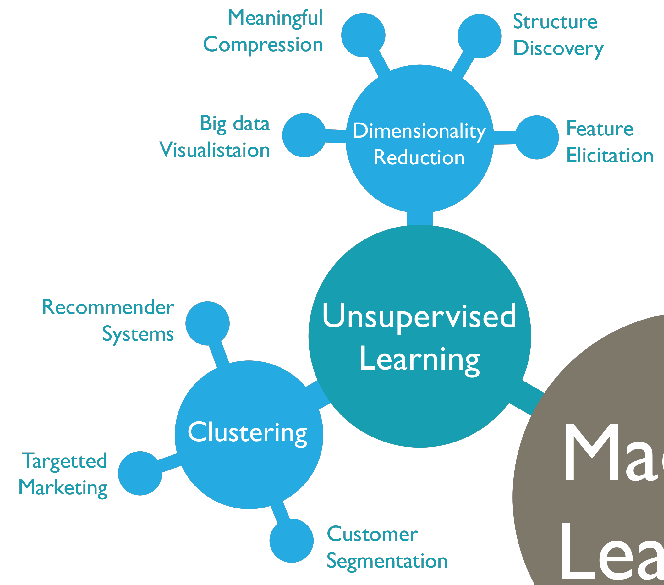

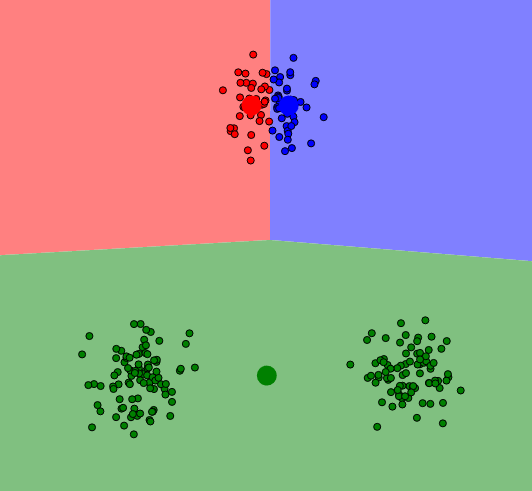

Supervised learning

Source: http://www.simplilearn.com/what-is-machine-learning-and-why-it-matters-article

Unsupervised learning

Source: http://www.simplilearn.com/what-is-machine-learning-and-why-it-matters-article

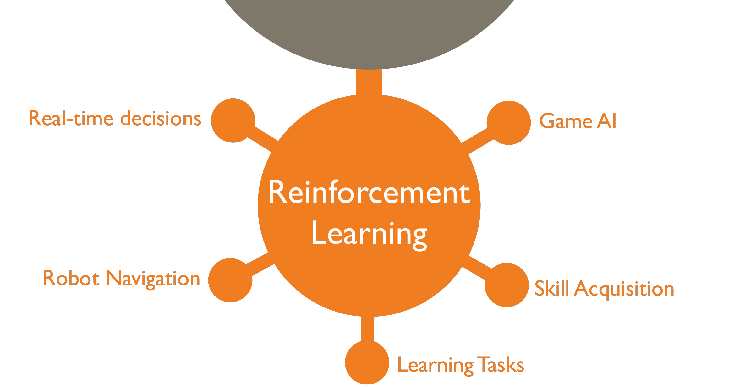

Reinforcement learning

Source: http://www.simplilearn.com/what-is-machine-learning-and-why-it-matters-article

Types of problems

https://github.com/php-ai/php-ml

PHP-ML - Machine Learning library for PHP

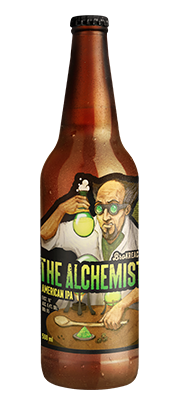

Classification

Source: http://www.boozology.com/beer-101-different-types-of-beer/

ibu,alk,ext,style,name

75,6.5,16,"American IPA","Szalony Alchemik"

28,4.2,12.5,"Summer Ale","Miss Lata"

40,4,10.5,"Session IPA","Tajemniczy Jeździec"

42,5.7,14.5,"Pils","Dziki Samotnik"

20,2.9,7.7,"Pszeniczne","Dębowa Panienka"

36,5.2,12.5,"Pszeniczne","Piękna Nieznajoma"

28,4.8,14.0,"Ale","Mała Czarna"

35,4.6,12.5,"American IPA","Nieproszony Gość"

20,5.2,12.5,"Pils","Ostatni sprawiedliwy"

30,4.8,12.5,"Pszeniczne","Dziedzic Pruski"

75,7.5,18.0,"Summer Ale","Bawidamek"

45,4.7,12.0,"Pils","Miś Wojtek"

20,5.2,13.0,"Pils","The Dancer"

30,4.7,12.0,"Pszeniczne","The Dealer"

120,8.9,19.0,"American IPA","The Fighter"

85,6.4,16.0,"American IPA","The Alchemist"

100,10.3,24,"Pszeniczne","The Gravedigger"

40,4.8,12.0,"American IPA","The Teacher"

75,7.0,16.0,"Ale","The Butcher"

80,6.7,16.0,"Ale","The Miner"

Classification

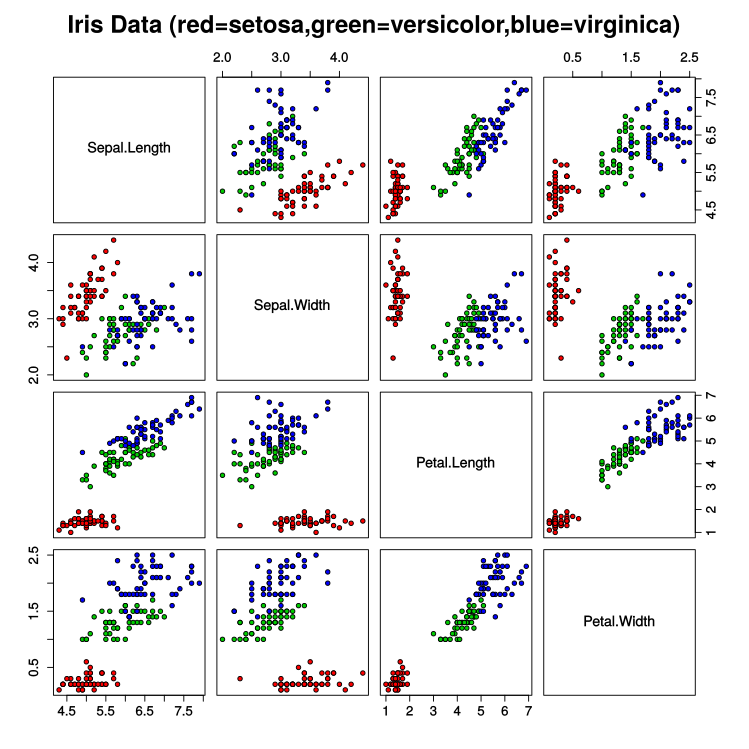

5.1,3.8,1.6,0.2,setosa

4.6,3.2,1.4,0.2,setosa

5.3,3.7,1.5,0.2,setosa

5,3.3,1.4,0.2,setosa

7,3.2,4.7,1.4,versicolor

6.4,3.2,4.5,1.5,versicolor

6.9,3.1,4.9,1.5,versicolor

5.5,2.3,4,1.3,versicolor

5.9,3,5.1,1.8,virginica

5.1,3.5,1.4,0.2,setosa

4.9,3,1.4,0.2,setosa

4.7,3.2,1.3,0.2,setosa

4.6,3.1,1.5,0.2,setosa

5,3.6,1.4,0.2,setosa

5.4,3.9,1.7,0.4,setosa

4.6,3.4,1.4,0.3,setosa

5,3.4,1.5,0.2,setosa

4.4,2.9,1.4,0.2,setosa

4.9,3.1,1.5,0.1,setosa

5.4,3.7,1.5,0.2,setosa

4.8,3.4,1.6,0.2,setosa

4.8,3,1.4,0.1,setosa

4.3,3,1.1,0.1,setosa

5.8,4,1.2,0.2,setosa

5.7,4.4,1.5,0.4,setosa

5.4,3.9,1.3,0.4,setosa

5.1,3.5,1.4,0.3,setosa

5.7,3.8,1.7,0.3,setosa

5.1,3.8,1.5,0.3,setosa

5.4,3.4,1.7,0.2,setosa

5.1,3.7,1.5,0.4,setosa

4.6,3.6,1,0.2,setosa

5.1,3.3,1.7,0.5,setosa

4.8,3.4,1.9,0.2,setosa

5,3,1.6,0.2,setosa

5,3.4,1.6,0.4,setosa

5.2,3.5,1.5,0.2,setosa

5.2,3.4,1.4,0.2,setosa

4.7,3.2,1.6,0.2,setosa

4.8,3.1,1.6,0.2,setosa

5.4,3.4,1.5,0.4,setosa

5.2,4.1,1.5,0.1,setosa

5.5,4.2,1.4,0.2,setosa

4.9,3.1,1.5,0.1,setosa

5,3.2,1.2,0.2,setosa

5.5,3.5,1.3,0.2,setosa

4.9,3.1,1.5,0.1,setosa

4.4,3,1.3,0.2,setosa

5.1,3.4,1.5,0.2,setosa

5,3.5,1.3,0.3,setosa

4.5,2.3,1.3,0.3,setosa

4.4,3.2,1.3,0.2,setosa

5,3.5,1.6,0.6,setosa

5.1,3.8,1.9,0.4,setosa

4.8,3,1.4,0.3,setosa

5.1,3.8,1.6,0.2,setosa

4.6,3.2,1.4,0.2,setosa

5.3,3.7,1.5,0.2,setosa

5,3.3,1.4,0.2,setosa

7,3.2,4.7,1.4,versicolor

6.4,3.2,4.5,1.5,versicolor

6.9,3.1,4.9,1.5,versicolor

5.5,2.3,4,1.3,versicolor

6.5,2.8,4.6,1.5,versicolor

5.7,2.8,4.5,1.3,versicolor

6.3,3.3,4.7,1.6,versicolor

4.9,2.4,3.3,1,versicolor

6.6,2.9,4.6,1.3,versicolor

5.2,2.7,3.9,1.4,versicolor

5,2,3.5,1,versicolor

5.9,3,4.2,1.5,versicolor

6,2.2,4,1,versicolor

6.1,2.9,4.7,1.4,versicolor

5.6,2.9,3.6,1.3,versicolor

6.7,3.1,4.4,1.4,versicolor

5.6,3,4.5,1.5,versicolor

5.8,2.7,4.1,1,versicolor

6.2,2.2,4.5,1.5,versicolor

5.6,2.5,3.9,1.1,versicolor

5.9,3.2,4.8,1.8,versicolor

6.1,2.8,4,1.3,versicolor

6.3,2.5,4.9,1.5,versicolor

6.1,2.8,4.7,1.2,versicolor

6.4,2.9,4.3,1.3,versicolor

6.6,3,4.4,1.4,versicolor

6.8,2.8,4.8,1.4,versicolor

6.7,3,5,1.7,versicolor

6,2.9,4.5,1.5,versicolor

5.7,2.6,3.5,1,versicolor

5.5,2.4,3.8,1.1,versicolor

5.5,2.4,3.7,1,versicolor

5.8,2.7,3.9,1.2,versicolor

6,2.7,5.1,1.6,versicolor

5.4,3,4.5,1.5,versicolor

6,3.4,4.5,1.6,versicolor

6.7,3.1,4.7,1.5,versicolor

6.3,2.3,4.4,1.3,versicolor

5.6,3,4.1,1.3,versicolor

5.5,2.5,4,1.3,versicolor

5.5,2.6,4.4,1.2,versicolor

6.1,3,4.6,1.4,versicolor

5.8,2.6,4,1.2,versicolor

5,2.3,3.3,1,versicolor

5.6,2.7,4.2,1.3,versicolor

5.7,3,4.2,1.2,versicolor

5.7,2.9,4.2,1.3,versicolor

6.2,2.9,4.3,1.3,versicolor

5.1,2.5,3,1.1,versicolor

5.7,2.8,4.1,1.3,versicolor

6.3,3.3,6,2.5,virginica

5.8,2.7,5.1,1.9,virginica

7.1,3,5.9,2.1,virginica

6.3,2.9,5.6,1.8,virginica

6.5,3,5.8,2.2,virginica

7.6,3,6.6,2.1,virginica

4.9,2.5,4.5,1.7,virginica

7.3,2.9,6.3,1.8,virginica

6.7,2.5,5.8,1.8,virginica

7.2,3.6,6.1,2.5,virginica

6.5,3.2,5.1,2,virginica

6.4,2.7,5.3,1.9,virginica

6.8,3,5.5,2.1,virginica

5.7,2.5,5,2,virginica

5.8,2.8,5.1,2.4,virginica

6.4,3.2,5.3,2.3,virginica

6.5,3,5.5,1.8,virginica

7.7,3.8,6.7,2.2,virginica

7.7,2.6,6.9,2.3,virginica

6,2.2,5,1.5,virginica

6.9,3.2,5.7,2.3,virginica

5.6,2.8,4.9,2,virginica

7.7,2.8,6.7,2,virginica

6.3,2.7,4.9,1.8,virginica

6.7,3.3,5.7,2.1,virginica

7.2,3.2,6,1.8,virginica

6.2,2.8,4.8,1.8,virginica

6.1,3,4.9,1.8,virginica

6.4,2.8,5.6,2.1,virginica

7.2,3,5.8,1.6,virginica

7.4,2.8,6.1,1.9,virginica

7.9,3.8,6.4,2,virginica

6.4,2.8,5.6,2.2,virginica

6.3,2.8,5.1,1.5,virginica

6.1,2.6,5.6,1.4,virginica

7.7,3,6.1,2.3,virginica

6.3,3.4,5.6,2.4,virginica

6.4,3.1,5.5,1.8,virginica

6,3,4.8,1.8,virginica

6.9,3.1,5.4,2.1,virginica

6.7,3.1,5.6,2.4,virginica

6.9,3.1,5.1,2.3,virginica

5.8,2.7,5.1,1.9,virginica

6.8,3.2,5.9,2.3,virginica

6.7,3.3,5.7,2.5,virginica

6.7,3,5.2,2.3,virginica

6.3,2.5,5,1.9,virginica

6.5,3,5.2,2,virginica

6.2,3.4,5.4,2.3,virginica

5.9,3,5.1,1.8,virginica

Classification

use Phpml\Classification\KNearestNeighbors;
use Phpml\CrossValidation\RandomSplit;
use Phpml\Dataset\Demo\IrisDataset;
use Phpml\Metric\Accuracy;

$dataset = new IrisDataset();
$split = new RandomSplit($dataset);
$classifier = new KNearestNeighbors();

$classifier->train(
    $split->getTrainSamples(),
    $split->getTrainLabels()
);

$predicted = $classifier->predict($split->getTestSamples());

echo sprintf("Accuracy: %s",
    Accuracy::score($split->getTestLabels(), $predicted)
);
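To show what a nearest-neighbours classifier does conceptually, here is a minimal from-scratch sketch in plain PHP. It is not PHP-ML's implementation: `euclidean`, `knnPredict`, and `accuracy` are hypothetical helpers, k = 3 is an arbitrary choice, and the training and test rows are a handful of Iris rows copied from the listing above.

```php
<?php

// Euclidean distance between two feature vectors of equal length.
function euclidean(array $a, array $b): float
{
    $sum = 0.0;
    foreach ($a as $i => $value) {
        $sum += ($value - $b[$i]) ** 2;
    }
    return sqrt($sum);
}

// Hypothetical helper: majority vote among the $k nearest training samples.
function knnPredict(array $trainSamples, array $trainLabels, array $sample, int $k = 3): string
{
    $distances = [];
    foreach ($trainSamples as $i => $trainSample) {
        $distances[$i] = euclidean($trainSample, $sample);
    }
    asort($distances); // nearest first, keys preserved

    $votes = [];
    foreach (array_slice(array_keys($distances), 0, $k) as $i) {
        $label = $trainLabels[$i];
        $votes[$label] = ($votes[$label] ?? 0) + 1;
    }
    arsort($votes); // most votes first

    return (string) array_keys($votes)[0];
}

// Share of predictions that match the actual labels.
function accuracy(array $actual, array $predicted): float
{
    $correct = 0;
    foreach ($actual as $i => $label) {
        if ($label === $predicted[$i]) {
            $correct++;
        }
    }
    return $correct / count($actual);
}

$trainSamples = [
    [5.1, 3.5, 1.4, 0.2], [4.9, 3.0, 1.4, 0.2], [4.7, 3.2, 1.3, 0.2],
    [7.0, 3.2, 4.7, 1.4], [6.4, 3.2, 4.5, 1.5], [6.9, 3.1, 4.9, 1.5],
    [6.3, 3.3, 6.0, 2.5], [5.8, 2.7, 5.1, 1.9], [7.1, 3.0, 5.9, 2.1],
];
$trainLabels = [
    'setosa', 'setosa', 'setosa',
    'versicolor', 'versicolor', 'versicolor',
    'virginica', 'virginica', 'virginica',
];

$testSamples = [[5.0, 3.6, 1.4, 0.2], [5.5, 2.3, 4.0, 1.3], [6.5, 3.0, 5.8, 2.2]];
$testLabels = ['setosa', 'versicolor', 'virginica'];

$predicted = [];
foreach ($testSamples as $sample) {
    $predicted[] = knnPredict($trainSamples, $trainLabels, $sample);
}

echo sprintf("Accuracy: %s\n", accuracy($testLabels, $predicted));
```

The second test row is a useful case to trace by hand: its single nearest neighbour is a virginica row, but the next two are versicolor, so the vote with k = 3 still lands on versicolor.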

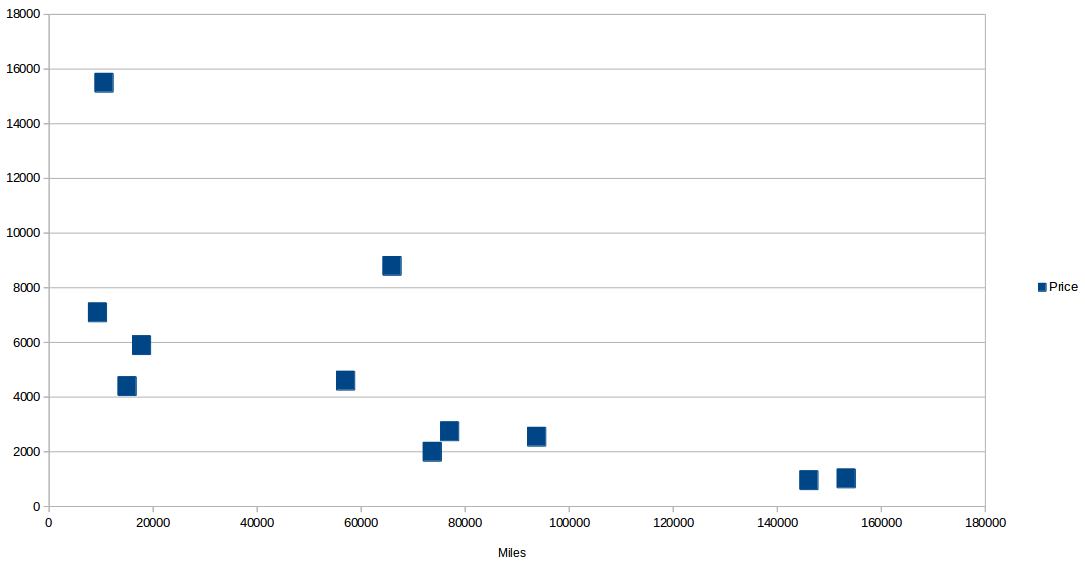

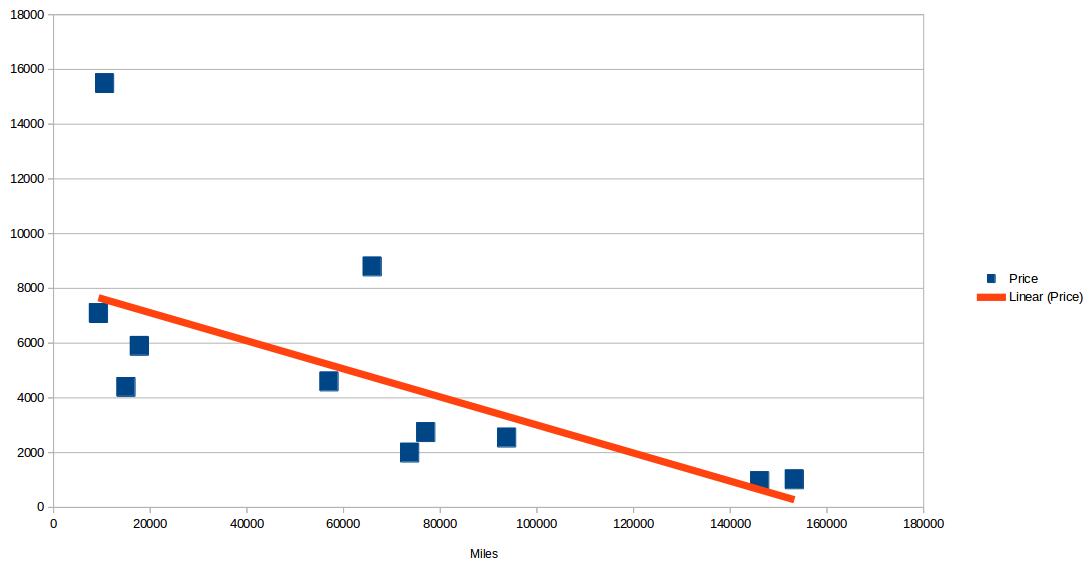

Regression

Miles Price

9300 7100

10565 15500

15000 4400

15000 4400

17764 5900

57000 4600

65940 8800

73676 2000

77006 2750

93739 2550

146088 960

153260 1025

Regression

use Phpml\Regression\LeastSquares;

$samples = [[9300], [10565], [15000], [15000], [17764], [57000], [65940], [73676], [77006], [93739], [146088], [153260]];

$targets = [7100, 15500, 4400, 4400, 5900, 4600, 8800, 2000, 2750, 2550, 960, 1025];

$regression = new LeastSquares();

$regression->train($samples, $targets);

$regression->getCoefficients();

$regression->getIntercept();

$price = $regression->predict([35000]);
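For a single feature, the least squares fit can also be computed by hand, which makes it easy to derive the coefficient of determination (R²) as well. The sketch below is plain PHP and does not use PHP-ML; `fitLine` is a hypothetical helper, and the data is the miles/price table from the slide.

```php
<?php

// Hypothetical helper: fit y = intercept + slope * x by ordinary least squares
// and report the coefficient of determination (R^2).
function fitLine(array $x, array $y): array
{
    $n = count($x);
    $meanX = array_sum($x) / $n;
    $meanY = array_sum($y) / $n;

    $sxy = 0.0; // sum of (x - meanX) * (y - meanY)
    $sxx = 0.0; // sum of (x - meanX)^2
    foreach ($x as $i => $xi) {
        $sxy += ($xi - $meanX) * ($y[$i] - $meanY);
        $sxx += ($xi - $meanX) ** 2;
    }
    $slope = $sxy / $sxx;
    $intercept = $meanY - $slope * $meanX;

    $ssRes = 0.0; // residual sum of squares
    $ssTot = 0.0; // total sum of squares
    foreach ($x as $i => $xi) {
        $ssRes += ($y[$i] - ($intercept + $slope * $xi)) ** 2;
        $ssTot += ($y[$i] - $meanY) ** 2;
    }

    return [
        'slope' => $slope,
        'intercept' => $intercept,
        'r2' => 1 - $ssRes / $ssTot,
    ];
}

$miles = [9300, 10565, 15000, 15000, 17764, 57000, 65940, 73676, 77006, 93739, 146088, 153260];
$prices = [7100, 15500, 4400, 4400, 5900, 4600, 8800, 2000, 2750, 2550, 960, 1025];

$fit = fitLine($miles, $prices);

printf("slope: %.5f, intercept: %.2f, R^2: %.3f\n", $fit['slope'], $fit['intercept'], $fit['r2']);
printf("predicted price at 35000 miles: %.2f\n", $fit['intercept'] + $fit['slope'] * 35000);
```

An R² close to 1 would mean mileage alone explains most of the price variation; a value well below 1 suggests that other features (age, model, condition) are needed.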

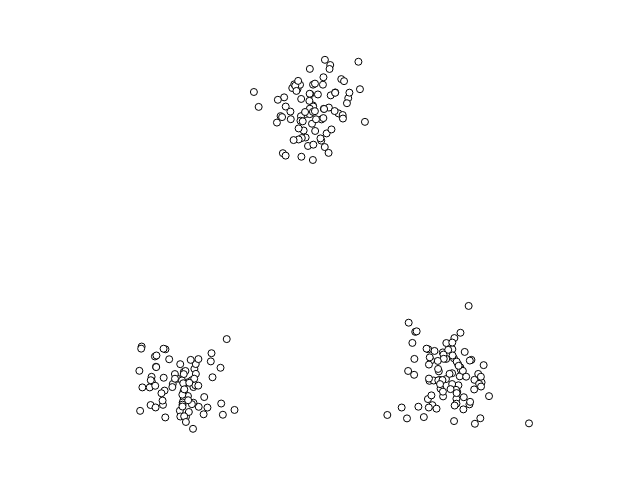

// $regression->getR2(); not implemented

Clustering

338.04200965504333, 340.96099477471597

325.29926486005047, 351.5486679994454

578.4499100418299, 337.60567937295593

636.6010916396216, 337.1655634700131

463.61495372140104, 87.30781540000328

309.68095048053567, 354.79933984182196

569.0001374385495, 330.5023290963775

304.07877801351003, 382.1851806595662

346.4318094249369, 310.88359489761524

334.2663485285672, 344.5296863511734

299.4530082502729, 372.35350555098955

316.6104171289118, 375.41602001034096

591.0246508090806, 367.94797729389813

444.34991671401906, 118.45496995943586

433.8227728530299, 71.72222842689047

444.1053454998834, 88.83183753321407

324.12063379205586, 395.6336572036508

301.51836226006776, 386.3882932443487

290.99261735355236, 344.87568081586755

581.4049866784304, 371.3100282327115

337.3816662630702, 355.6395349632366

498.01819058795814, 108.48920265724882

633.0636222044708, 326.3110287785337

626.3134372729498, 348.71913526515345

445.42859704368556, 75.27305470137435

306.81410851018825, 378.7736686118892

439.09865975254024, 76.66971977093272

568.7282712141936, 358.41194912805474

484.31765468419354, 106.46382764011003

449.07188156546613, 124.09596458236962

436.2664927059137, 65.18430339142009

317.17960432822883, 383.0054323942884

617.9759662145998, 344.4047557583218

616.3127444692847, 370.06136729095704

321.7158651152831, 362.67159106183084

346.4608886567032, 314.51981989975513

618.2526036244853, 357.0196182265154

590.7945765999675, 324.6498992628791

449.38056945433436, 118.60287536865769

472.066687997031, 80.37719565025702

294.3118847109533, 353.4865615033949

478.97062534720897, 95.70649681953358

567.7841441303771, 320.75547779107023

299.2327209106677, 352.1922118198445

292.16634675253886, 373.83696459447344

461.85741799708296, 117.44946869574665

299.07107909731684, 335.77658215111626

319.910986973631, 384.62564615284293

471.30428556038567, 106.28818985606335

559.5279075420794, 310.54351840325637

634.3642075285027, 335.5029769601599

439.8317690837323, 99.23099273365165

596.8791693350236, 381.59392224565795

604.1516435053849, 405.67736285856677

420.9873305290532, 90.75617345698856

437.59770111677204, 102.76018890480634

458.53847739928796, 82.72050379444829

608.088322572504, 376.039913501605

307.97097959822054, 324.722286003262

312.8947110796786, 342.3285548762767

277.14935633237906, 335.3623461390265

583.5560528138124, 359.803102482816

281.5020161290845, 317.9752805728433

560.5928946888674, 391.38894054488634

588.8035684901414, 318.5353923361487

411.6735472125374, 101.13213926573758

335.27072033693, 350.83097073880435

587.7683201110738, 376.82513508927434

611.1566402943419, 357.26648853430015

427.2108675492812, 123.9355948432858

299.39380762681003, 355.28019695638045

602.0009869194577, 347.296571408476

486.3169307223569, 83.6416789424083

325.289213170922, 363.1833076023479

442.75071528370154, 87.48454115478904

330.48743133797694, 379.067400358574

472.6320292955885, 90.23629371803918

587.4838699987308, 380.34546667208446

588.1129869337481, 381.2727150846652

591.2110482590026, 356.4409869262666

576.6265527643077, 365.78582049509833

593.9074313070807, 391.83088813346376

608.1987741896169, 418.608525504521

444.1151674614539, 104.33389362734681

290.6610271195223, 335.67104011710535

589.4047360621089, 334.42201557780413

434.8858913992243, 59.69518765296425

295.2734178813325, 369.4223946120387

585.5138327920143, 371.80999860054123

329.18314608591544, 367.39749113835285

600.0886391882641, 350.76731943539863

425.5700946282092, 75.53475894151018

573.7681709045746, 386.07330136408166

300.05777637102244, 372.88029897823975

448.8305033529923, 63.695405132884275

587.6650652701011, 368.9692221019065

490.8909673624537, 96.29103599971592

330.6332057590241, 304.0525581468305

588.5894529768525, 326.3580453764175

589.8695007238415, 366.44966849044806

327.5091798778813, 404.35590896990914

Clustering

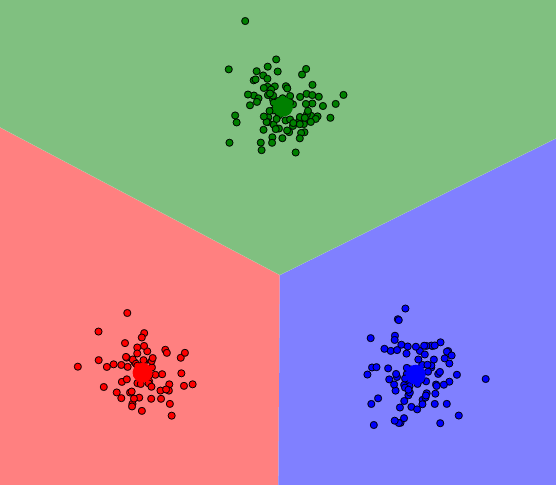

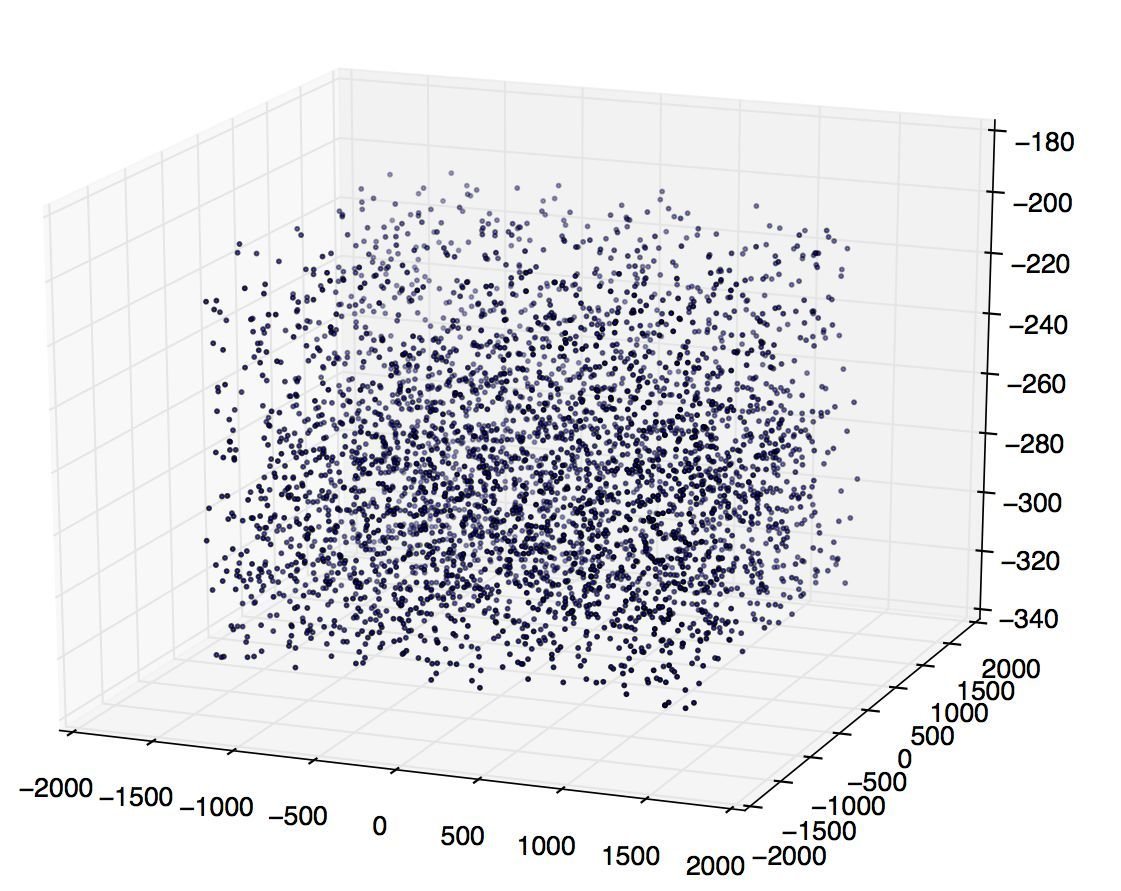
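Grouping points like these is commonly done with k-means, which alternates between assigning every point to its nearest centroid and moving each centroid to the mean of its points. The sketch below is plain PHP, not PHP-ML's implementation. `kMeans` and `squaredDistance` are hypothetical helpers, the six points are a tiny illustrative dataset, and taking the first k samples as initial centroids is an assumption made only to keep the run deterministic (real implementations usually initialize randomly).

```php
<?php

// Squared Euclidean distance between two points.
function squaredDistance(array $a, array $b): float
{
    $sum = 0.0;
    foreach ($a as $i => $value) {
        $sum += ($value - $b[$i]) ** 2;
    }
    return $sum;
}

// Hypothetical helper: plain k-means with the first $k samples as initial centroids.
function kMeans(array $samples, int $k, int $maxIterations = 100): array
{
    $centroids = array_slice($samples, 0, $k);
    $assignment = [];

    for ($iteration = 0; $iteration < $maxIterations; $iteration++) {
        // Assignment step: attach every sample to its nearest centroid.
        $newAssignment = [];
        foreach ($samples as $i => $sample) {
            $best = 0;
            foreach ($centroids as $c => $centroid) {
                if (squaredDistance($sample, $centroid) < squaredDistance($sample, $centroids[$best])) {
                    $best = $c;
                }
            }
            $newAssignment[$i] = $best;
        }
        if ($newAssignment === $assignment) {
            break; // nothing moved, so the clustering has converged
        }
        $assignment = $newAssignment;

        // Update step: move each centroid to the mean of its members.
        foreach ($centroids as $c => $centroid) {
            $members = [];
            foreach ($assignment as $i => $cluster) {
                if ($cluster === $c) {
                    $members[] = $samples[$i];
                }
            }
            if ($members === []) {
                continue; // an empty cluster keeps its old centroid
            }
            foreach (array_keys($centroid) as $d) {
                $centroids[$c][$d] = array_sum(array_column($members, $d)) / count($members);
            }
        }
    }

    // Group the samples by cluster index.
    $clusters = array_fill(0, $k, []);
    foreach ($assignment as $i => $cluster) {
        $clusters[$cluster][] = $samples[$i];
    }
    return $clusters;
}

$samples = [[1, 1], [8, 7], [1, 2], [7, 8], [2, 1], [8, 9]];
$clusters = kMeans($samples, 2);

print_r($clusters);
```

On this data the loop settles after the second pass: the three points near the origin form one cluster and the three points around (8, 8) form the other.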

Clustering

use Phpml\Clustering\KMeans;

$samples = [
    [1, 1], [8, 7], [1, 2],
    [7, 8], [2, 1], [8, 9]
];

$kmeans = new KMeans(2);
$clusters = $kmeans->cluster($samples);

// Resulting clusters:
$clusters = [
    [[1, 1], [1, 2], [2, 1]],
    [[8, 7], [7, 8], [8, 9]]
];

Preprocessing

use Phpml\Preprocessing\Imputer;
use Phpml\Preprocessing\Imputer\Strategy\MeanStrategy;

$data = [
    [1, null, 3, 4],
    [4, 3, 2, 1],
    [null, 6, 7, 8],
    [8, 7, null, 5],
];

$imputer = new Imputer(
    null, new MeanStrategy(), Imputer::AXIS_COLUMN, $data
);
$imputer->transform($data);

// $data after imputation (missing values replaced by column means):
$data = [
    [1, 5.33, 3, 4],
    [4, 3, 2, 1],
    [4.33, 6, 7, 8],
    [8, 7, 4, 5],
];

Feature Extraction

use Phpml\FeatureExtraction\TokenCountVectorizer;
use Phpml\Tokenization\WhitespaceTokenizer;

$samples = [
    'Lorem ipsum dolor sit amet dolor',
    'Mauris placerat ipsum dolor',
    'Mauris diam eros fringilla diam',
];

$vectorizer = new TokenCountVectorizer(new WhitespaceTokenizer());
$vectorizer->fit($samples);
$vectorizer->getVocabulary();
$vectorizer->transform($samples);

// Resulting token counts per sample:
$tokensCounts = [
    [0 => 1, 1 => 1, 2 => 2, 3 => 1, 4 => 1, 5 => 0, 6 => 0, 7 => 0, 8 => 0, 9 => 0],
    [0 => 0, 1 => 1, 2 => 1, 3 => 0, 4 => 0, 5 => 1, 6 => 1, 7 => 0, 8 => 0, 9 => 0],
    [0 => 0, 1 => 0, 2 => 0, 3 => 0, 4 => 0, 5 => 1, 6 => 0, 7 => 2, 8 => 1, 9 => 1],
];

Model selection

use Phpml\CrossValidation\RandomSplit;
use Phpml\CrossValidation\StratifiedRandomSplit;
use Phpml\Dataset\ArrayDataset;

$dataset = new ArrayDataset(
    $samples = [[1], [2], [3], [4]],
    $labels = ['a', 'a', 'b', 'b']
);

$randomSplit = new RandomSplit($dataset, 0.5);

$dataset = new ArrayDataset(
    $samples = [[1], [2], [3], [4], [5], [6], [7], [8]],
    $labels = ['a', 'a', 'a', 'a', 'b', 'b', 'b', 'b']
);

$split = new StratifiedRandomSplit($dataset, 0.5);

Workflow

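use Phpml\Classification\SVC;
use Phpml\Pipeline;
use Phpml\Preprocessing\Imputer;
use Phpml\Preprocessing\Imputer\Strategy\MostFrequentStrategy;
use Phpml\Preprocessing\Normalizer;

// Preprocessing steps applied in order before the estimator sees the data.
$transformers = [
    new Imputer(null, new MostFrequentStrategy()),
    new Normalizer(),
];
$estimator = new SVC();

$samples = [
    [1, -1, 2],
    [2, 0, null],
    [null, 1, -1],
];

$targets = [
    4,
    1,
    4,
];

$pipeline = new Pipeline($transformers, $estimator);
$pipeline->train($samples, $targets);

$predicted = $pipeline->predict([[0, 0, 0]]);
// $predicted == 4

Example applications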

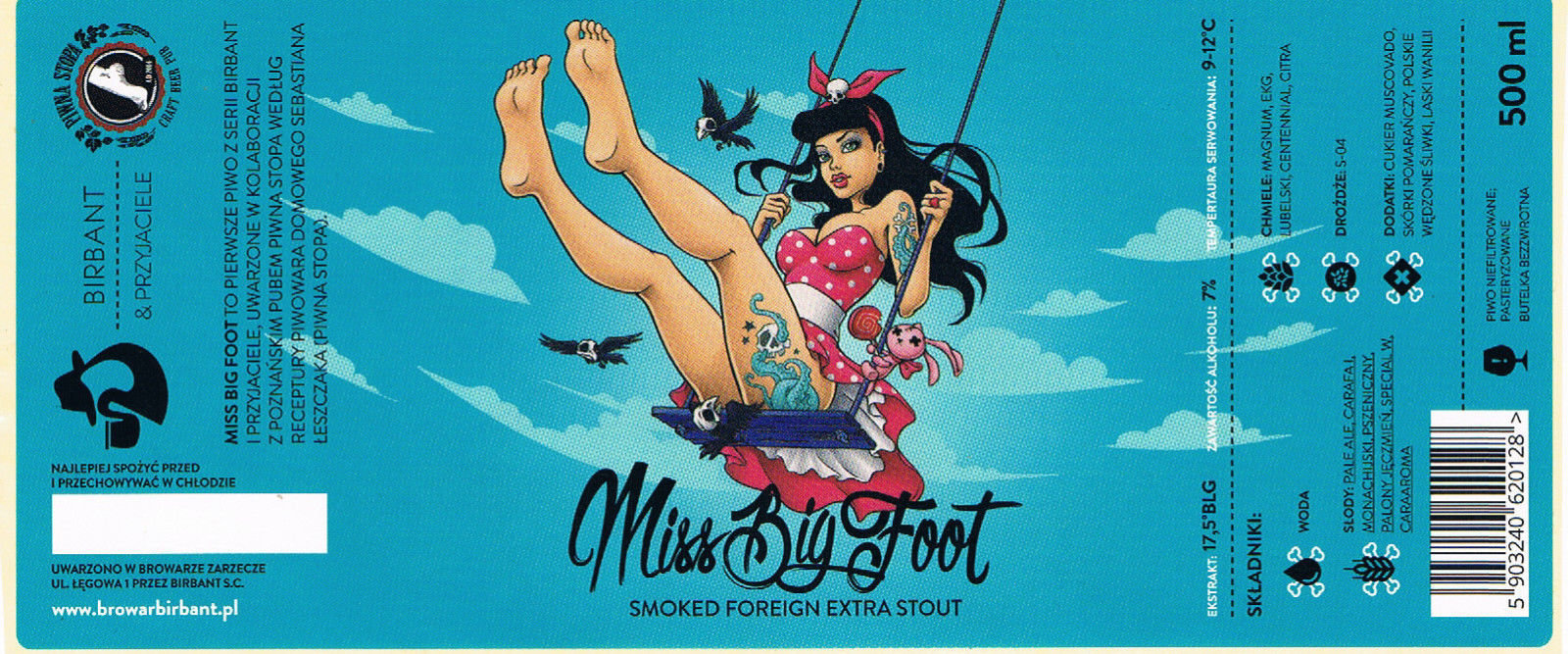

Beer judge

ibu,alk,ext,score,name

75,6.5,16,7,"Szalony Alchemik"

28,4.2,12.5,6,"Miss Lata"

40,4,10.5,7,"Tajemniczy Jeździec"

42,5.7,14.5,6,"Dziki Samotnik"

20,2.9,7.7,4,"Dębowa Panienka"

36,5.2,12.5,5,"Piękna Nieznajoma"

28,4.8,14.0,3,"Mała Czarna"

35,4.6,12.5,7,"Nieproszony Gość"

20,5.2,12.5,8,"Ostatni sprawiedliwy"

30,4.8,12.5,6,"Dziedzic Pruski"

75,7.5,18.0,9,"Bawidamek"

45,4.7,12.0,8,"Miś Wojtek"

20,5.2,13.0,8,"The Dancer"

30,4.7,12.0,4,"The Dealer"

120,8.9,19.0,3,"The Fighter"

85,6.4,16.0,9,"The Alchemist"

100,10.3,24,4,"The Gravedigger"

40,4.8,12.0,8,"The Teacher"

75,7.0,16.0,7,"The Butcher"

80,6.7,16.0,5,"The Miner"

Beer judge

use Phpml\Classification\SVC;

use Phpml\CrossValidation\StratifiedRandomSplit;

use Phpml\Dataset\CsvDataset;

use Phpml\Metric\Accuracy;

use Phpml\SupportVectorMachine\Kernel;

$dataset = new CsvDataset('examples/beers.csv', 3);

$split = new StratifiedRandomSplit($dataset, 0.1);

$classifier = new SVC(Kernel::RBF);

$classifier->train($split->getTrainSamples(), $split->getTrainLabels());

$predicted = $classifier->predict($split->getTestSamples());

echo sprintf("Accuracy: %s\n", Accuracy::score($split->getTestLabels(), $predicted));

$newBeer = [20, 2.5, 7];

echo sprintf("New beer score: %s\n", $classifier->predict($newBeer));
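Beer parameters are already numbers, but text (as in the language recognition example that follows) has to be turned into numeric features first. The idea behind a token count vectorizer can be sketched in plain PHP as below. This is not PHP-ML's implementation: `tokenize`, `buildVocabulary`, and `countTokens` are hypothetical helpers, tokens are simply runs of letters, and no lowercasing is applied.

```php
<?php

// Hypothetical helper: split text into word tokens (runs of Unicode letters).
function tokenize(string $text): array
{
    preg_match_all('/\p{L}+/u', $text, $matches);
    return $matches[0];
}

// Build a vocabulary (token => column index) in order of first appearance.
function buildVocabulary(array $texts): array
{
    $vocabulary = [];
    foreach ($texts as $text) {
        foreach (tokenize($text) as $token) {
            if (!isset($vocabulary[$token])) {
                $vocabulary[$token] = count($vocabulary);
            }
        }
    }
    return $vocabulary;
}

// Turn one text into a vector of token counts aligned with the vocabulary.
function countTokens(string $text, array $vocabulary): array
{
    $counts = array_fill(0, count($vocabulary), 0);
    foreach (tokenize($text) as $token) {
        if (isset($vocabulary[$token])) {
            $counts[$vocabulary[$token]]++;
        }
    }
    return $counts;
}

$texts = [
    'Is it near here?',
    'Je ne comprends pas.',
    'Faut-il changer?',
];

$vocabulary = buildVocabulary($texts);

$vectors = [];
foreach ($texts as $text) {
    $vectors[] = countTokens($text, $vocabulary);
}

print_r($vocabulary);
print_r($vectors);
```

Each sentence becomes a vector with one column per known word, so sentences in the same language tend to share non-zero columns. That overlap is what a classifier can pick up on.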

Language recognition

// languages.csv

"Hello, do you know what time the movie is tonight?","english"

"I am calling to make reservations","english"

"I would like to know if it is at all possible to check in","english"

"What time does the swimming pool open?","english"

"Where is the games room?","english"

"We must call the police.","english"

"How many pupils are there in your school?","english"

"Twenty litres of unleaded, please.","english"

"Is it near here?","english"

"Je voudrais une boîte de chocolates.","french"

"Y a-t-il un autre hôtel près d'ici?","french"

"Vérifiez la batterie, s'il vous plaît.","french"

"La banque ouvre à quelle heure?","french"

"Est-ce que je peux l'écouter?","french"

"Vous devez faire une déclaration de perte.","french"

"Combien des élèves y a-t-il dans votre collège?","french"

"Est-ce qu-il ya un car qui va à l'aéroport?","french"

"Nous voudrions rester jusqu'à Dimanche prochain.","french"

"Je ne comprends pas.","french"

"Voici une ordonnance pour des comprimés.","french"

"Je n'ai qu'un billet de cinquante francs.","french"

"À quelle heure commence le dernière séance?","french"

"Faut-il changer?","french"

"Il n'avait pas la priorité.","french"

"Puis-je prendre les places à l'avance?","french"

"Elle a trente et un ans.","french"

"Pour aller à la pharmacie, s'il vous plaît?","french"

"Voulez-vous me peser ce colis, s'il vous plaît.","french"

"Ciao, ti va di andare al cinema?","italian"

"Mi sai dire quando aprono il negozio?","italian"

"Quando c'è l'ultimo autobus?","italian"

"Lo posso provare addosso?","italian"

"C'è una presa elettrica per il nostro caravan?","italian"

"Vorrei una scatola di cioccolatini.","italian"

"Non e' stata colpa mia.","italian"

Language recognition

<?php

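use Phpml\Classification\SVC;
use Phpml\CrossValidation\RandomSplit;
use Phpml\Dataset\ArrayDataset;
use Phpml\Dataset\CsvDataset;
use Phpml\FeatureExtraction\TokenCountVectorizer;
use Phpml\Metric\Accuracy;
use Phpml\SupportVectorMachine\Kernel;
use Phpml\Tokenization\WordTokenizer;

// One text column as the feature, the language as the label.
$dataset = new CsvDataset('examples/languages.csv', 1);

// Turn each sentence into a vector of token counts.
$vectorizer = new TokenCountVectorizer(new WordTokenizer());
$samples = $vectorizer->transform($dataset->getSamples());

$dataset = new ArrayDataset($samples, $dataset->getLabels());
$randomSplit = new RandomSplit($dataset, 0.3);

$classifier = new SVC(Kernel::RBF, 100);
$classifier->train(
    $randomSplit->getTrainSamples(),
    $randomSplit->getTrainLabels()
);

$predictedLabels = $classifier->predict(
    $randomSplit->getTestSamples()
);

echo Accuracy::score($randomSplit->getTestLabels(), $predictedLabels);

Flappy Learning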

https://github.com/xviniette/FlappyLearning

var inputs = [
    this.birds[i].y / this.height,
    nextHoll
];

var res = this.gen[i].compute(inputs);
if(res > 0.5){
    this.birds[i].flap();
}

if(this.birds[i].isDead(this.height, this.pipes)){
    this.birds[i].alive = false;
    this.alives--;
    Neuvol.networkScore(this.gen[i], this.score);
    if(this.isItEnd()){
        this.start();
    }
}

Summary

- the question matters most; data comes second (but is still important)

- there are many algorithms and techniques

- applications can be very simple but also extremely sophisticated

- it is sometimes difficult to find the correct answer

- basic math skills are important

Source: https://github.com/syntheticminds/php7-ml-comparison

- PHP 7.0: 206,128 instances classified in 30 seconds (6,871 per second)

- Python 2.7: 106,879 instances classified in 30 seconds (3,562 per second)

- NodeJS v5.11.1: 245,227 instances classified in 30 seconds (8,174 per second)

- Java 8: 16,809,048 instances classified in 30 seconds (560,301 per second)

Q&A

Thanks for listening

@ ArkadiuszKondas

https://slides.com/arkadiuszkondas

https://github.com/itcraftsmanpl/phpcon-2016-ml