Functional Concurrency in Scala 101

Piotr Gawryś | twitter.com/p_gawrys

Slides: https://slides.com/avasil/funtional-concurrency-in-scala-101#/

Plan

- Brief Overview

- Introduction to Functional Programming

- Introduction to Concurrency (with FP!)

- Exercises

Schedule

| Time | Session |
|---|---|
| 9:00 - 10:30 | workshop |
| 10:30 - 11:00 | break |
| 11:00 - 12:30 | workshop |
| 12:30 - 14:00 | lunch break |
| 14:00 - 15:30 | workshop |
| 15:30 - 16:00 | break |
| 16:00 - 17:00 | workshop |

What's ahead

- A couple of concurrency puzzles as a warm-up

- Applying the newly acquired skills to a real use case

Battle City (Tank 1990)

Before we begin...

git clone git@github.com:Avasil/fp-concurrency-101.git

or, over HTTPS: git clone https://github.com/Avasil/fp-concurrency-101.git

cd fp-concurrency-101

git checkout exercises

sbt exercises/compile

sbt client/fastOptJS

sbt server/compile

Functional Programming

- Programming with pure, referentially transparent functions

- Referential transparency: replacing an expression with its bound value doesn't alter the behavior of your program

- Lawful, algebraic structures
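To make "lawful, algebraic structures" concrete, here is a minimal sketch of a Monoid: an empty value plus an associative combine. The Monoid trait and the law-checking helpers below are hand-rolled and hypothetical (Cats ships a real cats.Monoid); the point is that code written against the laws, like combineAll, works for every lawful instance.

```scala
// A minimal, hand-rolled Monoid type class (hypothetical; Cats provides cats.Monoid)
trait Monoid[A] {
  def empty: A
  def combine(x: A, y: A): A
}

implicit val intAddition: Monoid[Int] = new Monoid[Int] {
  def empty: Int = 0
  def combine(x: Int, y: Int): Int = x + y
}

implicit val stringConcat: Monoid[String] = new Monoid[String] {
  def empty: String = ""
  def combine(x: String, y: String): String = x + y
}

// The two monoid laws, checked on concrete sample values
def associativityLaw[A](x: A, y: A, z: A)(implicit m: Monoid[A]): Boolean =
  m.combine(m.combine(x, y), z) == m.combine(x, m.combine(y, z))

def identityLaw[A](x: A)(implicit m: Monoid[A]): Boolean =
  m.combine(m.empty, x) == x && m.combine(x, m.empty) == x

// Generic code that relies only on the laws, so it works for any lawful instance
def combineAll[A](as: List[A])(implicit m: Monoid[A]): A =
  as.foldLeft(m.empty)(m.combine)

println(associativityLaw(1, 2, 3))      // true
println(identityLaw("scala"))            // true
println(combineAll(List("a", "b", "c"))) // abc
```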

Benefits

- Consistent, known behavior

- Compositionality

- Easy refactoring and optimization rules

What prevents composition?

- Connected sequence

- Leaky abstraction

- Side effects!

Side effects

Source of example:

"Rúnar Óli Bjarnason - Composing Programs"

class Cafe {

def buyCoffee(cc: CreditCard): Coffee = {

val cup = new Coffee()

cc.charge(cup.price)

cup

}

}

Side effects (continued): return the Charge as a value instead of performing it

class Cafe {

def buyCoffee(cc: CreditCard): (Coffee, Charge) = {

val cup = new Coffee()

(cup, new Charge(cc, cup.price))

}

}
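Once buyCoffee returns the charge as a value, buying several coffees becomes ordinary composition: we can merge the charges into one payment before anything touches the card. A runnable sketch in the spirit of the referenced talk; CreditCard, Coffee, Charge.combine and buyCoffees are assumed definitions, and prices are in cents to avoid floating point.

```scala
// Hypothetical supporting types; prices are in cents
final case class CreditCard(number: String)
final case class Coffee(price: Int = 250)

final case class Charge(cc: CreditCard, amount: Int) {
  // Two charges to the same card can be merged into a single payment
  def combine(other: Charge): Charge = {
    require(cc == other.cc, "can't combine charges to different cards")
    Charge(cc, amount + other.amount)
  }
}

class Cafe {
  def buyCoffee(cc: CreditCard): (Coffee, Charge) = {
    val cup = Coffee()
    (cup, Charge(cc, cup.price))
  }

  // buyCoffee only returns values, so buying n coffees is plain composition
  def buyCoffees(cc: CreditCard, n: Int): (List[Coffee], Charge) = {
    require(n > 0, "must buy at least one coffee")
    val (coffees, charges) = List.fill(n)(buyCoffee(cc)).unzip
    (coffees, charges.reduce(_ combine _))
  }
}

val card = CreditCard("1234")
val (cups, charge) = new Cafe().buyCoffees(card, 3)
println(charge) // Charge(CreditCard(1234),750)
```

The card is charged once with the total, and the logic can be tested without a payment processor.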

So how to write pure functions?

val a: Int = 10

val b: Int = a + a // 20

val c: Int = 10 + 10 // 20

// Not referentially transparent: substituting r.nextInt for a changes the result

val r = scala.util.Random

val a: Int = r.nextInt // 7

val b: Int = a + a // 7 + 7

val c: Int = r.nextInt + r.nextInt // 7 + 5

The same example, with the side effect suspended in a Task:

import monix.eval.Task

import monix.execution.Scheduler.Implicits.global

import cats.implicits._

val r = scala.util.Random

// when run, generates 7

val a: Task[Int] = Task(r.nextInt)

a.runSyncUnsafe() // 7

val b: Task[Int] = (a, a).mapN(_ + _)

b.runSyncUnsafe() // 7 + 5 (each use of a generates a new number)

val c: Task[Int] = (Task(r.nextInt), Task(r.nextInt)).mapN(_ + _)

c.runSyncUnsafe() // 7 + 5

val d: Task[Int] = a.map(x => x + x)

d.runSyncUnsafe() // 7 + 7

Wait, but it still performs a side effect, so what's the point?

Referential Transparency - Benefits

- Local reasoning

- Representing the program as a value

Check out this great explanation:

https://www.reddit.com/r/scala/comments/8ygjcq/can_someone_explain_to_me_the_benefits_of_io/e2jfp9b/
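To show what "representing the program as a value" buys us, here is a deliberately tiny IO type. It is hypothetical and only illustrative (Monix Task and cats-effect IO are far more capable): building a description runs nothing, and the same description can be reused and re-run like any other value.

```scala
import java.util.concurrent.atomic.AtomicInteger

// A deliberately tiny IO (hypothetical, for illustration; not Monix or cats-effect)
final class IO[A](val unsafeRun: () => A) {
  def map[B](f: A => B): IO[B] = new IO(() => f(unsafeRun()))
  def flatMap[B](f: A => IO[B]): IO[B] = new IO(() => f(unsafeRun()).unsafeRun())
}

object IO {
  // Suspends the side effect instead of executing it
  def apply[A](thunk: => A): IO[A] = new IO(() => thunk)
}

val counter = new AtomicInteger(0)

// Describing the effect does not run it...
val increment: IO[Int] = IO(counter.incrementAndGet())

// ...so the description can be composed and reused as a plain value
val incrementTwice: IO[Int] = increment.flatMap(_ => increment)

val before = counter.get()              // 0: nothing has run yet
val result = incrementTwice.unsafeRun() // 2: the effect runs only here, twice
```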

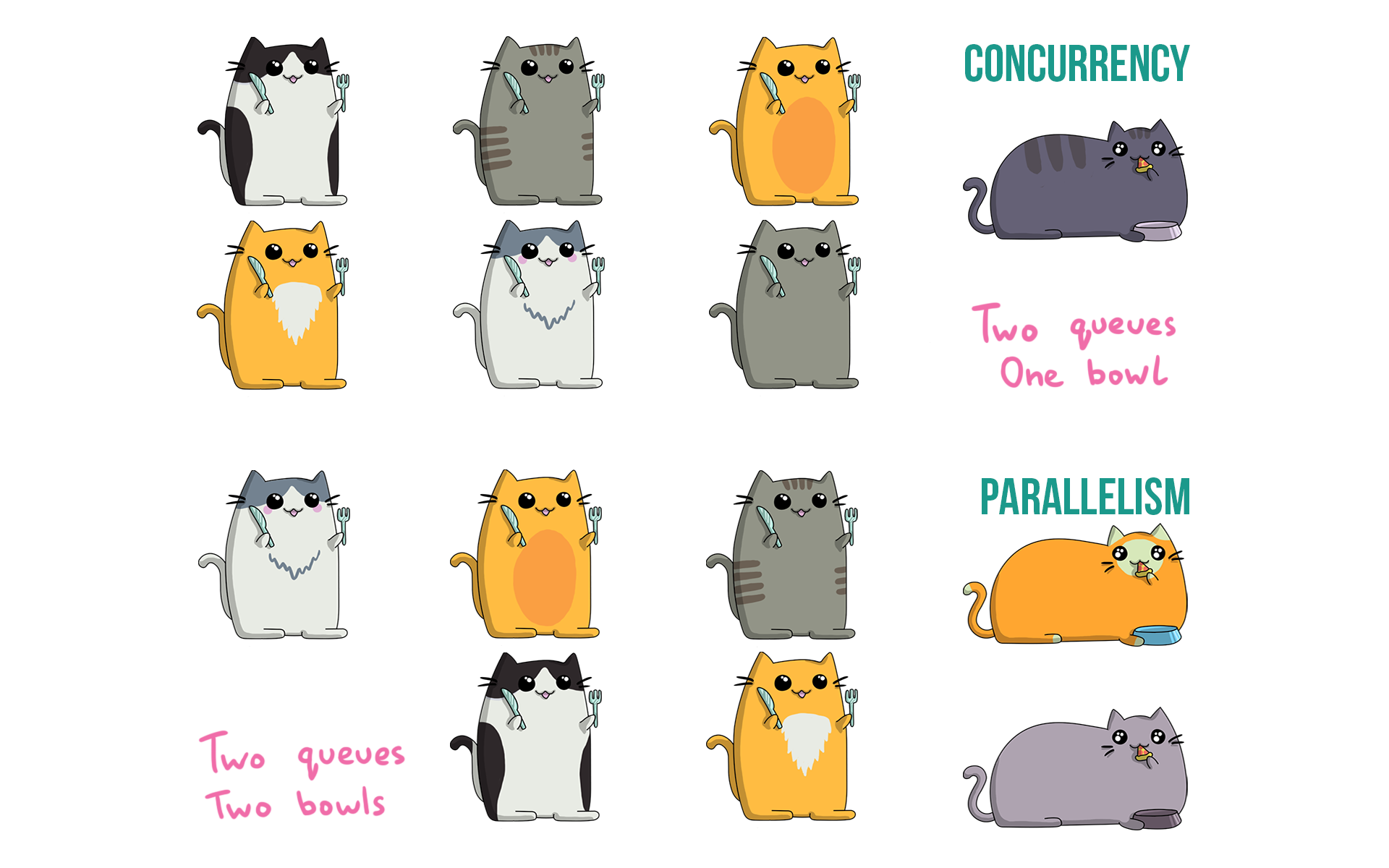

Concurrency

Dealing with many things at once: tasks interleave, but don't necessarily run at the same time

Parallelism

Doing things at the same time to finish faster

Credit: https://twitter.com/impurepics

Concurrency on JVM

Threads

JVM threads map 1:1 to native OS threads, so at most N threads can execute in parallel (N = number of CPU cores)

Context Switch

Before another thread can run, the OS has to save the state of the current one and restore the state of the next one, which costs time.

Concurrency on JVM

Thread Pools

A thread pool creates and reuses threads and distributes work among them

Blocking Threads

Nothing else can run on a blocked thread, so its resources are wasted.
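A small sketch of a thread pool with only the Scala and Java standard libraries: a fixed pool with one thread per core runs 100 jobs, and the set of thread names shows that the same few threads are reused. The pool size and job count are arbitrary choices for illustration.

```scala
import java.util.concurrent.{ConcurrentHashMap, Executors}
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

// One thread per core: at most this many jobs run truly in parallel
val poolSize = Runtime.getRuntime.availableProcessors()
val executor = Executors.newFixedThreadPool(poolSize)
implicit val ec: ExecutionContext = ExecutionContext.fromExecutorService(executor)

// Records which pool threads picked up work
val usedThreads = ConcurrentHashMap.newKeySet[String]()

// 100 small jobs are distributed over the same few reused threads
val jobs: List[Future[Int]] = (1 to 100).toList.map { i =>
  Future {
    usedThreads.add(Thread.currentThread().getName)
    i * i
  }
}

// Await blocks the calling thread: acceptable at the edge of a demo,
// wasteful if done inside the pool itself
val total = Await.result(Future.sequence(jobs), 10.seconds).sum
executor.shutdown()

println(total) // 338350
```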

Thread Scheduling

Preemptive multitasking

Tasks are interrupted to allow other tasks to run, which guarantees each task its own "slice" of time.

Cooperative multitasking

Tasks voluntarily yield control to the scheduler.
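Cooperative multitasking can be sketched on a single thread: each job does one small step and then returns control, and a round-robin scheduler decides who goes next. The Job class and runRoundRobin are hypothetical names; the output also shows concurrency (interleaving) without any parallelism.

```scala
import scala.collection.mutable

// A hypothetical cooperative job: step() does one unit of work, then gives
// control back to the scheduler, reporting whether more work remains
final class Job(name: String, totalSteps: Int, log: mutable.Buffer[String]) {
  private var stepsDone = 0
  def step(): Boolean = {
    stepsDone += 1
    log += s"$name$stepsDone"
    stepsDone < totalSteps
  }
}

// Single-threaded round-robin scheduler: nothing preempts a job,
// it only regains control when the job returns from step()
def runRoundRobin(jobs: List[Job]): Unit = {
  val ready = mutable.Queue.from(jobs)
  while (ready.nonEmpty) {
    val job = ready.dequeue()
    if (job.step()) ready.enqueue(job) // yielded but not finished: back of the queue
  }
}

val log = mutable.ListBuffer.empty[String]
runRoundRobin(List(new Job("A", 3, log), new Job("B", 2, log)))
println(log.mkString(" ")) // A1 B1 A2 B2 A3
```

If a job never returned from step(), every other job would starve; that is the trade-off cooperative scheduling makes in exchange for cheap switches.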

Monix

- Scala / Scala.js library for composing asynchronous programs

- Lots of cool data types such as Task, Observable, ConcurrentQueue and many more

- Purely functional API

- Cats-Effect integration

Monix Task

- Lazily evaluated

- Lots of concurrency related features

- Error Handling

- Cancelation

- Parallelism

- Resource Safety

- ... and it composes like a charm!
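"Lazily evaluated" is easiest to see next to an eager scala.concurrent.Future. A sketch assuming Monix 3.x; the //> using lines are scala-cli directives pinning Monix 3.4.1 (which brings in cats-effect 2), and the snippet is written as a script like the other slides.

```scala
//> using scala 2.13
//> using dep io.monix::monix:3.4.1
import java.util.concurrent.atomic.AtomicInteger
import monix.eval.Task
import monix.execution.Scheduler.Implicits.global
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

val futureRuns = new AtomicInteger(0)
val taskRuns = new AtomicInteger(0)

// A Future starts running as soon as it is defined (eager)
val future: Future[Int] = Future(futureRuns.incrementAndGet())

// A Task is only a description; defining it runs nothing (lazy)
val task: Task[Int] = Task(taskRuns.incrementAndGet())

Await.result(future, 5.seconds)
val taskRunsBefore = taskRuns.get() // 0

// Running the same Task value twice executes the effect twice
val both: Task[(Int, Int)] = task.flatMap(a => task.map(b => (a, b)))
val result = both.runSyncUnsafe() // (1, 2)
```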

Basic Usage

import monix.eval.Task

import monix.execution.Scheduler.Implicits.global

val task = Task { println("hello!") }

// nothing happens yet, it's just a description

// of evaluation that will produce `Unit`, end

// with error or never complete

val program: Task[Unit] =

for {

_ <- task

_ <- task

} yield ()

// prints "hello!" twice

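program.runSyncUnsafe()

import monix.eval.Task

import cats.syntax.flatMap._

val taskA: Task[Unit] = Task(println("A"))

val taskB: Task[Unit] = Task(println("B"))

val program1: Task[Unit] =

taskA.flatMap(_ => taskB)

// >> is shorthand for a flatMap that ignores the first result

val program2: Task[Unit] =

taskA >> taskB

import monix.eval.Task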

import scala.concurrent.duration._

// waits 100 millis (without blocking any Thread!)

// then prints "woke up"

Task.sleep(100.millis) >> Task(println("woke up"))

// never finishes

Task.never >> Task(println("woke up"))

Error Handling

import monix.eval.Task

val taskA = Task(println("A"))

val taskB = Task.raiseError(new Exception("B"))

val taskC = Task(println("C"))

val program: Task[Int] =

for {

_ <- taskA

_ <- taskB // short circuits Task with error

_ <- taskC // taskC will never happen!

} yield 42

// A

// Exception in thread "main" java.lang.Exception: B

program.runSyncUnsafe()

// A

// Process finished with exit code 0

val safeProgram: Task[Either[Throwable, Int]] =

program.attempt

safeProgram.runSyncUnsafe()

// will print A, B and C

val program: Task[Int] =

for {

_ <- taskA

// in case of error executes the Task in argument

_ <- taskB.onErrorHandleWith(_ => Task(println("B")))

_ <- taskC

} yield 42
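Because a Task is a description, "try again" just means running the same value once more. A sketch assuming Monix 3.x (scala-cli directives pin 3.4.1); the flaky operation is hypothetical and fails on its first two attempts. onErrorRestart retries immediately, while the retryBackoff shown next adds growing delays.

```scala
//> using scala 2.13
//> using dep io.monix::monix:3.4.1
import java.util.concurrent.atomic.AtomicInteger
import monix.eval.Task
import monix.execution.Scheduler.Implicits.global

// Hypothetical flaky operation: fails on the first two attempts, then succeeds
val attempts = new AtomicInteger(0)
val flaky: Task[String] = Task {
  val n = attempts.incrementAndGet()
  if (n < 3) throw new RuntimeException(s"attempt $n failed")
  s"succeeded on attempt $n"
}

// Task.apply turns the thrown exception into a failed Task;
// onErrorRestart re-runs the same description up to 5 more times
val retried = flaky.onErrorRestart(5).runSyncUnsafe() // "succeeded on attempt 3"

// When retries are exhausted the error surfaces; attempt turns it into a value
val alwaysFails: Task[String] = Task.raiseError(new RuntimeException("boom"))
val gaveUp = alwaysFails.onErrorRestart(2).attempt.runSyncUnsafe() // a Left
```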

Error Handling

import monix.eval.Task

import scala.concurrent.duration.FiniteDuration

def retryBackoff[A](source: Task[A])

(maxRetries: Int, delay: FiniteDuration): Task[A] = {

source.onErrorHandleWith { ex =>

if (maxRetries > 0)

retryBackoff(source)(maxRetries - 1, delay * 2)

.delayExecution(delay)

else

Task.raiseError(ex)

}

}

Parallelism

import monix.eval.Task

import cats.implicits._

val taskA = Task(println("A"))

val taskB = Task(println("B"))

val listOfTasks: List[Task[Unit]] = List(taskA, taskB)

val taskOfList: Task[List[Unit]] = Task.gather(listOfTasks)

// using Parallel type class instance from Cats

val parTask: Task[(Unit, Unit)] =

(taskA, taskB).parTupled

val taskInParallel: Task[Unit] =

for {

// run in "background"

fibA <- taskA.start

fibB <- taskB.start

// wait for both fibers to finish

_ <- fibA.join

_ <- fibB.join

} yield ()

// run both in parallel

// and cancel the loser

val raceTask: Task[Either[Unit, Unit]] =

Task.race(taskA, taskB)

Scheduler

- Thread Pool on steroids

- Configurable Execution Model

- Tracing Scheduler (for TaskLocal support)

- Test Scheduler that can simulate passing time
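The Test Scheduler also makes the parallelism claims from the previous section checkable without waiting: two sleeps of 1s and 2s finish after about 3s when sequenced, but after about 2s with Task.parMap2. A sketch assuming Monix 3.x (scala-cli directives pin 3.4.1); note that on the Test Scheduler everything runs on one thread under a virtual clock, so this demonstrates overlap in time rather than multi-core speedup.

```scala
//> using scala 2.13
//> using dep io.monix::monix:3.4.1
import monix.eval.Task
import monix.execution.schedulers.TestScheduler
import scala.concurrent.duration._

// Virtual clock: nothing advances until tick() is called, no real time passes
implicit val sc: TestScheduler = TestScheduler()

val slowA: Task[Int] = Task.sleep(1.second).map(_ => 1)
val slowB: Task[Int] = Task.sleep(2.seconds).map(_ => 2)

// Sequential: slowB starts only after slowA finished (1s + 2s)
val sequential: Task[Int] = slowA.flatMap(a => slowB.map(b => a + b))

// Parallel: both sleeps overlap (max(1s, 2s))
val parallel: Task[Int] = Task.parMap2(slowA, slowB)(_ + _)

val seqFuture = sequential.runToFuture
val parFuture = parallel.runToFuture

sc.tick(2.seconds)
val parAfter2s = parFuture.value // Some(Success(3))
val seqAfter2s = seqFuture.value // None

sc.tick(1.second)
val seqAfter3s = seqFuture.value // Some(Success(3))
```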

Scheduler

import monix.eval.Task

import monix.execution.CancelableFuture

import monix.execution.schedulers.TestScheduler

import scala.concurrent.duration._

def retryBackoff[A](source: Task[A])

(maxRetries: Int, delay: FiniteDuration): Task[A] = {

source.onErrorHandleWith { ex =>

if (maxRetries > 0)

retryBackoff(source)(maxRetries - 1, delay * 2)

.delayExecution(delay)

else

Task.raiseError(ex)

}

}

val sc = TestScheduler()

val failedTask: Task[Int] = Task.raiseError[Int](new Exception("boom"))

val task: Task[Int] = retryBackoff(failedTask)(5, 2.hours)

val f: CancelableFuture[Int] = task.runToFuture(sc)

println(f.value) // None

sc.tick(10.hours)

println(f.value) // None

sc.tick(1000.days)

println(f.value) // Some(Failure(java.lang.Exception: boom))

Observable

- Inspired by RxJava / ReactiveX

- Push-based streaming with backpressure

- (mostly) Purely functional API

- Choose your adventure

See the presentation by Alex Nedelcu (author of Monix) on its origins: https://monix.io/presentations/2018-tale-two-monix-streams.html
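A small pipeline to go with the bullets above. A sketch assuming Monix 3.x (scala-cli directives pin 3.4.1): the producer calls onNext again only after the previous Future[Ack] completes (back-pressure), so mapEval here processes one Task at a time, and the whole stream is turned into a single Task with toListL.

```scala
//> using scala 2.13
//> using dep io.monix::monix:3.4.1
import monix.eval.Task
import monix.execution.Scheduler.Implicits.global
import monix.reactive.Observable

val pipeline: Task[List[Seq[Long]]] =
  Observable.range(0, 10)       // 0, 1, ..., 9
    .filter(_ % 2 == 0)         // 0, 2, 4, 6, 8
    .mapEval(n => Task(n * n))  // 0, 4, 16, 36, 64, evaluated one at a time
    .bufferTumbling(2)          // groups of two; the last group may be smaller
    .toListL                    // nothing runs until this Task is run

val result = pipeline.runSyncUnsafe()
```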

Observable

trait Observable[+A] {

def subscribe(o: Observer[A]): Cancelable

}

trait Observer[-T] {

def onNext(elem: T): Future[Ack]

def onError(ex: Throwable): Unit

def onComplete(): Unit

}

Observable

val list: Observable[Long] =

Observable.range(0, 1000)

.take(100)

.map(_ * 2)

val task: Task[Long] =

list.foldLeftL(0L)(_ + _)

val task: Task[List[Int]] =

for {

queue <- ConcurrentQueue.unbounded[Task, Int]()

// feeds queue in the background

_ <- runProducer(queue).start

list <- Observable

.repeatEvalF(queue.poll())

.throttleLast(150.millis)

.takeWhile(_ > 100)

.toListL

} yield list

Observable

import monix.eval.Task

import monix.execution.schedulers.TestScheduler

import monix.reactive.Observable

import scala.concurrent.duration._

// using `TestScheduler` to manipulate time

implicit val sc = TestScheduler()

val stream: Task[Unit] = {

Observable

.intervalWithFixedDelay(2.second)

.mapEval(l => Task.sleep(2.second).map(_ => l))

.foreachL(println)

}

stream.runToFuture(sc)

sc.tick(2.second) // prints 0

sc.tick(4.second) // prints 1

sc.tick(4.second) // prints 2

sc.tick(4.second) // prints 3

Concurrent Data Structures

- cats-effect and monix.catnap provide several purely functional data structures for sharing state and synchronization

cats.effect.concurrent.Ref

Must watch: https://vimeo.com/294736344

Purely functional wrapper for AtomicReference. Allows safe concurrent access and mutation while preserving referential transparency.

abstract class Ref[F[_], A] {

def get: F[A]

def set(a: A): F[Unit]

def modify[B](f: A => (A, B)): F[B]

// ... and more

}

cats.effect.concurrent.Ref

import monix.eval.Task

import monix.execution.Scheduler.Implicits.global

import cats.effect.concurrent.Ref

val stringsRef: Task[Ref[Task, List[String]]] =

Ref.of[Task, List[String]](List())

def take(ref: Ref[Task, List[String]]): Task[Option[String]] =

ref.modify(list => (list.drop(1), list.headOption))

def put(ref: Ref[Task, List[String]], s: String): Task[Unit] =

ref.modify(list => (s :: list, ()))

val program: Task[Unit] =

for {

_ <- stringsRef.flatMap(put(_, "Scala"))

element <- stringsRef.flatMap(take)

} yield println(element)

program.runSyncUnsafe() // prints None: every stringsRef.flatMap creates a new, empty Ref

cats.effect.concurrent.Ref

val program: Task[Unit] =

for {

ref <- stringsRef

_ <- put(ref, "Scala")

element <- take(ref)

} yield println(element)

program.runSyncUnsafe() // prints Some(Scala)

- The only way to share state is to pass it as a parameter. :)
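Following that rule, a Ref created once and passed to many concurrent updaters loses no updates, because update is atomic. A sketch assuming Monix 3.x with cats-effect 2 (scala-cli directives pin Monix 3.4.1; Task.parSequenceUnordered exists from Monix 3.2 on); incrementConcurrently is a hypothetical helper.

```scala
//> using scala 2.13
//> using dep io.monix::monix:3.4.1
import cats.effect.concurrent.Ref
import monix.eval.Task
import monix.execution.Scheduler.Implicits.global

// The Ref is created once and passed to every task that needs it
def incrementConcurrently(counter: Ref[Task, Int], times: Int): Task[Unit] =
  Task.parSequenceUnordered(List.fill(times)(counter.update(_ + 1))).map(_ => ())

val program: Task[Int] =
  for {
    counter <- Ref.of[Task, Int](0)
    _       <- incrementConcurrently(counter, 1000)
    value   <- counter.get
  } yield value

val total = program.runSyncUnsafe() // 1000: no update is lost
```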

cats.effect.concurrent.Deferred

abstract class Deferred[F[_], A] {

def get: F[A]

def complete(a: A): F[Unit]

}

val program =

for {

stopRunning <- Deferred[Task, Unit]

_ <- runImportantService(healthcheck).start

_ <- Task.race(stopRunning.get, runOtherService.loopForever)

} yield ()

// somewhere in ImportantService

stopRunning.complete(())
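A self-contained variant of the signalling pattern above: one fiber waits on get (semantically blocked, no thread is held) until another completes the Deferred, and a second complete fails because a Deferred can be completed only once. A sketch assuming Monix 3.x with cats-effect 2 (scala-cli directives pin Monix 3.4.1); the message strings are arbitrary.

```scala
//> using scala 2.13
//> using dep io.monix::monix:3.4.1
import cats.effect.concurrent.Deferred
import monix.eval.Task
import monix.execution.Scheduler.Implicits.global
import scala.concurrent.duration._

val program: Task[(String, Boolean)] =
  for {
    signal <- Deferred[Task, String]
    // waits until the signal is completed, without blocking a thread
    waiter <- signal.get.map(msg => s"received: $msg").start
    _      <- Task.sleep(100.millis).flatMap(_ => signal.complete("go"))
    result <- waiter.join
    // completing an already completed Deferred fails
    second <- signal.complete("again").attempt
  } yield (result, second.isLeft)

val (message, secondFailed) = program.runSyncUnsafe()
```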