Navigation in 2.5D spaces using Natural User Interfaces

Avraam Mavridis

Supervisor: Erik Berglund

Presentation Outline

- Natural User Interfaces

- Kinect

- Unity

- Navigation Methods

- Evaluation Techniques

- Future Work

- Questions

Natural User Interfaces

The term Natural User Interface (NUI) is used by developers to refer to human-machine interfaces that are effectively invisible to their users, and that remain invisible as users learn increasingly complex interactions with the machine.

The main design guidelines that a NUI must follow:

- It must create an experience that feels natural to both novice and expert users

- It must create an experience that users can feel as an extension of their body

- The user interface must consider the context and take advantage of it

- Avoid copying existing user interface patterns

The design of a NUI can mimic an activity with which the user is already comfortable.

Users are impatient. They expect interfaces with minimal response time and do not want to wait before they can start a new interaction.

The development team should also take into consideration the constraints of the context and environment in which the system will be used, since these may be barriers to a successful NUI implementation.

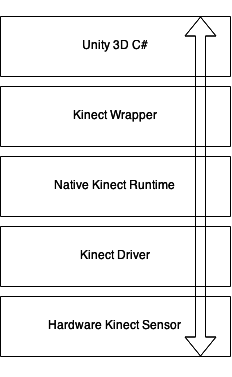

Kinect

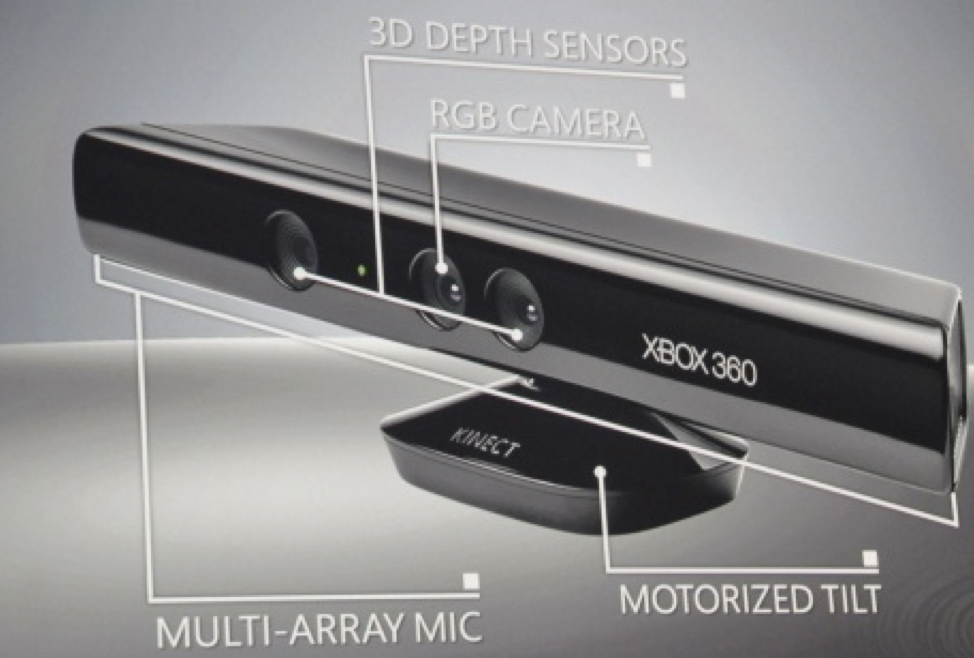

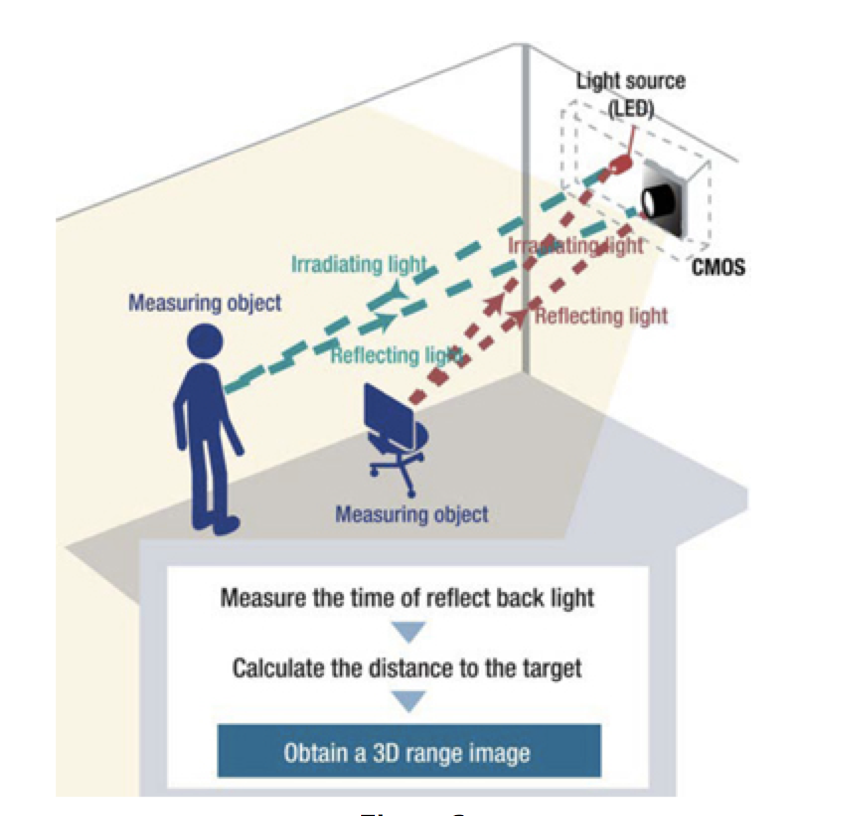

The Kinect is a motion-sensing device consisting of an RGB camera, a depth sensor and a microphone array that work in tandem. This setup provides 3D motion capture, facial recognition and voice recognition.

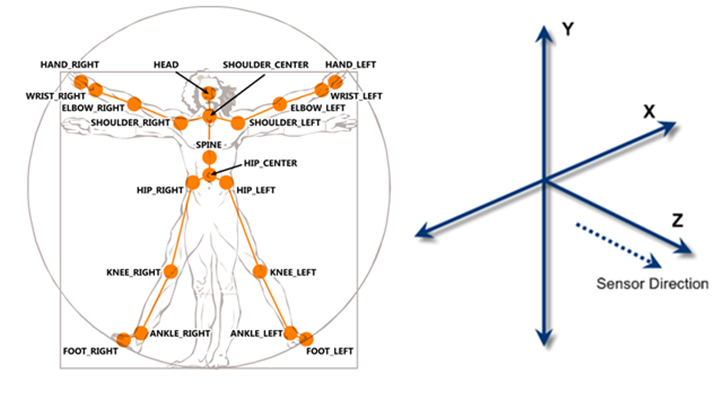

Kinect Joint Points

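    namespace HumanBones
    {
        // Joint order mirrors the 20-joint skeleton of the Kinect for Windows SDK 1.x,
        // so a Bones value can be used directly as an index into per-joint arrays.
        public enum Bones
        {
            HipCenter = 0,
            Spine,
            ShoulderCenter,
            Head,
            ShoulderLeft,
            ElbowLeft,
            WristLeft,
            HandLeft,
            ShoulderRight,
            ElbowRight,
            WristRight,
            HandRight,
            HipLeft,
            KneeLeft,
            AnkleLeft,
            FootLeft,
            HipRight,
            KneeRight,
            AnkleRight,
            FootRight,
            PositionCount   // number of joints (20), not a joint itself
        }

        public class BonesIndex
        {
            // Converts a joint to its array index
            public static int getBoneIndex(Bones bone)
            {
                return (int)bone;
            }
        }
    }

Mapping Kinect Joint Points in C#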

Kinect’s middleware is responsible for skeletal tracking. It segments the user’s body into a skeleton made up of a series of joints, and these joint positions are then exposed to developers through the Kinect SDK.
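The navigation code indexes joints as sw.bonePos[0, (int)Bones.HipCenter], which suggests a two-dimensional array indexed first by tracked player and then by joint. The following is a minimal sketch of such a store that runs outside Unity. JointPosition (a stand-in for Unity's Vector3), SkeletonFrame and the limit of two tracked players are assumptions made for illustration. This is not the wrapper actually used in this work.

```csharp
using System;

// Stand-in for UnityEngine.Vector3 so the sketch runs outside Unity (assumption).
public struct JointPosition
{
    public float x, y, z;
    public JointPosition(float x, float y, float z) { this.x = x; this.y = y; this.z = z; }
}

// Same joint order as the HumanBones.Bones enum above.
public enum Bones
{
    HipCenter = 0, Spine, ShoulderCenter, Head,
    ShoulderLeft, ElbowLeft, WristLeft, HandLeft,
    ShoulderRight, ElbowRight, WristRight, HandRight,
    HipLeft, KneeLeft, AnkleLeft, FootLeft,
    HipRight, KneeRight, AnkleRight, FootRight,
    PositionCount
}

// Hypothetical joint store: first index = tracked player, second index = joint.
public class SkeletonFrame
{
    public const int MaxPlayers = 2;   // assumed number of fully tracked users

    public readonly JointPosition[,] bonePos =
        new JointPosition[MaxPlayers, (int)Bones.PositionCount];

    public JointPosition Get(int player, Bones bone)
    {
        return bonePos[player, (int)bone];
    }

    public void Set(int player, Bones bone, JointPosition position)
    {
        bonePos[player, (int)bone] = position;
    }
}
```

Indexing by enum keeps the navigation code readable (Bones.HandLeft rather than a bare 7) while array access stays cheap.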

2.5D

2.5D ("two-and-a-half-dimensional"), ¾ perspective and pseudo-3D are terms, used mainly in the video game industry, that describe either 2D graphical projections and similar techniques that make a series of images (or scenes) appear three-dimensional (3D) when in fact they are not, or gameplay in an otherwise three-dimensional video game that is restricted to a two-dimensional plane.

2.5D

The term "2.5D" refers to 3D games that use polygonal graphics to render the world and/or characters, but whose gameplay is restricted to a 2D plane. Examples include League of Legends, Street Fighter IV and Mortal Kombat.

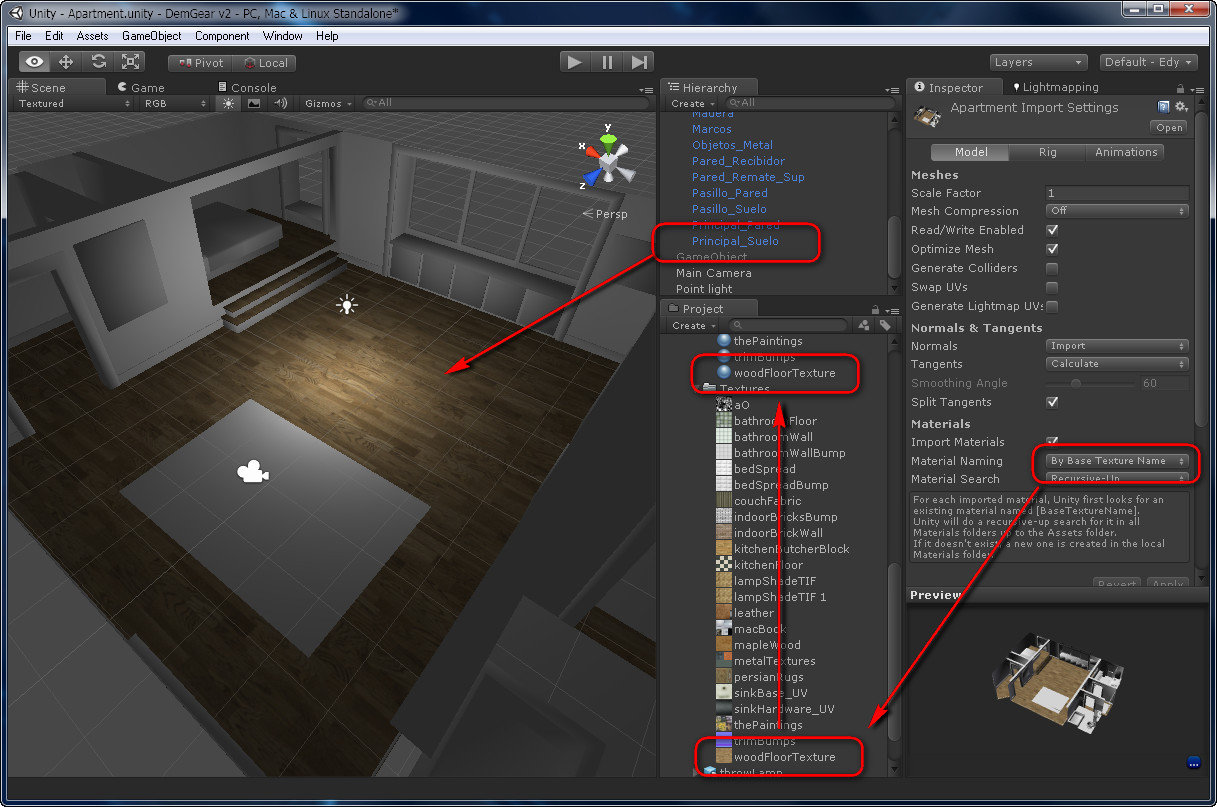

Unity 3D

Unity is a game creation system, comprising a game engine and an IDE, that was launched in 2005 (Unity3D, 2005). It supports a range of platforms, including Windows, Linux, iOS and Android. Unity has been used together with motion-capture sensors in both academic and commercial settings.

Unity 3D

The setup of my work

Navigation Methods

Method 1

Method 1: steering

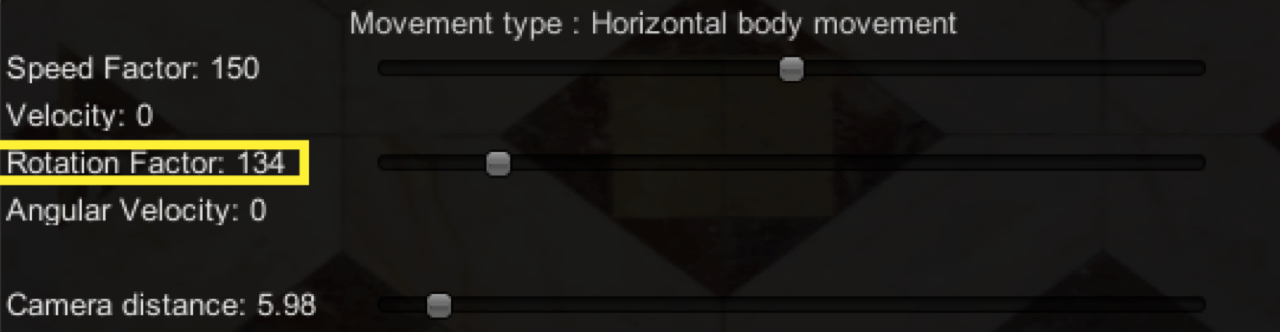

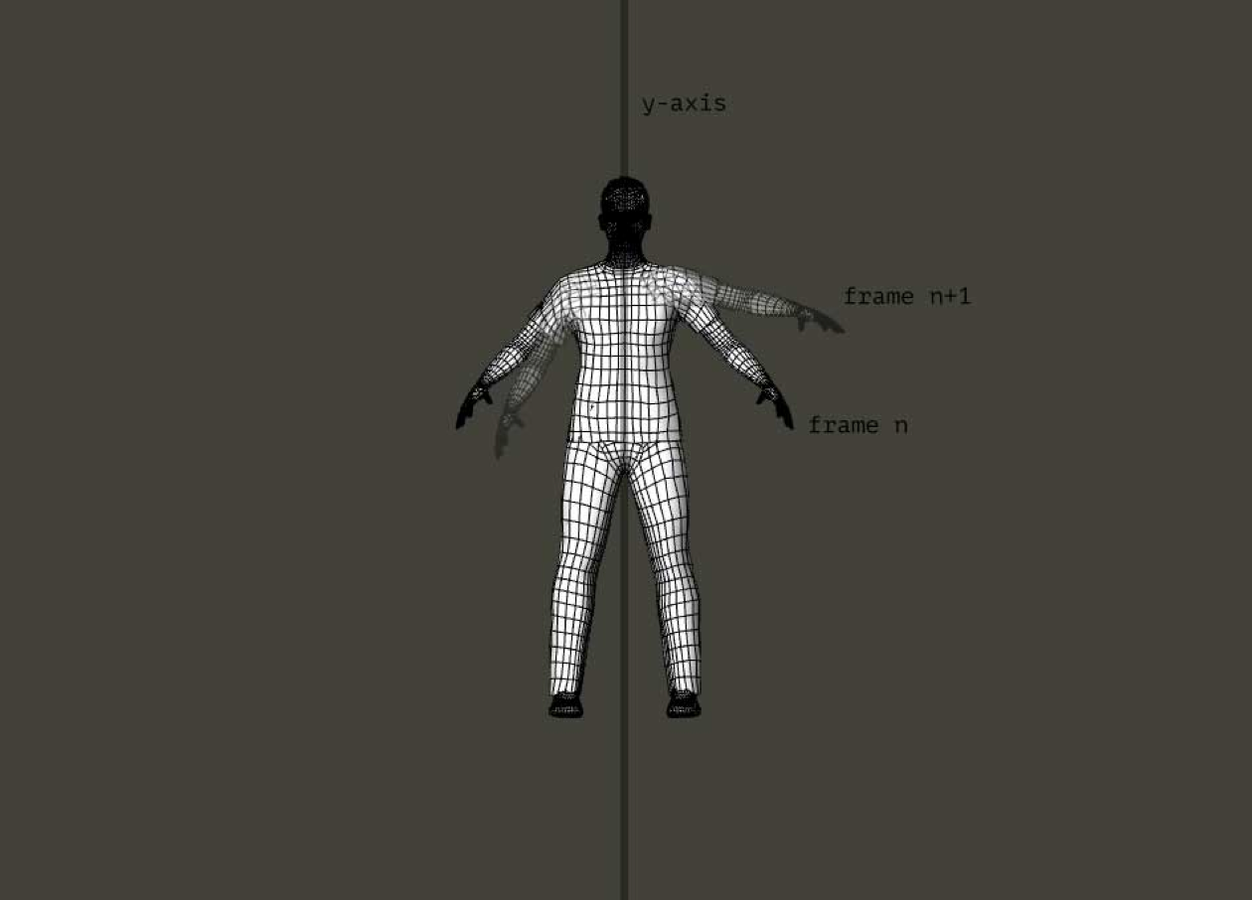

We apply torque to the character based on the user’s movement along the x-axis. I calculated the difference between the user’s position in one frame and his/her position in the next frame. The further the user moves along the x-axis between two frames, the more torque we apply to the character.

Method 1: steering

    // Torque around the y-axis, proportional to the hip's x-displacement since the last frame
    NewHipXPosition = sw.bonePos[0, (int)Bones.HipCenter].x;
    rigidbody.AddTorque(new Vector3(0, rotationFactor * (NewHipXPosition - OldHipPosition), 0));

I chose the HipCenter joint over the feet joints because there are cases in which the feet are not visible to Kinect, e.g. when the user is too close to the sensor.
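The following is a minimal sketch of the per-frame bookkeeping behind this steering rule, runnable outside Unity. Only the formula rotationFactor * (NewHipXPosition - OldHipPosition) comes from the slides. The class name HipSteering and its members are hypothetical. The sketch returns only the y-component of the torque. In Unity that value would go into rigidbody.AddTorque(new Vector3(0, torqueY, 0)).

```csharp
using System;

// Hypothetical helper: remembers the previous HipCenter.x and returns the
// y-torque for the current frame as rotationFactor * (newHipX - oldHipX).
public class HipSteering
{
    private float oldHipX;
    private bool hasPrevious;

    public float RotationFactor { get; set; }   // user-settable, as in the slides

    public HipSteering(float rotationFactor)
    {
        RotationFactor = rotationFactor;
    }

    public float TorqueY(float newHipX)
    {
        // No previous frame yet, so there is no displacement to turn into torque
        float torque = hasPrevious ? RotationFactor * (newHipX - oldHipX) : 0f;

        // The current frame becomes the "old" position for the next call
        oldHipX = newHipX;
        hasPrevious = true;
        return torque;
    }
}
```

Moving right gives a positive torque and moving left gives a negative one. The sign of the x-displacement therefore selects the turning direction.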

Method 1: steering


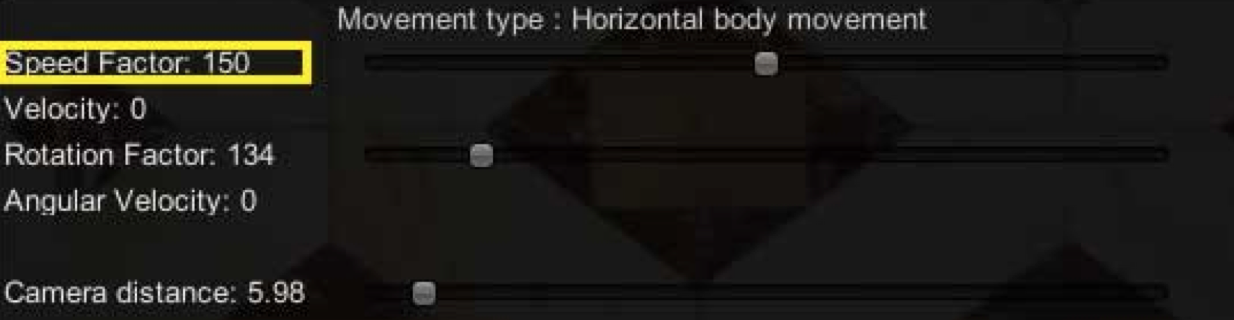

If the user hasn’t moved significantly, I set the avatar's velocity to zero, forcing the avatar to stop rotating. For the purposes of the thesis and my experiments, I added a rotationFactor to the equation. This factor multiplies the difference in the user’s position and can be set by the user.
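The "hasn't moved significantly" check can be sketched as a small decision function, again outside Unity. The threshold minimumMovement is a hypothetical tuning parameter, because the slides do not give a value. The names SteeringDecision and SteeringStopRule are also illustrative.

```csharp
using System;

// Result of one steering step (hypothetical type).
public struct SteeringDecision
{
    public bool Stop;      // true: the caller should zero the avatar's velocity
    public float TorqueY;  // torque around the y-axis when not stopping

    public SteeringDecision(bool stop, float torqueY)
    {
        Stop = stop;
        TorqueY = torqueY;
    }
}

public static class SteeringStopRule
{
    // Stops when the hip moved less than minimumMovement (assumed threshold)
    // since the last frame; otherwise applies rotationFactor * delta.
    public static SteeringDecision Decide(float oldHipX, float newHipX,
                                          float rotationFactor, float minimumMovement)
    {
        float delta = newHipX - oldHipX;
        if (Math.Abs(delta) < minimumMovement)
        {
            return new SteeringDecision(true, 0f);
        }
        return new SteeringDecision(false, rotationFactor * delta);
    }
}
```

In Unity, Rigidbody.velocity is the linear velocity. Stopping the rotation itself would therefore also mean zeroing Rigidbody.angularVelocity.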

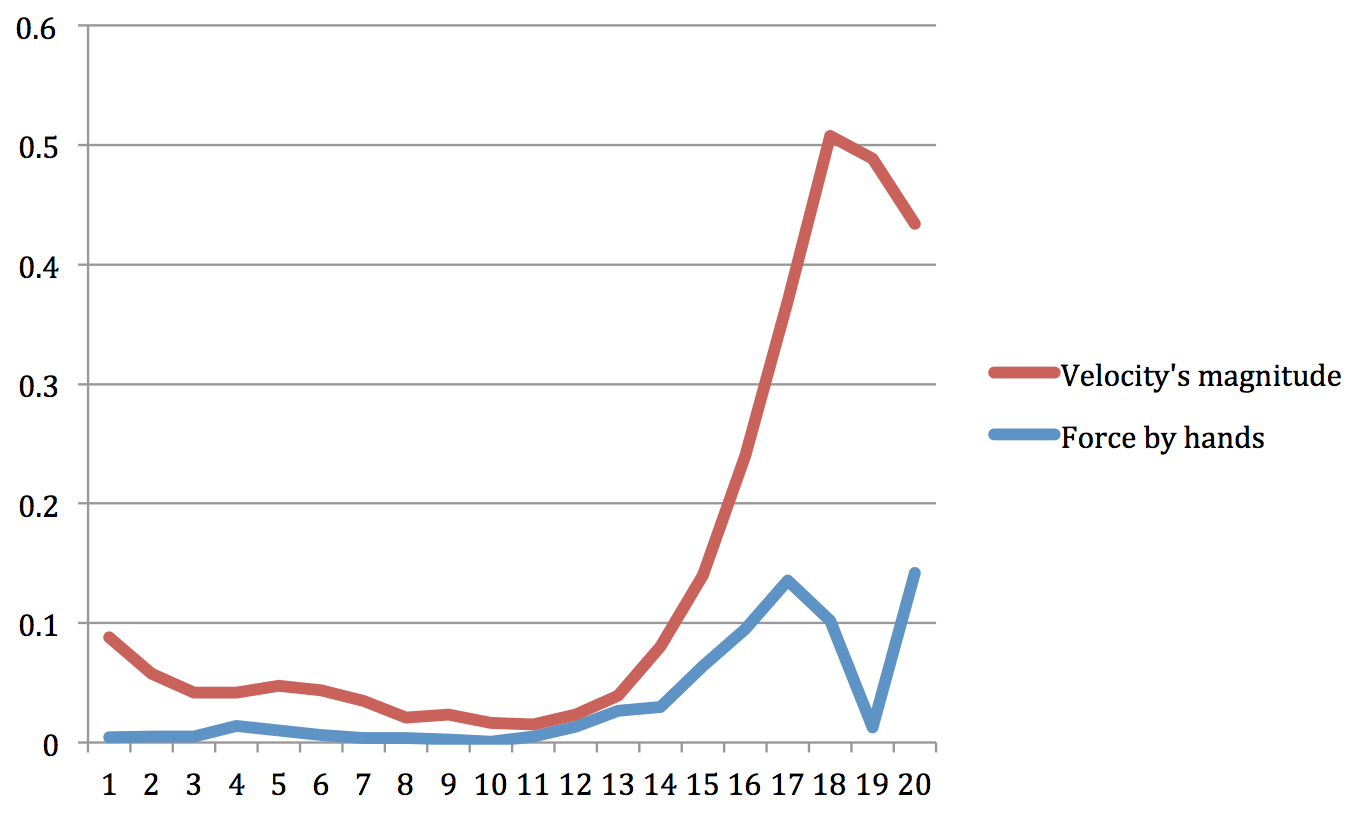

Method 1: forward movement

For the forward movement, we first calculate the difference between the position of the left hand in one frame and its position in the next frame, and do the same for the right hand. We then take the larger of the two distances covered by the left and right hands.

Method 1: forward movement

    NewLeftHandPosition = sw.bonePos[0, (int)Bones.HandLeft].y;
    NewRightHandPosition = sw.bonePos[0, (int)Bones.HandRight].y;

    // Absolute vertical distance covered by each hand since the previous frame
    DifferenceBetweenOldAndNewRightHandPosition = Math.Abs(OldRightHandPosition - NewRightHandPosition);
    DifferenceBetweenOldAndNewLeftHandPosition = Math.Abs(OldLeftHandPosition - NewLeftHandPosition);

    // The hand that moved more determines the force
    forceByHands = Math.Max(DifferenceBetweenOldAndNewRightHandPosition, DifferenceBetweenOldAndNewLeftHandPosition);

    // Push the avatar along its local forward (z) axis
    rigidbody.AddForce(rigidbody.transform.TransformDirection((new Vector3(0, 0, 1)) * forceByHands * speedFactor));

There is also a speed factor that can be set by the user. It is used for the same reason as the rotation factor explained previously.
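The hand-driven forward motion can be sketched as a self-contained class that also shows the old hand positions being carried over between frames. Only the max-of-absolute-differences rule and speedFactor come from the slides. HandForwardMotion and ForwardForce are hypothetical names.

```csharp
using System;

// Hypothetical helper for Method 1 forward motion: the force magnitude is the
// larger vertical hand displacement since the previous frame, times speedFactor.
public class HandForwardMotion
{
    private float oldLeftY, oldRightY;
    private bool hasPrevious;

    public float SpeedFactor { get; set; }   // user-settable, as in the slides

    public HandForwardMotion(float speedFactor)
    {
        SpeedFactor = speedFactor;
    }

    public float ForwardForce(float newLeftY, float newRightY)
    {
        float force = 0f;
        if (hasPrevious)
        {
            float leftDelta = Math.Abs(oldLeftY - newLeftY);
            float rightDelta = Math.Abs(oldRightY - newRightY);
            force = Math.Max(leftDelta, rightDelta) * SpeedFactor;
        }

        // Remember this frame's hand heights for the next call
        oldLeftY = newLeftY;
        oldRightY = newRightY;
        hasPrevious = true;
        return force;
    }
}
```

In Unity the result would be applied with rigidbody.AddForce(rigidbody.transform.TransformDirection(Vector3.forward) * force). Using the maximum of the two hands, rather than their sum, means one hand swinging alone is enough to move the avatar.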

Method 1: forward movement

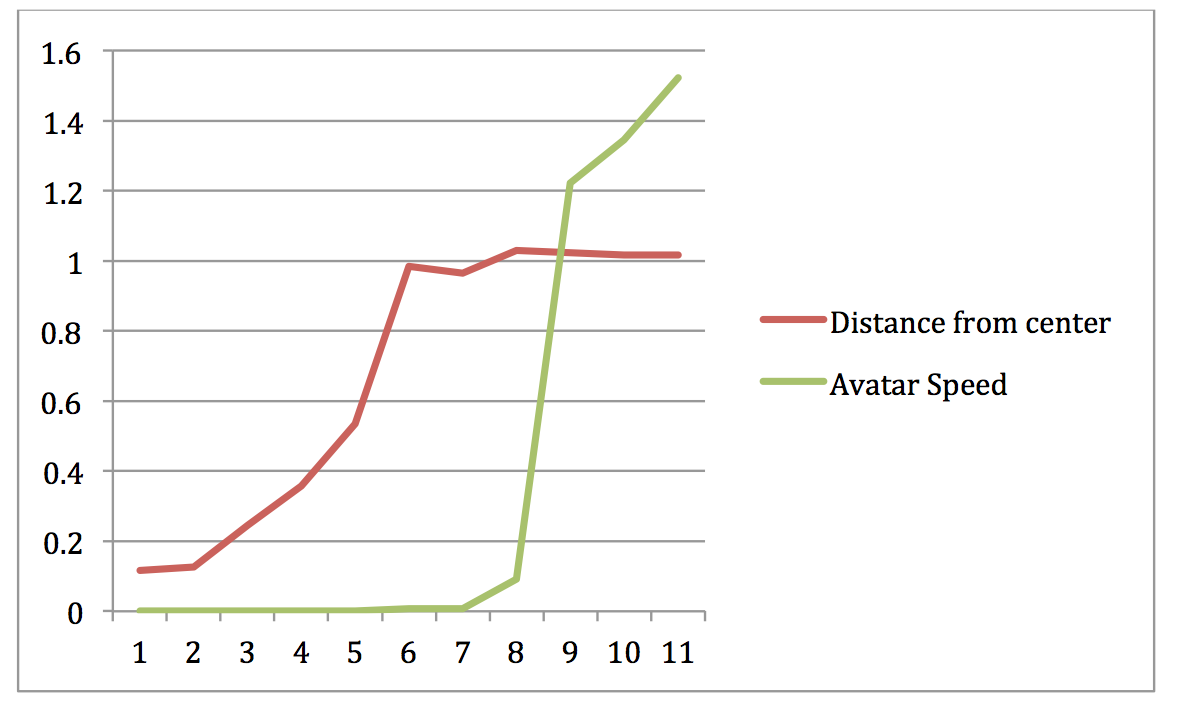

As the chart above shows, increasing the force through hand movement increases the rigidbody’s velocity. At frame 16 the user stopped moving his hands, but the rigidbody still has some velocity, which then starts decreasing. At frame 19 the player starts moving his hands again, but the velocity magnitude does not increase because the rigidbody has collided with another game object.

Method 1: constraints

The most significant problem with this method is rotating the character when the user is at the edges of Kinect’s visible field, e.g. when the user is at the left edge of the visible field and wants to rotate the character to the right.

Method 2

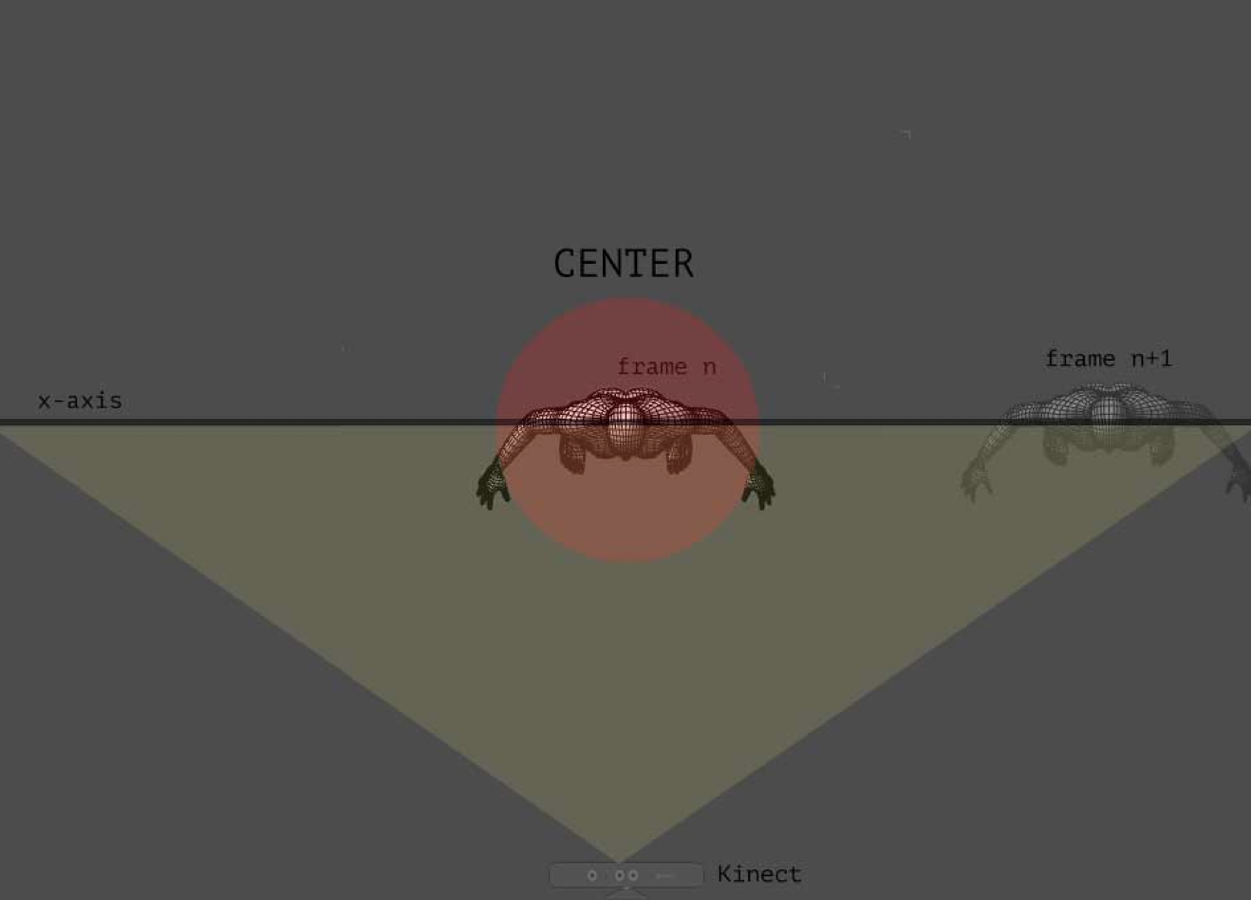

Method 2: steering

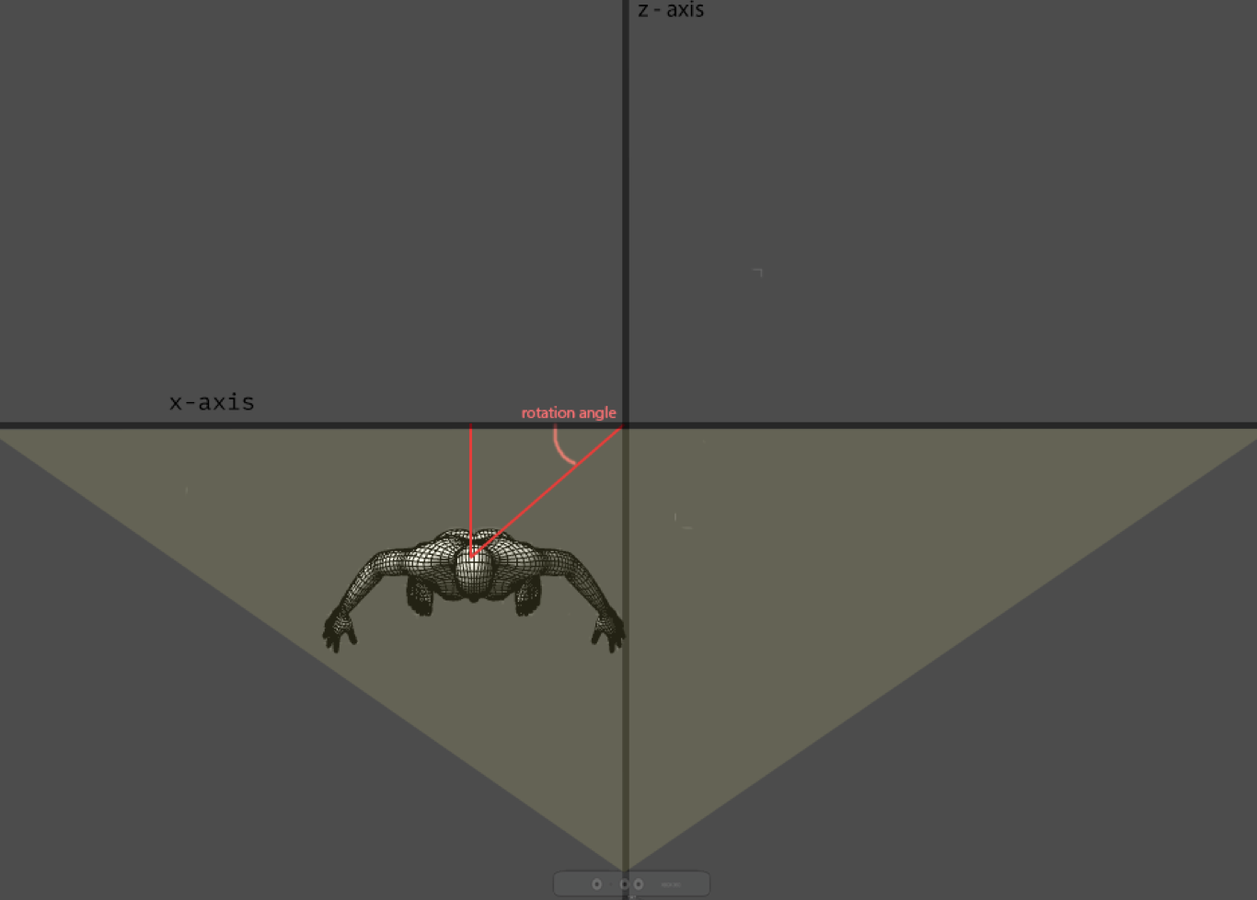

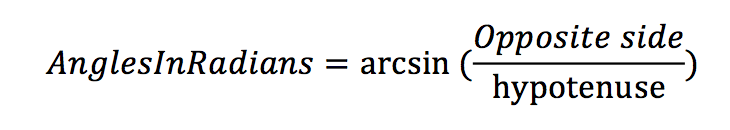

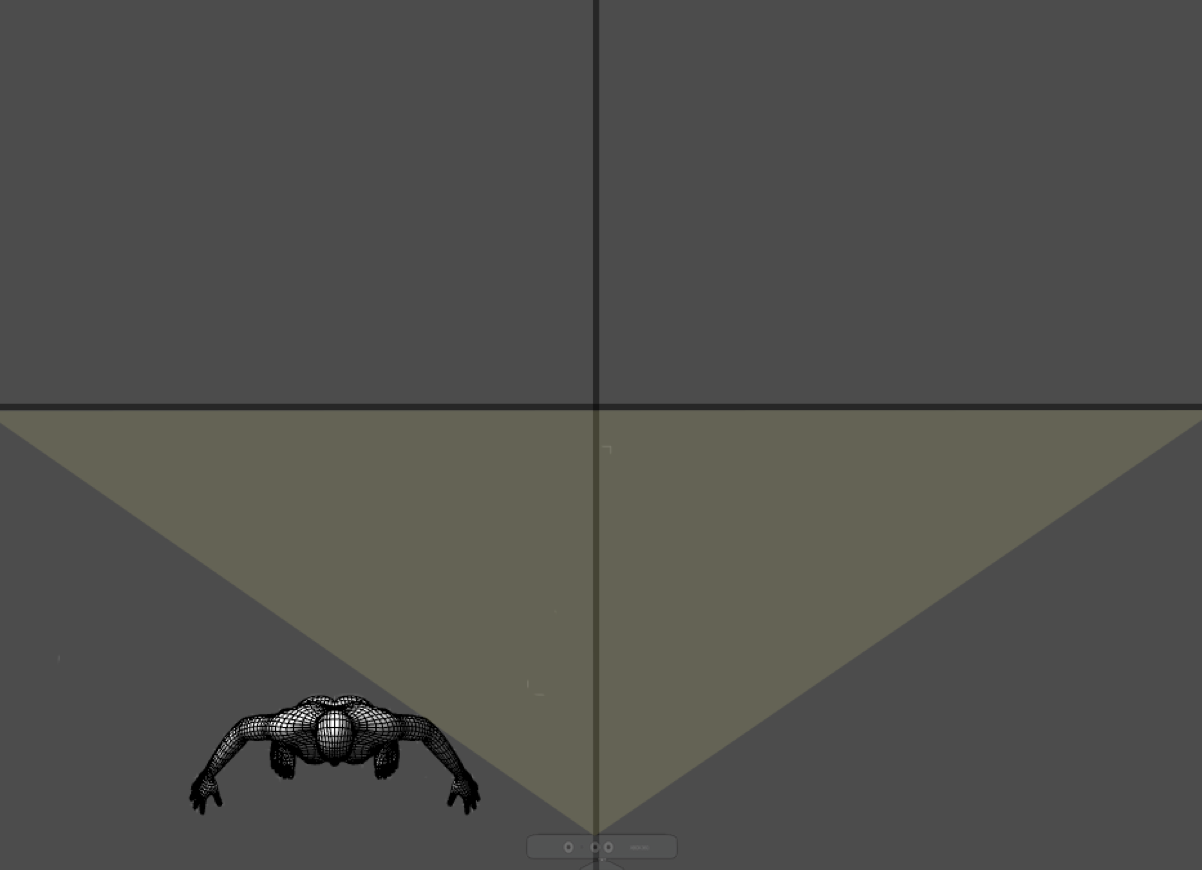

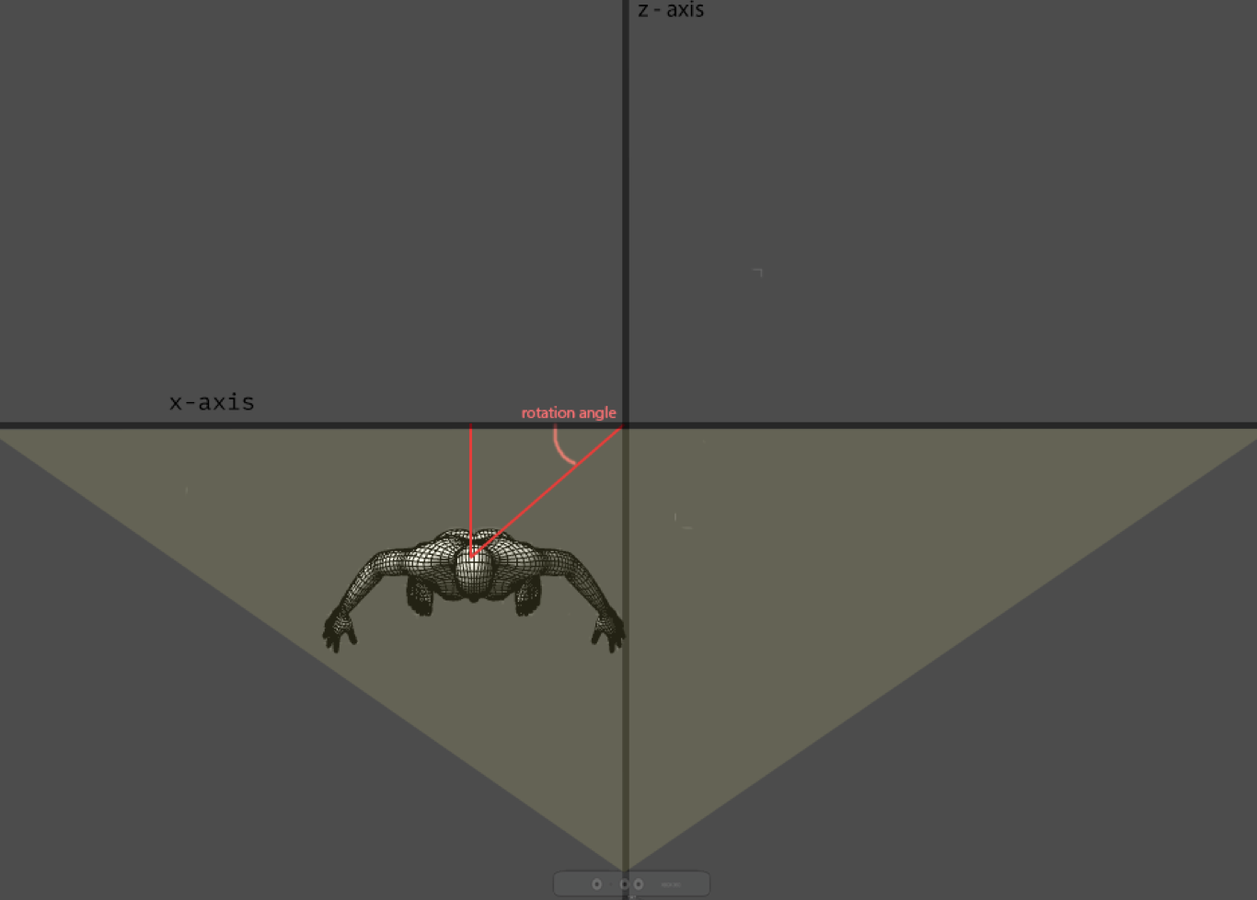

In the second method I rotate the avatar based on the user’s position relative to the center point, i.e. the point where HipCenter.x and HipCenter.z are both zero. To calculate the rotation angle I used the following simple formula:
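The formula itself appears only in the slide figures. The version below is reconstructed from the code that follows, so treat it as an assumption. Here x and z denote HipCenter.x and HipCenter.z measured relative to the center point:

$$
h = \sqrt{x^{2} + z^{2}}, \qquad \theta = \frac{180^\circ}{\pi}\,\arcsin\!\left(\frac{z}{h}\right), \qquad \psi = 90^\circ - \lvert \theta \rvert
$$

Here ψ is the yaw angle, in degrees, passed to Quaternion.Euler(0, ψ, 0). It is undefined when h = 0, i.e. when the user stands exactly on the center point.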

The avatar rotates by the same number of degrees as the user, in the same direction.
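The following is a sketch of the same angle computation as plain C#, runnable outside Unity. It follows the Method 2 code and converts the Math.Asin result from radians to degrees, which Quaternion.Euler expects. The class name CenterSteering is hypothetical. So is returning 0 when the user is exactly at the center, where the angle is undefined.

```csharp
using System;

public static class CenterSteering
{
    // hipX, hipZ: HipCenter coordinates relative to the center point (assumption).
    // Returns 90 - |asin(z / h)| in degrees, as in the Method 2 code.
    public static double YawDegrees(double hipX, double hipZ)
    {
        double hypotenuse = Math.Sqrt(hipX * hipX + hipZ * hipZ);
        if (hypotenuse == 0.0)
        {
            return 0.0;   // user exactly at the center: keep facing forward (assumption)
        }

        // Clamp to guard Math.Asin against tiny floating-point overshoot past 1
        double ratio = Math.Max(-1.0, Math.Min(1.0, hipZ / hypotenuse));
        double thetaDegrees = Math.Asin(ratio) * 180.0 / Math.PI;
        return 90.0 - Math.Abs(thetaDegrees);
    }
}
```

This formula always yields a value between 0 and 90 degrees, and it gives the same result for x and −x. A signed alternative, not the one used here, is Math.Atan2(hipX, hipZ) * 180.0 / Math.PI. It matches the formula for x ≥ 0 and z ≥ 0 and becomes negative for x < 0.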

Method 2: steering

For the rotation in this method I use the Quaternion.Euler method, for example:

    var rotation = Quaternion.Euler(0, 30, 0);   // 30 degrees around the y-axis

Example:

    double hypotenusePower2 = Math.Pow(NewHipXPosition, 2) + Math.Pow(NewHipZetPosition, 2);
    double hypotenuse = Math.Sqrt(hypotenusePower2);
    double angle = Math.Asin(NewHipZetPosition / hypotenuse);   // radians
    double degrees = angle * 180.0 / Math.PI;                   // Quaternion.Euler expects degrees
    rigidbody.transform.rotation = Quaternion.Euler(0, (float)(90 - Math.Abs(degrees)), 0);

Method 2: forward movement

Method 2 keeps the same forward-movement technique as Method 1.

Method 2: constraints

During the evaluation of the method, we noticed many cases in which the player moved outside Kinect’s visible field. The player kept moving but the avatar remained static, which led to confusion.

Method 3

Method 3: steering

Method 3 uses the same steering technique as Method 2.

Method 3: forward movement

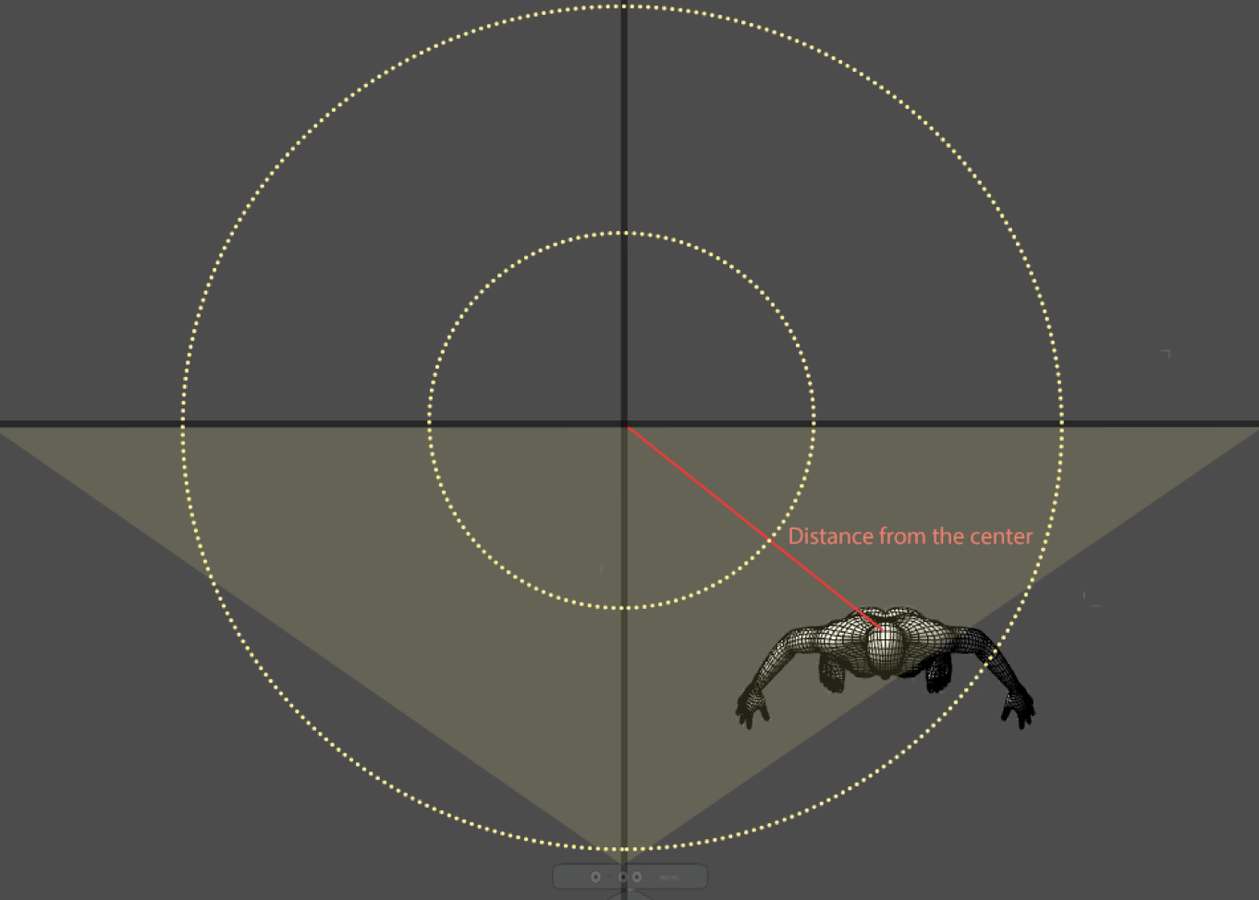

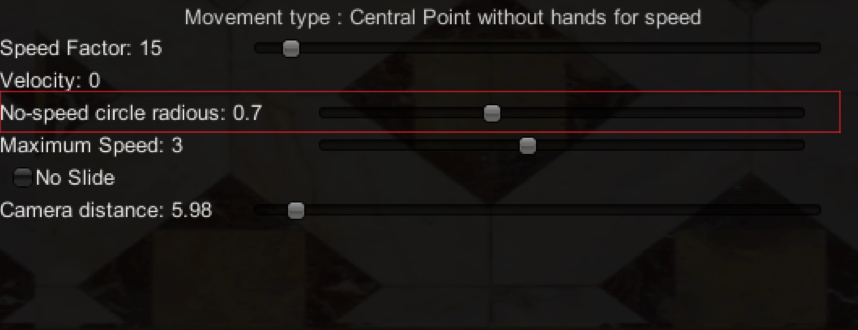

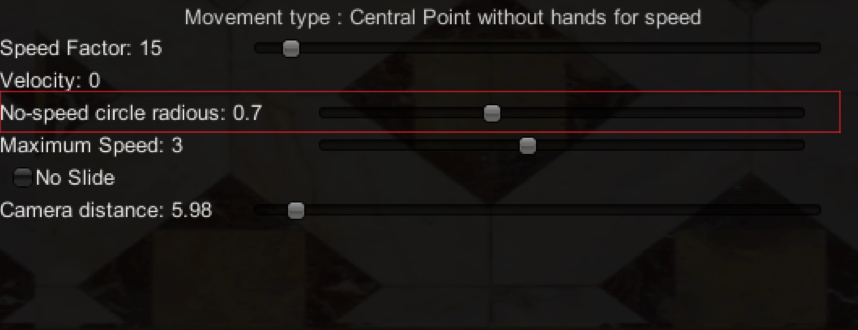

In this method the user does not use his/her hands for movement. The speed of the forward movement is calculated from the user’s distance from the center of Kinect’s view. In addition, there is a circle inside which no force is applied to the avatar.
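The following is a sketch of the Method 3 force rule, runnable outside Unity. Measuring the distance from HipCenter in the x-z plane is an assumption, because the slides only say "distance from the center". The names DistanceForwardMotion and ForwardForce are hypothetical. The comparison against the circle radius and the product speedFactor * distance follow the slide code.

```csharp
using System;

public static class DistanceForwardMotion
{
    // Returns the forward force magnitude: zero inside the inner circle,
    // speedFactor * distance outside it.
    public static double ForwardForce(double hipX, double hipZ,
                                      double circleRadius, double speedFactor)
    {
        // Distance of the user from the center point (assumed x-z plane)
        double distance = Math.Sqrt(hipX * hipX + hipZ * hipZ);

        // Mirrors `if (force > PlayerController.circleradious)` from the slides
        if (distance > circleRadius)
        {
            return speedFactor * distance;
        }
        return 0.0;
    }
}
```

With this rule the force jumps from 0 to speedFactor * circleRadius as the user crosses the circle. A variant using (distance - circleRadius) would start from zero at the edge instead. That variant is an alternative, not what the slides describe.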

Method 3: forward movement

The user can set the radius of the inner circle.

Force is applied to the avatar only if the user is outside the inner circle.

    // force: the user's distance from the center of Kinect's view
    if (force > PlayerController.circleradious)
    {
        rigidbody.AddForce(rigidbody.transform.TransformDirection((new Vector3(0, 0, 1)) * speedFactor * force));
    }

Method 3: forward movement


Example

Method 3: constraints

Method 3 has the same steering limitations as Method 2, and users also found it difficult to determine the optimal speed factor for their session. Another difficulty was choosing the radius of the circle inside which no force was applied to the avatar.

Evaluation Techniques

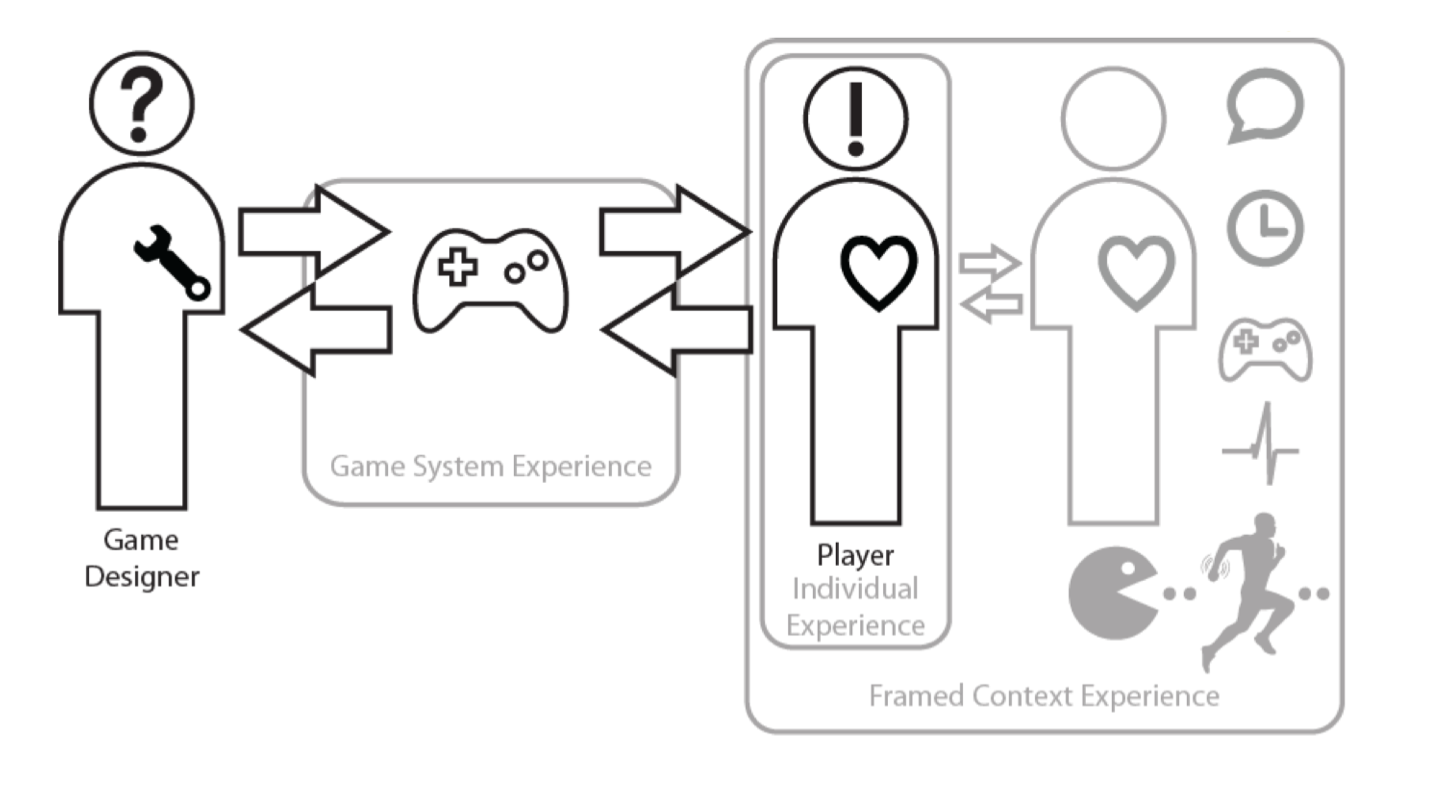

What are the ingredients that lead to a successful game release?

Gameplay is an important factor

The term gameplay refers to the specific ways in which players interact with a game: the patterns, goals and challenges used in a virtual world to arouse the interest of the users and drive them to act.

Who should evaluate an implementation?

It is important to evaluate a game with people outside the team that designs and develops it. The game’s developers and designers are more aware of the actions they are allowed to perform, and they have spent hours testing the game and balancing its variables, which affects their whole gameplay experience.

Gameplay

- Manipulation rules: These are the rules that determine what the player can do inside the game, i.e. the allowed actions.

- Goal rules: These are the goals that the user should achieve inside the game.

- Metarules: These are the actions that the user can take to modify the game.

Gameplay in my implementation

- The user’s ability to navigate inside a 2.5D virtual space constitutes the manipulation rules.

- The player’s need to escape from the maze while avoiding the guards constitutes the goal rules.

- The user’s ability to change the movement technique (the way he/she interacts with the NUI to navigate the avatar) constitutes the metarules of the game.

Proposed evaluation techniques

- Questionnaire: Simple forms, filled in by the users at the end of their session, that try to measure user satisfaction on a scale (e.g. negative, neutral, positive).

- Eye tracking: Used to measure a user's attention levels during a specified action or task.

- Interviews: Discussions with the users about their experience after the end of the game session, to gather user feedback.

- RITE Method: A method designed and executed by Dennis Wixon at Microsoft Labs, in which users execute a defined test script while following a verbal (think-aloud) protocol (Wixon, 2003).

- Advanced sensor techniques: Use of sensors to measure various physical and physiological characteristics of the participant while he/she is playing the game, such as electromyography (electrical activation of muscles) and electroencephalography (brain wave measurement).

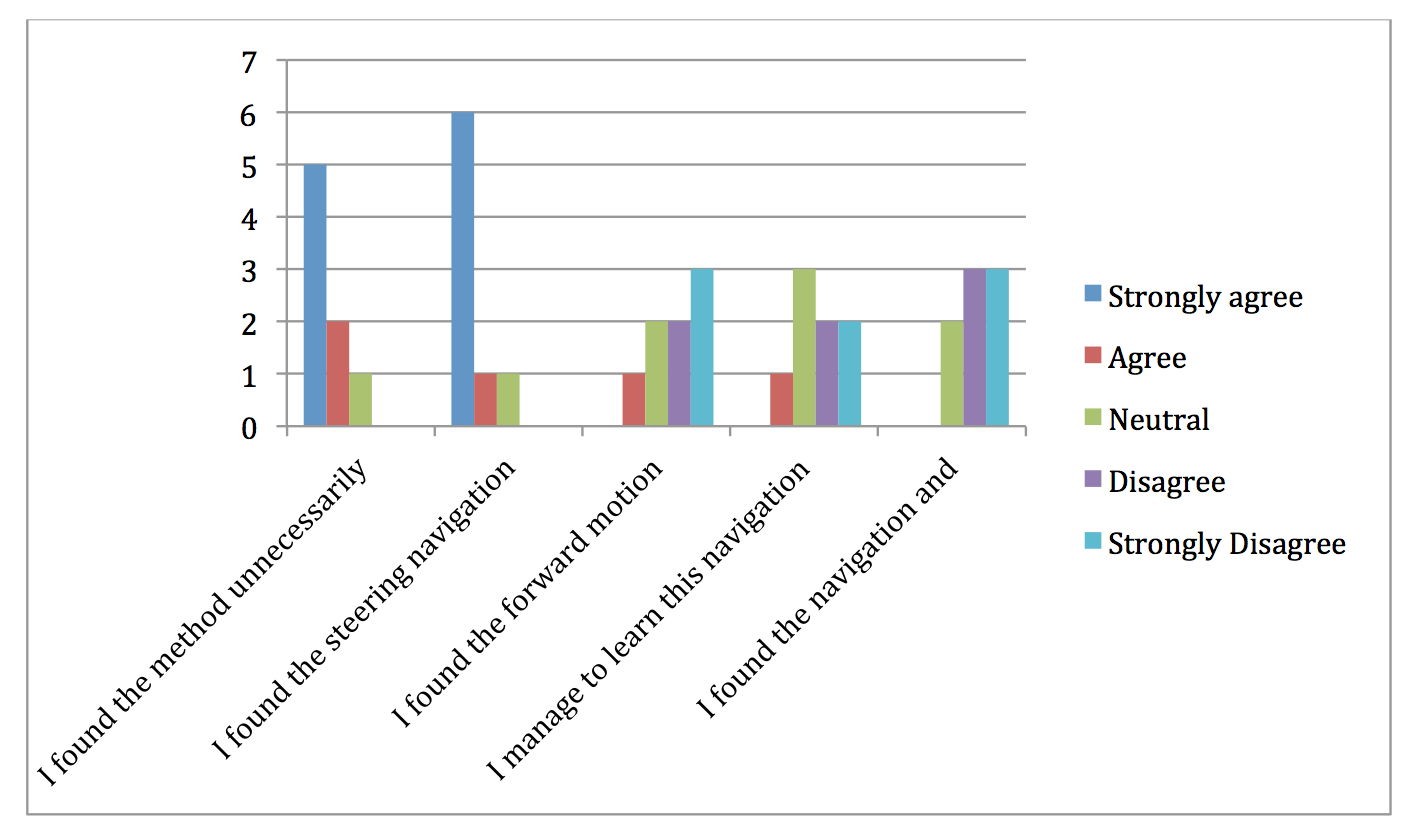

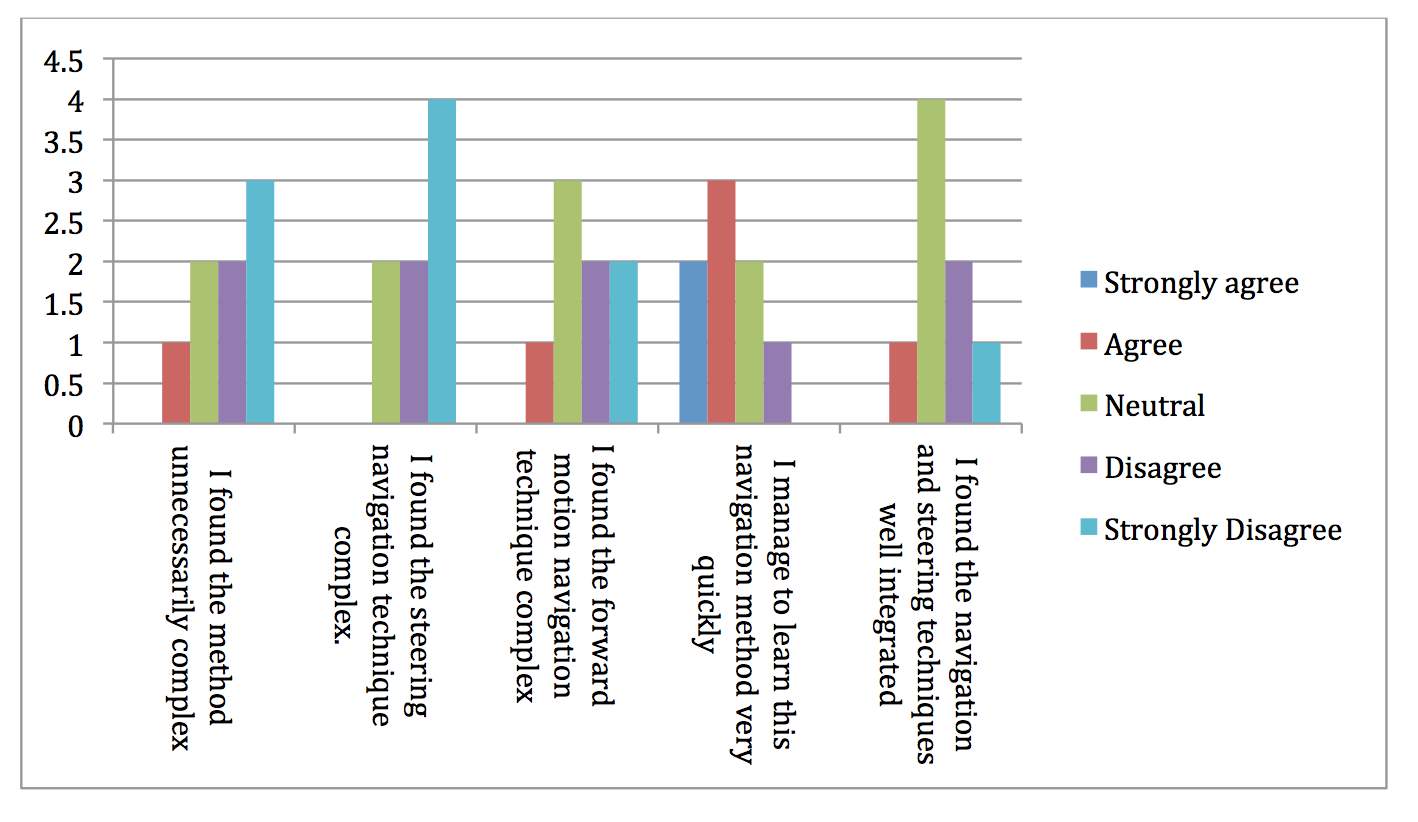

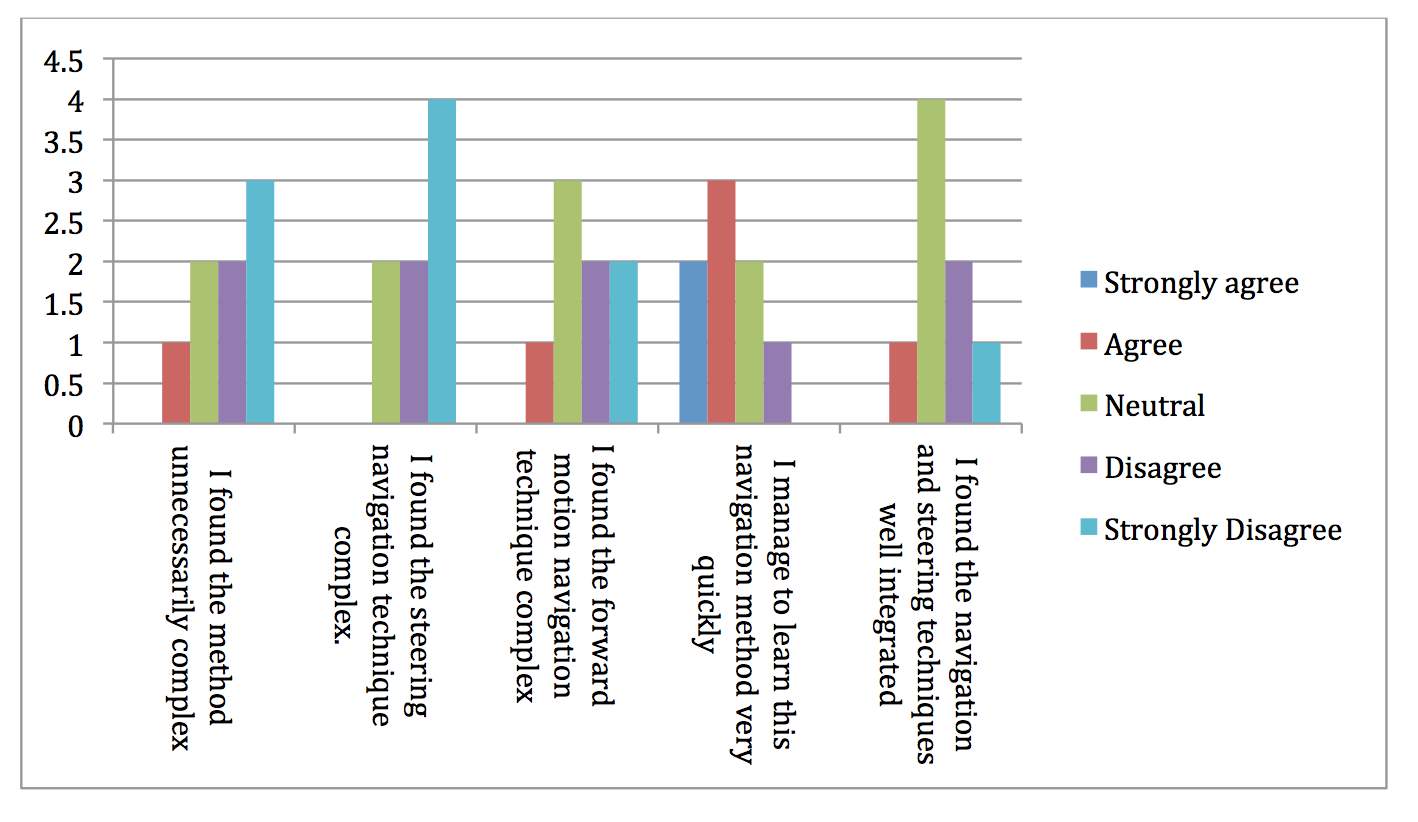

Evaluation of navigation methods

To evaluate the navigation methods, which are the core of the gameplay in my work, I used a questionnaire. It is a variation of the System Usability Scale (SUS) (Brooke, 1996) and has 15 questions, 5 for each movement method, that try to identify the emotions and the level of satisfaction of the player.

1. I found the method unnecessarily complex.

2. I found the steering navigation technique complex.

3. I found the forward motion navigation technique complex.

4. I managed to learn this navigation method very quickly.

5. I found the navigation and steering techniques well integrated.

Evaluation of navigation methods

The evaluations took place during meetups of the Game Developers Berlin meetup group. The user group was small (8 people participated and answered the questionnaire). The answers are given on a 5-point scale ranging from Strongly Agree to Strongly Disagree.
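The slides do not say how the answers were aggregated. One possible scheme, offered purely as an assumption, works as follows:

- Code Strongly Disagree to Strongly Agree as 1 to 5.
- Reverse-code the three negatively worded items (questions 1 to 3, about complexity) as 6 minus the score.
- Average all answers, so that a higher mean always means a more favourable view of the method.

QuestionnaireScoring and MeanScore are hypothetical names.

```csharp
using System;

public static class QuestionnaireScoring
{
    // Items 1-3 ask about complexity (negative wording); items 4-5 are positive.
    private static readonly bool[] IsNegativeItem = { true, true, true, false, false };

    // responses[participant][item], each answer coded 1 (Strongly Disagree) .. 5 (Strongly Agree).
    public static double MeanScore(int[][] responses)
    {
        if (responses.Length == 0)
        {
            throw new ArgumentException("no responses");
        }

        double total = 0;
        int count = 0;
        foreach (int[] participant in responses)
        {
            if (participant.Length != IsNegativeItem.Length)
            {
                throw new ArgumentException("expected 5 answers per participant");
            }
            for (int i = 0; i < participant.Length; i++)
            {
                int score = participant[i];
                if (score < 1 || score > 5)
                {
                    throw new ArgumentOutOfRangeException(nameof(responses));
                }
                // Reverse-code negative items so that higher always means better
                total += IsNegativeItem[i] ? 6 - score : score;
                count++;
            }
        }
        return total / count;
    }
}
```

Reverse-coding plays the same role as in the standard SUS, which mixes positively and negatively worded items and flips the negative ones before summing.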

Evaluation Results: Method 1

Most players found the method complex. In particular, they noted that the steering technique was uncomfortable and unusual.

Evaluation Results: Method 2

Most players found the rotation method feasible, but during the discussion after the session they mentioned that the speed factor and the position of the camera may have affected their opinion of the method.

Evaluation Results: Method 3

Most players found the rotation technique of this method feasible, but it was difficult for them to determine the optimal speed factor and the radius of the “no-force” circle.

Evaluation

The users felt that the steering technique in which the avatar rotates according to the position of their body relative to the center (methods 2 and 3) was more natural. For forward motion, they found the technique in which force is applied to the avatar based on how fast the player moves his hands (methods 1 and 2) more comfortable. The evaluation group was small and consisted mostly of people with previous experience of Kinect or similar NUIs, and these facts may have influenced their judgment.

Future Work

- Evaluation of the navigation methods by other user groups

- Another aspect that could change is the evaluation technique. I used a Likert-like questionnaire, and different evaluation techniques may lead to different results.

- During the sessions the users were able to change the various variables of the system. A study could investigate the optimal values of these variables and, through fine-tuning, find the values at which each method feels most “natural” to the users.

Future Work

- New techniques for steering and forward motion can be implemented and evaluated, e.g. forward motion of the avatar based on the movement of the knee joints.

- It could be interesting to investigate the same techniques using other NUIs and compare their results with the results from Kinect.

Future Work