Reproducible Science

Bioinformatics

- Lots of experimental tools

- Lots of bash/R/python scripts

- Manual steps in data processing pipelines

- Hacks around software dependencies and requirements

Astrophysics

- Monolithic code base for analysis tools

- Various programming languages used for various pipelines

- Inefficient steps in larger data processing pipelines

Standards are more important than software.

Lots of headaches for people trying to reproduce or build on the work of others


Workflow Languages

Ways to standardize the flow of a data processing pipeline

- Arvados

- CWL

- Nextflow

- Galaxy

Common Workflow Language

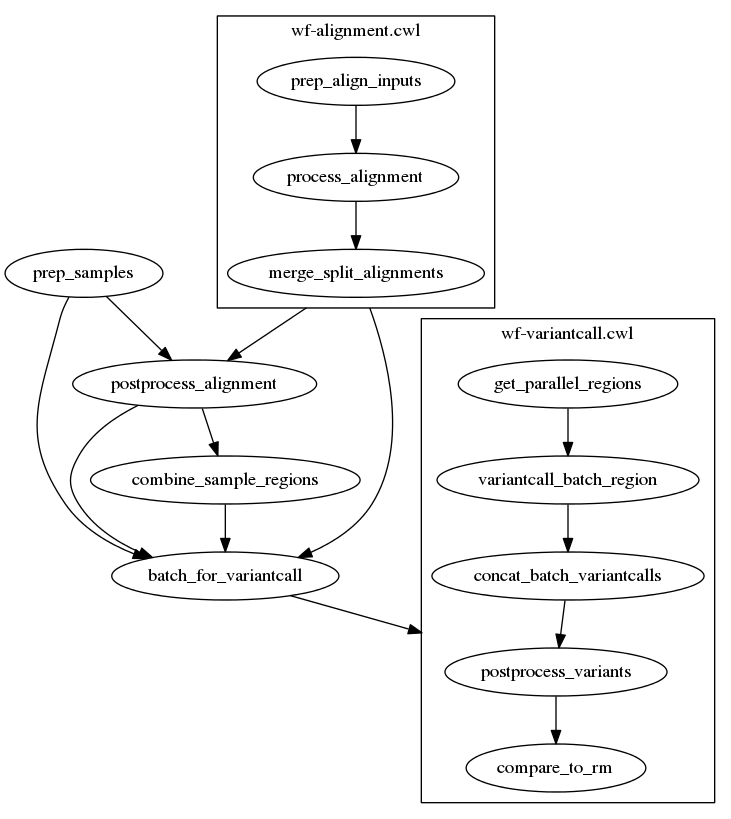

- Tool and workflow descriptions written in YAML

- Scripts become tools (each wrapped in a `CommandLineTool` description)

- Predictable inputs and outputs

- Tools are chained in workflows

- Tools can be packaged with container technologies (e.g. Docker)

- Implicit dependencies
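The sketch below is a minimal illustration of the points above. It assumes Python 3.9+ and is not how any CWL runner is actually implemented. It writes a CWL `CommandLineTool` and a two-step `Workflow` as Python dicts. CWL documents are YAML, and JSON output is also valid YAML, so `json.dumps` yields a usable document. The tool declares its inputs and outputs up front and names a container image through `DockerRequirement`. The workflow steps are deliberately listed out of order, because CWL derives execution order from the data connections: a source such as `sort_file/sorted` means "the `sorted` output of step `sort_file`". The step names, the `.cwl` file names, the `debian:stable-slim` image, and the `step_order` helper are all hypothetical. Real runners such as cwltool also handle cases this sketch skips, like lists of sources, `source:` fields, and scatter.

```python
import json
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical tool: wraps `wc -l` as a CWL CommandLineTool with declared I/O.
count_lines_tool = {
    "cwlVersion": "v1.2",
    "class": "CommandLineTool",
    "baseCommand": ["wc", "-l"],
    # Container image is an illustrative choice, not a recommendation.
    "hints": {"DockerRequirement": {"dockerPull": "debian:stable-slim"}},
    "inputs": {
        "infile": {"type": "File", "inputBinding": {"position": 1}},
    },
    "stdout": "line_count.txt",
    "outputs": {"count": {"type": "stdout"}},
}

# Hypothetical workflow chaining two tools (sort_file.cwl, count_lines.cwl).
workflow = {
    "cwlVersion": "v1.2",
    "class": "Workflow",
    "inputs": {"raw_data": "File"},
    "outputs": {"count": {"type": "File", "outputSource": "count_lines/count"}},
    "steps": {
        # Listed out of order on purpose: order comes from the connections.
        "count_lines": {
            "run": "count_lines.cwl",
            "in": {"infile": "sort_file/sorted"},
            "out": ["count"],
        },
        "sort_file": {
            "run": "sort_file.cwl",
            "in": {"infile": "raw_data"},
            "out": ["sorted"],
        },
    },
}


def step_order(wf: dict) -> list[str]:
    """Infer an execution order for wf's steps from their input sources.

    Simplification: each `in` value is a single source string. A source of
    the form "step/output" depends on that step; a bare name refers to a
    workflow-level input and adds no dependency.
    """
    graph = {}
    for name, step in wf["steps"].items():
        graph[name] = {
            src.split("/", 1)[0] for src in step["in"].values() if "/" in src
        }
    # static_order() yields each step only after all steps it depends on.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(json.dumps(count_lines_tool, indent=2))
    print(step_order(workflow))  # ['sort_file', 'count_lines']
```

Because ordering falls out of the declared inputs and outputs, a step can be added or swapped without rewriting any control flow. This is a large part of what makes such pipelines easier for others to rerun and extend.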