Dead Poets SocAIty

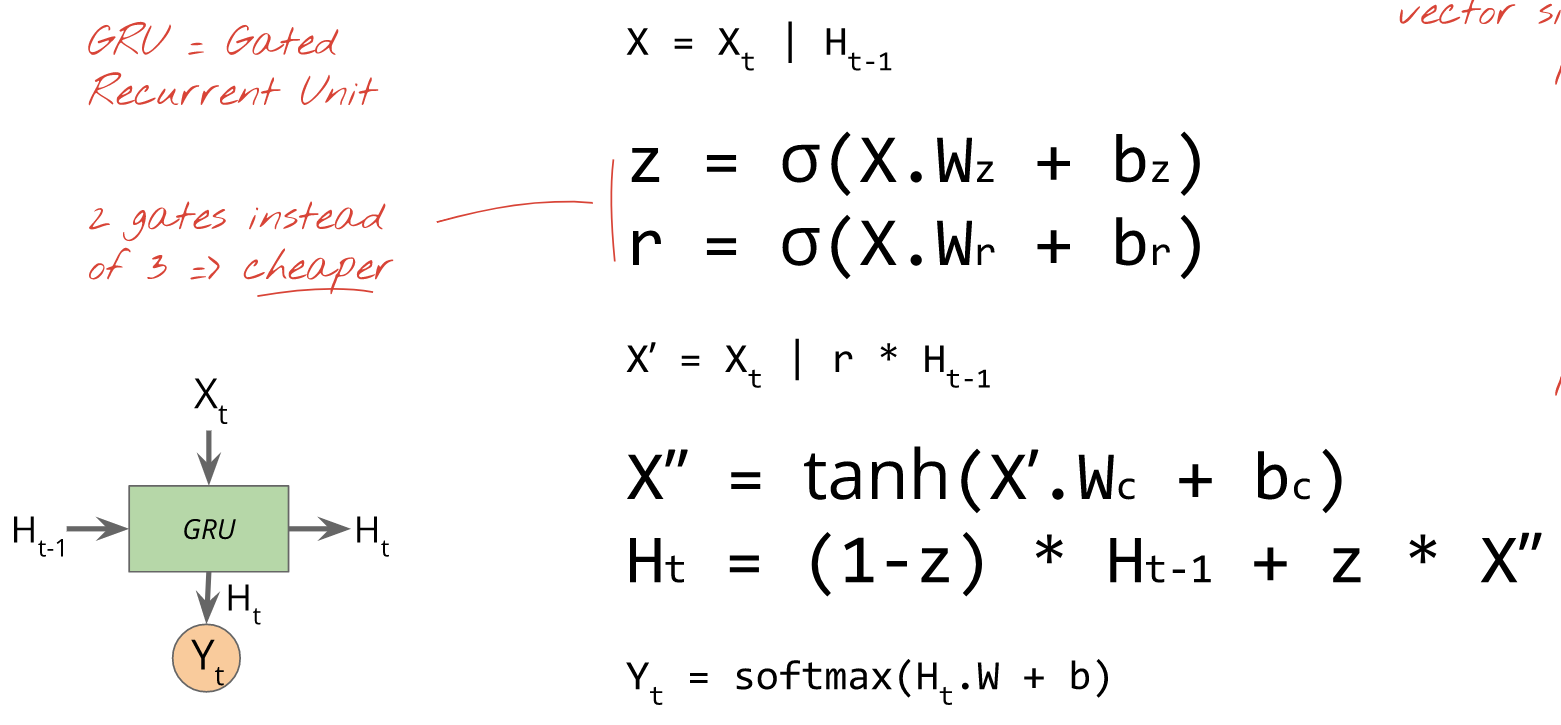

RNN - LSTM/GRU Text Generation

source of everything: Martin Gorner, https://goo.gl/zTDm7D

??!!!?!!,,,

EDGAR ALLAN NO

- Forgot to log accuracy

- 300k characters

- 100 iterations

- 2 GPUs

- 2 hours?

WHITMAN

- 60k characters

- 100 iterations

- 2 GPUs

- 15 minutes ???

- 97% accuracy

- ???!?!?!

WALT

WILL. I. AM.

SHAKESPEARE

- Whole night + more

- 8 CPUs

- No GPU

- Martin Gorner's work

- Complete works

Around and around we go...

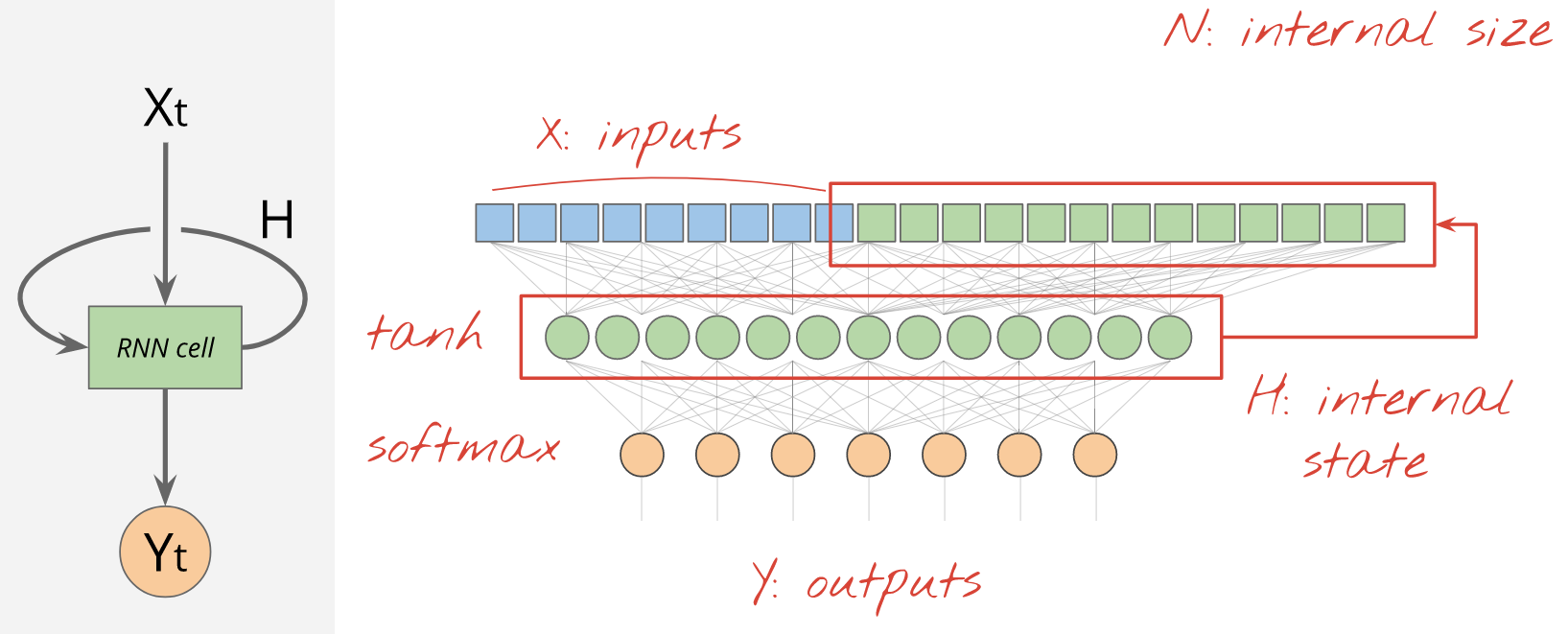

RNN? WTF?

#######################

# LE KERAS CODE - WOW #

#######################

# Around 60-ish lines of code

# Around 1000000 headaches

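from keras.models import Sequential

from keras.layers import LSTM, Dense, Activation

# 40 chars at a time; chars = the unique lowercase chars in the text (built earlier)

model = Sequential()

model.add(LSTM(128, input_shape=(40, len(chars))))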

# Softmax layer: one neuron per char, then take the char with the highest probability

model.add(Dense(len(chars)))

model.add(Activation('softmax'))
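For the curious, here is a rough sketch of what goes in and what comes out of that model. It assumes the text is cut into 40-character windows, each character is one-hot encoded, and the next character is the target. The sample `text`, `maxlen`, and the helpers `one_hot` and `pick_char` are made up for illustration and are not from the original code. Plain Python lists are used to keep it dependency-free; real training code would use arrays.

```python
# Sketch only: `text`, `maxlen`, and the helper names are illustrative assumptions.
text = "i celebrate myself, and sing myself, and what i assume you shall assume"
maxlen = 40  # window length, matching input_shape=(40, len(chars))

chars = sorted(set(text))  # unique characters in the corpus
char_to_idx = {c: i for i, c in enumerate(chars)}


def one_hot(ch):
    """Return a list of len(chars) zeros with a 1 at the character's index."""
    vec = [0] * len(chars)
    vec[char_to_idx[ch]] = 1
    return vec


# Each training example: 40 characters in, the character right after them out.
windows = [text[i:i + maxlen] for i in range(len(text) - maxlen)]
targets = [text[i + maxlen] for i in range(len(text) - maxlen)]

x = [[one_hot(ch) for ch in w] for w in windows]  # shape: (n, 40, len(chars))
y = [one_hot(t) for t in targets]                 # shape: (n, len(chars))


def pick_char(probs):
    """Greedy decoding: return the character with the highest probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return chars[best]
```

`pick_char` is the "get highest prob" step: given the softmax output for one window, it returns the single most likely next character.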

Meth

MATH!

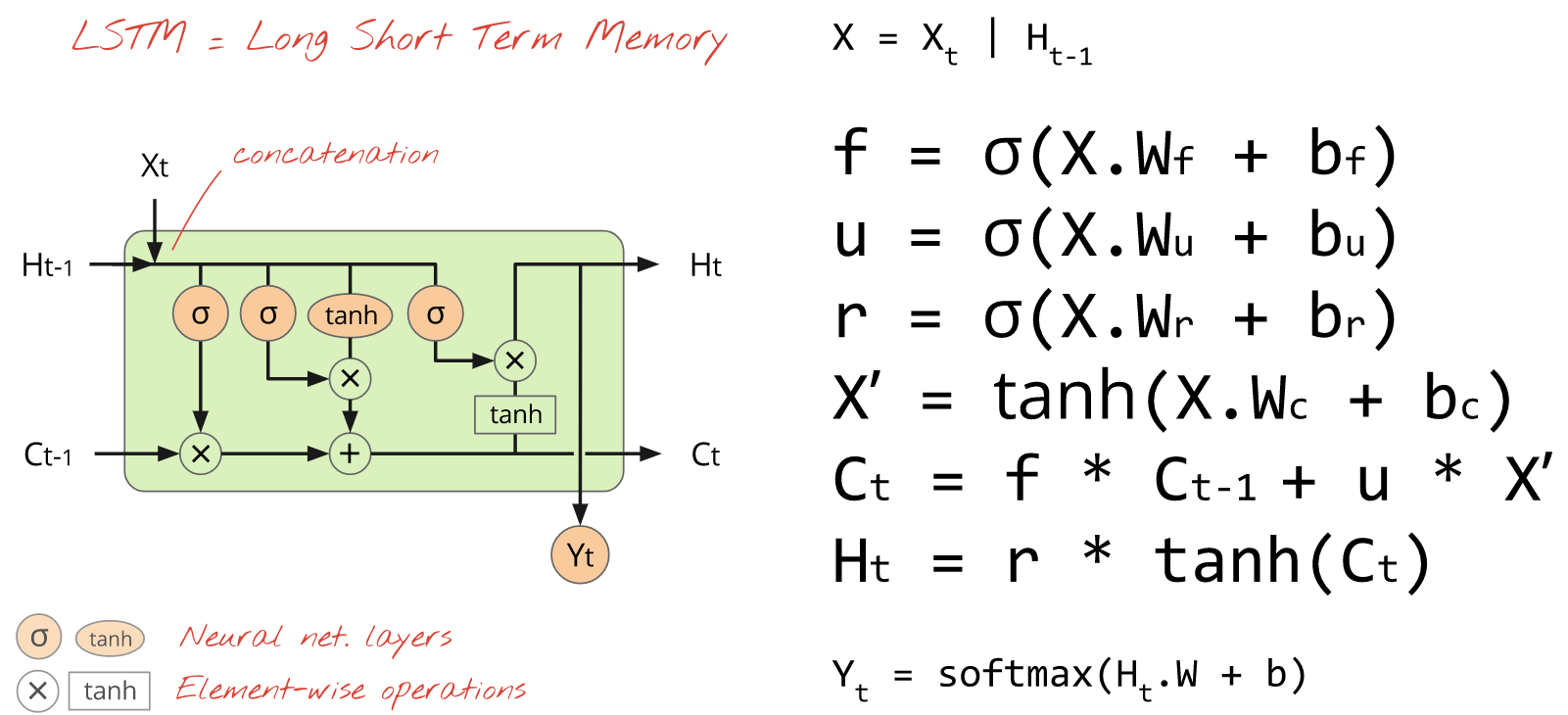

TMMDR: Use gates to decide what the network forgets, remembers, and outputs.
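If you do want a peek at the math, here is a minimal sketch of one LSTM time step using the standard textbook formulation, shrunk down to scalars so it fits on a slide. It is not necessarily the exact variant in the picture above, and the function `lstm_step` and the weight dictionary `w` are made up for illustration.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, w):
    """One LSTM time step with scalar input and scalar state.

    `w` maps each gate name to a tuple (input weight, recurrent weight, bias).
    """
    def pre(name):
        wx, wh, b = w[name]
        return wx * x + wh * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much of the new candidate to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # candidate value for the cell state

    c = f * c_prev + i * g            # new cell state (long-term memory)
    h = o * math.tanh(c)              # new hidden state (what the next layer sees)
    return h, c


# Example call with arbitrary weights, chosen only to show the interface.
weights = {name: (0.5, 0.5, 0.0)
           for name in ("forget", "input", "output", "candidate")}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=0.0, w=weights)
```

A real layer like `LSTM(128, ...)` does the same thing with weight matrices and 128-dimensional vectors instead of single numbers.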

Takeaways

- Applications: speech recognition, language translation, text prediction

- Improve: Time. RNNs take a lot of computing power and time to train.

- Wish we had PH data to work with

- ML is weird. We got 97% accuracy (according to Keras) on Walt Whitman.

- Clean your data too????!!!???,,,,.

- ML is hard but fun
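On the "clean your data" point, a minimal sketch of the kind of cleanup that might help before training. The slides do not say what cleaning was actually needed, so the allowed character set and the rules below are assumptions, and `clean_text` is a made-up helper.

```python
import re
import string

# Assumption: keep lowercase letters, spaces, newlines, and basic punctuation.
ALLOWED = set(string.ascii_lowercase + " \n.,;:!?'-")


def clean_text(raw):
    """Lowercase the text, drop unexpected characters, and tame repeated marks."""
    text = raw.lower()
    text = "".join(ch for ch in text if ch in ALLOWED)
    text = re.sub(r"([.,;:!?])\1+", r"\1", text)  # "!!!" -> "!", ",,," -> ","
    text = re.sub(r" +", " ", text)               # collapse runs of spaces
    return text.strip()


cleaned = clean_text("O Captain!!  My   Captain???")
```

Fewer stray characters also means a smaller `chars` set, so the softmax layer has fewer outputs to learn.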