Make life less miserable

Sort of...

Ben

@QUASIBEN

CONTINUUM ANALYTICS

Misery...

Converting Data

Moving Data

Converting then Moving Data

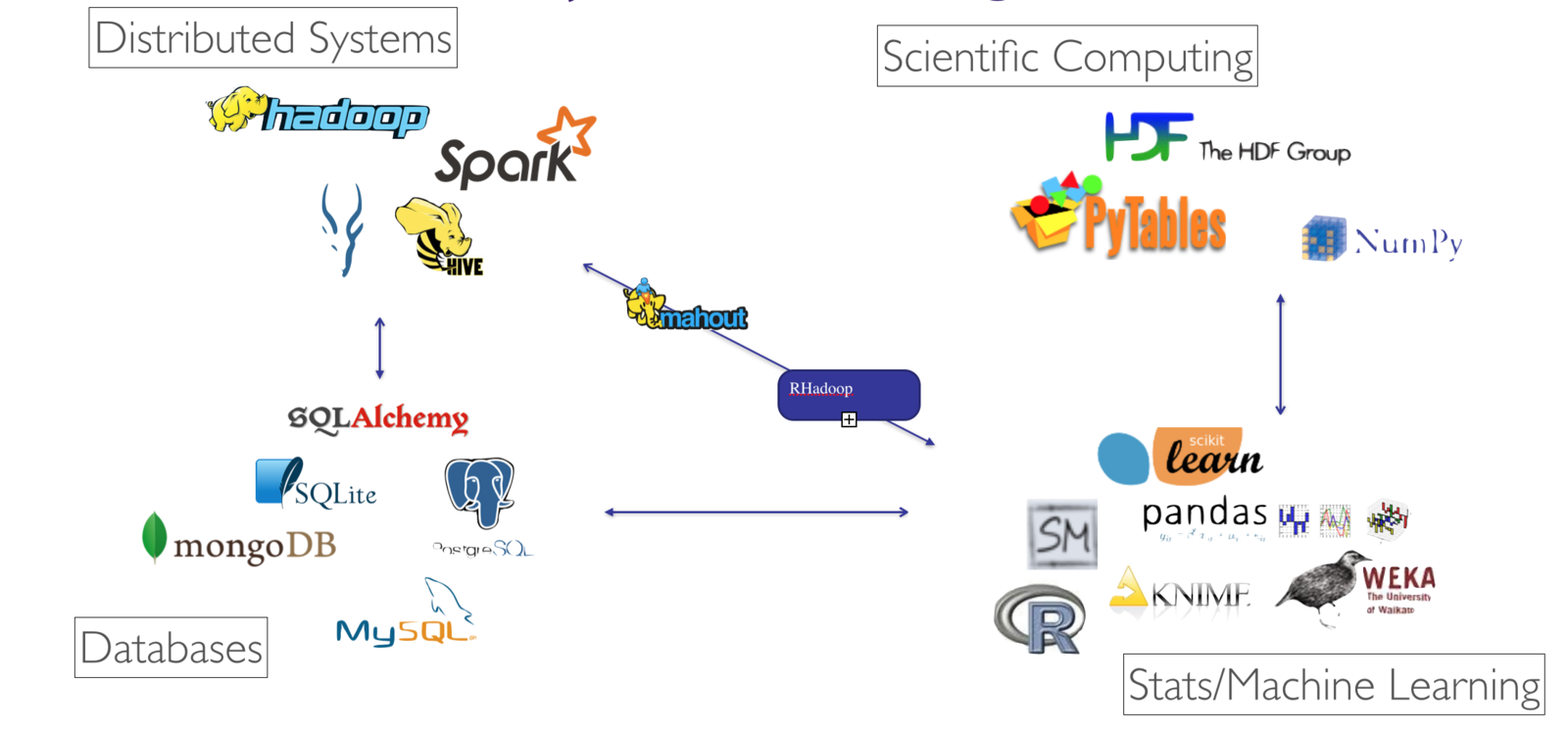

Data Analytics Entangled Bank

And Growing...

"Pretty Much my life..."

--D. Rodriguez

STOP THE INSANITY!!!

Actually...Lots of good tools

https://csvkit.readthedocs.org/en/latest/

https://dataset.readthedocs.org/en/latest/

http://paulfitz.github.io/daff/

unix: cat, head, awk/sed, tail, |, grep, sort, uniq, cut...

Still -- There are problems

CSV->Python

import csv

f = open("file.csv")

f_csv = csv.reader(f)

for line in f_csv...

f_csv.next()

...

munge()

get_money()Data->DataFrame

df = pd.read_csv("/path/to/file.csv")pd.read_clipboard

pd.read_csv

pd.read_excel

pd.read_fwf

pd.read_gbq

pd.read_hdf

pd.read_html

pd.read_json

pd.read_msgpack

pd.read_pickle

pd.read_sql

pd.read_sql_query

pd.read_sql_table

pd.read_stata

pd.read_tabledf.to_clipboard

df.to_csv

df.to_dense

df.to_dict

df.to_excel

df.to_gbq

df.to_hdf

df.to_html

df.to_json

df.to_latex

df.to_msgpack

df.to_panel

df.to_period

df.to_pickle

df.to_records

df.to_sparse

df.to_sql

df.to_stata

df.to_string

df.to_wideInput

Output

Data->DataFrame

import pandas as pd

from glob import glob

df_list = []

csv_list = glob("iris*.csv")

for csv in csv_list:

df_list.append(pd.read_csv(csv))

df = pd.concat(df_list)How about a pile of CSVs?

CSV->DataFrame

from odo import odo

import pandas as pd

df = odo("*.csv", pd.DataFrame)def read_csv_dir(...):

df_list = []

csv_list = glob(...)

for csv in csv_list:

df_list.append(pd.read_csv(csv))

return pd.concat(df_list)

Codify Function in a Framework

Let's Talk about Databases

DBA

MS SQL

MySQL

PostgreSQL

MongoDB

Oracle

Can be Crazy?

Done everything?

But you're not a DBA...

CSV->DB

conn = get_connect()

cursor = conn.cursor()

sql = "CREATE TABLE iris(s_length INT, s_width INT, ..., species TEXT);"

cursor.execute(sql)

data = read_csv(...)

sql = "INSERT INTO iris(s_length, s_width, ..., species) " \

"VALUES(?, ?, ..., ?)"

cursor.executemany(sql,data)

cursor.commit()

conn = get_connect()

df = pd.read_csv(...)

df.to_sql(name=tbl_name, con=conn...)

## pile of csvs

df = odo("*.csv", pd.DataFrame)

df.to_sql(...)Wait, what?!

CSV->DB

What is `to_sql` doing?

Type Conversion

sqlalchemy.types

Table Building

Columns and Types

Chunked Insert

CSV->DB

I knew a DBA -- she said,

"Bulk Loading is a Thing"

COPY FROM # PostgreSQL

LOAD DATA # MySQL

BULK INSERT # MS SQL

BCP

BULK COLLECT # ORACLE

.import # SQLITE

CSV->DB

COPY {tblname} FROM '{abspath}'

(FORMAT {format_str},

DELIMITER E'{delimiter}',

NULL '{na_value}',

QUOTE '{quotechar}',

ESCAPE '{escapechar}',

HEADER {header},

ENCODING '{encoding}');"""

odo("iris.csv", "sqlite:///iris.db")

odo("iris.csv", "postgresql://localhost::iris")Odo: A Framework for leveraging other peoples misery...

CSV->DB

COPY {tblname} FROM '{abspath}'

(FORMAT {format_str},

DELIMITER E'{delimiter}',

NULL '{na_value}',

QUOTE '{quotechar}',

ESCAPE '{escapechar}',

HEADER {header},

ENCODING '{encoding}');"""

odo("iris.csv", "sqlite:///iris.db")

odo("iris.csv", "postgresql://localhost::iris")Odo: A Framework for leveraging other people's misery...

If you let people go to crazytown they will everytime

odo("*.csv", db://hostname::tablename)

odo("iris.json", mongodb://hostname/db::collection")

odo("*.json", mongodb://hostname/db::collection")

## Full URI

sqldb://username:password@hostname:port/database::tablenameThat looks familiar...

# scp filename username@hostname:/path/to/file

# paramiko is an ssh client in Python

ssh.connect(...)

ftp = ssh.open_sftp()

ftp.put(local_file, remote_file)

odo('iris.csv', 'ssh://hostname:myfile.csv',

username='user', key_filename='.ssh/id_rsa', port=22)Let's Talk about HDFS/Cloud

DE?

HDfs

S3

REDSHIFT

HIVE

IMpala

Data Engineer?

Emoji Algebra is Weird

Cloud/Hdfs++ <-> Data

oDO("IRIS.CSV", "HDFS://HDFS_IP:/TMP/IRIS/IRIS.CSV", ...)

from pywebhdfs.webhdfs import PyWebHdfsClient

hdfs = PyWebHdfsClient(host=host, port=str(port),

user_name=user)

hdfs.read_file(...)

hdfs.append_file(...)Cloud/Hdfs++ <-> Data

odo("iris.csv", "s3://bucket/iris.csv"...) <->

odo("iris.csv", "hdfs://HDFS_IP:/tmp/iris/iris.csv", ...) <->

odo("hdfs://.../iris/", "hive://database-name::table-name", ...)

odo("iris.csv", "redshift://user:pass@host:port/database::table", ...) <->?

Let's Talk about Binary Stores

Binary StoreS

HDF5

Portable

tabular

compressible

Chunked

Columnar

Bcolz

Parquet

pytables

h5py

HDFSTORE

No Var Len Str

No Python

missing data

some rough edges

kind of new

Binary Stores <-> Data

ODO("TRIP.CSV, "TRIP.BCOLZ" )

odo(hdfstore://...)

ODO(PYTABLES://...)

ODO(h5py://...)

does this work?

odo('s3://mybucket/path/to/data.json',

'postgresql://user:passwd@localhost:port/db::data')Yes!

Uh, How?

Outlinks

INLINKS

Network solves conversions...

URL -> Data

http://raw.githubusercontent.com...../file.csv

Load to Pandas

Load To HDFS

....

Not a new idea:

import pandas.io.data as web

web.DataReader("IBM", 'yahoo', start, end)

web.DataReader("IBM", 'google', start, end)

$ FRED

$ Yahoo! Option

$ Fama/French

...Four Functions

discover - Return the DataShape of an object

convert - Convert data to new type

append - Append data on to existing data source

resource - Identify data by a string URI

Drop - deletes resource (Optional)

URL -> Data

URL -> Data

@append.register(CSV, URL(CSV))

def append_urlX_to_X(target, source, **kwargs):

with closing(urlopen(source.url)) as r:

chunk_size = 16 * source.chunk_size

with open(target.path, 'wb') as fp:

for chunk in iter(curry(r.read, chunk_size), ''):

fp.write(chunk)

return target

APPEND

URL -> Data

@discover.register((URL(CSV), URL(JSONLines)))

def discover_url_line_delimited(c, lines=5, encoding='utf-8', **kwargs):

"""Discover CSV and JSONLines files from URL."""

with sample(c, lines=lines, encoding=encoding) as fn:

return discover(c.subtype(fn, **kwargs), **kwargs)Discover

Sample: sip a few lines of data

URL -> Data

@convert.register(Temp(CSV), (Temp(URL(CSV)), URL(CSV)))

def url_file_to_temp_file(data, **kwargs):

fn = '.%s' % uuid.uuid1()

target = Temp(data.subtype)(fn, **kwargs)

return append(target, data, **kwargs)

COnvert

data: url(csv) Temp(CSV): CSV with garbage collection

URL -> Data

@resource.register('ftp://.+', priority=16)

@resource.register('http://.+', priority=16)

@resource.register('https://.+', priority=16)

def resource_url(uri, **kwargs):

path = os.path.basename(urlparse(uri).path)

try:

subtype = types_by_extension[ext(path)]

except KeyError:

subtype = type(resource(path))

return URL(subtype)(uri, **kwargs)

Resource

URL -> Data

from odo import odo

import pandas as pd

url = "http://foo.com/file.csv"

df = odo(url, pd.DataFrame)

auth = {'user': 'hdfs','port': '14000'}

odo(url, 'hdfs://hdfs_ip:/tmp/iris/iris.txt', **auth)Example

Some Thoughts

-

frameworks are good

-

Small functions to move and Convert Data

-

-

Verify Folk tales

-

DataBases require DBas

-

Mongo Doens't Suck -- Try It

-

What's BCOLZ -- Try it

-

-

ODO -- it's for happy People

Thanks!

Install

conda install odo

pip install odo